Kubernetes Deployment: Binary Deployment of a Multi-Master, Multi-Worker K8S 1.25.14 Cluster on Ubuntu 20.04 with containerd

I. Architecture Diagram

The architecture is shown in the figure below:

![]()

II. Deployment Notes

2.1 Deployment Workflow

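1. Initialize the system environment: set hostnames, configure /etc/hosts resolution, disable the firewall, disable swap, adjust system limits, synchronize time and time zone, adjust kernel parameters, and enable IPVS mode.
2. Create the certificate files with the one-click K8S cluster certificate generation tool.
3. Install the etcd cluster from binaries.
4. Install the Nginx + keepalived high-availability load balancer from binaries.
5. Install the containerd container runtime from binaries.
6. Install kube-apiserver, kube-controller-manager, and kube-scheduler from binaries.
7. Configure TLS bootstrapping.
8. Install kubelet and kube-proxy from binaries.
9. Install coredns and calico, which mainly involves downloading images and editing the YAML manifests.
10. Test the cluster: cluster status checks, DNS tests, high-availability tests, and functional tests.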

2.2 Resources

1. Dependencies installed with apt:

| Software | Version |
|---|---|
| conntrack | 1.4.5 |
| ebtables | 2.0.11 |
| socat | 1.7.3 |

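Summary:

1. ebtables (Ethernet bridge rule management tool): manages filtering rules for Ethernet bridges on Linux. In Kubernetes it is used to isolate and relay container traffic at the bridge level.

2. socat (network tool): establishes many kinds of network connections on Linux. In Kubernetes networking it can be used for port forwarding and proxying.

3. conntrack: a kernel module and tool for connection tracking. It tracks the state and details of network connections, such as source IP address, destination IP address, and port numbers.

2. Binary versions: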

| Software | Version |
|---|---|
| etcd | 3.5.6 |
| kube-apiserver | v1.25.14 |
| kube-controller-manager | v1.25.14 |
| kube-scheduler | v1.25.14 |
| kubelet | v1.25.14 |
| kube-proxy | v1.25.14 |
| kubectl | v1.25.14 |

3. coredns and calico manifest template files:

| Name | Version |
|---|---|
| coredns | v1.9.3 |
| calico | v3.26.1 |

4. Container image versions:

| pause image | coredns image | calico images |
|---|---|---|
| registry.k8s.io/pause:3.8 | registry.k8s.io/coredns/coredns:v1.9.3 | docker.io/calico/cni:v3.26.1 |
| - | - | docker.io/calico/kube-controllers:v3.26.1 |
| - | - | docker.io/calico/node:v3.26.1 |

Note: servers in mainland China cannot reach the registry.k8s.io registry; replace it with registry.cn-hangzhou.aliyuncs.com/google_containers.

5. containerd binary versions:

| Name | Version |
|---|---|
| containerd | v1.7.2 |
| runc | v1.1.7 |
| cni-plugins | v1.2.0 |
| crictl | v1.26.0 |

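Alternatively, according to the official documentation, releases since version 1.1 also ship a bundle that packages the cri-tools, containerd, runc, and cni binaries together: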

| Name | Version |
|---|---|
| cri-containerd-cni | v1.7.2 |

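2.3 Resource Download

Resource bundle for the binary deployment of a K8S 1.25.14 cluster on Ubuntu 20.04 with containerd

2.4 Common Questions

Question 1: Which Calico versions support which K8S versions?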

| calico version | calico yml download | Supported K8S versions |
|---|---|---|
| v3.24.6 | calico.yml | v1.22, v1.23, v1.24, v1.25 |
| v3.25.2 | calico.yml | v1.23, v1.24, v1.25, v1.26 |
| v3.26.1 | calico.yml | v1.24, v1.25, v1.26, v1.27 |

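Question 2: Why must runc and the CNI plugins be installed together with containerd?

containerd does not run containers directly; it delegates that to runc. By default, a container managed by containerd has only the lo interface and cannot reach any network outside the container. To reach outside networks, the CNI plugins must be installed. CNI (Container Network Interface) is a specification for container network interfaces that covers assigning IP addresses to containers. With the CNI plugins in place, containers managed by containerd can reach networks outside the container.

Question 3: Why install cri-tools?

cri-tools (container runtime tools): a set of command-line tools for interacting with the Kubernetes Container Runtime Interface (CRI). It provides useful functions such as creating, removing, and managing containers.

Question 4: Why configure TLS bootstrapping?

In a Kubernetes cluster, the kubelet and kube-proxy components on worker nodes need to communicate with the control-plane components, kube-apiserver in particular. To keep this communication private and secure, it is strongly recommended that kubelet and kube-proxy on the worker nodes use client TLS certificates. With many worker nodes, however, issuing these client certificates by hand is a lot of work and makes scaling the cluster harder. To simplify the process, Kubernetes introduced the TLS bootstrapping mechanism, which issues client certificates automatically.

Question 5: How can the Kubernetes binaries be downloaded?

Option 1: the official website download page

Option 2: the GitHub download page

Note: if the download is slow, use a GitHub download accelerator.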

III. Environment

- 1. Deployment plan

| Hostname | K8S version | OS version | Kernel version | IP address | Notes |
|---|---|---|---|---|---|
| k8s-master-12 | 1.25.14 | Ubuntu 20.04.5 LTS | 5.15.0-69-generic | 192.168.1.12 | master node + etcd node |
| k8s-master-13 | 1.25.14 | Ubuntu 20.04.5 LTS | 5.15.0-69-generic | 192.168.1.13 | master node + etcd node |
| k8s-master-14 | 1.25.14 | Ubuntu 20.04.5 LTS | 5.15.0-69-generic | 192.168.1.14 | master node + etcd node |
| k8s-worker-15 | 1.25.14 | Ubuntu 20.04.5 LTS | 5.15.0-69-generic | 192.168.1.15 | worker node |
| - | - | - | - | 192.168.1.110 | VIP (virtual IP) |
| k8s-lb-01 | - | Ubuntu 20.04.5 LTS | 5.15.0-69-generic | 192.168.1.16 | Nginx + keepalived |
| k8s-lb-02 | - | Ubuntu 20.04.5 LTS | 5.15.0-69-generic | 192.168.1.17 | Nginx + keepalived |

- 2. Cluster networks

| Host network | Cluster Pod CIDR | Cluster Service CIDR |
|---|---|---|
| 192.168.1.0/24 | 10.48.0.0/16 | 10.96.0.0/16 |

Note: 10.96.0.10 is reserved as the Service IP address of CoreDNS.

- 3. Service ports

| Service | Port | Flag | Protocol | Port type | Required | Notes |
|---|---|---|---|---|---|---|
| kube-apiserver | 6443 | --secure-port | TCP (HTTPS) | Secure port | Yes | - |
| kube-controller-manager | 10257 | --secure-port | TCP (HTTPS) | Secure port | Recommended | Authentication and authorization |
| kube-scheduler | 10259 | --secure-port | TCP (HTTPS) | Secure port | Recommended | Authentication and authorization |
| kubelet | 10248 | --healthz-port | TCP (HTTP) | Health check port | Recommended | - |
| kubelet | 10250 | --port | TCP (HTTPS) | Secure port | Yes | Authentication and authorization |
| kubelet | 10255 | --read-only-port | TCP (HTTP) | Insecure port | Recommended | - |
| kube-proxy | 10249 | --metrics-port | TCP (HTTP) | Metrics port | Recommended | - |
| kube-proxy | 10256 | --healthz-port | TCP (HTTP) | Health check port | Recommended | - |
| NodePort | 30000-36000 | --service-node-port-range | TCP/UDP | - | - | Range configured in this deployment; the upstream default is 30000-32767 |
| etcd | 2379 | - | TCP | - | - | etcd client request port |
| etcd | 2380 | - | TCP | - | - | etcd peer communication port |

IV. Initialize the Environment

4.1 Set Hostnames

Note: set the hostname on the node with the corresponding IP address.

| Host IP | Command to set the hostname |
|---|---|
| 192.168.1.12 | hostnamectl set-hostname k8s-master-12 && bash |
| 192.168.1.13 | hostnamectl set-hostname k8s-master-13 && bash |
| 192.168.1.14 | hostnamectl set-hostname k8s-master-14 && bash |
| 192.168.1.15 | hostnamectl set-hostname k8s-worker-15 && bash |
| 192.168.1.16 | hostnamectl set-hostname k8s-lb-01 && bash |
| 192.168.1.17 | hostnamectl set-hostname k8s-lb-02 && bash |

4.2 Configure /etc/hosts

Note: run the following on every node: master, worker, and load-balancer (kube-lb) nodes.

cat >> /etc/hosts <<EOF

192.168.1.12 k8s-master-12

192.168.1.13 k8s-master-13

192.168.1.14 k8s-master-14

192.168.1.15 k8s-worker-15

EOF
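Because `cat >>` appends unconditionally, running the step above a second time duplicates the entries. The following is a minimal sketch of an idempotent variant. The helper name `add_host_entry` and its optional file argument are illustrative assumptions, not part of the original procedure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file only if that exact
# line is not already present. The file defaults to /etc/hosts.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # -q: quiet, -x: match the whole line, -F: fixed string (no regex)
  if ! grep -qxF "${ip} ${name}" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Example usage (as root, on every node):
#   add_host_entry 192.168.1.12 k8s-master-12
#   add_host_entry 192.168.1.13 k8s-master-13
#   add_host_entry 192.168.1.14 k8s-master-14
#   add_host_entry 192.168.1.15 k8s-worker-15
```

Matching the whole line keeps the check simple; it does not detect an existing entry that maps the same name to a different IP.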

4.3 Disable the Firewall

Note: run the following on every node: master, worker, and load-balancer (kube-lb) nodes.

ufw disable && systemctl stop ufw && systemctl disable ufw

4.4 Disable Swap

Note: run the following on every node: master, worker, and load-balancer (kube-lb) nodes.

swapoff -a

sed -i '/swap/ s/^/#/' /etc/fstab
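The `sed` command above prefixes every line containing "swap" with `#`, including lines that are already commented out. A slightly stricter sketch is shown below. The function name `comment_swap_entries` is hypothetical, and GNU sed (as shipped with Ubuntu) is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style
# file. Lines that already start with '#' are left untouched, so the
# function can be run repeatedly.
comment_swap_entries() {
  local file="${1:-/etc/fstab}"
  # Only touch non-comment lines that contain "swap" as a
  # whitespace-delimited field.
  sed -i -E '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
}

# Example usage (as root):
#   swapoff -a
#   comment_swap_entries /etc/fstab
```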

4.5 Adjust System Limits

Note: run the following on every node: master, worker, and load-balancer (kube-lb) nodes.

cat >>/etc/security/limits.conf <<EOF

* soft nofile 65535

* hard nofile 65535

* soft nproc 65535

* hard nproc 65535

* soft memlock unlimited

* hard memlock unlimited

EOF

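4.6 Synchronize Time and Time Zone

Note: run the following on every node: master, worker, and load-balancer (kube-lb) nodes.

1. Set the time zone to Asia/Shanghai, for example with `timedatectl set-timezone Asia/Shanghai`; skip this if it is already set. Running `timedatectl` shows the current settings: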

root@k8s-master-12:~# timedatectl

Local time: 五 2023-03-31 14:11:36 CST

Universal time: 五 2023-03-31 06:11:36 UTC

RTC time: 五 2023-03-31 06:11:36

Time zone: Asia/Shanghai (CST, +0800)

System clock synchronized: yes

NTP service: active

RTC in local TZ: no

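4.7 Adjust Kernel Parameters

Note: run the following on every master and worker node.

Note: by default, IPv4 traffic that crosses a Linux bridge does not pass through the iptables chains, so the rules that Kubernetes relies on never see it and packets can be lost. Loading br_netfilter and setting the net.bridge.bridge-nf-call-* parameters makes bridged traffic traverse iptables. Configure the k8s.conf files as follows:

- 1. Load the br_netfilter and overlay modules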

cat <<EOF | tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

modprobe overlay

modprobe br_netfilter

- 2. Set the required sysctl parameters

cat <<EOF | tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

sysctl --system
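After `sysctl --system`, it can be worth confirming that the three parameters actually took effect. The sketch below reads the values from /proc/sys; the helper name `sysctl_proc_path` is an assumption made for illustration.

```shell
#!/usr/bin/env bash
# Map a dotted sysctl key to its /proc/sys path. This simple mapping is
# valid for keys whose components contain no dots, which holds for the
# three keys used here.
sysctl_proc_path() {
  printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

# Print each expected key with its current value, or "missing" when the
# file does not exist (for example when br_netfilter is not loaded).
for key in net.bridge.bridge-nf-call-iptables \
           net.bridge.bridge-nf-call-ip6tables \
           net.ipv4.ip_forward; do
  path="$(sysctl_proc_path "$key")"
  if [ -r "$path" ]; then
    printf '%s = %s\n' "$key" "$(cat "$path")"
  else
    printf '%s = missing\n' "$key"
  fi
done
```

All three keys should report 1 on a correctly prepared node.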

4.8 Enable IPVS Mode

Note: run the following on every master and worker node.

# kube-proxy requires the following kernel modules to be loaded before IPVS mode can be enabled

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4

Note: if you see the error `modprobe: FATAL: Module nf_conntrack_ipv4 not found in directory /lib/modules/5.15.0-69-generic`, the cause is the newer kernel. The current kernel is 5.15.0-69-generic, and newer kernels have replaced nf_conntrack_ipv4 with nf_conntrack.

- 1. Install the IPVS tools

apt -y install ipvsadm ipset sysstat conntrack ebtables socat

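Summary:

1. ipvsadm: a tool for managing the IP Virtual Server (IPVS) in the Linux kernel. It lets you configure and manage load balancing, network address translation (NAT), transparent proxying, and similar functions.

2. ipset: a tool for managing IP sets in the Linux kernel. It lets you create and manage sets of IP addresses, IP address ranges, and port numbers for use in firewall rules.

3. sysstat: a toolset for system performance monitoring and reporting. It includes utilities such as sar, iostat, and mpstat for collecting and displaying resource usage statistics.

4. conntrack: a kernel module and tool for connection tracking. It tracks the state and details of network connections, such as source IP address, destination IP address, and port numbers.

- 2. Create the kernel module loading script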

cat > /etc/profile.d/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

- 3. Make the script executable

chmod 755 /etc/profile.d/ipvs.modules

- 4. Run the module loading script

bash /etc/profile.d/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

- 5. Load the modules automatically at boot

cat <<EOF | tee /etc/modules-load.d/nf_conntrack_ipv4.conf

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

EOF
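To confirm that every module from the list is loaded, the `lsmod` output can be compared against the required names. The following sketch assumes a hypothetical helper called `missing_modules` that reads `lsmod`-style text on standard input.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod`-style text on stdin and print every
# required module (given as arguments) that is not listed in it.
missing_modules() {
  local loaded mod
  # Keep only the first column and skip the header line.
  loaded="$(awk 'NR > 1 { print $1 }')"
  for mod in "$@"; do
    printf '%s\n' "$loaded" | grep -qxF "$mod" || printf '%s\n' "$mod"
  done
}

# Example usage:
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

An empty result means all required modules are present.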

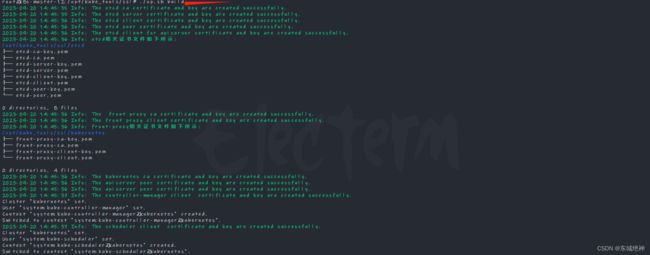

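V. Create the K8S Cluster Certificates

Note: run on any one host in the K8S cluster; k8s-master-12 is used here.

5.1 Create the Certificates

1. Download the certificate creation tool

One-click generation of the certificates for a binary Kubernetes cluster, based on the cfssl toolset

5.2 Distribute the Certificates

Note: after the certificates have been generated on k8s-master-12, copy the relevant certificates to the K8S cluster nodes.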

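2. etcd certificates

Note: in this environment the etcd nodes are k8s-master-12, k8s-master-13, and k8s-master-14. Create the /etc/kubernetes/pki certificate directory on all three nodes in advance, then copy the etcd certificates into /etc/kubernetes/pki on each of them.

# 1. Create the certificate directory on every etcd node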

mkdir -p /etc/kubernetes/pki

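# 2. Copy the etcd certificates to /etc/kubernetes/pki on every node

3. Master node certificates and kubeconfig files

Note: in this environment the master nodes are k8s-master-12, k8s-master-13, and k8s-master-14. Create the /etc/kubernetes/pki certificate directory on all three nodes in advance; if etcd and the master run on the same host, the directory already exists. Then copy the master certificates and kubeconfig files into /etc/kubernetes/pki on each of the three nodes.

# 1. Create the certificate directory on every master node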

mkdir -p /etc/kubernetes/pki

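# 2. Copy the master certificates to /etc/kubernetes/pki on every node

# 3. Copy the master kubeconfig files to /etc/kubernetes/pki

4. Worker node certificates

Note: in this environment the only worker node is k8s-worker-15. Create the /etc/kubernetes/pki certificate directory on this node in advance, then copy the worker certificates and kubeconfig files into it. Repeat this on every worker node if there is more than one.

# 1. Create the certificate directory on every worker node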

mkdir -p /etc/kubernetes/pki

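# 2. Copy the worker certificates to /etc/kubernetes/pki on every node

# 3. Copy the worker kubeconfig files to /etc/kubernetes/pki

VI. Install the etcd Cluster

Note: run the following on every etcd node. In this environment the etcd cluster nodes are k8s-master-12, k8s-master-13, and k8s-master-14.

6.1 Download the etcd Binaries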

tar axf etcd-v3.5.6-linux-amd64.tar.gz

mv etcd-v3.5.6-linux-amd64/etcd* /usr/local/bin

6.2 Create the Service File

| etcd01 node |

root@k8s-master-12:~# vim /etc/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd

ExecStart=/usr/local/bin/etcd \

--name=etcd01 \

--cert-file=/etc/kubernetes/pki/etcd-server.pem \

--key-file=/etc/kubernetes/pki/etcd-server-key.pem \

--peer-cert-file=/etc/kubernetes/pki/etcd-peer.pem \

--peer-key-file=/etc/kubernetes/pki/etcd-peer-key.pem \

--trusted-ca-file=/etc/kubernetes/pki/etcd-ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/pki/etcd-ca.pem \

--initial-advertise-peer-urls=https://192.168.1.12:2380 \

--listen-peer-urls=https://192.168.1.12:2380 \

--listen-client-urls=https://192.168.1.12:2379,http://127.0.0.1:2379 \

--advertise-client-urls=https://192.168.1.12:2379 \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd01=https://192.168.1.12:2380,etcd02=https://192.168.1.13:2380,etcd03=https://192.168.1.14:2380" \

--initial-cluster-state=new \

--data-dir=/var/lib/etcd \

--wal-dir="" \

--snapshot-count=50000 \

--auto-compaction-retention=1 \

--auto-compaction-mode=periodic \

--max-request-bytes=10485760 \

--quota-backend-bytes=8589934592

Restart=always

RestartSec=15

LimitNOFILE=65536

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

| etcd02 node |

root@k8s-master-13:~# vim /etc/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd

ExecStart=/usr/local/bin/etcd \

--name=etcd02 \

--cert-file=/etc/kubernetes/pki/etcd-server.pem \

--key-file=/etc/kubernetes/pki/etcd-server-key.pem \

--peer-cert-file=/etc/kubernetes/pki/etcd-peer.pem \

--peer-key-file=/etc/kubernetes/pki/etcd-peer-key.pem \

--trusted-ca-file=/etc/kubernetes/pki/etcd-ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/pki/etcd-ca.pem \

--initial-advertise-peer-urls=https://192.168.1.13:2380 \

--listen-peer-urls=https://192.168.1.13:2380 \

--listen-client-urls=https://192.168.1.13:2379,http://127.0.0.1:2379 \

--advertise-client-urls=https://192.168.1.13:2379 \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd01=https://192.168.1.12:2380,etcd02=https://192.168.1.13:2380,etcd03=https://192.168.1.14:2380" \

--initial-cluster-state=new \

--data-dir=/var/lib/etcd \

--wal-dir="" \

--snapshot-count=50000 \

--auto-compaction-retention=1 \

--auto-compaction-mode=periodic \

--max-request-bytes=10485760 \

--quota-backend-bytes=8589934592

Restart=always

RestartSec=15

LimitNOFILE=65536

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

| etcd03 node |

root@k8s-master-14:~# vim /etc/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

Documentation=https://github.com/coreos

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd

ExecStart=/usr/local/bin/etcd \

--name=etcd03 \

--cert-file=/etc/kubernetes/pki/etcd-server.pem \

--key-file=/etc/kubernetes/pki/etcd-server-key.pem \

--peer-cert-file=/etc/kubernetes/pki/etcd-peer.pem \

--peer-key-file=/etc/kubernetes/pki/etcd-peer-key.pem \

--trusted-ca-file=/etc/kubernetes/pki/etcd-ca.pem \

--peer-trusted-ca-file=/etc/kubernetes/pki/etcd-ca.pem \

--initial-advertise-peer-urls=https://192.168.1.14:2380 \

--listen-peer-urls=https://192.168.1.14:2380 \

--listen-client-urls=https://192.168.1.14:2379,http://127.0.0.1:2379 \

--advertise-client-urls=https://192.168.1.14:2379 \

--initial-cluster-token=etcd-cluster \

--initial-cluster="etcd01=https://192.168.1.12:2380,etcd02=https://192.168.1.13:2380,etcd03=https://192.168.1.14:2380" \

--initial-cluster-state=new \

--data-dir=/var/lib/etcd \

--wal-dir="" \

--snapshot-count=50000 \

--auto-compaction-retention=1 \

--auto-compaction-mode=periodic \

--max-request-bytes=10485760 \

--quota-backend-bytes=8589934592

Restart=always

RestartSec=15

LimitNOFILE=65536

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target
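The three unit files differ only in `--name` and in the node IP used by the listen and advertise URLs, while `--initial-cluster` must be identical on every node. As a sketch of how that shared value can be derived rather than typed three times, the hypothetical helper `etcd_initial_cluster` below builds it from NAME=IP pairs, using the peer port 2380 from the unit files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the --initial-cluster value from NAME=IP
# pairs. Each pair becomes NAME=https://IP:2380, joined with commas.
etcd_initial_cluster() {
  local out="" pair
  for pair in "$@"; do
    # ${pair%%=*} is the member name, ${pair#*=} is its IP address.
    out="${out:+${out},}${pair%%=*}=https://${pair#*=}:2380"
  done
  printf '%s\n' "$out"
}

# Example usage:
#   etcd_initial_cluster etcd01=192.168.1.12 etcd02=192.168.1.13 etcd03=192.168.1.14
```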

6.3 Start the etcd Service

Note: the etcd data directory and working directory must be created first.

mkdir -p /var/lib/etcd && chmod 700 /var/lib/etcd

systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd

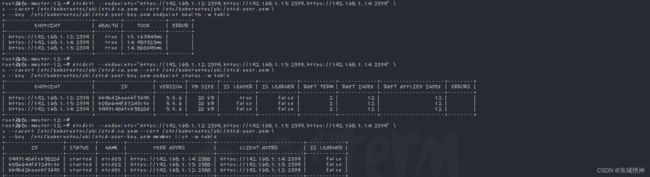

6.4 Check the etcd Cluster Status

etcdctl --endpoints="https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379" \

--cacert /etc/kubernetes/pki/etcd-ca.pem --cert /etc/kubernetes/pki/etcd-peer.pem \

--key /etc/kubernetes/pki/etcd-peer-key.pem endpoint health -w table

etcdctl --endpoints="https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379" \

--cacert /etc/kubernetes/pki/etcd-ca.pem --cert /etc/kubernetes/pki/etcd-peer.pem \

--key /etc/kubernetes/pki/etcd-peer-key.pem endpoint status -w table

etcdctl --endpoints="https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379" \

--cacert /etc/kubernetes/pki/etcd-ca.pem --cert /etc/kubernetes/pki/etcd-peer.pem \

--key /etc/kubernetes/pki/etcd-peer-key.pem member list -w table
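The three commands above repeat the same endpoint and TLS flags. etcdctl also reads flag values from environment variables with the `ETCDCTL_` prefix, so a shorter variant is sketched below; it uses the same certificate paths as the commands above and only runs the checks where etcdctl is installed.

```shell
#!/usr/bin/env bash
# Set the connection options once per shell session. Each variable
# corresponds to the etcdctl flag of the same name.
export ETCDCTL_ENDPOINTS="https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379"
export ETCDCTL_CACERT=/etc/kubernetes/pki/etcd-ca.pem
export ETCDCTL_CERT=/etc/kubernetes/pki/etcd-peer.pem
export ETCDCTL_KEY=/etc/kubernetes/pki/etcd-peer-key.pem

# Run the three checks only on hosts where etcdctl is available.
if command -v etcdctl >/dev/null 2>&1; then
  etcdctl endpoint health -w table
  etcdctl endpoint status -w table
  etcdctl member list -w table
fi
```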

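VII. Install the High-Availability Load Balancer

High availability for K8S mainly means high availability for the master nodes. The API server exposes its interface as an HTTP API, so an ordinary layer-4 or layer-7 proxy such as Nginx or HAProxy can provide high availability and load balancing in front of it. A VIP managed by keepalived makes the proxy itself highly available, and the cluster access address is then changed from an individual API server address to the VIP address. This gives the K8S cluster a highly available entry point. The approach used here is Nginx + keepalived.

7.1 Deploy Nginx

- 1. Compile Nginx

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).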

wget http://nginx.org/download/nginx-1.22.1.tar.gz

tar axf nginx-1.22.1.tar.gz && cd nginx-1.22.1

./configure --prefix=/usr/local/nginx --with-http_ssl_module \

--with-http_stub_status_module --with-pcre \

--with-http_gzip_static_module --with-stream --with-stream_ssl_module

make && make install

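Note: Nginx is deployed as a standalone binary. The purpose of this step is to obtain the Nginx binary.

- 2. Create the directories

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).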

mkdir -p /etc/kube-lb/logs

mkdir -p /etc/kube-lb/conf

mkdir -p /etc/kube-lb/sbin

- 3. Copy the binary

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).

cp /usr/local/nginx/sbin/nginx /etc/kube-lb/sbin/kube-lb

chmod +x /etc/kube-lb/sbin/kube-lb

- 4. Create the kube-lb configuration file

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).

vim /etc/kube-lb/conf/kube-lb.conf

user root;

worker_processes 1;

error_log /etc/kube-lb/logs/error.log warn;

events {

worker_connections 3000;

}

stream {

upstream kube-apiserver {

server 192.168.1.12:6443 max_fails=2 fail_timeout=3s;

server 192.168.1.13:6443 max_fails=2 fail_timeout=3s;

server 192.168.1.14:6443 max_fails=2 fail_timeout=3s;

}

server {

listen 0.0.0.0:6443;

proxy_connect_timeout 1s;

proxy_pass kube-apiserver;

}

}

- 5. Create the systemd unit file for kube-lb

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).

vim /etc/systemd/system/kube-lb.service

[Unit]

Description=kube-lb proxy

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=forking

ExecStartPre=/etc/kube-lb/sbin/kube-lb -c /etc/kube-lb/conf/kube-lb.conf -p /etc/kube-lb -t

ExecStart=/etc/kube-lb/sbin/kube-lb -c /etc/kube-lb/conf/kube-lb.conf -p /etc/kube-lb

ExecReload=/etc/kube-lb/sbin/kube-lb -c /etc/kube-lb/conf/kube-lb.conf -p /etc/kube-lb -s reload

PrivateTmp=true

Restart=always

RestartSec=15

StartLimitInterval=0

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

- 6. Start the kube-lb service and enable it at boot

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).

systemctl daemon-reload

systemctl restart kube-lb

systemctl enable kube-lb

7.2 Deploy keepalived

- 1. Compile keepalived

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).

wget https://www.keepalived.org/software/keepalived-2.2.7.tar.gz

tar axf keepalived-2.2.7.tar.gz

cd keepalived-2.2.7

./configure --prefix=/usr/local/keepalived

make && make install

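Note: keepalived is deployed as a standalone binary. The purpose of this step is to obtain the keepalived binary.

- 2. Create the directories

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).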

mkdir -p /etc/keepalived/conf

mkdir -p /etc/keepalived/sbin

- 3. Copy the binary

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).

cp /usr/local/keepalived/sbin/keepalived /etc/keepalived/sbin/keepalived

chmod +x /etc/keepalived/sbin/keepalived

- 4. Create the keepalived configuration file on k8s-lb-01

Note: run the following only on k8s-lb-01.

vim /etc/keepalived/conf/keepalived.conf

global_defs {

}

vrrp_track_process check-kube-lb {

process kube-lb

weight -60

delay 3

}

vrrp_instance VI-01 {

state MASTER

priority 120

unicast_src_ip 192.168.1.16

unicast_peer {

192.168.1.17

}

dont_track_primary

interface ens33

virtual_router_id 222

advert_int 3

track_process {

check-kube-lb

}

virtual_ipaddress {

192.168.1.110

}

}

- 5. Create the keepalived configuration file on k8s-lb-02

Note: run the following only on k8s-lb-02.

vim /etc/keepalived/conf/keepalived.conf

global_defs {

}

vrrp_track_process check-kube-lb {

process kube-lb

weight -60

delay 3

}

vrrp_instance VI-01 {

state BACKUP

priority 119

unicast_src_ip 192.168.1.17

unicast_peer {

192.168.1.16

}

dont_track_primary

interface ens33

virtual_router_id 222

advert_int 3

track_process {

check-kube-lb

}

virtual_ipaddress {

192.168.1.110

}

}
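The two configurations differ only in `state`, `priority`, and the unicast addresses. The priorities 120 and 119 combined with `weight -60` are what drive failover. Assuming keepalived lowers the instance priority by the tracked weight while the kube-lb process is absent, the arithmetic can be sketched as follows (the function `effective_priority` is purely illustrative and is not a keepalived command):

```shell
#!/usr/bin/env bash
# Illustrative arithmetic only: effective VRRP priority of a node, given
# its base priority, the (negative) track_process weight, and whether the
# tracked kube-lb process is running ("yes" or "no").
effective_priority() {
  local base="$1" weight="$2" process_up="$3"
  if [ "$process_up" = "yes" ]; then
    echo "$base"
  else
    echo $(( base + weight ))
  fi
}

# k8s-lb-01 with kube-lb stopped versus k8s-lb-02 with kube-lb running.
master_down="$(effective_priority 120 -60 no)"
backup_up="$(effective_priority 119 -60 yes)"
echo "k8s-lb-01: ${master_down}, k8s-lb-02: ${backup_up}"
```

Under that assumption the first node drops to 60, below the second node's 119, so the VIP 192.168.1.110 moves to k8s-lb-02 until kube-lb is running again on k8s-lb-01.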

- 6. Create the systemd unit file for keepalived

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).

vim /etc/systemd/system/keepalived.service

[Unit]

Description=VRRP High Availability Monitor

After=network-online.target syslog.target

Wants=network-online.target

Documentation=https://keepalived.org/manpage.html

[Service]

Type=forking

KillMode=process

ExecStart=/etc/keepalived/sbin/keepalived -D -f /etc/keepalived/conf/keepalived.conf

ExecReload=/bin/kill -HUP $MAINPID

[Install]

WantedBy=multi-user.target

- 7. Start the keepalived service and enable it at boot

Note: run the following on both load-balancer nodes (k8s-lb-01 and k8s-lb-02).

systemctl daemon-reload

systemctl restart keepalived

systemctl enable keepalived
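Once both services are running, a quick reachability check of the VIP can confirm that keepalived has assigned the address and that Nginx is listening on it. The sketch below uses bash's `/dev/tcp` redirection; the helper name `port_open` and the 2-second timeout are assumptions. At this stage it only shows that the TCP port accepts connections, because the API servers behind the proxy are not installed yet.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT can be
# opened within 2 seconds. Relies on bash's /dev/tcp pseudo-device.
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Example usage (from any node in 192.168.1.0/24):
#   port_open 192.168.1.110 6443 && echo "VIP reachable" || echo "VIP not reachable"
```

Stopping kube-lb on the node that currently holds the VIP and repeating the check is a simple way to observe the failover.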

VIII. Install the containerd Container Runtime

Note: run the following on every master and worker node.

1. Extract and install

tar axf cri-containerd-cni-1.7.2-linux-amd64.tar.gz -C /

2. Generate the default configuration file

mkdir -p /etc/containerd && containerd config default > /etc/containerd/config.toml

3. Configure the systemd cgroup driver

sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml

4. Override the sandbox pause image

sed -i 's#sandbox_image = "registry.k8s.io/pause:3.8"#sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8"#g' /etc/containerd/config.toml

5. Set the template file path that the CNI plugin uses when generating the network configuration file

sed -i 's#conf_template = ""#conf_template = "/etc/cni/net.d/cni-default.conf"#g' /etc/containerd/config.toml

6. Create the CNI network plugin configuration file

rm -f /etc/cni/net.d/10-containerd-net.conflist

cat <<EOF | sudo tee /etc/cni/net.d/cni-default.conf

{

"name": "mynet",

"cniVersion": "1.0.0",

"type": "bridge",

"bridge": "mynet0",

"isDefaultGateway": true,

"ipMasq": true,

"hairpinMode": true,

"ipam": {

"type": "host-local",

"subnet": "10.48.0.0/16"

}

}

EOF

Notes:

1. 10.48.0.0/16 is the cluster Pod CIDR.

2. The cniVersion in cni-default.conf must match the version defined in 10-containerd-net.conflist.

7. Restart the containerd service and enable it at boot

systemctl daemon-reload && systemctl restart containerd && systemctl enable containerd
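The `sed` commands in the earlier steps change nothing, and report no error, if the default config.toml does not contain exactly the text they search for (for example a different default pause tag). A small check such as the following sketch can catch that. The function name `check_containerd_config` is hypothetical, and the expected values are the ones set in this section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that a containerd config file contains the
# two settings changed in this section. Prints "ok" on success.
check_containerd_config() {
  local file="${1:-/etc/containerd/config.toml}"
  local image='registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.8'
  if ! grep -Eq '^[[:space:]]*SystemdCgroup = true' "$file"; then
    echo "SystemdCgroup is not set to true"
    return 1
  fi
  if ! grep -qF "sandbox_image = \"${image}\"" "$file"; then
    echo "sandbox_image was not overridden"
    return 1
  fi
  echo "ok"
}

# Example usage:
#   check_containerd_config /etc/containerd/config.toml
```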

After installation, confirm that every node in the k8s cluster reports the following runc and containerd versions:

root@k8s-master-12:~# containerd -v

containerd github.com/containerd/containerd v1.7.2 0cae528dd6cb557f7201036e9f43420650207b58

root@k8s-master-12:~# runc -v

runc version 1.1.7

commit: v1.1.7-0-g860f061b

spec: 1.0.2-dev

go: go1.20.4

libseccomp: 2.5.1

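Note: because of network restrictions, the upstream K8S images cannot be pulled, so the Aliyun registry address registry.cn-hangzhou.aliyuncs.com/google_containers is used instead. If you have an Aliyun account, you can also configure a registry mirror address for containerd to speed up image pulls.

IX. Install the Kubernetes Cluster

9.1 Install kube-apiserver

Note: run the following on all master nodes.

1. Prepare the kube-apiserver binary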

wget https://dl.k8s.io/v1.25.14/bin/linux/amd64/kube-apiserver

chmod +x kube-apiserver && mv kube-apiserver /usr/local/bin
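The same download pattern is used for every Kubernetes binary in this section, so the URL can be built by a small helper. The sketch below is illustrative: the function name `k8s_binary_url` is hypothetical, and the checksum step assumes that a `.sha256` file is published next to each binary at the same location.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the download URL for a Kubernetes binary,
# following the URL pattern used in this article.
k8s_binary_url() {
  local version="$1" component="$2" arch="${3:-amd64}"
  printf 'https://dl.k8s.io/%s/bin/linux/%s/%s\n' "$version" "$arch" "$component"
}

# Example usage (checksum file location is an assumption):
#   url="$(k8s_binary_url v1.25.14 kube-apiserver)"
#   wget "$url" "${url}.sha256"
#   echo "$(cat kube-apiserver.sha256)  kube-apiserver" | sha256sum --check
```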

2. Create the /etc/systemd/system/kube-apiserver.service unit file

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--allow-privileged=true \

--anonymous-auth=false \

--authorization-mode=Node,RBAC \

--enable-admission-plugins=NodeRestriction \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--etcd-cafile=/etc/kubernetes/pki/etcd-ca.pem \

--etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.pem \

--etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client-key.pem \

--etcd-servers=https://192.168.1.12:2379,https://192.168.1.13:2379,https://192.168.1.14:2379 \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client-key.pem \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-cluster-ip-range=10.96.0.0/16 \

--service-node-port-range=30000-36000 \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--requestheader-allowed-names="" \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--enable-aggregator-routing=true \

--token-auth-file=/etc/kubernetes/pki/token.csv \

--v=2

Restart=always

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

3. Start the kube-apiserver service

systemctl daemon-reload && systemctl enable kube-apiserver && systemctl start kube-apiserver

9.2 Install kube-controller-manager

Note: run the following on all master nodes.

1. Prepare the kube-controller-manager binary

wget https://dl.k8s.io/v1.25.14/bin/linux/amd64/kube-controller-manager

chmod +x kube-controller-manager && mv kube-controller-manager /usr/local/bin

2. Create the /etc/systemd/system/kube-controller-manager.service unit file

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--allocate-node-cidrs=true \

--authentication-kubeconfig=/etc/kubernetes/pki/controller-manager.kubeconfig \

--authorization-kubeconfig=/etc/kubernetes/pki/controller-manager.kubeconfig \

--kubeconfig=/etc/kubernetes/pki/controller-manager.kubeconfig \

--bind-address=0.0.0.0 \

--secure-port=10257 \

--cluster-cidr=10.48.0.0/16 \

--service-cluster-ip-range=10.96.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--cluster-signing-duration=876000h \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--leader-elect=true \

--node-cidr-mask-size=24 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--use-service-account-credentials=true \

--v=2

Restart=always

RestartSec=5

[Install]

WantedBy=multi-user.target

3. Start the kube-controller-manager service

systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl start kube-controller-manager

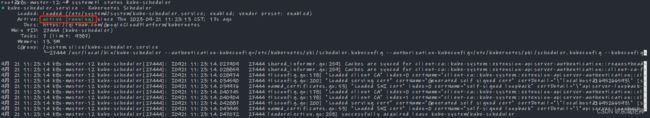

4、检查kube-controller-manager服务状态,如下图所示:

9.3 Install kube-scheduler

Note: run the following on all master nodes.

1. Prepare the kube-scheduler binary

wget https://dl.k8s.io/v1.25.14/bin/linux/amd64/kube-scheduler

chmod +x kube-scheduler && mv kube-scheduler /usr/local/bin

2. Create the /etc/systemd/system/kube-scheduler.service unit file

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--authentication-kubeconfig=/etc/kubernetes/pki/scheduler.kubeconfig \

--authorization-kubeconfig=/etc/kubernetes/pki/scheduler.kubeconfig \

--kubeconfig=/etc/kubernetes/pki/scheduler.kubeconfig \

--bind-address=0.0.0.0 \

--secure-port=10259 \

--leader-elect=true \

--v=2

Restart=always

RestartSec=5

[Install]

WantedBy=multi-user.target

3. Start the kube-scheduler service

systemctl daemon-reload && systemctl enable kube-scheduler && systemctl start kube-scheduler

9.4 Install kubectl

Note: so that all three master hosts can use the kubectl command later, run the following on all master nodes.

1. Prepare the kubectl binary

wget https://dl.k8s.io/v1.25.14/bin/linux/amd64/kubectl

chmod +x kubectl && mv kubectl /usr/local/bin

2. Set the KUBECONFIG environment variable so that kubectl uses the admin credentials to manage the k8s cluster

export KUBECONFIG=/etc/kubernetes/pki/admin.kubeconfig

echo "export KUBECONFIG=/etc/kubernetes/pki/admin.kubeconfig" >> /etc/profile

source /etc/profile

3. Enable kubectl shell completion

apt-get install bash-completion -y

kubectl completion bash | sudo tee /etc/bash_completion.d/kubectl > /dev/null

sudo chmod a+r /etc/bash_completion.d/kubectl

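9.5 Configure TLS Bootstrapping

Note: TLS bootstrapping only needs to be configured on one master node; k8s-master-12 is used here.

1. Authorize the creation of certificate signing requests (CSRs)

A bootstrapping node is authenticated as a member of the system:bootstrappers group. It needs to be authorized to create a certificate signing request (CSR) and to retrieve the certificate once it has been signed. Fortunately, Kubernetes ships a ClusterRole, system:node-bootstrapper, that contains precisely these permissions.

To do this, you only need to create a ClusterRoleBinding that binds the system:bootstrappers group to the cluster role system:node-bootstrapper.

# 1. Create the create-csrs-for-bootstrapping.yaml file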

vim create-csrs-for-bootstrapping.yaml

# Allow bootstrapping nodes to create CSRs
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: create-csrs-for-bootstrapping
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:node-bootstrapper
  apiGroup: rbac.authorization.k8s.io

# 2. Apply the YAML file

kubectl apply -f create-csrs-for-bootstrapping.yaml

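2. Authorize automatic approval of all CSRs from the system:bootstrappers group

To approve CSRs, you need to tell the controller manager that approving them is acceptable. This is done by granting RBAC permissions to the correct group.

To allow the kubelet to request and receive a new certificate, create a ClusterRoleBinding that binds the group of the bootstrapping node, system:bootstrappers, to the ClusterRole that grants it permission, system:certificates.k8s.io:certificatesigningrequests:nodeclient.

# 1. Create the auto-approve-csrs-for-group.yaml file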

vim auto-approve-csrs-for-group.yaml

# Approve all CSRs for the group "system:bootstrappers"
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io

# 2. Apply the YAML file

kubectl apply -f auto-approve-csrs-for-group.yaml

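3. Authorize approval of CSR renewal requests from the system:nodes group

To allow the kubelet to renew its own client certificate, create a ClusterRoleBinding that binds the group of fully functioning nodes, system:nodes, to the ClusterRole that grants it permission, system:certificates.k8s.io:certificatesigningrequests:selfnodeclient.

# 1. Create the auto-approve-renewals-for-nodes.yaml file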

vim auto-approve-renewals-for-nodes.yaml

# Approve CSR renewal requests for the group "system:nodes"
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: auto-approve-renewals-for-nodes
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io

# 2. Apply the YAML file

kubectl apply -f auto-approve-renewals-for-nodes.yaml
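The bindings above only take effect if the bootstrap token that the kubelet presents is mapped to the system:bootstrappers group. kube-apiserver is started with `--token-auth-file=/etc/kubernetes/pki/token.csv`, and each line of a static token file has the form token,user,uid,"group". The sketch below shows how such a line could be produced; the user name `kubelet-bootstrap` and the uid `10001` follow the example in the Kubernetes documentation and are assumptions about what the certificate tool generated here.

```shell
#!/usr/bin/env bash
# Generate a random 32-character hexadecimal token.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Print one line in the static token file format:
#   token,user,uid,"group"
# The user name and uid are illustrative values.
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:bootstrappers"\n' "$1"
}

# Example usage:
#   token_csv_line "$(gen_bootstrap_token)"
#   kubectl get csr    # after the kubelets start, list their signing requests
```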

9.6 Install kubelet

Note: run the following on all master nodes and all worker nodes.

1. Prepare the kubelet binary

wget https://dl.k8s.io/v1.25.14/bin/linux/amd64/kubelet

chmod +x kubelet && mv kubelet /usr/local/bin

2. Create the /var/lib/kubelet directory

# 1. Create the kubelet working directory

mkdir -p /var/lib/kubelet

# 2. Create the staticPodPath directory defined in kubelet-config.yaml

mkdir -p /etc/kubernetes/manifests

3. Create /var/lib/kubelet/kubelet-config.yaml

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s

cgroupDriver: systemd

cgroupsPerQOS: true

clusterDNS:

- 10.96.0.10

clusterDomain: cluster.local

containerLogMaxFiles: 5

containerLogMaxSize: 10Mi

contentType: application/vnd.kubernetes.protobuf

cpuCFSQuota: true

cpuManagerPolicy: none

cpuManagerReconcilePeriod: 10s

enableControllerAttachDetach: true

enableDebuggingHandlers: true

enforceNodeAllocatable:

- pods

eventBurst: 10

eventRecordQPS: 5

evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%

evictionPressureTransitionPeriod: 5m0s

failSwapOn: true

fileCheckFrequency: 20s

hairpinMode: promiscuous-bridge

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 20s

imageGCHighThresholdPercent: 85

imageGCLowThresholdPercent: 80

imageMinimumGCAge: 2m0s

iptablesDropBit: 15

iptablesMasqueradeBit: 14

kubeAPIBurst: 10

kubeAPIQPS: 5

makeIPTablesUtilChains: true

maxOpenFiles: 1000000

maxPods: 110

nodeStatusUpdateFrequency: 10s

oomScoreAdj: -999

podPidsLimit: -1

registryBurst: 10

registryPullQPS: 5

resolvConf: /run/systemd/resolve/resolv.conf

rotateCertificates: true

runtimeRequestTimeout: 15m0s

serializeImagePulls: true

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 4h0m0s

syncFrequency: 1m0s

volumeStatsAggPeriod: 1m0s

4. Create the /etc/systemd/system/kubelet.service unit file

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/pki/kubelet-bootstrap.kubeconfig \

--kubeconfig=/var/lib/kubelet/kubelet.kubeconfig \

--config=/var/lib/kubelet/kubelet-config.yaml \

--container-runtime=remote \

--container-runtime-endpoint=unix:///run/containerd/containerd.sock

[Install]

WantedBy=multi-user.target

5. Start the kubelet service

systemctl daemon-reload && systemctl enable kubelet && systemctl start kubelet

6. Check the kubelet service status, for example with `systemctl status kubelet`.

9.7 Install kube-proxy

1. Prepare the kube-proxy binary

wget https://dl.k8s.io/v1.25.14/bin/linux/amd64/kube-proxy

chmod +x kube-proxy && mv kube-proxy /usr/local/bin

2. Create the /var/lib/kube-proxy directory

# Create the kube-proxy working directory

mkdir -p /var/lib/kube-proxy

3. Create /var/lib/kube-proxy/kube-proxy-config.yaml

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/pki/kube-proxy.kubeconfig
  qps: 5

clusterCIDR: 10.48.0.0/16

configSyncPeriod: 15m0s

conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s

enableProfiling: false

healthzBindAddress: 0.0.0.0:10256

hostnameOverride: ""

iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s

kind: KubeProxyConfiguration

metricsBindAddress: 127.0.0.1:10249

mode: "ipvs"

nodePortAddresses: null

oomScoreAdj: -999

portRange: ""

udpIdleTimeout: 250ms

4. Create the /etc/systemd/system/kube-proxy.service unit file

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-proxy \

--config=/var/lib/kube-proxy/kube-proxy-config.yaml \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

5. Start the kube-proxy service

systemctl daemon-reload && systemctl enable kube-proxy && systemctl start kube-proxy

6、检查kube-proxy服务状态,如下图所示:
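
Beyond `systemctl status kube-proxy`, it can be useful to confirm that the running process really uses ipvs. kube-proxy reports its active mode at `/proxyMode` on the metrics address, which is 127.0.0.1:10249 in the configuration above. A minimal sketch, with a hypothetical helper name:

```bash
#!/bin/bash
# Hypothetical helper: compare the mode reported by the running kube-proxy
# with the expected one (default "ipvs", as set in kube-proxy-config.yaml).
check_proxy_mode() {
  local expected="${1:-ipvs}" actual
  # 127.0.0.1:10249 is the metricsBindAddress from kube-proxy-config.yaml.
  actual="$(curl -s --max-time 5 http://127.0.0.1:10249/proxyMode || true)"
  echo "kube-proxy mode: ${actual:-unavailable}"
  [ "$actual" = "$expected" ]
}

# Example: check_proxy_mode ipvs
```

If the `ipvsadm` tool is installed, `ipvsadm -Ln` additionally lists the virtual servers that kube-proxy has programmed.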

9.8 Download images

Note: perform the following on all master nodes and all worker nodes.

1. Pull the images

#!/bin/bash

pause_version=3.8

coredns_version=v1.9.3

calico_version=v3.26.1

registry_address=registry.cn-hangzhou.aliyuncs.com/google_containers

ctr -n k8s.io image pull ${registry_address}/pause:${pause_version}

ctr -n k8s.io image pull ${registry_address}/coredns:${coredns_version}

ctr -n k8s.io image pull docker.io/calico/cni:${calico_version}

ctr -n k8s.io image pull docker.io/calico/node:${calico_version}

ctr -n k8s.io image pull docker.io/calico/kube-controllers:${calico_version}

2. Export the images

#!/bin/bash

pause_version=3.8

coredns_version=v1.9.3

calico_version=v3.26.1

registry_address=registry.cn-hangzhou.aliyuncs.com/google_containers

ctr -n k8s.io image export pause-${pause_version}.tar.gz ${registry_address}/pause:${pause_version}

ctr -n k8s.io image export coredns-${coredns_version}.tar.gz ${registry_address}/coredns:${coredns_version}

ctr -n k8s.io image export calico-cni-${calico_version}.tar.gz docker.io/calico/cni:${calico_version}

ctr -n k8s.io image export calico-node-${calico_version}.tar.gz docker.io/calico/node:${calico_version}

ctr -n k8s.io image export calico-kube-controllers-${calico_version}.tar.gz docker.io/calico/kube-controllers:${calico_version}

3. Import the images

#!/bin/bash

pause_version=3.8

coredns_version=v1.9.3

calico_version=v3.26.1

ctr -n k8s.io image import pause-${pause_version}.tar.gz

ctr -n k8s.io image import coredns-${coredns_version}.tar.gz

ctr -n k8s.io image import calico-cni-${calico_version}.tar.gz

ctr -n k8s.io image import calico-node-${calico_version}.tar.gz

ctr -n k8s.io image import calico-kube-controllers-${calico_version}.tar.gz
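
After pulling or importing, each node should hold all five images in containerd's `k8s.io` namespace. The sketch below lists any that are missing; the helper name is hypothetical, and the references are the ones used by the scripts above.

```bash
#!/bin/bash
# Hypothetical helper: print every expected image reference that is missing
# from containerd's k8s.io namespace on this node.
missing_images() {
  local registry="registry.cn-hangzhou.aliyuncs.com/google_containers"
  local present ref
  # `-q` prints image references only, one per line.
  present="$(ctr -n k8s.io image ls -q)"
  for ref in \
    "${registry}/pause:3.8" \
    "${registry}/coredns:v1.9.3" \
    "docker.io/calico/cni:v3.26.1" \
    "docker.io/calico/node:v3.26.1" \
    "docker.io/calico/kube-controllers:v3.26.1"; do
    # Whole-line, fixed-string match against the list of present images.
    printf '%s\n' "$present" | grep -qxF "$ref" || echo "$ref"
  done
}

# Example: missing_images   # no output means nothing is missing
```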

9.9 Install coredns

Note: perform the following on any one master node; this guide uses k8s-master-12.

1. Download the coredns.yaml.base template file

cp coredns.yaml.base coredns.yaml

2. Modify the coredns.yaml file

sed -i 's#__DNS__SERVER__#10.96.0.10#g' coredns.yaml

sed -i 's#__DNS__MEMORY__LIMIT__#256Mi#g' coredns.yaml

sed -i 's#__DNS__DOMAIN__#cluster.local#g' coredns.yaml

sed -i 's#registry.k8s.io/coredns/coredns:v1.9.3#registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.9.3#g' coredns.yaml

3. Apply the coredns.yaml file with kubectl

kubectl apply -f coredns.yaml

Note: when a CoreDNS Pod deployed in Kubernetes detects a forwarding loop, it enters "CrashLoopBackOff"; resolve the problem using the method described in this link.
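
One common cause of such a loop on Ubuntu, described in the CoreDNS loop plugin documentation, is that /etc/resolv.conf points at the local systemd-resolved stub (127.0.0.53), so CoreDNS ends up forwarding queries back to itself. The sketch below only detects that situation; the helper name is hypothetical, and whether this is the cause in a given cluster is an assumption to verify.

```bash
#!/bin/bash
# Hypothetical helper: succeed (and print the entries) if a resolv.conf-style
# file lists a loopback nameserver such as 127.0.0.53.
has_loopback_nameserver() {
  local resolv_file="${1:-/etc/resolv.conf}"
  awk '$1 == "nameserver" && ($2 ~ /^127\./ || $2 == "::1") { print $2; hit = 1 }
       END { exit !hit }' "$resolv_file"
}

# Example: has_loopback_nameserver /etc/resolv.conf && echo "loop risk"
```

If it reports a loopback entry, the usual remedy is to point the kubelet `resolvConf` setting at the real upstream file (on systemd-resolved hosts, /run/systemd/resolve/resolv.conf) and restart kubelet and the CoreDNS Pods.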

9.10 Install calico

Note: perform the following on any one master node; this guide uses k8s-master-12.

1. Download the calico.yaml file

2. Modify the calico.yaml file to add the IP_AUTODETECTION_METHOD field

            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            - name: IP_AUTODETECTION_METHOD   # added
              value: can-reach=192.168.1.12   # added
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Auto-detect the BGP IP address.
            - name: IP
              value: "autodetect"
            # Enable IPIP
            - name: CALICO_IPV4POOL_IPIP
              value: "Always"
            # Enable or Disable VXLAN on the default IP pool.
            - name: CALICO_IPV4POOL_VXLAN
              value: "Never"
            # Enable or Disable VXLAN on the default IPv6 IP pool.
            - name: CALICO_IPV6POOL_VXLAN
              value: "Never"

Summary: the official YAML file does not configure the IP detection policy (IP_AUTODETECTION_METHOD), so it defaults to first-found. This can cause an IP on a faulty network to be registered as the node IP, which breaks the node-to-node mesh. Switching to the can-reach or interface policy avoids this: with can-reach, calico-node tries to connect to the IP of a node that is Ready and selects the correct local IP based on that.
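
If calico is already running, the same setting can be changed on the live DaemonSet instead of editing the file, using `kubectl set env`. The sketch below assumes the manifest install, where `calico-node` lives in the `kube-system` namespace; the helper name and the interface name `eth0` are hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: switch calico-node to a different autodetection method,
# e.g. "interface=eth0" or "can-reach=192.168.1.12".
set_calico_autodetect() {
  local method="$1"
  kubectl -n kube-system set env daemonset/calico-node \
    "IP_AUTODETECTION_METHOD=${method}"
}

# Example: set_calico_autodetect "interface=eth0"
```

The interface policy is the simpler choice when every node uses the same NIC name; can-reach is handier when names differ between hosts.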

3. Modify the calico.yaml file to set the CALICO_IPV4POOL_CIDR field

            # The default IPv4 pool to create on startup if none exists. Pod IPs will be
            # chosen from this range. Changing this value after installation will have
            # no effect. This should fall within `--cluster-cidr`.
            - name: CALICO_IPV4POOL_CIDR
              value: "10.48.0.0/16"
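
Since the pool has to agree with the cluster CIDR configured elsewhere (for example `clusterCIDR` in kube-proxy-config.yaml), a quick consistency check can catch typos before applying. This is a minimal sketch with hypothetical helper names; it tests for equality, which is the simplest way to satisfy "fall within", and it assumes the CALICO_IPV4POOL_CIDR entry has been uncommented.

```bash
#!/bin/bash
# Hypothetical helper: print the value that follows CALICO_IPV4POOL_CIDR
# in an (uncommented) calico.yaml env entry.
calico_pool_cidr() {
  awk '/^[[:space:]]*- name: CALICO_IPV4POOL_CIDR/ { want = 1; next }
       want && /^[[:space:]]*value:/ { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# Hypothetical helper: print clusterCIDR from kube-proxy-config.yaml.
kube_proxy_cluster_cidr() {
  awk '$1 == "clusterCIDR:" { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# Succeeds only when both files name the same, non-empty CIDR.
cidrs_match() {
  [ -n "$(calico_pool_cidr "$1")" ] &&
    [ "$(calico_pool_cidr "$1")" = "$(kube_proxy_cluster_cidr "$2")" ]
}

# Example: cidrs_match calico.yaml /var/lib/kube-proxy/kube-proxy-config.yaml
```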

4. Apply the calico.yaml file

root@k8s-master-12:~# kubectl apply -f calico.yaml

9.11 Set node taints and labels

Note: perform the following on any one master node; this guide uses k8s-master-12.

1. Set taints and labels on all master nodes

# k8s-master-12 node

kubectl label node k8s-master-12 node-role.kubernetes.io/master=""

kubectl label node k8s-master-12 node-role.kubernetes.io/control-plane=""

kubectl taint nodes k8s-master-12 node-role.kubernetes.io/master=:NoSchedule

kubectl taint nodes k8s-master-12 node-role.kubernetes.io/control-plane=:NoSchedule

# k8s-master-13 node

kubectl label node k8s-master-13 node-role.kubernetes.io/master=""

kubectl label node k8s-master-13 node-role.kubernetes.io/control-plane=""

kubectl taint nodes k8s-master-13 node-role.kubernetes.io/master=:NoSchedule

kubectl taint nodes k8s-master-13 node-role.kubernetes.io/control-plane=:NoSchedule

# k8s-master-14 node

kubectl label node k8s-master-14 node-role.kubernetes.io/master=""

kubectl label node k8s-master-14 node-role.kubernetes.io/control-plane=""

kubectl taint nodes k8s-master-14 node-role.kubernetes.io/master=:NoSchedule

kubectl taint nodes k8s-master-14 node-role.kubernetes.io/control-plane=:NoSchedule
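
The twelve commands above follow one pattern, so they could also be written as a loop. A minimal sketch, with a hypothetical helper name; `--overwrite` is added so that re-running it does not fail on labels or taints that already exist.

```bash
#!/bin/bash
# Hypothetical helper: apply the master/control-plane labels and NoSchedule
# taints to every node name passed as an argument.
mark_control_plane() {
  local node role
  for node in "$@"; do
    for role in master control-plane; do
      kubectl label node "$node" "node-role.kubernetes.io/${role}=" --overwrite
      kubectl taint nodes "$node" "node-role.kubernetes.io/${role}=:NoSchedule" --overwrite
    done
  done
}

# Example: mark_control_plane k8s-master-12 k8s-master-13 k8s-master-14
```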

2. Check the taints and labels on all master nodes

root@k8s-master-12:~# kubectl describe nodes k8s-master-12 | grep -A 1 -i taints

Taints: node-role.kubernetes.io/control-plane:NoSchedule

node-role.kubernetes.io/master:NoSchedule

root@k8s-master-12:~# kubectl describe nodes k8s-master-13 | grep -A 1 -i taints

Taints: node-role.kubernetes.io/control-plane:NoSchedule

node-role.kubernetes.io/master:NoSchedule

root@k8s-master-12:~# kubectl describe nodes k8s-master-14 | grep -A 1 -i taints

Taints: node-role.kubernetes.io/control-plane:NoSchedule

node-role.kubernetes.io/master:NoSchedule

3. Set labels on all worker nodes

kubectl label node k8s-worker-15 node-role.kubernetes.io/worker=""

10. K8S cluster testing

10.1 Check cluster status

Note: perform the following on any one master node; this guide uses k8s-master-12.

1. Check the cluster node status (required)

kubectl get nodes -o wide

kubectl get cs

kubectl get pods -A -o wide

kubectl get svc -A -o wide
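
When there are many nodes, it can help to print only the ones that need attention. The sketch below filters the output of `kubectl get nodes`; the helper name is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: print the names of nodes whose STATUS column is not
# exactly "Ready" (e.g. NotReady or Ready,SchedulingDisabled).
not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1 }'
}

# Example: not_ready_nodes   # no output means every node is Ready
```

Note that `kubectl get cs` (ComponentStatus) has been deprecated since Kubernetes v1.19, so it prints a deprecation warning on this version.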

10.1、DNS测试

说明:以下操作在任意一个master节点上执行,这里默认在k8s-master-12节点执行。

kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh

nslookup kubernetes.default

ping www.baidu.com

说明:如果部署完之后发现无法解析kubernetes.default和ping通百度网站,可以尝试重启K8S集群节点所有master节点主机。

10.2、高可用测试

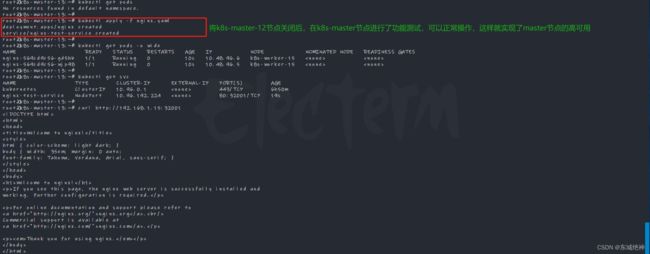

1、关闭k8s-master12节点,通过k8s-master-13节点查看集群状态,如下图所示:

2、关闭kube-lb-01点前,可发现vip在k8s-lb-01节点主机上,如下图所示:

3、关闭kube-lb-01点后,发现vip进行了主备切换,在k8s-lb-02节点主机上,如下图所示:

10.3、功能性测试

说明:以下操作在任意一个master节点上执行,这里默认在k8s-master-12节点执行。

1、部署一个简单的Nginx服务(必须)

vim nginx.yaml

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          protocol: TCP
          containerPort: 80
        resources:
          limits:
            cpu: "1.0"
            memory: 512Mi
          requests:
            cpu: "0.5"
            memory: 128Mi
---
apiVersion: v1
kind: Service
metadata:
  annotations:
  name: nginx-test-service
spec:
  ports:
  - port: 80
    targetPort: 80
    nodePort: 32001
    protocol: TCP
  selector:
    app: nginx
  sessionAffinity: None
  type: NodePort
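
To finish the test, the manifest can be applied and the NodePort requested from outside the Pods. The sketch below is one way to do that; the helper name is hypothetical, and the default node address 192.168.1.12 is an assumption, so substitute the IP of any node in the cluster.

```bash
#!/bin/bash
# Hypothetical helper: apply nginx.yaml, wait for the Deployment, then request
# the NodePort. The node IP argument is an assumption; use any node's address.
test_nginx_nodeport() {
  local node_ip="${1:-192.168.1.12}" code
  kubectl apply -f nginx.yaml || return 1
  kubectl rollout status deployment/nginx --timeout=120s || return 1
  # -o /dev/null -w '%{http_code}' prints only the HTTP status code.
  code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://${node_ip}:32001/" || true)"
  echo "HTTP ${code}"
  [ "$code" = "200" ]
}

# Example: test_nginx_nodeport 192.168.1.12
```

A 200 response here exercises the Deployment, the Service, kube-proxy, and the calico data path together.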
