How to Use TensorBoard and Open the Files It Generates

I. Using TensorBoard

Commonly used SummaryWriter methods (torch.utils.tensorboard)

1. writer.add_scalar()

```python
def add_scalar(
    self,
    tag,
    scalar_value,
    global_step=None,
    walltime=None,
    new_style=False,
    double_precision=False,
):
```

Args:

- tag (string): Data identifier
- scalar_value (float or string/blobname): Value to save
- global_step (int): Global step value to record
- walltime (float): Optional override default walltime (time.time()) with seconds after epoch of event
- new_style (boolean): Whether to use new style (tensor field) or old style (simple_value field). New style could lead to faster data loading.

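In practice:

- tag: the title of the chart. It is a str, so remember the quotes.
- scalar_value: the value to record. It is plotted on the y axis.
- global_step: the current global step. It is plotted on the x axis.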

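```python
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter()  # event files go to ./runs/ by default

for i in range(100):
    # tag 'y=2x', y value i * 2, x value (global_step) i
    writer.add_scalar('y=2x', i * 2, i)

writer.close()  # flush and close the event file
```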
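The loop above writes one point per step. You can read the event file back with TensorBoard's `EventAccumulator` to confirm the mapping: `tag` becomes the chart name, `global_step` the x value and `scalar_value` the y value. This is a minimal sketch that assumes the `tensorboard` package is installed alongside PyTorch. The temporary log directory and the tags `loss/train` and `loss/val` are illustrative only, and the curves are placeholder values. Tags that share a prefix before `/` are grouped into one section in the TensorBoard UI.

```python
import math
import tempfile

from tensorboard.backend.event_processing.event_accumulator import EventAccumulator
from torch.utils.tensorboard import SummaryWriter

log_dir = tempfile.mkdtemp()  # illustrative; real projects often use "logs" or the default "runs/"

writer = SummaryWriter(log_dir=log_dir)
for step in range(100):
    # tag -> chart title, value -> y axis, step -> x axis
    writer.add_scalar("loss/train", math.exp(-step / 20), step)  # placeholder curve
    writer.add_scalar("loss/val", math.exp(-step / 25), step)    # placeholder curve
writer.close()  # flush buffered events to disk before reading them

# Read the event file back to see what add_scalar() stored
acc = EventAccumulator(log_dir)
acc.Reload()
print(acc.Tags()["scalars"])       # the two tags written above
train = acc.Scalars("loss/train")  # list of ScalarEvent(wall_time, step, value)
print(train[0].step, train[0].value)
```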

2. writer.add_image()

Purpose: Add image data to summary.

```python
def add_image(
    self, tag, img_tensor, global_step=None, walltime=None, dataformats="CHW"
):
```

Args:

- tag (string): Data identifier
- img_tensor (torch.Tensor, numpy.array, or string/blobname): Image data (note the accepted data types)
- global_step (int): Global step value to record
- walltime (float): Optional override default walltime (time.time()) seconds after epoch of event
- dataformats (string): Image data format specification of the form CHW, HWC, HW, WH, etc.

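By default dataformats is "CHW", so img_tensor is expected to have shape (3, H, W). C is the number of channels, H the height and W the width. For any other layout, pass the matching dataformats string.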

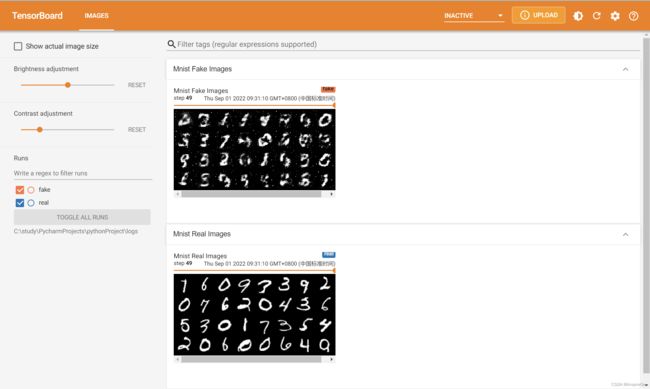
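Here is a minimal sketch of passing images in different layouts to `add_image()`. Random data stands in for real pictures, and the tag names and temporary log directory are hypothetical. The point is that `dataformats` must describe the actual layout of `img_tensor`:

- The default `"CHW"` suits PyTorch tensors.
- Arrays loaded through PIL/NumPy are usually `HWC`.

Float data is scaled by 255, so it should lie in [0, 1]. uint8 data is used as is.

```python
import os
import tempfile

import numpy as np
import torch
from torch.utils.tensorboard import SummaryWriter

log_dir = tempfile.mkdtemp()  # illustrative log directory
writer = SummaryWriter(log_dir=log_dir)

# Float tensor in the default CHW layout: 3 channels, 64x64 pixels, values in [0, 1]
img_chw = torch.rand(3, 64, 64)
writer.add_image("random_chw", img_chw, global_step=0)

# NumPy images (e.g. np.array(PIL.Image.open(...))) are typically HWC uint8
img_hwc = (np.random.rand(64, 64, 3) * 255).astype(np.uint8)
writer.add_image("random_hwc", img_hwc, global_step=0, dataformats="HWC")

# A single-channel HW array is shown as grayscale
img_hw = np.random.rand(64, 64)
writer.add_image("random_hw", img_hw, global_step=0, dataformats="HW")

writer.close()

# One SummaryWriter writes one event file into log_dir
event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(event_files)
```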

3. writer.add_images()

Purpose: Add batched image data to summary.

```python
def add_images(
    self, tag, img_tensor, global_step=None, walltime=None, dataformats="NCHW"
):
```

Args:

- tag (string): Data identifier
- img_tensor (torch.Tensor, numpy.array, or string/blobname): Image data
- global_step (int): Global step value to record
- walltime (float): Optional override default walltime (time.time()) seconds after epoch of event
- dataformats (string): Image data format specification of the form NCHW, NHWC, CHW, HWC, HW, WH, etc.

By default dataformats is "NCHW", so img_tensor is expected to have shape (N, 3, H, W). N is the batch size, C the number of channels, H the height and W the width.

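If img_tensor has a channel count other than 3, add_images() raises an error. A common example is the 6-channel output of a convolution layer. Single-channel batches are an exception: they are accepted and shown in grayscale. For other channel counts, first reshape to 3 channels with torch.reshape(). This moves the extra channels into the batch dimension, so N grows accordingly:

```python
# H and W are the height and width of the feature maps; -1 lets PyTorch infer the new batch size
output = torch.reshape(output, (-1, 3, H, W))
```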
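Here is the reshape trick sketched end to end, under stated assumptions. A hypothetical `nn.Conv2d(3, 6, 3)` layer is applied to a random batch of four 32x32 images. Its 6-channel output cannot be displayed directly by `add_images()`. Reshaping to `(-1, 3, 30, 30)` splits each 6-channel map into two 3-channel images, so the batch grows from 4 to 8. The resulting colors are only a rough visualization of the feature maps, not real RGB images.

```python
import tempfile

import torch
from torch import nn
from torch.utils.tensorboard import SummaryWriter

log_dir = tempfile.mkdtemp()  # illustrative log directory
writer = SummaryWriter(log_dir=log_dir)

conv = nn.Conv2d(in_channels=3, out_channels=6, kernel_size=3)  # hypothetical layer
imgs = torch.rand(4, 3, 32, 32)  # fake NCHW batch: N=4, C=3, H=W=32

with torch.no_grad():
    output = conv(imgs)  # shape (4, 6, 30, 30): 6 channels cannot be shown directly

writer.add_images("input", imgs, global_step=0)  # 3 channels: accepted as is

# Fold the 6 channels into two 3-channel images each: batch size 4 -> 8
output = torch.reshape(output, (-1, 3, 30, 30))
writer.add_images("output", output, global_step=0)
writer.close()

print(output.shape)
```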

4. writer.add_graph()

```python
add_graph(model, input_to_model=None, verbose=False, use_strict_trace=True)
```

Parameters:

- model (torch.nn.Module): Model to draw.
- input_to_model (torch.Tensor or list of torch.Tensor): A variable or a tuple of variables to be fed.
- verbose (bool): Whether to print graph structure in console.
- use_strict_trace (bool): Whether to pass keyword argument strict to torch.jit.trace. Pass False when you want the tracer to record your mutable container types (list, dict).
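Here is a minimal sketch of `add_graph()` with a small hypothetical model; `TinyNet` is not from the original. `add_graph()` traces the model with `torch.jit.trace`, so it needs an example input of the right shape. Only the shape of the random input matters here. The resulting graph appears under the Graphs tab.

```python
import os
import tempfile

import torch
from torch import nn
from torch.utils.tensorboard import SummaryWriter


class TinyNet(nn.Module):
    """Hypothetical model used only to illustrate add_graph()."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 6, kernel_size=3)  # (N, 3, 32, 32) -> (N, 6, 30, 30)
        self.fc = nn.Linear(6 * 30 * 30, 10)

    def forward(self, x):
        x = torch.relu(self.conv(x))
        return self.fc(torch.flatten(x, 1))


model = TinyNet()
example_input = torch.rand(1, 3, 32, 32)  # traced through the model by add_graph

log_dir = tempfile.mkdtemp()  # illustrative log directory
writer = SummaryWriter(log_dir=log_dir)
writer.add_graph(model, example_input)
writer.close()

out = model(example_input)
event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(out.shape, event_files)
```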

II. Opening the files generated by TensorBoard

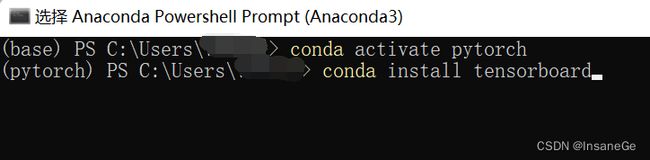

1. Install TensorBoard. I installed it in a conda environment I created myself, named pytorch.

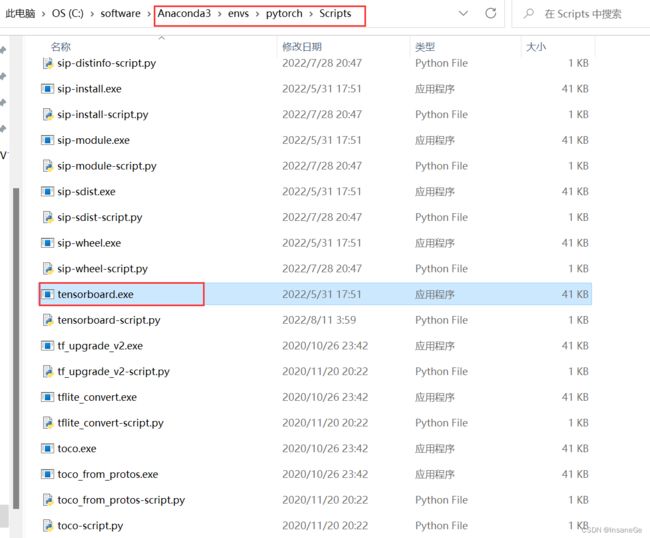

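After installation, tensorboard.exe is in your Anaconda environment's directory, typically its Scripts folder on Windows. Add that directory to the Path environment variable.

Run tensorboard -h in cmd. If you see output like the following, the directory has been added correctly.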

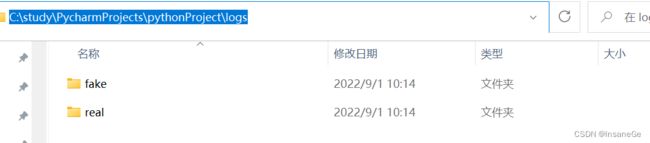

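2. Train the model. Training produces a log (event) file that holds the data to visualize. Copy the path of the directory that contains this file, because --logdir expects a directory.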

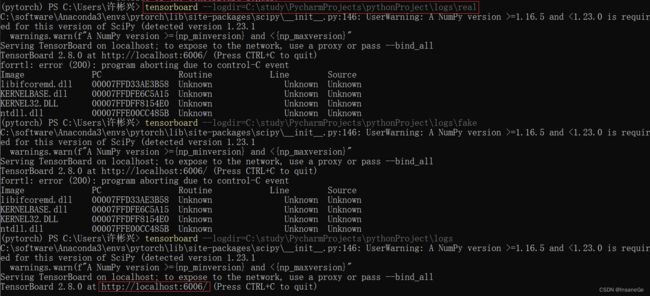
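Where the log file ends up depends on the `log_dir` passed to `SummaryWriter`. The writer's `get_logdir()` returns the directory that should be given to `--logdir`. The event file inside it has a name starting with `events.out.tfevents`. This is a hedged sketch: the `logs` directory name is an assumption, placed in a temporary folder here.

```python
import os
import tempfile

from torch.utils.tensorboard import SummaryWriter

# Hypothetical project folder: SummaryWriter("logs") would write to ./logs,
# and SummaryWriter() with no argument writes to runs/<date>_<hostname>
log_dir = os.path.join(tempfile.mkdtemp(), "logs")

writer = SummaryWriter(log_dir=log_dir)  # the directory is created if it does not exist
writer.add_scalar("demo", 1.0, 0)
writer.close()

# --logdir takes this directory, not the event file inside it
print(writer.get_logdir())
event_files = [f for f in os.listdir(log_dir) if f.startswith("events.out.tfevents")]
print(event_files)  # names look like events.out.tfevents.<timestamp>.<hostname>...
print(f"tensorboard --logdir={log_dir}")
```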

3. In cmd or the PyCharm Terminal, run tensorboard --logdir=<directory containing the log file>. The output looks something like: TensorBoard 2.8.0 at http://localhost:6006/ (Press CTRL+C to quit)

To open several instances at once, give each a different port, e.g. tensorboard --logdir={log directory} --port=6007. Each instance is then served at a different URL.
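Another option is a single TensorBoard instance that compares several experiments. Each subdirectory under `--logdir` that contains event files is shown as a separate run. Here is a minimal sketch with hypothetical run names and placeholder loss values:

```python
import os
import tempfile

from torch.utils.tensorboard import SummaryWriter

root = tempfile.mkdtemp()  # hypothetical parent directory to pass to --logdir

for run_name, lr in [("exp_lr0.1", 0.1), ("exp_lr0.01", 0.01)]:
    # One subdirectory per run; both curves appear in the same "loss" chart
    writer = SummaryWriter(log_dir=os.path.join(root, run_name))
    for step in range(10):
        writer.add_scalar("loss", 1.0 / (1 + lr * step), step)  # placeholder values
    writer.close()

runs = sorted(os.listdir(root))
print(runs)
print(f"tensorboard --logdir={root}")
```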

4. Keep the cmd window open and enter http://localhost:6006/ in your browser to open TensorBoard.

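III. Viewing TensorBoard running on a server in a local browser

If the code and project are on a cloud server and you want to view TensorBoard in your local browser, follow these steps:

1. Forward TensorBoard's port 6006 on the server to local port 16006 by running this in a local terminal:

```bash
ssh -L 16006:127.0.0.1:6006 username@server_ip -p 22
```

Here 22 is the server's SSH port.

2. Enter the server login password.

3. On the server, activate the Python environment and run: tensorboard --logdir={TensorBoard log directory}

4. In your local browser, open http://localhost:16006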
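If http://localhost:16006 does not load, you can check from Python whether the forwarded port actually serves a web page. This is a minimal sketch using only the standard library, and `is_reachable` is a hypothetical helper. A successful response only shows that some HTTP server answers through the tunnel, not that it is TensorBoard. The check uses HTTP rather than a bare TCP connection on purpose. ssh keeps listening on 16006 locally even when nothing is running behind it on the server.

```python
import http.client
import urllib.request

# Ignore any HTTP proxy set in the environment: the target is a local port
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def is_reachable(url: str = "http://localhost:16006", timeout: float = 3.0) -> bool:
    """Return True if url answers an HTTP GET with status 200 within timeout seconds."""
    try:
        with _opener.open(url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # connection refused, reset by ssh when the remote side is down, timeout, HTTP error, ...
        return False


if __name__ == "__main__":
    if is_reachable():
        print("The tunnel works: open http://localhost:16006 in the browser")
    else:
        print("No page on localhost:16006: check the ssh session and the server-side tensorboard")
```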