Chewing Through K8s: Quick Start and a Single-Node (Single-Master) K8s Deployment


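- I: Kubernetes Overview
  - 1.1: What Is Kubernetes?
  - 1.2: Kubernetes Features
  - 1.3: Kubernetes Cluster Architecture and Components
    - 1.3.1: Master Components
    - 1.3.2: Node Components
    - 1.3.3: etcd Cluster Overview: in this deployment the etcd cluster is distributed across three nodes
- II: Kubernetes Core Concepts
  - 2.1: Pod
  - 2.2: Controllers
  - 2.3: Service
  - 2.4: Kubernetes Platform Deployment Methods
- III: Single-Node Deployment
  - 3.1: Deploying etcd
  - 3.2: Deploying Docker on the Nodes
  - 3.3: Flannel Container Network Theory
    - 3.3.1: Flannel Theory
  - 3.4: Flannel Network Configuration
  - 3.5: Master Components: Theory
  - 3.6: Deploying the Master Components
  - 3.7: Deploying node01
  - 3.8: Deploying node02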

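I: Kubernetes Overview

1.1: What Is Kubernetes?

- Kubernetes is a container cluster management system that Google open-sourced in 2014; it is abbreviated K8s

- K8s is used to deploy, scale and manage containerized applications

- K8s provides container orchestration, resource scheduling, elastic scaling, deployment management, service discovery and other features

- The goal of Kubernetes is to make deploying containerized applications simple and efficient

- Official website: https://kubernetes.io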

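1.2: Kubernetes Features

- Self-healing

  - When a node fails, restarts the failed containers and replaces or reschedules them to keep the desired number of replicas; kills containers that fail their health checks and does not send client requests to them until they are ready, so the online service is not interrupted

- Elastic scaling

  - Scales application instances up and down quickly, by command, through the UI, or automatically based on CPU usage, keeping the application highly available at peak concurrency and reclaiming resources during quiet periods so the service runs at minimal cost

- Automated rollout and rollback

  - K8s updates applications with a rolling-update strategy, replacing Pods one at a time instead of deleting them all at once; if a problem occurs during the update, the change can be rolled back so the upgrade does not disrupt the service

- Service discovery and load balancing

  - K8s gives a group of containers a single access point (an internal IP address and a DNS name) and load-balances across all the associated containers, so users do not have to deal with individual container IPs

- Secret and configuration management

  - Manages secret data and application configuration without exposing sensitive data in images, which improves its security; commonly used configuration can also be stored in K8s for applications to use

- Storage orchestration

  - Mounts external storage systems, whether local storage, public cloud (such as AWS) or network storage (such as NFS, GlusterFS, Ceph), as part of the cluster's resources, which makes storage far more flexible to use

- Batch processing

  - Provides one-off and scheduled tasks for batch data processing and analysis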

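1.3: Kubernetes Cluster Architecture and Components

1.3.1: Master Components

- kube-apiserver

The Kubernetes API: the single entry point to the cluster and the coordinator of all components. It exposes its services as a RESTful API; every create, read, update, delete and watch operation on object resources is handled by the APIServer and then persisted to etcd.

- kube-controller-manager

Handles the routine background tasks in the cluster. Each resource type has a corresponding controller, and the ControllerManager is responsible for managing these controllers.

- kube-scheduler

Uses its scheduling algorithm to select a Node for each newly created Pod. The scheduler itself can be deployed anywhere: on the same node as the other master components or on a different one.

- etcd

A distributed key-value store that holds the cluster state, such as Pod and Service object data.

1.3.2: Node Components

- kubelet

kubelet is the master's agent on each Node. It manages the lifecycle of the containers running on that machine: creating containers, mounting volumes for Pods, downloading Secrets, reporting container and node status, and so on. kubelet turns each Pod into a set of containers.

- kube-proxy

Implements the Pod network proxy on each node, maintaining the network rules and doing layer-4 load balancing.

- docker or rkt (Rocket)

The container engine that runs the containers.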

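1.3.3: etcd Cluster Overview: in this deployment the etcd cluster is distributed across three nodes

- etcd is an open-source project started by the CoreOS team in June 2013. It is written in Go and aims to provide a highly available distributed key-value database. Internally, etcd uses the Raft protocol as its consensus algorithm.

etcd is a decentralized cluster with the following characteristics:

1. Simple: easy to install and configure, with an HTTP API that is easy to use

2. Secure: supports SSL certificate authentication

3. Fast: according to the official benchmark figures, a single instance supports 2k+ reads per second

4. Reliable: uses the Raft algorithm to keep distributed data available and consistent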

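II: Kubernetes Core Concepts

2.1: Pod

- The smallest deployable unit

- A group of containers

- Containers in the same Pod share a network namespace

- Pods are ephemeral

2.2: Controllers

- ReplicaSet: ensures the expected number of Pod replicas

- Deployment: deploys stateless applications

- StatefulSet: deploys stateful applications

- DaemonSet: ensures every Node runs a copy of the same Pod

- Job: one-off tasks

- CronJob: scheduled tasks

- Controllers are higher-level objects that deploy and manage Pods

2.3: Service

- Keeps Pods reachable even when they are replaced and their IPs change

- Defines an access policy for a group of Pods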

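2.4: Kubernetes Platform Deployment Methods

- The three officially provided deployment methods:

  - minikube: a tool that quickly runs a single-node Kubernetes locally; it is meant only for users trying out Kubernetes or for day-to-day development

  - kubeadm: also a tool; it provides kubeadm init and kubeadm join for quickly deploying a Kubernetes cluster

  - Binary packages (recommended): download the release binaries from the official site and deploy each component by hand to form the Kubernetes cluster. Download address: https://github.com/kubernetes/kubernetes/releases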

III: Single-Node Deployment

- Environment

| Role | IP address | Components |
|---|---|---|
| master | 20.0.0.51 | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| node1 | 20.0.0.54 | kubelet, kube-proxy, docker, flannel, etcd |
| node2 | 20.0.0.56 | kubelet, kube-proxy, docker, flannel, etcd |

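- On each host (master, node1, node2), set the host name, flush the firewall rules and switch SELinux to permissive mode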

[root@localhost ~]# iptables -F

[root@localhost ~]# setenforce 0

[root@localhost ~]# hostnamectl set-hostname master

[root@localhost ~]# su

[root@master ~]#

[root@localhost ~]# iptables -F

[root@localhost ~]# setenforce 0

[root@localhost ~]# hostnamectl set-hostname node1

[root@localhost ~]# su

[root@node1 ~]#

[root@localhost ~]# iptables -F

[root@localhost ~]# setenforce 0

[root@localhost ~]# hostnamectl set-hostname node2

[root@localhost ~]# su

[root@node2 ~]#

3.1: Deploying etcd

- On the master, create a k8s directory, upload the etcd scripts, and download the official cfssl certificate tools

[root@master ~]# mkdir k8s

[root@master ~]# cd k8s

[root@master k8s]# rz -E    # upload the etcd scripts

rz waiting to receive.

[root@master k8s]# ls

etcd-cert.sh etcd.sh

[root@master k8s]# mkdir etcd-cert

[root@master k8s]# mv etcd-cert.sh etcd-cert

'Write a script that downloads the certificate tools'

[root@master k8s]# vim cfssl.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

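'The script above downloads the official cfssl packages'

[root@master k8s]# bash cfssl.sh    # run the download script

[root@master k8s]# ls /usr/local/bin/

cfssl cfssl-certinfo cfssljson

'cfssl: the certificate generation tool; cfssljson: writes the JSON output of cfssl out as certificate files; cfssl-certinfo: shows certificate information'

- Create the certificates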

1. Define the CA signing configuration ("expiry": "87600h" is the validity period, i.e. 10 years)

[root@master k8s]# cd etcd-cert/

[root@master etcd-cert]# ls

etcd-cert.sh

[root@master etcd-cert]# cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

2. Define the CA certificate signing request (ca-csr.json)

[root@master etcd-cert]# cat > ca-csr.json <<EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

3. Generate the CA certificate, producing ca-key.pem and ca.pem

[root@master etcd-cert]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

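4. Define the etcd server certificate request used to authenticate communication among the three etcd members (their IPs go in hosts)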

[root@master etcd-cert]# cat > server-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"20.0.0.51",

"20.0.0.54",

"20.0.0.56"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

5. Generate the etcd certificate: server-key.pem and server.pem

[root@master etcd-cert]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

[root@master etcd-cert]# ls

ca-config.json ca-csr.json ca.pem server.csr server-key.pem

ca.csr ca-key.pem etcd-cert.sh server-csr.json server.pem

- Download and unpack the etcd binary package. Download address: https://github.com/etcd-io/etcd/releases

[root@master etcd-cert]# cd ..

[root@master k8s]# rz -E    # already downloaded, so just upload it, together with the kubernetes-server package

rz waiting to receive.

[root@master k8s]# ls

etcd-cert etcd.sh etcd-v3.3.10-linux-amd64.tar.gz

[root@master k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

[root@master k8s]# ls etcd-v3.3.10-linux-amd64

Documentation etcd etcdctl README-etcdctl.md README.md READMEv2-etcdctl.md

- Create the config, binary and certificate directories, and move the files into them

[root@master k8s]# mkdir /opt/etcd/{cfg,bin,ssl} -p

[root@master k8s]# ls /opt/etcd/

bin cfg ssl

[root@master k8s]# mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/    # move the binaries into the new bin directory

[root@master k8s]# ls /opt/etcd/bin/

etcd etcdctl

[root@master k8s]# cp etcd-cert/*.pem /opt/etcd/ssl    # copy the certificates into the new ssl directory

[root@master k8s]# ls /opt/etcd/ssl

ca-key.pem ca.pem server-key.pem server.pem

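[root@master k8s]# vim etcd.sh    # view the script

...(content omitted)

# 2380: the peer port, used for communication between etcd members

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

# 2379: the client port each etcd member serves externally

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

...(content omitted)

- The master's etcd blocks, waiting for the other members to join

'On the master, run the script with the local member name and address plus the other members. It then blocks, waiting about 2 minutes for the other members to join'

[root@master k8s]# ls /opt/etcd/cfg/    # the directory is still empty at this point

[root@master k8s]# bash etcd.sh etcd01 20.0.0.51 etcd02=https://20.0.0.54:2380,etcd03=https://20.0.0.56:2380    # blocks while waiting for the other members

Created symlink from /etc/systemd/system/multi-user.target.wants/etcd.service to /usr/lib/systemd/system/etcd.service.

[root@master k8s]# ls /opt/etcd/cfg/    # from a new terminal: the config file has now been generated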

etcd

- Copy /opt/etcd (binaries, config and certificates) and the etcd.service unit to the two worker nodes

[root@master ~]# scp -r /opt/etcd/ root@20.0.0.54:/opt

[root@master ~]# scp -r /opt/etcd/ root@20.0.0.56:/opt

[root@master ~]# scp /usr/lib/systemd/system/etcd.service root@20.0.0.54:/usr/lib/systemd/system/

[root@master ~]# scp /usr/lib/systemd/system/etcd.service root@20.0.0.56:/usr/lib/systemd/system/

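- On node1 and node2, edit the etcd config file, changing the member name and IP addresses

[root@node1 ~]# vim /opt/etcd/cfg/etcd

#[Member]

# change to etcd02 (etcd03 on node2)

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

# this line and the three URL lines below must use this node's own IP address

ETCD_LISTEN_PEER_URLS="https://20.0.0.54:2380"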

ETCD_LISTEN_CLIENT_URLS="https://20.0.0.54:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://20.0.0.54:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://20.0.0.54:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://20.0.0.51:2380,etcd02=https://20.0.0.54:2380,etcd03=https://20.0.0.56:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

'Make the same changes on node2 (ETCD_NAME="etcd03" and node2's own IP, 20.0.0.56)'
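Editing the same lines by hand on each member is exactly where slips such as a misspelled member name in ETCD_INITIAL_CLUSTER creep in. A minimal sketch of an alternative, assuming the config layout shown above: print the whole member file from a name and an IP, using one shared cluster string. The helper name `gen_etcd_cfg` is hypothetical and not part of the author's etcd.sh.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of etcd.sh): print an etcd member config
# in the same format as the /opt/etcd/cfg/etcd file shown above.
# Usage: gen_etcd_cfg NAME IP CLUSTER
gen_etcd_cfg() {
  local name="$1" ip="$2" cluster="$3"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# One cluster string shared by all three members
CLUSTER="etcd01=https://20.0.0.51:2380,etcd02=https://20.0.0.54:2380,etcd03=https://20.0.0.56:2380"

# Preview node1's file; on node1 you would redirect this to /opt/etcd/cfg/etcd
gen_etcd_cfg etcd02 20.0.0.54 "$CLUSTER"
```

On node2 the call would be `gen_etcd_cfg etcd03 20.0.0.56 "$CLUSTER" > /opt/etcd/cfg/etcd`. Reusing one CLUSTER string keeps ETCD_INITIAL_CLUSTER identical on every member, which is what a new etcd cluster expects when it bootstraps.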

'First start the cluster script on the master (the blocking state described above), then start etcd on the two nodes'

[root@master k8s]# bash etcd.sh etcd01 20.0.0.51 etcd02=https://20.0.0.54:2380,etcd03=https://20.0.0.56:2380

[root@node1 ~]# systemctl start etcd

[root@node1 ~]# systemctl status etcd

- Check the cluster health. Note that the certificate paths are relative, so run this from /opt/etcd/ssl

[root@master k8s]# cd /opt/etcd/ssl/

[root@master ssl]# ls

ca-key.pem ca.pem server-key.pem server.pem

[root@master ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379" cluster-health

member fd96add358673de is healthy: got healthy result from https://20.0.0.54:2379

member 577f945f818a49d5 is healthy: got healthy result from https://20.0.0.51:2379

member ea62b791f6930412 is healthy: got healthy result from https://20.0.0.56:2379

cluster is healthy    # the cluster is healthy, no problems
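Because the etcdctl commands in this article use relative certificate paths and a long endpoint list, they only work from /opt/etcd/ssl and are easy to mistype. A small sketch of a shell function that fixes the paths and endpoints once; `etcdctl_tls` and the ETCDCTL, ETCD_SSL and ETCD_ENDPOINTS variables are my own names, while the --ca-file/--cert-file/--key-file flags are the etcdctl v2 API flags already used above.

```shell
#!/usr/bin/env bash
# Sketch: wrap the etcdctl (v2 API) TLS flags used in this article.
# Defaults match this article's paths; each variable can be overridden.
ETCDCTL="${ETCDCTL:-/opt/etcd/bin/etcdctl}"
ETCD_SSL="${ETCD_SSL:-/opt/etcd/ssl}"
ETCD_ENDPOINTS="${ETCD_ENDPOINTS:-https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379}"

etcdctl_tls() {
  # Absolute certificate paths, so this works from any directory
  "$ETCDCTL" \
    --ca-file="$ETCD_SSL/ca.pem" \
    --cert-file="$ETCD_SSL/server.pem" \
    --key-file="$ETCD_SSL/server-key.pem" \
    --endpoints="$ETCD_ENDPOINTS" \
    "$@"
}

# Example on the master:
#   etcdctl_tls cluster-health
```

With it, the flannel network setting in section 3.4 could be written as `etcdctl_tls set /coreos.com/network/config '...'` from any directory.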

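- The etcd cluster is now set up

3.2: Deploying Docker on the Nodes

- Install Docker on both nodes. I won't repeat the steps here; if anything is unclear, see my earlier blog post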

3.3:flannel容器集群网络部署理论

3.3.1:flannel理论

-

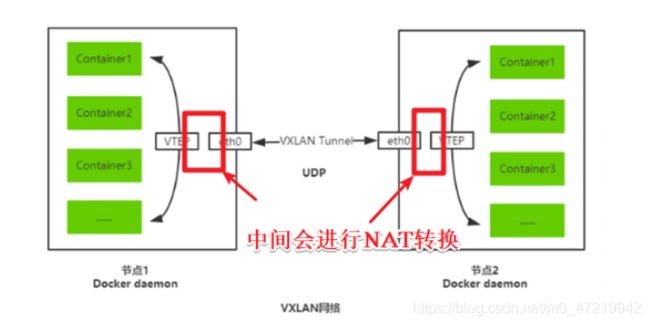

Overlay Network:覆盖网络,在基础网络上叠加的一种虚拟化网络技术模式,该网络中的主机通过虚拟链路连接起来

-

VXLAN:将源数据包封装到UDP中,并使用基础网络的IP/MAC作为外层报文头进行封装,然后在以太网上进行传输,到达目的地后由隧道端点解封装并将数据发送给目标地址

-

Flannel:是Overlay网络的一种,也是将源数据包封装在另一种网络包里面进行路由转发和通信,目前已经支持UDP、VXLAN、AWS VPC和GCE路由等数据转发方式

-

**VXLAN隧道端点(VTEP):**VTEP(VXLAN Tunnel Endpoint )负责VXLAN报文的封装与解封装。每个VTEP具备两个接口:一个是本地桥接接口,负责原始以太帧接收和发送,另一个是IP接口,负责VXLAN数据帧接收和发送。VTEP可以是物理交换机或软件交换机。

-

基于VXLAN的Overlay网络将帧封装为VXLAN数据包后,将传输帧。这些网络中的封装和解封装由称为虚拟隧道端点(VTEP)的实体完成。VTEPS可以作为虚拟机管理程序服务器中的虚拟网桥,特定于VXLAN的虚拟应用程序或能够处理VXLAN的交换硬件在您的overlay网络中实施。

-

Flannel是CoreOS团队针对 Kubernetes设计的一个网络规划服务,简单来说,它的功能是让集群中的不同节点主机创建的 Docker容器都具有全集群唯一的虚拟IP地址。而且它还能在这些IP地址之间建立一个覆盖网络(overlay Network),通过这个覆盖网络,将数据包原封不动地传递到目标容器内

-

ETCD在这里的作用:为Flannel提供说明

- 存储管理 Flannel可分配的IP地址段资源

- 监控ETCD中每个Pod的实际地址,并在内存中建立维护Pod节点路由表

-

Fannel容器集群网络部署结构图

总结:内层套一个fannel虚拟地址,外层套一个真实的物理的地址,实现不同节点的通信
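The encapsulation described above costs bytes on every packet, which is why the subnet.env that flannel generates later in this article contains --mtu=1450. A worked sketch of the arithmetic, assuming a 1500-byte MTU on the physical network, IPv4 outer headers without options and untagged Ethernet frames:

```shell
#!/usr/bin/env bash
# Sketch: why flannel's VXLAN backend gives Docker an MTU of 1450
# on a 1500-byte physical network (assumptions: IPv4, no IP options, no VLAN tag).
# Headers wrapped around the original (inner) frame by VXLAN:
outer_ipv4=20   # outer IPv4 header
outer_udp=8     # outer UDP header
vxlan_hdr=8     # VXLAN header
inner_eth=14    # inner Ethernet header carried inside the tunnel
overhead=$((outer_ipv4 + outer_udp + vxlan_hdr + inner_eth))

physical_mtu=1500
container_mtu=$((physical_mtu - overhead))
echo "overhead=${overhead} container_mtu=${container_mtu}"
```

Running it prints `overhead=50 container_mtu=1450`. Keeping the container MTU 50 bytes below the physical MTU means an encapsulated packet still fits in one physical frame instead of being fragmented or dropped.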

3.4: Flannel Network Configuration

- On the master, write the subnet range to be allocated into etcd for flannel to use

[root@master ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{"Network": "172.17.0.0/16","Backend":{"Type":"vxlan"}}

[root@master ssl]# /opt/etcd/bin/etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379" get /coreos.com/network/config    # read back what was written

{"Network": "172.17.0.0/16","Backend":{"Type":"vxlan"}}
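The Network value above is split into one subnet per node (node1 later gets 172.17.54.0/24 and node2 172.17.88.0/24). A quick sketch of the resulting capacity, assuming flannel's default per-node SubnetLen of 24, since the config written above does not set SubnetLen:

```shell
#!/usr/bin/env bash
# Sketch: capacity of the flannel Network written to etcd above.
# Assumption: default per-node SubnetLen of 24 (not set in the config above).
network_prefix=16   # "Network": "172.17.0.0/16"
subnet_len=24       # one /24 per node, e.g. 172.17.54.0/24 on node1

subnets=$(( 1 << (subnet_len - network_prefix) ))
# Usable addresses per node subnet: all addresses minus network and broadcast.
# docker0 itself takes .1 (see --bip=172.17.54.1/24 below).
hosts_per_subnet=$(( (1 << (32 - subnet_len)) - 2 ))

echo "node subnets: ${subnets}, usable addresses per node: ${hosts_per_subnet}"
```

It prints `node subnets: 256, usable addresses per node: 254`, so this layout has room for up to 256 nodes, each with a few hundred containers at most.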

- Copy the flannel package to every node (flannel only needs to be deployed on the nodes)

[root@master ssl]# cd /root/k8s/

[root@master k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@20.0.0.54:/root

[root@master k8s]# scp flannel-v0.10.0-linux-amd64.tar.gz root@20.0.0.56:/root

[root@node1 ~]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

flanneld

mk-docker-opts.sh

README.md

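'Any host that runs Pods needs the flannel network'

- On each node, create the k8s working directories and move flanneld and mk-docker-opts.sh into the bin directory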

[root@node1 ~]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@node1 ~]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

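- On both nodes, create the flannel.sh script: it writes the flanneld config file and the systemd unit. The etcd endpoints use port 2379, the client port etcd serves externally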

[root@node1 ~]# vim flannel.sh

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

- Run the script, passing the etcd endpoints, to start flannel

[root@node1 ~]# bash flannel.sh https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service

- Configure Docker to use the flannel network

[root@node1 ~]# vim /usr/lib/systemd/system/docker.service

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

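# added: load the network options generated by flannel

EnvironmentFile=/run/flannel/subnet.env

# changed: insert $DOCKER_NETWORK_OPTIONS right after /usr/bin/dockerd

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock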

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

- Check the subnet flannel assigned to Docker on each node

[root@node1 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.54.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.54.1/24 --ip-masq=false --mtu=1450"

[root@node2 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.88.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.88.1/24 --ip-masq=false --mtu=1450"

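The two lines added to docker.service work because subnet.env is a plain KEY="value" file: systemd loads it through EnvironmentFile= and expands $DOCKER_NETWORK_OPTIONS into the dockerd command line. A sketch that approximates this by sourcing the file in a subshell, to preview what dockerd will receive; `show_docker_opts` is a hypothetical helper, and sourcing only matches systemd's parsing for simple files like this one.

```shell
#!/usr/bin/env bash
# Sketch: preview the dockerd options that flannel's subnet.env provides.
# Hypothetical helper; sourcing runs the file as shell, so only use it on a trusted file.
show_docker_opts() {
  local env_file="${1:-/run/flannel/subnet.env}"
  (
    # shellcheck source=/dev/null
    . "$env_file" || exit 1
    # DOCKER_NETWORK_OPTIONS starts with a space, as in the file above
    echo "dockerd${DOCKER_NETWORK_OPTIONS}"
  )
}

if [[ -r /run/flannel/subnet.env ]]; then
  show_docker_opts
fi
```

On node1 this would print `dockerd --bip=172.17.54.1/24 --ip-masq=false --mtu=1450`, the same subnet that docker0 should show after Docker is restarted.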

- Restart Docker and check how the IP addresses changed

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl restart docker.service

[root@node1 ~]# ifconfig

'Each node should now show an address from its own flannel subnet'

- Ping the other node's docker0 interface to confirm that flannel is routing between the nodes

[root@node1 ~]# ping 172.17.88.1

PING 172.17.88.1 (172.17.88.1) 56(84) bytes of data.

64 bytes from 172.17.88.1: icmp_seq=1 ttl=64 time=0.326 ms

64 bytes from 172.17.88.1: icmp_seq=2 ttl=64 time=0.521 ms

- Start a container on each node to test whether containers on the two nodes can reach each other

docker run -it centos:7 /bin/bash

yum install net-tools -y

ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.54.2 netmask 255.255.255.0 broadcast 172.17.54.255

ether 02:42:ac:11:36:02 txqueuelen 0 (Ethernet)

RX packets 16340 bytes 12480605 (11.9 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 8267 bytes 449807 (439.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

'Now ping the centos:7 container on the other node'

[root@98be51e32899 /]# ping 172.17.88.2

PING 172.17.88.2 (172.17.88.2) 56(84) bytes of data.

64 bytes from 172.17.88.2: icmp_seq=1 ttl=62 time=0.686 ms

3.5: Master Components: Theory

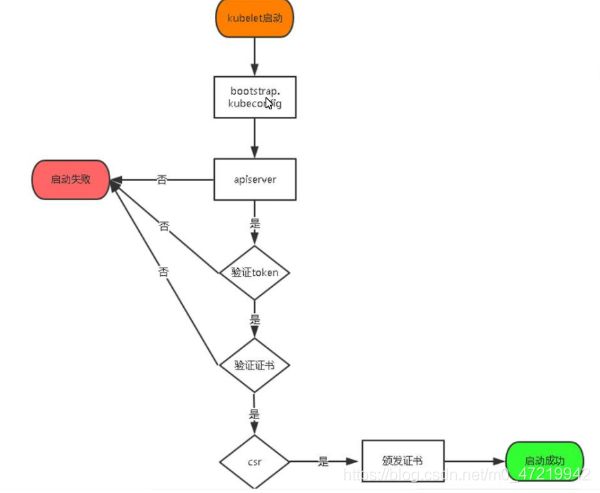

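- The figure above shows the kubelet startup (TLS bootstrapping) flow on a node. Following it, on the master we bind the kubelet-bootstrap user to a cluster role and deploy the certificates and authentication that let the master detect the node and accept its connection.

3.6: Deploying the Master Components

- On the master: generate the certificates for the api-server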

[root@master k8s]# unzip master.zip

[root@master k8s]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@master k8s]# mkdir k8s-cert

[root@master k8s]# cd k8s-cert/

[root@master k8s-cert]# ls

k8s-cert.sh

[root@master k8s-cert]# vim k8s-cert.sh

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

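# Notes on the hosts list below (JSON itself does not allow comments):

# 20.0.0.51 = master1, 20.0.0.52 = master2, 20.0.0.100 = VIP,

# 20.0.0.55 = load balancer (master), 20.0.0.57 = load balancer (backup)

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"20.0.0.51",

"20.0.0.52",

"20.0.0.100",

"20.0.0.55",

"20.0.0.57",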

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

'Why are the node IPs not listed in hosts? Listing them would make adding or removing nodes later very cumbersome'

- Generate the k8s certificates

[root@master k8s-cert]# bash k8s-cert.sh    # generate the certificates

[root@master k8s-cert]# ls *.pem

admin-key.pem ca-key.pem kube-proxy-key.pem server-key.pem

admin.pem ca.pem kube-proxy.pem server.pem

[root@master k8s-cert]# cp ca*.pem server*.pem /opt/kubernetes/ssl/    # copy the certificates to the working directory

[root@master k8s-cert]# ls /opt/kubernetes/ssl/

ca-key.pem ca.pem server-key.pem server.pem

- Unpack the Kubernetes server package

[root@master k8s-cert]# cd ..

[root@master k8s]# ls

cfssl.sh etcd-v3.3.10-linux-amd64 k8s-cert

etcd-cert etcd-v3.3.10-linux-amd64.tar.gz kubernetes-server-linux-amd64.tar.gz

etcd.sh flannel-v0.10.0-linux-amd64.tar.gz master.zip

[root@master k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz

- Copy the key server-side binaries into the k8s working directory

[root@master k8s]# cd /root/k8s/kubernetes/server/bin

[root@master bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

[root@master bin]# ls /opt/kubernetes/bin/

kube-apiserver kube-controller-manager kubectl kube-scheduler

- Create the bootstrap token and bind it to the kubelet-bootstrap user

[root@master bin]# cd /root/k8s/

[root@master k8s]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '    # generate a random token

5859222b3e631f660b51c63f01416e2e

[root@master k8s]# vim /opt/kubernetes/cfg/token.csv

5859222b3e631f660b51c63f01416e2e,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

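'Fields: token, user name, user ID, group. kubelet-bootstrap is the user the node kubelets use to request their certificates from the master'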
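The two steps above (generate a random token, then paste it into token.csv) can be combined. A minimal sketch using the same head/od/tr pipeline, with `\n` added to the tr set so the token ends up on one line; `make_token_line` is a hypothetical helper name, and the field layout is copied from the token.csv above.

```shell
#!/usr/bin/env bash
# Sketch: generate a bootstrap token and the matching token.csv line in one step.
# Layout: token,user,uid,"group" (same as the token.csv above).
make_token_line() {
  local token
  # 16 random bytes -> 32 lowercase hex characters, spaces and newline removed
  token="$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')"
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}

# On the master you could write it directly:
#   make_token_line > /opt/kubernetes/cfg/token.csv
make_token_line
```

Whatever token ends up in token.csv is the one that must later go into the --token= line of the kubeconfig script.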

- With the binaries, token and certificates ready, start the apiserver (it stores its data in the etcd cluster) and check the kube processes

[root@master k8s]# bash apiserver.sh 20.0.0.51 https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

[root@master k8s]# ps aux | grep kube

root 20687 17.2 8.1 400404 313108 ? Ssl 18:21 0:10 /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379 --bind-address=20.0.0.51 --secure-port=6443 --advertise-address=20.0.0.51 --allow-privileged=true --service-cluster-ip-range=10.0.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=RBAC,Node --kubelet-https=true --enable-bootstrap-token-auth --token-auth-file=/opt/kubernetes/cfg/token.csv --service-node-port-range=30000-50000 --tls-cert-file=/opt/kubernetes/ssl/server.pem --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem --client-ca-file=/opt/kubernetes/ssl/ca.pem --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem --etcd-cafile=/opt/etcd/ssl/ca.pem --etcd-certfile=/opt/etcd/ssl/server.pem --etcd-keyfile=/opt/etcd/ssl/server-key.pem

root 20722 0.0 0.0 112724 984 pts/0 S+ 18:22 0:00 grep --color=auto kube

[root@master k8s]# cat /opt/kubernetes/cfg/kube-apiserver    # --secure-port=6443 plays the role of 443: it is the HTTPS port

KUBE_APISERVER_OPTS="--logtostderr=true \

--v=4 \

--etcd-servers=https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379 \

--bind-address=20.0.0.51 \

--secure-port=6443 \

--advertise-address=20.0.0.51 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=30000-50000 \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

[root@master k8s]# netstat -ntap |grep 6443    # HTTPS (secure) port

tcp 0 0 20.0.0.51:6443 0.0.0.0:* LISTEN 20687/kube-apiserve

tcp 0 0 20.0.0.51:6443 20.0.0.51:57840 ESTABLISHED 20687/kube-apiserve

tcp 0 0 20.0.0.51:57840 20.0.0.51:6443 ESTABLISHED 20687/kube-apiserve

[root@master k8s]# netstat -ntap |grep 8080    # HTTP (insecure) port, listening on 127.0.0.1 only

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 20687/kube-apiserve
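Two addresses elsewhere in this setup depend on --service-cluster-ip-range=10.0.0.0/24 shown above: 10.0.0.1 in server-csr.json (the API server gives the first address of this range to the built-in `kubernetes` Service) and clusterDNS 10.0.0.2 in kubelet.config. A pure-bash sketch for checking that an address lies inside a CIDR block; `ip_to_int` and `ip_in_cidr` are hypothetical helpers, and the Pod address 172.17.54.2 is included as a negative example.

```shell
#!/usr/bin/env bash
# Sketch: IPv4 CIDR membership check in pure bash (64-bit arithmetic).

# Convert dotted-quad IPv4 to an integer; 10# forces base 10 for each octet
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

# Return 0 if IP ($1) is inside CIDR ($2, e.g. 10.0.0.0/24)
ip_in_cidr() {
  local ip="$1" net="${2%/*}" len="${2#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local ip_int net_int
  ip_int="$(ip_to_int "$ip")"
  net_int="$(ip_to_int "$net")"
  (( (ip_int & mask) == (net_int & mask) ))
}

for addr in 10.0.0.1 10.0.0.2 172.17.54.2; do
  if ip_in_cidr "$addr" 10.0.0.0/24; then
    echo "$addr is in 10.0.0.0/24"
  else
    echo "$addr is NOT in 10.0.0.0/24"
  fi
done
```

If you change the service range, the certificate's 10.0.0.1 entry and the kubelet's clusterDNS value have to move with it; the Pod network (172.17.0.0/16 from flannel) is deliberately a separate range.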

- Start the scheduler service

[root@localhost k8s]# ./scheduler.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

[root@localhost k8s]# ps aux | grep ku

- Start controller-manager

[root@localhost k8s]# chmod +x controller-manager.sh

[root@localhost k8s]# ./controller-manager.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/sys

- Check the status of the master components

[root@localhost k8s]# /opt/kubernetes/bin/kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

3.7: Deploying node01

- On the master, copy kubelet and kube-proxy to the nodes

[root@master k8s]# cd kubernetes/server/bin/

[root@master bin]# scp kubelet kube-proxy root@20.0.0.54:/opt/kubernetes/bin/

root@20.0.0.54's password:

kubelet 100% 168MB 84.2MB/s 00:02

kube-proxy 100% 48MB 121.5MB/s 00:00

[root@master bin]# scp kubelet kube-proxy root@20.0.0.56:/opt/kubernetes/bin/

- On each node, unpack node.zip (copy node.zip to /root first, then unpack it)

[root@node1 ~]# rz -E

rz waiting to receive.

[root@node1 ~]# ls

anaconda-ks.cfg node.zip 视频 音乐

flannel.sh README.md 图片 桌面

flannel-v0.10.0-linux-amd64.tar.gz 公共 文档

initial-setup-ks.cfg 模板 下载

[root@node1 ~]# unzip node.zip

Archive: node.zip

inflating: proxy.sh

inflating: kubelet.sh

- On the master, create a kubeconfig directory

[root@master bin]# mkdir kubeconfig

[root@master bin]# cd kubeconfig/

[root@master kubeconfig]# rz -E

rz waiting to receive.

[root@master kubeconfig]# mv kubeconfig.sh kubeconfig

[root@master kubeconfig]# vim kubeconfig

----------------Delete the following section----------------

# Create the TLS Bootstrapping Token

#BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

BOOTSTRAP_TOKEN=0fb61c46f8991b718eb38d27b605b008

cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

[root@master kubeconfig]# cat /opt/kubernetes/cfg/token.csv

c3a5b4de7ee91da8049a84223c713f4b,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

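[root@master kubeconfig]# vim kubeconfig    # paste the token from token.csv above into the --token= line

'Change the token in the script to the token ID:'

# Set the client authentication parameters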

kubectl config set-credentials kubelet-bootstrap \

--token=c3a5b4de7ee91da8049a84223c713f4b \

--kubeconfig=bootstrap.kubeconfig
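Copying the token by hand is easy to get wrong, and a mismatch between token.csv and the --token= line makes the node's bootstrap request fail authentication. A sketch that does the copy with cut and sed, assuming the one-line token.csv and the `--token=... \` line shown above; `sync_bootstrap_token` is a hypothetical helper, and `sed -i` is the GNU sed in-place option available on CentOS.

```shell
#!/usr/bin/env bash
# Sketch: copy the token from token.csv into the --token= line of the kubeconfig script.
# Usage (on the master, inside the kubeconfig directory): sync_bootstrap_token
sync_bootstrap_token() {
  local csv="${1:-/opt/kubernetes/cfg/token.csv}" script="${2:-kubeconfig}"
  local token
  # First field of the first line is the token
  token="$(head -n 1 "$csv" | cut -d, -f1)"
  if [[ ! "$token" =~ ^[0-9a-f]+$ ]]; then
    echo "no token found in $csv" >&2
    return 1
  fi
  # Replace whatever token is currently on the --token= line (GNU sed -i)
  sed -i "s/--token=[0-9a-zA-Z]*/--token=${token}/" "$script"
}
```

After running it, `grep -- --token= kubeconfig` should show the same value as the first field of token.csv.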

[root@master kubeconfig]# vim /etc/profile    # add the Kubernetes binaries to PATH

export PATH=$PATH:/opt/kubernetes/bin/

[root@master kubeconfig]# source /etc/profile

[root@master kubeconfig]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

- Generate the kubeconfig files and copy them to the nodes

[root@master kubeconfig]# bash kubeconfig 20.0.0.51 /root/k8s/k8s-cert/

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" created.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" created.

Switched to context "default".

[root@master kubeconfig]# ls

bootstrap.kubeconfig kubeconfig kube-proxy.kubeconfig

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@20.0.0.54:/opt/kubernetes/cfg/

root@20.0.0.54's password:

bootstrap.kubeconfig 100% 2163 1.3MB/s 00:00

kube-proxy.kubeconfig 100% 6269 3.4MB/s 00:00

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@20.0.0.56:/opt/kubernetes/cfg/

root@20.0.0.56's password:

bootstrap.kubeconfig 100% 2163 2.0MB/s 00:00

kube-proxy.kubeconfig 100% 6269 7.1MB/s 00:00

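- Bind the kubelet-bootstrap user to the system:node-bootstrapper role so it can connect to the apiserver and request certificate signing (key step)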

[root@master kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

- On node01, run kubelet.sh, which generates the kubelet and kubelet.config files and starts kubelet

[root@node1 ~]# bash kubelet.sh 20.0.0.54

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@node1 ~]# ps aux |grep kube

root 21417 0.1 0.5 334104 20792 ? Ssl 17:30 0:04 /opt/kubernetes/bin/flanneld --ip-masq --etcd-endpoints=https://20.0.0.51:2379,https://20.0.0.54:2379,https://20.0.0.56:2379 -etcd-cafile=/opt/etcd/ssl/ca.pem -etcd-certfile=/opt/etcd/ssl/server.pem -etcd-keyfile=/opt/etcd/ssl/server-key.pem

root 28408 6.2 1.1 414592 45696 ? Ssl 18:50 0:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=20.0.0.54 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

root 28437 0.0 0.0 112724 988 pts/0 S+ 18:51 0:00 grep --color=auto kube

- On the master, the request from node01 shows up; check the certificate status

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

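node-csr-VsTi52ACNqwGisF5WLAjCYrZfF0DlnW7_kmy8pVLpwo 35s kubelet-bootstrap Pending    # Pending: waiting for the cluster to issue this node a certificate

- On the master, approve (issue) the certificate, then check the status again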

[root@master kubeconfig]# kubectl certificate approve node-csr-VsTi52ACNqwGisF5WLAjCYrZfF0DlnW7_kmy8pVLpwo

certificatesigningrequest.certificates.k8s.io/node-csr-VsTi52ACNqwGisF5WLAjCYrZfF0DlnW7_kmy8pVLpwo approved

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-VsTi52ACNqwGisF5WLAjCYrZfF0DlnW7_kmy8pVLpwo 2m51s kubelet-bootstrap Approved,Issued    # the node has been allowed to join the cluster
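With several nodes joining, approving each CSR by name gets tedious. A sketch that extracts the names of Pending requests from `kubectl get csr` output, assuming the NAME AGE REQUESTOR CONDITION layout shown above (newer kubectl versions print extra columns, but CONDITION stays last); `pending_csrs` is a hypothetical helper. Approving blindly lets anyone holding the bootstrap token add a node, so review the list before approving.

```shell
#!/usr/bin/env bash
# Sketch: print the names of Pending CSRs from `kubectl get csr` output on stdin.
# Assumes a header row and CONDITION as the last column.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on the master:
#   kubectl get csr | pending_csrs                  # review first
#   kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve
```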

- Check the cluster nodes

[root@master kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

20.0.0.54 Ready <none> 64s v1.12.3

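'If a single node is NotReady, check its kubelet; if many nodes are NotReady, check the apiserver; if that looks fine, check the VIP address and keepalived (in a multi-master setup)'

- On node01, start the proxy service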

[root@node1 ~]# bash proxy.sh 20.0.0.54

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@node1 ~]# systemctl status kube-proxy.service

● kube-proxy.service - Kubernetes Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since 二 2020-09-29 18:55:55 CST; 16s ago

Main PID: 29635 (kube-proxy)

Tasks: 0

Memory: 7.4M

CGroup: /system.slice/kube-proxy.service

‣ 29635 /opt/kubernetes/bin/kube-proxy --logtostderr=true --v...

9月 29 18:56:02 node1 kube-proxy[29635]: I0929 18:56:02.196358 29635...e

9月 29 18:56:03 node1 kube-proxy[29635]: I0929 18:56:03.610597 29635...e

9月 29 18:56:04 node1 kube-proxy[29635]: I0929 18:56:04.203166 29635...e

9月 29 18:56:05 node1 kube-proxy[29635]: I0929 18:56:05.618508 29635...e

9月 29 18:56:06 node1 kube-proxy[29635]: I0929 18:56:06.211270 29635...e

9月 29 18:56:07 node1 kube-proxy[29635]: I0929 18:56:07.626712 29635...e

9月 29 18:56:08 node1 kube-proxy[29635]: I0929 18:56:08.217611 29635...e

9月 29 18:56:09 node1 kube-proxy[29635]: I0929 18:56:09.634554 29635...e

9月 29 18:56:10 node1 kube-proxy[29635]: I0929 18:56:10.226145 29635...e

9月 29 18:56:11 node1 kube-proxy[29635]: I0929 18:56:11.641058 29635...e

Hint: Some lines were ellipsized, use -l to show in full.

3.8: Deploying node02

- Copy the /opt/kubernetes directory created on node01 to the other node, then edit it

[root@node1 ~]# scp -r /opt/kubernetes/ root@20.0.0.56:/opt/

The authenticity of host '20.0.0.56 (20.0.0.56)' can't be established.

ECDSA key fingerprint is SHA256:O3USJ+o5D4lvJCMq3+P0XqYRhwQgbzx5T29AhpmJDrY.

ECDSA key fingerprint is MD5:84:2a:4e:22:1d:37:7f:ee:d5:f6:00:db:14:56:87:99.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '20.0.0.56' (ECDSA) to the list of known hosts.

root@20.0.0.56's password:

flanneld 100% 223 390.8KB/s 00:00

bootstrap.kubeconfig 100% 2163 1.5MB/s 00:00

kube-proxy.kubeconfig 100% 6269 6.9MB/s 00:00

kubelet 100% 373 200.6KB/s 00:00

kubelet.config 100% 263 213.6KB/s 00:00

kubelet.kubeconfig 100% 2292 2.0MB/s 00:00

kube-proxy 100% 185 194.6KB/s 00:00

mk-docker-opts.sh 100% 2139 2.0MB/s 00:00

scp: /opt//kubernetes/bin/flanneld: Text file busy

kubelet 100% 168MB 127.9MB/s 00:01

kube-proxy 100% 48MB 135.5MB/s 00:00

kubelet.crt 100% 2165 2.9MB/s 00:00

kubelet.key 100% 1679 2.2MB/s 00:00

kubelet-client-2020-09-29-18-53-39.pem 100% 1269 541.6KB/s 00:00

kubelet-client-current.pem 100% 1269 423.5KB/s 00:00

'Copy the kubelet and kube-proxy service files to node2'

[root@node1 ~]# scp /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@20.0.0.56:/usr/lib/systemd/system

root@20.0.0.56's password:

kubelet.service 100% 264 142.8KB/s 00:00

kube-proxy.service 100% 264 292.4KB/s 00:00

- On node02: first delete the copied certificates; node02 will request its own certificate shortly

[root@node2 ~]# cd /opt/kubernetes/ssl/

[root@node2 ssl]# rm -rf *

- Edit the three config files kubelet, kubelet.config and kube-proxy (mostly just the three IP addresses)

[root@node2 ssl]# cd ../cfg/

[root@node2 cfg]# vim kubelet    # change the address to this node's IP; do the same in the files below

KUBELET_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=20.0.0.56 \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--config=/opt/kubernetes/cfg/kubelet.config \

--cert-dir=/opt/kubernetes/ssl \

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

[root@node2 cfg]# vim kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 20.0.0.56

port: 10250

readOnlyPort: 10255

cgroupDriver: cgroupfs

clusterDNS:

  - 10.0.0.2

clusterDomain: cluster.local.

failSwapOn: false

authentication:

  anonymous:

    enabled: true

[root@node2 cfg]# vim kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \

--v=4 \

--hostname-override=20.0.0.56 \

--cluster-cidr=10.0.0.0/24 \

--proxy-mode=ipvs \

--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
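Because all three files came from node1, the edits above amount to replacing node1's address with node2's. A sketch that does this in one pass, assuming the copied files contain 20.0.0.54 only where node1's own address belongs (check with grep first); `replace_node_ip` is a hypothetical helper, and `sed -i` and `\b` are GNU sed features available on CentOS.

```shell
#!/usr/bin/env bash
# Sketch: rewrite one node IP to another in the given files (GNU sed).
# Usage: replace_node_ip OLD_IP NEW_IP FILE...
replace_node_ip() {
  local old="$1" new="$2"
  shift 2
  local old_re
  # Escape the dots so they match literally in the regex
  old_re="$(printf '%s' "$old" | sed 's/\./\\./g')"
  # \b word boundaries avoid touching longer addresses such as 20.0.0.540
  sed -i "s/\\b${old_re}\\b/${new}/g" "$@"
}

# On node2:
#   cd /opt/kubernetes/cfg
#   grep -n 20.0.0.54 kubelet kubelet.config kube-proxy    # review the hits first
#   replace_node_ip 20.0.0.54 20.0.0.56 kubelet kubelet.config kube-proxy
```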

- Start the services

[root@node2 cfg]# systemctl start kubelet.service

[root@node2 cfg]# systemctl enable kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

[root@node2 cfg]# systemctl start kube-proxy.service

[root@node2 cfg]# systemctl enable kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

- On the master, view the request and approve it so the node can join the cluster

[root@master kubeconfig]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-O8etApnHzGD9de7ge18cJ4sAF7967GAoK3GYJo-Zepo 67s kubelet-bootstrap Pending

node-csr-VsTi52ACNqwGisF5WLAjCYrZfF0DlnW7_kmy8pVLpwo 20m kubelet-bootstrap Approved,Issued

[root@master kubeconfig]# kubectl certificate approve node-csr-O8etApnHzGD9de7ge18cJ4sAF7967GAoK3GYJo-Zepo

certificatesigningrequest.certificates.k8s.io/node-csr-O8etApnHzGD9de7ge18cJ4sAF7967GAoK3GYJo-Zepo approved

- View the nodes in the cluster: node02 has joined successfully

[root@master kubeconfig]# kubectl get node

NAME STATUS ROLES AGE VERSION

20.0.0.54 Ready <none> 19m v1.12.3

20.0.0.56 Ready <none> 14s v1.12.3