基于Flink1.8的Flink On Yarn的启动流程

基于Flink1.8版本,分析 On Yarn模式的任务提交过程:

- 明确提交模式:Job模式和Session模式

- 总览yarn提交流程(基于1.8)

- 分析启动命令,确定Main方法入口

- 结合关键类分析启动过程

- 总结Yarn启动流程 -

Yarn提交模式

- Job模式(小Session模式)

- Session模式

Job模式

每个Flink Job单独在yarn上声明一个Flink集群,即提交一次,生成一个Yarn-Session。

./bin/flink run -m yarn-cluster -yn 2 -yjm 1024 -ytm 1024 ./examples/batch/WordCount.jar ...

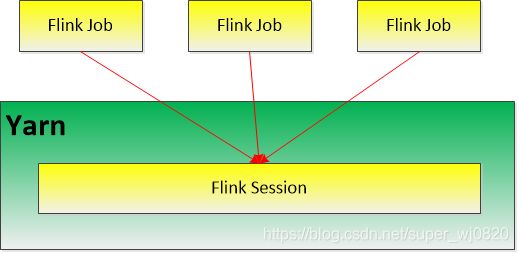

Session模式

常驻Session,yarn集群中维护Flink Master,即一个yarn application master,运行多个job。

启动任务之前需要先启动一个一直运行的Flink集群:

1启动一个一直运行的flink集群

./bin/yarn-session.sh -n 2 -jm 1024 -tm 1024 -d

2 附着到一个已存在的flink yarn session

./bin/yarn-session.sh -id application_1463870264508_0029

总览yarn提交流程(基于1.8)

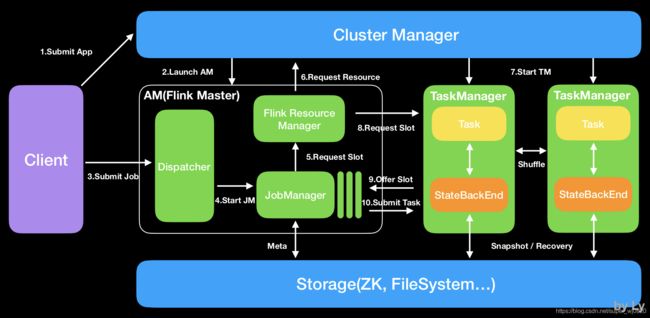

Flink架构

-

Dispatcher(Application Master)提供REST接口来接收client的application提交,它负责启动JM和提交application,同时运行Web UI。

-

ResourceManager:一般是Yarn,当TM有空闲的slot就会告诉JM,没有足够的slot也会启动新的TM。kill掉长时间空闲的TM。

-

JobManager :接受application,包含StreamGraph(DAG)、JobGraph(logical dataflow graph,已经进过优化,如task chain)和JAR,将JobGraph转化为ExecutionGraph(physical dataflow graph,并行化),包含可以并发执行的tasks。其他工作类似Spark driver,如向RM申请资源、schedule tasks、保存作业的元数据,如checkpoints。如今JM可分为JobMaster和ResourceManager(和下面的不同),分别负责任务和资源,在Session模式下启动多个job就会有多个JobMaster。

-

TaskManager:类似Spark的executor,会跑多个线程的task、数据缓存与交换。

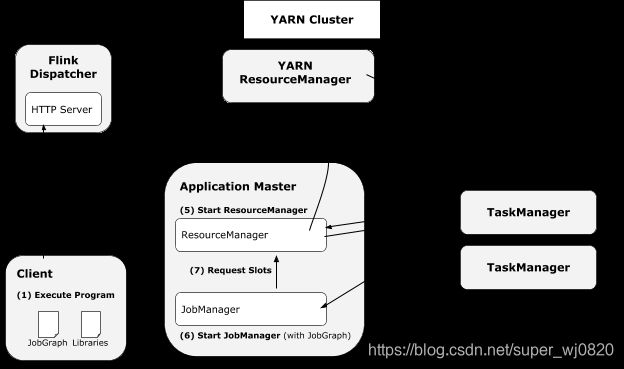

Flink On Yarn

Flink1.7之后,新增了Dispatcher,在on yarn流程上略有却别

Without dispatcher

- 当开始一个新的Flink yarn 会话时,客户端首先检查所请求的资源(containers和内存)是否可用。如果资源够用,之后,上传一个jar包,包含Flink和HDFS的配置。

- 客户端向yarn resource manager发送请求,申请一个yarn container去启动ApplicationMaster。

- yarn resource manager会在nodemanager上分配一个container,去启动ApplicationMaster

- yarn nodemanager会将配置文件和jar包下载到对应的container中,进行container容器的初始化。

- 初始化完成后,ApplicationMaster构建完成。ApplicationMaster会为TaskManagers生成新的Flink配置文件(使得TaskManagers根据配置文件去连接到JobManager),配置文件会上传到HDFS。

- ApplicationMaster开始为该Flink应用的TaskManagers分配containers,这个过程会从HDFS上下载jar和配置文件(此处的配置文件是AM修改过的,包含了JobManager的一些信息,比如说JobManager的地址)

- 一旦上面的步骤完成,Flink已经建立并准备好接受jobs。

With dispatcher

Dispatcher组件负责接收作业提交,持久化它们,生成JobManagers以执行作业并在Master故障时恢复它们。此外,它知道Flink会话群集的状态。

引入Dispatcher是因为:

- 某些集群管理器需要一个集中的作业生成和监视实例

- 它包含独立JobManager的角色,等待提交作业

分析启动命令,确定Main方法入口

项目中使用 Job模式 提交命令,所以此处以Job模式为例介绍,提交命令如下:

./bin/flink run -m yarn-cluster -yn 2 -yjm 1024 -ytm 1024 ./examples/batch/WordCount.jar ...

分析flink.sh脚本(位于flink-dist模块),发现脚本最后的入口类:org.apache.flink.client.cli.CliFrontend,此类中的Main方法是所有提交操作的开始,在客户端Client执行。

结合关键类分析启动过程

- CliFrontend[Main] :Client提交任务的入口,AM创建,提交程序

- ClusterEntrypoint[Main] : 与Yarn集群交互,启动集群的基本服务,如Dispatcher,ResourceManager和WebMonitorEndpoint等

- YarnTaskExecutorRunner[Main] :TaskExecutor(即TaskManager)上的Task执行Main入口

- JobSubmitHandler与Dispatcher :处理Client端任务提交,启动JobMaster,构建ExecutionGraph,并deploy所有Task任务

- ResourceManager :资源管理器,指明TaskExecutor入口类,启动TaskExecutor的Container

CliFrontend[Main]

通过flink.sh脚本,找到Flink On Yarn模式的入口,全路径:org.apache.flink.client.cli.CliFrontend,下面结合源码分析启动过程。

方法调用栈

CliFrontend[Main]

-> cli.parseParameters(args)

-> buildProgram(runOptions)

-> runProgram(customCommandLine, commandLine, runOptions, program)

(根据yarn提交模式,走不同分支,以Job小Session集群方式为例)

-> customCommandLine.createClusterDescriptor

-> clusterDescriptor.deploySessionCluster(clusterSpecification)

deployInternal -- block,直到ApplicationMaster/JobManager在YARN上部署完毕

startAppMaster

setupApplicationMasterContainer

startCommandValues.put("class", yarnClusterEntrypoint) -- 此处是 YarnJobClusterEntrypoint[Main]

-> executeProgram(program, client, userParallelism);

(执行程序就是优化得到JobGraph,远程提交的过程)

代码分析

1. runProgram方法

根据任务提交模式,会走不同的分支:

private void runProgram(

CustomCommandLine customCommandLine,

CommandLine commandLine,

RunOptions runOptions,

PackagedProgram program) throws ProgramInvocationException, FlinkException {

// 获取yarnClusterDescriptor,用户创建集群

final ClusterDescriptor clusterDescriptor = customCommandLine.createClusterDescriptor(commandLine);

try {

// 此处clusterId如果不为null,则表示是session模式

final T clusterId = customCommandLine.getClusterId(commandLine);

final ClusterClient client;

/*

* Yarn模式:

* 1. Job模式:每个flink job 单独在yarn上声明一个flink集群

* 2. Session模式:在集群中维护flink master,即一个yarn application master,运行多个job。

*/

if (clusterId == null && runOptions.getDetachedMode()) {

// job + DetachedMode模式

int parallelism = runOptions.getParallelism() == -1 ? defaultParallelism : runOptions.getParallelism();

// 从jar包中获取jobGraph

final JobGraph jobGraph = PackagedProgramUtils.createJobGraph(program, configuration, parallelism);

// clusterDescriptor.deployJobCluster

// -> YarnClusterDescriptor.deployInternal

// -> AbstractYarnClusterDescriptor.startAppMaster

// -> AbstractYarnClusterDescriptor.yarnClient.submitApplication(appContext);

// 新建一个RestClusterClient,在yarn集群中启动应用(ClusterEntrypoint)

final ClusterSpecification clusterSpecification = customCommandLine.getClusterSpecification(commandLine);

client = clusterDescriptor.deployJobCluster(

clusterSpecification,

jobGraph,

runOptions.getDetachedMode());

......

} else {

final Thread shutdownHook;

if (clusterId != null) {

// session模式

client = clusterDescriptor.retrieve(clusterId);

shutdownHook = null;

} else {

// job + non-DetachedMode模式

final ClusterSpecification clusterSpecification = customCommandLine.getClusterSpecification(commandLine);

// 新建一个小session集群,会启动ClusterEntrypoint,提供Dispatcher,ResourceManager和WebMonitorEndpoint等服务

client = clusterDescriptor.deploySessionCluster(clusterSpecification);

// 进行资源清理的钩子

if (!runOptions.getDetachedMode() && runOptions.isShutdownOnAttachedExit()) {

shutdownHook = ShutdownHookUtil.addShutdownHook(client::shutDownCluster, client.getClass().getSimpleName(), LOG);

} else {

shutdownHook = null;

}

}

try {

......

// 优化图,执行程序的远程提交

executeProgram(program, client, userParallelism);

} finally {

......

}

}

} finally {

......

}

}

2. clusterDescriptor.deploySessionCluster方法

新建小Session集群,部署、启动ApplicationMaster/JobManager:

clusterDescriptor.deploySessionCluster(clusterSpecification)

deployInternal -- block,直到ApplicationMaster/JobManager在YARN上部署完毕

startAppMaster

setupApplicationMasterContainer

startCommandValues.put("class", yarnClusterEntrypoint) -- 此处是 YarnJobClusterEntrypoint[Main]

deployInternal方法,部署集群:

protected ClusterClient deployInternal(

ClusterSpecification clusterSpecification,

String applicationName,

String yarnClusterEntrypoint,

@Nullable JobGraph jobGraph,

boolean detached) throws Exception {

// ------------------ Check if configuration is valid --------------------

......

// ------------------ Check if the specified queue exists --------------------

checkYarnQueues(yarnClient);

// ------------------ Add dynamic properties to local flinkConfiguraton ------

......

// ------------------ Check if the YARN ClusterClient has the requested resources --------------

// Create application via yarnClient

final YarnClientApplication yarnApplication = yarnClient.createApplication();

......

// ------------------启动ApplicationMaster ----------------

ApplicationReport report = startAppMaster(

flinkConfiguration,

applicationName,

yarnClusterEntrypoint,

jobGraph,

yarnClient,

yarnApplication,

validClusterSpecification);

......

// the Flink cluster is deployed in YARN. Represent cluster

return createYarnClusterClient(

this,

validClusterSpecification.getNumberTaskManagers(),

validClusterSpecification.getSlotsPerTaskManager(),

report,

flinkConfiguration,

true);

}

startAppMaster方法,启动ApplicationMaster:

public ApplicationReport startAppMaster(

Configuration configuration,

String applicationName,

String yarnClusterEntrypoint,

JobGraph jobGraph,

YarnClient yarnClient,

YarnClientApplication yarnApplication,

ClusterSpecification clusterSpecification) throws Exception {

// ------------------ Initialize the file systems -------------------------

......

// ------------- Set-up ApplicationSubmissionContext for the application -------------

ApplicationSubmissionContext appContext = yarnApplication.getApplicationSubmissionContext();

final ApplicationId appId = appContext.getApplicationId();

// ------------------ Add Zookeeper namespace to local flinkConfiguraton ------

......

// ------------------ 准备Yarn所需的资源和文件 ------

// Setup jar for ApplicationMaster

......

// 准备TaskManager的相关配置信息

configuration.setInteger(

TaskManagerOptions.NUM_TASK_SLOTS,

clusterSpecification.getSlotsPerTaskManager());

configuration.setString(

TaskManagerOptions.TASK_MANAGER_HEAP_MEMORY,

clusterSpecification.getTaskManagerMemoryMB() + "m");

// Upload the flink configuration, write out configuration file

......

// ------------------ 启动ApplicationMasterContainer ------

final ContainerLaunchContext amContainer = setupApplicationMasterContainer(

yarnClusterEntrypoint,

hasLogback,

hasLog4j,

hasKrb5,

clusterSpecification.getMasterMemoryMB());

// --------- set user specified app master environment variables ---------

......

// 提交App

yarnClient.submitApplication(appContext);

// --------- Waiting for the cluster to be allocated ---------

......

}

setupApplicationMasterContainer方法,启动AppMaster:

protected ContainerLaunchContext setupApplicationMasterContainer(

String yarnClusterEntrypoint,

boolean hasLogback,

boolean hasLog4j,

boolean hasKrb5,

int jobManagerMemoryMb) {

// ------------------ Prepare Application Master Container ------------------------------

......

// Set up the container launch context for the application master

ContainerLaunchContext amContainer = Records.newRecord(ContainerLaunchContext.class);

final Map startCommandValues = new HashMap<>();

......

// 与yarn集群打交道的Yarn终端,此Entrypoint会提供webMonitor、resourceManager、dispatcher 等服务

startCommandValues.put("class", yarnClusterEntrypoint);

final String amCommand =

BootstrapTools.getStartCommand(commandTemplate, startCommandValues);

amContainer.setCommands(Collections.singletonList(amCommand));

return amContainer;

}

ClusterEntrypoint[Main]

与yarn集群打交道,ClusterEntrypoint 包含了 webMonitor、resourceManager、dispatcher 的服务。

- 封装了Cluster启停的逻辑

- 根据配置文件来创建RpcService

- HaService

- HeartbeatService

- MetricRegistry

- 提供了几个抽象方法给子类(createDispatcher,createResourceManager,createRestEndpoint,createSerializableExecutionGraphStore)

Yarn相关子类(对应两种模式):

- YarnJobClusterEntrypoint

- YarnSessionClusterEntrypoint 3.

方法调用栈

YarnJobClusterEntrypoint[Main]

-> ClusterEntrypoint.runClusterEntrypoint(yarnJobClusterEntrypoint);

-> clusterEntrypoint.startCluster();

-> runCluster(configuration);

-> clusterComponent = dispatcherResourceManagerComponentFactory.create();

* 在同一进程中启动Dispatcher,ResourceManager和WebMonitorEndpoint组件服务

create -> {

webMonitorEndpoint.start();

resourceManager.start();

dispatcher.start();

}

* 重点关注ResourceManager,会创建TaskManager

-> resourceManager = resourceManagerFactory.createResourceManager()

-> YarnResourceManager.initialize()

* 创建 resourceManagerClient 和 nodeManagerClient

* YarnResourceManager 继承自 yarn 的 AMRMClientAsync.CallbackHandler接口,在Container分配完之后,回调如下接口:

-> void onContainersAllocated(List containers)

-> createTaskExecutorLaunchContext()

-> Utils.createTaskExecutorContext() -- 参数 YarnTaskExecutorRunner.class, 指明TaskManager的Main入口

-> nodeManagerClient.startContainer(container, taskExecutorLaunchContext);

代码分析

YarnJobClusterEntrypoint会启动一些重要的服务

dispatcherResourceManagerComponentFactory.create

public DispatcherResourceManagerComponent create(

Configuration configuration,

RpcService rpcService,

HighAvailabilityServices highAvailabilityServices,

BlobServer blobServer,

HeartbeatServices heartbeatServices,

MetricRegistry metricRegistry,

ArchivedExecutionGraphStore archivedExecutionGraphStore,

MetricQueryServiceRetriever metricQueryServiceRetriever,

FatalErrorHandler fatalErrorHandler) throws Exception {

// 创建服务后会启动部分服务

webMonitorEndpoint.start();

resourceManager.start(); -- 里面指明TaskExecutor(即TaskManager)的Main入口

dispatcher.start(); -- Dispatcher服务会处理client 的 submitjob,促使TaskExecutor上的任务执行

// 返回所有服务的封装类

return createDispatcherResourceManagerComponent(

dispatcher,

resourceManager,

dispatcherLeaderRetrievalService,

resourceManagerRetrievalService,

webMonitorEndpoint,

jobManagerMetricGroup);

} catch (Exception exception) {

......

}

}

JobSubmitHandler与Dispatcher

处理Client端任务提交,启动JobMaster,构建ExecutionGraph,并deploy所有Task任务.

方法调用栈

ClusterEntrypoint会启动Dispatcher服务:

Dispatcher

--> onStart()

--> startDispatcherServices()

-> submittedJobGraphStore.start(this)

-> leaderElectionService.start(this)

LeaderRetrievalHandler会从netty处理从Client发来的submitjob消息:

LeaderRetrievalHandler

-> channelRead0() -- 一个netty对象

-> AbstractHandler.respondAsLeader()

-> AbstractRestHandler.respondToRequest()

-> JobSubmitHandler.handleRequest

-> Dispatcher.submitJob

-> Dispatcher.internalSubmitJob

-> Dispatcher.persistAndRunJob

-> Dispatcher.runJob

-> Dispatcher.createJobManagerRunner -- 创建JobManagerRunner

-> jobManagerRunnerFactory.createJobManagerRunner

* 创建DefaultJobMasterServiceFactory

* new JobManagerRunner()

-> dispatcher.startJobManagerRunner -- 启动JobManagerRunner

-> jobManagerRunner.start();

-> ZooKeeperLeaderElectionService.start

-> ZooKeeperLeaderElectionService.isLeader

-> leaderContender.grantLeadership(issuedLeaderSessionID)

-> jobManagerRunner.verifyJobSchedulingStatusAndStartJobManager

-> startJobMaster(leaderSessionId) -- 启动JobMaster

-> jobMasterService.start

-> startJobExecution(newJobMasterId)

-> startJobMasterServices -- 包括slotPool和scheduler的启动,告知flinkresourceManager leader的地址,当FlinkRM和JM建立好连接后,slot就可以开始requesting slots

-> resetAndScheduleExecutionGraph -- 执行job

--> createAndRestoreExecutionGraph -- 生成ExecutionGraph

--> scheduleExecutionGraph

--> executionGraph.scheduleForExecution()

--> scheduleEager {

* 给Execution 分配 slots

--> allocateResourcesForAll()

* 遍历 execution,调用其 deploy 方法

--> execution.deploy()

--> taskManagerGateway.submitTask

--> [TaskExecutor] new Task()

--> [TaskExecutor] task.startTaskThread() -- 至此,任务真正执行

}

总结Yarn启动流程

- 运行 flink 脚本(flink.sh),从CliFrontend类开始提交流程;

- 创建 yarnClusterDescriptor,准备集群创建所需的信息;

- 部署Session集群,启动ApplicationMaster/JobManager,通过ClusterEntrypoint[Main]启动Flink所需的服务,如Dispatcher,ResourceManager和WebMonitorEndpoint等;

- ResourceManager会创建resourceManagerClient 和 nodeManagerClient,在Container分配完成,启动TaskExecutor的Container(同步指定TaskExecutor的Main入口);

- 3、4集群部署完毕,Client会进行任务提交,DIspatcher服务会接收到命令;

- Dispatcher通过JobManagerRunner启动JobMaster服务,构建ExecutionGraph,分配slot,通知TaskExecutor执行Task;

- 至此,任务真正执行。

几点说明:

- JobMaster:

负责单个 JobGraph 的执行的。JobManager 是老的 runtime 框架,1.7版本依然存在,但主要起作用的应该是 JobMaster。在1.8后,JobManager 类消失了。

JM 的主要执行在本节最后的源码分析有提及。 - YarnTaskExecutorRunner:

TaskExecutor 在 yarn 集群中的对象,相当于 TaskManager,它可能有多个 slots,每个 slot 执行一个具体的子任务。每个 TaskExecutor 会将自己的 slots 注册到 SlotManager 上,并汇报自己的状态,是忙碌状态,还是处于一个闲置的状态。