【进阶】 --- 多线程、多进程、异步IO 实用例子

asyncio 官方文档:https://docs.python.org/zh-cn/3.10/library/asyncio.html

多线程、多进程、异步IO实用例子:https://blog.csdn.net/lu8000/article/details/82315576

python之爬虫_并发(串行、多线程、多进程、异步IO):https://www.cnblogs.com/fat39/archive/2004/01/13/9044474.html

Python并发总结:多线程,多进程,异步IO:https://www.cnblogs.com/junmoxiao/p/11948993.html

关于asyncio异步io并发编程:https://zhuanlan.zhihu.com/p/158641367

Python中协程异步IO(asyncio)详解

- :https://zhuanlan.zhihu.com/zarten

- :https://zhuanlan.zhihu.com/p/59621713

asyncio:异步I/O、事件循环和并发工具:https://www.cnblogs.com/sidianok/p/12210857.html

在编写爬虫时,性能的消耗主要在IO请求中,当单进程单线程模式下请求URL时必然会引起等待,从而使得请求整体变慢。

- httpie:HTTPie 使用详解:https://zhuanlan.zhihu.com/p/45093545

- grequests,Requests + Gevent,访问:https://github.com/kennethreitz/grequests

- gevent,一个高并发的网络性能库,访问:http://www.gevent.org/

- twisted,基于事件驱动的网络引擎框架。访问:https://twistedmatrix.com/trac/

支持 asyncio 的异步 Python 库:https://github.com/aio-libs

一、多线程、多进程

1.同步执行

2.多线程执行

3.多线程 + 回调函数执行

4.多进程执行

5.多进程 + 回调函数执行

二、异步

1.asyncio 示例 1

asyncio 示例 2

python 异步编程之 asyncio(百万并发)

学习 python 高并发模块 asynio

2.asyncio + aiohttp

3.asyncio + requests

4.gevent + requests

5.grequests

6.Twisted示例

7.Tornado

8.Twisted更多

9.史上最牛逼的异步 IO 模块

一、多线程、多进程

1. 同步执行

示例 1( 同步执行 ):

import requests

import time

from lxml import etree

url_list = [

'https://so.gushiwen.cn/shiwens/',

'https://so.gushiwen.cn/mingjus/',

'https://so.gushiwen.cn/authors/',

]

def get_title(url: str):

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '

'(KHTML, like Gecko) Chrome/86.0.4240.183 Safari/537.36'

}

resp = requests.get(url, headers=headers)

resp.encoding = 'utf-8'

if 200 == resp.status_code:

print(resp.text)

else:

print(f'[status_code:{resp.status_code}]:{r.url}')

if __name__ == '__main__':

start = time.time()

for url in url_list:

get_title(url)

print(f'cost time: {time.time() - start}s')

使用 httpx 模块的同步调用( httpx 可同步,可异步 )

import time

import httpx

def make_request(client):

resp = client.get('https://httpbin.org/get')

result = resp.json()

print(f'status_code : {resp.status_code}')

assert 200 == resp.status_code

def main():

session = httpx.Client()

# 100 次调用

for _ in range(10):

make_request(session)

if __name__ == '__main__':

# 开始

start = time.time()

main()

# 结束

end = time.time()

print(f'同步:发送100次请求,耗时:{end - start}')

2. 多线程 执行( 线程池 )

线程池:字面意思就是线程的池子,例如创建一个 10个线程的池子,里面有10个线程,假设有100个任务,可以同时把这 100个任务全部加到线程池里面,但是同一时间只有10个线程执行10个任务,每当一个线程执行完成一个任务时,就会自动继续执行下一个任务,直到100个任务全部执行完毕,

from concurrent.futures import ThreadPoolExecutor

import requests

def fetch_sync(r_url):

response = requests.get(r_url)

print(f"{r_url} ---> {response.status_code}")

url_list = [

'https://www.baidu.com',

'https://www.bing.com'

]

pool = ThreadPoolExecutor(5)

for url in url_list:

pool.submit(fetch_sync, url)

pool.shutdown(wait=True)

3. 多线程 + 回调函数执行

from concurrent.futures import ThreadPoolExecutor

import requests

def fetch_sync(r_url):

response = requests.get(r_url, verify=False)

return response

def callback(future):

resp = future.result()

print(f"{resp.url} ---> {resp.status_code}")

url_list = [

'https://www.baidu.com',

'https://www.bing.com'

]

pool = ThreadPoolExecutor(5)

for url in url_list:

v = pool.submit(fetch_sync, url)

v.add_done_callback(callback)

pool.shutdown(wait=True)4. 多进程 执行 ( 进程池 )

import requests

from concurrent import futures

def fetch_sync(r_url):

response = requests.get(r_url)

return response

if __name__ == '__main__':

url_list = [

'https://www.baidu.com',

'https://www.bing.com'

]

with futures.ProcessPoolExecutor(5) as executor:

res = [executor.submit(fetch_sync, url) for url in url_list]

print(res)

示例 :

import requests

from concurrent import futures

import time

import urllib3

urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

def fetch_sync(args):

i, r_url = args

print(f'index : {i}')

response = requests.get(r_url, verify=False)

time.sleep(2)

return response.status_code

def callback(future):

print(future.result())

if __name__ == '__main__':

# url_list = ['https://www.github.com', 'https://www.bing.com']

url = 'https://www.github.com'

with futures.ProcessPoolExecutor(5) as executor:

for index in range(1000):

v = executor.submit(fetch_sync, (index, url))

v.add_done_callback(callback)

pass

5. 多进程 + 回调函数执行

import requests

from concurrent import futures

def fetch_sync(r_url):

response = requests.get(r_url, verify=False)

return response

def callback(future):

print(future.result())

if __name__ == '__main__':

url_list = ['https://www.github.com', 'https://www.bing.com']

with futures.ProcessPoolExecutor(5) as executor:

for url in url_list:

v = executor.submit(fetch_sync, url)

v.add_done_callback(callback)

pass

示例 ( 返回值顺序不确定 ):

from concurrent.futures import ProcessPoolExecutor

def func_test(*args, **kwargs):

print(kwargs)

return kwargs

def func_callback(*args, **kwargs):

print(kwargs)

def main():

with ProcessPoolExecutor(3) as tp:

for i in range(100):

# 异步阻塞,回调函数,给ret对象绑定一个回调函数。

# 等ret对应的任务有了结果之后,立即调用 func_callback 这个函数

# 就可以立即对函数进行处理,而不是按照顺序接受结果,处理结果

ret = tp.submit(func_test, index=i)

ret.add_done_callback(func_callback)

if __name__ == '__main__':

main()

pass按顺序获取结果 ( 返回值顺序确定 ):使用 map 函数

from concurrent.futures import ProcessPoolExecutor

def func_test(*args, **kwargs):

print(args)

return args

def main():

with ProcessPoolExecutor(3) as tp:

temp_list = [{'index': index} for index in range(100)]

# print({'index': index} for index in range(100))

result = tp.map(func_test, temp_list)

print(f"result ---> {list(result)}")

if __name__ == '__main__':

main()

pass使用 队列

import requests

from lxml import etree

from multiprocessing import Process, Queue

def get_image_src(q=None):

url = "http://www.umeituku.com/meinvtupian/"

response = requests.get(url)

response.encoding = 'utf-8'

s_html = etree.HTML(text=response.text)

s_all_li = s_html.xpath('//div[@class="TypeList"]//li/a/@href')

# print(s_all_li)

for url in s_all_li:

q.put(url)

else:

# 添加标识,取到这个时说明已经没有任务

q.put("任务添加结束")

def download_image(q=None):

while True:

task = q.get() # 如果队列里面没数据,就会阻塞

print(task)

if "任务添加结束" == task:

# 取到标识,直接退出循环

print("任务结束")

break

pass

if __name__ == '__main__':

url_queue = Queue()

# url_queue 必须参数参过去才能实现共享

p1 = Process(target=get_image_src, args=(url_queue,))

p2 = Process(target=download_image, args=(url_queue,))

p1.start()

p2.start()

pass异步 队列

import asyncio

import aiohttp

import aiofiles

from queue import Queue

from lxml import etree

url_queue = asyncio.Queue()

async def get_image_src(q=None):

url = "http://www.umeituku.com/meinvtupian/"

async with aiohttp.ClientSession() as session:

async with session.get(url) as resp:

html = await resp.text()

s_html = etree.HTML(text=html)

s_all_li = s_html.xpath('//div[@class="TypeList"]//li/a/@href')

# print(s_all_li)

for url in s_all_li:

await q.put(url)

else:

# 添加标识,取到这个时说明已经没有任务

await q.put("任务添加结束")

async def download_image(q=None):

while True:

task = await q.get() # 如果队列里面没数据,就会阻塞

print(task)

if "任务添加结束" == task:

# 取到标识,直接退出循环

print("任务结束")

break

pass

async def main():

task_list = [

asyncio.create_task(get_image_src(q=url_queue)),

asyncio.create_task(download_image(q=url_queue)),

]

await asyncio.wait(task_list)

if __name__ == '__main__':

asyncio.run(main())

pass示例:

import asyncio

import aiohttp

from asyncio import Queue

from lxml import etree

index = 1

url_queue = Queue()

async def get_every_chapter_url():

url = 'https://www.zanghaihua.org/book/40626/'

async with aiohttp.ClientSession() as session:

async with session.get(url) as resp:

html = await resp.text()

s_html = etree.HTML(text=html)

all_chapter = s_html.xpath('//div[@class="section-box"]//li//a/@href')

temp_list = [f'https://www.zanghaihua.org/book/40626/{item}' for item in all_chapter]

print(f"章节数量 ---> {len(temp_list)}")

task_list = [asyncio.create_task(download(item)) for item in temp_list]

await asyncio.wait(task_list)

pass

async def download(url=None):

global index

async with aiohttp.ClientSession() as session:

async with session.get(url) as resp:

html = await resp.text()

# print(html)

print(f"[{index}]url ---> {url}")

index += 1

pass

def main():

asyncio.run(get_every_chapter_url())

if __name__ == '__main__':

main()

pass二、异步

协程 只能运行在 事件循环 中。对于 事件循环,可以动态的增加协程 到 事件循环 中, 而不是在一开始就确定所有需要协程。

默认情况下 asyncio.get_event_loop() 是一个 select模型 的 事件循环。

默认的 asyncio.get_event_loop() 事件循环属于主线程。

参考 python asyncio 协程:https://blog.csdn.net/dashoumeixi/article/details/81001681

提示:一般是一个线程一个事件循环,为什么要一个线程一个事件循环?

如果你要使用多个事件循环 ,创建线程后调用:

lp = asyncio.new_event_loop() # 创建一个新的事件循环

asyncio.set_event_loop(lp) # 设置当前线程的事件循环

核心思想: yield from / await 就这2个关键字,运行(驱动)一个协程, 同时交出当前函数的控制权,让事件循环执行下个任务。

yield from 的实现原理 :https://blog.csdn.net/dashoumeixi/article/details/84076812

要搞懂 asyncio 协程,还是先把生成器弄懂,如果对生成器很模糊,比如 yield from 生成器对象,这个看不懂的话,建议先看 :python生成器 yield from:https://blog.csdn.net/dashoumeixi/article/details/80936798

有 2 种方式让协程运行起来,协程(生成器)本身是不运行的

- 1. await / yield from 协程,这一组能等待协程完成。

- 2. asyncio.ensure_future / async(协程) ,这一组不需要等待协程完成。

注意:

- 1. 协程就是生成器的增强版 ( 多了send 与 yield 的接收 ),在 asyncio 中的协程 与 生成器对象不同点:

asyncio协程: 函数内部不能使用 yield [如果使用会抛RuntimeError],只能使用 yield from / await,

一般的生成器: yield 或 yield from 2个都能用,至少使用一个。这 2个本来就是一回事, 协程只是不能使用 yield- 2. 在 asycio 中 所有的协程 都被自动包装成一个个 Task / Future 对象,但其本质还是一个生成器,因此可以 yield from / await Task/Futrue

基本流程:

- 1. 定义一个协程 (async def 或者 @asyncio.coroutine 装饰的函数)

- 2. 调用上述函数,获取一个协程对象 【不能使用yield,除非你自己写异步模块,毕竟最终所调用的还是基于yield的生成器函数】。通过 asyncio.ensure_future 或 asyncio.async 函数调度协程(这部意味着要开始执行了) ,返回了一个 Task 对象,Task对象 是 Future对象 的 子类, ( 这步可作可不作,只要是一个协程对象,一旦扔进事件队列中,将自动给你封装成Task对象 )

- 3. 获取一个事件循环 asyncio.get_event_loop() ,默认此事件循环属于主线程

- 4. 等待事件循环调度协程

后面的例子着重说明了一下 as_completed,附加了源码。 先说明一下:

- 1. as_completed 每次迭代返回一个协程,

- 2. 这个协程内部从 Queue 中取出先完成的 Future 对象

- 3. 然后我们再 await coroutine

示例 1:

import asyncio

"""

用async def (新语法) 定义一个函数,同时返回值

asyncio.sleep 模拟IO阻塞情况 ; await 相当于 yield from.

await 或者 yield from 交出函数控制权(中断),让事件循环执行下个任务 ,一边等待后面的协程完成

"""

async def func(i):

print('start')

await asyncio.sleep(i) # 交出控制权

print('done')

return i

co = func(2) # 产生协程对象

print(co)

lp = asyncio.get_event_loop() # 获取事件循环

task = asyncio.ensure_future(co) # 开始调度

lp.run_until_complete(task) # 等待完成

print(task.result()) # 获取结果

添加回调

示例 1:

import asyncio

"""

添加一个回调:add_done_callback

"""

async def func(i):

print('start')

await asyncio.sleep(i)

return i

def call_back(v):

print('callback , arg:', v, 'result:', v.result())

if __name__ == '__main__':

co = func(2) # 产生协程对象

lp = asyncio.get_event_loop() # 获取事件循环

# task = asyncio.run_coroutine_threadsafe(co) # 开始调度

task = asyncio.ensure_future(co) # 开始调度

task.add_done_callback(call_back) # 增加回调

lp.run_until_complete(task) # 等待

print(task.result()) # 获取结果

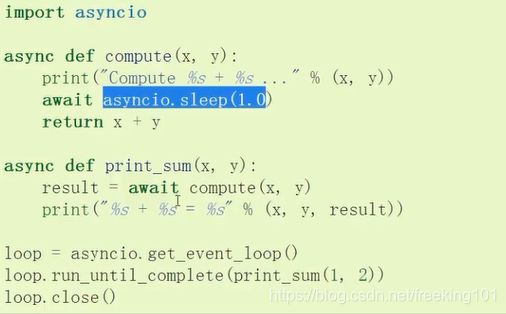

子协程调用原理图

官方的一个实例如下

从下面的原理图我们可以看到

- 1 当 事件循环 处于运行状态的时候,任务Task 处于pending(等待),会把控制权交给委托生成器 print_sum

- 2 委托生成器 print_sum 会建立一个双向通道为Task和子生成器,调用子生成器compute并把值传递过去

- 3 子生成器compute会通过委托生成器建立的双向通道把自己当前的状态suspending(暂停)传给Task,Task 告诉 loop 它数据还没处理完成

- 4 loop 会循环检测 Task ,Task 通过双向通道去看自生成器是否处理完成

- 5 子生成器处理完成后会向委托生成器抛出一个异常和计算的值,并关闭生成器

- 6 委托生成器再把异常抛给任务(Task),把任务关闭

- 7 loop 停止循环

call_soon、call_at、call_later、call_soon_threadsafe

- call_soon 循环开始检测时,立即执行一个回调函数

- call_at 循环开始的第几秒s执行

- call_later 循环开始后10s后执行

- call_soom_threadsafe 立即执行一个安全的线程

import asyncio

import time

def call_back(str_var, loop):

print("success time {}".format(str_var))

def stop_loop(str_var, loop):

time.sleep(str_var)

loop.stop()

# call_later, call_at

if __name__ == "__main__":

event_loop = asyncio.get_event_loop()

event_loop.call_soon(call_back, 'loop 循环开始检测立即执行', event_loop)

now = event_loop.time() # loop 循环时间

event_loop.call_at(now + 2, call_back, 2, event_loop)

event_loop.call_at(now + 1, call_back, 1, event_loop)

event_loop.call_at(now + 3, call_back, 3, event_loop)

event_loop.call_later(6, call_back, "6s后执行", event_loop)

# event_loop.call_soon_threadsafe(stop_loop, event_loop)

event_loop.run_forever()

( ***** ) 多线程、协程 关联 ( ***** )

asyncio 就是遍历 event_loop 获取消息,是一个单线程阻塞函数。在遍历循环过程中如果遇到某个协程阻塞就会卡住,一直等待结果返回,这时候就需要用到多线程防止阻塞。run_coroutine_threadsafe函数在新线程中建立新event_loop,可以动态添加协程,

async 的 get_event_loop和new_event_loop的使用

首先,event loop 就是一个普通 Python 对象,您可以通过 asyncio.new_event_loop() 创建无数个 event loop 对象。只不过,loop.run_xxx() 家族的函数都是阻塞的,比如 run_until_complete() 会等到给定的 coroutine 完成再结束,而 run_forever() 则会永远阻塞当前线程,直到有人停止了该 event loop 为止。所以在同一个线程里,两个 event loop 无法同时 run,但这不能阻止您用两个线程分别跑两个 event loop。

初始情况下,get_event_loop() 只会在主线程帮您创建新的 event loop,并且在主线程中多次调用始终返回该 event loop;而在其他线程中调用 get_event_loop() 则会报错,除非您在这些线程里面手动调用过 set_event_loop()。

总结:想要运用协程,首先要生成一个loop对象,然后loop.run_xxx()就可以运行协程了,而如何创建这个loop, 方法有两种:对于主线程是loop=get_event_loop(). 对于其他线程需要首先loop=new_event_loop(),然后set_event_loop(loop)。

new_event_loop()是创建一个event loop对象,而set_event_loop(eventloop对象)是将event loop对象指定为当前协程的event loop,一个协程内只允许运行一个event loop,不要一个协程有两个event loop交替运行。

Python Asyncio与多线程/多进程那些事

:https://zhuanlan.zhihu.com/p/38575715

asyncio 的事件循环不是线程安全的,一个event loop只能在一个线程内调度和执行任务,并且同一时间只有一个任务在运行,这可以在asyncio的源码中观察到,当程序调用get_event_loop获取event loop时,会从一个本地的Thread Local对象获取属于当前线程的event loop:

class _Local(threading.local):

_loop = None

_set_called = False

def get_event_loop(self):

"""Get the event loop.

This may be None or an instance of EventLoop.

"""

if (self._local._loop is None and

not self._local._set_called and

isinstance(threading.current_thread(), threading._MainThread)):

self.set_event_loop(self.new_event_loop())

if self._local._loop is None:

raise RuntimeError('There is no current event loop in thread %r.'

% threading.current_thread().name)

return self._local._loop在主线程中,调用get_event_loop总能返回属于主线程的event loop对象,如果是处于非主线程中,还需要调用set_event_loop方法指定一个event loop对象,这样get_event_loop才会获取到被标记的event loop对象:

def set_event_loop(self, loop):

"""Set the event loop."""

self._local._set_called = True

assert loop is None or isinstance(loop, AbstractEventLoop)

self._local._loop = loop那么如果 event loop 在A线程中运行的话,B线程能使用它来调度任务吗?

答案是:不能。这可以在下面这个例子中被观察到:

import asyncio

import threading

def task():

print("task")

def run_loop_inside_thread(loop):

loop.run_forever()

loop = asyncio.get_event_loop()

threading.Thread(target=run_loop_inside_thread, args=(loop,)).start()

loop.call_soon(task)主线程新建了一个event loop对象,接着这个event loop会在派生的一个线程中运行,这时候主线程想在event loop上调度一个工作函数,然而结果却是什么都没有输出。

为此,asyncio 提供了一个call_soon_threadsafe的方法,专门解决针对线程安全的调用:

import asyncio

import threading

import time

def task_1():

while True:

print("task_1")

time.sleep(1)

def task_2():

while True:

print("task_2")

time.sleep(1)

def run_loop_inside_thread(loop=None):

loop.run_forever()

loop = asyncio.get_event_loop()

threading.Thread(target=run_loop_inside_thread, args=(loop,)).start()

loop.call_soon_threadsafe(task_1)

loop.call_soon_threadsafe(task_2)指定 事件循环 运行协程

import asyncio

import threading

import time

async def task_1():

while True:

print("task_1")

# time.sleep(1)

await asyncio.sleep(1)

async def task_2():

while True:

print("task_2")

# time.sleep(1)

await asyncio.sleep(1)

def run_loop_inside_thread(loop=None):

asyncio.set_event_loop(loop)

loop.run_forever()

thread_loop = asyncio.new_event_loop()

t = threading.Thread(target=run_loop_inside_thread, args=(thread_loop, ))

t.start()

asyncio.run_coroutine_threadsafe(task_1(), thread_loop)

asyncio.run_coroutine_threadsafe(task_2(), thread_loop)示例:

import time

import asyncio

import threading

async def worker_1(index=None):

while True:

await asyncio.sleep(1)

print(f"worker_1 ---> {index}")

async def worker_2(index=None):

while True:

await asyncio.sleep(1)

print(f"worker_2 ---> {index}")

async def add_task():

for index in range(100):

# 将不同参数main这个协程循环注册到运行在线程中的循环,

# thread_loop会获得一循环任务

# 注意:run_coroutine_threadsafe 这个方法只能用在运行在线程中的循环事件使用

asyncio.run_coroutine_threadsafe(worker_1(index), thread_loop)

asyncio.run_coroutine_threadsafe(worker_2(index), thread_loop)

def loop_in_thread(loop: asyncio.AbstractEventLoop = None):

# 运行事件循环, loop 以参数的形式传递进来运行

asyncio.set_event_loop(loop)

loop.run_forever()

if __name__ == '__main__':

# 获取一个事件循环

thread_loop = asyncio.new_event_loop()

# 将次事件循环运行在一个线程中,防止阻塞当前主线程,运行线程,同时协程事件循环也会运行

threading.Thread(target=loop_in_thread, args=(thread_loop,)).start()

# 将生产任务的协程注册到这个循环中

main_loop = asyncio.get_event_loop()

# 运行次循环

main_loop.run_until_complete(add_task())共用 一个事件循环

import time

import asyncio

def blocking_io():

print(f"开始 blocking_io ---> {time.strftime('%X')}")

# Note that time.sleep() can be replaced with any blocking

# IO-bound operation, such as file operations.

time.sleep(5)

print(f"完成 blocking_io ---> {time.strftime('%X')}")

async def func_task():

print('\nfunc_task\n')

async def main():

print(f"开始 main ---> {time.strftime('%X')}")

await asyncio.gather(

asyncio.to_thread(blocking_io),

func_task(),

asyncio.sleep(1),

)

print(f"完成 main ---> {time.strftime('%X')}")

"""

在任何协程中直接调用 blocking_io() 会在其持续时间内阻塞事件循环,从而导致额外的 5 秒运行时间。

相反,通过使用 asyncio.to_thread() ,我们可以在单独的线程中运行它而不会阻塞事件循环。

"""

asyncio.run(main())不同线程中的事件循环

事件循环中维护了一个队列(FIFO, Queue) ,通过另一种方式来调用:

import time

import datetime

import asyncio

"""

事件循环中维护了一个FIFO队列

通过call_soon 通知事件循环来调度一个函数.

"""

def func(x):

print(f'x:{x}, start time:{datetime.datetime.now().replace(microsecond=0)}')

time.sleep(x)

print(f'func invoked:{x}')

loop = asyncio.get_event_loop()

loop.call_soon(func, 1) # 调度一个函数

loop.call_soon(func, 2)

loop.call_soon(func, 3)

loop.run_forever() # 阻塞

'''

x:1, start time:2020-10-01 15:45:46

func invoked:1

x:2, start time:2020-10-01 15:45:47

func invoked:2

x:3, start time:2020-10-01 15:45:49

func invoked:3

'''可以看到以上操作是同步的。下面通过 asyncio.run_coroutine_threadsafe 函数可以把上述函数调度变成异步执行:

import time

import datetime

import asyncio

"""

1.首先会调用asyncio.run_coroutine_threadsafe 这个函数.

2.之前的普通函数修改成协程对象

"""

async def func(x):

print(f'x:{x}, start time:{datetime.datetime.now().replace(microsecond=0)}')

await asyncio.sleep(x)

print(f'func invoked:{x}, now:{datetime.datetime.now().replace(microsecond=0)}')

loop = asyncio.get_event_loop()

co1 = func(1)

co2 = func(2)

co3 = func(3)

asyncio.run_coroutine_threadsafe(co1, loop) # 调度

asyncio.run_coroutine_threadsafe(co2, loop)

asyncio.run_coroutine_threadsafe(co3, loop)

loop.run_forever() # 阻塞

'''

x:1, start time:2020-10-01 15:49:32

x:2, start time:2020-10-01 15:49:32

x:3, start time:2020-10-01 15:49:32

func invoked:1, now:2020-10-01 15:49:33

func invoked:2, now:2020-10-01 15:49:34

func invoked:3, now:2020-10-01 15:49:35

'''上面 2 个例子只是告诉你 2 件事情。

- 1. run_coroutine_threadsafe是异步线程安全 ,call_soon是同步。

- 2. run_coroutine_threadsafe 这个函数 对应 ensure_future (只能作用于同一线程中)。

可以在一个子线程中运行一个事件循环,然后在主线程中动态的添加协程,这样既不阻塞主线程执行其他任务,子线程也可以异步的执行协程。

注意:默认情况下获取的 event_loop 是主线程的,所以要在子线程中使用 event_loop 需要 new_event_loop 。如果在子线程中直接获取 event_loop 会抛异常 。

源代码中的判断:isinstance(threading.current_thread(), threading._MainThread)

示例:

import os

import sys

import queue

import threading

import time

import datetime

import asyncio

"""

1. call_soon , call_soon_threadsafe 是同步的

2. asyncio.run_coroutine_threadsafe(coro, loop) -> 对应 asyncio.ensure_future

是在 事件循环中 异步执行。

"""

# 在子线程中执行一个事件循环 , 注意需要一个新的事件循环

def thread_loop(loop: asyncio.AbstractEventLoop):

print('线程开启 tid:', threading.currentThread().ident)

asyncio.set_event_loop(loop) # 设置一个新的事件循环

loop.run_forever() # run_forever 是阻塞函数,所以,子线程不会退出。

async def func(x, q):

current_time = datetime.datetime.now().replace(microsecond=0)

msg = f'func: {x}, time:{current_time}, tid:{threading.currentThread().ident}'

print(msg)

await asyncio.sleep(x)

q.put(x)

if __name__ == '__main__':

temp_queue = queue.Queue()

lp = asyncio.new_event_loop() # 新建一个事件循环, 如果使用默认的, 则不能放入子线程

thread_1 = threading.Thread(target=thread_loop, args=(lp,))

thread_1.start()

co1 = func(2, temp_queue) # 2个协程

co2 = func(3, temp_queue)

asyncio.run_coroutine_threadsafe(co1, lp) # 开始调度在子线程中的事件循环

asyncio.run_coroutine_threadsafe(co2, lp)

print(f'开始事件:{datetime.datetime.now().replace(microsecond=0)}')

while 1:

if temp_queue.empty():

print('队列为空,睡1秒继续...')

time.sleep(1)

continue

x = temp_queue.get() # 如果为空,get函数会直接阻塞,不往下执行

current_time = datetime.datetime.now().replace(microsecond=0)

msg = f'main :{x}, time:{current_time}'

print(msg)

time.sleep(1)

示例:

import asyncio

import threading

import time

async def task_1():

while True:

print("task_1")

# time.sleep(1)

await asyncio.sleep(1)

async def task_2():

while True:

print("task_2")

# time.sleep(1)

await asyncio.sleep(1)

def run_loop_inside_thread(loop=None):

asyncio.set_event_loop(loop)

loop.run_forever()

thread_loop = asyncio.new_event_loop()

t = threading.Thread(target=run_loop_inside_thread, args=(thread_loop, ))

t.start()

asyncio.run_coroutine_threadsafe(task_1(), thread_loop)

asyncio.run_coroutine_threadsafe(task_2(), thread_loop)在多线程中启用多个事件循环

示例:

import threading

import asyncio

def thread_loop_task(loop):

# 为子线程设置自己的事件循环

asyncio.set_event_loop(loop)

async def work_2():

while True:

print('work_2 on loop:%s' % id(loop))

await asyncio.sleep(2)

async def work_4():

while True:

print('work_4 on loop:%s' % id(loop))

await asyncio.sleep(4)

future = asyncio.gather(work_2(), work_4())

loop.run_until_complete(future)

if __name__ == '__main__':

# 创建一个事件循环thread_loop

thread_loop = asyncio.new_event_loop()

# 将thread_loop作为参数传递给子线程

t = threading.Thread(target=thread_loop_task, args=(thread_loop,))

t.daemon = True

t.start()

main_loop = asyncio.get_event_loop()

async def main_work():

while True:

print('main on loop:%s' % id(main_loop))

await asyncio.sleep(4)

main_loop.run_until_complete(main_work())下面例子中 asyncio.ensure_future/async 都可以换成 asyncio.run_coroutine_threadsafe 【 在不同线程中的事件循环 】:

run_in_executor、run_coroutine_threadsafe

:https://blog.csdn.net/hellojike/article/details/115092039

ThreadPollExecutor 和 asyncio 完成阻塞 IO 请求

在 asyncio 中集成线程池处理耗时IO,在协程中同步阻塞的写法,但有些时候不得已就是一些同步耗时的接口,可以把 线程池 集成到 asynico 模块中

import asyncio

from concurrent import futures

task_list = []

loop = asyncio.get_event_loop()

executor = futures.ThreadPoolExecutor(3)

def get_url(t_url=None):

print(t_url)

for url in range(20):

url = "http://shop.projectsedu.com/goods/{}/".format(url)

task = loop.run_in_executor(executor, get_url, url)

task_list.append(task)

loop.run_until_complete(asyncio.wait(task_list))示例代码:

# 使用多线程:在 协程 中集成阻塞io

import asyncio

from concurrent.futures import ThreadPoolExecutor

import socket

from urllib.parse import urlparse

def get_url(url):

# 通过socket请求html

url = urlparse(url)

host = url.netloc

path = url.path

if path == "":

path = "/"

# 建立socket连接

client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

# client.setblocking(False)

client.connect((host, 80)) # 阻塞不会消耗cpu

# 不停的询问连接是否建立好, 需要while循环不停的去检查状态

# 做计算任务或者再次发起其他的连接请求

client.send("GET {} HTTP/1.1\r\nHost:{}\r\nConnection:close\r\n\r\n".format(path, host).encode("utf8"))

data = b""

while True:

d = client.recv(1024)

if d:

data += d

else:

break

data = data.decode("utf8")

html_data = data.split("\r\n\r\n")[1]

print(html_data)

client.close()

if __name__ == "__main__":

import time

start_time = time.time()

loop = asyncio.get_event_loop()

executor = ThreadPoolExecutor(3)

tasks = []

for url in range(20):

url = "http://shop.projectsedu.com/goods/{}/".format(url)

task = loop.run_in_executor(executor, get_url, url)

tasks.append(task)

loop.run_until_complete(asyncio.wait(tasks))

print("last time:{}".format(time.time() - start_time))

示例

在 Asyncio 中运行阻塞任务:https://zhuanlan.zhihu.com/p/610881194

import time

import asyncio

def blocking_task():

# report a message

print('Task starting')

# block for a while

time.sleep(2)

# report a message

print('Task done')

# main coroutine

async def main():

print('Main running the blocking task')

# create a coroutine for the blocking task

coro = asyncio.to_thread(blocking_task)

# schedule the task

task = asyncio.create_task(coro)

# report a message

print('Main doing other things')

# allow the scheduled task to start

await asyncio.sleep(0)

# await the task

await task

# run the asyncio program

asyncio.run(main())asyncio 的 同步 和 通信

在多少线程中考虑安全性,需要加锁,在协程中是不需要的

import asyncio

total = 0

lock = None

async def add():

global total

for _ in range(1000):

total += 1

async def desc():

global total, lock

for _ in range(1000):

total -= 1

if __name__ == '__main__':

tasks = [add(), desc()]

loop = asyncio.get_event_loop()

loop.run_until_complete(asyncio.wait(tasks))

print(total)

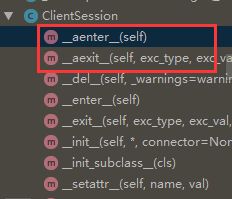

在有些情况中,对协程还是需要类似锁的机制

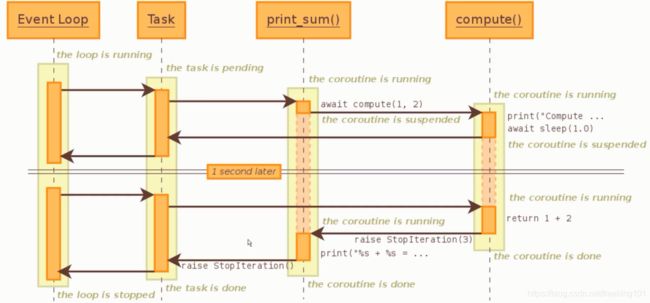

示例:parse_response 和 use_response 有共同调用的代码,get_response、parse_response 去请求的时候 如果 get_response 也去请求,会触发网站的反爬虫机制.

这就需要我们像上诉代码那样加 lock,同时 get_response 和 use_response 中都调用了parse_response,我们想在 get_response 中只请求一次,下次用缓存,所以要用到锁

import asyncio

import aiohttp

from asyncio import Lock

cache = {}

lock = Lock()

async def get_response(url):

async with lock: # 等价于 with await lock: 还有async for 。。。类似的用法

# 这里使用async with 是因为 Lock中有__await__ 和 __aenter__两个魔法方法

# 和线程一样, 这里也可以用 await lock.acquire() 并在结束时 lock.release

if url in cache:

return cache[url]

print("第一次请求")

response = aiohttp.request('GET', url)

cache[url] = response

return response

async def parse_response(url):

response = await get_response(url)

print('parse_response', response)

# do some parse

async def use_response(url):

response = await get_response(url)

print('use_response', response)

# use response to do something interesting

if __name__ == '__main__':

tasks = [parse_response('baidu'), use_response('baidu')]

loop = asyncio.get_event_loop()

# loop.run_until_complete将task放到loop中,进行事件循环, 这里必须传入的是一个list

loop.run_until_complete(asyncio.wait(tasks))

输出结果如下

asyncio 通信 queue

协程是单线程的,所以协程中完全可以使用全局变量实现 queue 来相互通信,但是如果想要在 queue 中定义存放有限的最大数目,需要在 put 和 get 的前面都要加 await

from asyncio import Queue

queue = Queue(maxsize=3)

await queue.get()

await queue.put()一个事件循环中执行多个 task,实现并发执行

future 和 task:

- future 是一个结果的容器,结果执行完后在内部会回调 call_back 函数

- task 是 future 的子类,可以用来激活协程。( task 是 协程 和 Future 的 桥梁 )

wait、gather、await

1. wait、gather 这2个函数都是用于获取结果的,且都不阻塞,直接返回一个生成器对象可用于 yield from / await

2. 两种用法可以获取执行完成后的结果:

第一种: result = asyncio.run_until_completed(asyncio.wait/gather) 执行完成所有之后获取结果

第二种: result = await asyncio.wait/gather 在一个协程内获取结果3. as_completed 与并发包 concurrent 中的行为类似,哪个任务先完成哪个先返回,内部实现是 yield from Queue.get()

4. 嵌套:await / yield from 后跟协程,直到后面的协程运行完毕,才执行 await / yield from 下面的代码,整个过程是不阻塞的

wait 和 gather 区别

这两个都可以添加多个任务到事件循环中

一般使用 asyncio.wait(tasks) 的地方也可以使用 asyncio.gather(tasks) ,但是 wait 接收一堆 task,gather接收一个 task 列表。

asyncio.wait(tasks)方法返回值是两组 task/future的 set。dones, pendings = await asyncio.wait(tasks) 其中

- dones 是 task的 set,

- pendings 是 future 的 set。

asyncio.gather(tasks) 返回一个结果的 list。

gather 比 wait 更加的高级

- 可以对任务进行分组

- 可以取消任务

import asyncio

import time

async def get_html(url):

global index

print(f"{index} start get url")

await asyncio.sleep(2)

index += 1

print(f"{index} end get url")

if __name__ == "__main__":

start_time = time.time()

index = 1

loop = asyncio.get_event_loop()

tasks = [get_html("http://www.imooc.com") for i in range(10)]

# gather和wait的区别

# tasks = [get_html("http://www.imooc.com") for i in range(10)]

# loop.run_until_complete(asyncio.wait(tasks))

group1 = [get_html("http://projectsedu.com") for i in range(2)]

group2 = [get_html("http://www.imooc.com") for i in range(2)]

group1 = asyncio.gather(*group1)

group2 = asyncio.gather(*group2)

loop.run_until_complete(asyncio.gather(group1, group2))

print(time.time() - start_time)

# gather 和 wait的区别: gather返回值是有顺序(按照你添加任务的顺序返回的)的.

# return_exceptions=True, 如果有错误信息. 返回错误信息, 其他任务正常执行.

# return_exceptions=False, 如果有错误信息. 所有任务直接停止

result = await asyncio.gather(*tasks, return_exceptions=True)

示例 1:

import asyncio

"""

并发 执行多个任务。

调度一个Task对象列表

调用 asyncio.wait 或者 asyncio.gather 获取结果

"""

async def func(i):

print('start')

# 交出控制权,事件循环执行下个任务,同时等待完成

await asyncio.sleep(i)

return i

async def func_sleep():

await asyncio.sleep(2)

def test_1():

# asyncio create_task永远运行

# https://www.pythonheidong.com/blog/article/160584/ca5dc07f62899cedad64/

lp = asyncio.get_event_loop()

tasks = [lp.create_task(func(i)) for i in range(3)]

lp.run_until_complete(func_sleep())

# 或者

# lp.run_until_complete(asyncio.wait([func_sleep(), ]))

def test_2():

lp = asyncio.get_event_loop()

# tasks = [func(i) for i in range(3)]

# tasks = [asyncio.ensure_future(func(i)) for i in range(3)] # asyncio.ensure_future

# 或者

tasks = [lp.create_task(func(i)) for i in range(3)] # lp.create_task

lp.run_until_complete(asyncio.wait(tasks))

for task in tasks:

print(task.result())

if __name__ == '__main__':

# test_1()

test_2()

pass

示例 2:

import asyncio

"""

通过 await 或者 yield from 形成1个链, 后面跟其他协程.

形成一个链的目的很简单,

当前协程需要这个结果才能继续执行下去.

就跟普通函数调用其他函数获取结果一样

"""

async def func(i):

print('start')

await asyncio.sleep(i)

return i

async def to_do():

print('to_do start')

tasks = []

# 开始调度3个协程对象

for i in range(3):

tasks.append(asyncio.ensure_future(func(i)))

# 在协程内等待结果. 通过 await 来交出控制权, 同时等待tasks完成

task_done, task_pending = await asyncio.wait(tasks)

print('to_do get result')

# 获取已经完成的任务

for task in task_done:

print('task_done:', task.result())

# 未完成的

for task in task_pending:

print('pending:', task)

if __name__ == '__main__':

lp = asyncio.get_event_loop() # 获取事件循环

lp.run_until_complete(to_do()) # 把协程对象放进去

# lp.close() # 关闭事件循环

as_completed 函数返回一个迭代器,每次迭代一个协程。

事件循环内部有一个 Queue(queue.Queue 线程安全) , 先完成的先入队。

as_completed 迭代的协程源码是 : 注意 yield from 后面可以跟 iterable

#简化版代码

f = yield from done.get() # done 是 Queue

return f.result()例子:

asyncio.as_completed 返回一个生成器对象 , 因此可用于迭代每次从此生成器中返回的对象是一个个协程(生成器),哪个最先完成哪个就返回, 而要从 生成器/协程 中获取返回值,就必须使用 yield from / await , 简单来说就是:生成器的返回值在异常中, 详情参考最上面的基础链接

import asyncio

async def func(x):

# print('\t\tstart ',x)

await asyncio.sleep(5)

# print('\t\tdone ', x)

return x

async def to_do():

# 在协程内调度2个协程

tasks = [asyncio.ensure_future(func(i)) for i in range(2)]

# 使用as_completed:先完成,先返回.

# 每次迭代返回一个协程.

# 这个协程:_wait_for_one,内部从队列中产出一个最先完成的Future对象

for coroutine in asyncio.as_completed(tasks):

result = await coroutine # 等待协程,并返回先完成的协程

print('result :', result)

print('all done')

lp = asyncio.get_event_loop()

lp.set_debug(True)

lp.run_until_complete(to_do()) # 调度协程

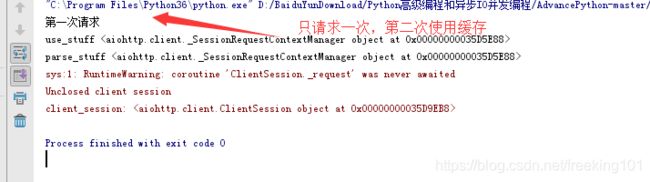

获取多个并发的 task 的结果。

( task 是 协程 和 Future 的 桥梁。 )

-

如果我们要获取 task 的结果,一定要创建一个task,就是把我们的协程绑定要 task 上,这里直接用事件循环 loop 里面的 create_task 就可以搞定。

-

我们假设有3个并发的add任务需要处理,然后调用 run_until_complete 来等待3个并发任务完成。

-

调用 task.result 查看结果,这里的 task 其实是 _asyncio.Task,是封装的一个类。大家可以在 Pycharm 中找 asyncio 里面的源码,里面有一个 tasks 文件。

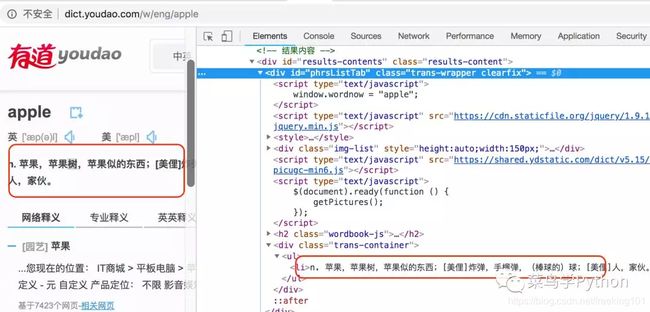

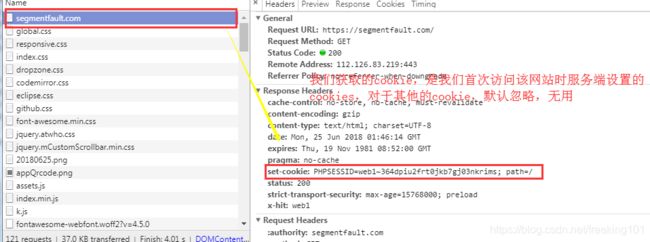

爬取有道词典

玩并发比较多的是爬虫,爬虫可以用多线程,线程池去爬。但是我们用 requests 的时候是阻塞的,无法并发。所以我们要用一个更牛逼的库 aiohttp,这个库可以当成是异步的 requests。

1). 爬取有道词典

有道翻译的API已经做好了,我们可以直接调用爬取。然后解析网页,获取单词的翻译。然后解析网页,网页比较简单,可以有很多方法解析。因为爬虫文章已经泛滥了,我这里就不展开了,很容易就可以获取单词的解释。

2). 代码的核心框架

-

设计一个异步的框架,生成一个事件循环

-

创建一个专门去爬取网页的协程,利用aiohttp去爬取网站内容

-

生成多个要翻译的单词的url地址,组建一个异步的tasks, 扔到事件循环里面

-

等待所有的页面爬取完毕,然后用pyquery去一一解析网页,获取单词的解释,部分代码如下:

import time

import asyncio

import aiohttp

from pyquery import PyQuery as pq

def decode_html(html_content=None):

url, resp_text = html_content

doc = pq(resp_text)

des = ''

for li in doc.items('#phrsListTab .trans-container ul li'):

des += li.text()

return url, des

async def fetch(session: aiohttp.ClientSession = None, url=None):

async with session.get(url=url) as resp:

resp_text = await resp.text()

return url, resp_text

async def main(word_list=None):

url_list = ['http://dict.youdao.com/w/{}'.format(word) for word in word_list]

temp_task_list = []

async with aiohttp.ClientSession() as session:

for url in url_list:

temp_task_list.append(fetch(session, url))

html_list = await asyncio.gather(*temp_task_list)

for html_content in html_list:

print(decode_html(html_content))

if __name__ == '__main__':

start_time = time.time()

text = 'apple'

word_list_1 = [ch for ch in text]

word_list_2 = [text for _ in range(100)]

loop = asyncio.get_event_loop()

task_list = [

main(word_list_1),

main(word_list_2),

]

loop.run_until_complete(asyncio.wait(task_list))

print(time.time() - start_time)谈到 http 接口调用,Requests 大家并不陌生,例如,robotframework-requests、HttpRunner 等 HTTP 接口测试库/框架都是基于它开发。

这里将介绍另一款http接口测试框架 httpx,snaic 同样也集成了 httpx 库。httpx 的 API 和 Requests 高度一致。github: https://github.com/encode/httpx

安装:pip install httpx

httpx 简单使用

import json

import httpx

r = httpx.get("http://httpbin.org/get")

print(r.status_code)

print(json.dumps(r.json(), ensure_ascii=False, indent=4))带参数的 post 调用

import json

import httpx

payload = {'key1': 'value1', 'key2': 'value2'}

r = httpx.post("http://httpbin.org/post", data=payload)

print(r.status_code)

print(json.dumps(r.json(), ensure_ascii=False, indent=4))httpx 异步调用。接下来认识 httpx 的异步调用:

import json

import httpx

import asyncio

async def main():

async with httpx.AsyncClient() as client:

resp = await client.get('http://httpbin.org/get')

result = resp.json()

print(json.dumps(result, ensure_ascii=False, indent=4))

asyncio.run(main())httpx 异步调用

import httpx

import asyncio

import time

async def request(client):

global index

resp = await client.get('http://httpbin.org/get')

index += 1

result = resp.json()

print(f'{index} status_code : {resp.status_code}')

assert 200 == resp.status_code

async def main():

async with httpx.AsyncClient() as client:

# 50 次调用

task_list = []

for _ in range(50):

req = request(client)

task = asyncio.create_task(req)

task_list.append(task)

await asyncio.gather(*task_list)

if __name__ == "__main__":

index = 0

# 开始

start = time.time()

asyncio.run(main())

# 结束

end = time.time()

print(f'异步:发送 50 次请求,耗时:{end - start}')

想搞懂 异步框架 和异步接口的调用,可以按这个路线学习:1.python异步编程、2.python Web异步框架(tornado/sanic)、3.异步接口调用(aiohttp/httpx)

1. asyncio

示例 1:( Python 3.5+ 之前的写法 )

import asyncio

@asyncio.coroutine

def func1():

print('before...func1......')

yield from asyncio.sleep(5)

print('end...func1......')

tasks = [func1(), func1()]

loop = asyncio.get_event_loop()

loop.run_until_complete(asyncio.gather(*tasks))

loop.close()

改进,使用 async / await 关键字 ( Python 3.5+ 开始引入了新的语法 async 和 await )

import asyncio

async def func1():

print('before...func1......')

await asyncio.sleep(5)

print('end...func1......')

tasks = [func1(), func1()]

loop = asyncio.get_event_loop()

loop.run_until_complete(asyncio.gather(*tasks))

loop.close()示例 2 :

import asyncio

async def fetch_async(host, url='/'):

print(host, url)

reader, writer = await asyncio.open_connection(host, 80)

request_header_content = """GET %s HTTP/1.0\r\nHost: %s\r\n\r\n""" % (url, host,)

request_header_content = bytes(request_header_content, encoding='utf-8')

writer.write(request_header_content)

await writer.drain()

text = await reader.read()

print(host, url, text)

writer.close()

tasks = [

fetch_async('www.cnblogs.com', '/wupeiqi/'),

fetch_async('dig.chouti.com', '/pic/show?nid=4073644713430508&lid=10273091')

]

loop = asyncio.get_event_loop()

results = loop.run_until_complete(asyncio.gather(*tasks))

loop.close()示例 3:

#!/usr/bin/env python

# encoding:utf-8

import asyncio

import aiohttp

import time

async def download(url): # 通过async def定义的函数是原生的协程对象

print("get: %s" % url)

async with aiohttp.ClientSession() as session:

async with session.get(url) as resp:

print(resp.status)

# response = await resp.read()

async def main():

start = time.time()

await asyncio.wait([

download("http://www.163.com"),

download("http://www.mi.com"),

download("http://www.baidu.com")])

end = time.time()

print("Complete in {} seconds".format(end - start))

loop = asyncio.get_event_loop()

loop.run_until_complete(main())

Python 异步编程之 asyncio(百万并发)

前言:python 由于 GIL(全局锁)的存在,不能发挥多核的优势,其性能一直饱受诟病。然而在 IO 密集型的网络编程里,异步处理比同步处理能提升成百上千倍的效率,弥补了 python 性能方面的短板,如最新的微服务框架 japronto,resquests per second 可达百万级。

python 还有一个优势是库(第三方库)极为丰富,运用十分方便。asyncio 是 python3.4 版本引入到标准库,python2x 没有加这个库,毕竟 python3x 才是未来啊,哈哈!python3.5 又加入了 async/await 特性。在学习 asyncio 之前,我们先来理清楚 同步/异步的概念:

- 同步 是指完成事务的逻辑,先执行第一个事务,如果阻塞了,会一直等待,直到这个事务完成,再执行第二个事务,顺序执行。。。

- 异步 是和同步相对的,异步是指在处理调用这个事务的之后,不会等待这个事务的处理结果,直接处理第二个事务去了,通过状态、通知、回调来通知调用者处理结果。

aiohttp 使用

如果需要并发 http 请求怎么办呢,通常是用 requests,但 requests 是同步的库,如果想异步的话需要引入 aiohttp。这里引入一个类,from aiohttp import ClientSession,首先要建立一个 session 对象,然后用 session 对象去打开网页。session 可以进行多项操作,比如 post、get、put、head 等。

基本用法:

async with ClientSession() as session:

async with session.get(url) as response:aiohttp 异步实现的例子:

import asyncio

from aiohttp import ClientSession

tasks = []

url = "http://httpbin.org/get?args=hello_word"

async def hello(t_url):

async with ClientSession() as session:

async with session.get(t_url) as req:

response = await req.read()

# response = await req.text()

# print(response)

print(f'{req.url} : {req.status}')

if __name__ == '__main__':

loop = asyncio.get_event_loop()

loop.run_until_complete(hello(url))

首先 async def 关键字定义了这是个异步函数,await 关键字加在需要等待的操作前面,req.read() 等待 request 响应,是个耗 IO 操作。然后使用 ClientSession 类发起 http 请求。

异步请求多个URL

如果我们需要请求多个 URL 该怎么办呢?

- 同步的做法:访问多个 URL时,只需要加个 for 循环就可以了。

- 但异步的实现方式并没那么容易:在之前的基础上需要将 hello() 包装在 asyncio 的 Future 对象中,然后将 Future对象列表 作为 任务 传递给 事件循环。

import datetime

import asyncio

from aiohttp import ClientSession

task_list = []

url = "https://www.baidu.com/{}"

async def hello(t_url):

ret_val = None

async with ClientSession() as session:

async with session.get(t_url) as req:

response = await req.read()

print(f'Hello World:{datetime.datetime.now().replace(microsecond=0)}')

# print(response)

ret_val = req.status

return ret_val

def run():

for i in range(5):

one_task = asyncio.ensure_future(hello(url.format(i)))

task_list.append(one_task)

if __name__ == '__main__':

loop = asyncio.get_event_loop()

run()

result = loop.run_until_complete(asyncio.wait(task_list))

# 方法 1 : 获取结果

for task in task_list:

print(task.result())

# 方法 2 : 获取结果

finish_task, pending_task = result

print(f'finish_task count:{len(pending_task)}')

for task in finish_task:

print(task.result())

print(f'pending_task count:{len(pending_task)}')

for task in pending_task:

print(task.result())

'''

Hello World:2020-12-06 16:29:02

Hello World:2020-12-06 16:29:02

Hello World:2020-12-06 16:29:02

Hello World:2020-12-06 16:29:02

Hello World:2020-12-06 16:29:02

404

404

404

404

404

finish_task count:0

404

404

404

404

404

pending_task count:0

'''收集 http 响应

上面介绍了访问不同 URL 的异步实现方式,但是我们只是发出了请求,如果要把响应一一收集到一个列表中,最后保存到本地或者打印出来要怎么实现呢?

可通过 asyncio.gather(*tasks) 将响应全部收集起来,具体通过下面实例来演示。

import time

import asyncio

from aiohttp import ClientSession

task_list = []

temp_url = "https://www.baidu.com/{}"

async def hello(url=None):

async with ClientSession() as session:

async with session.get(url) as request:

# print(request)

print('Hello World:%s' % time.time())

return await request.read()

def run():

for i in range(5):

task = asyncio.ensure_future(hello(temp_url.format(i)))

task_list.append(task)

result = loop.run_until_complete(asyncio.gather(*task_list))

print(f'len(result) : {len(result)}')

for item in result:

print(item)

if __name__ == '__main__':

loop = asyncio.get_event_loop()

run()

限制并发数(最大文件描述符的限制)

提示:此方法也可用来作为异步爬虫的限速方法(反反爬)

假如你的并发达到 2000 个,程序会报错:ValueError: too many file descriptors in select()。报错的原因字面上看是 Python 调取的 select 对打开的文件有最大数量的限制,这个其实是操作系统的限制,linux 打开文件的最大数默认是 1024,windows 默认是 509,超过了这个值,程序就开始报错。这里我们有三种方法解决这个问题:

- 1. 限制并发数量。(一次不要塞那么多任务,或者限制最大并发数量)

- 2. 使用回调的方式。

- 3. 修改操作系统打开文件数的最大限制,在系统里有个配置文件可以修改默认值,具体步骤不再说明了。

不修改系统默认配置的话,个人推荐限制并发数的方法,设置并发数为 500,处理速度更快。

使用 semaphore = asyncio.Semaphore(500) 以及在协程中使用 async with semaphore: 操作具体代码如下:

# coding:utf-8

import time, asyncio, aiohttp

url = 'https://www.baidu.com/'

index = 0

async def hello(url, semaphore):

global index

async with semaphore:

async with aiohttp.ClientSession() as session:

async with session.get(url) as response:

print(f'{index} : ', end='')

await asyncio.sleep(2)

print(response.status)

return await response.read()

async def run():

# 为了看效果,这是设置 100 个任务,并发限制为 5

semaphore = asyncio.Semaphore(5) # 限制并发量为500

to_get = [hello(url.format(), semaphore) for _ in range(100)] # 总共1000任务

await asyncio.wait(to_get)

if __name__ == '__main__':

# now=lambda :time.time()

loop = asyncio.get_event_loop()

loop.run_until_complete(run())

# loop.close()

示例代码:

import asyncio

import aiohttp

async def get_http(url):

async with semaphore:

async with aiohttp.ClientSession() as session:

async with session.get(url) as res:

global count

count += 1

print(count, res.status)

if __name__ == '__main__':

count = 0

semaphore = asyncio.Semaphore(500)

loop = asyncio.get_event_loop()

temp_url = 'https://www.baidu.com/s?wd={0}'

tasks = [get_http(temp_url.format(i)) for i in range(600)]

loop.run_until_complete(asyncio.wait(tasks))

loop.close()示例代码:

from aiohttp import ClientSession

import asyncio

# 限制协程并发量

async def hello(sem, num):

async with sem:

async with ClientSession() as session:

async with session.get(f'http://httpbin.org/get?a={num}') as response:

r = await response.read()

print(f'[{num}]:{r}')

await asyncio.sleep(1)

def main():

loop = asyncio.get_event_loop()

tasks = []

sem = asyncio.Semaphore(5) # this

for index in range(100000):

task = asyncio.ensure_future(hello(sem, index))

tasks.append(task)

feature = asyncio.ensure_future(asyncio.gather(*tasks))

try:

loop.run_until_complete(feature)

finally:

loop.close()

if __name__ == "__main__":

main()aiohttp 实现高并发爬虫 ( 异步 mysql )

python asyncio并发编程:https://www.cnblogs.com/crazymagic/articles/10066619.html

# asyncio爬虫, 去重, 入库

import asyncio

import re

import aiohttp

import aiomysql

from pyquery import PyQuery

stopping = False

start_url = 'http://www.jobbole.com'

waiting_urls = []

seen_urls = set() # 实际使用爬虫去重时,数量过多,需要使用布隆过滤器

async def fetch(url, session):

async with aiohttp.ClientSession() as session:

try:

async with session.get(url) as resp:

print('url status: {}'.format(resp.status))

if resp.status in [200, 201]:

data = await resp.text()

return data

except Exception as e:

print(e)

def extract_urls(html): # html中提取所有url

urls = []

pq = PyQuery(html)

for link in pq.items('a'):

url = link.attr('href')

if url and url.startwith('http') and url not in seen_urls:

urls.append(url)

waiting_urls.append(urls)

return urls

async def init_urls(url, session):

html = await fetch(url, session)

seen_urls.add(url)

extract_urls(html)

async def article_handler(url, session, pool): # 获取文章详情并解析入库

html = await fetch(url, session)

extract_urls(html)

pq = PyQuery(html)

title = pq('title').text() # 为了简单, 只获取title的内容

async with pool.acquire() as conn:

async with conn.cursor() as cur:

await cur.execute('SELECT 42;')

insert_sql = "insert into article_test(title) values('{}')".format(

title)

await cur.execute(insert_sql) # 插入数据库

# print(cur.description)

# (r,) = await cur.fetchone()

# assert r == 42

async def consumer(pool):

async with aiohttp.ClientSession() as session:

while not stopping:

if len(waiting_urls) == 0: # 如果使用asyncio.Queue的话, 不需要我们来处理这些逻辑。

await asyncio.sleep(0.5)

continue

url = waiting_urls.pop()

print('start get url:{}'.format(url))

if re.match(r'http://.*?jobbole.com/\d+/', url):

if url not in seen_urls: # 是没有处理过的url,则处理

asyncio.ensure_future(article_handler(url, sssion, pool))

else:

if url not in seen_urls:

asyncio.ensure_future(init_urls(url))

async def main(loop):

# 等待mysql连接建立好

pool = await aiomysql.create_pool(

host='127.0.0.1', port=3306, user='root', password='',

db='aiomysql_test', loop=loop, charset='utf8', autocommit=True

)

# charset autocommit必须设置, 这是坑, 不写数据库写入不了中文数据

async with aiohttp.ClientSession() as session:

html = await fetch(start_url, session)

seen_urls.add(start_url)

extract_urls(html)

asyncio.ensure_future(consumer(pool))

if __name__ == '__main__':

event_loop = asyncio.get_event_loop()

asyncio.ensure_future(main(event_loop))

event_loop.run_forever()

学习 python 高并发模块 asynio

参考:Python黑魔法 --- 异步IO( asyncio) 协程:https://www.jianshu.com/p/b5e347b3a17c

Python 中重要的模块 --- asyncio:https://www.cnblogs.com/zhaof/p/8490045.html

Python 协程深入理解:https://www.cnblogs.com/zhaof/p/7631851.html

asyncio 是 python 用于解决异步io编程的一整套解决方案

创建一个 asyncio 的步骤如下

- 创建一个 event_loop 事件循环,当启动时,程序开启一个无限循环,把一些函数注册到事件循环上,当满足事件发生的时候,调用相应的协程函数。

- 创建协程: 使用 async 关键字定义的函数就是一个协程对象。在协程函数内部可以使用 await 关键字用于阻塞操作的挂起。

- 将协程注册到事件循环中。协程的调用不会立即执行函数,而是会返回一个协程对象。协程对象需要注册到事件循环,由事件循环调用。

一、定义一个协程

import time

import asyncio

async def do_some_work(x):

print("waiting:", x)

start = time.time()

# 这里是一个协程对象,这个时候do_some_work函数并没有执行

coroutine = do_some_work(2)

print(coroutine)

# 创建一个事件loop

loop = asyncio.get_event_loop()

# 将协程注册到事件循环,并启动事件循环

loop.run_until_complete(coroutine)

print("Time:", time.time() - start)

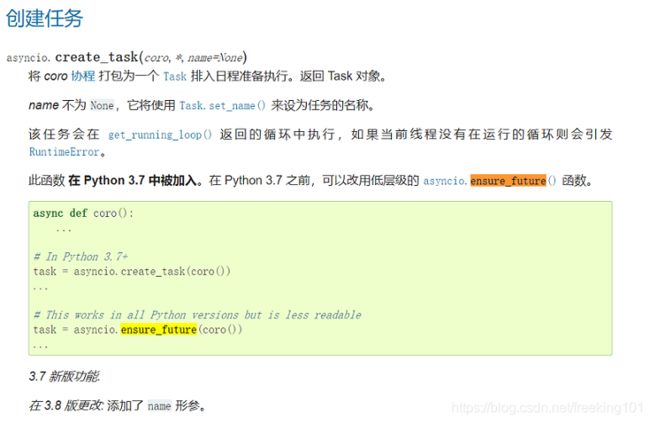

二、创建一个 task

一个协程对象 就是 一个原生可以挂起的函数,任务则是对协程进一步封装,其中包含了任务的各种状态。

在上面的代码中,在注册事件循环的时候,其实是 run_until_complete 方法将协程包装成为了一个任务(task)对象。 task 对象是 Future类的子类,保存了协程运行后的状态,用于未来获取协程的结果。

import asyncio

import time

start = lambda: time.time()

async def do_some_work(x):

print("waiting:", x)

start = start()

coroutine = do_some_work(2)

loop = asyncio.get_event_loop()

task = loop.create_task(coroutine)

print(task)

loop.run_until_complete(task)

print(task)

print("Time:", time.time() - start)

loop.create_task,asyncio.async / asyncio.ensure_future 和 Task 有什么区别?

BaseEventLoop.create_task(coro) 、asyncio.async(coro)、Task(coro)安排协同程序执行,这似乎也可以正常工作。那么,所有这些之间有什么区别?

- 在 Python> = 3.5中,已将 async 设为关键字,所以 asyncio.async 必须替换为 asyncio.ensure_future

- create_task 的存在理由:

第三方事件循环可以使用其自己的Task子类来实现互操作性。在这种情况下,结果类型是Task的子类。

这也意味着您不应直接创建 Task ,因为不同的事件循环可能具有不同的创建"任务"的方式。 - 另一个重要区别是,除了接受协程外, ensure_future 也接受任何等待的对象;而 create_task 只接受协程。

那么用 ensure_future 还是 create_task 函数声明对比:

- asyncio.ensure_future(coro_or_future, *, loop=None)

- BaseEventLoop.create_task(coro)

显然,ensure_future 除了接受 coroutine 作为参数,还接受 future 作为参数。

看 ensure_future 的代码,会发现 ensure_future 内部在某些条件下会调用 create_task,综上所述:

- encure_future: 最高层的函数,推荐使用!

- create_task: 在确定参数是 coroutine 的情况下可以使用。

- Task: 可能很多时候也可以工作,但真的没有使用的理由!

为了 interoperability,第三方的事件循环可以使用自己的 Task 子类。这种情况下,返回结果的类型是 Task 的子类。

所以,不要直接创建 Task 实例,应该使用 ensure_future() 函数或 BaseEventLoop.create_task() 方法。

asyncio.ensure_future 与 BaseEventLoop.create_task 对比简单的协同程序

From:asyncio.ensure_future与BaseEventLoop.create_task对比简单的协同程序?-python黑洞网

看过几个关于asyncio的基本Python 3.5教程,它们以各种方式执行相同的操作。在这段代码中:

import asyncio

async def doit(i):

print("Start %d" % i)

await asyncio.sleep(3)

print("End %d" % i)

return i

if __name__ == '__main__':

loop = asyncio.get_event_loop()

# futures = [asyncio.ensure_future(doit(i), loop=loop) for i in range(10)]

# futures = [loop.create_task(doit(i)) for i in range(10)]

futures = [doit(i) for i in range(10)]

result = loop.run_until_complete(asyncio.gather(*futures))

print(result)

上面定义

futures变量的所有三个变体都实现了相同的结果,那么他们有什么区别?有些情况下我不能只使用最简单的变体(协程的简单列表)吗?asyncio.create_task(coro) 和 asyncio.ensure_future(obj)

从 Python 3.7 开始,为此目的添加了

asyncio.create_task(coro)高级功能,可以使用它来代替从 coroutimes 创建任务的其他方法。但是,如果需要从任意等待创建任务,应该使用

asyncio.ensure_future(obj)。推荐:使用 asyncio.ensure_future(obj) 来代替 asyncio.create_task(coro)

ensure_future VS create_task

ensure_future是创建一个方法Task从coroutine。它基于参数以不同的方式创建任务(包括使用create_task协同程序和类似未来的对象)。

create_task是一种抽象的方法AbstractEventLoop。不同的事件循环可以不同的方式实现此功能。

您应该使用ensure_future创建任务。create_task只有在你要实现自己的事件循环类型时才需要。

“当从协程创建任务时,你应该使用适当命名的loop.create_task()”

在任务中包装协程 - 是一种在后台启动此协程的方法。这是一个例子:

import asyncio

async def msg(text):

await asyncio.sleep(0.1)

print(text)

async def long_operation():

print('long_operation started')

await asyncio.sleep(3)

print('long_operation finished')

async def main():

await msg('first')

# Now you want to start long_operation, but you don't want to wait it finised:

# long_operation should be started, but second msg should be printed immediately.

# Create task to do so:

task = asyncio.ensure_future(long_operation())

await msg('second')

# Now, when you want, you can await task finised:

await task

if __name__ == "__main__":

loop = asyncio.get_event_loop()

loop.run_until_complete(main())

'''

输出:

first

long_operation started

second

long_operation finished

'''创建任务:

- 可以通过 loop.create_task(coroutine) 创建 task,

- 也可以通过 asyncio.ensure_future(coroutine) 创建 task。

使用这两种方式的区别在 官网( 协程与任务 — Python 3.10.4 文档 )上有提及。

task / future 以及使用 async 创建的都是 awaitable 对象,都可以在 await 关键字之后使用。

future 对象意味着在未来返回结果,可以搭配回调函数使用。

要真正运行一个协程,asyncio 提供了三种主要机制

( https://docs.python.org/zh-cn/3/library/asyncio-task.html#asyncio.ensure_future )

- 1.

asyncio.run()函数用来运行最高层级的入口点 "main()" 函数。

import asyncio

async def main():

print('hello')

await asyncio.sleep(1)

print('world')

asyncio.run(main())

- 2. 使用 await ( 即 等待一个协程 )

import asyncio

import time

async def say_after(delay, what):

await asyncio.sleep(delay)

print(what)

async def main():

print(f"started at {time.strftime('%X')}")

await say_after(3, 'hello')

await say_after(1, 'world')

print(f"finished at {time.strftime('%X')}")

asyncio.run(main())

预期的输出:

started at 17:13:52

world

hello

finished at 17:13:55- 3. 使用 asyncio.create_task() 函数用来并发运行作为 asyncio 任务 的多个协程。

import asyncio

import time

async def say_after(delay, what):

await asyncio.sleep(delay)

print(what)

async def main():

task1 = asyncio.create_task(

say_after(3, 'hello'))

task2 = asyncio.create_task(

say_after(1, 'world'))

print(f"started at {time.strftime('%X')}")

# Wait until both tasks are completed (should take

# around 2 seconds.)

await task1

await task2

asyncio.run(main())预期的输出:

started at 17:14:32

world

hello

finished at 17:14:34获取协程的返回值

- 1 创建一个任务 task

- 2 通过调用 task.result 获取协程的返回值

import asyncio

import time

async def get_html(url):

print("start get url")

await asyncio.sleep(2)

return "this is test"

if __name__ == "__main__":

start_time = time.time()

loop = asyncio.get_event_loop()

task = loop.create_task(get_html("http://httpbin.org"))

loop.run_until_complete(task)

print(task.result())三、绑定回调

执行成功进行回调处理

可以通过 add_done_callback( 任务) 添加回调,因为这个函数只接受一个回调的函数名,不能传参,我们想要传参可以使用偏函数

# 获取协程的返回值

import asyncio

import time

from functools import partial

async def get_html(url):

print("start get url")

await asyncio.sleep(2)

return "this is test"

def callback(url, future):

print(url)

print("send email to bobby")

if __name__ == "__main__":

start_time = time.time()

loop = asyncio.get_event_loop()

task = loop.create_task(get_html("http://www.imooc.com"))

task.add_done_callback(partial(callback, "http://www.imooc.com"))

loop.run_until_complete(task)

print(task.result())asnycio 异步请求+异步回调:asnycio 异步请求+异步回调_xiaobai8823的博客-CSDN博客

当使用 ensure_feature 创建任务的时候,可以使用任务的 task.add_done_callback(callback)方法,获得对象的协程返回值。

import asyncio

import time

async def do_some_work(x):

print("waiting:", x)

return "Done after {}s".format(x)

def callback(future):

print("callback:", future.result())

start = time.time()

coroutine = do_some_work(2)

loop = asyncio.get_event_loop()

task = asyncio.ensure_future(coroutine)

print(task)

task.add_done_callback(callback)

print(task)

loop.run_until_complete(task)四、阻塞 ( 使用 await 让出控制权,挂起当前操作 )

前面提到 asynic 函数内部可以使用 await 来针对耗时的操作进行挂起。

import asyncio

import time

async def do_some_work(x):

print("waiting:", x)

# await 后面就是调用耗时的操作

await asyncio.sleep(x)

return "Done after {}s".format(x)

coroutine = do_some_work(2)

loop = asyncio.get_event_loop()

task = asyncio.ensure_future(coroutine)

loop.run_until_complete(task)

五、并发 和 并行

- 并发:同一时刻 同时 发生

- 并行:同一时间 间隔 发生

并发通常是指有多个任务需要同时进行,并行则是同一个时刻有多个任务执行.

当有多个任务需要并行时,可以将任务先放置在任务队列中,然后将任务队列传给 asynicio.wait 方法,这个方法会同时并行运行队列中的任务。将其注册到事件循环中。

import asyncio

async def do_some_work(x):

print("Waiting:", x)

await asyncio.sleep(x)

return "Done after {}s".format(x)

coroutine1 = do_some_work(1)

coroutine2 = do_some_work(2)

coroutine3 = do_some_work(4)

tasks = [

asyncio.ensure_future(coroutine1),

asyncio.ensure_future(coroutine2),

asyncio.ensure_future(coroutine3)

]

loop = asyncio.get_event_loop()

loop.run_until_complete(asyncio.wait(tasks))

loop.close()示例:

import asyncio

async def do_some_work(x):

print("Waiting:", x)

await asyncio.sleep(x)

return "Done after {}s".format(x)

async def main():

coroutine1 = do_some_work(1)

coroutine2 = do_some_work(2)

coroutine3 = do_some_work(4)

task_list = [

asyncio.ensure_future(coroutine1),

asyncio.ensure_future(coroutine2),

asyncio.ensure_future(coroutine3)

]

await asyncio.wait(task_list)

if __name__ == '__main__':

asyncio.run(main())

pass

六、 asyncio.wait 、asyncio.gather

使用 async 可以定义协程,协程用于耗时的 io 操作,我们也可以封装更多的 io 操作过程,这样就实现了嵌套的协程,即一个协程中 await 了另外一个协程,如此连接起来。

import asyncio

async def do_some_work(x):

print("waiting:", x)

await asyncio.sleep(x)

return "Done after {}s".format(x)

async def main():

coroutine1 = do_some_work(1)

coroutine2 = do_some_work(2)

coroutine3 = do_some_work(4)

tasks = [

asyncio.ensure_future(coroutine1),

asyncio.ensure_future(coroutine2),

asyncio.ensure_future(coroutine3)

]

dones, pendings = await asyncio.wait(tasks)

for task in dones:

print("Task ret:", task.result())

# results = await asyncio.gather(*tasks)

# for result in results:

# print("Task ret:",result)

loop = asyncio.get_event_loop()

loop.run_until_complete(main())

使用 asyncio.wait 的结果如下,可见返回的结果 dones 并不一定按照顺序输出

waiting: 1

waiting: 2

waiting: 4

Task ret: Done after 2s

Task ret: Done after 4s

Task ret: Done after 1s

Time: 4.006587505340576

使用 await asyncio.gather(*tasks) 得到的结果如下,是按照列表顺序进行返回的

waiting: 1

waiting: 2

waiting: 4

Task ret: Done after 1s

Task ret: Done after 2s

Task ret: Done after 4s

Time: 4.004234313964844

上面的程序将 main 也定义为协程。我们也可以不在 main 协程函数里处理结果,直接返回 await 的内容,那么最外层的 run_until_complete 将会返回main协程的结果。

import asyncio

import time

now = lambda: time.time()

async def do_some_work(x):

print("waiting:", x)

await asyncio.sleep(x)

return "Done after {}s".format(x)

async def main():

coroutine1 = do_some_work(1)

coroutine2 = do_some_work(2)

coroutine3 = do_some_work(4)

tasks = [

asyncio.ensure_future(coroutine1),

asyncio.ensure_future(coroutine2),

asyncio.ensure_future(coroutine3)

]

return await asyncio.gather(*tasks)

# return await asyncio.wait(tasks)也可以使用。注意gather方法需要*这个标记

start = now()

loop = asyncio.get_event_loop()

results = loop.run_until_complete(main())

for result in results:

print("Task ret:", result)

print("Time:", now() - start)

也可以使用 as_complete 方法实现嵌套协程

import asyncio

import time

now = lambda: time.time()

async def do_some_work(x):

print("waiting:", x)

await asyncio.sleep(x)

return "Done after {}s".format(x)

async def main():

coroutine1 = do_some_work(1)

coroutine2 = do_some_work(2)

coroutine3 = do_some_work(4)

tasks = [

asyncio.ensure_future(coroutine1),

asyncio.ensure_future(coroutine2),

asyncio.ensure_future(coroutine3)

]

for task in asyncio.as_completed(tasks):

result = await task

print("Task ret: {}".format(result))

start = now()

loop = asyncio.get_event_loop()

loop.run_until_complete(main())

print("Time:", now() - start)

七、协程停止

创建 future 的时候,task 为 pending,事件循环调用执行的时候当然就是 running,调用完毕自然就是 done,如果需要停止事件循环,就需要先把 task 取消。可以使用 asyncio.Task 获取事件循环的 task。

future 对象有如下几个状态:Pending、Running、Done、Cacelled

import asyncio

import time

now = lambda: time.time()

async def do_some_work(x):

print("Waiting:", x)

await asyncio.sleep(x)

return "Done after {}s".format(x)

coroutine1 = do_some_work(1)

coroutine2 = do_some_work(2)

coroutine3 = do_some_work(2)

tasks = [

asyncio.ensure_future(coroutine1),

asyncio.ensure_future(coroutine2),

asyncio.ensure_future(coroutine3),

]

start = now()

loop = asyncio.get_event_loop()

try:

loop.run_until_complete(asyncio.wait(tasks))

except KeyboardInterrupt as e:

print(asyncio.Task.all_tasks())

for task in asyncio.Task.all_tasks():

print(task.cancel())

loop.stop()

loop.run_forever()

finally:

loop.close()

print("Time:", now() - start)

启动事件循环之后,马上 ctrl+c,会触发 run_until_complete 的执行异常 KeyBorardInterrupt。然后通过循环 asyncio.Task 取消 future。

可以看到输出如下:True 表示 cannel 成功,loop stop 之后还需要再次开启事件循环,最后在 close,不然还会抛出异常.

循环 task,逐个 cancel 是一种方案,可是正如上面我们把 task 的列表封装在 main 函数中,main 函数外进行事件循环的调用。这个时候,main 相当于最外出的一个task,那么处理包装的main 函数即可。

task取消和子协程调用原理

程序运行时 通过 ctl +c 取消任务 调用task.cancel()取消任务

import asyncio

import time

async def get_html(sleep_times):

print("waiting")

await asyncio.sleep(sleep_times)

print("done after {}s".format(sleep_times))

if __name__ == "__main__":

task1 = get_html(2)

task2 = get_html(3)

task3 = get_html(3)

tasks = [task1, task2, task3]

loop = asyncio.get_event_loop()

try:

loop.run_until_complete(asyncio.wait(tasks))

except KeyboardInterrupt as e:

all_tasks = asyncio.Task.all_tasks()

for task in all_tasks:

print("cancel task")

print(task.cancel())

loop.stop()

loop.run_forever()

finally:

loop.close()在终端执行:python ceshi.py ,运行成功后 按 ctl +c 取消任务

不同线程的事件循环( 线程、线程池 )

很多时候,我们的事件循环用于注册协程,而有的协程需要动态的添加到事件循环中。一个简单的方式就是使用多线程。当前线程创建一个事件循环,然后在新建一个线程,在新线程中启动事件循环。当前线程不会被 block。

import asyncio

from threading import Thread

import time

now = lambda: time.time()

def start_loop(loop):

asyncio.set_event_loop(loop)

loop.run_forever()

def more_work(x):

print('More work {}'.format(x))

time.sleep(x)

print('Finished more work {}'.format(x))

start = now()

new_loop = asyncio.new_event_loop()

t = Thread(target=start_loop, args=(new_loop,))

t.start()

print('TIME: {}'.format(time.time() - start))

new_loop.call_soon_threadsafe(more_work, 6)

new_loop.call_soon_threadsafe(more_work, 3)

启动上述代码之后,当前线程不会被 block,新线程中会按照顺序执行 call_soon_threadsafe 方法注册的 more_work 方法, 后者因为 time.sleep 操作是同步阻塞的,因此运行完毕more_work 需要大致 6 + 3

使用 线程池

# -*- coding:utf-8 -*-

import asyncio

import time

from concurrent.futures import ThreadPoolExecutor

thread_pool = ThreadPoolExecutor(5)

tasks = []

func_now = lambda: time.time()

def start_loop(loop):

asyncio.set_event_loop(loop)

loop.run_forever()

def more_work(x):

print('More work {}'.format(x))

time.sleep(x)

print('Finished more work {}'.format(x))

start = func_now()

new_loop = asyncio.new_event_loop()

thread_pool.submit(start_loop, new_loop)

print('TIME: {}'.format(time.time() - start))

new_loop.call_soon_threadsafe(more_work, 6)

new_loop.call_soon_threadsafe(more_work, 3)

主线程创建事件,子线程事件循环

import asyncio

import time

from threading import Thread

now = lambda: time.time()

def start_loop(loop):

asyncio.set_event_loop(loop)

loop.run_forever()

async def do_some_work(x):

print('Waiting {}'.format(x))

await asyncio.sleep(x)

print('Done after {}s'.format(x))

def more_work(x):

print('More work {}'.format(x))

time.sleep(x)

print('Finished more work {}'.format(x))

start = now()

new_loop = asyncio.new_event_loop()

t = Thread(target=start_loop, args=(new_loop,))

t.start()

print('TIME: {}'.format(time.time() - start))

asyncio.run_coroutine_threadsafe(do_some_work(6), new_loop)

asyncio.run_coroutine_threadsafe(do_some_work(4), new_loop)

上述的例子,主线程中创建一个 new_loop,然后在另外的子线程中开启一个无限事件循环。 主线程通过run_coroutine_threadsafe新注册协程对象。这样就能在子线程中进行事件循环的并发操作,同时主线程又不会被 block。一共执行的时间大概在 6s 左右。

master - worker 主从模式

对于并发任务,通常是用生成消费模型,对队列的处理可以使用类似 master-worker 的方式,master 主要用户获取队列的 msg,worker 用户处理消息。

为了简单起见,并且协程更适合单线程的方式,我们的主线程用来监听队列,子线程用于处理队列。这里使用 redis 的队列。主线程中有一个是无限循环,用户消费队列。

import time

import asyncio

from threading import Thread

import redis

def get_redis(): # 返回一个 redis 连接对象

connection_pool = redis.ConnectionPool(host='127.0.0.1', db=3)

return redis.Redis(connection_pool=connection_pool)

def start_loop(loop): # 开启事件循环

asyncio.set_event_loop(loop)

loop.run_forever()

async def worker(task):

print('Start worker')

while True:

# start = now()

# task = rcon.rpop("queue") # 从 redis 中 取出的数据

# if not task:

# await asyncio.sleep(1)

# continue

print('Wait ', int(task)) # 取出了相应的任务

await asyncio.sleep(int(task))

print('Done ', task, now() - start)

now = lambda: time.time()

rcon = get_redis()

start = now()

# 创建一个事件循环

new_loop = asyncio.new_event_loop()

# 创建一个线程 在新的线程中开启事件循环

t = Thread(target=start_loop, args=(new_loop,))

t.setDaemon(True) # 设置线程为守护模式

t.start() # 开启线程

try:

while True:

task = rcon.rpop("queue") # 不断从队列中获取任务

if not task:

time.sleep(1)

continue

# 包装为 task ins, 传入子线程中的事件循环

asyncio.run_coroutine_threadsafe(worker(task), new_loop)

except Exception as e:

print('error', e)

new_loop.stop() # 出现异常 关闭时间循环

finally:

pass

给队列添加一些数据:

127.0.0.1:6379[3]> lpush queue 2

(integer) 1

127.0.0.1:6379[3]> lpush queue 5

(integer) 1

127.0.0.1:6379[3]> lpush queue 1

(integer) 1

127.0.0.1:6379[3]> lpush queue 1

可以看见输出:

Waiting 2

Done 2

Waiting 5

Waiting 1

Done 1

Waiting 1

Done 1

Done 5

我们发起了一个耗时5s的操作,然后又发起了连个1s的操作,可以看见子线程并发的执行了这几个任务,其中5s awati的时候,相继执行了1s的两个任务。

改进:

import time

import redis

import asyncio

from threading import Thread

redis_queue_name = 'redis_list:test'

def get_redis(): # 返回一个 redis 连接对象

connection_pool = redis.ConnectionPool(host='127.0.0.1', db=3)

return redis.StrictRedis(connection_pool=connection_pool)

def add_data_to_redis_list(*args):

redis_conn = get_redis()

redis_conn.lpush(redis_queue_name, *args)

def start_loop(loop=None): # 开启事件循环

asyncio.set_event_loop(loop)

loop.run_forever()

async def worker(task=None):

print('Start worker')

while True:

# task = redis_conn.rpop("queue") # 从 redis 中 取出的数据

# if not task:

# await asyncio.sleep(1)

# continue

print('Wait ', int(task)) # 取出了相应的任务

# 这里只是简单的睡眠传入的秒数

await asyncio.sleep(int(task))

def main():

redis_conn = get_redis()

# 创建一个事件循环

new_loop = asyncio.new_event_loop()

# 创建一个线程 在新的线程中开启事件循环

t = Thread(target=start_loop, args=(new_loop,))

t.setDaemon(True) # 设置线程为守护模式

t.start() # 开启线程

try:

while True:

task = redis_conn.rpop(name=redis_queue_name) # 不断从队列中获取任务

if not task:

time.sleep(1)

continue

# 包装为 task , 传入子线程中的事件循环

asyncio.run_coroutine_threadsafe(worker(task), new_loop)

except Exception as e:

print('error', e)

new_loop.stop() # 出现异常 关闭时间循环

finally:

new_loop.close()

if __name__ == '__main__':

# data_list = [1, 2, 3, 4, 5, 6, 7, 8, 9]

# add_data_to_redis_list(*data_list)

main()

pass

redis队列模型( 生产者 --- 消费者 )

参考:https://zhuanlan.zhihu.com/p/59621713

下面代码的主线程和双向队列的主线程有些不同,只是换了一种写法而已,代码如下

生产者代码:

import redis

conn_pool = redis.ConnectionPool(host='127.0.0.1')

redis_conn = redis.Redis(connection_pool=conn_pool)

redis_conn.lpush('coro_test', '1')

redis_conn.lpush('coro_test', '2')

redis_conn.lpush('coro_test', '3')

redis_conn.lpush('coro_test', '4')消费者代码:

import asyncio

from threading import Thread

import redis

def get_redis():

conn_pool = redis.ConnectionPool(host='127.0.0.1')

return redis.Redis(connection_pool=conn_pool)

def start_thread_loop(loop):

asyncio.set_event_loop(loop)

loop.run_forever()

async def thread_example(name):

print('正在执行name:', name)

await asyncio.sleep(2)

return '返回结果:' + name

redis_conn = get_redis()

new_loop = asyncio.new_event_loop()

loop_thread = Thread(target=start_thread_loop, args=(new_loop,))

loop_thread.setDaemon(True)

loop_thread.start()

# 循环接收redis消息并动态加入协程

while True:

msg = redis_conn.rpop('coro_test')

if msg:

asyncio.run_coroutine_threadsafe(thread_example('Zarten' + bytes.decode(msg, 'utf-8')), new_loop)

改进:

import asyncio

from threading import Thread

import redis

def get_redis():

conn_pool = redis.ConnectionPool(host='127.0.0.1')

return redis.Redis(connection_pool=conn_pool)

def start_thread_loop(loop):

asyncio.set_event_loop(loop)

loop.run_forever()

async def thread_example(name):

print('正在执行name:', name)

await asyncio.sleep(2)

return '返回结果:' + name

if __name__ == '__main__':

redis_queue_name = 'redis_list:test'

redis_conn = get_redis()

for num in range(1, 10):

redis_conn.lpush(redis_queue_name, num)

new_loop = asyncio.new_event_loop()

loop_thread = Thread(target=start_thread_loop, args=(new_loop,))

loop_thread.setDaemon(True)

loop_thread.start()

# 循环接收redis消息并动态加入协程

while True:

msg = redis_conn.rpop(name=redis_queue_name)

if msg:

asyncio.run_coroutine_threadsafe(

thread_example('King_' + msg.decode('utf-8')), new_loop

)

pass

停止子线程

如果一切正常,那么上面的例子很完美。可是,需要停止程序,直接ctrl+c,会抛出KeyboardInterrupt错误,我们修改一下主循环:

try:

while True:

task = rcon.rpop("queue")

if not task:

time.sleep(1)

continue

asyncio.run_coroutine_threadsafe(do_some_work(int(task)), new_loop)

except KeyboardInterrupt as e:

print(e)

new_loop.stop()

可是实际上并不好使,虽然主线程 try 了 KeyboardInterrupt异常,但是子线程并没有退出,为了解决这个问题,可以设置子线程为守护线程,这样当主线程结束的时候,子线程也随机退出。

new_loop = asyncio.new_event_loop()

t = Thread(target=start_loop, args=(new_loop,))

t.setDaemon(True) # 设置子线程为守护线程

t.start()

try:

while True:

# print('start rpop')

task = rcon.rpop("queue")

if not task:

time.sleep(1)

continue

asyncio.run_coroutine_threadsafe(do_some_work(int(task)), new_loop)

except KeyboardInterrupt as e:

print(e)

new_loop.stop()

线程停止程序的时候,主线程退出后,子线程也随机退出才了,并且停止了子线程的协程任务。

aiohttp

在消费队列的时候,我们使用 asyncio 的 sleep 用于模拟耗时的 io 操作。以前有一个短信服务,需要在协程中请求远程的短信 api,此时需要是需要使用 aiohttp 进行异步的 http 请求。大致代码如下:

server.py

import time

from flask import Flask, request

app = Flask(__name__)

@app.route('/')

def index(x):

time.sleep(x)

return "{} It works".format(x)

@app.route('/error')

def error():

time.sleep(3)

return "error!"

if __name__ == '__main__':

app.run(debug=True) / 接口表示短信接口,/error 表示请求/失败之后的报警。

async-custoimer.py

import time

import asyncio

from threading import Thread

import redis

import aiohttp

def get_redis():

connection_pool = redis.ConnectionPool(host='127.0.0.1', db=3)

return redis.Redis(connection_pool=connection_pool)

rcon = get_redis()

def start_loop(loop):

asyncio.set_event_loop(loop)

loop.run_forever()

async def fetch(url):

async with aiohttp.ClientSession() as session:

async with session.get(url) as resp:

print(resp.status)

return await resp.text()

async def do_some_work(x):

print('Waiting ', x)

try:

ret = await fetch(url='http://127.0.0.1:5000/{}'.format(x))

print(ret)

except Exception as e:

try:

print(await fetch(url='http://127.0.0.1:5000/error'))

except Exception as e:

print(e)

else:

print('Done {}'.format(x))

new_loop = asyncio.new_event_loop()

t = Thread(target=start_loop, args=(new_loop,))

t.setDaemon(True)

t.start()

try:

while True:

task = rcon.rpop("queue")

if not task:

time.sleep(1)

continue

asyncio.run_coroutine_threadsafe(do_some_work(int(task)), new_loop)

except Exception as e:

print('error')

new_loop.stop()

finally:

pass有一个问题需要注意,我们在fetch的时候try了异常,如果没有try这个异常,即使发生了异常,子线程的事件循环也不会退出。主线程也不会退出,暂时没找到办法可以把子线程的异常raise传播到主线程。(如果谁找到了比较好的方式,希望可以带带我)。

对于 redis 的消费,还有一个 block 的方法:

try:

while True:

_, task = rcon.brpop("queue")

asyncio.run_coroutine_threadsafe(do_some_work(int(task)), new_loop)

except Exception as e:

print('error', e)

new_loop.stop()

finally:

pass使用 brpop方法,会 block 住 task,如果主线程有消息,才会消费。测试了一下,似乎 brpop 的方式更适合这种队列消费的模型。

127.0.0.1:6379[3]> lpush queue 5

(integer) 1

127.0.0.1:6379[3]> lpush queue 1

(integer) 1

127.0.0.1:6379[3]> lpush queue 1

可以看到结果

Waiting 5

Waiting 1

Waiting 1

200

1 It works

Done 1

200

1 It works

Done 1

200

5 It works

Done 5

协程消费

主线程用于监听队列,然后子线程的做事件循环的worker是一种方式。还有一种方式实现这种类似master-worker的方案。即把监听队列的无限循环逻辑一道协程中。程序初始化就创建若干个协程,实现类似并行的效果。

import time

import asyncio

import redis

now = lambda : time.time()

def get_redis():

connection_pool = redis.ConnectionPool(host='127.0.0.1', db=3)

return redis.Redis(connection_pool=connection_pool)

rcon = get_redis()

async def worker():

print('Start worker')

while True:

start = now()

task = rcon.rpop("queue")

if not task:

await asyncio.sleep(1)

continue

print('Wait ', int(task))

await asyncio.sleep(int(task))

print('Done ', task, now() - start)

def main():

asyncio.ensure_future(worker())

asyncio.ensure_future(worker())

loop = asyncio.get_event_loop()

try:

loop.run_forever()

except KeyboardInterrupt as e:

print(asyncio.gather(*asyncio.Task.all_tasks()).cancel())

loop.stop()