Compiling and Installing Doris (Complete Guide)

@羲凡: just to live a better life


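Prerequisites

Install Java 8, MySQL, and Docker

Installing Java 8 and MySQL is routine and there are plenty of examples online, so I won't go over them here.

For Docker, here are two blog posts you can use (CentOS 7 and Ubuntu).

Cluster Plan

Beginners may ask why there is only an FE-Follower and no FE-Leader. The Leader is elected from among the Followers, so if there is only one Follower, that Follower is elected Leader.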

| hostname | FE-Follower | FE-Observer | BE | mysql |
|---|---|---|---|---|
| 10.218.223.96 | √ | | √ | √ |
| 10.218.223.97 | | √ | √ | |
| 10.218.223.98 | | | √ | |

I. Compile

Do this on 10.218.223.96. I use the root user; if you don't have root, use an account with sudo privileges.

1. Pull the image

docker pull apachedoris/doris-dev:build-env-1.2

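2. Run the image. It is recommended to also mount the container's Maven .m2 directory to a host directory, so that the Maven dependencies are not downloaded again every time the container is started for a build.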

# docker run -it -v /your/local/.m2:/root/.m2 -v /your/local/incubator-doris-DORIS-x.x.x-release/:/root/incubator-doris-DORIS-x.x.x-release/ apachedoris/doris-dev:build-env

docker run -it -v /opt/modules/complie-doris/.m2:/root/.m2 -v /opt/modules/complie-doris/incubator-doris-DORIS-0.13.0-release/:/root/incubator-doris-DORIS-0.13.0-release/ apachedoris/doris-dev:build-env-1.2

3. Download the source code. After running the command above you are already inside the container.

cd incubator-doris-DORIS-0.13.0-release

git clone https://github.com/apache/incubator-doris.git

4. Build FE and BE

cd /root/incubator-doris-DORIS-0.13.0-release/incubator-doris

sh build.sh

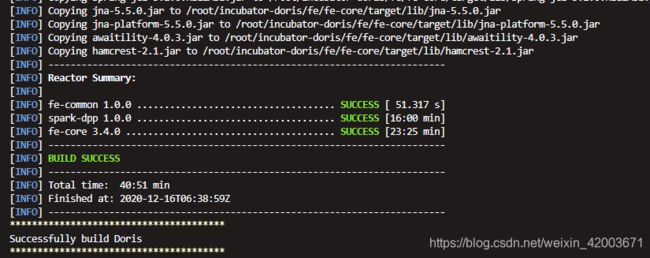

When the build finishes it looks like the screenshot; the build artifacts are in the output/ directory.

5. Build the broker

cd /root/incubator-doris-DORIS-0.13.0-release/incubator-doris/fs_brokers/apache_hdfs_broker/

sh build.sh

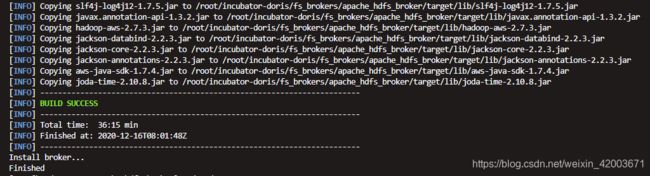

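When the build finishes it looks like the screenshot; the build artifacts are in the output/ directory.

II. Install FE-Follower

Copy the fe folder under the output directory produced by the build to the deployment path /opt/doris on 10.218.223.96.

1. Configuration file (priority_networks takes the IP in CIDR form, i.e. with the subnet prefix length appended; if you don't know your prefix length, check it with ip a)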

cd /opt/doris/fe

mkdir /opt/doris/fe/doris-meta #### this path must, must, must be created in advance

vim conf/fe.conf

############### Add the following two lines ###############

#################### begin ####################

meta_dir = /opt/doris/fe/doris-meta

priority_networks = 10.218.223.96/22

#################### end ####################
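The value of priority_networks is a CIDR block, and each node is expected to have a local address that falls inside it. A rough way to check whether the other machines are covered by the same block is sketched below. The helper names `ip_to_int` and `ip_in_cidr` are made up for this post and are not part of Doris; the sketch only handles IPv4.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  # Split on dots via positional parameters (works in plain POSIX sh).
  old_ifs=$IFS; IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 if IP $1 lies inside CIDR block $2 (e.g. 10.218.223.96/22).
ip_in_cidr() {
  ip_int=$(ip_to_int "$1")
  net_int=$(ip_to_int "${2%/*}")   # address part before the slash
  prefix=${2#*/}                   # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
  [ $(( $ip_int & $mask )) -eq $(( $net_int & $mask )) ]
}

# Check the three hosts of this cluster against the /22 used in fe.conf.
for h in 10.218.223.96 10.218.223.97 10.218.223.98; do
  if ip_in_cidr "$h" 10.218.223.96/22; then
    echo "$h is inside 10.218.223.96/22"
  else
    echo "$h is NOT inside 10.218.223.96/22"
  fi
done
```

With a /22 prefix the block spans 10.218.220.0 through 10.218.223.255, so all three hosts in the cluster plan fall inside it.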

2. Start. Logs are stored in the fe/log/ directory by default; after a successful start there is a daemon process named PaloFe.

cd /opt/doris/fe

sh bin/start_fe.sh --daemon
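To confirm that the PaloFe process really came up, a small check like the one below can be run after starting. It is only a sketch: the function names `fe_process_running` and `wait_for_fe` are invented here, and it assumes `jps` from the JDK is on the PATH.

```shell
# Succeeds if a JVM named PaloFe appears in the `jps` output.
fe_process_running() {
  jps | grep -q 'PaloFe'
}

# Poll once per second, up to $1 times (default 30), until PaloFe shows up.
wait_for_fe() {
  tries=${1:-30}
  while [ "$tries" -gt 0 ]; do
    fe_process_running && return 0
    tries=$((tries - 1))
    sleep 1
  done
  return 1
}

# Usage (commented out so that pasting the block only defines the functions):
# wait_for_fe 30 && echo "FE is up" || echo "FE did not start, check fe/log/"
```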

III. Install BE

Copy the be folder under the output directory produced by the build to the deployment path /opt/doris on all three machines.

scp -r output/be/ 10.218.223.96:/opt/doris/

scp -r output/be/ 10.218.223.97:/opt/doris/

scp -r output/be/ 10.218.223.98:/opt/doris/

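1. Configuration file (on each machine, set priority_networks to that machine's own address in CIDR form, i.e. with the subnet prefix length appended; if you don't know your prefix length, check it with ip a)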

cd /opt/doris/be

mkdir -p /opt/doris/be/storage #### this path must, must, must be created in advance

vim conf/be.conf

############### Add the following two lines ###############

#################### begin ####################

storage_root_path = /opt/doris/be/storage

priority_networks = 10.218.223.96/22

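#################### end ####################

2. Add all BE nodes in FE

host is the IP of the FE node; port is query_port in fe/conf/fe.conf; by default you log in as root with no password. Connect to the FE; if the port has not been changed, the default is 9030.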

# mysql -h host -P port -uroot

mysql -h 10.218.223.96 -P 9030 -uroot

host is the IP of the BE node; port is heartbeat_service_port in be/conf/be.conf

# ALTER SYSTEM ADD BACKEND "host:port";

ALTER SYSTEM ADD BACKEND "10.218.223.96:9050" ;

ALTER SYSTEM ADD BACKEND "10.218.223.97:9050" ;

ALTER SYSTEM ADD BACKEND "10.218.223.98:9050" ;
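With more than a handful of BE nodes, typing these statements by hand gets tedious. One possible shortcut is to generate them from a host list, as in the sketch below. `gen_add_backend_sql` is a hypothetical helper written for this post, and 9050 is simply the heartbeat port used in the statements above.

```shell
# Print one ALTER SYSTEM ADD BACKEND statement per host.
# $1 is the BE heartbeat_service_port; the remaining arguments are BE host IPs.
gen_add_backend_sql() {
  port=$1
  shift
  for host in "$@"; do
    printf 'ALTER SYSTEM ADD BACKEND "%s:%s";\n' "$host" "$port"
  done
}

# Usage (commented out because it needs a reachable FE):
# gen_add_backend_sql 9050 10.218.223.96 10.218.223.97 10.218.223.98 \
#   | mysql -h 10.218.223.96 -P 9030 -uroot
```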

3. Start (on all three machines). Logs are stored in the be/log/ directory by default.

cd /opt/doris/be

sh bin/start_be.sh --daemon

4. Check the BE status (the isAlive column should be true)

mysql -h 10.218.223.96 -P 9030 -uroot

SHOW PROC '/backends';

IV. Install FS_Broker (on all three machines)

Copy the apache_hdfs_broker folder under the output directory produced by the build to the deployment path /opt/doris on all three machines.

scp -r output/apache_hdfs_broker/ 10.218.223.96:/opt/doris/

scp -r output/apache_hdfs_broker/ 10.218.223.97:/opt/doris/

scp -r output/apache_hdfs_broker/ 10.218.223.98:/opt/doris/

1. Delete the original hdfs-site.xml, then put your own Hadoop core-site.xml and hdfs-site.xml into the /opt/doris/apache_hdfs_broker/conf directory

rm -rf conf/hdfs-site.xml

cp /etc/hadoop/conf.cloudera.hdfs/hdfs-site.xml conf/

2. Start

sh bin/start_broker.sh --daemon

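3. Add the Broker

host is the IP of the FE node; port is query_port in fe/conf/fe.conf; by default you log in as root with no password. Connect to the FE; if the port has not been changed, the default is 9030.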

# mysql -h host -P port -uroot

mysql -h 10.218.223.96 -P 9030 -uroot

host is the IP of the Broker node; port is broker_ipc_port in the Broker configuration file

# ALTER SYSTEM ADD BROKER broker_name "host1:port1","host2:port2",...;

ALTER SYSTEM ADD BROKER broker_name "10.218.223.96:8000","10.218.223.97:8000","10.218.223.98:8000";

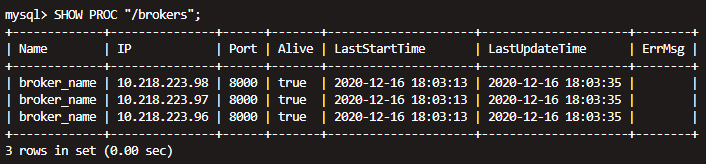

4. Check the Broker status (the isAlive column should be true)

SHOW PROC '/brokers';

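V. Install FE-Observer

Copy the fe folder under the output directory produced by the build to the deployment path /opt/doris on 10.218.223.97.

1. Configuration file (priority_networks takes the IP in CIDR form, i.e. with the subnet prefix length appended; if you don't know your prefix length, check it with ip a)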

cd /opt/doris/fe

mkdir /opt/doris/fe/doris-meta #### this path must, must, must be created in advance

vim conf/fe.conf

############### Add the following two lines ###############

#################### begin ####################

meta_dir = /opt/doris/fe/doris-meta

priority_networks = 10.218.223.97/22

#################### end ####################

2. Start

host is the IP of the Leader node; port is edit_log_port in the Leader's fe.conf. The --helper parameter is only needed the first time a follower or observer is started.

cd /opt/doris/fe

# sh bin/start_fe.sh --helper host:port --daemon

sh bin/start_fe.sh --helper 10.218.223.96:9010 --daemon
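Since --helper is only needed on the first start, a start script may want to decide automatically whether to pass it. The sketch below uses one possible heuristic, which is my own assumption and not something Doris provides: if the meta directory is still empty, treat it as a first start. `fe_start_args` is a made-up helper name.

```shell
# Print the arguments for start_fe.sh.
# $1 is the FE meta_dir, $2 is the helper address (Leader host:edit_log_port).
# Assumption: an empty meta_dir means this FE has never been started before.
fe_start_args() {
  meta_dir=$1
  helper=$2
  if [ -z "$(ls -A "$meta_dir" 2>/dev/null)" ]; then
    echo "--helper $helper --daemon"
  else
    echo "--daemon"
  fi
}

# Usage (commented out because it would actually start the FE):
# sh bin/start_fe.sh $(fe_start_args /opt/doris/fe/doris-meta 10.218.223.96:9010)
```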

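3. Add the Observer

host is the IP of the FE node; port is query_port in fe/conf/fe.conf; by default you log in as root with no password. Connect to the FE; if the port has not been changed, the default is 9030.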

# mysql -h host -P port -uroot

mysql -h 10.218.223.96 -P 9030 -uroot

host is the IP of the Follower or Observer node; port is edit_log_port in its fe.conf

# ALTER SYSTEM ADD OBSERVER "host:port";

ALTER SYSTEM ADD OBSERVER "10.218.223.97:9010";

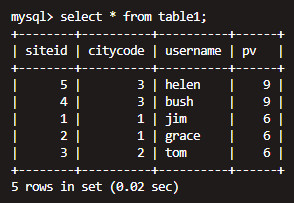

VI. Usage Test

# Test data

1,1,jim,2

2,1,grace,2

3,2,tom,2

4,3,bush,3

5,3,helen,3
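The load commands later in this section read the rows from a file called table1_data. One way to create that file is sketched below; `write_table1_data` is just a helper name chosen for this post, and the default file name is taken from the curl commands further down.

```shell
# Write the five sample rows to a file (default name: table1_data).
# Each row is siteid,citycode,username,pv separated by commas.
write_table1_data() {
  out=${1:-table1_data}
  cat > "$out" <<'EOF'
1,1,jim,2
2,1,grace,2
3,2,tom,2
4,3,bush,3
5,3,helen,3
EOF
}

# Usage (commented out so that pasting the block only defines the function):
# write_table1_data table1_data
```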

mysql -h 10.218.223.96 -P 9030 -uroot

# Change the password

SET PASSWORD FOR 'root' = PASSWORD('123456');

# Create a database

CREATE DATABASE example_db;

USE example_db;

# Create a table

CREATE TABLE table1

(

siteid INT DEFAULT '10',

citycode SMALLINT,

username VARCHAR(32) DEFAULT '',

pv BIGINT SUM DEFAULT '0'

)

AGGREGATE KEY(siteid, citycode, username)

DISTRIBUTED BY HASH(siteid) BUCKETS 10

PROPERTIES("replication_num" = "3");

Load data: Stream Load

# If the data is on an FE node, use the form below; note that the main difference is the port

curl --location-trusted -u root:123456 -H "label:table1_20201216" -H "column_separator:," -T table1_data http://10.218.223.96:8030/api/example_db/table1/_stream_load

# If the data is on a BE node, use the form below; note that the main difference is the port

curl --location-trusted -u root:123456 -H "label:table1_20201217" -H "column_separator:," -T table1_data http://10.218.223.98:8040/api/example_db/table1/_stream_load
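Stream Load replies with a JSON body, and in a script it helps to check the result rather than read it by eye. The sketch below assumes the response carries a "Status" field whose value is "Success" when the load went through; `stream_load_ok` is a made-up helper name, and the check is a plain text match, not a real JSON parser.

```shell
# Return 0 if a Stream Load response body reports Status "Success".
# $1 is the full response text returned by curl.
stream_load_ok() {
  printf '%s' "$1" | grep -Eq '"Status"[[:space:]]*:[[:space:]]*"Success"'
}

# Usage (commented out because it needs a running cluster):
# resp=$(curl --location-trusted -u root:123456 -H "label:table1_20201218" \
#   -H "column_separator:," -T table1_data \
#   http://10.218.223.96:8030/api/example_db/table1/_stream_load)
# stream_load_ok "$resp" && echo "load ok" || echo "load failed: $resp"
```

Note that each load needs its own label, which is why the usage line uses a label different from the two commands above.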

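Load data: Broker Load (log in to Doris first)

For broker_name below, first run SHOW PROC '/brokers' to see what yours is called; in this guide it is broker_name, the name given in ALTER SYSTEM ADD BROKER.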

## HELP BROKER LOAD;

LOAD LABEL table1_20201212

(

DATA INFILE("hdfs://ns/tmp/table1_data")

INTO TABLE table1

COLUMNS TERMINATED BY ","

)

WITH BROKER broker_name

(

"hadoop.security.authentication"="simple",

"username"="hdfs",

"password"="hdfs",

"dfs.nameservices" = "ns",

"dfs.ha.namenodes.ns" = "namenode30, namenode55",

"dfs.namenode.rpc-address.ns.namenode30" = "yc-nsg-h2:8020",

"dfs.namenode.rpc-address.ns.namenode55" = "yc-nsg-h3:8020",

"dfs.client.failover.proxy.provider" = "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider"

)

PROPERTIES

(

"timeout"="3600",

"max_filter_ratio"="0.1",

"timezone"="Asia/Shanghai"

);

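-- Check whether the load succeeded

show load where label = 'table1_20201212' order by createtime desc limit 3;

Query result

Installation and testing are done. You can open the web UI on port 8030 of any FE to view your parameters and machine status.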


Congratulations, you have learned how to install Doris and are one step closer to success, haha


====================================================================


If you have any questions about this post, feel free to leave a comment.