RK3568 Notes 2: Deploying a Handwritten Digit Recognition Model

If you repost this original article, please credit the original source.

For setting up the environment, see RK3568 Notes 1: Setting Up the RKNN Development Environment (CSDN blog).

I. Introduction

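This post deploys a handwritten digit recognition model: a classic five-layer LeNet network trained on the MNIST handwritten digit dataset. LeNet is a must-learn model for deep learning beginners and one of the classic convolutional neural network (CNN) architectures in the field.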
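The post does not list simple_net.py, which defines SimpleModel. As an illustration only, the sketch below assumes the classic LeNet-5 layout: two 5x5 convolutions, each followed by 2x2 max pooling, then three fully connected layers of 120, 84 and 10 units. In this assumed layout the two convolutional and three fully connected layers are the "five layers". The sketch traces the feature-map sizes with the standard formula out = (in + 2*padding - kernel) // stride + 1. The layer list and the helper names are hypothetical and are not the author's actual SimpleModel.

```python
# Hypothetical LeNet-5 layout; the real SimpleModel in simple_net.py may differ.


def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output side length of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def lenet5_shapes(side: int = 28) -> list:
    """Trace (name, channels, height, width) through an assumed LeNet-5."""
    shapes = [("input", 1, side, side)]
    s = conv_out(side, kernel=5, padding=2)  # conv1: 1 -> 6 channels, 5x5, padding 2
    shapes.append(("conv1", 6, s, s))
    s = conv_out(s, kernel=2, stride=2)      # 2x2 max pooling, stride 2
    shapes.append(("pool1", 6, s, s))
    s = conv_out(s, kernel=5)                # conv2: 6 -> 16 channels, 5x5, no padding
    shapes.append(("conv2", 16, s, s))
    s = conv_out(s, kernel=2, stride=2)      # 2x2 max pooling, stride 2
    shapes.append(("pool2", 16, s, s))
    flat = 16 * s * s                        # flattened features fed to the first FC layer
    shapes.append(("flatten", flat, 1, 1))
    for name, units in (("fc1", 120), ("fc2", 84), ("fc3", 10)):
        shapes.append((name, units, 1, 1))   # fc3 has one output per digit 0-9
    return shapes
```

For a 28x28 input, lenet5_shapes() gives 28x28 after conv1, 14x14 after pool1, 10x10 after conv2 and 5x5 after pool2, which is 16 * 5 * 5 = 400 features into the first fully connected layer.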

The process has four steps: training, exporting to ONNX, converting to RKNN, and testing.

II. Training

I trained the model on AutoDL, a rented cloud server, set up as follows:

1. Create a virtual environment

conda create -n LeNet5_env python=3.8

conda activate LeNet5_env

2. Install packages

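Install PyTorch using the command from the official "Previous PyTorch Versions" page on pytorch.org. The command below installs the CUDA 11.0 build (cu110). For a CPU-only build or a different CUDA version, pick the matching command from that page: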

pip install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio==0.7.2 -f https://download.pytorch.org/whl/torch_stable.html -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install matplotlib -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install opencv-python -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install onnxruntime -i https://pypi.tuna.tsinghua.edu.cn/simple

3. Download the dataset

http://yann.lecun.com/exdb/mnist/train-images-idx3-ubyte.gz

http://yann.lecun.com/exdb/mnist/train-labels-idx1-ubyte.gz

http://yann.lecun.com/exdb/mnist/t10k-images-idx3-ubyte.gz

http://yann.lecun.com/exdb/mnist/t10k-labels-idx1-ubyte.gz

4. Train

train.py

#!/usr/bin/env python3
import torch
import torch.nn.functional as F
import torch.optim as optim
from torchvision import datasets, transforms
from torch.utils.data import DataLoader
#import cv2
import numpy as np
from simple_net import SimpleModel

# hyperparameters
BATCH_SIZE = 16
DEVICE = torch.device("cuda" if torch.cuda.is_available() else "cpu")
#DEVICE = torch.device("cuda")
EPOCH = 100

# train for one epoch
def train_model(model, device, train_loader, optimizer, epoch):
    model.train()
    for batch_index, (data, target) in enumerate(train_loader):
        # move the batch to the device
        data, target = data.to(device), target.to(device)
        # reset the gradients
        optimizer.zero_grad()
        # forward pass
        output = model(data)
        # compute the loss
        loss = F.cross_entropy(output, target)
        # backward pass and parameter update
        loss.backward()
        optimizer.step()
        if batch_index % 3000 == 0:
            print("Train Epoch :{} \t Loss :{:.6f}".format(epoch, loss.item()))

# evaluate on the test set
def test_model(model, device, test_loader):
    model.eval()
    # number of correct predictions
    correct = 0.0
    # summed test loss
    test_loss = 0.0
    with torch.no_grad():  # no gradients or backward pass during evaluation
        for data, target in test_loader:
            data, target = data.to(device), target.to(device)
            output = model(data)
            # sum the per-sample losses so that dividing by the dataset size gives the mean loss
            test_loss += F.cross_entropy(output, target, reduction="sum").item()
            # index of the highest score = predicted digit
            pred = output.max(1, keepdim=True)[1]
            # accumulate the number of correct predictions
            correct += pred.eq(target.view_as(pred)).sum().item()
    test_loss /= len(test_loader.dataset)
    print("TEST - average loss : {:.4f}, Accuracy :{:.3f}\n".format(
        test_loss, 100.0 * correct / len(test_loader.dataset)))

def main():
    # preprocessing pipeline
    pipeline = transforms.Compose([
        transforms.ToTensor(),
        transforms.Normalize((0.1307,), (0.3081,))
    ])
    # load the dataset from ./data (download=False, so the files must already be there)
    train_set = datasets.MNIST("data", train=True, download=False, transform=pipeline)
    test_set = datasets.MNIST("data", train=False, download=False, transform=pipeline)
    # data loaders
    train_loader = DataLoader(train_set, batch_size=BATCH_SIZE, shuffle=True)
    test_loader = DataLoader(test_set, batch_size=BATCH_SIZE, shuffle=False)
    # show dataset: read the first training image directly from the raw file
    with open("./data/MNIST/raw/train-images-idx3-ubyte", "rb") as f:
        file = f.read()
    image1 = list(file[16:16+784])  # 16-byte header, then one byte (0-255) per pixel
    #print(image1)
    image1_np = np.array(image1, dtype=np.uint8).reshape(28, 28, 1)
    #print(image1_np.shape)
    #cv2.imwrite("test.jpg", image1_np)
    # model and optimizer
    model = SimpleModel().to(DEVICE)
    optimizer = optim.Adam(model.parameters())
    # train and evaluate once per epoch
    for epoch in range(1, EPOCH + 1):
        train_model(model, DEVICE, train_loader, optimizer, epoch)
        test_model(model, DEVICE, test_loader)
    # save the trained weights in the current directory
    torch.save(model.state_dict(), "weight.pth")

if __name__ == "__main__":
    main()

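Run python train.py to start training. Pay attention to the dataset path: train.py loads MNIST from ./data with download=False, and the scripts in this post read ./data/MNIST/raw/train-images-idx3-ubyte directly, so put the decompressed dataset files in ./data/MNIST/raw/.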

When training finishes, the weights are saved as weight.pth.
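The scripts in this post read pixels directly from the decompressed train-images-idx3-ubyte file at byte offset 16 + 784 * num. That works because an IDX image file starts with a 16-byte header: the magic number 2051, the image count, the row count and the column count, each stored as a big-endian 32-bit integer. The header is followed by one unsigned byte per pixel, so each image takes 28 * 28 = 784 bytes. The sketch below parses the header instead of hard-coding it. It uses only struct and NumPy, and read_idx_image is a hypothetical helper name.

```python
import struct

import numpy as np

IDX_IMAGE_MAGIC = 2051  # magic number of an IDX3 image file (unsigned byte data, 3 dimensions)


def read_idx_image(data: bytes, index: int) -> np.ndarray:
    """Return image `index` from the raw bytes of an IDX3 image file as a (rows, cols) uint8 array."""
    # Header: four big-endian uint32 values (magic, count, rows, cols) = 16 bytes.
    magic, count, rows, cols = struct.unpack_from(">IIII", data, 0)
    if magic != IDX_IMAGE_MAGIC:
        raise ValueError(f"not an IDX3 image file (magic={magic})")
    if not 0 <= index < count:
        raise IndexError(f"image index {index} out of range [0, {count})")
    size = rows * cols
    offset = 16 + size * index  # same arithmetic as 16 + 784 * num in the scripts
    # One unsigned byte per pixel, so frombuffer yields the 0..255 values directly.
    pixels = np.frombuffer(data, dtype=np.uint8, count=size, offset=offset)
    return pixels.reshape(rows, cols)
```

To use it, read the file once with open(path, "rb").read() and call read_idx_image(raw, num) for each image. This replaces the manual slicing and keeps the header check in one place.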

III. Converting to ONNX

1. Export the ONNX model

export_onnx.py

#!/usr/bin/env python3
import torch
from simple_net import SimpleModel

# Load the trained weights and export the model as ONNX
model = SimpleModel()
model.eval()
checkpoint = torch.load("weight.pth", map_location="cpu")
model.load_state_dict(checkpoint)

# Dummy input: batch size 1, 1 input channel, 28x28 image
dummy_input = torch.randn(1, 1, 28, 28)

# Export the torch model as ONNX
torch.onnx.export(model,
                  dummy_input,
                  'model.onnx',  # name of the exported ONNX model
                  opset_version=12,
                  export_params=True,
                  do_constant_folding=True)

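Pay attention to the operators here: the model can only be converted if RKNN-Toolkit2 supports its ONNX operators, which is covered in the RKNN-Toolkit2 documentation.

Run the code above to convert weight.pth into model.onnx.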

2. Test the ONNX model

test_onnx.py

#!/usr/bin/env python3
import time

import numpy as np
import onnxruntime

# Load the ONNX model (providers are listed in priority order)
onnx_model = onnxruntime.InferenceSession("model.onnx", providers=['TensorrtExecutionProvider', 'CUDAExecutionProvider', 'CPUExecutionProvider'])
input_name = onnx_model.get_inputs()[0].name
inference_time = []

print("--0-5 1-0 2-4 3-1 4-9 5-2 6-1 7-3 8-1 9-4 for example: if num = 9 the pic's num is 4")

# Prepare the input data: raw training images (16-byte header, then 784 bytes per 28x28 image)
with open("./data/MNIST/raw/train-images-idx3-ubyte", "rb") as f:
    file = f.read()

for num in range(8000):
    i = 16 + 784 * num
    image1 = list(file[i:i+784])
    # model input layout: (batch, channel, height, width) = (1, 1, 28, 28)
    input_data = np.array(image1, dtype=np.float32).reshape(1, 1, 28, 28)
    # optional: save the image for inspection (requires import cv2)
    #image1_np = np.array(image1, dtype=np.uint8).reshape(28, 28, 1)
    #cv2.imwrite("test_%d.jpg" % num, image1_np)

    # inference
    start_time = time.time()
    output = onnx_model.run(None, {input_name: input_data})
    end_time = time.time()
    inference_time.append(end_time - start_time)

    # process the output: the prediction is the index of the highest score
    pred = np.argmax(output[0])
    print("------------------------The num of this pic is ", num, pred, "use time ", inference_time[-1] * 1000, "ms")

mean = (sum(inference_time) / len(inference_time)) * 1000
print("loop ", num + 1, "times", "average time", mean, "ms")

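The code above runs model.onnx on the first 8000 training images. It prints each prediction with its inference time, then the average inference time.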
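train.py feeds the network the output of ToTensor(), which scales pixels to [0, 1], normalized with mean 0.1307 and std 0.3081. By contrast, test_onnx.py and test.py pass pixel values without that normalization, and the rknn.config call in test.py sets no mean or std. If predictions look off, one option is to apply the training normalization before inference. This is a suggestion, not the author's method, and the helper names below are hypothetical.

The second helper shows that the same transform can be written as (pixel - 0.1307*255) / (0.3081*255) on the 0..255 scale. That should be the form RKNN-Toolkit2 expects, e.g. rknn.config(mean_values=[[33.3285]], std_values=[[78.5655]], target_platform='rk3568'), so the normalization could instead be folded into the RKNN model.

```python
import numpy as np

# Normalization constants used by transforms.Normalize in train.py.
MNIST_MEAN = 0.1307
MNIST_STD = 0.3081


def preprocess_nchw(pixels: np.ndarray) -> np.ndarray:
    """Map a 28x28 uint8 image to the normalized (1, 1, 28, 28) float32 input seen in training."""
    x = pixels.astype(np.float32) / 255.0   # same scaling as transforms.ToTensor()
    x = (x - MNIST_MEAN) / MNIST_STD        # same as transforms.Normalize((0.1307,), (0.3081,))
    return x.reshape(1, 1, 28, 28)


def rknn_mean_std() -> tuple:
    """Equivalent mean and std on the 0..255 pixel scale (hypothetical helper)."""
    return MNIST_MEAN * 255.0, MNIST_STD * 255.0
```

If the mean and std are folded into the RKNN model this way, the board would keep feeding raw uint8 pixels and the conversion would handle the scaling.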

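IV. Converting to RKNN and Testing

Training and the ONNX export were done on the rented server. The conversion to RKNN must be done in the virtual machine set up earlier.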

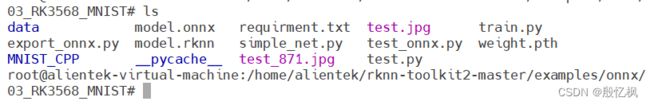

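Create a folder named 03_RK3568_MNIST under rknn-toolkit2-master/examples/onnx/.

It mainly needs two files, model.onnx and test.py. model.onnx is the model exported above. test.py also reads ./data/MNIST/raw/train-images-idx3-ubyte, so copy that file into the folder too. test.py is as follows: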

test.py

import os

import urllib

import traceback

import time

import sys

import numpy as np

import cv2

from rknn.api import RKNN

ONNX_MODEL = 'model.onnx'

RKNN_MODEL = 'model.rknn'

if __name__ == '__main__':

# Create RKNN object

rknn = RKNN()

# pre-process config

print('--> Config model')

rknn.config(target_platform='rk3568')

print('done')

# Load ONNX model

print('--> Loading model')

ret = rknn.load_onnx(model=ONNX_MODEL)

if ret != 0:

print('Load model failed!')

exit(ret)

print('done')

# Build model

print('--> Building model')

ret = rknn.build(do_quantization=False)

if ret != 0:

print('Build model failed!')

exit(ret)

print('done')

# Export RKNN model

print('--> Export RKNN model')

ret = rknn.export_rknn(RKNN_MODEL)

if ret != 0:

print('Export resnet50v2.rknn failed!')

exit(ret)

print('done')

# Set inputs

with open("./data/MNIST/raw/train-images-idx3-ubyte","rb") as f:

file=f.read()

num=100

i = 16+784*num

image1 = [int(str(item).encode('ascii'),16) for item in file[i:i+784]]

input_data = np.array(image1,dtype=np.float32).reshape(1,28,28,1)

#save the image

image1_np = np.array(image1,dtype=np.uint8).reshape(28,28,1)

file_name = "test.jpg"

cv2.imwrite(file_name,image1_np)

# init runtime environment

print('--> Init runtime environment')

ret = rknn.init_runtime()

if ret != 0:

print('Init runtime environment failed')

exit(ret)

print('done')

# Inference

print('--> Running model')

outputs = rknn.inference(inputs=input_data)

x = outputs[0]

output = np.exp(x)/np.sum(np.exp(x))

outputs = np.argmax([output])

print("----------outputs----------",outputs)

print('done')

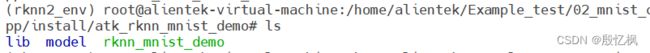

rknn.release()运行前要先进入conda的虚拟环境

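conda activate rknn2_env

After activating the environment, run the conversion and test:

python test.py

When it finishes, model.rknn is generated in the current directory and the test runs correctly.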
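test.py feeds the image as a (1, 28, 28, 1) NHWC array, because RKNN-Toolkit2's inference() treats 4-D inputs as NHWC by default. The ONNX model, however, was exported with a (1, 1, 28, 28) NCHW dummy input. For a single-channel image the two layouts store the pixels in the same order, so a reshape is enough. test.py then applies softmax and argmax to the output.

The sketch below checks the layout claim and uses a numerically stable softmax, which subtracts the maximum before exp. It gives the same probabilities and the same argmax as np.exp(x)/np.sum(np.exp(x)) without risking overflow. The helper names are hypothetical.

```python
import numpy as np


def nchw_to_nhwc(x: np.ndarray) -> np.ndarray:
    """Reorder an (N, C, H, W) array to (N, H, W, C)."""
    return np.transpose(x, (0, 2, 3, 1))


def stable_softmax(logits: np.ndarray) -> np.ndarray:
    """Softmax over the last axis; subtracting the max keeps exp() from overflowing."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    e = np.exp(shifted)
    return e / np.sum(e, axis=-1, keepdims=True)


def predict_digit(logits: np.ndarray) -> int:
    """Index of the most probable class; softmax is monotonic, so argmax is unchanged."""
    return int(np.argmax(stable_softmax(logits)))
```

Since softmax never changes which score is largest, predict_digit(x) and np.argmax(x) agree. The softmax is only needed if you want the probabilities themselves.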

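V. Deploying to the Board and Testing

For the board test I used C++. I copied a yolov5 example project, replaced main.cc, and recompiled.

main.cc goes in src, and the model goes in model. All other files are provided by ALIENTEK (正点原子); you can modify them or leave them unchanged.

The compiled output is in install.

Upload rknn_mnist_demo and the model to the board, and push the dataset file ./model/data/MNIST/raw/train-images-idx3-ubyte to the board with adb as well. The results on the board are the same as those of the ONNX test above.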
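main.cc includes a Bubble_sort helper that sorts the ten output scores in ascending order, so the best score ends up last. Sorting the scores on their own loses track of which digit each score belongs to. For checking the board's output on the PC, the sketch below keeps each digit index paired with its score and returns the k best, highest first. It is a Python reference, not the C++ code, and top_k is a hypothetical name.

```python
import numpy as np


def top_k(scores: np.ndarray, k: int = 3) -> list:
    """Return [(digit, score), ...] for the k highest scores, best first."""
    flat = np.asarray(scores, dtype=np.float32).ravel()
    # argsort is ascending (like Bubble_sort in main.cc); reverse it for best-first order.
    order = np.argsort(flat)[::-1][:k]
    return [(int(i), float(flat[i])) for i in order]
```

Calling top_k(scores, k=1) gives the same digit as np.argmax(scores), plus its score.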

main.cc

/*-------------------------------------------
                Includes
-------------------------------------------*/
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <stdint.h>
#include <sys/time.h>
#include <iostream>
#include "opencv2/core/core.hpp"
#include "opencv2/imgproc.hpp"
#include "opencv2/imgcodecs.hpp"
#include "rknn_api.h"

using namespace std;
using namespace cv;

const int MODEL_IN_WIDTH = 28;
const int MODEL_IN_HEIGHT = 28;
const int MODEL_CHANNEL = 1;
int ret = 0;
int loop_count = 8000;

/*-------------------------------------------
                Functions
-------------------------------------------*/
static inline int64_t getCurrentTimeUs()
{
    struct timeval tv;
    gettimeofday(&tv, NULL);
    return (int64_t)tv.tv_sec * 1000000 + tv.tv_usec;
}

static void dump_tensor_attr(rknn_tensor_attr* attr) // print the tensor attributes
{
    printf("  index=%d, name=%s, n_dims=%d, dims=[%d, %d, %d, %d], n_elems=%d, size=%d, fmt=%s, type=%s, qnt_type=%s, "
           "zp=%d, scale=%f\n",
           attr->index, attr->name, attr->n_dims, attr->dims[0], attr->dims[1], attr->dims[2], attr->dims[3],
           attr->n_elems, attr->size, get_format_string(attr->fmt), get_type_string(attr->type),
           get_qnt_type_string(attr->qnt_type), attr->zp, attr->scale);
}

static unsigned char *load_model(const char *filename, int *model_size) // read the whole .rknn file into memory
{
    FILE *fp = fopen(filename, "rb");
    if (fp == nullptr) {
        printf("fopen %s fail!\n", filename);
        return NULL;
    }
    fseek(fp, 0, SEEK_END);
    int model_len = ftell(fp);
    unsigned char *model = (unsigned char*)malloc(model_len);
    fseek(fp, 0, SEEK_SET);
    if (model_len != (int)fread(model, 1, model_len, fp)) {
        printf("fread %s fail!\n", filename);
        free(model);
        fclose(fp);
        return NULL;
    }
    *model_size = model_len;
    fclose(fp);
    return model;
}

// sort the 10 output scores in ascending order (the largest score ends up last)
void Bubble_sort(float *buffer)
{
    float temp = 0;
    for (int i = 0; i < 10; i++) {
        for (int j = 0; j < 10 - i - 1; j++) {
            if (buffer[j] > buffer[j + 1]) {
                temp = buffer[j];
                buffer[j] = buffer[j + 1];
                buffer[j + 1] = temp;
            }
        }
    }
}

// read image number `num` (784 bytes) from the raw MNIST training file
void Load_data(int num, unsigned char *input_image)
{
    int j = 16 + 784 * num;
    FILE *file = fopen("./model/data/MNIST/raw/train-images-idx3-ubyte", "rb");
    if (file == NULL) {
        printf("can't open the file!\n");
        return;
    }
    fseek(file, j, SEEK_SET);
    fread(input_image, sizeof(char), 784, file);

/* for(int i=0;i \n", argv[0]);

        return -1;
    }

    // Load RKNN Model
    printf("-------------load rknn model\n");
    model = load_model(model_path, &model_len);
    if (model == NULL) {
        return -1;
    }
    ret = rknn_init(&ctx, model, model_len, RKNN_FLAG_COLLECT_PERF_MASK, NULL);
    //ret = rknn_init(&ctx, model, model_len, 0, NULL);
    if (ret < 0) {
        printf("rknn_init fail! ret=%d\n", ret);
        return -1;
    }
    printf("--------------done\n");

    // Get Model Input and Output Info
    rknn_input_output_num io_num;
    ret = rknn_query(ctx, RKNN_QUERY_IN_OUT_NUM, &io_num, sizeof(io_num));
    if (ret != RKNN_SUCC) {
        printf("rknn_query fail! ret=%d\n", ret);
        return -1;
    }
    printf("model input num: %d, output num: %d\n", io_num.n_input, io_num.n_output);

    // get the input tensor attributes
    printf("input tensors:\n");
    rknn_tensor_attr input_attrs[io_num.n_input];
    memset(input_attrs, 0, sizeof(input_attrs));
    get_tensor_message(ctx, input_attrs, io_num.n_input, 1);

    // get the output tensor attributes
    printf("output tensors:\n");
    rknn_tensor_attr output_attrs[io_num.n_output];
    memset(output_attrs, 0, sizeof(output_attrs));
    get_tensor_message(ctx, output_attrs, io_num.n_output, 0);

for(int i=0;i= 0) {

        rknn_destroy(ctx);
    }
    if (model) {
        free(model);
    }
    return 0;
}

If there is any infringement, or if you need the complete code, please contact the author.