Installing Spark and configuring high availability

0. Overview

The previous post covered installing Hadoop; this post records the detailed steps for installing Spark.

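It covers Spark high availability (adding ZooKeeper to the Spark cluster), running Spark on YARN for resource management, and enabling Spark SQL so that clients can connect to Spark over JDBC.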

- Spark cluster installation

- ZooKeeper configuration

- YARN configuration

- Spark SQL configuration

1. Basic installation

1. Download

wget https://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-3.0.2/spark-3.0.2-bin-hadoop3.2.tgz

2. Plan

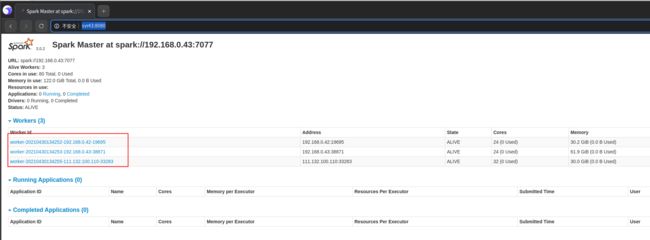

Three machines: two masters (one active, one standby), and all three machines run a worker.

| Host | svr43 | server42 | server37 |
|---|---|---|---|
| IP | 192.168.0.43 | 192.168.0.42 | 192.168.0.37 |
| master | yes | no | yes |
| worker | yes | yes | yes |
| zookeeper | yes | yes | yes |

3. Extract

tar -zxvf spark-3.0.2-bin-hadoop3.2.tgz

4. Configure (use the same configuration on all three machines)

cd spark-3.0.2-bin-hadoop3.2/conf

- Configure spark-env.sh

airwalk@svr43:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/conf$ mv spark-env.sh.template spark-env.sh

airwalk@svr43:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/conf$ vim spark-env.sh

# Add the following: set JAVA_HOME and the master's hostname or IP address

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export SPARK_MASTER_HOST=192.168.0.43
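This step is repeated on every node, so it is easy to end up with duplicate lines in spark-env.sh. The following is a minimal bash sketch that sets a variable idempotently. `set_env_var` is a hypothetical helper, not part of Spark. It replaces only uncommented `KEY=...` or `export KEY=...` lines and leaves commented-out ones in place.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "export KEY=VALUE" in an env file such as conf/spark-env.sh,
# replacing any existing uncommented KEY=... or export KEY=... line instead of duplicating it.
set_env_var() {
  local file="$1" key="$2" value="$3"
  touch "$file"
  # Keep every line except active definitions of KEY (commented-out lines are kept).
  grep -v -E "^[[:space:]]*(export[[:space:]]+)?${key}=" "$file" > "$file.tmp" || true
  mv "$file.tmp" "$file"
  printf 'export %s=%s\n' "$key" "$value" >> "$file"
}

# Example, run inside spark-3.0.2-bin-hadoop3.2/conf:
# set_env_var spark-env.sh JAVA_HOME /usr/lib/jvm/java-8-openjdk-amd64
# set_env_var spark-env.sh SPARK_MASTER_HOST 192.168.0.43
```

Running the same call twice leaves a single `export SPARK_MASTER_HOST=...` line.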

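- Configure workers (the file is named workers in Spark 3.1 and later; Spark 3.0.x uses conf/slaves, created from slaves.template)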

airwalk@svr43:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/conf$ mv workers.template workers

vim workers

# Add the following. Hostnames and IP addresses both work; IP addresses are used here

# A Spark Worker will be started on each of the machines listed below.

192.168.0.43

192.168.0.42

192.168.0.37
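start-all.sh starts a worker on every host listed in this file by connecting over SSH. The master therefore needs passwordless SSH to every listed host, including itself. The following minimal sketch writes the file from a host list. `write_workers` is a hypothetical helper, and the conf directory is passed in explicitly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Spark workers file with one host per line.
# Usage: write_workers <conf_dir> <host>...
write_workers() {
  local conf_dir="$1"
  shift
  if [ "$#" -eq 0 ]; then
    echo "write_workers: no hosts given" >&2
    return 1
  fi
  printf '%s\n' "$@" > "$conf_dir/workers"
}

# Example (for Spark 3.0.x, write conf/slaves instead):
# write_workers "$SPARK_HOME/conf" 192.168.0.43 192.168.0.42 192.168.0.37
```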

- Configure logging

airwalk@svr43:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/conf$ mv log4j.properties.template log4j.properties

airwalk@svr43:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/conf$ vim log4j.properties

- Configure environment variables

# Add Spark's environment variables to /etc/profile

sudo vim /etc/profile

# SPARK_HOME

export SPARK_HOME=/home/airwalk/bigdata/soft/spark-3.0.2-bin-hadoop3.2

export PATH=$SPARK_HOME/bin:$PATH

# Apply the changes to the current shell

source /etc/profile
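When /etc/profile is edited by hand on three machines, the same block is easily added twice. The following sketch appends a block only if its marker comment is not already present. `append_once` is a hypothetical helper. /etc/profile is owned by root, so the helper must run in a root shell (for example `sudo bash`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "<marker>\n<body>" to a file unless the marker line already exists.
append_once() {
  local file="$1" marker="$2" body="$3"
  # -x: whole-line match, -F: fixed string; a missing file counts as "not present".
  if ! grep -qxF -- "$marker" "$file" 2>/dev/null; then
    printf '%s\n%s\n' "$marker" "$body" >> "$file"
  fi
}

# Example (as root); single quotes keep $SPARK_HOME and $PATH unexpanded in the file:
# append_once /etc/profile '# SPARK_HOME' 'export SPARK_HOME=/home/airwalk/bigdata/soft/spark-3.0.2-bin-hadoop3.2
# export PATH=$SPARK_HOME/bin:$PATH'
```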

5. Start

# Run the following on the master (from the Spark installation directory)

./sbin/start-all.sh

6. Check

# Open the master web UI in a browser

http://svr43:8080/

7. Configure the history server

# The following configuration is needed on all nodes

airwalk@server42:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/conf$ mv spark-defaults.conf.template spark-defaults.conf

airwalk@server42:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/conf$ vim spark-defaults.conf

# Add the following

spark.eventLog.enabled true

spark.eventLog.dir hdfs://192.168.0.43:9000/directory

# Create the /directory directory in HDFS

hdfs dfs -mkdir /directory

# Edit spark-env.sh

vim spark-env.sh

# Add the following

export SPARK_HISTORY_OPTS="

-Dspark.history.ui.port=18080

-Dspark.history.fs.logDirectory=hdfs://192.168.0.43:9000/directory

-Dspark.history.retainedApplications=30"

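# Meaning of the options above:

# spark.history.ui.port: port of the history server web UI

# spark.history.fs.logDirectory: directory the history server reads event logs from

# spark.history.retainedApplications: number of applications whose UI data is cached in memory; when it is exceeded, the oldest are evicted from the cache. This limits the applications held in memory, not the number listed on the page.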
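The history server only shows applications whose event logs land in the directory it reads. spark.eventLog.dir in spark-defaults.conf and spark.history.fs.logDirectory in SPARK_HISTORY_OPTS should therefore point to the same place. The following minimal consistency check assumes spark-defaults.conf uses the whitespace-separated `key value` form shown above. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of spark.eventLog.dir from a spark-defaults.conf
# written as "key<whitespace>value".
event_log_dir() {
  awk '$1 == "spark.eventLog.dir" { print $2 }' "$1"
}

# Hypothetical helper: extract -Dspark.history.fs.logDirectory=... from an options string
# (options may be separated by spaces, tabs or newlines).
history_log_dir() {
  printf '%s\n' "$1" | tr ' \t' '\n\n' | sed -n 's/^-Dspark\.history\.fs\.logDirectory=//p'
}

# Succeeds only if both directories are set and identical.
check_history_dirs() {
  local a b
  a=$(event_log_dir "$1")
  b=$(history_log_dir "$2")
  if [ -n "$a" ] && [ "$a" = "$b" ]; then
    echo "OK: $a"
  else
    echo "MISMATCH: eventLog.dir='$a' logDirectory='$b'" >&2
    return 1
  fi
}

# Example, from the conf directory:
# . ./spark-env.sh && check_history_dirs spark-defaults.conf "$SPARK_HISTORY_OPTS"
```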

# Restart the services (run on the master), then start the history server

./sbin/stop-all.sh

./sbin/start-all.sh

./sbin/start-history-server.sh

# Test

./bin/spark-submit \
--class org.apache.spark.examples.SparkPi \
--master spark://192.168.0.43:7077 \
examples/jars/spark-examples_2.12-3.0.2.jar \
10

# Open the history server web UI

http://svr43:18080/

2. Spark high-availability configuration

Same three machines: two masters (one active, one standby) and three workers. ZooKeeper coordinates the two masters.

1. Install ZooKeeper

# Download

wget https://mirrors.tuna.tsinghua.edu.cn/apache/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz

# Extract

tar -zxvf apache-zookeeper-3.6.3-bin.tar.gz

mv apache-zookeeper-3.6.3-bin/ zookeeper

# Create the data and log directories inside the zookeeper directory

cd zookeeper

mkdir data

mkdir log

pwd

/home/airwalk/bigdata/soft/zookeeper

# Configure zoo.cfg

airwalk@svr43:~/bigdata/soft/zookeeper$ cd conf/

airwalk@svr43:~/bigdata/soft/zookeeper/conf$ ls

configuration.xsl log4j.properties zoo_sample.cfg

airwalk@svr43:~/bigdata/soft/zookeeper/conf$ mv zoo_sample.cfg zoo.cfg

airwalk@svr43:~/bigdata/soft/zookeeper/conf$ vim zoo.cfg

# Add or change the following (replace the sample file's dataDir=/tmp/zookeeper)

dataDir=/home/airwalk/bigdata/soft/zookeeper/data

dataLogDir=/home/airwalk/bigdata/soft/zookeeper/log

clientPort=2181

server.0=192.168.0.43:2888:3888

server.1=192.168.0.42:2888:3888

server.2=192.168.0.37:2888:3888
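The server.N lines follow a fixed pattern. N is the id that the node's myid file must contain. Followers use port 2888 to connect to the leader, and port 3888 is used for leader election. The following sketch generates the lines from an ordered host list, numbering from 0 as above. `zk_server_lines` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "server.N=<host>:2888:3888" for each host, N starting at 0.
zk_server_lines() {
  local i=0 host
  for host in "$@"; do
    printf 'server.%d=%s:2888:3888\n' "$i" "$host"
    i=$((i + 1))
  done
}

# Example:
# zk_server_lines 192.168.0.43 192.168.0.42 192.168.0.37 >> conf/zoo.cfg
```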

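# Add ZooKeeper's environment variables

sudo vim /etc/profile

# ZOOKEEPER_HOME

export ZOOKEEPER_HOME=/home/airwalk/bigdata/soft/zookeeper

export PATH=$ZOOKEEPER_HOME/bin:$PATH

# Apply the changes to the current shell

source /etc/profile

# Configure the server id (myid)

# On 192.168.0.43 write 0; on 192.168.0.42 and 192.168.0.37 write 1 and 2 respectively, matching the server.N numbers in zoo.cfg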

cd data

echo 0 > myid
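The id depends on the machine, so it can be read back from zoo.cfg instead of typed by hand. The following sketch assumes two things: the node's address is passed exactly as it appears in zoo.cfg, and zoo.cfg has a dataDir line (if there are several, the last one wins). `zk_id_for_host` and `write_myid` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print N from the "server.N=<host>:..." line of zoo.cfg that matches <host>.
zk_id_for_host() {
  local cfg="$1" esc
  # Escape dots so an IP address is matched literally by sed.
  esc=$(printf '%s' "$2" | sed 's/[.]/\\./g')
  sed -n "s/^server\.\([0-9]*\)=${esc}:.*/\1/p" "$cfg"
}

# Hypothetical helper: write that id into <dataDir>/myid.
write_myid() {
  local cfg="$1" host="$2" id data_dir
  id=$(zk_id_for_host "$cfg" "$host")
  data_dir=$(sed -n 's/^dataDir=//p' "$cfg" | tail -n 1)
  if [ -z "$id" ] || [ -z "$data_dir" ]; then
    echo "write_myid: no server.N entry for $host or no dataDir in $cfg" >&2
    return 1
  fi
  mkdir -p "$data_dir"
  echo "$id" > "$data_dir/myid"
}

# Example on server42:
# write_myid ~/bigdata/soft/zookeeper/conf/zoo.cfg 192.168.0.42
```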

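# Start ZooKeeper on every node; if the PATH change did not take effect, run it from the bin directory

zkServer.sh start

# Check the status (sample output from server37, the current leader)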

zkServer.sh status

airwalk@server37:~/bigdata/soft/zookeeper/data$ zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/airwalk/bigdata/soft/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader
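In a healthy three-node ensemble, exactly one node reports `Mode: leader` and the other two report `Mode: follower`. The following sketch checks this across the nodes. `zk_mode` and `exactly_one_leader` are hypothetical helpers. The commented loop assumes passwordless SSH and the installation path used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reduce `zkServer.sh status` output to the word after "Mode: ".
zk_mode() {
  sed -n 's/^Mode: //p'
}

# Hypothetical helper: read one mode per line; succeed only if exactly one is "leader".
exactly_one_leader() {
  [ "$(grep -cx leader)" = "1" ]
}

# Example, run from any node:
# for h in 192.168.0.43 192.168.0.42 192.168.0.37; do
#   ssh "$h" '~/bigdata/soft/zookeeper/bin/zkServer.sh status' 2>&1 | zk_mode
# done | exactly_one_leader && echo "ensemble OK"
```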

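2. Configure Spark

- spark-env.sh

vim spark-env.sh

# Comment this line out. spark-env.sh is identical on both masters, so a fixed SPARK_MASTER_HOST would make the standby try to bind to 192.168.0.43; without it, each master binds to its own hostname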

# export SPARK_MASTER_HOST=192.168.0.43

SPARK_MASTER_WEBUI_PORT=8989

export SPARK_DAEMON_JAVA_OPTS="

-Dspark.deploy.recoveryMode=ZOOKEEPER

-Dspark.deploy.zookeeper.url=192.168.0.43,192.168.0.42,192.168.0.37

-Dspark.deploy.zookeeper.dir=/spark"
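The Spark standalone documentation writes spark.deploy.zookeeper.url as comma-separated host:port pairs (for example 192.168.1.100:2181,192.168.1.101:2181). The hosts above have no port, which relies on the ZooKeeper client's default port (2181). Spelling out clientPort from zoo.cfg makes the setting explicit. The following sketch builds the value. `zk_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: join hosts as "host:port,host:port,..." for spark.deploy.zookeeper.url.
# Usage: zk_url <clientPort> <host>...
zk_url() {
  local port="$1" out="" host
  shift
  for host in "$@"; do
    out="${out:+$out,}$host:$port"
  done
  printf '%s\n' "$out"
}

# Example:
# echo "-Dspark.deploy.zookeeper.url=$(zk_url 2181 192.168.0.43 192.168.0.42 192.168.0.37)"
```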

- Restart the cluster

./sbin/stop-all.sh

./sbin/start-all.sh

# Start the standby master on 192.168.0.37

airwalk@server37:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/sbin$ jps

48130 Worker

46650 QuorumPeerMain

43995 SecondaryNameNode

43789 DataNode

48351 Jps

airwalk@server37:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/sbin$ ./start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /home/airwalk/bigdata/soft/spark-3.0.2-bin-hadoop3.2/logs/spark-airwalk-org.apache.spark.deploy.master.Master-1-server37.out

airwalk@server37:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/sbin$ jps

48130 Worker

48405 Master

46650 QuorumPeerMain

43995 SecondaryNameNode

43789 DataNode

48575 Jps

- Check

# Active master (its UI shows status ALIVE)

http://192.168.0.43:8989/

# Standby master (its UI shows status STANDBY)

http://192.168.0.37:8989/
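With two masters, clients should not hard-code spark://192.168.0.43:7077. The Spark standalone high-availability documentation describes passing a comma-separated list of masters (spark://host1:port1,host2:port2), so that a client registers with whichever one is currently active. The following sketch builds such a URL. It assumes both masters use the default port 7077, as in the earlier spark-submit test. `spark_master_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build spark://host1:7077,host2:7077,... from a list of master hosts.
spark_master_url() {
  local out="" host
  for host in "$@"; do
    out="${out:+$out,}$host:7077"
  done
  printf 'spark://%s\n' "$out"
}

# Example:
# ./bin/spark-submit --class org.apache.spark.examples.SparkPi \
#   --master "$(spark_master_url 192.168.0.43 192.168.0.37)" \
#   examples/jars/spark-examples_2.12-3.0.2.jar 10
```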

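3. Spark on YARN configuration

- Edit Hadoop's yarn-site.xml: add these properties inside the configuration element to disable the NodeManager's physical and virtual memory checks

<property>
  <name>yarn.nodemanager.pmem-check-enabled</name>
  <value>false</value>
</property>

<property>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>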

- Configure spark-env.sh

YARN_CONF_DIR=/home/airwalk/bigdata/soft/hadoop-2.10.1/etc/hadoop

- Restart HDFS, YARN and Spark

# Restart HDFS (on svr43) and YARN (on server42)

airwalk@svr43:~/bigdata/soft/hadoop-2.10.1/sbin$ ./stop-dfs.sh

airwalk@server42:~/bigdata/soft/hadoop-2.10.1/sbin$ ./stop-yarn.sh

airwalk@svr43:~/bigdata/soft/hadoop-2.10.1/sbin$ ./start-dfs.sh

airwalk@server42:~/bigdata/soft/hadoop-2.10.1/sbin$ ./start-yarn.sh

# Restart Spark

airwalk@svr43:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/sbin$ ./stop-all.sh

airwalk@server37:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/sbin$ ./stop-master.sh

airwalk@svr43:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/sbin$ ./start-all.sh

airwalk@server37:~/bigdata/soft/spark-3.0.2-bin-hadoop3.2/sbin$ ./start-master.sh

- Test Spark (submit SparkPi to YARN)

./bin/spark-submit \
--class org.apache.spark.examples.SparkPi \
--master yarn \
--deploy-mode client \
examples/jars/spark-examples_2.12-3.0.2.jar \
10

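- Check the web UI. The standalone master UI is below; applications submitted with --master yarn are listed in the YARN ResourceManager UI instead (port 8088 by default, on the ResourceManager node).

http://192.168.0.43:8989/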

4. Starting Spark SQL

- Start ZooKeeper (on every node)

zkServer.sh start

- Start Hadoop

./start-dfs.sh

./start-yarn.sh

- Start Spark

./start-all.sh

- First, start the Thrift JDBC server

Internal network connection:

sbin/start-thriftserver.sh --master spark://10.9.2.100:7077 --driver-class-path /usr/local/hive-1.2.1/lib/mysql-connector-java-5.1.31-bin.jar

External network connection:

./start-thriftserver.sh --hiveconf hive.server2.thrift.port=10000 --hiveconf hive.server2.thrift.bind.host=svr43

- Then connect with beeline, after checking that port 10000 is listening

netstat -lnut | grep 10000

beeline -u jdbc:hive2://192.168.0.43:10000