Submitting Spark from PHP: Spark job submission with SparkLauncher

Recently I needed to build a UI that includes a feature for submitting Spark programs.

1- Zeppelin is one such tool, although its internals are fairly complicated. Have a look at it if you are interested.

2- SparkLauncher, a class that ships with Spark.

Basic usage on Linux:

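    import java.util.HashMap;
    import java.util.Map;
    import org.apache.spark.launcher.SparkAppHandle;
    import org.apache.spark.launcher.SparkLauncher;

    public static void main(String[] args) throws Exception {
        // Environment variables handed to the launched spark-submit process
        Map<String, String> envParams = new HashMap<>();
        envParams.put("YARN_CONF_DIR", "/home/hadoop/cluster/hadoop-release/etc/hadoop");
        envParams.put("HADOOP_CONF_DIR", "/home/hadoop/cluster/hadoop-release/etc/hadoop");
        envParams.put("SPARK_HOME", "/home/hadoop/cluster/spark-new");
        // Print the full launch command to the output
        envParams.put("SPARK_PRINT_LAUNCH_COMMAND", "1");

        SparkAppHandle spark = new SparkLauncher(envParams)
            .setAppResource("/home/hadoop/cluster/spark-new/examples/jars/spark-examples_2.11-2.2.1.jar")
            .setMainClass("org.apache.spark.examples.SparkPi")
            .setMaster("yarn")
            .startApplication();

        // Keep this JVM alive while the launched application runs
        Thread.sleep(100000);
    }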

Output:

INFO: 18/12/03 18:12:12 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 1.462s

Dec 03, 2018 6:12:12 PM org.apache.spark.launcher.OutputRedirector redirect

INFO: 18/12/03 18:12:12 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 3.395705s

Dec 03, 2018 6:12:12 PM org.apache.spark.launcher.OutputRedirector redirect

INFO: Pi is roughly 3.1461157305786527
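For orientation: SparkLauncher works by starting Spark's own spark-submit script as a child process and passing the environment map to it. The sketch below builds a roughly equivalent command with a plain ProcessBuilder. It is only an illustration under that assumption, not SparkLauncher's actual implementation, and the names SubmitCommandSketch, buildCommand and prepare are made up for this example. It prepares the process but never starts it.

```java
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

final class SubmitCommandSketch {

    // Builds: <sparkHome>/bin/spark-submit --master <master> --class <mainClass> <appResource>
    static List<String> buildCommand(String sparkHome, String master,
                                     String mainClass, String appResource) {
        List<String> cmd = new ArrayList<>();
        cmd.add(Paths.get(sparkHome, "bin", "spark-submit").toString());
        cmd.add("--master");
        cmd.add(master);
        cmd.add("--class");
        cmd.add(mainClass);
        cmd.add(appResource); // the application jar comes after the options
        return cmd;
    }

    // Prepares (but does not start) a child process that sees the extra environment variables.
    static ProcessBuilder prepare(List<String> cmd, Map<String, String> env) {
        ProcessBuilder pb = new ProcessBuilder(cmd);
        pb.environment().putAll(env);
        pb.redirectErrorStream(true); // merge stderr into stdout, as a single log stream
        return pb;
    }
}
```

Calling buildCommand with the SPARK_HOME, master, main class and jar path from the example above gives the command line you could also type by hand in a shell, which is handy for checking the setup before involving SparkLauncher.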

Running on Windows:

Once it runs on Linux, install the required packages on Windows: the JDK, Hadoop, Scala, and Spark.

For reference, see https://blog.csdn.net/u011513853/article/details/52865076

Here is the code:

    import java.util.HashMap;
    import java.util.Map;
    import org.apache.spark.launcher.SparkAppHandle;
    import org.apache.spark.launcher.SparkLauncher;

    public class SparkLauncherTest {
        private static String YARN_CONF_DIR = null;
        private static String HADOOP_CONF_DIR = null;
        private static String SPARK_HOME = null;
        private static String SPARK_PRINT_LAUNCH_COMMAND = "1";
        private static String master = null;
        private static String appResource = null;
        private static String mainClass = null;

        public static void main(String[] args) throws Exception {
            if (args.length != 1) {
                System.out.println("Usage: ServerStatisticSpark ");
                System.exit(1);
            }

            // TrackerConfig is a project-specific class (not shown in this post);
            // its result is not used below.
            TrackerConfig trackerConfig = TrackerConfig.loadConfig();

            if ("local".equals(args[0])) {
                // Windows development machine, local master
                YARN_CONF_DIR = "D:\\software\\hadoop-2.4.1\\etc\\hadoop";
                HADOOP_CONF_DIR = "D:\\software\\hadoop-2.4.1\\etc\\hadoop";
                SPARK_HOME = "D:\\spark-new";
                master = "local";
                appResource = "D:\\spark-new\\examples\\jars\\spark-examples_2.11-2.2.1.jar";
            } else {
                // Linux cluster, YARN master
                YARN_CONF_DIR = "/home/hadoop/cluster/hadoop-release/etc/hadoop";
                HADOOP_CONF_DIR = "/home/hadoop/cluster/hadoop-release/etc/hadoop";
                SPARK_HOME = "/home/hadoop/cluster/spark-new";
                master = "yarn";
                appResource = "/home/hadoop/cluster/spark-new/examples/jars/spark-examples_2.11-2.2.1.jar";
            }

            // Environment variables handed to the launched spark-submit process
            Map<String, String> envParams = new HashMap<>();
            envParams.put("YARN_CONF_DIR", YARN_CONF_DIR);
            envParams.put("HADOOP_CONF_DIR", HADOOP_CONF_DIR);
            envParams.put("SPARK_HOME", SPARK_HOME);
            envParams.put("SPARK_PRINT_LAUNCH_COMMAND", SPARK_PRINT_LAUNCH_COMMAND);

            mainClass = "org.apache.spark.examples.SparkPi";

            SparkAppHandle spark = new SparkLauncher(envParams)
                .setAppResource(appResource)
                .setMainClass(mainClass)
                .setMaster(master)
                .startApplication();

            // Keep this JVM alive while the launched application runs
            Thread.sleep(100000);
        }
    }

Output:

INFO: 18/12/04 17:01:11 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 0.808691s

Dec 04, 2018 5:01:11 PM org.apache.spark.launcher.OutputRedirector redirect

INFO: Pi is roughly 3.1455757278786396

The problem I ran into: SparkLauncher simply would not run.

Hadoop and the JDK had both been in use on this machine for a long time, so I ruled them out as the cause.

Scala programs could be written and run locally, so Scala was probably not the problem either.

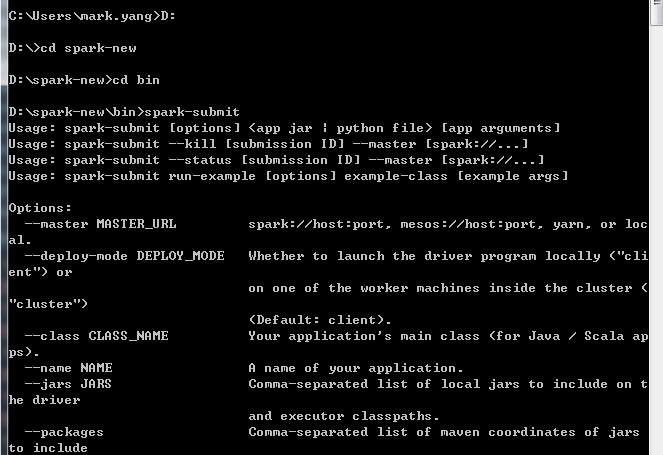

In the end I found that running spark-submit from spark\bin in cmd failed, so I copied the Spark package over again from the Linux machine.

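After that, spark-shell ran normally. Before, it had failed with the error "is not recognized as an internal or external command, operable program or batch file".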
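A check like the following would have pointed at the broken Spark directory sooner. It is a minimal sketch, assuming the launcher looks for bin\spark-submit.cmd under SPARK_HOME on Windows and bin/spark-submit elsewhere. The class SparkSubmitCheck and its methods are hypothetical helpers written for this post, not part of Spark.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Locale;

final class SparkSubmitCheck {

    // Script name for a given OS name, e.g. the value of System.getProperty("os.name").
    static String scriptName(String osName) {
        boolean windows = osName.toLowerCase(Locale.ROOT).startsWith("windows");
        return windows ? "spark-submit.cmd" : "spark-submit";
    }

    // Expected location of the script under <sparkHome>/bin.
    static Path scriptPath(String sparkHome, String osName) {
        return Paths.get(sparkHome, "bin", scriptName(osName));
    }

    // True if the script exists as a regular file.
    static boolean isPresent(String sparkHome, String osName) {
        return Files.isRegularFile(scriptPath(sparkHome, osName));
    }
}
```

Before calling startApplication(), something like SparkSubmitCheck.isPresent(SPARK_HOME, System.getProperty("os.name")) can fail fast with a clear message. Note that it only checks that the file is there, not that the script actually works.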

Remaining problems:

When building the jar, some classes do not get packaged, and the run fails with a class-not-found error.
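I have not solved this yet, but one way to narrow it down is to check whether a given class actually ended up in the built jar. The sketch below uses only the JDK's java.util.jar API. JarClassCheck and its methods are names I made up for illustration, and the check only confirms the symptom, it does not fix the packaging.

```java
import java.io.IOException;
import java.util.jar.JarFile;

final class JarClassCheck {

    // Converts "org.example.Foo" to the jar entry name "org/example/Foo.class".
    static String entryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    // True if the jar contains a .class entry for the fully qualified class name.
    static boolean containsClass(String jarPath, String className) throws IOException {
        try (JarFile jar = new JarFile(jarPath)) {
            return jar.getEntry(entryName(className)) != null;
        }
    }
}
```

Running containsClass against the built jar with the class name from the error message shows whether the class is really missing from the jar or is present but not found for some other reason, such as the classpath.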

Once the UI is finished, I will update this post with the whole workflow.