Special processes in Linux: the idle process (PID 0), the init process (PID 1, now replaced by systemd), and the kthreadd process (PID 2)

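Contents

- 1. The three special processes in Linux
- 2. How the idle, init, and kthreadd processes are created
- 3. The kthreadd process
  - 3.1 How kthreadd starts
  - 3.2 How kthreadd creates child threads
  - 3.3 Summary of the kthreadd workflow
- 4. The init process
  - 4.1 How init starts
- 5. Summary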

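1. The three special processes in Linux

The idle process, also called the swapper process:

- It is the first process (thread) in Linux, with PID 0.
- It is the parent of both the init process and the kthreadd process (a kernel thread).

The init process:

- It is the first user-space process in Linux, with PID 1.
- It is the direct or indirect parent of every other user-space process.

Note that newer CentOS releases (CentOS 7 and later), like most modern distributions, use systemd instead of the traditional init as process 1.

kthreadd (a kernel thread):

- It is the direct or indirect parent of every other kernel thread, with PID 2.
- It is responsible for creating kernel threads.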
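The parent relationships listed above can be checked from user space. The following is a minimal sketch, assuming a Linux system with /proc mounted; the helper name read_ppid is made up for this illustration. It reads the parent PID field from /proc/<pid>/stat.

```c
#include <stdio.h>
#include <string.h>
#include <sys/types.h>

/*
 * Return the parent PID of `pid` as reported by /proc/<pid>/stat,
 * or -1 if the file cannot be read or parsed.
 * (read_ppid is a hypothetical helper, not a libc or kernel API.)
 */
int read_ppid(pid_t pid)
{
    char path[64];
    char buf[1024];
    char state;
    int ppid;

    snprintf(path, sizeof(path), "/proc/%d/stat", (int)pid);
    FILE *f = fopen(path, "r");
    if (!f)
        return -1;
    size_t n = fread(buf, 1, sizeof(buf) - 1, f);
    fclose(f);
    buf[n] = '\0';

    /* The command name is wrapped in parentheses and may itself contain
     * ')' or spaces, so locate the LAST ')' before parsing further. */
    char *p = strrchr(buf, ')');
    if (!p)
        return -1;

    /* After the command name come the state character and the PPID. */
    if (sscanf(p + 1, " %c %d", &state, &ppid) != 2)
        return -1;
    return ppid;
}
```

On a typical system, read_ppid(1) and read_ppid(2) should both report 0, matching the description above. Inside a container, PID 2 may be an ordinary process rather than kthreadd.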

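2. How the idle, init, and kthreadd processes are created

At the end of kernel initialization, start_kernel() calls rest_init(), in which process 0 (the swapper process) creates two processes:

- the init process (PID = 1, PPID = 0)
- the kthreadd process (PID = 2, PPID = 0)

Both start out as kernel threads: rest_init() creates them with kernel_thread(), passing kernel_init and kthreadd as their entry functions.

rest_init() is called by the idle process, so the idle process, process 0, sits at the top of every PPID chain: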

    noinline void __ref rest_init(void)
    {
        struct task_struct *tsk;
        int pid;

        rcu_scheduler_starting();
        /*
         * We need to spawn init first so that it obtains pid 1, however
         * the init task will end up wanting to create kthreads, which, if
         * we schedule it before we create kthreadd, will OOPS.
         */
        /* Create the init process; kernel_init is its entry function,
         * much like run() for a Java thread. */
        pid = kernel_thread(kernel_init, NULL, CLONE_FS);
        /*
         * Pin init on the boot CPU. Task migration is not properly working
         * until sched_init_smp() has been run. It will set the allowed
         * CPUs for init to the non isolated CPUs.
         */
        rcu_read_lock();
        tsk = find_task_by_pid_ns(pid, &init_pid_ns);
        set_cpus_allowed_ptr(tsk, cpumask_of(smp_processor_id()));
        rcu_read_unlock();

        numa_default_policy();
        /* Create the kthreadd process; kthreadd is its entry function. */
        pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);
        rcu_read_lock();
        kthreadd_task = find_task_by_pid_ns(pid, &init_pid_ns);
        rcu_read_unlock();

        /*
         * Enable might_sleep() and smp_processor_id() checks.
         * They cannot be enabled earlier because with CONFIG_PREEMPTION=y
         * kernel_thread() would trigger might_sleep() splats. With
         * CONFIG_PREEMPT_VOLUNTARY=y the init task might have scheduled
         * already, but it's stuck on the kthreadd_done completion.
         */
        system_state = SYSTEM_SCHEDULING;

        /* Signal that kthreadd now exists; the init task waits on this
         * completion before it continues. */
        complete(&kthreadd_done);

        /*
         * The boot idle thread must execute schedule()
         * at least once to get things moving:
         */
        schedule_preempt_disabled();
        /* Call into cpu_idle with preempt disabled */
        cpu_startup_entry(CPUHP_ONLINE);
    }
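The ordering in rest_init() (create init first so it gets PID 1, but hold it back until kthreadd exists) can be imitated in user space. The sketch below is only an analogy, assuming a POSIX system: it uses fork() and a pipe where the kernel uses kernel_thread() and the kthreadd_done completion. The names spawn_in_order and gate are invented for the example.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/*
 * User-space analogy of rest_init() (illustrative, not kernel code):
 * the first child is created first but blocks on a pipe until the parent
 * reports that the second child exists. The blocking read plays the role
 * of wait_for_completion(); the parent's write plays the role of complete().
 * Returns 0 if the first child was released after the second was created.
 */
int spawn_in_order(pid_t *first, pid_t *second)
{
    int gate[2];
    int status = 0;

    if (pipe(gate) < 0)
        return -1;

    *first = fork();
    if (*first < 0) {
        close(gate[0]);
        close(gate[1]);
        return -1;
    }
    if (*first == 0) {
        pid_t sibling = 0;
        close(gate[1]);
        /* Blocks until the parent writes the second child's PID. */
        ssize_t n = read(gate[0], &sibling, sizeof(sibling));
        _exit(n == (ssize_t)sizeof(sibling) && sibling > 0 ? 0 : 1);
    }

    *second = fork();
    if (*second == 0) {
        close(gate[0]);
        close(gate[1]);
        _exit(0);
    }
    close(gate[0]);
    if (*second < 0) {
        /* Closing the write end unblocks the first child with EOF. */
        close(gate[1]);
        waitpid(*first, &status, 0);
        return -1;
    }

    /* The "complete()" side: release the first child. */
    ssize_t w = write(gate[1], second, sizeof(*second));
    close(gate[1]);

    waitpid(*second, NULL, 0);
    if (waitpid(*first, &status, 0) < 0 || w != (ssize_t)sizeof(*second))
        return -1;
    return (WIFEXITED(status) && WEXITSTATUS(status) == 0) ? 0 : -1;
}
```

The design point mirrors the kernel comment: creation order decides who gets which PID, while a separate synchronization step decides who may run first.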

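3. The kthreadd process

kthreadd is process 2 in Linux and plays a very important role in the kernel: it is the parent or ancestor of all other kernel threads, including important ones such as kworker, kblockd, and khugepaged (this shows up in ps output, where such threads have PPID 2). In other words, every other kernel thread is created through process 2, which services a queue of thread-creation requests. The sections below walk through kthreadd step by step.

3.1 How kthreadd starts

The kthreadd function is the body of this process. Let's see what it does: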

    int kthreadd(void *unused)
    {
        struct task_struct *tsk = current;

        /* Setup a clean context for our children to inherit. */
        set_task_comm(tsk, "kthreadd");
        ignore_signals(tsk);
        /* Allow kthreadd to run on the housekeeping CPUs
         * (all CPUs unless some have been isolated). */
        set_cpus_allowed_ptr(tsk, housekeeping_cpumask(HK_FLAG_KTHREAD));
        set_mems_allowed(node_states[N_MEMORY]);

        current->flags |= PF_NOFREEZE;
        cgroup_init_kthreadd();

        for (;;) {
            set_current_state(TASK_INTERRUPTIBLE);
            /* Is the list of pending creation requests empty? */
            if (list_empty(&kthread_create_list))
                schedule(); /* nothing to create: give up the CPU and sleep */
            __set_current_state(TASK_RUNNING);

            spin_lock(&kthread_create_lock);
            /* Take the requests off the list one by one. */
            while (!list_empty(&kthread_create_list)) {
                struct kthread_create_info *create;

                create = list_entry(kthread_create_list.next,
                                    struct kthread_create_info, list);
                list_del_init(&create->list); /* unlink from the request list */
                spin_unlock(&kthread_create_lock);

                /* As long as kthread_create_list is not empty, create a
                 * kernel thread for each entry. */
                create_kthread(create); /* create the child thread */

                spin_lock(&kthread_create_lock);
            }
            spin_unlock(&kthread_create_lock);
        }

        return 0;
    }

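As the code shows, kthreadd loops forever waiting for creation requests. When the request list is empty it gives up the CPU voluntarily (it calls schedule() and sleeps until it is woken, which kthread_create does after queuing a request). When the list is not empty it removes the requests one by one and creates the corresponding threads, then starts over.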
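The inner while loop follows a common pattern: unlink the first request, then handle it, until the list is empty. Below is a minimal single-threaded sketch of that pattern in plain C. The types create_request and request_queue and the functions queue_request, drain_requests, and count_call are hypothetical stand-ins for kthread_create_info and kthread_create_list. Locking is left out, and "handling" a request simply calls the function instead of creating a thread.

```c
#include <stddef.h>

/* Hypothetical stand-in for struct kthread_create_info. */
struct create_request {
    int (*threadfn)(void *data);   /* function the new thread should run */
    void *data;                    /* argument passed to threadfn */
    struct create_request *next;   /* next pending request, or NULL */
};

/* Hypothetical stand-in for kthread_create_list: a FIFO of requests. */
struct request_queue {
    struct create_request *head;
    struct create_request *tail;
};

/* Example thread function: counts how often it was called. */
int count_call(void *data)
{
    (*(int *)data)++;
    return 0;
}

/* Append a request at the tail, as a requester would before waking
 * the servicing thread. */
void queue_request(struct request_queue *q, struct create_request *req)
{
    req->next = NULL;
    if (q->tail)
        q->tail->next = req;
    else
        q->head = req;
    q->tail = req;
}

/*
 * Drain the queue in FIFO order: unlink the first request, then handle it.
 * Here handling means calling threadfn directly; the kernel would create
 * a new thread instead. Returns the number of requests handled.
 */
int drain_requests(struct request_queue *q)
{
    int handled = 0;

    while (q->head) {
        struct create_request *req = q->head;

        q->head = req->next;        /* unlink before handling */
        if (!q->head)
            q->tail = NULL;
        req->next = NULL;

        req->threadfn(req->data);
        handled++;
    }
    return handled;
}
```

Unlinking before handling is what lets the kernel version drop the spinlock around create_kthread(): once a request is off the list, nobody else can reach it.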

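3.2 How kthreadd creates child threads

The previous section showed that kthreadd services the request list and creates child threads. Here is how the creation itself works.

To create a child, kthreadd calls create_kthread(), which in turn calls kernel_thread() to create the new thread. The new thread's entry function is kthread (again comparable to the run method of a Java thread).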

    static void create_kthread(struct kthread_create_info *create)
    {
        int pid;

    #ifdef CONFIG_NUMA
        current->pref_node_fork = create->node;
    #endif
        /* We want our own signal handler (we take no signals by default). */
        /* Create the new kernel thread; it starts executing in kthread(). */
        pid = kernel_thread(kthread, create, CLONE_FS | CLONE_FILES | SIGCHLD);
        if (pid < 0) {
            /* If user was SIGKILLed, I release the structure. */
            struct completion *done = xchg(&create->done, NULL);

            if (!done) {
                kfree(create);
                return;
            }
            create->result = ERR_PTR(pid);
            complete(done);
        }
    }

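We already met kernel_thread() in rest_init(), where the kernel used it to create the init and kthreadd processes. Here it is used again to create the new thread, and every such thread starts in the same entry function, kthread. Its body is: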

    static int kthread(void *_create)
    {
        /* Copy data: it's on kthread's stack */
        struct kthread_create_info *create = _create;
        int (*threadfn)(void *data) = create->threadfn;
        void *data = create->data;
        struct completion *done;
        struct kthread *self;
        int ret;

        self = kzalloc(sizeof(*self), GFP_KERNEL);
        set_kthread_struct(self);

        /* If user was SIGKILLed, I release the structure. */
        done = xchg(&create->done, NULL);
        if (!done) {
            kfree(create);
            do_exit(-EINTR);
        }

        if (!self) {
            create->result = ERR_PTR(-ENOMEM);
            complete(done);
            do_exit(-ENOMEM);
        }

        self->data = data;
        init_completion(&self->exited);
        init_completion(&self->parked);
        current->vfork_done = &self->exited;

        /* OK, tell user we're spawned, wait for stop or wakeup */
        __set_current_state(TASK_UNINTERRUPTIBLE);
        create->result = current;
        complete(done);
        schedule(); /* sleep until someone wakes this thread */

        ret = -EINTR;
        /* After wake-up, run threadfn only if no stop was requested. */
        if (!test_bit(KTHREAD_SHOULD_STOP, &self->flags)) {
            cgroup_kthread_ready();
            __kthread_parkme(self);
            ret = threadfn(data); /* run the caller-supplied function */
        }
        do_exit(ret);
    }

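As kthread() shows, a newly created thread goes to sleep right away (it calls schedule() to give up the CPU) and stays asleep until someone wakes it with wake_up_process(). Once woken, and provided it has not been asked to stop, it runs the function it was created for, threadfn(data).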
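This two-stage life cycle (park first, then either run threadfn or exit without running it) can be modelled in user space. The following is a rough sketch, assuming a POSIX system; it uses a child process and a pipe rather than a kernel thread and the scheduler, and the names run_parked_worker and return_value are invented for the illustration.

```c
#include <errno.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Example thread function (hypothetical): returns the value it is given. */
int return_value(void *data)
{
    return *(int *)data;
}

/*
 * Create a child that parks itself until the parent sends one byte:
 * 'W' (wake) makes it run threadfn(data); 'S' (stop) makes it exit with
 * EINTR without ever calling threadfn, loosely like a kernel thread that
 * is stopped before it was first woken.
 * Returns the child's exit code, or -1 on error.
 */
int run_parked_worker(int (*threadfn)(void *), void *data, int stop_before_wake)
{
    int wake[2];
    int status = 0;

    if (pipe(wake) < 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(wake[0]);
        close(wake[1]);
        return -1;
    }
    if (pid == 0) {
        char cmd = 'S';
        close(wake[1]);
        /* Parked: blocks here until the parent decides what happens next. */
        if (read(wake[0], &cmd, 1) != 1 || cmd != 'W')
            _exit(EINTR);
        /* Exit codes are limited to 8 bits, hence the mask. */
        _exit(threadfn(data) & 0xff);
    }

    close(wake[0]);
    char cmd = stop_before_wake ? 'S' : 'W';
    ssize_t w = write(wake[1], &cmd, 1);
    close(wake[1]);

    if (waitpid(pid, &status, 0) < 0 || w != 1 || !WIFEXITED(status))
        return -1;
    return WEXITSTATUS(status);
}
```

With an int v = 7, run_parked_worker(return_value, &v, 0) yields 7, while passing 1 as the last argument yields EINTR because the function is never called.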

3.3 kthreadd的工作流程总结

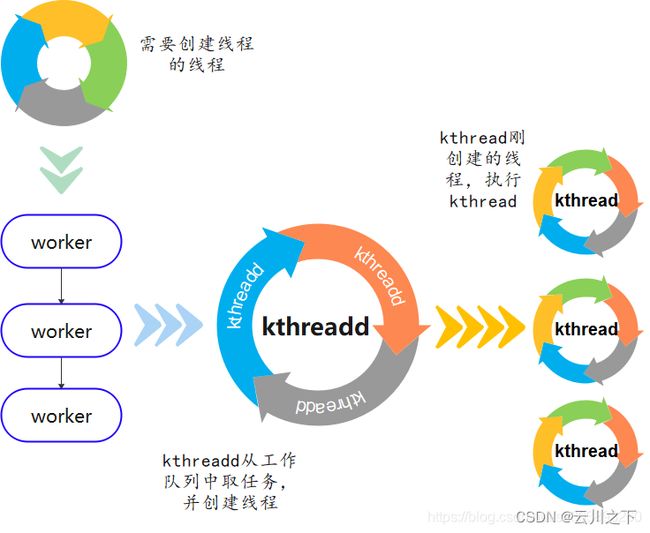

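① A thread A (the circle at the top left) calls kthread_create() to create a new thread and blocks; kthread_create() wraps the request and adds it to the request list that kthreadd watches.

② kthreadd sees the request, wakes up, and calls create_kthread(), which goes through kernel_thread() into the kernel's fork path (do_fork in older kernels, kernel_clone in recent ones) to create the thread. The new thread's entry function is kthread.

③ Once the new thread exists it starts executing kthread; kthreadd goes back to sleep and waits for the next request.

④ After kthread_create() returns, thread A wakes the new thread at a suitable time with wake_up_process(), passing the task_struct pointer that kthread_create() returned.

⑤ The new thread wakes up inside kthread, checks whether it has been asked to stop, and if not runs the caller-supplied thread function. (So the executing body changes: first the default kthread, then the caller's threadfn; the thread may also go straight to do_exit and terminate.)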

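4. The init process

For more on what init does, see "[linux] A detailed look at the init process".

The init process is created by the idle process (process 0) through kernel_thread(). It finishes initialization in kernel space, then loads the init program, and becomes the ancestor of every other user-space process in the system.

All user-space processes in Linux are started, directly or indirectly, by init. The kernel boots first, then starts init in user space, and init starts the other system processes. Once the system is up, init keeps running as a daemon that supervises the other processes.

So init is one of the programs a Linux system cannot do without. If the kernel cannot find an init program it tries to run /bin/sh; if that fails too, the kernel panics and boot fails.

4.1 How init starts

As shown earlier, process 1 is created with kernel_init as its entry function. Here is what it does: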

    static int __ref kernel_init(void *unused)
    {
        int ret;

        /* Performs, among other setup work, the initialization of the
         * various device drivers. */
        kernel_init_freeable();
        /* need to finish all async __init code before freeing the memory */
        async_synchronize_full();
        kprobe_free_init_mem();
        ftrace_free_init_mem();
        free_initmem();
        mark_readonly();

        /*
         * Kernel mappings are now finalized - update the userspace page-table
         * to finalize PTI.
         */
        pti_finalize();

        system_state = SYSTEM_RUNNING;
        numa_default_policy();

        rcu_end_inkernel_boot();

        do_sysctl_args();

        if (ramdisk_execute_command) {
            ret = run_init_process(ramdisk_execute_command);
            if (!ret)
                return 0;
            pr_err("Failed to execute %s (error %d)\n",
                   ramdisk_execute_command, ret);
        }

        /*
         * We try each of these until one succeeds.
         *
         * The Bourne shell can be used instead of init if we are
         * trying to recover a really broken machine.
         */
        if (execute_command) {
            ret = run_init_process(execute_command);
            if (!ret)
                return 0;
            panic("Requested init %s failed (error %d).",
                  execute_command, ret);
        }

        if (CONFIG_DEFAULT_INIT[0] != '\0') {
            ret = run_init_process(CONFIG_DEFAULT_INIT);
            if (ret)
                pr_err("Default init %s failed (error %d)\n",
                       CONFIG_DEFAULT_INIT, ret);
            else
                return 0;
        }

        /* Run the init program from the root filesystem; the following
         * paths are tried in order. */
        /* In the Android source tree, the init process is implemented at:
         * https://www.androidos.net.cn/android/10.0.0_r6/xref/system/core/init/init.cpp */
        if (!try_to_run_init_process("/sbin/init") ||
            !try_to_run_init_process("/etc/init") ||
            !try_to_run_init_process("/bin/init") ||
            !try_to_run_init_process("/bin/sh"))
            return 0;

        panic("No working init found.  Try passing init= option to kernel. "
              "See Linux Documentation/admin-guide/init.rst for guidance.");
    }

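- kernel_init_freeable() handles, among other things, the initialization of the various device drivers.

- The main job of kernel_init is to execute the init executable, the program that runs automatically at boot. The kernel looks for it at a fixed set of conventional paths, unless a path was given explicitly with the rdinit= or init= boot options.

At this point process 1 has been created and has successfully executed the init executable.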
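The fallback order at the end of kernel_init ("try each of these until one succeeds") can be approximated from user space. The sketch below assumes a POSIX system; first_usable_init is a made-up helper. Note one deliberate simplification: the kernel really attempts to execute each candidate, whereas this sketch only checks with access() whether the file exists and is executable.

```c
#include <stddef.h>
#include <unistd.h>

/*
 * Return the first path in the NULL-terminated list that exists and is
 * executable by the caller, or NULL if none qualifies.
 * (Hypothetical helper; access() is only an approximation of the
 * kernel's "try to exec it" check.)
 */
const char *first_usable_init(const char *const candidates[])
{
    for (size_t i = 0; candidates[i] != NULL; i++) {
        if (access(candidates[i], X_OK) == 0)
            return candidates[i];
    }
    return NULL;
}
```

Called with the list { "/sbin/init", "/etc/init", "/bin/init", "/bin/sh", NULL }, it reports which of the kernel's default candidates would be found first on the current system.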

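5. Summary

- The first process Linux starts is process 0, which is created statically.

- Once process 0 is running it creates two processes in succession: process 1 and process 2.

- Process 1 eventually executes the init executable, and init goes on to start all application processes.

- Process 2 is responsible for creating all kernel threads inside the kernel.

- So process 0 is the parent of processes 1 and 2; process 1 is the ancestor of all user-space processes; process 2 is the ancestor of all other kernel threads.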

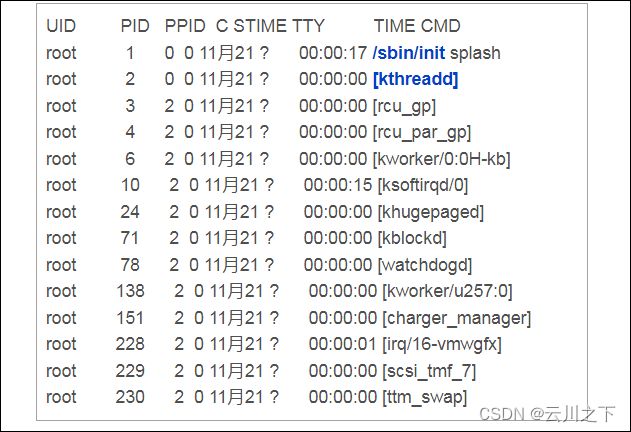

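The ps command makes this easy to observe:

    root@ubuntu:zhuxl$ ps -eF
    UID        PID  PPID  C     SZ   RSS PSR STIME TTY          TIME CMD
    root         1     0  0  56317  5936   2 Feb16 ?        00:00:04 /sbin/init
    root         2     0  0      0     0   1 Feb16 ?        00:00:00 [kthreadd]

The output shows clearly that the process with PID = 1 is init and the process with PID = 2 is kthreadd, and that both have PPID = 0, that is, process 0 is their parent.

Next, the kernel threads all have PPID = 2: their parent is the kthreadd process.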

    UID        PID  PPID  C     SZ   RSS PSR STIME TTY          TIME CMD
    root         4     2  0      0     0   0 Feb16 ?        00:00:00 [kworker/0:0H]
    root         6     2  0      0     0   0 Feb16 ?        00:00:00 [mm_percpu_wq]
    root         7     2  0      0     0   0 Feb16 ?        00:00:10 [ksoftirqd/0]
    root         8     2  0      0     0   1 Feb16 ?        00:02:11 [rcu_sched]
    root         9     2  0      0     0   0 Feb16 ?        00:00:00 [rcu_bh]
    root        10     2  0      0     0   0 Feb16 ?        00:00:00 [migration/0]
    root        11     2  0      0     0   0 Feb16 ?        00:00:00 [watchdog/0]
    root        12     2  0      0     0   0 Feb16 ?        00:00:00 [cpuhp/0]
    root        13     2  0      0     0   1 Feb16 ?        00:00:00 [cpuhp/1]
    root        14     2  0      0     0   1 Feb16 ?        00:00:00 [watchdog/1]
    root        15     2  0      0     0   1 Feb16 ?        00:00:00 [migration/1]
    root        16     2  0      0     0   1 Feb16 ?        00:00:11 [ksoftirqd/1]
    root        18     2  0      0     0   1 Feb16 ?        00:00:00 [kworker/1:0H]
    root        19     2  0      0     0   2 Feb16 ?        00:00:00 [cpuhp/2]
    root        20     2  0      0     0   2 Feb16 ?        00:00:00 [watchdog/2]
    root        21     2  0      0     0   2 Feb16 ?        00:00:00 [migration/2]
    root        22     2  0      0     0   2 Feb16 ?        00:00:11 [ksoftirqd/2]
    root        24     2  0      0     0   2 Feb16 ?        00:00:00 [kworker/2:0H]

    UID        PID  PPID  C     SZ   RSS PSR STIME TTY          TIME CMD
    root       362     1  0  21574  6136   2 Feb16 ?        00:00:03 /lib/systemd/systemd-journald
    root       375     1  0  11906  2760   3 Feb16 ?        00:00:01 /lib/systemd/systemd-udevd
    systemd+   417     1  0  17807  2116   3 Feb16 ?        00:00:02 /lib/systemd/systemd-resolved
    systemd+   420     1  0  35997   788   3 Feb16 ?        00:00:00 /lib/systemd/systemd-timesyncd
    root       487     1  0  43072  6060   0 Feb16 ?        00:00:00 /usr/bin/python3 /usr/bin/networkd-dispatcher --run-startup-triggers
    root       489     1  0   8268  2036   2 Feb16 ?        00:00:00 /usr/sbin/cron -f
    root       490     1  0   1138   548   0 Feb16 ?        00:00:01 /usr/sbin/acpid
    root       491     1  0 106816  3284   1 Feb16 ?        00:00:00 /usr/sbin/ModemManager
    root       506     1  0  27628  2132   2 Feb16 ?        00:00:00 /usr/sbin/irqbalance --foreground

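The user-space daemons listed here all have PPID = 1, so process 1 is their parent; other user-space processes descend from process 1 indirectly.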
