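Kubernetes Three-Node Installation: Complete and Working

0. Introduction

I combined other Kubernetes deployment guides with my own experience building a complete three-node Kubernetes cluster into this step-by-step deployment guide. The goal is that by following it, you can build a complete, working Kubernetes cluster yourself.

This guide skips setting up the virtual machines, because I assume you are a programmer who can handle basic problems on your own. If you cannot, your Linux and virtual machine skills probably need a little more practice. Once you have those basics, this guide should be easy to follow. (I'm not trying to scare you off!)

kubeadm is a tool from the official Kubernetes community for quickly deploying Kubernetes clusters.

It can deploy a Kubernetes cluster with just two commands: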

# Create a Master node

$ kubeadm init

# Join a Node to the current cluster

$ kubeadm join

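1. Installation Requirements

Before you start, the machines for the Kubernetes cluster must meet the following conditions:

- One or more machines running CentOS 7.x x86_64

- Hardware: 2 GB RAM or more, 2 CPUs or more, 30 GB of disk or more

- Full network connectivity between all machines in the cluster

- Internet access, because images must be pulled

- Swap disabled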

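2. Learning Objectives

- Install Docker and kubeadm on all nodes

- Deploy the Kubernetes Master

- Deploy a container network plugin

- Deploy the Kubernetes Nodes and join them to the cluster

- Deploy the Dashboard web UI to view Kubernetes resources visually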

3. Prepare the Environment


| Role | IP |
|---|---|
| k8s-master | 192.168.59.156 |
| k8s-node1 | 192.168.59.157 |
| k8s-node2 | 192.168.59.158 |

0. Check the Linux kernel version

# Docker and Kubernetes are not stable on the 3.10 kernel; upgrading to 4.4 is recommended

uname -r

### 1. Disable the firewall on every node:

$ systemctl stop firewalld

# Prevent the firewall from starting at boot

$ systemctl disable firewalld

### 2.1 Check the SELinux status

$ /usr/sbin/sestatus -v

[root@dev-server ~]# getenforce

Disabled

### 2.2 Disable SELinux on every node (takes effect after a reboot):

$ sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

or

[root@localhost ~]# sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config && setenforce 0
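The first sed above also replaces the word "enforcing" inside the explanatory comments of the stock /etc/selinux/config, and the second one only matches when the current mode is exactly enforcing. A slightly more targeted sketch rewrites just the SELINUX= key, whatever its current value. disable_selinux_config is a hypothetical helper name. The config path is a parameter only so that you can try it on a copy first.

```shell
#!/usr/bin/env bash
# disable_selinux_config [CONFIG]  (hypothetical helper, not part of any tool)
# Sets only the SELINUX= key to "disabled"; comment lines and SELINUXTYPE= are untouched.
# The change applies after a reboot; use "setenforce 0" for the running system.
disable_selinux_config() {
  local conf="${1:-/etc/selinux/config}"
  if grep -q '^SELINUX=' "$conf"; then
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
  else
    echo 'SELINUX=disabled' >> "$conf"
  fi
  # Print the resulting line so the caller can see the new setting
  grep '^SELINUX=' "$conf"
}
```

Run it as root with no argument. It should print SELINUX=disabled.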

### 2.3 Disable SELinux temporarily (until the next reboot)

[root@localhost ~]# setenforce 0

### 2.4 After rebooting, confirm that SELinux is disabled

[root@k8s-master ~]# /usr/sbin/sestatus -v

SELinux status: disabled

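### 3. Disable swap on every node:

$ swapoff -a # temporary

$ sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent

This comments out the following line in /etc/fstab (you can also do it by hand with vim): /dev/mapper/centos-swap swap swap defaults 0 0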
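The pattern .*swap.* comments out every line that mentions swap, including lines that are already comments. The following minimal sketch touches only active entries that contain a whitespace-delimited swap field, so re-running it is harmless. comment_out_swap is a hypothetical name, and swapoff -a is still needed for the running system.

```shell
#!/usr/bin/env bash
# comment_out_swap [FSTAB]  (hypothetical helper)
# Prefixes "#" to non-comment fstab lines containing a whitespace-delimited "swap"
# field. Lines that already start with "#" are skipped, so re-running is safe.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -ri '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Afterwards, free -m should report a total of 0 on its Swap line.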

### 4. Set the hostname on each node:

$ hostnamectl set-hostname <hostname>

# master node:

hostnamectl set-hostname k8s-master

# node1:

hostnamectl set-hostname k8s-node1

# node2:

hostnamectl set-hostname k8s-node2

### 4.1 Check the hostname

[root@localhost ~]# hostname

k8s-master

### 5. Add hosts entries on the master (adjust the IPs and names to match your own VMs):

$ cat >> /etc/hosts << EOF

192.168.59.156 k8s-master

192.168.59.157 k8s-node1

192.168.59.158 k8s-node2

EOF
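Appending with cat >> adds duplicate lines every time you repeat this step. The following small idempotent variant adds an entry only when the hostname is not mapped yet. It assumes one hostname per entry is enough. add_host_entry is a hypothetical helper, and the hosts file path is a parameter only for testing.

```shell
#!/usr/bin/env bash
# add_host_entry IP NAME [HOSTS_FILE]  (hypothetical helper)
# Appends "IP NAME" unless a non-comment line already maps some address to NAME,
# so running the setup step twice does not create duplicate entries.
add_host_entry() {
  local ip="$1" name="$2" hosts_file="${3:-/etc/hosts}"
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example (as root):
#   add_host_entry 192.168.59.156 k8s-master
#   add_host_entry 192.168.59.157 k8s-node1
#   add_host_entry 192.168.59.158 k8s-node2
```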

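### 6. Configure time synchronization on the master:

#6.1 Install chrony:

$ yum install -y chrony

# Alternative (帅超's method): a one-off sync with ntpdate instead of chrony
# $ yum install -y ntpdate && ntpdate time.windows.com

#6.2 Comment out the default NTP servers

sed -i 's/^server/#&/' /etc/chrony.conf

#6.3 Point to public upstream NTP servers and allow the other nodes to sync time from this host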

cat >> /etc/chrony.conf << EOF

server 0.asia.pool.ntp.org iburst

server 1.asia.pool.ntp.org iburst

server 2.asia.pool.ntp.org iburst

server 3.asia.pool.ntp.org iburst

allow all

EOF

#6.4 Restart chronyd and enable it at boot:

systemctl enable chronyd && systemctl restart chronyd

#6.5 Enable network time synchronization

timedatectl set-ntp true

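### 7. Configure time synchronization on the worker nodes:

#7.1 Install chrony:

yum install -y chrony

#7.2 Comment out the default servers

sed -i 's/^server/#&/' /etc/chrony.conf

#7.3 Use the master node on the internal network as the upstream NTP server

echo server 192.168.59.156 iburst >> /etc/chrony.conf

#7.4 Restart the service and enable it at boot:

systemctl enable chronyd && systemctl restart chronyd

#7.5 Check the configuration

Run chronyc sources on every node. A line beginning with ^* means the node is synchronized with that server.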
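Steps 6.2 to 6.3 and 7.2 to 7.3 follow the same pattern: comment out the distribution's default server lines, then append your own. If you run them twice, the appended servers are duplicated. The sketch below assumes the stock /etc/chrony.conf layout and wraps the appended lines in marker comments, so a re-run replaces them instead of duplicating them. configure_chrony and the BEGIN/END markers are hypothetical conventions, not chrony features.

```shell
#!/usr/bin/env bash
# configure_chrony CONF LINE...  (hypothetical helper)
# Comments out the default "server ..." lines and appends LINE... inside a marked
# block. The block from a previous run is removed first, so re-running is safe.
configure_chrony() {
  local conf="$1"
  shift
  # Drop the block written by an earlier run (the markers are our own convention)
  sed -i '/^# BEGIN k8s-chrony$/,/^# END k8s-chrony$/d' "$conf"
  # Comment out the remaining active server lines, as in steps 6.2 / 7.2
  sed -i 's/^server/#&/' "$conf"
  {
    echo '# BEGIN k8s-chrony'
    printf '%s\n' "$@"
    echo '# END k8s-chrony'
  } >> "$conf"
}

# Example on the master:
#   configure_chrony /etc/chrony.conf "server 0.asia.pool.ntp.org iburst" \
#     "server 1.asia.pool.ntp.org iburst" "allow all"
# Example on a worker node:
#   configure_chrony /etc/chrony.conf "server 192.168.59.156 iburst"
# Then: systemctl enable chronyd && systemctl restart chronyd
```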

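### 8. Pass bridged IPv4 traffic to the iptables chains (all nodes):

# Some users on RHEL / CentOS 7 have reported incorrectly routed traffic because iptables was bypassed. Create /etc/sysctl.d/k8s.conf with the following content:

### 8.1 Configuration

cat > /etc/sysctl.d/k8s.conf << EOF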

vm.swappiness = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# 8.2 Apply the configuration (load br_netfilter first; otherwise the net.bridge keys do not exist)

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf
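sysctl -p only reports what it wrote. To confirm that the values are actually live, you can read them back from /proc/sys, where each dot in a key becomes a directory separator. The net.bridge entries only appear once br_netfilter is loaded, which is why modprobe comes first. The following is a minimal check. check_k8s_sysctl is a hypothetical name, and the /proc/sys root is a parameter so that the check can be exercised on a fake tree.

```shell
#!/usr/bin/env bash
# check_k8s_sysctl [PROC_SYS_ROOT]  (hypothetical helper)
# Reads each expected kernel parameter back from /proc/sys and reports
# OK / WRONG / MISSING. Returns non-zero if anything is off.
# net.bridge.* keys only exist after "modprobe br_netfilter".
check_k8s_sysctl() {
  local root="${1:-/proc/sys}" pair key want path value failed=0
  for pair in net.bridge.bridge-nf-call-iptables=1 \
              net.bridge.bridge-nf-call-ip6tables=1 \
              net.ipv4.ip_forward=1 \
              vm.swappiness=0; do
    key="${pair%%=*}"
    want="${pair#*=}"
    # e.g. net.ipv4.ip_forward -> <root>/net/ipv4/ip_forward
    path="$root/$(printf '%s' "$key" | tr '.' '/')"
    if [ ! -r "$path" ]; then
      echo "MISSING $key"
      failed=1
      continue
    fi
    value="$(cat "$path")"
    if [ "$value" = "$want" ]; then
      echo "OK      $key = $value"
    else
      echo "WRONG   $key = $value (expected $want)"
      failed=1
    fi
  done
  return "$failed"
}
```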

### Alternative (帅超's method)

$ cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

$ sysctl --system # apply

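### 9. Load the IPVS kernel modules

IPVS has been merged into the mainline kernel, so enabling IPVS mode for kube-proxy only requires loading the following kernel modules.

Run the following script on all Kubernetes nodes:

### 9.1 Configuration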

cat > /etc/sysconfig/modules/ipvs.modules << EOF

4. Install Docker, kubeadm (the cluster bootstrap tool) and kubelet (container management) on All Nodes

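Kubernetes uses Docker as its default CRI (container runtime), so install Docker first.

4.1 Install Docker

Docker is still the default container runtime for Kubernetes, used through the dockershim CRI implementation built into kubelet. Note that Kubernetes 1.13 supports Docker 1.11.1 at minimum and 18.06 at most, while the latest Docker release is already 18.09, so we pin the version to 18.06.1-ce.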

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

$ yum -y install docker-ce-18.06.1.ce-3.el7

$ systemctl enable docker && systemctl start docker

$ docker --version

Docker version 18.06.1-ce, build e68fc7a

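Configure a registry mirror, then restart Docker so that the change takes effect:

$ cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF

$ systemctl daemon-reload && systemctl restart docker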
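In the kubeadm init output later in this guide, kubeadm warns that Docker uses the cgroupfs cgroup driver while systemd is recommended. If you want to follow that recommendation, one option (sketched here) is to set Docker's native.cgroupdriver exec option in the same daemon.json. Do this on every node before kubeadm init / kubeadm join, so that Docker and kubelet agree on the driver from the start. write_docker_daemon_json is a hypothetical helper, and the mirror URL is the one used above.

```shell
#!/usr/bin/env bash
# write_docker_daemon_json [PATH]  (hypothetical helper)
# Writes daemon.json with the registry mirror used above plus the systemd
# cgroup driver recommended by the kubeadm preflight warning.
write_docker_daemon_json() {
  local path="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$path")"
  cat > "$path" << 'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF
}

# Example (as root, on every node, before kubeadm init / join):
#   write_docker_daemon_json
#   systemctl daemon-reload && systemctl restart docker
#   docker info --format '{{.CgroupDriver}}'
```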

4.2 Add the Aliyun YUM repository for Kubernetes

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

4.3 Install kubeadm, kubelet and kubectl on all nodes

New versions are released frequently, so we pin the version number here:

$ yum install -y kubelet-1.17.0 kubeadm-1.17.0 kubectl-1.17.0

$ systemctl enable kubelet && systemctl start kubelet

5. Deploy the Kubernetes Master (run on the master only)

5.1 Run on 192.168.59.156 (the Master).

The default image registry, k8s.gcr.io, is not reachable from mainland China, so we point kubeadm at the Aliyun mirror instead.

$ kubeadm init \
  --apiserver-advertise-address=192.168.59.156 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.17.0 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16
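You can also keep these settings in a kubeadm configuration file, which is easier to review and reuse than a long command line. The sketch below assumes the kubeadm.k8s.io/v1beta2 config API used by kubeadm 1.17. write_kubeadm_config is a hypothetical helper. podSubnet must match the network configured in the flannel manifest deployed later (10.244.0.0/16 by default).

```shell
#!/usr/bin/env bash
# write_kubeadm_config [PATH] [ADVERTISE_IP]  (hypothetical helper)
# Expresses the kubeadm init flags above as a kubeadm v1beta2 config file.
write_kubeadm_config() {
  local path="${1:-kubeadm-config.yaml}" ip="${2:-192.168.59.156}"
  cat > "$path" << EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${ip}
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.17.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}

# Example:
#   write_kubeadm_config
#   kubeadm config images pull --config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```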

Output:

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=192.168.59.156 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.17.0 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16

W0722 16:22:34.803927 2478 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0722 16:22:34.803970 2478 validation.go:28] Cannot validate kubelet config - no validator is available

[init] Using Kubernetes version: v1.17.0

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.59.156]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.59.156 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.59.156 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0722 16:30:42.530964 2478 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0722 16:30:42.535021 2478 manifests.go:214] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 19.558619 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.17" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 2icetc.ygcqn4j2q3lx9vw3

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.59.156:6443 --token 2icetc.ygcqn4j2q3lx9vw3 \

--discovery-token-ca-cert-hash sha256:7a437683182ff063eb7250ed9d12400447f6e191cf07f05dba0b76ce433ef7e8

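(Be sure to save the kubeadm join command from the initialization output. You will need it when you deploy the worker nodes.)

What the initialization steps do:

- [preflight] kubeadm runs its pre-initialization checks.

- [kubelet-start] generates the kubelet configuration file "/var/lib/kubelet/config.yaml".

- [certs] generates the various certificates and keys.

- [kubeconfig] generates the kubeconfig files; kubelet needs them to communicate with the Master.

- [control-plane] installs the Master components, pulling their Docker images from the specified registry.

- [bootstrap-token] generates a token. Record it, because kubeadm join needs it later to add nodes to the cluster.

- [addons] installs the kube-proxy and CoreDNS add-ons.

The output then confirms that the Kubernetes Master was initialized successfully. It also explains how to configure kubectl access for a regular user, how to install a Pod network, and how to register other nodes with the cluster.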

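5.2 Configure kubectl

kubectl is the command-line tool for managing a Kubernetes cluster, and we already installed it on every node. Once the Master is initialized, kubectl needs a little configuration before you can use it.

Following the hint at the end of the kubeadm init output, we recommend running kubectl as a regular Linux user.

Create a regular user named centos (optional: you can skip this and run kubectl as root):

# Create the regular user and set its password to 123456
useradd centos && echo "centos:123456" | chpasswd

# Grant sudo rights without a password prompt
sed -i '/^root/a\centos ALL=(ALL) NOPASSWD:ALL' /etc/sudoers

# Copy the cluster's admin kubeconfig into the user's .kube directory
su - centos
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Enable kubectl shell completion (takes effect after you log out and back in)
echo "source <(kubectl completion bash)" >> ~/.bashrc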

5.3 Using kubectl:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# 5.3.1 Check the node status: so far there is only one node, the master, and its status is NotReady.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady master 69m v1.17.0

# 5.3.2 Check the cluster status: confirm that every component is Healthy.

[centos@k8s-master ~]$ kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

# 5.3.3 Use kubectl describe to view the details, status and Conditions of this Node object

$ kubectl describe node k8s-master

Conditions:

Type Status LastHeartbeatTime LastTransitionTime Reason Message

---- ------ ----------------- ------------------ ------ -------

MemoryPressure False Wed, 22 Jul 2020 17:41:25 +0800 Wed, 22 Jul 2020 16:30:50 +0800 KubeletHasSufficientMemory kubelet has sufficient memory available

DiskPressure False Wed, 22 Jul 2020 17:41:25 +0800 Wed, 22 Jul 2020 16:30:50 +0800 KubeletHasNoDiskPressure kubelet has no disk pressure

PIDPressure False Wed, 22 Jul 2020 17:41:25 +0800 Wed, 22 Jul 2020 16:30:50 +0800 KubeletHasSufficientPID kubelet has sufficient PID available

Ready False Wed, 22 Jul 2020 17:41:25 +0800 Wed, 22 Jul 2020 16:30:50 +0800 KubeletNotReady runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

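The kubectl describe output shows that the node is NotReady because no network plugin has been deployed yet (cni config uninitialized).

We can also use kubectl to check the status of each system Pod on this node. kube-system is the namespace that Kubernetes reserves for system Pods. (A Kubernetes Namespace is not a Linux namespace; it is simply the unit Kubernetes uses to divide workspaces.)

# 5.3.4 Check the status of the system Pods on this node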

[root@k8s-master ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-9d85f5447-8lr7g 0/1 Pending 0 78m

coredns-9d85f5447-9d7t6 0/1 Pending 0 78m

etcd-k8s-master 1/1 Running 0 79m 192.168.59.156 k8s-master

kube-apiserver-k8s-master 1/1 Running 0 79m 192.168.59.156 k8s-master

kube-controller-manager-k8s-master 1/1 Running 0 79m 192.168.59.156 k8s-master

kube-proxy-lxdxs 1/1 Running 0 78m 192.168.59.156 k8s-master

kube-scheduler-k8s-master 1/1 Running 0 79m 192.168.59.156 k8s-master

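As you can see, the CoreDNS Pods, which depend on the network, are all Pending, meaning they could not be scheduled. This is expected, because the Master node's network is not ready yet.

If cluster initialization runs into problems, clean up with kubeadm reset and then run the initialization again.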

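6. Deploy the Network Plugin

For a Kubernetes cluster to work, you must install a Pod network; otherwise Pods cannot communicate with each other.

Kubernetes supports many networking solutions; here we use flannel.

Run the following command to deploy flannel:

6.1 Install the Pod network plugin (CNI) on the master node; nodes that join later get it automatically

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If the download fails with:

The connection to the server raw.githubusercontent.com was refused - did you specify the right host or port?

open the link in a browser on the host machine, download the file, and copy it to the VM.

Then apply the file from the current directory: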

[root@k8s-master ~]# ll

total 20

-rw-------. 1 root root 1257 Jul 22 2020 anaconda-ks.cfg

-rw-r--r-- 1 root root 15014 Jul 22 17:58 kube-flannel.yaml

[root@k8s-master ~]# kubectl apply -f kube-flannel.yaml

Make sure the quay.io registry is reachable.

If pulling the Pod image fails, you can switch to this image instead: lizhenliang/flannel:v0.11.0-amd64
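To switch the downloaded manifest to that image, you can rewrite its image fields with sed. The sketch assumes the manifest references images of the form quay.io/coreos/flannel:vX.Y.Z-amd64, and it rewrites only the amd64 ones. The manifest's own version may differ from v0.11.0, so check that the image and manifest versions are compatible. use_flannel_mirror is a hypothetical helper.

```shell
#!/usr/bin/env bash
# use_flannel_mirror MANIFEST [IMAGE]  (hypothetical helper)
# Rewrites quay.io/coreos/flannel:vX.Y.Z-amd64 image references in a downloaded
# flannel manifest to a mirror image; images for other architectures are left alone.
use_flannel_mirror() {
  local manifest="$1" image="${2:-lizhenliang/flannel:v0.11.0-amd64}"
  sed -E -i "s#quay\.io/coreos/flannel:v[0-9.]+-amd64#${image}#g" "$manifest"
  # Show the resulting image lines for a quick visual check
  grep 'image:' "$manifest"
}

# Example:
#   use_flannel_mirror kube-flannel.yaml && kubectl apply -f kube-flannel.yaml
```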

# After the deployment finishes, check the Pod status again with kubectl get:

[root@k8s-master ~]# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-9d85f5447-8lr7g 1/1 Running 0 91m 10.244.0.3 k8s-master

coredns-9d85f5447-9d7t6 1/1 Running 0 91m 10.244.0.2 k8s-master

etcd-k8s-master 1/1 Running 0 92m 192.168.59.156 k8s-master

kube-apiserver-k8s-master 1/1 Running 0 92m 192.168.59.156 k8s-master

kube-controller-manager-k8s-master 1/1 Running 0 92m 192.168.59.156 k8s-master

kube-flannel-ds-amd64-5hp2j 1/1 Running 0 4m2s 192.168.59.156 k8s-master

kube-proxy-lxdxs 1/1 Running 0 91m 192.168.59.156 k8s-master

kube-scheduler-k8s-master 1/1 Running 0 92m 192.168.59.156 k8s-master

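All the system Pods have now started. The flannel plugin we just deployed has also created a Pod named kube-flannel-ds-amd64-5hp2j in kube-system. Pods like this are generally the container network plugin's control component on each node.

Kubernetes supports container network plugins through a common interface called CNI, which is today's de facto standard for container networking. The mainstream open-source container networking projects, such as Flannel, Calico, Canal and Romana, all plug into Kubernetes through CNI, and they are all deployed in a similar "one-command" way.

6.2 Check the master node again: its status is now Ready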

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 99m v1.17.0

The Kubernetes Master node is now deployed. If you only need a single-node Kubernetes, you can start using it now. Note, however, that by default the Kubernetes Master node does not run user Pods.
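The reason is the NoSchedule taint that kubeadm places on the master (see the [mark-control-plane] line in the init output). For a single-node or lab cluster, the following is a minimal sketch for removing it. allow_pods_on_master is a hypothetical wrapper around kubectl taint; the trailing "-" on the taint argument is what tells kubectl to remove the taint.

```shell
#!/usr/bin/env bash
# allow_pods_on_master [NODE]  (hypothetical helper)
# Removes the node-role.kubernetes.io/master:NoSchedule taint that kubeadm added
# (the trailing "-" means "remove"), so ordinary Pods can be scheduled on NODE.
# Meant for single-node or lab clusters only.
allow_pods_on_master() {
  local node="${1:-k8s-master}"
  kubectl taint nodes "$node" node-role.kubernetes.io/master:NoSchedule-
}

# Example, then check that the Taints field is now <none>:
#   allow_pods_on_master k8s-master
#   kubectl describe node k8s-master | grep Taints
```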

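7. Deploy the Worker Nodes

A Kubernetes Worker node is almost identical to the Master node: both run the kubelet component. The only difference is that during kubeadm init, once kubelet has started, the Master node also automatically runs the kube-apiserver, kube-scheduler and kube-controller-manager system Pods (plus etcd in this setup).

Run the following command on k8s-node1 and k8s-node2 to register them with the cluster:

7.1 Join the Kubernetes Nodes

Run on 192.168.59.157/158 (the Nodes).

To add a new node to the cluster, run the kubeadm join command printed by kubeadm init:

$ kubeadm join 192.168.59.156:6443 --token 2icetc.ygcqn4j2q3lx9vw3 \
  --discovery-token-ca-cert-hash sha256:7a437683182ff063eb7250ed9d12400447f6e191cf07f05dba0b76ce433ef7e8

# If you did not save the join command when running kubeadm init, or the token has expired (tokens are valid for 24 hours by default), generate a new one with: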

kubeadm token create --print-join-command
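kubeadm token create --print-join-command is the simplest route. Sometimes you have a token (for example from kubeadm token list) but still need the --discovery-token-ca-cert-hash value. That value is the SHA-256 of the CA certificate's public key in DER form. The pipeline below follows the one given in the kubeadm documentation, wrapped in a hypothetical ca_cert_hash function. It assumes the default RSA CA at /etc/kubernetes/pki/ca.crt.

```shell
#!/usr/bin/env bash
# ca_cert_hash [CA_CERT]  (hypothetical wrapper around the openssl pipeline)
# Prints "sha256:<hex>" for use with --discovery-token-ca-cert-hash.
# Assumes an RSA CA key, which is what kubeadm generates by default.
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}" hex
  hex="$(openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')"
  echo "sha256:${hex}"
}

# Example on the master:
#   kubeadm token list        # pick a valid token
#   kubeadm join 192.168.59.156:6443 --token <token> \
#     --discovery-token-ca-cert-hash "$(ca_cert_hash)"
```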

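7.2 Check the cluster status

After running join on both nodes, check the node status on the Master again. Here node1 is already Ready but node2 is not. Each node has to start several components. If a node is NotReady, check the Pods on all nodes and make sure every Pod has pulled its image and is Running: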

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 112m v1.17.0

k8s-node1 Ready 3m49s v1.17.0

k8s-node2 NotReady 2m31s v1.17.0

# Check the status of all Pods

[root@k8s-master ~]# kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-9d85f5447-8lr7g 1/1 Running 0 114m 10.244.0.3 k8s-master

kube-system coredns-9d85f5447-9d7t6 1/1 Running 0 114m 10.244.0.2 k8s-master

kube-system etcd-k8s-master 1/1 Running 0 114m 192.168.59.156 k8s-master

kube-system kube-apiserver-k8s-master 1/1 Running 0 114m 192.168.59.156 k8s-master

kube-system kube-controller-manager-k8s-master 1/1 Running 0 114m 192.168.59.156 k8s-master

kube-system kube-flannel-ds-amd64-5hp2j 1/1 Running 0 26m 192.168.59.156 k8s-master

kube-system kube-flannel-ds-amd64-gkfxl 1/1 Running 0 4m27s 192.168.59.158 k8s-node2

kube-system kube-flannel-ds-amd64-gsr5n 0/1 CrashLoopBackOff 4 5m45s 192.168.59.157 k8s-node1

kube-system kube-proxy-jfq6d 1/1 Running 0 5m45s 192.168.59.157 k8s-node1

kube-system kube-proxy-lxdxs 1/1 Running 0 114m 192.168.59.156 k8s-master

kube-system kube-proxy-pjpqq 1/1 Running 0 4m27s 192.168.59.158 k8s-node2

kube-system kube-scheduler-k8s-master 1/1 Running 0 114m 192.168.59.156 k8s-master

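Once all the nodes are Ready, the Kubernetes cluster has been created successfully and everything is ready.

A Pod in the Pending, ContainerCreating or ImagePullBackOff state is not ready yet; only Running means it is ready.

If a Pod shows Init:ImagePullBackOff, its image could not be pulled on that node. Run kubectl describe pod <pod-name> -n <namespace> to inspect the Pod and find out which image failed to pull.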
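When many Pods are listed, a quick filter helps you spot the ones that are not ready. This sketch reads the output of kubectl get pod --all-namespaces --no-headers. It prints every Pod whose STATUS is not Running or whose READY count is incomplete (such as 0/1). not_ready_pods is a hypothetical name. Pods of finished Jobs (status Completed) will also be listed.

```shell
#!/usr/bin/env bash
# not_ready_pods  (hypothetical filter)
# Reads "kubectl get pod --all-namespaces --no-headers" output on stdin
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE ...) and prints
# namespace/name, READY and STATUS for Pods that are not Running or not fully ready.
not_ready_pods() {
  awk '{
    split($3, ready, "/")
    if ($4 != "Running" || ready[1] != ready[2])
      print $1 "/" $2 " " $3 " " $4
  }'
}

# Example:
#   kubectl get pod --all-namespaces --no-headers | not_ready_pods
```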

7.3 A healthy cluster

[root@k8s-master ~]# kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-9d85f5447-8lr7g 1/1 Running 0 117m 10.244.0.3 k8s-master

kube-system coredns-9d85f5447-9d7t6 1/1 Running 0 117m 10.244.0.2 k8s-master

kube-system etcd-k8s-master 1/1 Running 0 117m 192.168.59.156 k8s-master

kube-system kube-apiserver-k8s-master 1/1 Running 0 117m 192.168.59.156 k8s-master

kube-system kube-controller-manager-k8s-master 1/1 Running 0 117m 192.168.59.156 k8s-master

kube-system kube-flannel-ds-amd64-5hp2j 1/1 Running 0 29m 192.168.59.156 k8s-master

kube-system kube-flannel-ds-amd64-gkfxl 1/1 Running 0 7m10s 192.168.59.158 k8s-node2

kube-system kube-flannel-ds-amd64-gsr5n 1/1 Running 5 8m28s 192.168.59.157 k8s-node1

kube-system kube-proxy-jfq6d 1/1 Running 0 8m28s 192.168.59.157 k8s-node1

kube-system kube-proxy-lxdxs 1/1 Running 0 117m 192.168.59.156 k8s-master

kube-system kube-proxy-pjpqq 1/1 Running 0 7m10s 192.168.59.158 k8s-node2

kube-system kube-scheduler-k8s-master 1/1 Running 0 117m 192.168.59.156 k8s-master

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready master 117m v1.17.0

k8s-node1 Ready 8m37s v1.17.0

k8s-node2 Ready 7m19s v1.17.0

7.4 Other operations

7.4.1 See which images the master node has pulled

[root@k8s-master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.17.0 7d54289267dc 7 months ago 116MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.17.0 0cae8d5cc64c 7 months ago 171MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.17.0 5eb3b7486872 7 months ago 161MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.17.0 78c190f736b1 7 months ago 94.4MB

registry.aliyuncs.com/google_containers/coredns 1.6.5 70f311871ae1 8 months ago 41.6MB

registry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db0e90 9 months ago 288MB

lizhenliang/flannel v0.11.0-amd64 ff281650a721 18 months ago 52.6MB

registry.aliyuncs.com/google_containers/pause 3.1 da86e6ba6ca1 2 years ago 742kB

7.4.2 See which images the worker nodes have pulled

[root@k8s-node1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.17.0 7d54289267dc 7 months ago 116MB

lizhenliang/flannel v0.11.0-amd64 ff281650a721 18 months ago 52.6MB

registry.aliyuncs.com/google_containers/pause 3.1 da86e6ba6ca1 2 years ago 742kB

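8. Test the Kubernetes Cluster

First, verify that kube-apiserver, kube-controller-manager, kube-scheduler and the Pod network work correctly.

Deploy an Nginx Deployment with 2 Pods.

Reference: https://kubernetes.io/docs/concepts/workloads/controllers/deployment/

Create the Deployment in the Kubernetes cluster and verify that its Pods run correctly: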

[root@k8s-master ~]# kubectl create deployment nginx --image=nginx:alpine

deployment.apps/nginx created

[root@k8s-master ~]# kubectl scale deployment nginx --replicas=2

deployment.apps/nginx scaled

# Verify that the Nginx Pods are running and have been assigned cluster IPs starting with 10.244.

[root@k8s-master ~]# kubectl get pods -l app=nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-5b6fb6dd96-2s5gn 1/1 Running 0 100s 10.244.1.2 k8s-node1

nginx-5b6fb6dd96-n8kpf 1/1 Running 0 88s 10.244.2.2 k8s-node2

# Next, verify kube-proxy by exposing the service as a NodePort

[root@k8s-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@k8s-master ~]# kubectl get services nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx NodePort 10.96.112.44 80:30967/TCP 10s

# The service can be reached from outside the cluster at any NodeIP:Port, and each address returns the nginx welcome page:

[root@k8s-master ~]# curl 192.168.59.156:30967

[root@k8s-master ~]# curl 192.168.59.157:30967

[root@k8s-master ~]# curl 192.168.59.158:30967

# The same URLs also work from a browser:

http://192.168.59.156:30967/

http://192.168.59.157:30967/

http://192.168.59.158:30967/
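Checking every node by hand gets tedious. The following small loop requests the NodePort on each node IP and reports the HTTP status. check_nodeport is hypothetical. The port (30967 in this run) is assigned randomly, so look it up with kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'.

```shell
#!/usr/bin/env bash
# check_nodeport PORT IP...  (hypothetical helper)
# Requests http://IP:PORT/ on every node and prints OK or FAIL with the HTTP code.
# curl reports 000 as the code when the connection itself fails.
check_nodeport() {
  local port="$1" ip code rc=0
  shift
  for ip in "$@"; do
    code="$(curl -s -o /dev/null -m 5 -w '%{http_code}' "http://${ip}:${port}/")"
    if [ "$code" = "200" ]; then
      echo "OK   ${ip}:${port}"
    else
      echo "FAIL ${ip}:${port} (HTTP ${code})"
      rc=1
    fi
  done
  return "$rc"
}

# Example:
#   port="$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')"
#   check_nodeport "$port" 192.168.59.156 192.168.59.157 192.168.59.158
```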

$ kubectl get pod,svc

Finally, verify that DNS and the Pod network work:

Run busybox and open an interactive shell

[root@k8s-master ~]# kubectl run -it curl --image=radial/busyboxplus:curl

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

If you don't see a command prompt, try pressing enter.

# Run nslookup nginx to check that the name resolves to a cluster IP, which verifies DNS

[ root@curl-69c656fd45-t8jth:/ ]$ nslookup nginx

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: nginx

Address 1: 10.96.112.44 nginx.default.svc.cluster.local

# Access the service by name to verify kube-proxy

[ root@curl-69c656fd45-t8jth:/ ]$ curl http://nginx/

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.

[ root@curl-69c656fd45-t8jth:/ ]$

9. Deploy the Dashboard

9.1 Download the YAML manifest for the Kubernetes Dashboard:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml

Rename it:

mv recommended.yaml kubernetes-dashboard.yaml

9.2 By default the Dashboard is only reachable from inside the cluster. Change its Service to type NodePort to expose a port externally:

[root@k8s-master ~]# vi kubernetes-dashboard.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort          # added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001     # added
  selector:
    k8s-app: kubernetes-dashboard

9.3 Create a service account and bind it to the default cluster-admin ClusterRole:

[root@k8s-master ~]# ll
total 28
-rw-------. 1 root root 1257 Jul 22 18:23 anaconda-ks.cfg
-rw-r--r-- 1 root root 15014 Jul 22 17:58 kube-flannel.yaml
-rw-r--r-- 1 root root 7868 Jul 22 19:10 kubernetes-dashboard.yaml

[root@k8s-master ~]# vi kubernetes-dashboard.yaml

[root@k8s-master ~]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@k8s-master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

[root@k8s-master ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-jcm9j

Namespace: kube-system

Labels:

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: 460f14af-d4e0-435d-b156-b15c87909835

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjU0M3FhWTlfRTFkdmZ6dk5acHJXTGZya1hNZ3podTU5NDN0SGM4WExiN00ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tamNtOWoiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNDYwZjE0YWYtZDRlMC00MzVkLWIxNTYtYjE1Yzg3OTA5ODM1Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.pAOjjiSOdf-mcIsm-0GyfNZYn0UvS_6qMejN2i2BMEkxXL1jgaTwdKYzTWkBWqNk7Qen6kjwnOnqEyGbl2ZCPHVBXmfJQ5MrwkaJl6XnoY9NZ5AvAcI4DffuzamNySkPADeWg6pBXcWQpjMbMlZglAAyXSmztGMPaQ_pq9W06s8OZdeSe4OW8Tm-oIrdAkQ0P0Q5nxU0tVcV9ib3R2JRYDPofsQlENkwfXJz6jY4J0txest4bMGwpZsRn2Q1p8HoqYqAh_pJqVqAKPdl7rEBBYIncucv-T7XfJMtvS0YO-8ySRHSXrlGZWFSnszDUEKBOmj3hguYrkglfTCGP_GtLA

ca.crt: 1025 bytes

namespace: 11 bytes

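Save this token somewhere, because you will need it to log in to the Dashboard below. If you forget to record it, rerun the kubectl describe secrets command above to print it again.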
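To print only the token, without the rest of the describe output, follow the service account to its secret and base64-decode the token field. This sketch relies on Kubernetes automatically creating a token secret for every service account, as the 1.17 release used in this guide does; newer releases (1.24 and later) no longer do this. dashboard_token is a hypothetical helper.

```shell
#!/usr/bin/env bash
# dashboard_token [NAMESPACE] [SERVICE_ACCOUNT]  (hypothetical helper)
# Looks up the token secret attached to the service account and prints the
# decoded bearer token. Relies on auto-created token secrets (Kubernetes < 1.24).
dashboard_token() {
  local ns="${1:-kube-system}" sa="${2:-dashboard-admin}" secret
  secret="$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}')"
  # Secret data is base64-encoded; decode it to get the usable token
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  echo
}

# Example:
#   dashboard_token kube-system dashboard-admin
```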

9.4 Apply the manifest to start the service:


$ kubectl apply -f kubernetes-dashboard.yaml

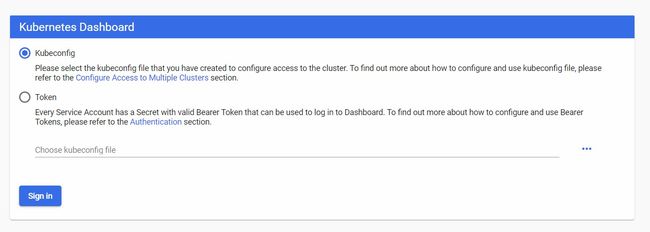

9.5 Check that the Dashboard Pods and Service are up, then log in to the Dashboard with the token printed above:

kubectl get pods -n kubernetes-dashboard

kubectl get svc -n kubernetes-dashboard

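10. Fixing Chrome Being Unable to Open the Dashboard

10.1 Problem description

After the Kubernetes Dashboard is installed, it opens in Firefox, but Google Chrome fails to load the page.

10.2 Solution

Many browsers reject the certificate that the Dashboard generates automatically, so we need to create our own certificate.

10.2.1 Create a directory to hold the key and certificate files

[root@k8s-master ~]# mkdir kubernetes-key

[root@k8s-master ~]# cd kubernetes-key/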

10.2.2 Generate the certificate

# 1) Generate the private key

[root@k8s-master kubernetes-key]# openssl genrsa -out dashboard.key 2048

## Output

Generating RSA private key, 2048 bit long modulus
..............................................................................................+++
................................................+++
e is 65537 (0x10001)

# 2) Generate the certificate signing request; 192.168.59.156 below is the master node's IP address

[root@k8s-master kubernetes-key]# openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=192.168.59.156'

# 3) Generate the self-signed certificate

[root@k8s-master kubernetes-key]# openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt

## Output

Signature ok

subject=/CN=192.168.59.156

Getting Private key
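You can also collapse steps 1) to 3) into a single openssl req -x509 call. Current Chrome versions check the subjectAltName extension rather than the CN, so the sketch below also adds the IP as a SAN. It assumes OpenSSL 1.1.1 or newer for -addext; the OpenSSL 1.0.2 shipped with CentOS 7 does not support that option. make_dashboard_cert is a hypothetical helper.

```shell
#!/usr/bin/env bash
# make_dashboard_cert IP [DIR]  (hypothetical helper)
# Creates dashboard.key and a self-signed dashboard.crt valid for 365 days,
# with the IP both as CN and as a subjectAltName entry.
# Requires OpenSSL 1.1.1+ for -addext.
make_dashboard_cert() {
  local ip="$1" dir="${2:-.}"
  openssl req -x509 -nodes -newkey rsa:2048 -days 365 \
    -keyout "${dir}/dashboard.key" -out "${dir}/dashboard.crt" \
    -subj "/CN=${ip}" -addext "subjectAltName=IP:${ip}"
}

# Example, inside the kubernetes-key directory:
#   make_dashboard_cert 192.168.59.156
#   openssl x509 -in dashboard.crt -noout -text | grep -A1 'Subject Alternative Name'
```

After generating the files, recreate the secret exactly as in 10.2.4. Instead of deleting the Pods by hand as in 10.2.6, kubectl -n kubernetes-dashboard rollout restart deployment kubernetes-dashboard also restarts the Dashboard (kubectl 1.15 or newer).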

10.2.3 Delete the existing certificate secret

[root@k8s-master kubernetes-key]# kubectl delete secret kubernetes-dashboard-certs -n kubernetes-dashboard

secret "kubernetes-dashboard-certs" deleted

10.2.4 Create a secret from the new certificate

[root@k8s-master kubernetes-key]# ll
total 12
-rw-r--r-- 1 root root 989 Jul 23 10:14 dashboard.crt
-rw-r--r-- 1 root root 899 Jul 23 10:13 dashboard.csr
-rw-r--r-- 1 root root 1675 Jul 23 10:06 dashboard.key

[root@k8s-master kubernetes-key]# kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard

secret/kubernetes-dashboard-certs created

10.2.5 Find the running Pods

[root@k8s-master kubernetes-key]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-76585494d8-jcrxk 1/1 Running 1 15h

kubernetes-dashboard-5996555fd8-h5bq2 1/1 Running 1 15h

10.2.6 Delete the old Pods


[root@k8s-master kubernetes-key]# kubectl delete po kubernetes-dashboard-5996555fd8-h5bq2 -n kubernetes-dashboard

pod "kubernetes-dashboard-5996555fd8-h5bq2" deleted

[root@k8s-master kubernetes-key]# kubectl delete po dashboard-metrics-scraper-76585494d8-jcrxk -n kubernetes-dashboard

pod "dashboard-metrics-scraper-76585494d8-jcrxk" deleted

10.2.7 Verify access with Chrome

Wait until the new Pods are running normally, then open the Dashboard in the browser:

[root@k8s-master kubernetes-key]# kubectl get pod -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-76585494d8-4fwmn 1/1 Running 0 2m29s

kubernetes-dashboard-5996555fd8-74kcc 1/1 Running 0 2m56s

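Access URL: https://NodeIP:30001. Choose Token login and paste the token you recorded earlier to log in.

That's it: Kubernetes is now fully up and running. If you have any other questions about the deployment, leave a comment. I reply to most of them when I see them.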

References

- Kubernetes installation reference (李振良): https://www.cnblogs.com/tylerzhou/p/10971336.html

- Kubernetes Dashboard not accessible in Chrome: http://suo.im/6ec0lD