Deploying a Ceph cluster with ceph-deploy and configuring ceph-dashboard

1. Preparation

1.1 Disable the firewall and SELinux, and set up time synchronization (all nodes)

systemctl disable firewalld && systemctl stop firewalld

Alternatively, keep firewalld running and open the Ceph ports instead:

sudo firewall-cmd --zone=public --add-service=ceph-mon --permanent   # on monitor nodes

sudo firewall-cmd --zone=public --add-service=ceph --permanent   # on OSD and MDS nodes

sudo firewall-cmd --reload

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0

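You can also edit /etc/selinux/config by hand. Note that setenforce 0 only switches SELinux to permissive mode right away; SELINUX=disabled in the config file takes effect after the next reboot.

yum -y install chrony

# Edit /etc/chrony.conf

echo allow >> /etc/chrony.conf   # on the admin node (master01) only: allow clients from any network to sync from it

echo server master01 iburst >> /etc/chrony.conf   # on every node except master01: sync time from master01

systemctl enable chronyd   # start time synchronization at boot

systemctl restart chronyd   # restart to apply the new configuration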
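Re-running the echo ... >> lines above appends a duplicate entry each time. A minimal sketch of an idempotent alternative follows; the helper name append_once is hypothetical and not part of chrony or Ceph.

```shell
# append_once FILE LINE: append LINE to FILE unless an identical line is already there.
# Hypothetical helper for illustration only.
append_once() {
    local file="$1" line="$2"
    # -q: quiet, -x: match the whole line, -F: treat LINE as a fixed string, not a regex
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, as root on every node except master01: append_once /etc/chrony.conf 'server master01 iburst'. Running it a second time leaves the file unchanged.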

1.2 Add the Ceph repository

cat << EOM > /etc/yum.repos.d/ceph.repo

[ceph-noarch]

name=Ceph noarch packages

baseurl=http://download.ceph.com/rpm-jewel/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

priority=1

EOM

Then refresh the yum repository metadata:

yum clean all

yum repolist

1.3 Install the Python tools and ceph-deploy

yum makecache

yum update

yum install ceph-deploy python-setuptools -y

ceph-deploy --version

1.4 Create the Ceph deployment user (all nodes)

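We need a dedicated user for ceph-deploy to deploy with. When running ceph-deploy, pass the --username option to select that user. Keep the following in mind:

- Using root is not recommended.

- Do not name the user ceph. That username is needed later in the deployment; if a user with that name already exists on the system it is deleted first, and the deployment then fails.

- The user needs superuser (sudo) privileges, and sudo must not prompt for a password.

- The user must be created on every node.

- The user needs passwordless SSH login between all machines in the Ceph cluster.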

Set the hostnames, running the matching command on each node:

hostnamectl set-hostname master01

hostnamectl set-hostname master02

hostnamectl set-hostname master03

hostnamectl set-hostname node1

hostnamectl set-hostname node2

Configure /etc/hosts (run on every node):

echo "

192.168.63.90 master01

192.168.63.91 master02

192.168.63.92 master03

192.168.63.93 node1

192.168.63.94 node2" >> /etc/hosts

Create the user (run on every node):

useradd kubeceph

echo 'cc.123' | passwd --stdin kubeceph

Grant the user sudo rights without a password prompt:

echo "kubeceph ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/kubeceph

chmod 0440 /etc/sudoers.d/kubeceph

Set up passwordless SSH login and edit the SSH config on the deploy node:

su - kubeceph

ssh-keygen

ssh-copy-id kubeceph@master02

ssh-copy-id kubeceph@master03

ssh-copy-id kubeceph@node1

ssh-copy-id kubeceph@node2

Create the ~/.ssh/config file:

vim ~/.ssh/config

Host master01

Hostname master01

User kubeceph

Host master02

Hostname master02

User kubeceph

Host master03

Hostname master03

User kubeceph

Host node1

Hostname node1

User kubeceph

Host node2

Hostname node2

User kubeceph
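Typing five near-identical stanzas by hand invites slips such as a mistyped keyword. As a sketch, the same content could be generated from the host list; gen_ssh_config is a hypothetical helper name, and it assumes each host alias equals its hostname, as in the stanzas above.

```shell
# gen_ssh_config USER HOST...: print one ssh_config stanza per host to stdout.
# Hypothetical helper; assumes the Host alias and the Hostname are identical.
gen_ssh_config() {
    local user="$1"; shift
    local host
    for host in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
    done
}
```

Usage: gen_ssh_config kubeceph master01 master02 master03 node1 node2 > ~/.ssh/config. The indentation is cosmetic; ssh_config ignores leading whitespace.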

chmod 600 ~/.ssh/config

1.5 Install the yum priorities plugin

sudo yum install yum-plugin-priorities

2. Deploying Ceph

2.1 Create the deployment directory

[kubeceph@master01 ~]$ mkdir my-cluster

[kubeceph@master01 ~]$ cd my-cluster

[kubeceph@master01 my-cluster]$ pwd

/home/kubeceph/my-cluster

The deployment generates many files, so we create a dedicated directory to hold them.

2.2 Initialize the mon nodes

[kubeceph@master01 my-cluster]$ ceph-deploy new master01 master02 master03

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/kubeceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.39): /bin/ceph-deploy new master01 master02 master03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] func :

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf :

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] ssh_copykey : True

[ceph_deploy.cli][INFO ] mon : ['master01', 'master02', 'master03']

[ceph_deploy.cli][INFO ] public_network : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] cluster_network : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] fsid : None

[ceph_deploy.new][DEBUG ] Creating new cluster named ceph

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[master01][DEBUG ] connection detected need for sudo

[master01][DEBUG ] connected to host: master01

[master01][DEBUG ] detect platform information from remote host

[master01][DEBUG ] detect machine type

[master01][DEBUG ] find the location of an executable

[master01][INFO ] Running command: sudo /usr/sbin/ip link show

[master01][INFO ] Running command: sudo /usr/sbin/ip addr show

[master01][DEBUG ] IP addresses found: [u'172.20.107.0', u'192.168.63.96', u'172.17.0.1', u'192.168.63.90']

[ceph_deploy.new][DEBUG ] Resolving host master01

[ceph_deploy.new][DEBUG ] Monitor master01 at 192.168.63.90

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[master02][DEBUG ] connected to host: master01

[master02][INFO ] Running command: ssh -CT -o BatchMode=yes master02

[master02][DEBUG ] connection detected need for sudo

[master02][DEBUG ] connected to host: master02

[master02][DEBUG ] detect platform information from remote host

[master02][DEBUG ] detect machine type

[master02][DEBUG ] find the location of an executable

[master02][INFO ] Running command: sudo /usr/sbin/ip link show

[master02][INFO ] Running command: sudo /usr/sbin/ip addr show

[master02][DEBUG ] IP addresses found: [u'172.17.0.1', u'192.168.63.91', u'172.20.106.0']

[ceph_deploy.new][DEBUG ] Resolving host master02

[ceph_deploy.new][DEBUG ] Monitor master02 at 192.168.63.91

[ceph_deploy.new][INFO ] making sure passwordless SSH succeeds

[master03][DEBUG ] connected to host: master01

[master03][INFO ] Running command: ssh -CT -o BatchMode=yes master03

[master03][DEBUG ] connection detected need for sudo

[master03][DEBUG ] connected to host: master03

[master03][DEBUG ] detect platform information from remote host

[master03][DEBUG ] detect machine type

[master03][DEBUG ] find the location of an executable

[master03][INFO ] Running command: sudo /usr/sbin/ip link show

[master03][INFO ] Running command: sudo /usr/sbin/ip addr show

[master03][DEBUG ] IP addresses found: [u'172.17.0.1', u'192.168.63.92', u'172.20.108.0']

[ceph_deploy.new][DEBUG ] Resolving host master03

[ceph_deploy.new][DEBUG ] Monitor master03 at 192.168.63.92

[ceph_deploy.new][DEBUG ] Monitor initial members are ['master01', 'master02', 'master03']

[ceph_deploy.new][DEBUG ] Monitor addrs are ['192.168.63.90', '192.168.63.91', '192.168.63.92']

[ceph_deploy.new][DEBUG ] Creating a random mon key...

[ceph_deploy.new][DEBUG ] Writing monitor keyring to ceph.mon.keyring...

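[ceph_deploy.new][DEBUG ] Writing initial config to ceph.conf...

2.3 Install Ceph

Install Ceph on all nodes:

ceph-deploy install master01 master02 master03 node1 node2

Initialize the monitors:

ceph-deploy mon create-initial

When this completes successfully, a set of keyring files is generated in the current directory.

Use ceph-deploy to copy the configuration file and the admin keyring to the nodes:

ceph-deploy admin master01 master02 master03 node1 node2

2.4 Deploy the managers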

ceph-deploy mgr create master01 master02 master03

[kubeceph@master01 my-cluster]$ ceph-deploy mgr create master01 master02 master03

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/kubeceph/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy mgr create master01 master02 master03

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] mgr : [('master01', 'master01'), ('master02', 'master02'), ('master03', 'master03')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf :

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func :

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mgr][DEBUG ] Deploying mgr, cluster ceph hosts master01:master01 master02:master02 master03:master03

[master01][DEBUG ] connection detected need for sudo

[master01][DEBUG ] connected to host: master01

[master01][DEBUG ] detect platform information from remote host

[master01][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to master01

[master01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[master01][WARNIN] mgr keyring does not exist yet, creating one

[master01][DEBUG ] create a keyring file

[master01][DEBUG ] create path recursively if it doesn't exist

[master01][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.master01 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-master01/keyring

[master01][INFO ] Running command: sudo systemctl enable ceph-mgr@master01

[master01][WARNIN] Created symlink from /etc/systemd/system/ceph-mgr.target.wants/ceph-mgr@master01.service to /usr/lib/systemd/system/ceph-mgr@.service.

[master01][INFO ] Running command: sudo systemctl start ceph-mgr@master01

[master01][INFO ] Running command: sudo systemctl enable ceph.target

[master02][DEBUG ] connection detected need for sudo

[master02][DEBUG ] connected to host: master02

[master02][DEBUG ] detect platform information from remote host

[master02][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to master02

[master02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[master02][WARNIN] mgr keyring does not exist yet, creating one

[master02][DEBUG ] create a keyring file

[master02][DEBUG ] create path recursively if it doesn't exist

[master02][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.master02 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-master02/keyring

[master02][INFO ] Running command: sudo systemctl enable ceph-mgr@master02

[master02][INFO ] Running command: sudo systemctl start ceph-mgr@master02

[master02][INFO ] Running command: sudo systemctl enable ceph.target

[master03][DEBUG ] connection detected need for sudo

[master03][DEBUG ] connected to host: master03

[master03][DEBUG ] detect platform information from remote host

[master03][DEBUG ] detect machine type

[ceph_deploy.mgr][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph_deploy.mgr][DEBUG ] remote host will use systemd

[ceph_deploy.mgr][DEBUG ] deploying mgr bootstrap to master03

[master03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[master03][WARNIN] mgr keyring does not exist yet, creating one

[master03][DEBUG ] create a keyring file

[master03][DEBUG ] create path recursively if it doesn't exist

[master03][INFO ] Running command: sudo ceph --cluster ceph --name client.bootstrap-mgr --keyring /var/lib/ceph/bootstrap-mgr/ceph.keyring auth get-or-create mgr.master03 mon allow profile mgr osd allow * mds allow * -o /var/lib/ceph/mgr/ceph-master03/keyring

[master03][INFO ] Running command: sudo systemctl enable ceph-mgr@master03

[master03][INFO ] Running command: sudo systemctl start ceph-mgr@master03

[master03][INFO ] Running command: sudo systemctl enable ceph.target

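2.5 Add OSDs

Here we add five disks in total, one on each of the five nodes, to the Ceph cluster as OSDs.

Use the lsblk command on each node to check its disks first. The device used below is /dev/sdb2 on every node.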

ceph-deploy osd create --data /dev/sdb2 master01

ceph-deploy osd create --data /dev/sdb2 master02

ceph-deploy osd create --data /dev/sdb2 master03

ceph-deploy osd create --data /dev/sdb2 node1

ceph-deploy osd create --data /dev/sdb2 node2

2.6 Check the result

Check the status of the Ceph cluster:

sudo ceph health

sudo ceph -s

If the following error appears:

[kubeceph@master01 ceph]$ ceph health detail

2021-04-02 09:54:51.269 7f3467172700 -1 auth: unable to find a keyring on /etc/ceph/ceph.client.admin.keyring,/etc/ceph/ceph.keyring,/etc/ceph/keyring,/etc/ceph/keyring.bin,: (2) No such file or directory

2021-04-02 09:54:51.270 7f3467172700 -1 monclient: ERROR: missing keyring, cannot use cephx for authentication

[errno 2] error connecting to the cluster

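Fix (the admin keyring is typically readable only by root, so ceph run as the deploy user without sudo cannot open it):

sudo chmod +r /etc/ceph/ceph.client.admin.keyring

If the following error appears: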

[kubeceph@master01 ceph]$ ceph health detail

HEALTH_WARN clock skew detected on mon.master02, mon.master03

MON_CLOCK_SKEW clock skew detected on mon.master02, mon.master03

mon.master02 addr 192.168.63.91:6789/0 clock skew 0.257359s > max 0.05s (latency 0.00164741s)

mon.master03 addr 192.168.63.92:6789/0 clock skew 0.954796s > max 0.05s (latency 0.00242741s)
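To see at a glance which monitors are affected and by how much, the per-monitor lines in this output can be filtered. The sketch below assumes the line layout shown above ("mon.X addr ... clock skew Ns ..."); skewed_mons is a hypothetical helper name, not a Ceph command.

```shell
# skewed_mons: read `ceph health detail` text on stdin and print
# "<monitor> <skew in seconds>" for every per-monitor clock-skew line.
# Hypothetical helper; relies on the field layout of the sample output.
skewed_mons() {
    awk '$2 == "addr" && $4 == "clock" && $5 == "skew" {
        sub(/s$/, "", $6)   # strip the trailing unit from the skew value
        print $1, $6
    }'
}
```

Usage: ceph health detail | skewed_mons. Summary lines such as HEALTH_WARN and MON_CLOCK_SKEW are skipped because their second field is not "addr".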

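Fix:

Raise the clock-drift thresholds in the Ceph configuration. This only relaxes the warning; a skew this large usually means time synchronization is not working yet, so it is also worth checking chronyc sources on the affected nodes.

vim /home/kubeceph/my-cluster/ceph.conf

Add the following under the [global] section:

mon clock drift allowed = 2

mon clock drift warn backoff = 30

Push the configuration file to the nodes that need it:

ceph-deploy --overwrite-conf config push master01 master02 master03 node1 node2

Restart the mon service on every monitor node:

sudo systemctl restart ceph-mon.target

3. Configure the dashboard

Official deployment documentation: https://docs.ceph.com/docs/master/mgr/dashboard/

3.1 Enable the dashboard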

[kubeceph@master01 my-cluster]$ ceph mgr module enable dashboard

3.2 Disable SSL

[kubeceph@master01 my-cluster]$ ceph config set mgr mgr/dashboard/ssl false

3.3 Restart the dashboard module

[kubeceph@master01 my-cluster]$ ceph mgr module disable dashboard

[kubeceph@master01 my-cluster]$ ceph mgr module enable dashboard

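3.4 Configure the IP address and port

The general form is shown first, where $name is the name of the ceph-mgr instance. server_port is used when SSL is disabled and ssl_server_port when it is enabled:

$ ceph config set mgr mgr/dashboard/$name/server_addr $IP

$ ceph config set mgr mgr/dashboard/$name/server_port $PORT

$ ceph config set mgr mgr/dashboard/$name/ssl_server_port $PORT

For this cluster:

$ ceph config set mgr mgr/dashboard/master01/server_addr 192.168.63.96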

$ ceph config set mgr mgr/dashboard/master01/server_port 18080
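With three mgr instances, the same pair of settings may need to be repeated per instance. A minimal sketch that only prints the commands for review, without running them; dashboard_bind_cmds is a hypothetical helper name, and it covers only the non-SSL case used in this guide.

```shell
# dashboard_bind_cmds NAME IP PORT: print the two `ceph config set` commands
# that bind the dashboard of mgr instance NAME to IP:PORT (SSL disabled).
# Hypothetical helper; it prints commands and does not execute them.
dashboard_bind_cmds() {
    local name="$1" ip="$2" port="$3"
    printf 'ceph config set mgr mgr/dashboard/%s/server_addr %s\n' "$name" "$ip"
    printf 'ceph config set mgr mgr/dashboard/%s/server_port %s\n' "$name" "$port"
}
```

Usage: dashboard_bind_cmds master01 192.168.63.96 18080 prints the two commands given above. After applying them and restarting the module, ceph mgr services should list the dashboard URL.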

3.5 Create a dashboard user

$ ceph dashboard set-login-credentials zhangjie cc.123

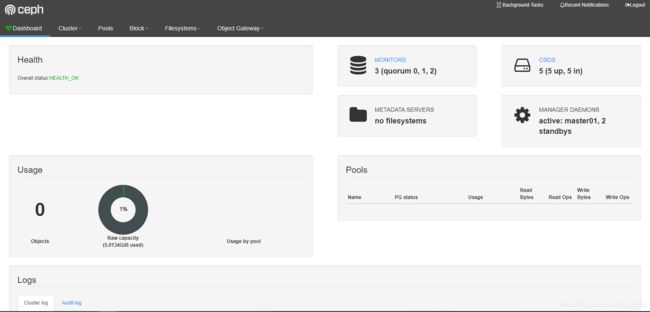

The resulting interface is shown above.

4. Configure a pool

Create a pool named rbd with pg_num set to 128:

ceph osd pool create rbd 128

Set a capacity quota of 800 GiB on the pool (max_bytes is given in bytes, hence the shell arithmetic):

ceph osd pool set-quota rbd max_bytes $((800 * 1024 * 1024 * 1024))

Initialize the pool for use by RBD:

rbd pool init rbd
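The quota value above is plain shell arithmetic. As a small sketch, the conversion can be wrapped in a function so the size reads clearly at the call site; gib_to_bytes is a hypothetical helper name.

```shell
# gib_to_bytes N: print N GiB expressed in bytes (1 GiB = 1024 * 1024 * 1024 bytes).
# Hypothetical helper for illustration.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}
```

Usage: ceph osd pool set-quota rbd max_bytes "$(gib_to_bytes 800)", which passes 858993459200. The quota in effect can then be checked with ceph osd pool get-quota rbd.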