Big Data in Practice No.13 - Setting Up and Using a Flink Development Environment

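Chapter 1 Introduction

On this festive occasion, I'd first like to wish all readers a happy National Day and Mid-Autumn Festival!

After the previous articles, you should already be familiar with deploying and using Flink in a production environment. This raises a question: does every Flink developer have to deploy and install a full cluster just to do development? Of course not; giving every developer a cluster would make development far too expensive. Taking the Flink Table API/SQL as an example, with Kafka as the data source and MySQL as the data sink, this article uses containers to show how to set up and use a Flink development environment. (Note: the author uses Windows as the example; macOS is similar.)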

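Chapter 2 Installing the Components

2.1 Install Docker from the official site

I won't go into detail here; download and install it from the official site (https://www.docker.com).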

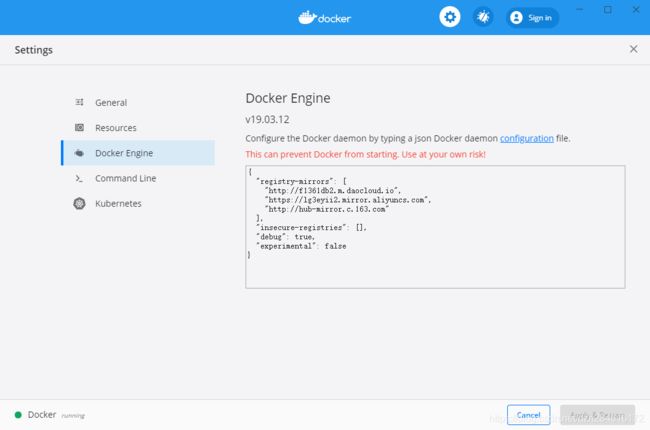

2.2 Configure a domestic registry mirror

2.3 Check the Docker version

C:\Users\zihao>docker --version

Docker version 19.03.12, build 48a66213fe

2.4 Install Kafka

Before installing, you can search for Kafka-related images:

docker search kafka

Pull the Kafka image:

C:\Users\zihao>docker pull wurstmeister/kafka:2.12-2.5.0

2.12-2.5.0: Pulling from wurstmeister/kafka

Image docker.io/wurstmeister/kafka:2.12-2.5.0 uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

e7c96db7181b: Pull complete

f910a506b6cb: Pull complete

b6abafe80f63: Pull complete

2e9c2caa5758: Pull complete

1b29071c565f: Pull complete

c81626d038e3: Pull complete

Digest: sha256:71aa89afe97d3f699752b6d80ddc2024a057ae56407f6ab53a16e9e4bedec04c

Status: Downloaded newer image for wurstmeister/kafka:2.12-2.5.0

docker.io/wurstmeister/kafka:2.12-2.5.0

2.5 Install ZooKeeper

Pull the ZooKeeper image:

C:\Users\zihao>docker pull zookeeper:3.5.7

3.5.7: Pulling from library/zookeeper

afb6ec6fdc1c: Pull complete

ee19e84e8bd1: Pull complete

6ac787417531: Pull complete

f3f781d4d83e: Pull complete

424c9e43d19a: Pull complete

f0929561e8a7: Pull complete

f1cf0c087cb3: Pull complete

2f47bb4dd07a: Pull complete

Digest: sha256:883b014b6535574503bda8fc6a7430ba009c0273242f86d401095689652e5731

Status: Downloaded newer image for zookeeper:3.5.7

docker.io/library/zookeeper:3.5.7

2.6 Install MySQL

C:\Users\zihao>docker pull mysql:5.7

5.7: Pulling from library/mysql

Image docker.io/library/mysql:5.7 uses outdated schema1 manifest format. Please upgrade to a schema2 image for better future compatibility. More information at https://docs.docker.com/registry/spec/deprecated-schema-v1/

d121f8d1c412: Pull complete

f3cebc0b4691: Pull complete

1862755a0b37: Pull complete

489b44f3dbb4: Pull complete

690874f836db: Pull complete

baa8be383ffb: Pull complete

55356608b4ac: Pull complete

277d8f888368: Pull complete

21f2da6feb67: Pull complete

2c98f818bcb9: Pull complete

031b0a770162: Pull complete

Digest: sha256:14fd47ec8724954b63d1a236d2299b8da25c9bbb8eacc739bb88038d82da4919

Status: Downloaded newer image for mysql:5.7

docker.io/library/mysql:5.7

2.7 List the installed images

C:\Users\zihao>docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mysql 5.7 ef08065b0a30 3 days ago 448MB

wurstmeister/kafka 2.12-2.5.0 caa449bd6c28 3 weeks ago 431MB

zookeeper 3.5.7 6bd990489b09 4 months ago 245MB

Chapter 3 Starting the Containers

3.1 Start ZooKeeper

C:\Users\zihao>docker run -d --name zookeeper -p 2181:2181 -t zookeeper:3.5.7

5a29cfcb77c1bbd33eedf41add46da89b91468d09702acde5caa3086b6cc467e

3.2 Start Kafka

C:\Users\zihao>docker run -d --name kafka --publish 9092:9092 --link zookeeper --env KAFKA_ZOOKEEPER_CONNECT=zookeeper:2181 --env KAFKA_ADVERTISED_HOST_NAME=127.0.0.1 --env KAFKA_ADVERTISED_PORT=9092 wurstmeister/kafka:2.12-2.5.0

e45499f971d1efd59227031f41631d1cf6e9e13b7950872efbf96dcbb5f998fb

3.3 Start MySQL

C:\Users\zihao>docker run -p 3306:3306 --name mysql -v D:/21docker/mysql/conf:/etc/mysql/conf.d -v D:/21docker/mysql/logs:/logs -v D:/21docker/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 -d mysql:5.7

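2bea9be2ccdbea6f41c9a4dedc7d3bf8efa5538b39e19dea43eb66f4df100bb7

Note the -v options: they mount host directories into the container for MySQL's configuration, logs and data.

3.4 List the running containers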

C:\Users\zihao>docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

5f4d03dee765 mysql:5.7 "docker-entrypoint.s…" 4 seconds ago Up 3 seconds 0.0.0.0:3306->3306/tcp, 33060/tcp mysql

e45499f971d1 wurstmeister/kafka:2.12-2.5.0 "start-kafka.sh" 47 minutes ago Up 47 minutes 0.0.0.0:9092->9092/tcp kafka

5a29cfcb77c1 zookeeper:3.5.7 "/docker-entrypoint.…" 52 minutes ago Up 52 minutes 2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp, 8080/tcp zookeeper

3.5 Check that Kafka is running

Enter the Kafka container:

C:\Users\zihao>docker exec -it kafka /bin/bash

Change to the Kafka directory:

bash-4.4# cd opt/kafka

Create a topic:

bash-4.4# bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --topic test

Created topic test.

Start a console producer:

bash-4.4# bin/kafka-console-producer.sh --topic=test --broker-list localhost:9092

>

Start a consumer in a second shell inside the container:

bash-4.4# bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --from-beginning --topic test

Send some data and check that it is consumed correctly.

The producer sends data:

bash-4.4# bin/kafka-console-producer.sh --topic=test --broker-list localhost:9092

>hello

>flink

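>

The consumer receives the data:

bash-4.4# bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --from-beginning --topic test

hello

flink

Messages are produced and consumed correctly, which confirms that Kafka is installed and working.

3.6 Check that MySQL is running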

C:\Users\zihao>docker exec -it mysql bash

root@5f4d03dee765:/# mysql -h localhost -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.31 MySQL Community Server (GPL)

Copyright (c) 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database           |

+--------------------+

| information_schema |

| mysql              |

| performance_schema |

| sys                |

+--------------------+

4 rows in set (0.02 sec)

The databases are listed, which confirms that MySQL is also installed and running correctly.
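The Flink job in the next chapter runs on the host and connects to localhost:9092 and localhost:3306, so it can be handy to confirm from the host side that the published ports are reachable. Below is a minimal sketch using only the JDK. The class name PortCheck and the 1-second timeout are my own choices for illustration, and an open port only shows that something is listening, not that the service behind it is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of Docker, Kafka or Flink.
class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMillis.
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Ports published by the docker run commands in Chapter 3.
        Map<String, Integer> services = new LinkedHashMap<>();
        services.put("zookeeper", 2181);
        services.put("kafka", 9092);
        services.put("mysql", 3306);

        for (Map.Entry<String, Integer> e : services.entrySet()) {
            boolean open = isOpen("localhost", e.getValue(), 1000);
            System.out.printf("%-10s localhost:%d %s%n",
                    e.getKey(), e.getValue(), open ? "reachable" : "NOT reachable");
        }
    }
}
```

If a port shows as not reachable, the docker ps output from section 3.4 is the first place to look for a missing port mapping.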

Chapter 4 Running Flink

4.1 Create the Kafka topic

bash-4.4# bin/kafka-topics.sh --create --bootstrap-server localhost:9092 --topic country-log

Created topic country-log.

4.2 Create the MySQL table

Create the database:

mysql> create database flinkdb;

Switch to it and create the table:

mysql> use flinkdb;

mysql> create table country_log(country_id bigint,country_msg varchar(100));

4.3 Start a Kafka producer

bash-4.4# bin/kafka-console-producer.sh --topic=country-log --broker-list localhost:9092

4.4 Write the job in IDEA

package sql;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;

import org.apache.flink.api.common.time.Time;

import org.apache.flink.runtime.state.filesystem.FsStateBackend;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.table.api.EnvironmentSettings;

import org.apache.flink.table.api.Table;

import org.apache.flink.table.api.TableEnvironment;

import org.apache.flink.table.api.bridge.java.StreamTableEnvironment;

import java.util.concurrent.TimeUnit;

/**

 * Sample message produced to Kafka:

 * {"country_id":"1","country_msg":"hello flink!"}

 * MySQL table definition:

 * create table country_log(country_id bigint,country_msg varchar(100));

 */

public class Kafka2Mysql {

    public static void main(String[] args) throws Exception {

        // Kafka source

        String sourceSQL = "CREATE TABLE demo_source (country_id BIGINT,country_msg STRING)\n" +

                " WITH (\n" +

                " 'connector' = 'kafka-0.11',\n" +

                " 'topic'='country-log',\n" +

                " 'properties.bootstrap.servers'='localhost:9092',\n" +

                " 'format' = 'json',\n" +

                " 'scan.startup.mode' = 'latest-offset'\n" +

                ")";

        // MySQL sink

        String sinkSQL = "CREATE TABLE demo_sink (country_id BIGINT,country_msg STRING)\n" +

                " WITH (\n" +

                " 'connector' = 'jdbc',\n" +

                " 'url' = 'jdbc:mysql://localhost:3306/flinkdb?characterEncoding=utf-8&useSSL=false',\n" +

                " 'table-name' = 'country_log',\n" +

                " 'username' = 'root',\n" +

                " 'password' = '123456',\n" +

                " 'sink.buffer-flush.max-rows' = '1',\n" +

                " 'sink.buffer-flush.interval' = '1s'\n" +

                ")";

        // Create the execution environment

        EnvironmentSettings settings = EnvironmentSettings

                .newInstance()

                .useBlinkPlanner()

                .inStreamingMode()

                .build();

        //TableEnvironment tEnv = TableEnvironment.create(settings);

        StreamExecutionEnvironment sEnv = StreamExecutionEnvironment.getExecutionEnvironment();

        sEnv.setRestartStrategy(RestartStrategies.fixedDelayRestart(3, Time.of(1, TimeUnit.SECONDS)));

        //sEnv.enableCheckpointing(1000);

        //sEnv.setStateBackend(new FsStateBackend("file:///tmp/chkdir",false));

        StreamTableEnvironment tEnv = StreamTableEnvironment.create(sEnv, settings);

        // Register the source table

        tEnv.executeSql(sourceSQL);

        // Register the sink table

        tEnv.executeSql(sinkSQL);

        // Read from the source table

        Table sourceTable = tEnv.from("demo_source");

        // Write to the sink table; executeInsert() submits the INSERT job

        sourceTable.executeInsert("demo_sink");

        // Execute the job (kept from the original). Because executeInsert() above already submits the job,

        // some Flink versions may reject this extra call with "No operators defined in streaming topology".

        tEnv.execute("Hello Flink");

    }

}

Run the program above directly in IDEA.
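The two DDL strings in the job are assembled by hand, where a missing comma or quote is easy to overlook. As one possible alternative, the sketch below renders the WITH clause from a map of options. The class name DdlSketch and the method createTable are hypothetical helpers of my own, not part of the Flink API; the result is just a string that could be passed to tEnv.executeSql.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper for illustration; not part of Flink.
class DdlSketch {

    // Renders "CREATE TABLE name (columns) WITH ('key' = 'value', ...)".
    static String createTable(String name, String columns, Map<String, String> options) {
        StringJoiner with = new StringJoiner(",\n", " WITH (\n", "\n)");
        for (Map.Entry<String, String> e : options.entrySet()) {
            // Single quotes inside a value are doubled, as in standard SQL string literals.
            with.add("  '" + e.getKey() + "' = '" + e.getValue().replace("'", "''") + "'");
        }
        return "CREATE TABLE " + name + " (" + columns + ")" + with;
    }

    public static void main(String[] args) {
        // Same options as the Kafka source table in the job; LinkedHashMap keeps insertion order.
        Map<String, String> source = new LinkedHashMap<>();
        source.put("connector", "kafka-0.11");
        source.put("topic", "country-log");
        source.put("properties.bootstrap.servers", "localhost:9092");
        source.put("format", "json");
        source.put("scan.startup.mode", "latest-offset");

        System.out.println(createTable("demo_source", "country_id BIGINT,country_msg STRING", source));
    }
}
```

Keeping the options in a map also makes it easier to switch values such as the bootstrap servers between environments without touching the SQL text.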

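At this point Kafka, MySQL and the Flink job are all running. Next we produce some data and check the result.

4.5 Produce data from the command line

Go to the Kafka command line and produce messages by hand: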

bash-4.4# bin/kafka-console-producer.sh --topic=country-log --broker-list localhost:9092

>{"country_id":"1","country_msg":"hello flink!"}

>{"country_id":"2","country_msg":"hello flink!"}

>

4.6 Check the data in MySQL

The data has travelled from Kafka through Flink into MySQL. Query the table to check:

mysql> select * from country_log;

+------------+--------------+

| country_id | country_msg  |

+------------+--------------+

|          1 | hello flink! |

|          2 | hello flink! |

+------------+--------------+

2 rows in set (0.01 sec)
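Two hand-typed messages are enough to prove that the pipeline works. To push more rows through, the test messages could be generated instead. The sketch below prints JSON lines in the same shape as the ones typed above. The class name CountryLogMessages is hypothetical, and the escaping handles only backslashes and double quotes, on the assumption that the message text contains no control characters.

```java
// Hypothetical generator for test messages; not part of Kafka or Flink.
class CountryLogMessages {

    // Builds one JSON line in the same shape as the hand-typed messages,
    // with country_id written as a quoted string.
    static String message(long countryId, String countryMsg) {
        return "{\"country_id\":\"" + countryId + "\",\"country_msg\":\"" + escape(countryMsg) + "\"}";
    }

    // Minimal JSON string escaping: backslash and double quote only.
    static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) {
        // Number of messages to print; defaults to 5 when no argument is given.
        int count = args.length > 0 ? Integer.parseInt(args[0]) : 5;
        for (long id = 1; id <= count; id++) {
            System.out.println(message(id, "hello flink!"));
        }
    }
}
```

The printed lines can be pasted into the console producer prompt from section 4.5.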

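At this point, we have used Flink to move data from Kafka into the downstream MySQL database, which completes setting up and using a local Flink development environment. This article only passes data straight through Flink, however, and does not cover a complete end-to-end business case. The next article will walk through a complete end-to-end example that includes Flink computation. Stay tuned!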