- 全平台QQ聊天数据库解密项目常见问题解决方案

管旭韶

全平台QQ聊天数据库解密项目常见问题解决方案qq-win-db-keyQQNT/WindowsQQ聊天数据库解密项目地址:https://gitcode.com/gh_mirrors/qq/qq-win-db-key项目基础介绍本项目是一个开源项目,旨在为用户提供全平台QQ聊天数据库的解密方法。项目主要使用Python、JavaScript和C++等编程语言实现。新手常见问题及解决步骤问题一:如何

- 用鸿蒙打造真正的跨设备数据库:从零实现分布式存储

网罗开发

HarmonyOS实战源码实战harmonyos数据库分布式

网罗开发(小红书、快手、视频号同名) 大家好,我是展菲,目前在上市企业从事人工智能项目研发管理工作,平时热衷于分享各种编程领域的软硬技能知识以及前沿技术,包括iOS、前端、HarmonyOS、Java、Python等方向。在移动端开发、鸿蒙开发、物联网、嵌入式、云原生、开源等领域有深厚造诣。图书作者:《ESP32-C3物联网工程开发实战》图书作者:《SwiftUI入门,进阶与实战》超级个体:CO

- Python Day58

别勉.

python机器学习python信息可视化数据分析

Task:1.时序建模的流程2.时序任务经典单变量数据集3.ARIMA(p,d,q)模型实战4.SARIMA摘要图的理解5.处理不平稳的2种差分a.n阶差分—处理趋势b.季节性差分—处理季节性建立一个ARIMA模型,通常遵循以下步骤:数据可视化:观察原始时间序列图,判断是否存在趋势或季节性。平稳性检验:对原始序列进行ADF检验。如果p值>0.05,说明序列非平稳,需要进行差分。确定差分次数d:进行

- Python Day56

别勉.

python机器学习python开发语言

Task:1.假设检验基础知识a.原假设与备择假设b.P值、统计量、显著水平、置信区间2.白噪声a.白噪声的定义b.自相关性检验:ACF检验和Ljung-Box检验c.偏自相关性检验:PACF检验3.平稳性a.平稳性的定义b.单位根检验4.季节性检验a.ACF检验b.序列分解:趋势+季节性+残差记忆口诀:p越小,落在置信区间外,越拒绝原假设。1.假设检验基础知识a.原假设与备择假设原假设(Null

- Python Day57

别勉.

python机器学习python开发语言

Task:1.序列数据的处理:a.处理非平稳性:n阶差分b.处理季节性:季节性差分c.自回归性无需处理2.模型的选择a.AR§自回归模型:当前值受到过去p个值的影响b.MA(q)移动平均模型:当前值收到短期冲击的影响,且冲击影响随时间衰减c.ARMA(p,q)自回归滑动平均模型:同时存在自回归和冲击影响时间序列分析:ARIMA/SARIMA模型构建流程时间序列分析的核心目标是理解序列的过去行为,并

- Python Day44

别勉.

python机器学习python开发语言

Task:1.预训练的概念2.常见的分类预训练模型3.图像预训练模型的发展史4.预训练的策略5.预训练代码实战:resnet181.预训练的概念预训练(Pre-training)是指在大规模数据集上,先训练模型以学习通用的特征表示,然后将其用于特定任务的微调。这种方法可以显著提高模型在目标任务上的性能,减少训练时间和所需数据量。核心思想:在大规模、通用的数据(如ImageNet)上训练模型,学习丰

- Python Day42

别勉.

python机器学习python开发语言

Task:Grad-CAM与Hook函数1.回调函数2.lambda函数3.hook函数的模块钩子和张量钩子4.Grad-CAM的示例1.回调函数定义:回调函数是作为参数传入到其他函数中的函数,在特定事件发生时被调用。特点:便于扩展和自定义程序行为。常用于训练过程中的监控、日志记录、模型保存等场景。示例:defcallback_function():print("Epochcompleted!")

- Python-什么是集合

難釋懷

python开发语言数据库

一、前言在Python中,除了我们常用的列表(list)、元组(tuple)和字典(dict),还有一种非常实用的数据结构——集合(set)。集合是一种无序且不重复的元素集合,常用于去重、交并差运算等场景。本文将带你全面了解Python中集合的基本用法、操作方法及其适用场景,并通过大量代码示例帮助你掌握这一重要数据类型。二、什么是集合(set)?✅定义:集合是Python中的一种可变数据类型,它存

- Python Day53

别勉.

python机器学习python开发语言

Task:1.对抗生成网络的思想:关注损失从何而来2.生成器、判别器3.nn.sequential容器:适合于按顺序运算的情况,简化前向传播写法4.leakyReLU介绍:避免relu的神经元失活现象1.对抗生成网络的思想:关注损失从何而来这是理解GANs的关键!传统的神经网络训练中,我们通常会直接定义一个损失函数(如均方误差MSE、交叉熵CE),然后通过反向传播来优化这个损失。这个损失的“来源”

- 〖Python零基础入门篇⑮〗- Python中的字典

哈哥撩编程

#①-零基础入门篇Python全栈白宝书python开发语言后端python中的字典

>【易编橙·终身成长社群,相遇已是上上签!】-点击跳转~<作者:哈哥撩编程(视频号同名)图书作者:程序员职场效能宝典博客专家:全国博客之星第四名超级个体:COC上海社区主理人特约讲师:谷歌亚马逊分享嘉宾科技博主:极星会首批签约作者文章目录⭐️什么是字典?⭐️字典的结构与创建方法⭐️字典支持的数据类型⭐️在列表与元组中如何定义字典

- python换行输出字典_Python基础入门:字符串和字典

weixin_39959236

python换行输出字典

10、字符串常用转义字符转义字符描述\\反斜杠符号\'单引号\"双引号\n换行\t横向制表符(TAB)\r回车三引号允许一个字符串跨多行,字符串中可以包含换行符、制表符以及其他特殊字符para_str="""这是一个多行字符串的实例多行字符串可以使用制表符TAB(\t)。也可以使用换行符[\n]。"""print(para_str)#这是一个多行字符串的实例#多行字符串可以使用制表符#TAB()。

- Python----Python中的集合及其常用方法

redrose2100

Pythonpython开发语言后端

【原文链接】1集合的定义和特点(1)集合是用花括号括起来的,集合的特点是元素没有顺序,元素具有唯一性,不能重复>>>a={1,2,3,4}>>>type(a)>>>a={1,2,3,1,2,3}>>>a{1,2,3}2集合的常用运算(1)集合元素没有顺序,所以不能像列表和元组那样用下标取值>>>a={1,2,3}>>>a[0]Traceback(mostrecentcalllast):File""

- langchain+langserver+langfuse整合streamlit构建基础智能体中心

Messi^

人工智能-大模型应用langchain人工智能

ServerApi******#!/usr/bin/python--coding:UTF-8--importuvicornfromfastapiimportFastAPIfrombaseimportFaissEnginefromlangserve.serverimportadd_routesfromlangchain_core.promptsimportPromptTemplatefromlang

- pycharm两种运行py之路径问题

hellopbc

software#pycharmpythonpycahrmpath

文章目录pycharm两种运行py之路径问题pycharm两种运行py之路径问题运行python代码在pycharm中有两种方式:一种是直接鼠标点击runxxx运行,还有一种是使用#In[]:点击该行左边的绿色三角形按钮运行有可能在pythonconsole窗口运行有可能在你当前运行文件的窗口(就是run之后产生的那个窗口)**问题:**你会发现,涉及到路径问题时(使用相对路径),可能在这两种运行

- Python元组的遍历

難釋懷

python前端linux

一、前言在Python中,元组(tuple)是一种非常基础且常用的数据结构,它与列表类似,都是有序的序列,但不同的是,元组是不可变的(immutable),一旦创建就不能修改。虽然元组不能被修改,但它支持高效的遍历操作,非常适合用于存储不会变化的数据集合。本文将系统性地介绍Python中元组的多种遍历方式,包括基本遍历、索引访问、元素解包、结合函数等,并结合大量代码示例帮助你掌握这一重要技能。二、

- Python集合生成式

一、前言在Python中,我们已经熟悉了列表生成式(ListComprehension),它为我们提供了一种简洁高效的方式来创建列表。而除了列表之外,Python还支持一种类似的语法结构来创建集合——集合生成式(SetComprehension)。集合生成式不仅可以帮助我们快速构造一个无序且不重复的集合,还能有效提升代码的可读性和执行效率。本文将带你全面了解:✅什么是集合生成式✅集合生成式的语法结

- Python开发从新手到专家:第三章 列表、元组和集合

caifox菜狐狸

Python开发从新手到专家python元素集合列表元组数据结构字典

在Python开发的旅程中,数据结构是每一位开发者必须掌握的核心知识。它们是构建程序的基石,决定了代码的效率、可读性和可维护性。本章将深入探讨Python中的三种基本数据结构:列表、元组和集合。这三种数据结构在实际开发中有着广泛的应用,从简单的数据存储到复杂的算法实现,它们都扮演着不可或缺的角色。无论你是刚刚接触Python的新手,还是希望进一步提升编程技能的开发者,本章都将是你的宝贵指南。我们将

- python入门之字典

二十四桥_

python入门python

文章目录一、字典定义二、字典插入三、字典删除四、字典修改五、字典查找六、字典遍历七、字典拆包一、字典定义#{}键值对各个键值对之间用逗号隔开#1.有数据的字典dict1={'name':'zmz','age':20,'gender':'boy'}print(dict1)#2.创建空字典dict2={}print(dict2)dict3=dict()print(dict3)二、字典插入dict1={

- python类的定义与使用

菜鸟驿站2020

python

class01.py代码如下classTicket():#类的名称首字母大写#在类里定义的变量称为属性,第一个属性必须是selfdef__init__(self,checi,fstation,tstation,fdate,ftime,ttime,notes):self.checi=checiself.fstation=fstationself.tstation=tstationself.fdate

- Python爬虫设置代理IP

菜鸟驿站2020

python

配置代理ipfrombs4importBeautifulSoupimportrequestsimportrandom#从ip代理网站获取ip列表defget_ip_list(url,headers):web_data=requests.get(url,headers=headers)soup=BeautifulSoup(web_data.text,'lxml')ips=soup.find_all(

- Tensorflow 回归模型 FLASK + DOCKER 部署 至 Ubuntu 虚拟机

准备工作:安装虚拟机,安装ubuntu,安装python3.x、pip和对应版本的tensorflow和其他库文件,安装docker。注意事项:1.windows系统运行的模型文件不能直接运行到虚拟机上,需在虚拟机上重新运行并生成模型文件2.虚拟机网络状态改为桥接Flask代码如下:fromflaskimportFlask,request,jsonifyimportpickleimportnump

- 10个可以快速用Python进行数据分析的小技巧_python 通径分析

2401_86043917

python数据分析开发语言

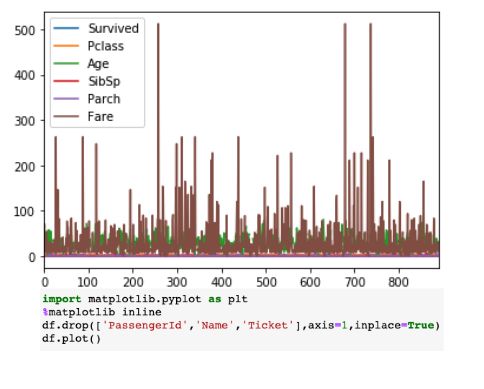

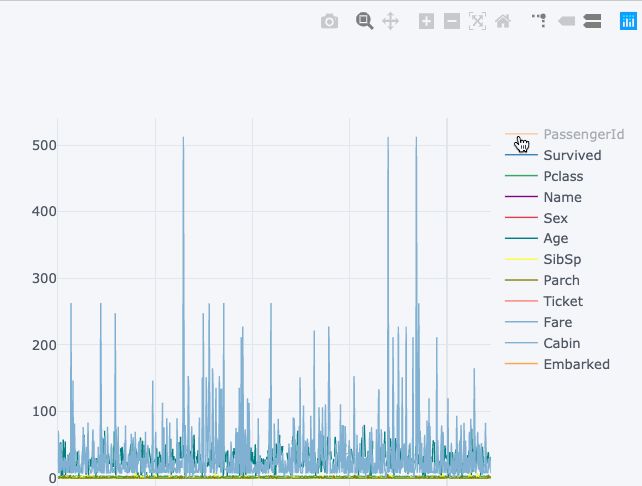

df.iplot()df.iplot()vsdf.plot()右侧的可视

- 【Python多线程】

晟翰逸闻

Pythonpython

文章目录前言一、Python等待event.set二、pythonracecondition和lock使用使用锁(Lock)三.pythonDeadLock使用等综合运用总结前言这篇技术文章讨论了多线程编程中的几个重要概念。它首先介绍了等待事件的使用,并强调了避免使用“ForLoop&Sleep”进行等待的重要性。接着,文档解释了竞态条件,并提供了处理共享资源的建议,即在使用共享资源时进行加锁和解

- 【pycharm专业版】【如何远程配置Python解释器】【SSH】

资源存储库

pythonpycharm

Wejustlookedatconfiguringalocalinterpreter.Butwedon’talwayshavea“local”environment.Sometimes–andincreasinglyoften–ourenvironmentisoverthere.我们刚刚看了配置本地解释器。但我们并不总是有一个“本地”的环境。有时候–而且越来越多的时候–我们的环境就在那里。Let’

- python线程同步锁_python的Lock锁,线程同步

weixin_39649660

python线程同步锁

一、Lock锁凡是存在共享资源争抢的地方都可以使用锁,从而保证只有一个使用者可以完全使用这个资源一旦线程获得锁,其他试图获取锁的线程将被阻塞acquire(blocking=True,timeout=-1):默认阻塞,阻塞可以设置超时时间,非阻塞时,timeout禁止设置,成功获取锁,返回True,否则返回Falsereleas():释放锁,可以从任何线程调用释放,已上锁的锁,会被重置为unloc

- 并发与并行:python多线程详解

m_merlon

python服务器Python进阶教程python

简介多进程和多线程都可以执行多个任务,线程是进程的一部分。线程的特点是线程之间可以共享内存和变量,资源消耗少,缺点是线程之间的同步和加锁比较麻烦。在cpython中,截止到3.12为止依然存在全局解释器锁(GIL),不能发挥多核的优势,因此python多线程更适合IO密集型任务并发提高效率,CPU密集型任务推荐使用多进程并行解决。注:此说法仅适用于python(如:c++的多线程可以利用到多核并行

- python多线程:生产者与消费者,高级锁定Condition、queue队列使用案例与注意事项

网小鱼的学习笔记

Pythonpythonjava大数据

高级锁定这是python中的另一种中锁定,就像是它的名字一样是可以有条件的condition,首先程序使用acquire进入锁定状态,如果需要符合一定的条件才处理数据,此时可以调用wait,让自己进入睡眠状态,程序设计时候需要用notify通知其他线程,然后放弃锁定release此时其他再等待的线程因为受到通知notify,这时候被激活了,就开始运作。生产者与消费者的设计程序用producer方法

- python协程与异步并发,同步与阻塞,异步与非阻塞,Python异步IO、协程与同步原语介绍,协程的优势和劣势

网小鱼的学习笔记

Pythonpython服务器开发语言

协程与异步软件系统的并发使用异步IO,无非是我们提的软件系统的并发,这个软件系统,可以是网络爬虫,也可以是web服务等并发的方式有多种,多线程,多进程,异步IO等多线程和多进程更多应用于CPU密集型的场景,比如科学计算的事件都消耗在CPU上面,利用多核CPU来分担计算任务多线程和多进程之间的场景切换和通讯代价很高,不适合IO密集型的场景,而异步IO就是非常适合IO密集型的场景,例如网络爬虫和web

- 使用Python和FFmpeg实现RGB到YUV444的转换

追逐程序梦想者

ffmpegpython开发语言

使用Python和FFmpeg实现RGB到YUV444的转换如果你需要将RGB图像转换为YUV444格式的图像,那么本文将为你提供一个简单且可靠的方法。我们将使用Python和FFmpeg来完成这个任务。首先,让我们了解一下什么是RGB和YUV。RGB表示红、绿、蓝三种颜色的组合,是最常见的图像格式之一。另一方面,YUV是一种亮度-色度编码,用于视频压缩和传输,它将图像分成明亮度(Y)和色度(U和

- 如何利用ssh使得pycharm连接服务器的docker容器内部环境

SoulMatter

docker容器运维pycharmssh

如题,想要配置服务器的python编译器环境,来查看容器内部环境安装的包的情况。首先,需要确定容器的状态,使用dockerps查看,只有ports那一栏有内容才证明容器暴露了端口出来。如果没有暴露,就需要将容器打包成镜像,然后将镜像再启动一个容器才可以。步骤如下:如何打包镜像:(里面包括了将镜像从A服务器远程传输到B服务器后使用的方法,如果是在本服务器自己使用,那么忽略远程传输的步骤)#创建一个基

- 多线程编程之join()方法

周凡杨

javaJOIN多线程编程线程

现实生活中,有些工作是需要团队中成员依次完成的,这就涉及到了一个顺序问题。现在有T1、T2、T3三个工人,如何保证T2在T1执行完后执行,T3在T2执行完后执行?问题分析:首先问题中有三个实体,T1、T2、T3, 因为是多线程编程,所以都要设计成线程类。关键是怎么保证线程能依次执行完呢?

Java实现过程如下:

public class T1 implements Runnabl

- java中switch的使用

bingyingao

javaenumbreakcontinue

java中的switch仅支持case条件仅支持int、enum两种类型。

用enum的时候,不能直接写下列形式。

switch (timeType) {

case ProdtransTimeTypeEnum.DAILY:

break;

default:

br

- hive having count 不能去重

daizj

hive去重having count计数

hive在使用having count()是,不支持去重计数

hive (default)> select imei from t_test_phonenum where ds=20150701 group by imei having count(distinct phone_num)>1 limit 10;

FAILED: SemanticExcep

- WebSphere对JSP的缓存

周凡杨

WAS JSP 缓存

对于线网上的工程,更新JSP到WebSphere后,有时会出现修改的jsp没有起作用,特别是改变了某jsp的样式后,在页面中没看到效果,这主要就是由于websphere中缓存的缘故,这就要清除WebSphere中jsp缓存。要清除WebSphere中JSP的缓存,就要找到WAS安装后的根目录。

现服务

- 设计模式总结

朱辉辉33

java设计模式

1.工厂模式

1.1 工厂方法模式 (由一个工厂类管理构造方法)

1.1.1普通工厂模式(一个工厂类中只有一个方法)

1.1.2多工厂模式(一个工厂类中有多个方法)

1.1.3静态工厂模式(将工厂类中的方法变成静态方法)

&n

- 实例:供应商管理报表需求调研报告

老A不折腾

finereport报表系统报表软件信息化选型

引言

随着企业集团的生产规模扩张,为支撑全球供应链管理,对于供应商的管理和采购过程的监控已经不局限于简单的交付以及价格的管理,目前采购及供应商管理各个环节的操作分别在不同的系统下进行,而各个数据源都独立存在,无法提供统一的数据支持;因此,为了实现对于数据分析以提供采购决策,建立报表体系成为必须。 业务目标

1、通过报表为采购决策提供数据分析与支撑

2、对供应商进行综合评估以及管理,合理管理和

- mysql

林鹤霄

转载源:http://blog.sina.com.cn/s/blog_4f925fc30100rx5l.html

mysql -uroot -p

ERROR 1045 (28000): Access denied for user 'root'@'localhost' (using password: YES)

[root@centos var]# service mysql

- Linux下多线程堆栈查看工具(pstree、ps、pstack)

aigo

linux

原文:http://blog.csdn.net/yfkiss/article/details/6729364

1. pstree

pstree以树结构显示进程$ pstree -p work | grep adsshd(22669)---bash(22670)---ad_preprocess(4551)-+-{ad_preprocess}(4552) &n

- html input与textarea 值改变事件

alxw4616

JavaScript

// 文本输入框(input) 文本域(textarea)值改变事件

// onpropertychange(IE) oninput(w3c)

$('input,textarea').on('propertychange input', function(event) {

console.log($(this).val())

});

- String类的基本用法

百合不是茶

String

字符串的用法;

// 根据字节数组创建字符串

byte[] by = { 'a', 'b', 'c', 'd' };

String newByteString = new String(by);

1,length() 获取字符串的长度

&nbs

- JDK1.5 Semaphore实例

bijian1013

javathreadjava多线程Semaphore

Semaphore类

一个计数信号量。从概念上讲,信号量维护了一个许可集合。如有必要,在许可可用前会阻塞每一个 acquire(),然后再获取该许可。每个 release() 添加一个许可,从而可能释放一个正在阻塞的获取者。但是,不使用实际的许可对象,Semaphore 只对可用许可的号码进行计数,并采取相应的行动。

S

- 使用GZip来压缩传输量

bijian1013

javaGZip

启动GZip压缩要用到一个开源的Filter:PJL Compressing Filter。这个Filter自1.5.0开始该工程开始构建于JDK5.0,因此在JDK1.4环境下只能使用1.4.6。

PJL Compressi

- 【Java范型三】Java范型详解之范型类型通配符

bit1129

java

定义如下一个简单的范型类,

package com.tom.lang.generics;

public class Generics<T> {

private T value;

public Generics(T value) {

this.value = value;

}

}

- 【Hadoop十二】HDFS常用命令

bit1129

hadoop

1. 修改日志文件查看器

hdfs oev -i edits_0000000000000000081-0000000000000000089 -o edits.xml

cat edits.xml

修改日志文件转储为xml格式的edits.xml文件,其中每条RECORD就是一个操作事务日志

2. fsimage查看HDFS中的块信息等

&nb

- 怎样区别nginx中rewrite时break和last

ronin47

在使用nginx配置rewrite中经常会遇到有的地方用last并不能工作,换成break就可以,其中的原理是对于根目录的理解有所区别,按我的测试结果大致是这样的。

location /

{

proxy_pass http://test;

- java-21.中兴面试题 输入两个整数 n 和 m ,从数列 1 , 2 , 3.......n 中随意取几个数 , 使其和等于 m

bylijinnan

java

import java.util.ArrayList;

import java.util.List;

import java.util.Stack;

public class CombinationToSum {

/*

第21 题

2010 年中兴面试题

编程求解:

输入两个整数 n 和 m ,从数列 1 , 2 , 3.......n 中随意取几个数 ,

使其和等

- eclipse svn 帐号密码修改问题

开窍的石头

eclipseSVNsvn帐号密码修改

问题描述:

Eclipse的SVN插件Subclipse做得很好,在svn操作方面提供了很强大丰富的功能。但到目前为止,该插件对svn用户的概念极为淡薄,不但不能方便地切换用户,而且一旦用户的帐号、密码保存之后,就无法再变更了。

解决思路:

删除subclipse记录的帐号、密码信息,重新输入

- [电子商务]传统商务活动与互联网的结合

comsci

电子商务

某一个传统名牌产品,过去销售的地点就在某些特定的地区和阶层,现在进入互联网之后,用户的数量群突然扩大了无数倍,但是,这种产品潜在的劣势也被放大了无数倍,这种销售利润与经营风险同步放大的效应,在最近几年将会频繁出现。。。。

如何避免销售量和利润率增加的

- java 解析 properties-使用 Properties-可以指定配置文件路径

cuityang

javaproperties

#mq

xdr.mq.url=tcp://192.168.100.15:61618;

import java.io.IOException;

import java.util.Properties;

public class Test {

String conf = "log4j.properties";

private static final

- Java核心问题集锦

darrenzhu

java基础核心难点

注意,这里的参考文章基本来自Effective Java和jdk源码

1)ConcurrentModificationException

当你用for each遍历一个list时,如果你在循环主体代码中修改list中的元素,将会得到这个Exception,解决的办法是:

1)用listIterator, 它支持在遍历的过程中修改元素,

2)不用listIterator, new一个

- 1分钟学会Markdown语法

dcj3sjt126com

markdown

markdown 简明语法 基本符号

*,-,+ 3个符号效果都一样,这3个符号被称为 Markdown符号

空白行表示另起一个段落

`是表示inline代码,tab是用来标记 代码段,分别对应html的code,pre标签

换行

单一段落( <p>) 用一个空白行

连续两个空格 会变成一个 <br>

连续3个符号,然后是空行

- Gson使用二(GsonBuilder)

eksliang

jsongsonGsonBuilder

转载请出自出处:http://eksliang.iteye.com/blog/2175473 一.概述

GsonBuilder用来定制java跟json之间的转换格式

二.基本使用

实体测试类:

温馨提示:默认情况下@Expose注解是不起作用的,除非你用GsonBuilder创建Gson的时候调用了GsonBuilder.excludeField

- 报ClassNotFoundException: Didn't find class "...Activity" on path: DexPathList

gundumw100

android

有一个工程,本来运行是正常的,我想把它移植到另一台PC上,结果报:

java.lang.RuntimeException: Unable to instantiate activity ComponentInfo{com.mobovip.bgr/com.mobovip.bgr.MainActivity}: java.lang.ClassNotFoundException: Didn't f

- JavaWeb之JSP指令

ihuning

javaweb

要点

JSP指令简介

page指令

include指令

JSP指令简介

JSP指令(directive)是为JSP引擎而设计的,它们并不直接产生任何可见输出,而只是告诉引擎如何处理JSP页面中的其余部分。

JSP指令的基本语法格式:

<%@ 指令 属性名="

- mac上编译FFmpeg跑ios

啸笑天

ffmpeg

1、下载文件:https://github.com/libav/gas-preprocessor, 复制gas-preprocessor.pl到/usr/local/bin/下, 修改文件权限:chmod 777 /usr/local/bin/gas-preprocessor.pl

2、安装yasm-1.2.0

curl http://www.tortall.net/projects/yasm

- sql mysql oracle中字符串连接

macroli

oraclesqlmysqlSQL Server

有的时候,我们有需要将由不同栏位获得的资料串连在一起。每一种资料库都有提供方法来达到这个目的:

MySQL: CONCAT()

Oracle: CONCAT(), ||

SQL Server: +

CONCAT() 的语法如下:

Mysql 中 CONCAT(字串1, 字串2, 字串3, ...): 将字串1、字串2、字串3,等字串连在一起。

请注意,Oracle的CON

- Git fatal: unab SSL certificate problem: unable to get local issuer ce rtificate

qiaolevip

学习永无止境每天进步一点点git纵观千象

// 报错如下:

$ git pull origin master

fatal: unable to access 'https://git.xxx.com/': SSL certificate problem: unable to get local issuer ce

rtificate

// 原因:

由于git最新版默认使用ssl安全验证,但是我们是使用的git未设

- windows命令行设置wifi

surfingll

windowswifi笔记本wifi

还没有讨厌无线wifi的无尽广告么,还在耐心等待它慢慢启动么

教你命令行设置 笔记本电脑wifi:

1、开启wifi命令

netsh wlan set hostednetwork mode=allow ssid=surf8 key=bb123456

netsh wlan start hostednetwork

pause

其中pause是等待输入,可以去掉

2、

- Linux(Ubuntu)下安装sysv-rc-conf

wmlJava

linuxubuntusysv-rc-conf

安装:sudo apt-get install sysv-rc-conf 使用:sudo sysv-rc-conf

操作界面十分简洁,你可以用鼠标点击,也可以用键盘方向键定位,用空格键选择,用Ctrl+N翻下一页,用Ctrl+P翻上一页,用Q退出。

背景知识

sysv-rc-conf是一个强大的服务管理程序,群众的意见是sysv-rc-conf比chkconf

- svn切换环境,重发布应用多了javaee标签前缀

zengshaotao

javaee

更换了开发环境,从杭州,改变到了上海。svn的地址肯定要切换的,切换之前需要将原svn自带的.svn文件信息删除,可手动删除,也可通过废弃原来的svn位置提示删除.svn时删除。

然后就是按照最新的svn地址和规范建立相关的目录信息,再将原来的纯代码信息上传到新的环境。然后再重新检出,这样每次修改后就可以看到哪些文件被修改过,这对于增量发布的规范特别有用。

检出