Integrating Celery with Django for Asynchronous Tasks (Part 1)

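1.1 Goals

1. Create asynchronous tasks by calling a RESTful API

2. View the results of asynchronous tasks

3. Create scheduled and interval tasks in the Django admin

4. Retrieve the results of the scheduled and interval tasks created in the admin

5. Create scheduled and interval tasks by calling a RESTful API

6. Retrieve the results of the scheduled and interval tasks created through the API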

1.2 Before You Read

This post is written for readers who are comfortable configuring Django REST framework, django-rest-swagger, and drf-extensions.

If you are not familiar with these, see "Django Swagger JWT Restful API" first.

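2. Environment and Versions

Installing the following packages in a virtual environment is recommended.

Django 3.2.12

Celery 5.1.2

django-celery-beat 2.2.1 (scheduled and interval tasks)

django-celery-results 2.2.0

django-redis 4.8.0

djangorestframework 3.13.1

django-rest-swagger 2.2.0

drf-extensions 0.7.1

eventlet 0.33.1

Operating system: Windows 10

Redis version: 3.2.12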

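3.1 Celery Configuration

Put the following settings in the Django project's settings.py.

    CELERY_BROKER_URL = 'redis://47.93.218.25:6379/1'  # Broker: use Redis as the message broker
    # CELERY_RESULT_BACKEND = 'redis://47.93.218.25:6379/2'  # Alternative backend: store results in Redis
    CELERY_RESULT_BACKEND = 'django-db'  # Result backend: store results in the Django database (MySQL here)
    CELERY_TIMEZONE = 'Asia/Shanghai'
    CELERY_ENABLE_UTC = False
    # CELERY_ENABLE_UTC = True
    CELERY_WORKER_CONCURRENCY = 4  # Number of concurrent workers in the pool
    CELERY_ACKS_LATE = True  # Acknowledge tasks after they run; the documented name under namespace='CELERY' is CELERY_TASK_ACKS_LATE
    DJANGO_CELERY_BEAT_TZ_AWARE = False
    # DJANGO_CELERY_BEAT_TZ_AWARE = True
    CELERY_WORKER_MAX_TASKS_PER_CHILD = 5  # Max tasks each worker executes before it is replaced; guards against memory leaks
    CELERY_TASK_TIME_LIMIT = 15 * 60  # Task time limit, in seconds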
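The celery.py file in section 4 loads these settings with namespace='CELERY', which is why the keys carry a CELERY_ prefix. Each prefixed key corresponds to a lowercase Celery setting; Celery's Django guide gives task_always_eager becoming CELERY_TASK_ALWAYS_EAGER as an example. The snippet below is only a rough illustration of that naming rule in plain Python, not Celery's actual loader, and the helper name to_celery_setting is hypothetical.

```python
def to_celery_setting(django_key, namespace="CELERY"):
    """Return the lowercase Celery setting name for a namespaced Django key.

    Returns None for keys outside the namespace, which Celery does not read.
    """
    prefix = namespace + "_"
    if not django_key.startswith(prefix):
        return None
    return django_key[len(prefix):].lower()


if __name__ == "__main__":
    for key in ("CELERY_BROKER_URL", "CELERY_RESULT_BACKEND",
                "CELERY_TASK_TIME_LIMIT", "DJANGO_CELERY_BEAT_TZ_AWARE"):
        print(key, "->", to_celery_setting(key))
```

By this rule CELERY_WORKER_CONCURRENCY corresponds to worker_concurrency. DJANGO_CELERY_BEAT_TZ_AWARE falls outside the namespace; it is read by django-celery-beat directly from the Django settings.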

3.2 Django settings.py Configuration

django_celery_beat creates scheduled and interval tasks.

django_celery_results stores the results of asynchronous tasks in a relational database such as MySQL.

    INSTALLED_APPS = [
        'django.contrib.admin',
        'django.contrib.auth',
        'django.contrib.contenttypes',
        'django.contrib.sessions',
        'django.contrib.messages',
        'django.contrib.staticfiles',
        'baby_app.apps.BabyAppConfig',
        'bootstrap3',
        'DjangoUeditor',  # rich text editor
        'moment',
        'rest_framework',
        'rest_framework_swagger',
        'django_filters',
        'django_celery_beat',
        'django_celery_results',
    ]

    # Redis cache configuration
    CACHES = {
        "default": {
            "BACKEND": "django_redis.cache.RedisCache",
            "LOCATION": "redis://47.93.218.26:6379/1",
            "OPTIONS": {
                "CLIENT_CLASS": "django_redis.client.DefaultClient",
            }
        }
    }

    REST_FRAMEWORK_EXTENSIONS = {  # Cache view responses in Redis
        'DEFAULT_CACHE_RESPONSE_TIMEOUT': 180,
        'DEFAULT_USE_CACHE': 'default',
    }

    # REST framework configuration
    REST_FRAMEWORK = {
        # Use Django's standard `django.contrib.auth` permissions,
        # or allow read-only access for unauthenticated users.
        'DEFAULT_PERMISSION_CLASSES': [
            'rest_framework.permissions.IsAuthenticatedOrReadOnly',
        ],
        'DEFAULT_AUTHENTICATION_CLASSES': (
            'rest_framework.authentication.BasicAuthentication',  # username/password authentication
            'rest_framework.authentication.SessionAuthentication',
            'rest_framework_jwt.authentication.JSONWebTokenAuthentication',  # global JWT authentication
        ),
        'DEFAULT_SCHEMA_CLASS': 'rest_framework.schemas.coreapi.AutoSchema',
        'DEFAULT_FILTER_BACKENDS': ['django_filters.rest_framework.DjangoFilterBackend'],
        'DEFAULT_PAGINATION_CLASS': 'rest_framework.pagination.PageNumberPagination',
        'PAGE_SIZE': 3,
    }

4.实例化Celery

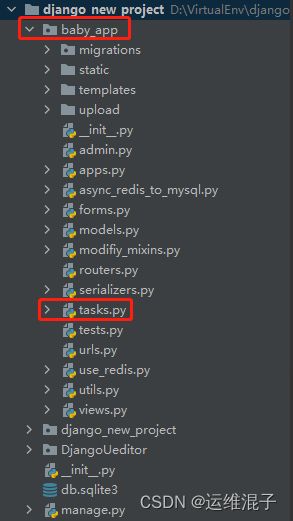

在和settings.py同级的项目根目录下新建celery.py文件,文件路径如下图所示

以下是celery.py内容(这段是借鉴别人博客内容)

# -*- coding: utf-8 -*-

# -------------------------------------------------------------------------------

# Name: celery

# Description:

# Author: CHEN

# Date: 2022/5/13

# -------------------------------------------------------------------------------

from __future__ import absolute_import, unicode_literals

import os

from celery import Celery

# set the defalut Django settings module for the 'celery' program

# 为"celery"程序设置默认的Django settings 模块

os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'django_new_project.settings')

app = Celery('celery')

# Using a string here means the worker dosen't have to serialize the configuration object to child processes.

# 在这里使用字符串意味着worker不必将配置对象序列化为子进程。

# - namespace='CELERY' means all celery-related configuration keys should have a 'CELERY_' prefix

# namespace="CELERY"表示所有与Celery相关的配置keys均应该带有'CELERY_'前缀。

app.config_from_object('django.conf:settings', namespace='CELERY')

# Load task modules from all registered Django app configs.

# 从所有注册的Django app 配置中加载 task模块。

app.autodiscover_tasks()
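Celery's Django guide additionally imports the app in the project package's __init__.py, so that the app is loaded when Django starts and @shared_task uses it. The post does not show that file. The fragment below is a sketch under the assumption that the project package is named django_new_project (as the DJANGO_SETTINGS_MODULE value suggests) and that the file lives at django_new_project/__init__.py.

```python
# django_new_project/__init__.py (assumed location, not shown in the original post)

# Import the Celery app when Django starts so that @shared_task binds to it.
from .celery import app as celery_app

__all__ = ('celery_app',)
```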

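5. Creating a Task

My app is baby_app, so I created tasks.py under baby_app. The module must be named tasks: app.autodiscover_tasks() looks for a tasks module in each installed app, and the view in section 8 calls tasks.get_url.

The contents of tasks.py:

    # -*- coding: utf-8 -*-
    # -------------------------------------------------------------------------------
    # Name: tasks
    # Description:
    # Author: CHEN
    # Date: 2022/5/13
    # -------------------------------------------------------------------------------
    from __future__ import absolute_import, unicode_literals

    import requests
    from celery import shared_task
    from requests.exceptions import ConnectionError, Timeout


    @shared_task
    def get_url(url, timeout=3):
        """Request url and return the HTTP status code."""
        try:
            # Pass timeout through so a slow server cannot block the worker indefinitely.
            return requests.get(url, timeout=timeout).status_code
        except (ConnectionError, Timeout):
            # Connection failures and timeouts are reported as 404.
            return 404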

6.1 Model for Manually Triggered Asynchronous Tasks

My app is baby_app, so I defined the model in models.py under baby_app.

    from datetime import datetime

    from django.db import models


    class ManualTask(models.Model):
        task_id = models.CharField(max_length=60, null=False, unique=True)
        task_name = models.CharField(max_length=60, null=False, unique=True)
        # Pass the callable itself; datetime.utcnow() would be evaluated only once, at import time.
        create_time = models.DateTimeField(default=datetime.utcnow)
        params = models.CharField(max_length=60, null=True)

        class Meta:
            db_table = 'manual_task'
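A DateTimeField default should be a callable such as datetime.utcnow rather than the result of calling it, because Django invokes a callable default for every new row but reuses a plain value as is. The plain-Python sketch below imitates that behaviour; the Field class and fake_now are illustrative stand-ins, not Django code.

```python
import itertools

_ticks = itertools.count(1)


def fake_now():
    """Stand-in for datetime.utcnow(): returns 1, 2, 3, ... on successive calls."""
    return next(_ticks)


class Field:
    """Tiny imitation of a model field: a callable default is invoked per row."""

    def __init__(self, default):
        self.default = default

    def get_default(self):
        return self.default() if callable(self.default) else self.default


frozen = Field(default=fake_now())   # evaluated once, when this line runs
fresh = Field(default=fake_now)      # evaluated every time a row is created

frozen_values = [frozen.get_default() for _ in range(3)]
fresh_values = [fresh.get_default() for _ in range(3)]
print(frozen_values)  # every row shares the value captured at definition time
print(fresh_values)   # each row gets a new value
```

With default=datetime.utcnow() every ManualTask row would carry the time at which the process imported models.py, not the time the row was created.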

6.2 Creating the Database Tables

If the django_celery_beat and django_celery_results models have not been migrated yet, the commands below create their tables as well.

    python manage.py makemigrations
    python manage.py migrate

7.1 Serializer for Manual Asynchronous Tasks

My app is baby_app, so I created serializers.py under baby_app.

    from rest_framework import serializers

    from .models import ManualTask


    class ManualTaskSerializer(serializers.HyperlinkedModelSerializer):
        class Meta:
            model = ManualTask
            fields = ['task_id', 'task_name', 'params']

7.2 Serializer for Asynchronous Task Results

    from django_celery_results.models import TaskResult


    class TaskResultSerializer(serializers.HyperlinkedModelSerializer):
        class Meta:
            model = TaskResult
            fields = '__all__'

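8. Views for Manual Asynchronous Tasks and Results

My app is baby_app, so I put the views in views.py under baby_app.

CacheResponseMixin is inherited so that view data is cached in Redis, which helps serve highly concurrent queries.

The create, update, and destroy methods in the two view classes below override the corresponding methods of CreateModelMixin, UpdateModelMixin, and DestroyModelMixin.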

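    import json

    from django_celery_results.models import TaskResult
    from rest_framework import mixins, status, viewsets
    from rest_framework.response import Response
    from rest_framework_swagger.views import get_swagger_view

    from . import tasks
    from .models import Question, Baby, Blog, Healthy, Money, ManualTask
    # modifiy_mixins is a local module in baby_app that is not shown in this post.
    from .modifiy_mixins import CacheResponseMixin, cache_response
    from .serializers import ManualTaskSerializer, TaskResultSerializer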

    class TaskResultViewSet(CacheResponseMixin, viewsets.ModelViewSet):
        """
        View for retrieving asynchronous task results.
        """
        queryset_source = TaskResult.objects.all().order_by('id')
        queryset = queryset_source
        filter_fields = ('task_id',)  # Filterable fields (named filterset_fields in django-filter 2.0 and later)
        serializer_class = TaskResultSerializer

        # Refresh the data cached in Redis when rows are inserted, updated, or deleted.
        @cache_response(key_func='list_cache_key_func', timeout='list_cache_timeout')
        def create(self, request, *args, **kwargs):
            data = request.data.copy()
            serializer = self.get_serializer(data=data)
            serializer.is_valid(raise_exception=True)
            self.perform_create(serializer)
            headers = self.get_success_headers(serializer.data)
            return Response(serializer.data, status=status.HTTP_201_CREATED, headers=headers)

        @cache_response(key_func='object_cache_key_func', timeout='object_cache_timeout')
        def update(self, request, *args, **kwargs):
            partial = kwargs.pop('partial', False)
            instance = self.get_object()
            serializer = self.get_serializer(instance, data=request.data, partial=partial)
            serializer.is_valid(raise_exception=True)
            self.perform_update(serializer)
            if getattr(instance, '_prefetched_objects_cache', None):
                # If 'prefetch_related' has been applied to a queryset, we need to
                # forcibly invalidate the prefetch cache on the instance.
                instance._prefetched_objects_cache = {}
            return Response(serializer.data)

        @cache_response(key_func='object_cache_key_func', timeout='object_cache_timeout')
        def destroy(self, request, *args, **kwargs):
            instance = self.get_object()
            self.perform_destroy(instance)
            return Response(status=status.HTTP_204_NO_CONTENT)

    class ManualTaskViewSet(mixins.ListModelMixin, mixins.CreateModelMixin, viewsets.GenericViewSet):
        """
        View for manually triggering an asynchronous task.
        """
        queryset = ManualTask.objects.all().order_by('id')
        serializer_class = ManualTaskSerializer

        def manual_task(self, request):
            # print(request.data)
            params = request.data.get('params')  # request.data is dict-like; read the submitted params value
            params = json.loads(params)  # params is a JSON string, so decode it into a Python list
            url = params[0]
            timeout = int(params[1])
            t = tasks.get_url.delay(url, timeout)  # delay() queues the task for asynchronous execution
            return t.id  # Return the task_id of the asynchronous task

        def create(self, request, *args, **kwargs):
            """
            Overrides the create method of CreateModelMixin.
            """
            task_id = self.manual_task(request)
            data = request.data.copy()
            data['task_id'] = task_id  # Insert the task_id into the data to be deserialized so it is written to the table
            # print(data)
            serializer = self.get_serializer(data=data)
            serializer.is_valid(raise_exception=True)
            self.perform_create(serializer)
            headers = self.get_success_headers(serializer.data)
            return Response(serializer.data, status=status.HTTP_201_CREATED, headers=headers)


    schema_view = get_swagger_view(title='Tasks')
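The idea behind caching a view's response for a fixed time (DEFAULT_CACHE_RESPONSE_TIMEOUT is 180 seconds in the settings from section 3.2) can be shown without Django or Redis. The sketch below is a conceptual illustration only, not how drf-extensions implements cache_response; the TTLCache class, the cache key, and the injected clock are assumptions made for the example.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after `timeout` seconds."""

    def __init__(self, timeout, clock=time.monotonic):
        self.timeout = timeout
        self.clock = clock
        self._store = {}  # key -> (expiry time, value)

    def get_or_compute(self, key, compute):
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]              # still fresh: skip the expensive call
        value = compute()
        self._store[key] = (now + self.timeout, value)
        return value


# Demonstration with a fake clock so the behaviour is deterministic.
fake_time = [0.0]
calls = []


def query_database():
    calls.append(1)                    # count how often the "database" is hit
    return {"task_id": "abc", "status": "SUCCESS"}


cache = TTLCache(timeout=180, clock=lambda: fake_time[0])
cache.get_or_compute("taskresults:list", query_database)   # miss: computed
cache.get_or_compute("taskresults:list", query_database)   # hit: served from cache
fake_time[0] = 181.0
cache.get_or_compute("taskresults:list", query_database)   # expired: recomputed
print(len(calls))
```

Repeated list requests within the timeout are answered from the cache, which is what takes load off the database under many concurrent reads.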

9. Registering the Views with the REST Framework Router

My app is baby_app, so I created routers.py under baby_app.

    # -*- coding: utf-8 -*-
    # -------------------------------------------------------------------------------
    # Name: routers
    # Description:
    # Author: CHEN
    # Date: 2022/4/13
    # -------------------------------------------------------------------------------
    from rest_framework import routers

    from .views import TaskResultViewSet, ManualTaskViewSet

    router = routers.DefaultRouter()
    router.register(r'taskresults', TaskResultViewSet)
    router.register(r'manualtasks', ManualTaskViewSet)

10. Project URL Configuration

Edit urls.py in the project directory.

schema_view is the quickly generated Swagger UI view.

    from django.urls import path, include, re_path

    from baby_app.routers import router
    from baby_app.views import schema_view

    urlpatterns = [
        path('api/', include(router.urls)),
        path('api-auth/', include('rest_framework.urls', namespace='rest_framework')),
        re_path(r'^docs', schema_view),
    ]

11.启动Celery的worker

1.pool参数可配置solo,eventlet等,当–pool=solo,多个任务是串行执行,效率低,–pool=eventlet,多个任务是并发执行,效率高,其中用到了协程技术

2.当pool配置成eventlet,首先要安装eventlet,并且当Celery配置中的CELERY_RESULT_BACKEND = 'django-db’时,可能报"DatabaseError: DatabaseWrapper objects created in a thread can only be used in that same thread. The object with alias ‘default’ was created in thread id 140107533682432 and this is thread id 65391024"错误

1.Pycharm打开terminal

2.执行celery -A django_new_project worker -l info --pool=eventlet即可启动worker

修改base.py里的这个方法,不让Django检查线程id是否一致,即可解决DatabaseError问题

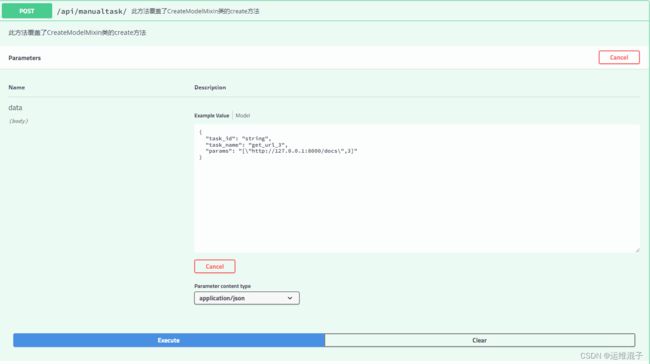
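The difference between running tasks one after another and running them concurrently can be seen in a small experiment. The sketch below does not use Celery or eventlet: it swaps in the standard library's ThreadPoolExecutor as a stand-in for eventlet's green threads and time.sleep as a stand-in for an HTTP request, purely to show why overlapping the waits shortens the total time.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(delay=0.2):
    """Stand-in for an HTTP request: the caller just waits on I/O."""
    time.sleep(delay)
    return 200


def run_serial(n):
    """Like --pool=solo: each task starts only after the previous one ends."""
    start = time.perf_counter()
    results = [fake_request() for _ in range(n)]
    return results, time.perf_counter() - start


def run_concurrent(n):
    """Like a concurrent pool: the waits overlap."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n) as pool:
        results = list(pool.map(lambda _: fake_request(), range(n)))
    return results, time.perf_counter() - start


serial_results, serial_time = run_serial(4)
concurrent_results, concurrent_time = run_concurrent(4)
print(f"serial: {serial_time:.2f}s, concurrent: {concurrent_time:.2f}s")
```

Four serial requests take roughly four times the single-request delay, while the concurrent run takes about as long as one request.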

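12. Calling the Task-Creation API from Swagger UI

Click Execute to send a POST request to the API.

Note that the double quotes inside the params value must be escaped. The value is a JSON-encoded string nested inside the JSON request body, so unescaped quotes would clash with the outer double quotes.

The task_id does not need to be set by hand; once the task has been created, the backend stores its task_id in the table.

    {
      "task_id": "string",
      "task_name": "get_url_3",
      "params": "[\"http://127.0.0.1:8000/docs\",3]"
    }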
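When the request is sent from a script instead of Swagger UI, the escaping can be left to a JSON library by encoding the params list first and then encoding the whole body. The following sketch shows that round trip with the standard json module; build_body and parse_params are hypothetical helper names, and parse_params only mirrors what manual_task does with the params value on the server.

```python
import json


def build_body(task_name, url, timeout):
    """Client side: encode [url, timeout] as a JSON string inside the body."""
    return json.dumps({
        "task_id": "string",                   # placeholder; the server overwrites it
        "task_name": task_name,
        "params": json.dumps([url, timeout]),  # nested JSON, so its quotes get escaped
    })


def parse_params(body_text):
    """Server side: decode the body, then decode the nested params string."""
    data = json.loads(body_text)
    url, timeout = json.loads(data["params"])
    return url, int(timeout)


body = build_body("get_url_3", "http://127.0.0.1:8000/docs", 3)
print(body)
print(parse_params(body))
```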

13. Retrieving Results from the Task-Result API

Results can be filtered by task_id.
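A filtered request is an ordinary GET with task_id as a query parameter. The sketch below builds such a URL; the path follows from the 'taskresults' router registration mounted under 'api/', while the host is assumed to be the same local development server used in the /docs example, and the helper name task_result_url is hypothetical.

```python
from urllib.parse import urlencode


def task_result_url(task_id, base="http://127.0.0.1:8000"):
    """Build the list URL for task results filtered by task_id."""
    return f"{base}/api/taskresults/?{urlencode({'task_id': task_id})}"


# The id below is a made-up example value, not a real task.
print(task_result_url("3f1c2b9e-0000-4000-8000-000000000000"))
```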

The following shows the worker fetching the task from the Redis queue and executing it.

To be continued in Part 2.