21. Hadoop on Windows: a super-detailed guide to download, installation and configuration

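Software you need to download for this article:

Link: https://pan.baidu.com/s/1jIQyy0VHuPvQZ8-n_Zq0pg?pwd=1017

Installing Hadoop on Windows is tedious, and a mistake at any single step means starting over from scratch.

Environment setup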

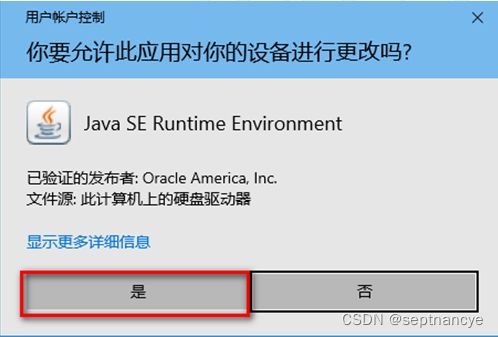

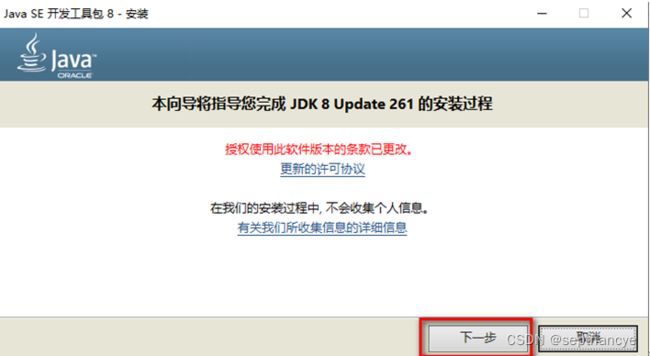

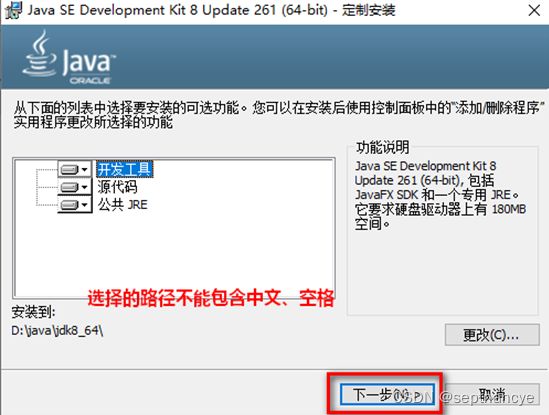

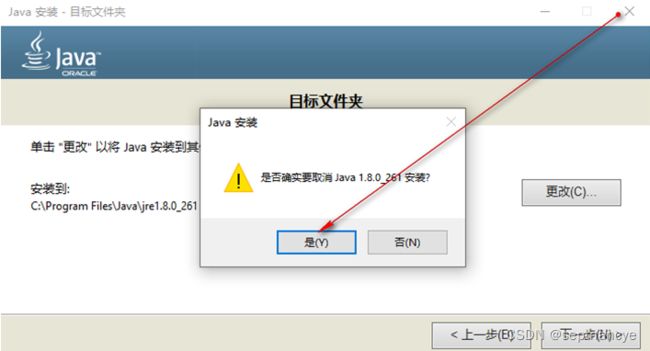

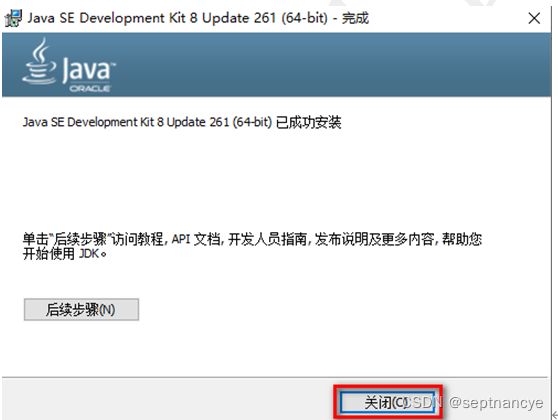

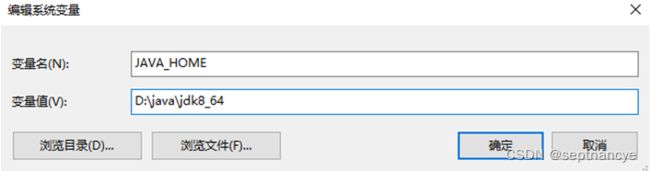

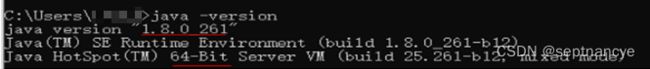

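Prerequisite 1: 64-bit JDK & JAVA_HOME

Super-detailed download and installation steps: see the linked guide

Hadoop depends on a 64-bit JDK, so we need to install one and point JAVA_HOME at it.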

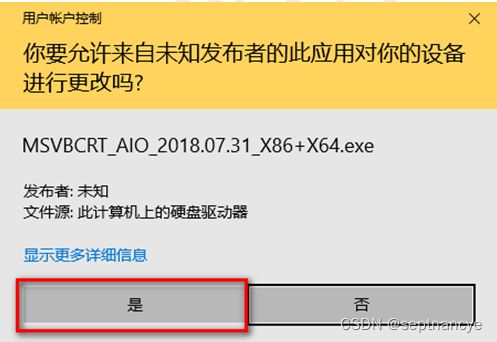

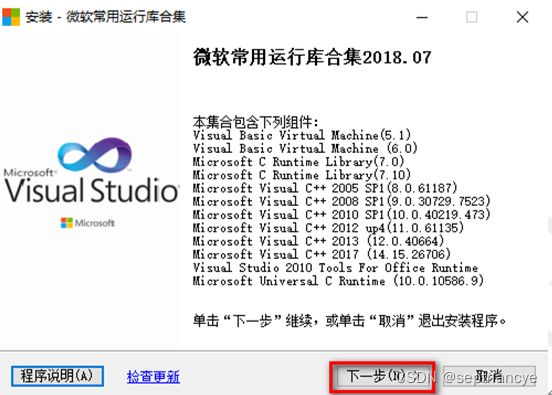

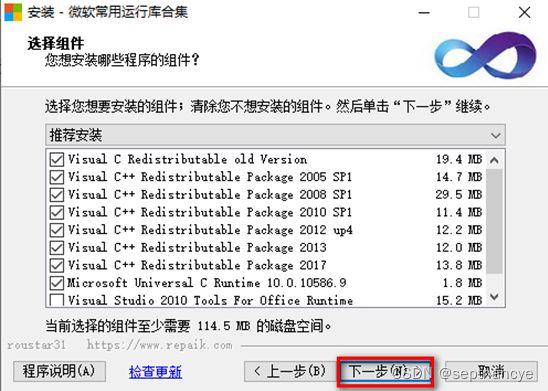

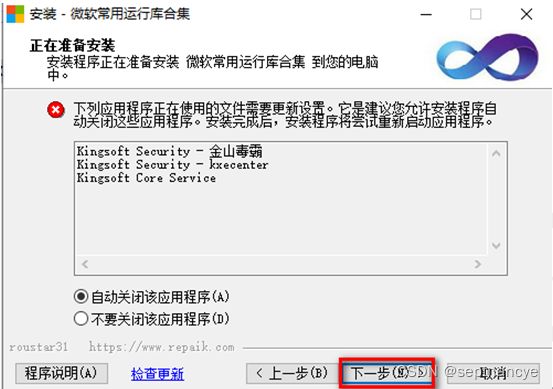

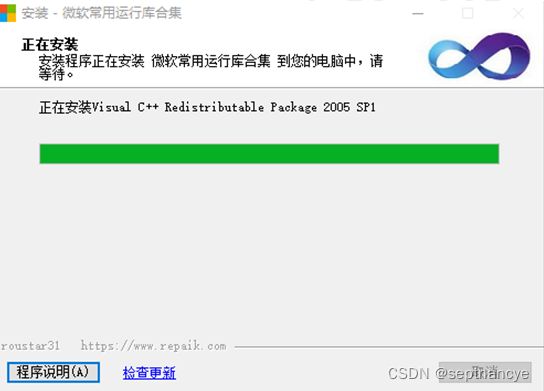
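Before moving on, it can help to confirm that JAVA_HOME is really visible to new processes. The following is a minimal sketch, not part of the original steps: the function name `check_java_home` is made up for illustration, and it only checks that the variable is set and that a Java launcher exists under its `bin` folder.

```python
import os
from pathlib import Path


def check_java_home(env=None):
    """Return a list of problems found with JAVA_HOME (an empty list means OK)."""
    env = os.environ if env is None else env
    java_home = env.get("JAVA_HOME")
    if not java_home:
        return ["JAVA_HOME is not set"]
    home = Path(java_home)
    if not home.is_dir():
        return [f"JAVA_HOME does not point to a directory: {java_home}"]
    # Accept both the Windows launcher name and the Unix one.
    if not any((home / "bin" / name).is_file() for name in ("java.exe", "java")):
        return [f"no java launcher found under {home / 'bin'}"]
    return []
```

Run `print(check_java_home())` from a freshly opened terminal; a terminal that was already open before you edited the environment variables will not see the new value.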

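Prerequisite 2: C++ runtime dependencies

Installing Visual C++ 2005 is fairly slow, so be patient!

Extraction and environment variables

Open the archive tool as administrator: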

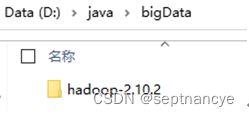

Extract hadoop into the D:/java/bigData/ directory:

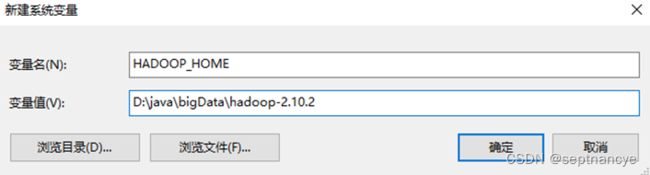

向环境变量中配置HADOOP_HOME:

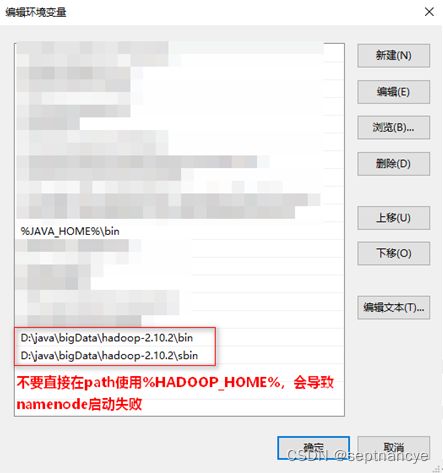

向path中配置:

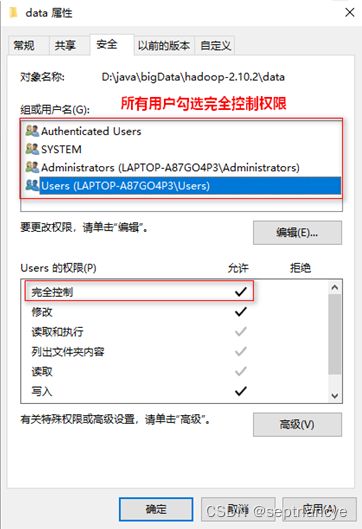

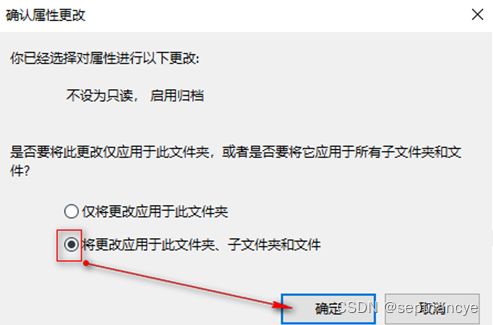
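To double-check the two environment settings above, here is a small sketch. It assumes the Path entries are the `bin` and `sbin` folders under HADOOP_HOME, which is the usual choice but is not spelled out in the text, and the helper name `missing_path_entries` is hypothetical.

```python
import os


def missing_path_entries(env=None, subdirs=("bin", "sbin")):
    """Return the HADOOP_HOME subdirectories that are not listed in PATH.

    Assumption: the entries expected in PATH are HADOOP_HOME/bin and
    HADOOP_HOME/sbin; pass a different `subdirs` tuple if yours differ.
    """
    env = os.environ if env is None else env
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        raise ValueError("HADOOP_HOME is not set")

    def norm(p):
        # Normalise case and separators so equivalent spellings compare equal.
        return os.path.normcase(os.path.normpath(p))

    entries = {norm(p) for p in env.get("PATH", "").split(os.pathsep) if p}
    return [s for s in subdirs if norm(os.path.join(hadoop_home, s)) not in entries]
```

An empty list from `missing_path_entries()` means both folders were found in Path.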

Creating directories & granting permissions

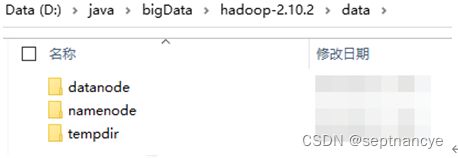

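In the hadoop directory, create a data directory, then create three directories inside it (tempdir, namenode and datanode, the names used in the configuration files in this article):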

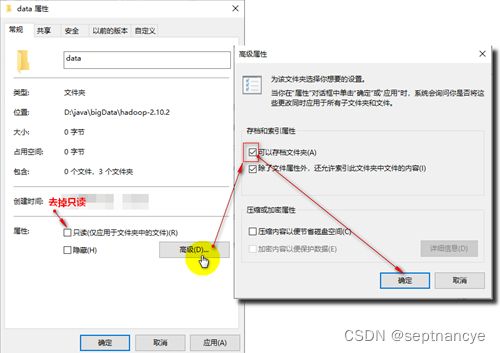

The data directory must be granted full permissions. Otherwise formatting will succeed, but starting the namenode will fail.
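If you would rather script the folder creation than click through Explorer, something like the sketch below should do. The folder names are taken from the paths in the configuration files of this article, and `create_data_dirs` is a hypothetical helper name. It only creates the folders; permissions still have to be granted separately.

```python
from pathlib import Path

# Assumption: these names match the paths used in core-site.xml and hdfs-site.xml.
SUBDIRS = ("tempdir", "namenode", "datanode")


def create_data_dirs(hadoop_home):
    """Create <hadoop_home>/data and its three subdirectories; return their paths."""
    data = Path(hadoop_home) / "data"
    created = []
    for name in SUBDIRS:
        target = data / name
        target.mkdir(parents=True, exist_ok=True)  # safe to run more than once
        created.append(target)
    return created
```

For the layout used here the call would be `create_data_dirs("D:/java/bigData/hadoop-2.10.2")`.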

Single-node configuration

Before starting Hadoop, we need to give it a single-node configuration:

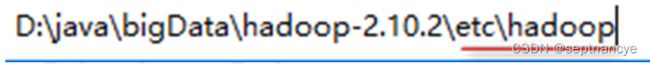

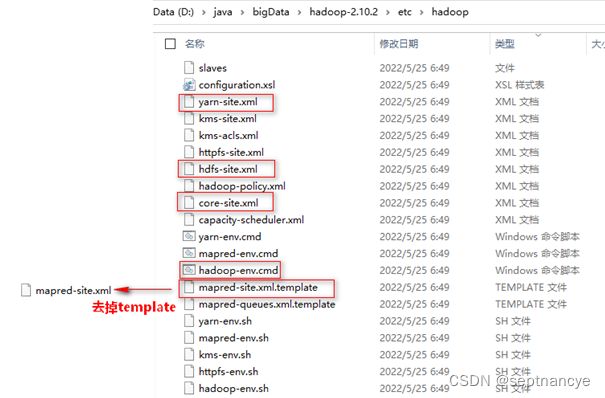

Go into the etc/hadoop directory

Edit the 5 configuration files one by one:

1.

2. core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:///D:/java/bigData/hadoop-2.10.2/data/tempdir</value>

<!-- This is the location of the tempdir folder you created. Be sure to use / and not \ -->

</property>

</configuration>
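Since the values above must use / rather than \, here is a small sketch that turns a path copied from Explorer into the `file:///` form. The helper name `to_file_uri` is made up for illustration, and it does no escaping of spaces or other special characters.

```python
from pathlib import PureWindowsPath


def to_file_uri(windows_path):
    """Convert an absolute Windows path into a file:/// URI with forward slashes."""
    path = PureWindowsPath(windows_path)
    if not path.is_absolute():
        raise ValueError(f"expected an absolute path such as D:\\dir, got {windows_path!r}")
    # as_posix() rewrites every backslash as a forward slash.
    return "file:///" + path.as_posix()
```

For example, `to_file_uri(r"D:\java\bigData\hadoop-2.10.2\data\tempdir")` gives `file:///D:/java/bigData/hadoop-2.10.2/data/tempdir`, which is the value used in core-site.xml.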

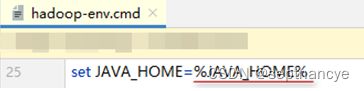

Check that the value here is %JAVA_HOME%

3. hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///D:/java/bigData/hadoop-2.10.2/data/namenode</value>

<!-- This is the location of the namenode folder you created. Be sure to use / and not \ -->

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///D:/java/bigData/hadoop-2.10.2/data/datanode</value>

<!-- This is the location of the datanode folder you created. Be sure to use / and not \ -->

</property>

</configuration>
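A hand-edited site file can easily end up with a `<value>` tag that is never closed, and Hadoop will then fail to read it. As a rough sanity check (a sketch, not an official Hadoop tool; `read_properties` is a hypothetical name), you can parse the file with Python's standard library and print what it contains:

```python
import xml.etree.ElementTree as ET


def read_properties(xml_text):
    """Parse a Hadoop *-site.xml document and return {name: value}.

    Raises xml.etree.ElementTree.ParseError if the XML is not well formed,
    for example when a <value> tag is never closed.
    """
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        value = (prop.findtext("value") or "").strip()
        if name:
            props[name] = value
    return props
```

Read the file with `Path("hdfs-site.xml").read_text(encoding="utf-8")` and pass the text in. For the hdfs-site.xml above you would expect the three keys `dfs.replication`, `dfs.namenode.name.dir` and `dfs.datanode.data.dir`.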

4. mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

5. yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>

Winutils

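Open winutils, find hadoop-2.9.2, and copy everything in its bin directory into hadoop's bin directory, overwriting the existing files. This turns the Linux build into a Windows build.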

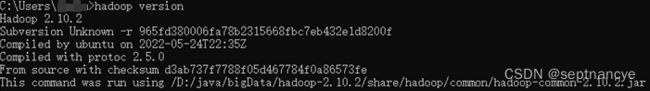

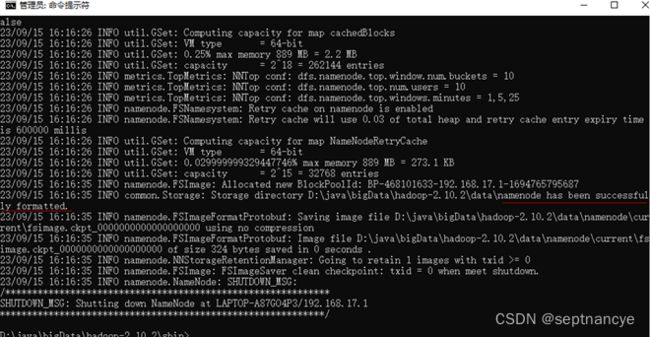
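To confirm the copy worked, you could check that the native files landed in hadoop's bin folder. This sketch assumes the two files that matter are `winutils.exe` and `hadoop.dll`; the list and the helper name `missing_native_files` are illustrative, so adjust them to what your winutils bundle actually contains.

```python
from pathlib import Path

# Assumption: these are the native files the winutils bundle is expected to provide.
REQUIRED = ("winutils.exe", "hadoop.dll")


def missing_native_files(bin_dir):
    """Return the required native files that are absent from bin_dir."""
    # Compare lower-cased names because Windows file names are case-insensitive.
    present = {p.name.lower() for p in Path(bin_dir).iterdir() if p.is_file()}
    return [name for name in REQUIRED if name not in present]
```

An empty list from `missing_native_files("D:/java/bigData/hadoop-2.10.2/bin")` means both files are in place.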

First format & start

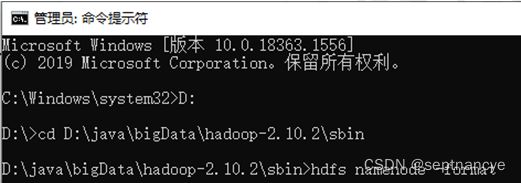

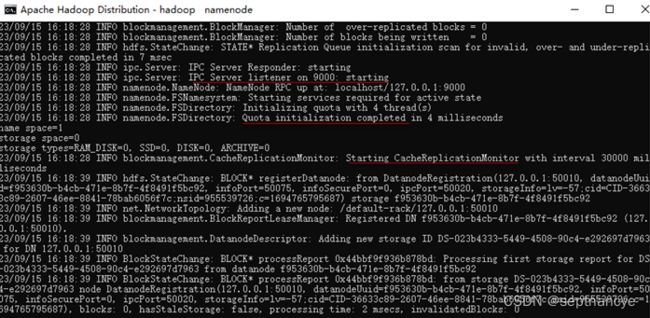

The first time you run hadoop, the namenode has to be formatted.

Open a command prompt as administrator, switch to the sbin directory, and run:

hdfs namenode -format

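If you see the message below, the first format succeeded. From now on, as long as you do not change the configuration, there is no need to format again.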

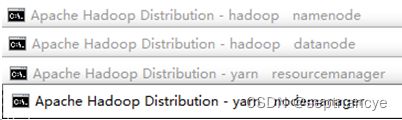

Next, still in the sbin directory, run:

start-all.cmd

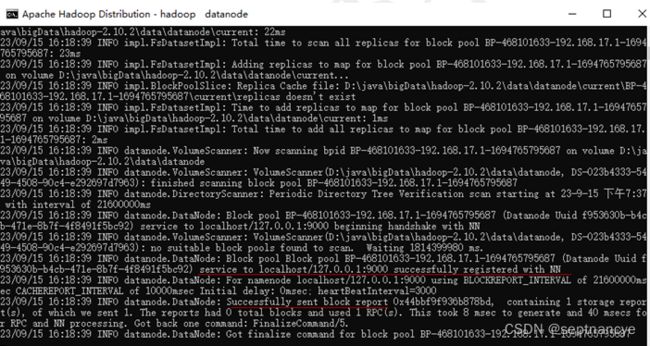

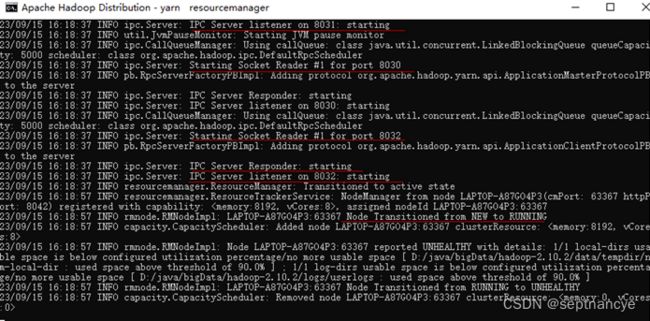

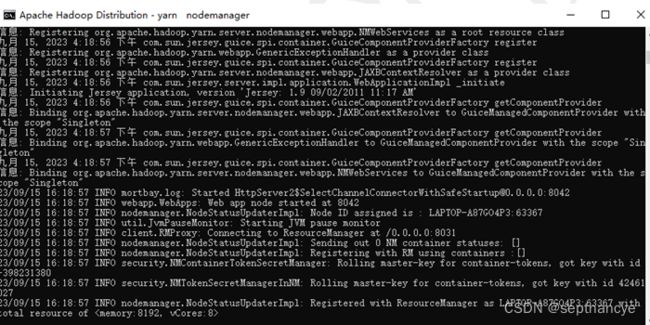

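As long as none of the four services (namenode, datanode, resourcemanager and nodemanager) reports an error, Hadoop is running normally.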
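Besides watching the service windows, you can list the running Java processes with the JDK's `jps` tool and see whether all four daemons are there. The sketch below only parses text that `jps` has already printed; the helper name `missing_daemons` is hypothetical.

```python
EXPECTED = ("NameNode", "DataNode", "ResourceManager", "NodeManager")


def missing_daemons(jps_output):
    """Return the expected Hadoop daemons that do not appear in `jps` output."""
    running = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:  # each line looks like "<pid> <main class>"
            running.add(parts[1])
    return [name for name in EXPECTED if name not in running]
```

You can feed it live output with `subprocess.run(["jps"], capture_output=True, text=True).stdout`. An empty list means all four daemons were found.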

Open the following address in a browser to view the status of the Hadoop server:

http://localhost:50070/

If the page loads, the setup succeeded and Hadoop is ready to use!
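If the page does not load, a quick way to tell "nothing is listening" apart from a browser problem is to try a plain TCP connection to the port. This is a minimal sketch; the function name `port_open` is made up, and the defaults simply mirror the address above.

```python
import socket


def port_open(host="localhost", port=50070, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and name resolution failures.
        return False
```

If `port_open()` returns False right after start-all.cmd, give the namenode a few seconds to come up and try again before looking for errors in its window.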