Two Ways to Integrate Spark Streaming with Kafka

Spark Streaming can be integrated with Kafka in two ways: the Receiver-based approach and the Direct approach.

I. Kafka preparation

1. Start ZooKeeper on each node

./zkServer.sh start

2. Start Kafka on each node

./kafka-server-start.sh -daemon ../config/server.properties # start in the background

3. Create the topic

./kafka-topics.sh --create --zookeeper hadoop:2181 --replication-factor 1 --partitions 1 --topic kafka-streaming_topic

4. Use the console scripts to verify that messages can be produced to and consumed from the topic

Start the producer script:

./kafka-console-producer.sh --broker-list hadoop:9092 --topic kafka-streaming_topic

Start the consumer script:

./kafka-console-consumer.sh --zookeeper hadoop:2181 --topic kafka-streaming_topic --from-beginning

The preparation is now complete.

II. Receiver-based integration

1 Add the Kafka dependency

<!-- Kafka dependency -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-8_2.11</artifactId>

<version>2.2.0</version>

</dependency>

2 Write the code locally

package com.kinglone.streaming

import org.apache.spark.SparkConf

import org.apache.spark.streaming.kafka.KafkaUtils

import org.apache.spark.streaming.{Seconds, StreamingContext}

object KafkaReceiverWordCount {

def main(args: Array[String]): Unit = {

if (args.length != 4) {

System.err.println("Usage: KafkaReceiverWordCount <zkQuorum> <group> <topics> <numThreads>")

System.exit(1)

}

val Array(zkQuorum, group, topics, numThreads) = args

val sparkConf = new SparkConf()//.setAppName("KafkaReceiverWordCount").setMaster("local[2]")

val ssc = new StreamingContext(sparkConf, Seconds(5))

val topicMap = topics.split(",").map((_, numThreads.toInt)).toMap

/**
 * @param ssc          StreamingContext object
 * @param zkQuorum     Zookeeper quorum (hostname:port,hostname:port,..)
 * @param groupId      The group id for this consumer; you can set it to any name you like
 * @param topics       Map of (topic_name to numPartitions) to consume. Each partition is consumed
 *                     in its own thread
 * @param storageLevel Storage level to use for storing the received objects
 *                     (default: StorageLevel.MEMORY_AND_DISK_SER_2)
 */

val messages = KafkaUtils.createStream(ssc, zkQuorum, group,topicMap)

messages.map(_._2).flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).print()

ssc.start()

ssc.awaitTermination()

}

}
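To make the two key expressions in the program above easier to follow, here is a minimal sketch that reproduces them with plain Scala collections, without Spark or Kafka. The object name `WordCountSketch` and the sample data are made up for illustration; `groupBy` stands in for `reduceByKey`, which only exists on Spark's pair DStreams and RDDs.

```scala
object WordCountSketch {
  // Same expression as in the job: every topic in the comma-separated list
  // is mapped to the same number of consumer threads.
  def topicMap(topics: String, numThreads: String): Map[String, Int] =
    topics.split(",").map((_, numThreads.toInt)).toMap

  // What map(_._2).flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _)
  // computes for one batch of (key, message) pairs.
  def countWords(batch: Seq[(String, String)]): Map[String, Int] =
    batch
      .map(_._2)                    // keep only the message value
      .flatMap(_.split(" "))        // split each message into words
      .groupBy(identity)            // plain-collections stand-in for reduceByKey
      .map { case (word, occurrences) => word -> occurrences.size }

  def main(args: Array[String]): Unit = {
    println(topicMap("kafka-streaming_topic", "1"))
    println(countWords(Seq(("k1", "a b a"), ("k2", "b c"))))
  }
}
```

Note that in the streaming job the counts are computed per 5-second batch; nothing is accumulated across batches.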

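3 Submit to the server and run

If the production machine has no internet access, --packages cannot download the dependency; pass the Kafka jars with --jars instead.

Package the project as a jar:

mvn clean package -DskipTests

Submit in local mode with this script: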

./spark-submit --class com.kinglone.streaming.KafkaReceiverWordCount \
--master local[2] --name KafkaReceiverWordCount \
--packages org.apache.spark:spark-streaming-kafka-0-8_2.11:2.2.0 \
/opt/script/kafkaReceiverWordCount.jar \
hadoop01:2181 test kafka-streaming_topic 1

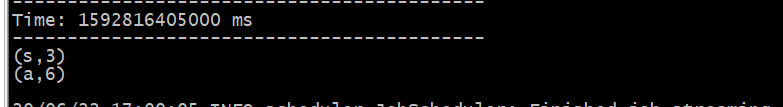

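Run result

First start the Kafka producer in the console and type some words, then start the Spark Streaming program.

III. Direct integration (recommended)

The Direct approach uses Kafka's Simple Consumer API and manages offsets itself: Kafka is treated as a data store that is read by offset. Consumer offsets are not kept in ZooKeeper. Spark tracks them itself, by default only in memory. If checkpointing is enabled, a copy is also saved in the checkpoint, so checkpointing should normally be enabled. The parallelism of the RDDs in the DStream produced by the Direct approach equals the number of partitions of the topic being read, so to raise parallelism, increase the topic's partition count.

Note:

No receiver is used; Spark queries Kafka for the offsets directly.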
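To observe the offsets Spark tracks, the RDDs of a direct stream can be cast to `HasOffsetRanges`, which exposes one `OffsetRange` per Kafka partition in the batch. The following is a minimal sketch against the spark-streaming-kafka-0-8 API; the object name `OffsetLogging` and the output format are made up for illustration.

```scala
import org.apache.spark.streaming.dstream.InputDStream
import org.apache.spark.streaming.kafka.{HasOffsetRanges, OffsetRange}

object OffsetLogging {
  // Formats one range: topic, partition, and the half-open interval [from, until).
  def describe(r: OffsetRange): String =
    s"${r.topic}-${r.partition}: [${r.fromOffset}, ${r.untilOffset}) ${r.count()} records"

  // Registers an output operation that prints the offset ranges of every batch.
  // Call this before ssc.start(), on the stream returned by createDirectStream.
  def logOffsets(messages: InputDStream[(String, String)]): Unit =
    messages.foreachRDD { rdd =>
      // The cast only works on the RDDs of the direct stream itself,
      // not after a transformation such as map or reduceByKey.
      val ranges = rdd.asInstanceOf[HasOffsetRanges].offsetRanges
      ranges.foreach(r => println(describe(r)))
    }
}
```

Each batch should print one line per topic partition, which matches the one-to-one mapping between Kafka partitions and RDD partitions described above.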

1 Add the Kafka dependency

<!-- Kafka dependency -->

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-8_2.11</artifactId>

<version>2.2.0</version>

</dependency>

2 Write the code

package com.kinglone.streaming

import org.apache.spark.SparkConf

import org.apache.spark.streaming.kafka.KafkaUtils

import org.apache.spark.streaming.{Seconds, StreamingContext}

import _root_.kafka.serializer.StringDecoder

object KafkaDirectWordCount {

def main(args: Array[String]): Unit = {

if(args.length != 2) {

System.err.println("Usage: KafkaDirectWordCount <brokers> <topics>")

System.exit(1)

}

val Array(brokers, topics) = args

val sparkConf = new SparkConf() //.setAppName("KafkaDirectWordCount").setMaster("local[2]")

val ssc = new StreamingContext(sparkConf, Seconds(5))

val topicsSet = topics.split(",").toSet

val kafkaParams = Map[String,String]("metadata.broker.list"-> brokers)

val messages = KafkaUtils.createDirectStream[String,String,StringDecoder,StringDecoder](

ssc,kafkaParams,topicsSet

)

messages.map(_._2).flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).print()

ssc.start()

ssc.awaitTermination()

}

}

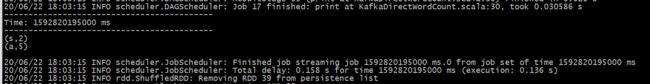
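The `createDirectStream` call above lets Spark choose the starting offsets. The 0-8 integration also has an overload that takes explicit starting offsets per partition, which is the building block for managing offsets yourself. Below is a minimal sketch; the object name `DirectStreamFromOffsets` and the `saved` map (offsets the application is assumed to have stored somewhere of its own choosing) are hypothetical.

```scala
import kafka.common.TopicAndPartition
import kafka.message.MessageAndMetadata
import kafka.serializer.StringDecoder
import org.apache.spark.streaming.StreamingContext
import org.apache.spark.streaming.dstream.InputDStream
import org.apache.spark.streaming.kafka.KafkaUtils

object DirectStreamFromOffsets {
  // Converts (topic, partition) -> offset pairs into the key type Kafka expects.
  def toFromOffsets(saved: Map[(String, Int), Long]): Map[TopicAndPartition, Long] =
    saved.map { case ((topic, partition), offset) =>
      TopicAndPartition(topic, partition) -> offset
    }

  // Creates a direct stream that starts reading at the given offsets.
  // `saved` is hypothetical: it stands for offsets loaded from the application's own store.
  def streamFrom(ssc: StreamingContext,
                 brokers: String,
                 saved: Map[(String, Int), Long]): InputDStream[(String, String)] = {
    val kafkaParams = Map[String, String]("metadata.broker.list" -> brokers)
    // Turns each Kafka record into the same (key, message) pair the simpler overload yields.
    val messageHandler =
      (mmd: MessageAndMetadata[String, String]) => (mmd.key, mmd.message)
    KafkaUtils.createDirectStream[String, String, StringDecoder, StringDecoder, (String, String)](
      ssc, kafkaParams, toFromOffsets(saved), messageHandler)
  }
}
```

The starting offset is inclusive, so the value to store after a batch is the `untilOffset` of the last range processed. Those ranges can be read from the stream's RDDs, which implement `HasOffsetRanges`.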

3 Submit to the server

./spark-submit --class com.kinglone.streaming.KafkaDirectWordCount \
--master local[2] --name KafkaDirectWordCount \
--packages org.apache.spark:spark-streaming-kafka-0-8_2.11:2.2.0 \
/opt/script/kafkaDirectWordCount.jar hadoop01:9092 kafka-streaming_topic

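4 Summary

Note the differences between the two approaches; the Receiver-based approach is all but obsolete. There are two directions for extending this example: 1) make the program highly available, so that after a crash it can recover from its last state; 2) manage offsets manually, so that the job can resume from its last position even after the business logic has changed.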
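One possible way to approach point 1 is Spark's checkpoint-based recovery through `StreamingContext.getOrCreate`. The sketch below adapts the Direct word count under a few assumptions: the object name `KafkaDirectRecoverable` and the third argument `checkpointDir` are hypothetical, and the directory should live on fault-tolerant storage such as HDFS.

```scala
import kafka.serializer.StringDecoder
import org.apache.spark.SparkConf
import org.apache.spark.streaming.kafka.KafkaUtils
import org.apache.spark.streaming.{Duration, Seconds, StreamingContext}

object KafkaDirectRecoverable {
  val batchInterval: Duration = Seconds(5)

  // Everything that defines the job is set up inside this function,
  // because it only runs when no usable checkpoint exists yet.
  def createContext(brokers: String, topics: String, checkpointDir: String): StreamingContext = {
    val ssc = new StreamingContext(new SparkConf(), batchInterval)
    ssc.checkpoint(checkpointDir) // offsets of the direct stream are saved here as well
    val kafkaParams = Map[String, String]("metadata.broker.list" -> brokers)
    val messages = KafkaUtils.createDirectStream[String, String, StringDecoder, StringDecoder](
      ssc, kafkaParams, topics.split(",").toSet)
    messages.map(_._2).flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _).print()
    ssc
  }

  def main(args: Array[String]): Unit = {
    if (args.length != 3) {
      System.err.println("Usage: KafkaDirectRecoverable <brokers> <topics> <checkpointDir>")
      System.exit(1)
    }
    val Array(brokers, topics, checkpointDir) = args
    // Restores the context from the checkpoint if one exists, otherwise builds a new one.
    val ssc = StreamingContext.getOrCreate(
      checkpointDir, () => createContext(brokers, topics, checkpointDir))
    ssc.start()
    ssc.awaitTermination()
  }
}
```

A checkpoint written by one version of the compiled application generally cannot be restored after the code changes, which is why point 2, storing offsets yourself, is the usual complement to this technique.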