Docker commands for running various containers

1. Running Elasticsearch with Docker:

docker run -d --name elasticsearch \

-p 9200:9200 -p 9300:9300 \

-e "discovery.type=single-node" \

-e ES_JAVA_OPTS="-Xms64m -Xmx128m" \

elasticsearch:tag

Note: ES_JAVA_OPTS="-Xms64m -Xmx128m" sets the JVM heap to an initial size of 64 MB and a maximum of 128 MB.

2. Running MySQL with Docker:

docker run -p 3306:3306 --name mysql \

-v /mydata/mysql/log:/var/log/mysql \

-v /mydata/mysql/data:/var/lib/mysql \

-v /mydata/mysql/conf:/etc/mysql \

-e MYSQL_ROOT_PASSWORD=root \

-d mysql:5.7

3. Running Portainer with Docker:

# Search for the image

docker search portainer/portainer

# Pull the image

docker pull portainer/portainer

# Run the image

docker run -d -p 9000:9000 \

--restart=always \

--privileged=true \

-v /root/portainer:/data \

-v /var/run/docker.sock:/var/run/docker.sock \

--name dev-portainer portainer/portainer

Note: --privileged=true grants the container extended privileges so that it is permitted to access the mounted /var/run/docker.sock.

4. Committing a container as an image:

docker commit -m="commit message" -a="author" CONTAINER_ID TARGET_IMAGE[:TAG]

Example:

docker commit -a="wph" -m="add webapps app" f9baeebacd81 tomcat:wph-1.0

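5. Anonymous and named volume mounts with nginx (named volumes are the recommended choice):

Anonymous: -v gives only the container path, so the volume has no name on the Linux host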

docker run -d -P --name nginx01 -v /etc/nginx nginx

Named: -v juming-nginx:/etc/nginx gives the volume a name on the Linux host

docker run -d -P --name nginx02 -v juming-nginx:/etc/nginx nginx

Result (the first row is the anonymous volume, the second is the named one):

[root@iZwz9jbs6miken6je76quyZ data]# docker volume ls

DRIVER VOLUME NAME

local fa546143f3117bfe1f24a4b8d8d655dbabdb0dbfcdb66bfa6f77a53d147ae765

local juming-nginx

Inspect the volume:

[root@iZwz9jbs6miken6je76quyZ data]# docker inspect juming-nginx

[

{

"CreatedAt": "2020-11-17T22:56:23+08:00",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/juming-nginx/_data",

"Name": "juming-nginx",

"Options": null,

"Scope": "local"

}

]

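Conclusions: 1. Every volume mounted without a host directory lives under /var/lib/docker/volumes/;

2. Docker's working directory is /var/lib/docker/;

3. Mounting a specific host path is written as -v host-directory:container-directory;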
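The three forms of -v seen so far differ only in the shape of the argument. A minimal sketch that tells them apart, assuming Linux-style paths where a host directory always starts with "/" (classify_volume is a hypothetical helper, not a Docker command):

```shell
# Classify the argument of a -v flag by its shape.
# Assumption: host directories are absolute paths starting with "/".
classify_volume() {
  case "$1" in
    /*:/*) echo "bind mount" ;;        # /host/dir:/container/dir
    /*)    echo "anonymous volume" ;;  # /container/dir only
    *:/*)  echo "named volume" ;;      # name:/container/dir
    *)     echo "unknown" ;;
  esac
}

classify_volume /etc/nginx
classify_volume juming-nginx:/etc/nginx
classify_volume /mydata/mysql/conf:/etc/mysql
```

The three calls print "anonymous volume", "named volume" and "bind mount", matching the nginx01, nginx02 and mysql examples in these notes.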

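Additional notes:

Append :ro or :rw to the container path in -v to set the access mode

ro: read-only

rw: read-write (default)

Once a mode is set, it restricts what the container can do with the mounted content. With ro, the files can only be changed from the host,

not from inside the container.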

docker run -d -P --name nginx03 -v juming-nginx:/etc/nginx:ro nginx

docker run -d -P --name nginx04 -v juming-nginx:/etc/nginx:rw nginx
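As a small illustration of the default, the sketch below reads the access mode out of a -v argument. It is a simplification that only recognises a trailing :ro and treats everything else as rw; mount_mode is a hypothetical helper name.

```shell
# Print the access mode encoded in a -v argument.
# Assumption: only a trailing ":ro" is recognised; anything else counts as rw.
mount_mode() {
  case "$1" in
    *:ro) echo ro ;;
    *)    echo rw ;;   # no suffix, or an explicit :rw
  esac
}

mount_mode juming-nginx:/etc/nginx:ro
mount_mode juming-nginx:/etc/nginx:rw
mount_mode juming-nginx:/etc/nginx
```

On a running container, the effective mode can be checked with docker inspect -f '{{ json .Mounts }}' CONTAINER, where each mount carries an RW field.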

6. Dockerfile:

FROM centos

# Declare the volumes to mount when the image is built

VOLUME ["volume01","volume02"]

CMD echo "------end------"

CMD /bin/bash

Build the image:

[root@iZwz9jbs6miken6je76quyZ docker-test-volume]# docker build -f dockerFile -t wph/centos .

Sending build context to Docker daemon 2.048kB

Step 1/4 : FROM centos

---> 0d120b6ccaa8

Step 2/4 : VOLUME ["volume01","volume02"]

---> Running in 7e8b7085eea1

Removing intermediate container 7e8b7085eea1

---> c18cb9be4ee9

Step 3/4 : CMD echo "------end------"

---> Running in 57c5d9d73911

Removing intermediate container 57c5d9d73911

---> d2c06d717acd

Step 4/4 : CMD /bin/bash

---> Running in 1f26e93db034

Removing intermediate container 1f26e93db034

---> bb903c109d3f

Successfully built bb903c109d3f

Successfully tagged wph/centos:latest

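Common instructions:

Notes:

CMD: the command to run when the container starts; only the last CMD takes effect, and arguments passed to docker run replace it.

ENTRYPOINT: the command to run when the container starts; arguments passed to docker run are appended to it.

ONBUILD: a trigger instruction; it runs when another Dockerfile that inherits from this image is built.

COPY: similar to ADD; copies our files into the image.

ENV: sets environment variables at build time; they also persist in containers started from the image.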
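The difference between CMD and ENTRYPOINT is easiest to see by modelling how the final startup command is put together. The sketch below is a simplified model, not Docker's implementation, and it assumes the exec form of both instructions. effective_command is a hypothetical helper.

```shell
# Simplified model of how the startup command is assembled.
#   $1 = ENTRYPOINT (may be empty), $2 = CMD (may be empty),
#   remaining arguments = whatever follows the image name in `docker run`.
effective_command() (
  entrypoint=$1
  cmd=$2
  shift 2
  # Arguments given to `docker run` replace CMD entirely.
  if [ "$#" -gt 0 ]; then
    cmd="$*"
  fi
  # ENTRYPOINT, when set, always stays in front of whatever CMD ended up being.
  if [ -n "$entrypoint" ] && [ -n "$cmd" ]; then
    printf '%s\n' "$entrypoint $cmd"
  else
    printf '%s\n' "$entrypoint$cmd"
  fi
)

effective_command ""      "ls -a"       # CMD only, no run arguments
effective_command ""      "ls -a" -l    # the run argument replaces CMD
effective_command "ls -a" ""      -l    # the run argument is appended to ENTRYPOINT
```

The three calls print "ls -a", "-l" and "ls -a -l". The second line is why passing -l to an image that only defines CMD fails: -l replaces the command and is not executable on its own.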

Hands-on examples:

1) Build a custom CentOS image that includes vim

FROM centos

MAINTAINER wupeihong<[email protected]>

ENV MYPATH /usr/local

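# Set the working directory

WORKDIR $MYPATH

# Install the software we need

RUN yum -y install vim

RUN yum -y install net-tools

# Expose ports

EXPOSE 80 3306

# Default commands; only the last CMD below takes effect

CMD echo $MYPATH

CMD echo "------end------"

# Start a shell when the container runs

CMD /bin/bash

# View the image's build history: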

docker history mycentos:0.1

2) Build a Tomcat image

Name the build file Dockerfile so that -f is not needed to specify it.

FROM centos:centos7

MAINTAINER wupeihong<[email protected]>

COPY readme.txt /usr/local/readme.txt

# ADD extracts the archive automatically

ADD jdk-8u271-linux-x64.tar.gz /usr/local

ADD apache-tomcat-9.0.39.tar.gz /usr/local

RUN yum -y install vim

ENV MYPATH /usr/local

WORKDIR $MYPATH

ENV JAVA_HOME /usr/local/jdk1.8.0_271

ENV CLASSPATH $JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

ENV CATALINA_HOME /usr/local/apache-tomcat-9.0.39

ENV CATALINA_BASE /usr/local/apache-tomcat-9.0.39

ENV PATH $PATH:$JAVA_HOME/bin:$CATALINA_HOME/lib:$CATALINA_HOME/bin

EXPOSE 8080

CMD /usr/local/apache-tomcat-9.0.39/bin/startup.sh && tail -F /usr/local/apache-tomcat-9.0.39/logs/catalina.out

Then build the image:

docker build -t diytomcat .

Because the file is named Dockerfile, -f is not needed to specify the build file.

Start Tomcat:

docker run -d -p 8081:8080 --name diytomcat \

-v /home/mydata/build/tomcat/test:/usr/local/apache-tomcat-9.0.39/webapps/test \

-v /home/mydata/build/tomcat/tomcatlogs/:/usr/local/apache-tomcat-9.0.39/logs \

diytomcat

<web-app xmlns="http://java.sun.com/xml/ns/javaee"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://java.sun.com/xml/ns/javaee

http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd"

version="2.5">

</web-app>

<%@ page language="java" contentType="text/html; charset=UTF-8"

pageEncoding="UTF-8"%>

<%@ page import="java.io.*,java.util.*" %>

<html>

<head>

<title>Page Redirect</title>

</head>

<body>

<h1>Page Redirect</h1>

<%

String site = new String("http://www.runoob.com");

response.setStatus(response.SC_MOVED_TEMPORARILY);

response.setHeader("Location", site);

%>

</body>

</html>

7. Docker data volume containers:

Main purpose: sharing data between containers

docker run -it --name docker02 --volumes-from docker01 wph/centos

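Here docker01 is the parent container and docker02 is the child. Despite the parent/child naming, the data is shared in both directions,

so every container in this relationship sees the same data.

As long as one container still uses the data, it is not lost: if docker01 is removed, docker02's data is still there. This is because both containers mount the same volumes, which live on the host and not inside docker01 itself.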

Common use: sharing data among several MySQL instances.

docker run -d -p 3310:3306 -v /etc/mysql/conf.d -v /var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=root --name mysql01 mysql:5.7

docker run -d -p 3311:3306 -v /etc/mysql/conf.d -v /var/lib/mysql \

-e MYSQL_ROOT_PASSWORD=root --name mysql02 \

--volumes-from mysql01 \

mysql:5.7

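Conclusion:

Volume containers are also used to pass configuration between containers. A data volume stays alive until no container uses it any more. Once the data has been persisted to a host directory, however, that local data is not deleted.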

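8. Miscellaneous Docker commands:

docker rm $(docker ps -qa) ------ remove all containers; the same $(...) pattern also works for images, as in the next line

docker rmi -f $(docker images -aq) ------ remove all images

docker stats ------ show each container's resource usage, including memory

docker volume ------ manage volumes; the help text (docker volume --help) is self-explanatory

docker COMMAND --help ------ show how to use a command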
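One thing to watch with docker rm $(docker ps -qa): when there are no containers, the substitution is empty and docker rm complains about missing arguments. A minimal guarded sketch (remove_all_containers is a hypothetical helper name; it only defines the function and runs nothing by itself):

```shell
# Remove every container, but only call `docker rm` when there is something to remove.
remove_all_containers() {
  ids=$(docker ps -qa)
  if [ -z "$ids" ]; then
    echo "no containers to remove"
    return 0
  fi
  # Word splitting of $ids is intentional: one argument per container ID.
  docker rm $ids
}
```

Call it as remove_all_containers. Running containers are not removed by plain docker rm; adding -f to the last line would force that, which is a deliberate choice left to the reader.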

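Retag an image and push it to a Docker registry (the original image still exists):

docker tag 6808f8b4ed91 wph1720637550/tomcat:1.0

docker push wph1720637550/tomcat:1.0

The part of wph1720637550/tomcat:1.0 before the slash must match your Docker account name.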

9. Pushing an image to Docker Hub:

docker tag 6808f8b4ed91 wph1720637550/tomcat:1.0

docker push wph1720637550/tomcat:1.0

The part of wph1720637550/tomcat:1.0 before the slash must match your Docker Hub account name.
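The naming rule can be checked before pushing. A minimal sketch, assuming a plain Docker Hub reference of the form account/repository:tag with no registry host in front (ref_matches_account is a hypothetical helper):

```shell
# Succeed when the namespace of an image reference equals the account name.
#   $1 = image reference, $2 = Docker Hub account name
ref_matches_account() {
  case "$1" in
    "$2"/*) return 0 ;;
    *)      return 1 ;;
  esac
}

if ref_matches_account wph1720637550/tomcat:1.0 wph1720637550; then
  echo "ok to push"
else
  echo "namespace does not match the account"
fi
```

With the arguments shown, the check succeeds and prints "ok to push". A reference tagged under a different namespace would take the else branch.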

10. Pushing to Alibaba Cloud:

See the Alibaba Cloud console for the exact commands.

11. When a Docker image cannot be deleted:

cd /var/lib/docker/image/overlay2/imagedb/content/sha256

[root@iZwz9jbs6miken6je76quyZ sha256]# ls

ac34e13f83a8817c7d1bc279ffb5c3df76440eeec7f9403ff5cc2da91dc33ccc

[root@iZwz9jbs6miken6je76quyZ sha256]# rm -f ac34e13f83a8817c7d1bc279ffb5c3df76440eeec7f9403ff5cc2da91dc33ccc

[root@iZwz9jbs6miken6je76quyZ sha256]# ls

[root@iZwz9jbs6miken6je76quyZ sha256]# systemctl restart docker

[root@iZwz9jbs6miken6je76quyZ sha256]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@iZwz9jbs6miken6je76quyZ sha256]# pwd

/var/lib/docker/image/overlay2/imagedb/content/sha256

Summary: deleting the image's sha256 file from this directory and restarting Docker removes the image.

12. Docker networking:

docker exec -it tomcat01 ip addr ------ show the container's IP addresses

Example:

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat01 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

116: eth0@if117: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

Different containers can ping each other:

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat02 ping 172.17.0.2

PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.

64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.059 ms

64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.049 ms

64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.044 ms

64 bytes from 172.17.0.2: icmp_seq=4 ttl=64 time=0.042 ms

64 bytes from 172.17.0.2: icmp_seq=5 ttl=64 time=0.041 ms

64 bytes from 172.17.0.2: icmp_seq=6 ttl=64 time=0.046 ms

64 bytes from 172.17.0.2: icmp_seq=7 ttl=64 time=0.047 ms

64 bytes from 172.17.0.2: icmp_seq=8 ttl=64 time=0.043 ms

64 bytes from 172.17.0.2: icmp_seq=9 ttl=64 time=0.046 ms

64 bytes from 172.17.0.2: icmp_seq=10 ttl=64 time=0.047 ms

64 bytes from 172.17.0.2: icmp_seq=11 ttl=64 time=0.047 ms

64 bytes from 172.17.0.2: icmp_seq=12 ttl=64 time=0.044 ms

64 bytes from 172.17.0.2: icmp_seq=13 ttl=64 time=0.043 ms

64 bytes from 172.17.0.2: icmp_seq=14 ttl=64 time=0.053 ms

^Z64 bytes from 172.17.0.2: icmp_seq=15 ttl=64 time=0.043 ms

64 bytes from 172.17.0.2: icmp_seq=16 ttl=64 time=0.046 ms

64 bytes from 172.17.0.2: icmp_seq=17 ttl=64 time=0.047 ms

^Z64 bytes from 172.17.0.2: icmp_seq=18 ttl=64 time=0.045 ms

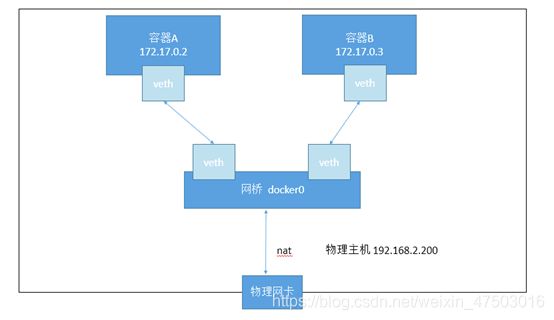

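Host / Docker / container network diagram:

All network interfaces in Docker are virtual.

When a container is removed, its veth pair is removed with it.

--link (no longer recommended): connects two containers so that the linking container can ping the other by name, but the reverse direction does not work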

[root@iZwz9jbs6miken6je76quyZ /]# docker run -d -P --name tomcat04 --link tomcat02 tomcat

7d7b04a38c1b5085ceebe89bc5fa681702e6a649ca0e3eea03d74704b8b09a46

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat04 ping tomcat02

PING tomcat02 (172.17.0.3) 56(84) bytes of data.

64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.069 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.042 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.047 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=4 ttl=64 time=0.046 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=5 ttl=64 time=0.044 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=6 ttl=64 time=0.050 ms

^Z64 bytes from tomcat02 (172.17.0.3): icmp_seq=7 ttl=64 time=0.044 ms

64 bytes from tomcat02 (172.17.0.3): icmp_seq=8 ttl=64 time=0.044 ms

^C

--- tomcat02 ping statistics ---

8 packets transmitted, 8 received, 0% packet loss, time 1006ms

rtt min/avg/max/mdev = 0.042/0.048/0.069/0.009 ms

Reverse ping ------ fails

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat02 ping tomcat04

ping: tomcat04: Name or service not known

View network information:

docker network --help

docker network inspect 32285b9014ab

[root@iZwz9jbs6miken6je76quyZ /]# docker network --help

Usage: docker network COMMAND

Manage networks

Commands:

connect Connect a container to a network

create Create a network

disconnect Disconnect a container from a network

inspect Display detailed information on one or more networks

ls List networks

prune Remove all unused networks

rm Remove one or more networks

Run 'docker network COMMAND --help' for more information on a command.

(bridge is docker0)

[root@iZwz9jbs6miken6je76quyZ /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

# bridge is docker0

32285b9014ab bridge bridge local

1c3468b46ee6 host host local

e2d8eb6e4b70 none null local

[root@iZwz9jbs6miken6je76quyZ /]# docker network inspect 32285b9014ab

[

{

"Name": "bridge",

"Id": "32285b9014aba648f63d0c15f42046fce473172146237812ab73f70aa66719d5",

"Created": "2020-11-18T17:47:52.040796507+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"470bdb833750d57ab65d8fe1cd9912da26490117195ff639c2b0875acf2c7aeb": {

"Name": "tomcat02",

"EndpointID": "62d199723e3b571ebc77d27c78bc48bad56ba4fd52c0e1016a379c5c8f7306d5",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"7d7b04a38c1b5085ceebe89bc5fa681702e6a649ca0e3eea03d74704b8b09a46": {

"Name": "tomcat04",

"EndpointID": "61bd7310616640ebe31b631bd3a60da28b123214eab9cdce29d7a7c62f63fc1b",

"MacAddress": "02:42:ac:11:00:05",

"IPv4Address": "172.17.0.5/16",

"IPv6Address": ""

},

"94673cbf6552ba5a2ded0a89e7e0116b9ea05d74a4ac44d745124d860ff3eb21": {

"Name": "tomcat03",

"EndpointID": "79deaa070419ed64130b753b7231b9e4b48e7c8716fa35b86fa3d030ce4bd3bf",

"MacAddress": "02:42:ac:11:00:04",

"IPv4Address": "172.17.0.4/16",

"IPv6Address": ""

},

"9a9bf8c9f345cb59f5d4ccf2581ce5a2abadf9a71fee62024780ca65b7ecbc31": {

"Name": "tomcat01",

"EndpointID": "ccdc06371b6ddc3eb86350a7f52c61ab7c82247c02403ce43947faa2c421c2af",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

Look at the hosts file; it reveals how --link works.

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat04 cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

# Like the hosts file on Windows: when you ping tomcat02, the name resolves directly to tomcat02's IP

172.17.0.3 tomcat02 470bdb833750

172.17.0.5 7d7b04a38c1b

Conclusion: --link simply adds one mapping to tomcat04's hosts file:

172.17.0.3 tomcat02 470bdb833750
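The lookup that makes ping tomcat02 work can be imitated with a few lines of awk. This is only a sketch of hosts-file resolution, run against a temporary copy of the entries shown above. lookup_host and hosts_file are names made up for the example.

```shell
# Print the IP address that a hosts file maps to the given name.
#   $1 = hosts file, $2 = name to resolve
lookup_host() {
  awk -v name="$2" '
    /^[ \t]*#/ { next }   # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "$1"
}

# A temporary file with the relevant entries from tomcat04's /etc/hosts.
hosts_file=$(mktemp)
cat > "$hosts_file" <<'EOF'
127.0.0.1 localhost
172.17.0.3 tomcat02 470bdb833750
172.17.0.5 7d7b04a38c1b
EOF

lookup_host "$hosts_file" tomcat02
lookup_host "$hosts_file" tomcat03
```

The first call prints 172.17.0.3; the second prints nothing because no line carries that name. The same thing happens in the reverse direction: tomcat02's hosts file has no entry for tomcat04, so the name cannot be resolved there.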

Limitation of docker0: it does not support reaching containers by name, so we need a user-defined network instead.

User-defined networks:

List all Docker networks:

[root@iZwz9jbs6miken6je76quyZ /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

32285b9014ab bridge bridge local

1c3468b46ee6 host host local

e2d8eb6e4b70 none null local

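Network modes

bridge: bridged networking (the default)

none: no networking configured

host: share the host's network

container: share another container's network (rarely used)

Test:

# A container started without --net defaults to --net bridge, which is docker0

docker run -d -P --name tomcat01 tomcat

# In other words, the command above and the command below are equivalent

docker run -d -P --name tomcat01 --net bridge tomcat

# docker0 characteristics: it is the default, container names do not resolve, and --link can make names resolvable between containers.

Create a network: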

[root@iZwz9jbs6miken6je76quyZ /]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet

e218abd6483333d6548d50a596a546c7f7efe3402b7b014a993ee8766a1bd3a5

[root@iZwz9jbs6miken6je76quyZ /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

32285b9014ab bridge bridge local

1c3468b46ee6 host host local

e218abd64833 mynet bridge local

e2d8eb6e4b70 none null local

[root@iZwz9jbs6miken6je76quyZ /]#

# Our own network

[root@iZwz9jbs6miken6je76quyZ /]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "e218abd6483333d6548d50a596a546c7f7efe3402b7b014a993ee8766a1bd3a5",

"Created": "2020-11-18T20:46:28.463080287+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

[root@iZwz9jbs6miken6je76quyZ /]#

Use our own network when creating containers:

[root@iZwz9jbs6miken6je76quyZ /]# docker run -d -P --name tomcat-mynet-01 --net mynet tomcat

fd0e365f3e4510ac79a1b6c611e8ec0cc7bed639351c2f1cad94cc0b7ce5439c

[root@iZwz9jbs6miken6je76quyZ /]# docker run -d -P --name tomcat-mynet-02 --net mynet tomcat

3a050cfa8b9ed248d347d2606e8d75736ed9f6783b35b90cbf50fec6c321d2c8

[root@iZwz9jbs6miken6je76quyZ /]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "e218abd6483333d6548d50a596a546c7f7efe3402b7b014a993ee8766a1bd3a5",

"Created": "2020-11-18T20:46:28.463080287+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"3a050cfa8b9ed248d347d2606e8d75736ed9f6783b35b90cbf50fec6c321d2c8": {

"Name": "tomcat-mynet-02",

"EndpointID": "c74ed108f4e9f335dbf0be8cddf850ecf4825ed645d63376804d56c6751c7253",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"fd0e365f3e4510ac79a1b6c611e8ec0cc7bed639351c2f1cad94cc0b7ce5439c": {

"Name": "tomcat-mynet-01",

"EndpointID": "2ee906492dc800b28b676a2db6e666447ef733f9678d4d754cf5bb7fee02afeb",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@iZwz9jbs6miken6je76quyZ /]#

Test again, this time on the network we created:

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat-mynet-01 ping 192.168.0.3

PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.

64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.059 ms

64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.041 ms

64 bytes from 192.168.0.3: icmp_seq=3 ttl=64 time=0.046 ms

^C

--- 192.168.0.3 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.041/0.048/0.059/0.011 ms

Pinging by container name works even without --link

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat-mynet-01 ping tomcat-mynet-02

PING tomcat-mynet-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.030 ms

64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.045 ms

64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.046 ms

64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=4 ttl=64 time=0.049 ms

^C

--- tomcat-mynet-02 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.030/0.042/0.049/0.009 ms

[root@iZwz9jbs6miken6je76quyZ /]#

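On a user-defined network Docker maintains the name-to-address mappings for us, so user-defined networks are recommended for everyday use.

Benefits:

redis: different clusters use different networks, which keeps each cluster safe and healthy.

mysql: different clusters use different networks, which keeps each cluster safe and healthy.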

[root@iZwz9jbs6miken6je76quyZ /]# docker network --help

Usage: docker network COMMAND

Manage networks

Commands:

connect Connect a container to a network *****************************

create Create a network

disconnect Disconnect a container from a network

inspect Display detailed information on one or more networks

ls List networks

prune Remove all unused networks

rm Remove one or more networks

Run 'docker network COMMAND --help' for more information on a command.

[root@iZwz9jbs6miken6je76quyZ /]# docker network connect --help

Usage: docker network connect [OPTIONS] NETWORK CONTAINER

Connect a container to a network

Options:

--alias strings Add network-scoped alias for the container

--driver-opt strings driver options for the network

--ip string IPv4 address (e.g., 172.30.100.104)

--ip6 string IPv6 address (e.g., 2001:db8::33)

--link list Add link to another container

--link-local-ip strings Add a link-local address for the container

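Test connecting tomcat01 to mynet (the network we created). Once connected, tomcat01 can communicate with any endpoint on mynet.

Connecting simply adds tomcat01 to the mynet network

One container, two IP addresses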

[root@iZwz9jbs6miken6je76quyZ /]# docker network connect mynet tomcat01

[root@iZwz9jbs6miken6je76quyZ /]# docker network inspect mynet

[

{

"Name": "mynet",

"Id": "e218abd6483333d6548d50a596a546c7f7efe3402b7b014a993ee8766a1bd3a5",

"Created": "2020-11-18T20:46:28.463080287+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "192.168.0.0/16",

"Gateway": "192.168.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"1b3d2cb56d17dda90a0d50449d53b45cbb211ec24df8af8cd0e19f70754ad3bc": {

"Name": "tomcat01",

"EndpointID": "8d3ebeb4d635b30c1c82bb78701bf518f90893de59b69284f27e3495992e6a85",

"MacAddress": "02:42:c0:a8:00:04",

"IPv4Address": "192.168.0.4/16",

"IPv6Address": ""

},

"3a050cfa8b9ed248d347d2606e8d75736ed9f6783b35b90cbf50fec6c321d2c8": {

"Name": "tomcat-mynet-02",

"EndpointID": "c74ed108f4e9f335dbf0be8cddf850ecf4825ed645d63376804d56c6751c7253",

"MacAddress": "02:42:c0:a8:00:03",

"IPv4Address": "192.168.0.3/16",

"IPv6Address": ""

},

"fd0e365f3e4510ac79a1b6c611e8ec0cc7bed639351c2f1cad94cc0b7ce5439c": {

"Name": "tomcat-mynet-01",

"EndpointID": "2ee906492dc800b28b676a2db6e666447ef733f9678d4d754cf5bb7fee02afeb",

"MacAddress": "02:42:c0:a8:00:02",

"IPv4Address": "192.168.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

[root@iZwz9jbs6miken6je76quyZ /]#

Now tomcat01 can communicate with every endpoint on mynet.

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat01 ping tomcat-mynet-02

PING tomcat-mynet-02 (192.168.0.3) 56(84) bytes of data.

64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.048 ms

64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.044 ms

64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=3 ttl=64 time=0.049 ms

64 bytes from tomcat-mynet-02.mynet (192.168.0.3): icmp_seq=4 ttl=64 time=0.047 ms

^C

--- tomcat-mynet-02 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.044/0.047/0.049/0.002 ms

[root@iZwz9jbs6miken6je76quyZ /]#

But a container that has not been connected, such as tomcat02, still cannot be reached from mynet:

[root@iZwz9jbs6miken6je76quyZ /]# docker exec -it tomcat-mynet-01 ping tomcat02

ping: tomcat02: Name or service not known

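Conclusion: to reach containers across networks, connect them with docker network connect.

Redis cluster:

List the networks (the redis network was created beforehand with subnet 172.38.0.0/16):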

[root@iZwz9jbs6miken6je76quyZ /]# docker network ls

NETWORK ID NAME DRIVER SCOPE

32285b9014ab bridge bridge local

1c3468b46ee6 host host local

e218abd64833 mynet bridge local

e2d8eb6e4b70 none null local

d513115a921b redis bridge local

View the network's details

[root@iZwz9jbs6miken6je76quyZ /]# docker network inspect redis

[

{

"Name": "redis",

"Id": "d513115a921b1b374ff8ba1268312b8cff12b30ad43ad3e17d780fdb9e3b8101",

"Created": "2020-11-18T21:32:46.130135013+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.38.0.0/16"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

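Create six Redis configuration files with a script

# Loop six times

for port in $(seq 1 6); \

do \

# Create the directory and file, then write the configuration into the file

mkdir -p /mydata/redis/node-${port}/conf # ${port} comes from the for loop on the first line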

touch /mydata/redis/node-${port}/conf/redis.conf

cat << EOF >/mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done

The version above is annotated; the version below is the same script without comments and can be copied and run directly.

for port in $(seq 1 6); \

do \

mkdir -p /mydata/redis/node-${port}/conf

touch /mydata/redis/node-${port}/conf/redis.conf

cat << EOF >/mydata/redis/node-${port}/conf/redis.conf

port 6379

bind 0.0.0.0

cluster-enabled yes

cluster-config-file nodes.conf

cluster-node-timeout 5000

cluster-announce-ip 172.38.0.1${port}

cluster-announce-port 6379

cluster-announce-bus-port 16379

appendonly yes

EOF

done
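A misspelled directive in redis.conf stops the node from starting, so it can be worth checking the generated files before starting the containers. The sketch below repeats the generation into a throwaway directory and then verifies two lines per node. write_redis_confs, check_redis_confs and demo_dir are hypothetical names, and the real run would use /mydata/redis as the base directory.

```shell
# Generate the six node configs under a base directory (default: /mydata/redis).
write_redis_confs() {
  base=${1:-/mydata/redis}
  for port in $(seq 1 6); do
    mkdir -p "$base/node-${port}/conf"
    cat > "$base/node-${port}/conf/redis.conf" <<EOF
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.38.0.1${port}
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
  done
}

# Report every node whose config is missing or lacks a required line.
check_redis_confs() {
  base=${1:-/mydata/redis}
  rc=0
  for port in $(seq 1 6); do
    conf="$base/node-${port}/conf/redis.conf"
    if ! grep -qxF "cluster-enabled yes" "$conf" 2>/dev/null ||
       ! grep -qxF "cluster-announce-ip 172.38.0.1${port}" "$conf" 2>/dev/null; then
      echo "node-${port}: bad or missing config"
      rc=1
    fi
  done
  return "$rc"
}

# Demo in a temporary directory so nothing under /mydata is touched.
demo_dir=$(mktemp -d)
write_redis_confs "$demo_dir"
check_redis_confs "$demo_dir" && echo "all six configs look valid"
```

Running check_redis_confs with no argument checks the files under /mydata/redis.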

docker run commands (six in total):

docker run -p 6371:6379 -p 16371:16379 --name redis-1 \

-v /mydata/redis/node-1/data:/data \

-v /mydata/redis/node-1/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.11 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6372:6379 -p 16372:16379 --name redis-2 \

-v /mydata/redis/node-2/data:/data \

-v /mydata/redis/node-2/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.12 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6373:6379 -p 16373:16379 --name redis-3 \

-v /mydata/redis/node-3/data:/data \

-v /mydata/redis/node-3/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.13 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6374:6379 -p 16374:16379 --name redis-4 \

-v /mydata/redis/node-4/data:/data \

-v /mydata/redis/node-4/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.14 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6375:6379 -p 16375:16379 --name redis-5 \

-v /mydata/redis/node-5/data:/data \

-v /mydata/redis/node-5/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.15 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf

docker run -p 6376:6379 -p 16376:16379 --name redis-6 \

-v /mydata/redis/node-6/data:/data \

-v /mydata/redis/node-6/conf/redis.conf:/etc/redis/redis.conf \

-d --net redis --ip 172.38.0.16 redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
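The six commands differ only in the node number, so they can also be produced by a loop. The sketch below only prints the commands, so it is safe to run anywhere. redis_run_cmd is a hypothetical helper name.

```shell
# Print (do not execute) the docker run command for node N.
redis_run_cmd() {
  n=$1
  echo "docker run -p 637${n}:6379 -p 1637${n}:16379 --name redis-${n}" \
       "-v /mydata/redis/node-${n}/data:/data" \
       "-v /mydata/redis/node-${n}/conf/redis.conf:/etc/redis/redis.conf" \
       "-d --net redis --ip 172.38.0.1${n} redis:5.0.9-alpine3.11" \
       "redis-server /etc/redis/redis.conf"
}

for n in $(seq 1 6); do
  redis_run_cmd "$n"
done
```

Once the printed commands look right, piping the loop's output into sh would execute them.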

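Entering a Redis container differs from other images (this Alpine-based image has no bash, so use /bin/sh):

docker exec -it redis-1 /bin/sh

Create the cluster

Commands for creating the cluster: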

[root@iZwz9jbs6miken6je76quyZ redis]# docker exec -it redis-1 /bin/sh

/data # ls

appendonly.aof nodes.conf

/data #

/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 172.38.0.15:6379 to 172.38.0.11:6379

Adding replica 172.38.0.16:6379 to 172.38.0.12:6379

Adding replica 172.38.0.14:6379 to 172.38.0.13:6379

M: f0ceb09beabd4f1ed34b1b88faf0f0a031fe4cbb 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

M: 3e59bcc872a01a8ca93ef305ed8aefcf7744bd42 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

M: 536d2f9505b8e80e5352a8fed1e3ca17028e8695 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

S: 5d86cbef61a3fee10903f93ff0474e2339fc7122 172.38.0.14:6379

replicates 536d2f9505b8e80e5352a8fed1e3ca17028e8695

S: 756db0b326e9a39f94baf7c68423fc5d89813a70 172.38.0.15:6379

replicates f0ceb09beabd4f1ed34b1b88faf0f0a031fe4cbb

S: 35e6e72b0111d18ac93d583095e8117f4eff9b52 172.38.0.16:6379

replicates 3e59bcc872a01a8ca93ef305ed8aefcf7744bd42

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

...

>>> Performing Cluster Check (using node 172.38.0.11:6379)

M: f0ceb09beabd4f1ed34b1b88faf0f0a031fe4cbb 172.38.0.11:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 536d2f9505b8e80e5352a8fed1e3ca17028e8695 172.38.0.13:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: 5d86cbef61a3fee10903f93ff0474e2339fc7122 172.38.0.14:6379

slots: (0 slots) slave

replicates 536d2f9505b8e80e5352a8fed1e3ca17028e8695

S: 35e6e72b0111d18ac93d583095e8117f4eff9b52 172.38.0.16:6379

slots: (0 slots) slave

replicates 3e59bcc872a01a8ca93ef305ed8aefcf7744bd42

M: 3e59bcc872a01a8ca93ef305ed8aefcf7744bd42 172.38.0.12:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: 756db0b326e9a39f94baf7c68423fc5d89813a70 172.38.0.15:6379

slots: (0 slots) slave

replicates f0ceb09beabd4f1ed34b1b88faf0f0a031fe4cbb

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

View cluster information and try the cluster out:

/data # redis-cli -c

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:302

cluster_stats_messages_pong_sent:323

cluster_stats_messages_sent:625

cluster_stats_messages_ping_received:318

cluster_stats_messages_pong_received:302

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:625

127.0.0.1:6379> cluster nodes

536d2f9505b8e80e5352a8fed1e3ca17028e8695 172.38.0.13:6379@16379 master - 0 1605709774000 3 connected 10923-16383

5d86cbef61a3fee10903f93ff0474e2339fc7122 172.38.0.14:6379@16379 slave 536d2f9505b8e80e5352a8fed1e3ca17028e8695 0 1605709775554 4 connected

35e6e72b0111d18ac93d583095e8117f4eff9b52 172.38.0.16:6379@16379 slave 3e59bcc872a01a8ca93ef305ed8aefcf7744bd42 0 1605709775053 6 connected

3e59bcc872a01a8ca93ef305ed8aefcf7744bd42 172.38.0.12:6379@16379 master - 0 1605709775000 2 connected 5461-10922

756db0b326e9a39f94baf7c68423fc5d89813a70 172.38.0.15:6379@16379 slave f0ceb09beabd4f1ed34b1b88faf0f0a031fe4cbb 0 1605709774552 5 connected

f0ceb09beabd4f1ed34b1b88faf0f0a031fe4cbb 172.38.0.11:6379@16379 myself,master - 0 1605709773000 1 connected 0-5460

127.0.0.1:6379> set a b

-> Redirected to slot [15495] located at 172.38.0.13:6379

OK
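The redirect to slot 15495 is not arbitrary: Redis Cluster maps a key to CRC16(key) mod 16384 and sends the request to the master that owns that slot. A minimal sketch of that calculation in plain shell, assuming keys without {hash tags}; the slot ranges in slot_owner are copied from the cluster creation output above. key_slot and slot_owner are hypothetical helper names.

```shell
# Hash slot of a key: CRC16 (XMODEM variant, polynomial 0x1021) modulo 16384.
# Assumption: the key contains no {hash tag}.
key_slot() (
  crc=0
  # od prints the key's bytes as unsigned decimal numbers.
  for byte in $(printf '%s' "$1" | od -An -v -tu1); do
    crc=$(( crc ^ (byte << 8) ))
    j=0
    while [ "$j" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      j=$(( j + 1 ))
    done
  done
  echo $(( crc % 16384 ))
)

# Master that owns a slot, using the ranges assigned when this cluster was created.
slot_owner() {
  if   [ "$1" -le 5460 ];  then echo 172.38.0.11
  elif [ "$1" -le 10922 ]; then echo 172.38.0.12
  else                          echo 172.38.0.13
  fi
}

slot=$(key_slot a)
echo "key a -> slot $slot -> $(slot_owner "$slot")"
```

This prints "key a -> slot 15495 -> 172.38.0.13", the same slot and node that redis-cli reported for set a b.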

After stopping redis-3

172.38.0.13:6379> get a

Could not connect to Redis at 172.38.0.13:6379: Host is unreachable

(33.92s)

not connected> get a

Could not connect to Redis at 172.38.0.13:6379: Host is unreachable

(0.85s)

not connected>

not connected> redis-cli -c

Could not connect to Redis at 172.38.0.13:6379: Host is unreachable

(1.26s)

not connected>

/data # redis-cli -c

127.0.0.1:6379> get a

-> Redirected to slot [15495] located at 172.38.0.14:6379

"b"

172.38.0.14:6379> cluster nodes

5d86cbef61a3fee10903f93ff0474e2339fc7122 172.38.0.14:6379@16379 myself,master - 0 1605710203000 7 connected 10923-16383

f0ceb09beabd4f1ed34b1b88faf0f0a031fe4cbb 172.38.0.11:6379@16379 master - 0 1605710203765 1 connected 0-5460

536d2f9505b8e80e5352a8fed1e3ca17028e8695 172.38.0.13:6379@16379 master,fail - 1605709961148 1605709960545 3 connected

35e6e72b0111d18ac93d583095e8117f4eff9b52 172.38.0.16:6379@16379 slave 3e59bcc872a01a8ca93ef305ed8aefcf7744bd42 0 1605710202263 6 connected

756db0b326e9a39f94baf7c68423fc5d89813a70 172.38.0.15:6379@16379 slave f0ceb09beabd4f1ed34b1b88faf0f0a031fe4cbb 0 1605710202000 5 connected

3e59bcc872a01a8ca93ef305ed8aefcf7744bd42 172.38.0.12:6379@16379 master - 0 1605710203264 2 connected 5461-10922

172.38.0.14:6379>

With Docker, setting up a cluster becomes very simple.