K8S Study Notes

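Contents

- 1 k8s architecture
- 2 What is k8s and what does it do?
  - 2.1 Core features of k8s
  - 2.2 History of k8s
  - 2.3 Ways to install k8s
  - 2.4 Use cases for k8s
- 3 Lab environment
- 4 Installing the k8s cluster
  - 4.1 Set IP addresses, hostnames, and hosts resolution
  - 4.2 Install etcd on the master node
  - 4.3 Install kubernetes on the master node
  - 4.4 Install kubernetes on the worker nodes
  - 4.5 Configure the flannel network on all nodes
  - 4.6 Configure the master as an image registry
- 5 Common k8s resources
  - 5.1 Pod
  - 5.2 ReplicationController
  - 5.3.1 Rolling update of an rc
  - 5.3.2 Rolling back an rc
  - 5.4 Service
  - 5.5 Deployment
  - 5.6 tomcat + mysql exercise
  - 5.7 DaemonSet
  - 5.7 PetSet
- 6 k8s add-ons
  - 6.1 DNS service
  - 6.2 Namespaces
  - 6.3 Health checks
    - 6.3.1 Probe types
    - 6.3.2 Probe check methods
    - 6.3.3 Appendix: notes on a container's initial command
    - 6.3.4 Using the liveness probe with exec (rarely used)
    - 6.3.5 Using the liveness probe with httpGet (common)
    - 6.3.6 Using the liveness probe with tcpSocket
    - 4.3.7 Using the readiness probe with httpGet
  - 6.4 Dashboard
  - 6.5 Accessing a service through the apiserver reverse proxy
- 7 Autoscaling in k8s
  - 7.1 Install heapster monitoring
  - 7.2 Autoscaling (the hpa resource)
- 8 Persistent storage
  - 8.1 emptyDir
  - 8.2 HostPath
  - 8.3 nfs
  - 8.4 Distributed storage with glusterfs
    - 8.4.1 Create the glusterfs distributed storage
    - 8.4.2 Connect k8s to glusterfs storage
  - 8.5 Persistent storage with pv and pvc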

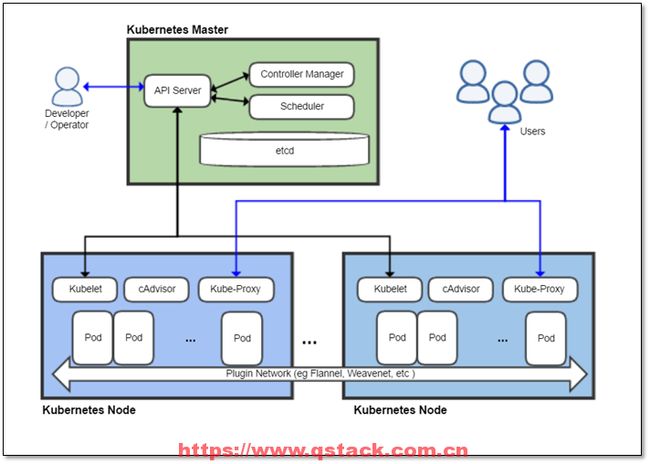

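1 k8s architecture

Core components:

Master (control) node:

- API Server: the central entry point of the cluster; every other component and client talks to it
- Controller Manager: tracks the state of containers and recreates the ones that have died
- Scheduler: picks a suitable node on which to start each container
- etcd: the k8s database

Node (worker) node:

- kubelet: manages the Docker host
- cAdvisor: collects container resource usage on the node
- kube-proxy: lets users reach the services running in containers

Besides the core components, some add-ons are recommended:

| Component | Description |
|---|---|
| kube-dns | Provides DNS for the whole cluster |
| Ingress Controller | Provides an external entry point for services (layer-7 load balancing) |
| Heapster | Provides resource monitoring (since superseded by Prometheus) |
| Dashboard | Provides a GUI |
| Federation | Provides clusters that span availability zones |
| Fluentd-elasticsearch | Provides cluster log collection, storage, and querying |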

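2 What is k8s and what does it do?

k8s is a management tool for clusters of Docker hosts.

k8s is a container orchestration tool.

2.1 Core features of k8s

- Self-healing: restarts failed containers; when a node becomes unavailable, replaces and reschedules its containers on other nodes; kills containers that do not respond to user-defined health checks; and does not advertise a container to clients until it is ready to serve.

- Autoscaling: monitors the CPU load of the containers. For example, if the average rises above 80% the number of containers is increased, and if it falls below 10% the number is reduced.

- Service discovery and load balancing: you do not need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives containers their own IP addresses and a single DNS name for a set of containers, and can load-balance across them.

- Rolling updates and one-step rollback: Kubernetes rolls out changes to an application or its configuration progressively while monitoring application health, so that it never terminates all instances at the same time. If something goes wrong, Kubernetes rolls the change back for you. You can also draw on a growing ecosystem of deployment solutions.

- Secret and configuration management: for example, the database account and password used by a web container.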
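The autoscaling rule in the feature list can be sketched as a small shell function. This is only an illustration: `scale_decision` is a hypothetical helper, not a Kubernetes command, and the 80% and 10% thresholds are simply the figures quoted in these notes rather than values Kubernetes mandates.

```shell
#!/bin/sh
# Hypothetical helper: map an average CPU percentage (integer) to an action.
# Thresholds 80 and 10 are the example values from the notes above.
scale_decision() {
  avg_cpu=$1
  if [ "$avg_cpu" -gt 80 ]; then
    echo "scale-up"
  elif [ "$avg_cpu" -lt 10 ]; then
    echo "scale-down"
  else
    echo "hold"
  fi
}

# Try three sample load values.
for load in 85 50 5; do
  echo "avg cpu ${load}% -> $(scale_decision "$load")"
done
```

In a real cluster this decision is made by the hpa resource covered in section 7.2; the function only shows the shape of the rule.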

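2.2 History of k8s

2014: the project is started as a Docker container orchestration tool.

July 2015: Kubernetes 1.0 is released and the project joins the CNCF for incubation.

2016: Kubernetes (version 1.2) overtakes its two rivals, Docker Swarm and Mesos Marathon.

2017: versions 1.5 to 1.9.

2018: k8s graduates from the CNCF.

2019: versions 1.13, 1.14, 1.15, 1.16.

CNCF: Cloud Native Computing Foundation.

Kubernetes (k8s): Greek for "helmsman" or "pilot", here steering the container orchestration field.

Google drew on 15 years of experience running containers on its Borg container management platform and re-implemented those ideas in Go as Kubernetes.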

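2.3 Ways to install k8s

- yum: installs version 1.5; the easiest to get working and the best fit for learning

- Compiling from source: the hardest; can install the latest version

- Binary install: many steps; can install the latest version; usually automated with shell, ansible, or saltstack

- kubeadm: the easiest install; requires network access; can install the latest version

- minikube: good for developers trying out k8s; requires network access

2.4 Use cases for k8s

k8s is best suited to running microservice projects.

Advantages of microservices: higher concurrency, better availability, shorter release cycles.

Drawbacks of microservices: they are harder to manage and need automated code deployment, for example with ansible or saltstack.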

3 Lab environment

k8s-master 10.0.0.11

k8s-node-1 10.0.0.12

k8s-node-2 10.0.0.13

4 Installing the k8s cluster

4.1 Set IP addresses, hostnames, and hosts resolution

vim /etc/hosts (on all three hosts)

10.0.0.11 k8s-master

10.0.0.12 k8s-node-1

10.0.0.13 k8s-node-2

Set the hostnames

# 10.0.0.11

hostnamectl set-hostname k8s-master

# 10.0.0.12

hostnamectl set-hostname k8s-node-1

# 10.0.0.13

hostnamectl set-hostname k8s-node-2

4.2 Install etcd on the master node

yum install etcd -y

vim /etc/etcd/etcd.conf

Line 6: ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379"

# Line 21: ETCD_ADVERTISE_CLIENT_URLS="http://10.0.0.11:2379,http://10.0.0.12:2379" # form used for a multi-node etcd cluster

# Line 9: ETCD_NAME="default" # in a multi-node setup each member must be given a different name

systemctl start etcd.service

systemctl enable etcd.service

[root@k8s-master yum.repos.d]# ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 127.0.0.1:2380 *:* users:(("etcd",pid=6787,fd=5))

LISTEN 0 128 *:22 *:* users:(("sshd",pid=6415,fd=3))

LISTEN 0 128 :::2379 :::* users:(("etcd",pid=6787,fd=6))

LISTEN 0 128 :::22 :::*

# Test that etcd is reachable

etcdctl set testdir/testkey0 "hello world"

hello world

etcdctl get testdir/testkey0

hello world

# Check cluster health

[root@k8s-master yum.repos.d]# etcdctl -C http://10.0.0.11:2379 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://10.0.0.11:2379

cluster is healthy
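The edits to /etc/etcd/etcd.conf in this section are given by line number, which can shift between package versions. As an alternative, a key-based edit might look like the sketch below. `set_conf` is a hypothetical helper written for these notes, it assumes GNU sed (as shipped on CentOS), and the demo runs against a temporary file rather than the real configuration.

```shell
#!/bin/sh
# Hypothetical helper: set KEY="VALUE" in a shell-style config file.
# An existing line (commented out or not) is replaced; otherwise one is appended.
set_conf() {
  file=$1
  key=$2
  value=$3
  if grep -Eq "^#?${key}=" "$file"; then
    # '|' is used as the sed delimiter because the values contain '/'.
    sed -i -E "s|^#?${key}=.*|${key}=\"${value}\"|" "$file"
  else
    printf '%s="%s"\n' "$key" "$value" >> "$file"
  fi
}

# Demo on a scratch copy that imitates two lines of etcd.conf.
conf=$(mktemp)
printf '%s\n' \
  '#ETCD_LISTEN_CLIENT_URLS="http://localhost:2379"' \
  'ETCD_ADVERTISE_CLIENT_URLS="http://localhost:2379"' > "$conf"

set_conf "$conf" ETCD_LISTEN_CLIENT_URLS "http://0.0.0.0:2379"
set_conf "$conf" ETCD_ADVERTISE_CLIENT_URLS "http://10.0.0.11:2379"
cat "$conf"
```

Pointing `conf` at /etc/etcd/etcd.conf would apply the same two settings used above; restart etcd afterwards as shown earlier.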

4.3 Install kubernetes on the master node

yum install kubernetes-master.x86_64 -y

vim /etc/kubernetes/apiserver

Line 8: KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

Line 11: KUBE_API_PORT="--port=8080"

Line 14: KUBELET_PORT="--kubelet-port=10250"

Line 17: KUBE_ETCD_SERVERS="--etcd-servers=http://10.0.0.11:2379"

Line 23: KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota" # ServiceAccount has been removed from the list

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.11:8080"

systemctl enable kube-apiserver.service

systemctl start kube-apiserver.service

systemctl enable kube-controller-manager.service

systemctl start kube-controller-manager.service

systemctl enable kube-scheduler.service

systemctl start kube-scheduler.service

Check from the master node

[root@k8s-master ~]# kubectl get componentstatus

NAME STATUS MESSAGE ERROR

etcd-0 Healthy {"health":"true"}

scheduler Healthy ok

controller-manager Healthy ok

4.4 Install kubernetes on the worker nodes

k8s-node-1

yum install kubernetes-node.x86_64 -y

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.11:8080"

vim /etc/kubernetes/kubelet

Line 5: KUBELET_ADDRESS="--address=0.0.0.0"

Line 8: KUBELET_PORT="--port=10250"

Line 11: KUBELET_HOSTNAME="--hostname-override=k8s-node-1" # the IP 10.0.0.12 also works

Line 14: KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

systemctl enable kubelet.service

systemctl start kubelet.service

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

k8s-node-2

yum install kubernetes-node.x86_64 -y

vim /etc/kubernetes/config

Line 22: KUBE_MASTER="--master=http://10.0.0.11:8080"

vim /etc/kubernetes/kubelet

Line 5: KUBELET_ADDRESS="--address=0.0.0.0"

Line 8: KUBELET_PORT="--port=10250"

Line 11: KUBELET_HOSTNAME="--hostname-override=k8s-node-2" # the IP 10.0.0.13 also works

Line 14: KUBELET_API_SERVER="--api-servers=http://10.0.0.11:8080"

systemctl enable kubelet.service

systemctl start kubelet.service

systemctl enable kube-proxy.service

systemctl start kube-proxy.service

Check from the master node

[root@k8s-master ~]# kubectl get nodes

NAME STATUS AGE

k8s-node-1 Ready 26s

k8s-node-2 Ready 13s

4.5 Configure the flannel network on all nodes

# All nodes

yum install flannel -y

sed -i 's#http://127.0.0.1:2379#http://10.0.0.11:2379#g' /etc/sysconfig/flanneld

# Master node

etcdctl mk /atomic.io/network/config '{ "Network": "172.18.0.0/16" }' # the range from which pod IPs are allocated

[root@k8s-master yum.repos.d]# etcdctl get /atomic.io/network/config # confirm the value was stored

{ "Network": "172.18.0.0/16" }

systemctl enable flanneld.service

systemctl restart flanneld.service

# Worker nodes

systemctl enable flanneld.service

systemctl start flanneld.service

# Worker nodes

vim /usr/lib/systemd/system/docker.service

# In the [Service] section, add the following line before ExecStart

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

# Worker nodes

systemctl daemon-reload

systemctl restart docker

[root@k8s-node-1 yum.repos.d]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.18.3.1 netmask 255.255.255.0 broadcast 0.0.0.0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 172.18.3.0 netmask 255.255.0.0 destination 172.18.3.0

[root@k8s-node-2 yum.repos.d]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.18.96.1 netmask 255.255.255.0 broadcast 0.0.0.0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 172.18.96.0 netmask 255.255.0.0 destination 172.18.96.0
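The ifconfig output above shows each node's docker0 taking its own /24 out of the 172.18.0.0/16 range stored in etcd. A quick way to confirm that an address belongs to that range is sketched below. `ip_to_int` and `in_cidr` are hypothetical helpers written for these notes, not part of flannel or Docker.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed (exit 0) if address $1 lies inside the CIDR block $2.
in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# The first two addresses are the docker0 IPs shown above; the third is a host IP.
for ip in 172.18.3.1 172.18.96.1 10.0.0.12; do
  if in_cidr "$ip" 172.18.0.0/16; then
    echo "$ip is inside the flannel network"
  else
    echo "$ip is outside the flannel network"
  fi
done
```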

Verify from the worker nodes

# First upload docker_busybox.tar.gz to root's home directory on both nodes

docker load -i docker_busybox.tar.gz

docker run -it docker.io/busybox:latest

Ping between containers on the two nodes

# node1

[root@k8s-node-1 docker_image]# docker run -it docker.io/busybox:latest

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:12:03:02

inet addr:172.18.3.2 Bcast:0.0.0.0 Mask:255.255.255.0

/ # ping 172.18.96.2

PING 172.18.96.2 (172.18.96.2): 56 data bytes

64 bytes from 172.18.96.2: seq=0 ttl=60 time=1.740 ms

64 bytes from 172.18.96.2: seq=1 ttl=60 time=1.530 ms

# node2

[root@k8s-node-2 docker_image]# docker run -it docker.io/busybox:latest

/ # ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:12:60:02

inet addr:172.18.96.2 Bcast:0.0.0.0 Mask:255.255.255.0

/ # ping 172.18.3.2

PING 172.18.3.2 (172.18.3.2): 56 data bytes

64 bytes from 172.18.3.2: seq=0 ttl=60 time=1.408 ms

64 bytes from 172.18.3.2: seq=1 ttl=60 time=2.242 ms

4.6 Configure the master as an image registry

Master node

yum install docker -y

systemctl restart docker

systemctl enable docker

All nodes

vi /etc/docker/daemon.json

{

"registry-mirrors": ["https://registry.docker-cn.com"],

"insecure-registries": ["10.0.0.11:5000"]

}

systemctl restart docker

Master node

# Load the registry image

docker load -i registry.tar.gz # download this archive beforehand

# Start the registry container

docker run -d -p 5000:5000 --restart=always --name registry -v /opt/myregistry:/var/lib/registry registry

# Query the registry catalog

[root@k8s-master docker_image]# curl 10.0.0.11:5000/v2/_catalog

{"repositories":[]}

Test from a worker node

[root@k8s-node-2 ~]# docker image ls -a

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/busybox latest d8233ab899d4 10 months ago 1.2 MB

[root@k8s-node-2 ~]# docker tag docker.io/busybox:latest 10.0.0.11:5000/busybox:latest

[root@k8s-node-2 ~]# docker push 10.0.0.11:5000/busybox:latest

The push refers to a repository [10.0.0.11:5000/busybox]

adab5d09ba79: Pushed

latest: digest: sha256:4415a904b1aca178c2450fd54928ab362825e863c0ad5452fd020e92f7a6a47e size: 527

# The output shows the image was pushed to the private registry

# List the images in the registry with curl

[root@k8s-node-1 ~]# curl 10.0.0.11:5000/v2/_catalog

{"repositories":["busybox"]}

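5 Common k8s resources

5.1 Pod

- A pod is the smallest resource unit.

- Any k8s resource can be defined in a YAML manifest file.

- A pod consists of at least two containers: the pod infrastructure container plus the application containers (typically 1 infrastructure container and 1 to 4 application containers).

Main parts of a k8s YAML manifest

apiVersion: v1 (API version)

kind: Pod (resource type)

metadata: (attributes such as name and labels)

spec: (detailed specification)

Example 1:

Single container

Master node (11)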

[root@k8s-master ~]# cd /root/k8s_yaml/pod/

[root@k8s-master pod]# vim k8s_pod.yaml

Edit k8s_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80

node2 (13)

# First upload docker_nginx1.13.tar.gz to /root/docker_image

[root@k8s-node-2 docker_image]# docker load -i docker_nginx1.13.tar.gz

d626a8ad97a1: Loading layer [==================================================>] 58.46 MB/58.46 MB

82b81d779f83: Loading layer [==================================================>] 54.21 MB/54.21 MB

7ab428981537: Loading layer [==================================================>] 3.584 kB/3.584 kB

Loaded image: docker.io/nginx:1.13

[root@k8s-node-2 docker_image]# docker tag docker.io/nginx:1.13 10.0.0.11:5000/nginx:1.13

[root@k8s-node-2 docker_image]# docker push 10.0.0.11:5000/nginx:1.13

The push refers to a repository [10.0.0.11:5000/nginx]

7ab428981537: Pushed

82b81d779f83: Pushed

d626a8ad97a1: Pushed

1.13: digest: sha256:e4f0474a75c510f40b37b6b7dc2516241ffa8bde5a442bde3d372c9519c84d90 size: 948

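# Then upload pod-infrastructure-latest.tar.gz to /root/docker_image

# This is the pod infrastructure container; it implements the advanced k8s features and is bound to every application container (such as nginx)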

[root@k8s-node-2 docker_image]# docker load -i pod-infrastructure-latest.tar.gz

Loaded image: docker.io/tianyebj/pod-infrastructure:latest

[root@k8s-node-2 docker_image]# docker tag docker.io/tianyebj/pod-infrastructure:latest 10.0.0.11:5000/rhel7/pod-infrastructure:latest

[root@k8s-node-2 docker_image]# docker push 10.0.0.11:5000/rhel7/pod-infrastructure:latest

[root@k8s-node-2 docker_image]# curl 10.0.0.11:5000/v2/_catalog

{"repositories":["busybox","nginx","rhel7/pod-infrastructure"]}

[root@k8s-node-2 docker_image]# vim /etc/kubernetes/kubelet

Line 17: KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.11:5000/rhel7/pod-infrastructure:latest"

[root@k8s-node-2 docker_image]# systemctl restart kubelet.service

node1 (12)

[root@k8s-node-1 ~]# vim /etc/kubernetes/kubelet

Line 17: KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=10.0.0.11:5000/rhel7/pod-infrastructure:latest"

[root@k8s-node-1 ~]# systemctl restart kubelet.service

Master node (11)

[root@k8s-master pod]# ls /opt/myregistry/docker/registry/v2/repositories/

busybox nginx rhel7

[root@k8s-master pod]# ls /opt/myregistry/docker/registry/v2/repositories/nginx/_manifests/tags/

1.13

[root@k8s-master pod]# ls /opt/myregistry/docker/registry/v2/repositories/rhel7/pod-infrastructure/_manifests/tags/

latest

# Create the pod

[root@k8s-master pod]# kubectl create -f k8s_pod.yaml

pod "nginx" created

# Check the pod status

[root@k8s-master pod]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 25s

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 1 13h 172.18.96.2 k8s-node-2

# Try to reach the nginx service in the container

[root@k8s-master ~]# curl -I 172.18.96.2

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Mon, 16 Dec 2019 12:01:24 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

# Show the pod description; useful for troubleshooting

[root@k8s-master pod]# kubectl describe pod nginx

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

12s 12s 1 {default-scheduler } Normal Scheduled Successfully assigned nginx to k8s-node-2

12s 12s 2 {kubelet k8s-node-2} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

12s 12s 1 {kubelet k8s-node-2} spec.containers{nginx} Normal Pulled Container image "10.0.0.11:5000/nginx:1.13" already present on machine

12s 12s 1 {kubelet k8s-node-2} spec.containers{nginx} Normal Created Created container with docker id a794c01597bf; Security:[seccomp=unconfined]

12s 12s 1 {kubelet k8s-node-2} spec.containers{nginx} Normal Started Started container with docker id a794c01597bf

node2 (13)

# According to the description on the master, the nginx container was created on k8s-node-2 (13)

[root@k8s-node-2 docker_image]# docker container ls -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a794c01597bf 10.0.0.11:5000/nginx:1.13 "nginx -g 'daemon ..." 5 minutes ago Up 5 minutes k8s_nginx.91390390_nginx_default_2c6f4d7d-1f89-11ea-8106-000c293c3b9a_9e48bdbf

d3d34f038a78 10.0.0.11:5000/rhel7/pod-infrastructure:latest "/pod" 5 minutes ago Up 5 minutes k8s_POD.e5ea03c1_nginx_default_2c6f4d7d-1f89-11ea-8106-000c293c3b9a_5e374e3e

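# The line above is the pod infrastructure container; it implements the advanced k8s features and is bound to every application container (such as nginx)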

Example 2:

Two containers

Master node:

[root@k8s-master ~]# cd /root/k8s_yaml/pod/

[root@k8s-master pod]# vim k8s_pod2.yaml

Create k8s_pod2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test
  labels:
    app: web
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80
    - name: busybox
      image: 10.0.0.11:5000/busybox:latest
      command: ["sleep","1000"]

[root@k8s-master pod]# kubectl create -f k8s_pod2.yaml

pod "test" created

[root@k8s-master pod]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx 1/1 Running 1 14h 172.18.96.2 k8s-node-2

test 2/2 Running 0 2m 172.18.3.2 k8s-node-1

[root@k8s-master pod]# curl -I 172.18.3.2

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Mon, 16 Dec 2019 12:37:18 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

node1:

[root@k8s-node-1 ~]# docker container ls -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

fcb4cec97194 10.0.0.11:5000/busybox:latest "sleep 1000" 3 minutes ago Up 3 minutes k8s_busybox.f5d3e53a_test_default_5ef9bfb3-2000-11ea-913e-000c293c3b9a_0db8bc81

2ddd37378f0c 10.0.0.11:5000/nginx:1.13 "nginx -g 'daemon ..." 3 minutes ago Up 3 minutes k8s_nginx.91390390_test_default_5ef9bfb3-2000-11ea-913e-000c293c3b9a_23c7f77c

86280324d6d7 10.0.0.11:5000/rhel7/pod-infrastructure:latest "/pod" 3 minutes ago Up 3 minutes k8s_POD.e5ea03c1_test_default_5ef9bfb3-2000-11ea-913e-000c293c3b9a_4220d726

Alternatively, open a shell inside a pod's container

[root@k8s-master svc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-l6zqz 1/1 Running 0 18m 172.18.40.3 k8s-node-1

nginx-rdkpz 1/1 Running 0 18m 172.18.2.4 k8s-node-2

[root@k8s-master svc]# kubectl exec -it nginx-rdkpz /bin/bash

root@nginx-rdkpz:/# exit

5.2 ReplicationController

rc: keeps the specified number of pods alive at all times. An rc is associated with its pods through a label selector.
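The idea of "keep N pods that match a label alive" can be sketched as a comparison between the desired count and the number of pods whose label matches the selector. `rc_reconcile` is a hypothetical helper written for these notes, and the pod list fed to it is made-up sample data in a simplified "name label" format, not real kubectl output.

```shell
#!/bin/sh
# Hypothetical helper: read "name label" lines on stdin and report what a
# controller would have to do to reach the desired number of matching pods.
rc_reconcile() {
  selector=$1
  desired=$2
  # Count the lines whose second column equals the selector.
  current=$(awk -v sel="$selector" '$2 == sel { n++ } END { print n + 0 }')
  diff=$(( desired - current ))
  if [ "$diff" -gt 0 ]; then
    echo "create $diff"
  elif [ "$diff" -lt 0 ]; then
    echo "delete $(( -diff ))"
  else
    echo "in-sync"
  fi
}

# Sample data: one pod with another label and two pods matching app=myweb.
printf '%s\n' 'nginx app=web' 'nginx-aaaaa app=myweb' 'nginx-bbbbb app=myweb' |
  rc_reconcile app=myweb 5
```

The pod labelled `app=web` is ignored because it does not match the selector, which is why the rc in the example that follows never touches the standalone pods created in section 5.1.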

Example:

Master node (11):

[root@k8s-master ~]# cd /root/k8s_yaml/rc/

[root@k8s-master rc]# vim k8s_rc.yaml

Edit k8s_rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx
spec:
  replicas: 5 # 5 replicas
  selector:
    app: myweb # label selector of the rc (each rc must use a different value)
  template:
    metadata:
      labels:
        app: myweb # pod label (must match the rc selector)
    spec:
      containers:
        - name: myweb
          image: 10.0.0.11:5000/nginx:1.13
          ports:
            - containerPort: 80

Create the rc resource

[root@k8s-master rc]# kubectl create -f k8s_rc.yaml

replicationcontroller "nginx" created

[root@k8s-master rc]# kubectl get rc -o wide --show-labels

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR LABELS

nginx 5 5 5 19m myweb 10.0.0.11:5000/nginx:1.13 app=myweb app=myweb

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx 1/1 Running 2 23h 172.18.96.2 k8s-node-2 app=web

nginx-2f7jd 1/1 Running 0 19m 172.18.96.3 k8s-node-2 app=myweb

nginx-3s3f2 1/1 Running 0 19m 172.18.3.3 k8s-node-1 app=myweb

nginx-7k1qb 1/1 Running 0 19m 172.18.96.5 k8s-node-2 app=myweb

nginx-pmrk3 1/1 Running 0 19m 172.18.96.4 k8s-node-2 app=myweb

nginx-xhpv8 1/1 Running 0 19m 172.18.3.4 k8s-node-1 app=myweb

test 2/2 Running 5 9h 172.18.3.2 k8s-node-1 app=web

Delete the pods created earlier

[root@k8s-master rc]# kubectl delete pod test

pod "test" deleted

[root@k8s-master rc]# kubectl delete pod nginx

pod "nginx" deleted

[root@k8s-master rc]# kubectl get rc -o wide --show-labels

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR LABELS

nginx 5 5 5 27m myweb 10.0.0.11:5000/nginx:1.13 app=myweb app=myweb

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx-2f7jd 1/1 Running 0 27m 172.18.96.3 k8s-node-2 app=myweb

nginx-3s3f2 1/1 Running 0 27m 172.18.3.3 k8s-node-1 app=myweb

nginx-7k1qb 1/1 Running 0 27m 172.18.96.5 k8s-node-2 app=myweb

nginx-pmrk3 1/1 Running 0 27m 172.18.96.4 k8s-node-2 app=myweb

nginx-xhpv8 1/1 Running 0 27m 172.18.3.4 k8s-node-1 app=myweb

Alternatively, change the number of pods of the rc directly

[root@k8s-master svc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-7xqlq 1/1 Running 0 18m 172.18.2.2 k8s-node-2

nginx-8d6c5 1/1 Running 0 18m 172.18.2.3 k8s-node-2

nginx-kd312 1/1 Running 0 18m 172.18.40.2 k8s-node-1

nginx-l6zqz 1/1 Running 0 18m 172.18.40.3 k8s-node-1

nginx-rdkpz 1/1 Running 0 18m 172.18.2.4 k8s-node-2

[root@k8s-master svc]# kubectl scale rc nginx --replicas=2

replicationcontroller "nginx" scaled

[root@k8s-master svc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-l6zqz 1/1 Running 0 18m 172.18.40.3 k8s-node-1

nginx-rdkpz 1/1 Running 0 18m 172.18.2.4 k8s-node-2

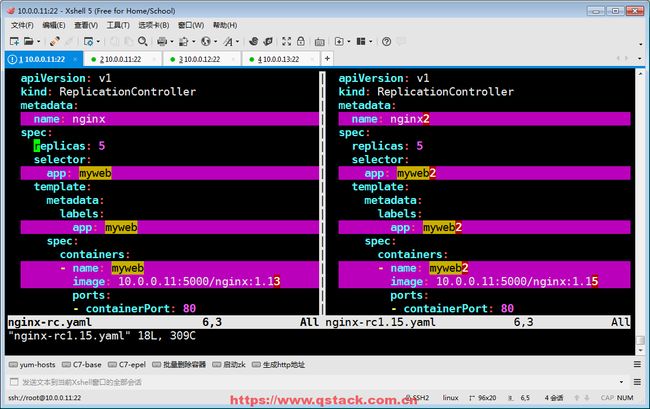

5.3.1 Rolling update of an rc

Master node (11)

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx-2zg2w 1/1 Running 0 3m 172.18.2.2 k8s-node-2 app=myweb

nginx-8zw3k 1/1 Running 0 3m 172.18.40.4 k8s-node-1 app=myweb

nginx-ndszh 1/1 Running 0 3m 172.18.40.3 k8s-node-1 app=myweb

nginx-s8lvq 1/1 Running 0 3m 172.18.40.2 k8s-node-1 app=myweb

nginx-z5kgt 1/1 Running 0 3m 172.18.2.3 k8s-node-2 app=myweb

# First check the current nginx version

[root@k8s-master rc]# curl -I 172.18.40.2

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Tue, 17 Dec 2019 21:20:42 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

[root@k8s-master rc]# vim k8s_rc2.yaml

Edit k8s_rc2.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx2
spec:
  replicas: 5 # 5 replicas
  selector:
    app: myweb2
  template:
    metadata:
      labels:
        app: myweb2
    spec:
      containers:
        - name: myweb2
          image: 10.0.0.11:5000/nginx:1.15
          ports:
            - containerPort: 80

node2 (13)

# Upload the nginx 1.15 image

[root@k8s-node-2 docker_image]# docker load -i docker_nginx1.15.tar.gz

8b15606a9e3e: Loading layer [==================================================>] 58.44 MB/58.44 MB

94ad191a291b: Loading layer [==================================================>] 54.35 MB/54.35 MB

92b86b4e7957: Loading layer [==================================================>] 3.584 kB/3.584 kB

Loaded image: docker.io/nginx:latest

[root@k8s-node-2 docker_image]# docker tag docker.io/nginx:latest 10.0.0.11:5000/nginx:1.15

[root@k8s-node-2 docker_image]# docker push 10.0.0.11:5000/nginx:1.15

The push refers to a repository [10.0.0.11:5000/nginx]

92b86b4e7957: Pushed

94ad191a291b: Pushed

8b15606a9e3e: Pushed

1.15: digest: sha256:204a9a8e65061b10b92ad361dd6f406248404fe60efd5d6a8f2595f18bb37aad size: 948

Master node (11)

# Start the update

[root@k8s-master rc]# kubectl rolling-update nginx -f k8s_rc2.yaml --update-period=10s # replace one pod every 10 seconds (the default is one per minute)

Created nginx2

Scaling up nginx2 from 0 to 5, scaling down nginx from 5 to 0 (keep 5 pods available, don't exceed 6 pods)

Scaling nginx2 up to 1

Scaling nginx down to 4

Scaling nginx2 up to 2

Scaling nginx down to 3

Scaling nginx2 up to 3

Scaling nginx down to 2

Scaling nginx2 up to 4

Scaling nginx down to 1

Scaling nginx2 up to 5

Scaling nginx down to 0

Update succeeded. Deleting nginx

replicationcontroller "nginx" rolling updated to "nginx2"

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx2-726j3 1/1 Running 0 1m 172.18.40.3 k8s-node-1 app=myweb2

nginx2-8r5xk 1/1 Running 0 2m 172.18.2.4 k8s-node-2 app=myweb2

nginx2-dhzwt 1/1 Running 0 1m 172.18.2.2 k8s-node-2 app=myweb2

nginx2-gs72g 1/1 Running 0 1m 172.18.2.5 k8s-node-2 app=myweb2

nginx2-nsbcx 1/1 Running 0 1m 172.18.40.5 k8s-node-1 app=myweb2

[root@k8s-master rc]# curl -I 172.18.40.3

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Tue, 17 Dec 2019 21:41:25 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes
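The rolling-update log earlier in this section alternates between adding one pod to the new rc and removing one from the old rc. That sequence can be reproduced with a short loop. `rolling_plan` is a hypothetical helper that only prints the plan; it does not talk to a cluster, and the message wording is copied from the log above.

```shell
#!/bin/sh
# Hypothetical helper: print the scale-up / scale-down steps of a rolling
# update from rc $1 to rc $2 with $3 replicas, one pod at a time.
rolling_plan() {
  old=$1
  new=$2
  replicas=$3
  i=1
  while [ "$i" -le "$replicas" ]; do
    echo "Scaling $new up to $i"
    echo "Scaling $old down to $(( replicas - i ))"
    i=$(( i + 1 ))
  done
}

# Same names and replica count as the example in this section.
rolling_plan nginx nginx2 5
```

Because a new pod is started before an old one is removed, the total never drops below the desired count, which matches the "keep 5 pods available, don't exceed 6 pods" line in the log.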

5.3.2 Rolling back an rc

Master node (11)

[root@k8s-master rc]# kubectl rolling-update nginx2 -f k8s_rc.yaml --update-period=10s

Created nginx

Scaling up nginx from 0 to 5, scaling down nginx2 from 5 to 0 (keep 5 pods available, don't exceed 6 pods)

Scaling nginx up to 1

Scaling nginx2 down to 4

Scaling nginx up to 2

Scaling nginx2 down to 3

Scaling nginx up to 3

Scaling nginx2 down to 2

Scaling nginx up to 4

Scaling nginx2 down to 1

Scaling nginx up to 5

Scaling nginx2 down to 0

Update succeeded. Deleting nginx2

replicationcontroller "nginx2" rolling updated to "nginx"

[root@k8s-master rc]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

nginx-7vltp 1/1 Running 0 21s 172.18.40.3 k8s-node-1 app=myweb

nginx-gdz6g 1/1 Running 0 31s 172.18.2.5 k8s-node-2 app=myweb

nginx-nb13d 1/1 Running 0 1m 172.18.40.2 k8s-node-1 app=myweb

nginx-q4ldc 1/1 Running 0 41s 172.18.40.4 k8s-node-1 app=myweb

nginx-wh7cc 1/1 Running 0 51s 172.18.2.3 k8s-node-2 app=myweb

[root@k8s-master rc]# curl -I 172.18.40.3

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Tue, 17 Dec 2019 21:46:24 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

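5.4 Service

- A service exposes ports for pods

- It load-balances across those pods

- By default a service uses iptables for load balancing; from k8s 1.8 on, IPVS (LVS, a layer-4 load balancer working at the TCP/UDP transport layer) is also available and recommended

Example 1:

Add an svc resource

Master node (11)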

[root@k8s-master svc]# pwd

/root/k8s_yaml/svc

[root@k8s-master svc]# vim k8s_svc.yaml

Create k8s_svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort # maps a host port to the cluster VIP (the default type is ClusterIP)
  ports:
    - port: 80 # port on the cluster IP (VIP)
      nodePort: 30000 # node port (port on each host)
      targetPort: 80 # pod port (port in the container)
  selector:
    app: myweb # label selector (selects the pods of the rc)

# Start an rc first

[root@k8s-master svc]# kubectl create -f /root/k8s_yaml/rc/k8s_rc.yaml

replicationcontroller "nginx" created

# Then create the svc

[root@k8s-master svc]# kubectl create -f /root/k8s_yaml/svc/k8s_svc.yaml

service "myweb" created

[root@k8s-master svc]# kubectl get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.254.0.1 443/TCP 4d

myweb 10.254.158.56 80:30000/TCP 16s app=myweb

[root@k8s-master svc]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-7xqlq 1/1 Running 0 51s 172.18.2.2 k8s-node-2

nginx-8d6c5 1/1 Running 0 51s 172.18.2.3 k8s-node-2

nginx-kd312 1/1 Running 0 51s 172.18.40.2 k8s-node-1

nginx-l6zqz 1/1 Running 0 51s 172.18.40.3 k8s-node-1

nginx-rdkpz 1/1 Running 0 51s 172.18.2.4 k8s-node-2

Open in a browser: http://10.0.0.12:30000/

Open in a browser: http://10.0.0.13:30000/

Example 2:

Add a second svc resource bound to the same rc

Master node (11)

Change the nodePort range

[root@k8s-master svc]# vim /etc/kubernetes/apiserver

Edit /etc/kubernetes/apiserver

KUBE_API_ARGS="--service-node-port-range=3000-50000"

Restart the kube-apiserver service

[root@k8s-master svc]# systemctl restart kube-apiserver.service
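The range change matters because the apiserver rejects a nodePort outside `--service-node-port-range`, whose default is 30000-32767. The check can be sketched as below. `port_in_range` is a hypothetical helper for illustration, not something kubectl provides.

```shell
#!/bin/sh
# Hypothetical helper: succeed if port $1 lies inside the range $2 ("low-high").
port_in_range() {
  port=$1
  lo=${2%-*}
  hi=${2#*-}
  [ "$port" -ge "$lo" ] && [ "$port" -le "$hi" ]
}

# Compare the default range with the widened range configured above.
for range in 30000-32767 3000-50000; do
  if port_in_range 3000 "$range"; then
    echo "nodePort 3000 is allowed with range $range"
  else
    echo "nodePort 3000 is rejected with range $range"
  fi
done
```

With the default range the nodePort 3000 used in the next manifest would be refused, which is why the range is widened first.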

Create a new svc YAML file

[root@k8s-master svc]# pwd

/root/k8s_yaml/svc

[root@k8s-master svc]# vim k8s_svc2.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb2
spec:
  type: NodePort # maps a host port to the cluster VIP (the default type is ClusterIP)
  ports:
    - port: 80 # port on the cluster IP (VIP)
      nodePort: 3000 # node port (port on each host)
      targetPort: 80 # pod port (port in the container)
  selector:
    app: myweb # label selector (selects the pods of the rc)

[root@k8s-master svc]# kubectl create -f k8s_svc2.yaml

service "myweb2" created

[root@k8s-master svc]# kubectl get svc -o wide --show-labels

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR LABELS

kubernetes 10.254.0.1 443/TCP 4d component=apiserver,provider=kubernetes

myweb 10.254.113.230 80:30000/TCP 17m app=myweb

myweb2 10.254.236.191 80:3000/TCP 54s app=myweb

Open in a browser: http://10.0.0.12:3000/

Open in a browser: http://10.0.0.13:3000/

Alternatively, create a service resource from the command line

kubectl expose rc nginx --type=NodePort --port=80

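5.5 Deployment

After a rolling update of an rc the pod label changes (from myweb to myweb2 in the example above), so a service that still selects the old label loses its backends and access is interrupted. k8s introduced the deployment resource to solve this.

Advantages over an rc:

- Upgrades do not interrupt the service

- No dependence on YAML files

- An rc label selector supports only equality-based matching; the selector of the rs (ReplicaSet) that a deployment manages also supports set-based matching

Example 1.1:

Create a deployment

Master node (11):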

[root@k8s-master deploy]# pwd

/root/k8s_yaml/deploy

[root@k8s-master deploy]# vim k8s_deploy.yaml

Create k8s_deploy.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: 10.0.0.11:5000/nginx:1.13
          ports:
            - containerPort: 80
          resources: # resource constraints
            limits: # the most the container may use
              cpu: 100m
            requests: # the minimum the container needs
              cpu: 100m
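The `cpu: 100m` value in the manifest is in millicores: 1000m equals one CPU core, so 100m is a tenth of a core. A small conversion sketch follows. `cpu_to_millicores` is a hypothetical helper written for these notes and handles only the two common notations (a trailing `m`, or a plain number of cores).

```shell
#!/bin/sh
# Hypothetical helper: convert a Kubernetes CPU quantity to millicores.
# "100m" is already in millicores; "2" or "0.5" is a number of cores.
cpu_to_millicores() {
  case $1 in
    *m) echo "${1%m}" ;;
    *)  awk -v c="$1" 'BEGIN { printf "%d\n", c * 1000 }' ;;
  esac
}

# Show a few quantities side by side.
for q in 100m 0.5 2; do
  echo "$q = $(cpu_to_millicores "$q") millicores"
done
```

Setting the request equal to the limit, as the manifest does, means each nginx container is both guaranteed and capped at the same 0.1 core.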

Create the deploy resource

[root@k8s-master deploy]# kubectl create -f k8s_deploy.yaml

deployment "nginx-deployment" created

List all resources

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 3 5s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 5d

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deployment-2807576163 3 3 3 5s nginx 10.0.0.11:5000/nginx:1.13 app=nginx,pod-template-hash=2807576163

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deployment-2807576163-jh82t 1/1 Running 0 5s 172.18.40.2 k8s-node-1

po/nginx-deployment-2807576163-jx9h4 1/1 Running 0 5s 172.18.40.3 k8s-node-1

po/nginx-deployment-2807576163-m6wp6 1/1 Running 0 5s 172.18.2.2 k8s-node-2

Create the svc resource directly from the command line

[root@k8s-master deploy]# kubectl expose deployment nginx-deployment --port=80 --target-port=80 --type=NodePort

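# --port: port on the cluster VIP

# --target-port: port in the container

# --type=NodePort: the service type; exposes a port on every host, chosen at random from the node port range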

service "nginx-deployment" exposed

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 3 6m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 5d

svc/nginx-deployment 10.254.49.232 80:12096/TCP 5s app=nginx

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deployment-2807576163 3 3 3 6m nginx 10.0.0.11:5000/nginx:1.13 app=nginx,pod-template-hash=2807576163

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deployment-2807576163-jh82t 1/1 Running 0 6m 172.18.40.2 k8s-node-1

po/nginx-deployment-2807576163-jx9h4 1/1 Running 0 6m 172.18.40.3 k8s-node-1

po/nginx-deployment-2807576163-m6wp6 1/1 Running 0 6m 172.18.2.2 k8s-node-2

[root@k8s-master deploy]# curl -I http://10.0.0.12:12096

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Thu, 19 Dec 2019 12:53:43 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

[root@k8s-master deploy]# curl -I http://10.0.0.13:12096

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Thu, 19 Dec 2019 12:53:46 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

Open in a browser: http://10.0.0.12:12096/

Open in a browser: http://10.0.0.13:12096/

Example 1.2:

Upgrade

# Edit the deploy resource directly from the command line

[root@k8s-master ~]# kubectl edit deployment nginx-deployment

# Change the nginx image tag from 1.13 to 1.15

- image: 10.0.0.11:5000/nginx:1.15

Check the state again

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 3 19m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 5d

svc/nginx-deployment 10.254.49.232 80:12096/TCP 13m app=nginx

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deployment-2807576163 0 0 0 19m nginx 10.0.0.11:5000/nginx:1.13 app=nginx,pod-template-hash=2807576163

rs/nginx-deployment-3014407781 3 3 3 28s nginx 10.0.0.11:5000/nginx:1.15 app=nginx,pod-template-hash=3014407781

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deployment-3014407781-0411d 1/1 Running 0 28s 172.18.40.4 k8s-node-1

po/nginx-deployment-3014407781-6hgl9 1/1 Running 0 28s 172.18.2.3 k8s-node-2

po/nginx-deployment-3014407781-mf8xb 1/1 Running 0 28s 172.18.2.4 k8s-node-2

[root@k8s-master deploy]# curl -I http://10.0.0.13:12096

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Thu, 19 Dec 2019 13:00:32 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

[root@k8s-master deploy]# curl -I http://10.0.0.12:12096

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Thu, 19 Dec 2019 13:00:36 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

Open in a browser: http://10.0.0.12:12096/

Open in a browser: http://10.0.0.13:12096/

Example 1.3:

Deployment upgrade and rollback

node2 (13)

# Upload the nginx 1.17 image

[root@k8s-node-2 docker_image]# docker load -i docker_nginx.tar.1.17.gz

b67d19e65ef6: Loading layer [==================================================>] 72.5 MB/72.5 MB

6eaad811af02: Loading layer [==================================================>] 57.54 MB/57.54 MB

a89b8f05da3a: Loading layer [==================================================>] 3.584 kB/3.584 kB

The image nginx:latest already exists, renaming the old one with ID sha256:be1f31be9a87cc4b8668e6f0fee4efd2b43e5d4f7734c75ac49432175aaa6ef9 to empty string

Loaded image: nginx:latest

[root@k8s-node-2 docker_image]# docker tag nginx:latest 10.0.0.11:5000/nginx:1.17

[root@k8s-node-2 docker_image]# docker push 10.0.0.11:5000/nginx:1.17

The push refers to a repository [10.0.0.11:5000/nginx]

92b86b4e7957: Layer already exists

94ad191a291b: Layer already exists

8b15606a9e3e: Layer already exists

1.17: digest: sha256:204a9a8e65061b10b92ad361dd6f406248404fe60efd5d6a8f2595f18bb37aad size: 948

[root@k8s-node-2 docker_image]# curl 10.0.0.11:5000/v2/_catalog

{"repositories":["busybox","nginx","rhel7/pod-infrastructure"]}

On the master node:

# Upgrade from 1.15 to 1.17

[root@k8s-master deploy]# kubectl edit deployment nginx-deployment

deployment "nginx-deployment" edited

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deployment 3 3 3 3 29m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 5d

svc/nginx-deployment 10.254.31.63 80:43272/TCP 28m app=nginx

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deployment-2807576163 0 0 0 29m nginx 10.0.0.11:5000/nginx:1.13 app=nginx,pod-template-hash=2807576163

rs/nginx-deployment-3014407781 0 0 0 26m nginx 10.0.0.11:5000/nginx:1.15 app=nginx,pod-template-hash=3014407781

rs/nginx-deployment-3221239399 3 3 3 7s nginx 10.0.0.11:5000/nginx:1.17 app=nginx,pod-template-hash=3221239399

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deployment-3221239399-dtzg4 1/1 Running 0 6s 172.18.40.2 k8s-node-1

po/nginx-deployment-3221239399-wm9cv 1/1 Running 0 7s 172.18.2.3 k8s-node-2

po/nginx-deployment-3221239399-xf2vg 1/1 Running 0 7s 172.18.2.2 k8s-node-2

[root@k8s-master deploy]# curl -I http://10.0.0.13:23841

HTTP/1.1 200 OK

Server: nginx/1.17.5

Date: Fri, 20 Dec 2019 15:26:03 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 22 Oct 2019 14:30:00 GMT

Connection: keep-alive

ETag: "5daf1268-264"

Accept-Ranges: bytes

# View all historical revisions of the deployment

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deployment

deployments "nginx-deployment"

REVISION CHANGE-CAUSE

1

2

3

Delete the deployment and the svc:

[root@k8s-master deploy]# kubectl delete -f k8s_deploy.yaml

[root@k8s-master svc]# kubectl delete svc nginx-deployment

service "nginx-deployment" deleted

Doing the same from the command line

On the master node:

# Create the deployment from the command line

[root@k8s-master svc]# kubectl run nginx-deploy --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

deployment "nginx-deploy" created

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deploy 3 3 3 3 4s

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 6d

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deploy-703570908 3 3 3 4s nginx-deploy 10.0.0.11:5000/nginx:1.13 pod-template-hash=703570908,run=nginx-deploy

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deploy-703570908-6fjrp 1/1 Running 0 4s 172.18.40.3 k8s-node-1

po/nginx-deploy-703570908-bsvh2 1/1 Running 0 4s 172.18.40.2 k8s-node-1

po/nginx-deploy-703570908-ns7bq 1/1 Running 0 4s 172.18.2.2 k8s-node-2

# Upgrade to 1.15

[root@k8s-master svc]# kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.15

deployment "nginx-deploy" image updated

# In nginx-deploy=10.0.0.11:5000/nginx:1.15, the part before "=" (nginx-deploy) is the container name

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deploy 3 3 3 3 2m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 6d

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deploy-703570908 0 0 0 2m nginx-deploy 10.0.0.11:5000/nginx:1.13 pod-template-hash=703570908,run=nginx-deploy

rs/nginx-deploy-853386206 3 3 3 1m nginx-deploy 10.0.0.11:5000/nginx:1.15 pod-template-hash=853386206,run=nginx-deploy

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deploy-853386206-5q2xf 1/1 Running 0 1m 172.18.2.3 k8s-node-2

po/nginx-deploy-853386206-67qzs 1/1 Running 0 1m 172.18.40.4 k8s-node-1

po/nginx-deploy-853386206-frjvs 1/1 Running 0 1m 172.18.2.4 k8s-node-2

# Upgrade again, to 1.17

[root@k8s-master svc]# kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.17

deployment "nginx-deploy" image updated

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deploy 3 3 3 3 3m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 6d

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deploy-1003201504 3 3 3 15s nginx-deploy 10.0.0.11:5000/nginx:1.17 pod-template-hash=1003201504,run=nginx-deploy

rs/nginx-deploy-703570908 0 0 0 3m nginx-deploy 10.0.0.11:5000/nginx:1.13 pod-template-hash=703570908,run=nginx-deploy

rs/nginx-deploy-853386206 0 0 0 2m nginx-deploy 10.0.0.11:5000/nginx:1.15 pod-template-hash=853386206,run=nginx-deploy

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deploy-1003201504-0m6h3 1/1 Running 0 14s 172.18.40.3 k8s-node-1

po/nginx-deploy-1003201504-5vn0r 1/1 Running 0 15s 172.18.40.2 k8s-node-1

po/nginx-deploy-1003201504-tl9bm 1/1 Running 0 15s 172.18.2.2 k8s-node-2

[root@k8s-master deploy]# curl -I 172.18.40.3

HTTP/1.1 200 OK

Server: nginx/1.17.5

Date: Fri, 20 Dec 2019 15:33:10 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 22 Oct 2019 14:30:00 GMT

Connection: keep-alive

ETag: "5daf1268-264"

Accept-Ranges: bytes

Preparing to roll back

# View the full upgrade history

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deploy

deployments "nginx-deploy"

REVISION CHANGE-CAUSE

1 kubectl run nginx-deploy --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

2 kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.15

3 kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.17

# Roll back to the previous revision

[root@k8s-master deploy]# kubectl rollout undo deployment nginx-deploy

deployment "nginx-deploy" rolled back

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deploy

deployments "nginx-deploy"

REVISION CHANGE-CAUSE

1 kubectl run nginx-deploy --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

3 kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.17

4 kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.15

[root@k8s-master deploy]# curl -I 172.18.40.4

HTTP/1.1 200 OK

Server: nginx/1.15.5

Date: Fri, 20 Dec 2019 15:36:04 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Tue, 02 Oct 2018 14:49:27 GMT

Connection: keep-alive

ETag: "5bb38577-264"

Accept-Ranges: bytes

# Roll back to a specific revision

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deploy

deployments "nginx-deploy"

REVISION CHANGE-CAUSE

1 kubectl run nginx-deploy --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

3 kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.17

4 kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.15

[root@k8s-master deploy]# kubectl rollout undo deployment nginx-deploy --to-revision=1

deployment "nginx-deploy" rolled back

[root@k8s-master deploy]# kubectl rollout history deployment nginx-deploy

deployments "nginx-deploy"

REVISION CHANGE-CAUSE

3 kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.17

4 kubectl set image deployment nginx-deploy nginx-deploy=10.0.0.11:5000/nginx:1.15

5 kubectl run nginx-deploy --image=10.0.0.11:5000/nginx:1.13 --replicas=3 --record

[root@k8s-master deploy]# curl -I 172.18.40.3

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Fri, 20 Dec 2019 15:37:16 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes
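The renumbering visible in the `kubectl rollout history` output above (revision 2 disappears and comes back as 4, then revision 1 comes back as 5) can be modelled in a few lines. This is only a sketch of the bookkeeping as observed in these transcripts, not kubectl's implementation; the function name `rollback` and the dictionary representation are illustrative assumptions.

```python
def rollback(history, to_revision=None):
    """Model how the revision list changes after `kubectl rollout undo`.

    history: dict mapping revision number -> change-cause string.
    to_revision: revision to restore; None means "the previous one".
    Returns a new dict; the input is not modified.
    """
    revisions = sorted(history)
    if to_revision is None:
        if len(revisions) < 2:
            raise ValueError("no previous revision to roll back to")
        to_revision = revisions[-2]
    if to_revision not in history:
        raise KeyError(f"revision {to_revision} not found")
    if to_revision == revisions[-1]:
        return dict(history)  # already the current revision, nothing changes
    # The restored revision is dropped from its old slot and re-recorded
    # under the next free (highest) revision number.
    new_history = {rev: cause for rev, cause in history.items()
                   if rev != to_revision}
    new_history[revisions[-1] + 1] = history[to_revision]
    return new_history


history = {1: "run nginx:1.13", 2: "set image nginx:1.15", 3: "set image nginx:1.17"}
after_undo = rollback(history)                     # undo -> back to 1.15
after_pin = rollback(after_undo, to_revision=1)    # --to-revision=1 -> back to 1.13
print(sorted(after_undo), sorted(after_pin))
```

The highest revision number always describes what is currently running, which is why the curl checks above report 1.15 and then 1.13.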

# Create the svc from the command line

[root@k8s-master deploy]# kubectl expose deployment nginx-deploy --port=80 --target-port=80 --type=NodePort

service "nginx-deploy" exposed

[root@k8s-master deploy]# kubectl get all -o wide

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/nginx-deploy 3 3 3 3 9m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 6d

svc/nginx-deploy 10.254.218.32 80:44001/TCP 3s run=nginx-deploy

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rs/nginx-deploy-1003201504 0 0 0 6m nginx-deploy 10.0.0.11:5000/nginx:1.17 pod-template-hash=1003201504,run=nginx-deploy

rs/nginx-deploy-703570908 3 3 3 9m nginx-deploy 10.0.0.11:5000/nginx:1.13 pod-template-hash=703570908,run=nginx-deploy

rs/nginx-deploy-853386206 0 0 0 8m nginx-deploy 10.0.0.11:5000/nginx:1.15 pod-template-hash=853386206,run=nginx-deploy

NAME READY STATUS RESTARTS AGE IP NODE

po/nginx-deploy-703570908-7pnsz 1/1 Running 0 2m 172.18.40.3 k8s-node-1

po/nginx-deploy-703570908-f92bx 1/1 Running 0 2m 172.18.2.2 k8s-node-2

po/nginx-deploy-703570908-hk739 1/1 Running 0 2m 172.18.40.2 k8s-node-1

[root@k8s-master deploy]# curl -I http://10.0.0.12:44001

HTTP/1.1 200 OK

Server: nginx/1.13.12

Date: Fri, 20 Dec 2019 15:39:56 GMT

Content-Type: text/html

Content-Length: 612

Last-Modified: Mon, 09 Apr 2018 16:01:09 GMT

Connection: keep-alive

ETag: "5acb8e45-264"

Accept-Ranges: bytes

# Delete the resources

[root@k8s-master deploy]# kubectl delete deployment nginx-deploy

deployment "nginx-deploy" deleted

[root@k8s-master deploy]# kubectl delete svc nginx-deploy

service "nginx-deploy" deleted

Web reference found during class:

Kubernetes笔记(十一)--Deployment使用+滚动升级策略 (Kubernetes Notes (11): Using Deployments and the Rolling Upgrade Strategy)

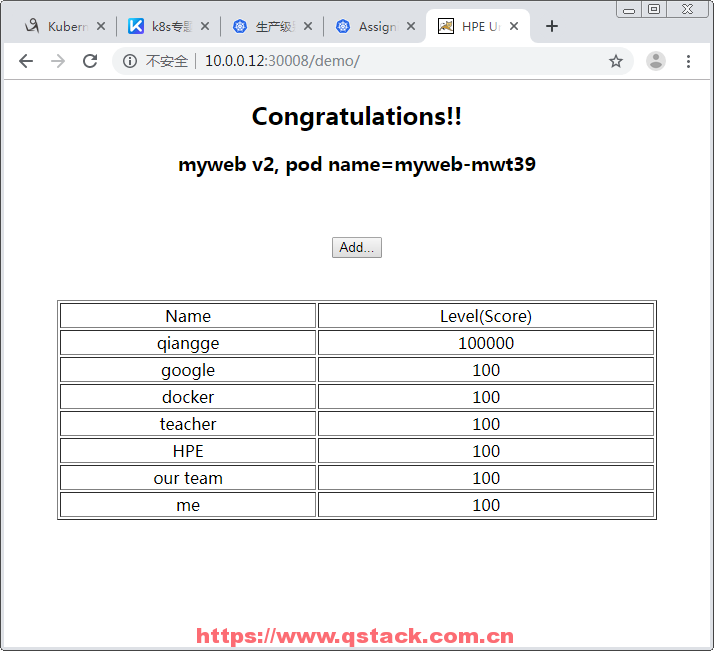

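5.6 tomcat+mysql exercise

In k8s, containers reach one another through the VIP (the address of a Service resource).

On the master node:

# Upload the instructor's tomcat_demo.zip, unzip it, and delete the files with "pv" in their names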

[root@k8s-master tomcat_demo]# pwd

/root/k8s_yaml/tomcat_demo

[root@k8s-master tomcat_demo]# ls

mysql-rc.yml mysql-svc.yml tomcat-rc.yml tomcat-svc.yml

Contents of mysql-rc.yml:

apiVersion: v1
kind: ReplicationController
metadata:
  name: mysql
spec:
  replicas: 1  # run exactly one pod
  selector:
    app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: 10.0.0.11:5000/mysql:5.7
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '123456'

Contents of mysql-svc.yml:

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
    targetPort: 3306
  selector:
    app: mysql

# Not exposed outside the cluster, so no port mapping (NodePort) is set

Contents of tomcat-rc.yml:

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 1
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/tomcat-app:v2
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: 'mysql'
        - name: MYSQL_SERVICE_PORT
          value: '3306'

Contents of tomcat-svc.yml:

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort  # allow access from outside the cluster
  ports:
  - port: 8080
    nodePort: 30008
  selector:
    app: myweb

On the node (13):

# Upload the mysql and tomcat images

[root@k8s-node-2 docker_image]# pwd

/root/docker_image

[root@k8s-node-2 docker_image]# ls

docker_busybox.tar.gz docker_nginx1.13.tar.gz docker_nginx.tar.1.17.gz tomcat-app-v2.tar.gz

docker-mysql-5.7.tar.gz docker_nginx1.15.tar.gz pod-infrastructure-latest.tar.gz

[root@k8s-node-2 docker_image]# docker load -i docker-mysql-5.7.tar.gz

Loaded image: docker.io/mysql:5.7

[root@k8s-node-2 docker_image]# docker load -i tomcat-app-v2.tar.gz

Loaded image: docker.io/kubeguide/tomcat-app:v2

[root@k8s-node-2 docker_image]# docker tag docker.io/mysql:5.7 10.0.0.11:5000/mysql:5.7

[root@k8s-node-2 docker_image]# docker tag docker.io/kubeguide/tomcat-app:v2 10.0.0.11:5000/tomcat-app:v2

[root@k8s-node-2 docker_image]# docker push 10.0.0.11:5000/mysql:5.7

The push refers to a repository [10.0.0.11:5000/mysql]

5.7: digest: sha256:e41e467ce221d6e71601bf8c167c996b3f7f96c55e7a580ef72f75fdf9289501 size: 2616

[root@k8s-node-2 docker_image]# docker push 10.0.0.11:5000/tomcat-app:v2

The push refers to a repository [10.0.0.11:5000/tomcat-app]

v2: digest: sha256:dd1ecbb64640e542819303d5667107a9c162249c14d57581cd09c2a4a19095a0 size: 5719

On the master node (11):

[root@k8s-master tomcat_demo]# kubectl create -f mysql-rc.yml

replicationcontroller "mysql" created

[root@k8s-master tomcat_demo]# kubectl create -f mysql-svc.yml

service "mysql" created

[root@k8s-master tomcat_demo]# kubectl get svc

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 443/TCP 6d

mysql 10.254.145.182 3306/TCP 3s # note down this randomly assigned IP

Modify tomcat-rc.yml:

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 1  # 2 would also work
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/tomcat-app:v2
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: '10.254.145.182'  # DNS is not set up yet, so replace the name mysql with the svc VIP
        - name: MYSQL_SERVICE_PORT
          value: '3306'
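The manifest above hands the database address to the application through two environment variables. How the demo application actually reads them is not shown in these notes; the following is only a sketch of the usual pattern, with a hypothetical helper `mysql_address` and hypothetical defaults.

```python
import os


def mysql_address(env):
    """Return (host, port) taken from the variables set in tomcat-rc.yml.

    env is any mapping, e.g. os.environ. The fallback values are
    assumptions for illustration, not something the demo app defines.
    """
    host = env.get("MYSQL_SERVICE_HOST", "mysql")
    port = int(env.get("MYSQL_SERVICE_PORT", "3306"))
    return host, port


# Inside the container this would normally be called with os.environ.
print(mysql_address(os.environ))
```

Because the address is read at start-up, switching between the VIP and the service name only requires editing the manifest and recreating the pod, not rebuilding the image.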

Modify tomcat-svc.yml:

apiVersion: v1
kind: Service
metadata:
  name: tomcat  # renamed to tomcat
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30008
  selector:
    app: myweb

Start the tomcat container:

[root@k8s-master tomcat_demo]# kubectl create -f tomcat-rc.yml

replicationcontroller "myweb" created

[root@k8s-master tomcat_demo]# kubectl create -f tomcat-svc.yml

service "tomcat" created

[root@k8s-master tomcat_demo]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 15m mysql 10.0.0.11:5000/mysql:5.7 app=mysql

rc/myweb 1 1 1 6m myweb 10.0.0.11:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 6d

svc/mysql 10.254.145.182 3306/TCP 15m app=mysql

svc/tomcat 10.254.90.68 8080:30008/TCP 6m app=myweb

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-1k2ln 1/1 Running 0 15m 172.18.2.2 k8s-node-2

po/myweb-g418s 1/1 Running 0 6m 172.18.40.2 k8s-node-1

[root@k8s-master tomcat_demo]# kubectl get pod -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE LABELS

mysql-1k2ln 1/1 Running 0 18m 172.18.2.2 k8s-node-2 app=mysql

myweb-g418s 1/1 Running 0 9m 172.18.40.2 k8s-node-1 app=myweb
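The SELECTOR and LABELS columns above are what tie a Service to its pods: a pod backs a Service when every key/value pair in the Service's selector also appears in the pod's labels. A minimal sketch of that matching rule, using the pod data from the output above (the function names are illustrative, and only simple equality selectors are modelled):

```python
def matches(selector, labels):
    """True when every selector key/value pair is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector, pods, port):
    """List the ip:port pairs of the pods a Service selector would pick."""
    return [f"{pod['ip']}:{port}" for pod in pods
            if matches(selector, pod["labels"])]


pods = [
    {"name": "mysql-1k2ln", "ip": "172.18.2.2", "labels": {"app": "mysql"}},
    {"name": "myweb-g418s", "ip": "172.18.40.2", "labels": {"app": "myweb"}},
]

print(endpoints({"app": "mysql"}, pods, 3306))   # backends of svc/mysql
print(endpoints({"app": "myweb"}, pods, 8080))   # backends of svc/tomcat
```

This is also why renaming the Service from myweb to tomcat did not break anything: the name is irrelevant to the matching, only the selector `app: myweb` is.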

Browser access: http://10.0.0.12:30008

Browser access: http://10.0.0.13:30008

Browser access: http://10.0.0.12:30008/demo/

Browser access: http://10.0.0.13:30008/demo/

Page content shown:

Congratulations!!

myweb v2, pod name=myweb-g418s

Insert a row through the page:

Add... -->

Please input your info

Your name: rock

Your Level: 9999

Submit -->

Enter the mysql container and check the row just inserted:

[root@k8s-master tomcat_demo]# kubectl exec -it mysql-1k2ln /bin/bash

root@mysql-1k2ln:/# mysql -uroot -p123456

mysql> use HPE_APP;

mysql> select * from T_USERS;

+----+-----------+-------+
| ID | USER_NAME | LEVEL |
+----+-----------+-------+
|  1 | me        |   100 |
|  2 | our team  |   100 |
|  3 | HPE       |   100 |
|  4 | teacher   |   100 |
|  5 | docker    |   100 |
|  6 | google    |   100 |
|  7 | rock      |  9999 |
+----+-----------+-------+

7 rows in set (0.00 sec)

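5.7 DaemonSet resource:

Well suited to log collection, e.g. a filebeat container

Well suited to monitoring, e.g. cadvisor or node-exporter containers (by default, one pod is started on every node)

DaemonSet demo:

On the master node (11):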

[root@k8s-master deamon_set]# pwd

/root/k8s_yaml/deamon_set

[root@k8s-master deamon_set]# vim k8s_deamon_set.yaml

Edit k8s_deamon_set.yaml:

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: daemonset
spec:
  template:
    metadata:
      labels:
        app: daemonset
    spec:
      containers:
      - name: daemonset
        image: 10.0.0.11:5000/nginx:1.13
        ports:
        - containerPort: 80

After creating the resource from this file, check the result:

[root@k8s-master deamon_set]# kubectl get all -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 8d

NAME READY STATUS RESTARTS AGE IP NODE

po/daemonset-ht8s0 1/1 Running 0 5s 172.18.2.4 k8s-node-2

po/daemonset-phptw 1/1 Running 0 5s 172.18.40.2 k8s-node-1

[root@k8s-master deamon_set]# kubectl get daemonset -o wide

NAME DESIRED CURRENT READY NODE-SELECTOR AGE CONTAINER(S) IMAGE(S) SELECTOR

daemonset 2 2 2 15s daemonset 10.0.0.11:5000/nginx:1.13 app=daemonset
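DESIRED is 2 above simply because the cluster has two nodes. The rule "one pod per node" can be sketched as a small reconciliation step. This is an idealized model (it ignores node selectors, taints and unschedulable nodes), and the function name is an illustrative assumption.

```python
def nodes_missing_pod(nodes, pods_by_node):
    """Return the nodes on which a DaemonSet pod still has to be started.

    nodes: list of node names in the cluster.
    pods_by_node: dict mapping node name -> name of the DaemonSet pod
                  already running there.
    """
    return [node for node in nodes if node not in pods_by_node]


running = {"k8s-node-1": "daemonset-phptw", "k8s-node-2": "daemonset-ht8s0"}

# With the two nodes from the output above nothing is missing.
print(nodes_missing_pod(["k8s-node-1", "k8s-node-2"], running))

# If a (hypothetical) third node joined, exactly one more pod would be needed.
print(nodes_missing_pod(["k8s-node-1", "k8s-node-2", "k8s-node-3"], running))
```

This is the difference from a Deployment or RC, where the replica count is written in the manifest rather than derived from the number of nodes.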

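5.7 PetSet resource

PetSet first appeared (as an alpha feature) in K8s 1.3 and was renamed StatefulSet in 1.5.

PetSet compared with Deployment

PetSet:

"Pet" applications, e.g. mysql-01, mysql-02: containers that hold data, also called stateful containers

Deployment:

"Cattle" applications, e.g. web-xxxx, web-xxxx: containers that hold no data, i.e. stateless containers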

6 k8s add-ons

6.1 DNS service

The DNS service in a k8s cluster resolves svc names to their VIP addresses.

Pod details when there is no DNS:

[root@k8s-master tomcat_demo]# kubectl describe pod myweb-g418s

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

9m 9m 2 {kubelet k8s-node-1} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

9m 9m 1 {kubelet k8s-node-1} spec.containers{myweb} Normal Pulled Container image "10.0.0.11:500

9m 9m 1 {kubelet k8s-node-1} spec.containers{myweb} Normal Created Created container with docker

9m 9m 1 {kubelet k8s-node-1} spec.containers{myweb} Normal Started Started container with docker

Example:

On node2 (13), import the DNS docker image bundle:

[root@k8s-node-2 docker_image]# pwd

/root/docker_image

[root@k8s-node-2 docker_image]# docker load -i docker_k8s_dns.tar.gz

Loaded image: gcr.io/google_containers/kubedns-amd64:1.9

Loaded image: gcr.io/google_containers/kube-dnsmasq-amd64:1.4

Loaded image: gcr.io/google_containers/dnsmasq-metrics-amd64:1.0

Loaded image: gcr.io/google_containers/exechealthz-amd64:1.2

On the master node (11):

Upload skydns-rc.yaml and skydns-svc.yaml

[root@k8s-master dns]# pwd

/root/k8s_yaml/dns

[root@k8s-master dns]# ls

skydns-rc.yaml skydns-svc.yaml

Modify skydns-rc.yaml (excerpt; unchanged lines between the two fragments are omitted):

spec:
  nodeName: k8s-node-2  # add this line so the pod always runs on node2
  containers:
  - name: kubedns
    image: gcr.io/google_containers/kubedns-amd64:1.9
    # ...
    args:
    - --domain=cluster.local.
    - --dns-port=10053
    - --config-map=kube-dns
    - --kube-master-url=http://10.0.0.11:8080  # if the master runs on a different host, change this IP address

Create the DNS service:

[root@k8s-master dns]# kubectl create -f skydns-rc.yaml

deployment "kube-dns" created

[root@k8s-master dns]# kubectl get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 13s

NAME DESIRED CURRENT READY AGE

rs/kube-dns-1656925611 1 1 1 13s

NAME READY STATUS RESTARTS AGE

po/kube-dns-1656925611-hg1lg 4/4 Running 0 13s

# Then create the svc for DNS

[root@k8s-master dns]# kubectl create -f skydns-svc.yaml

service "kube-dns" created

# Check

[root@k8s-master dns]# kubectl get all -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 4m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.254.230.254 53/UDP,53/TCP 3s

# 10.254.230.254 is the fixed IP address declared in skydns-svc.yaml

NAME DESIRED CURRENT READY AGE

rs/kube-dns-1656925611 1 1 1 4m

NAME READY STATUS RESTARTS AGE

po/kube-dns-1656925611-hg1lg 4/4 Running 0 4m

On all nodes (12 and 13):

[root@k8s-node-2 docker_image]# vim /etc/kubernetes/kubelet

KUBELET_ARGS="--cluster_dns=10.254.230.254 --cluster_domain=cluster.local"

[root@k8s-node-2 docker_image]# systemctl restart kubelet.service

Verification:

On the master node (11):

# First delete all the tomcat+mysql resources

[root@k8s-master tomcat_demo]# ls

mysql-rc.yml mysql-svc.yml tomcat-rc.yml tomcat-svc.yml

[root@k8s-master tomcat_demo]# kubectl delete -f .

replicationcontroller "mysql" deleted

service "mysql" deleted

replicationcontroller "myweb" deleted

service "tomcat" deleted

[root@k8s-master tomcat_demo]# vim tomcat-rc.yml

Edit tomcat-rc.yml:

apiVersion: v1
kind: ReplicationController
metadata:
  name: myweb
spec:
  replicas: 1
  selector:
    app: myweb
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: 10.0.0.11:5000/tomcat-app:v2
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: 'mysql'  # change the IP address back to the name mysql
        - name: MYSQL_SERVICE_PORT
          value: '3306'

Recreate tomcat+mysql:

[root@k8s-master tomcat_demo]# ls

mysql-rc.yml mysql-svc.yml tomcat-rc.yml tomcat-svc.yml

[root@k8s-master tomcat_demo]# kubectl create -f .

replicationcontroller "mysql" created

service "mysql" created

replicationcontroller "myweb" created

service "tomcat" created

[root@k8s-master tomcat_demo]# kubectl get all -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 53s mysql 10.0.0.11:5000/mysql:5.7 app=mysql

rc/myweb 1 1 1 53s myweb 10.0.0.11:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 7d

svc/mysql 10.254.1.1 3306/TCP 53s app=mysql

svc/tomcat 10.254.73.193 8080:30008/TCP 53s app=myweb

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-qjtd8 1/1 Running 0 53s 172.18.40.2 k8s-node-1

po/myweb-4ckq0 1/1 Running 0 53s 172.18.40.3 k8s-node-1

# Enter the tomcat container

[root@k8s-master tomcat_demo]# kubectl exec -it myweb-4ckq0 /bin/bash

root@myweb-4ckq0:/usr/local/tomcat# cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local

nameserver 10.254.230.254 # the cluster DNS address

nameserver 180.76.76.76

nameserver 223.5.5.5

options ndots:5

root@myweb-4ckq0:/usr/local/tomcat# ping mysql

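PING mysql.default.svc.cluster.local (10.254.1.1): 56 data bytes # the name resolves to the correct IP, but ping never gets a reply (the Service VIP is virtual and only forwards the Service's declared ports)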

^C--- mysql.default.svc.cluster.local ping statistics ---

5 packets transmitted, 0 packets received, 100% packet loss
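The short name `mysql` became `mysql.default.svc.cluster.local` because of the `search` and `ndots` lines in the `/etc/resolv.conf` shown above. The sketch below reproduces the order in which a resolver following the usual glibc rules would try candidate names; it is a simplified model and the function name is an illustrative assumption.

```python
def candidate_names(name, search, ndots):
    """Return the fully qualified names a resolver would try, in order.

    name:   the name as typed, e.g. "mysql".
    search: the domains on the resolv.conf "search" line.
    ndots:  the "options ndots:N" value.
    """
    if name.endswith("."):
        return [name]  # already absolute, the search list is not used
    searched = [f"{name}.{domain}" for domain in search]
    if name.count(".") >= ndots:
        return [name] + searched  # enough dots: try the name as-is first
    return searched + [name]      # too few dots: walk the search list first


search = ["default.svc.cluster.local", "svc.cluster.local", "cluster.local"]
for fqdn in candidate_names("mysql", search, ndots=5):
    print(fqdn)
```

The first candidate is the one that answered in the ping output. The `default` component comes from the pod's own namespace.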

Browser access: http://10.0.0.12:30008/demo/

Browser access: http://10.0.0.13:30008/demo/

Page content shown:

Congratulations!!

myweb v2, pod name=myweb-4ckq0

# Check the pod details: the DNS warning is no longer reported

[root@k8s-master tomcat_demo]# kubectl describe pod myweb-4ckq0

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

12m 12m 1 {default-scheduler } Normal Scheduled Successfully assigned myweb-4ckq0 to k8s-node-1

12m 12m 1 {kubelet k8s-node-1} spec.containers{myweb} Normal Pulled Container image "10.0.0.11:5000/tomcat-app:v2" already present on machine

12m 12m 1 {kubelet k8s-node-1} spec.containers{myweb} Normal Created Created container with docker id 8a0b54a453f1; Security:[seccomp=unconfined]

12m 12m 1 {kubelet k8s-node-1} spec.containers{myweb} Normal Started Started container with docker id 8a0b54a453f1

6.2 Namespaces

Namespaces provide resource isolation.

Typical uses: cloud hosts, multi-tenancy.

Demo:

[root@k8s-master dns]# cat skydns-rc.yaml

metadata:
  name: kube-dns
  namespace: kube-system  # this line
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"

[root@k8s-master dns]# kubectl get all -o wide # lists the resources in the default namespace; kube-system is not shown

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 30m mysql 10.0.0.11:5000/mysql:5.7 app=mysql

rc/myweb 1 1 1 30m myweb 10.0.0.11:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kubernetes 10.254.0.1 443/TCP 7d

svc/mysql 10.254.1.1 3306/TCP 30m app=mysql

svc/tomcat 10.254.73.193 8080:30008/TCP 30m app=myweb

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-qjtd8 1/1 Running 0 30m 172.18.40.2 k8s-node-1

po/myweb-4ckq0 1/1 Running 0 30m 172.18.40.3 k8s-node-1

[root@k8s-master dns]# kubectl get all --namespace=kube-system # show only the resources in kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 54m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.254.230.254 53/UDP,53/TCP 50m

NAME DESIRED CURRENT READY AGE

rs/kube-dns-1656925611 1 1 1 54m

NAME READY STATUS RESTARTS AGE

po/kube-dns-1656925611-hg1lg 4/4 Running 0 54m

[root@k8s-master dns]# kubectl get all -n kube-system # short form of the same flag

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-dns 1 1 1 1 54m

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-dns 10.254.230.254 53/UDP,53/TCP 50m

NAME DESIRED CURRENT READY AGE

rs/kube-dns-1656925611 1 1 1 54m

NAME READY STATUS RESTARTS AGE

po/kube-dns-1656925611-hg1lg 4/4 Running 0 54m

Example: creating a new namespace

On the master node (11):

# View the namespaces that exist by default

[root@k8s-master dns]# kubectl get namespace

NAME STATUS AGE

default Active 7d

kube-system Active 7d # this namespace holds the system components

[root@k8s-master dns]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-qjtd8 1/1 Running 0 38m

myweb-4ckq0 1/1 Running 0 38m

[root@k8s-master dns]# kubectl get pod -n default

NAME READY STATUS RESTARTS AGE

mysql-qjtd8 1/1 Running 0 38m

myweb-4ckq0 1/1 Running 0 38m

[root@k8s-master dns]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

kube-dns-1656925611-hg1lg 4/4 Running 0 1h

First delete tomcat+mysql:

[root@k8s-master tomcat_demo]# kubectl delete -f .

replicationcontroller "mysql" deleted

service "mysql" deleted

replicationcontroller "myweb" deleted

service "tomcat" deleted

Create a new namespace:

[root@k8s-master tomcat_demo]# kubectl create namespace tomcat

namespace "tomcat" created

[root@k8s-master tomcat_demo]# kubectl get namespace

NAME STATUS AGE

default Active 7d

kube-system Active 7d

tomcat Active 42s

Modify the yml files and create the resources:

[root@k8s-master tomcat_demo]# ls

mysql-rc.yml mysql-svc.yml tomcat-rc.yml tomcat-svc.yml

[root@k8s-master tomcat_demo]# cat mysql-rc.yml | head -5

apiVersion: v1

kind: ReplicationController

metadata:

name: mysql

spec:

[root@k8s-master tomcat_demo]# sed -i '3a \ \ namespace: tomcat' *.yml

[root@k8s-master tomcat_demo]# cat mysql-rc.yml | head -5

apiVersion: v1

kind: ReplicationController

metadata:

namespace: tomcat

name: mysql
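The `sed -i '3a ...'` command above appends one line after line 3 of every manifest. That works here only because `metadata:` happens to be line 3 in all four files. A rough Python equivalent of that one operation is sketched below; the helper name is an illustrative assumption.

```python
def append_after_line(text, line_no, new_line):
    """Insert new_line after the 1-based line line_no, like sed's 'Na' command.

    If the text has fewer than line_no lines it is returned unchanged,
    which is also what sed does.
    """
    lines = text.splitlines()
    if line_no > len(lines):
        return text
    lines.insert(line_no, new_line)
    return "\n".join(lines) + "\n"


manifest = (
    "apiVersion: v1\n"
    "kind: ReplicationController\n"
    "metadata:\n"
    "  name: mysql\n"
    "spec:\n"
)
print(append_after_line(manifest, 3, "  namespace: tomcat"))
```

Passing `-n tomcat` to `kubectl create` is another way to choose the namespace without editing the files.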

[root@k8s-master tomcat_demo]# kubectl create -f .

replicationcontroller "mysql" created

service "mysql" created

replicationcontroller "myweb" created

service "tomcat" created

Check:

[root@k8s-master tomcat_demo]# kubectl get all

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes 10.254.0.1 443/TCP 7d

[root@k8s-master tomcat_demo]# kubectl get all -n tomcat -o wide

NAME DESIRED CURRENT READY AGE CONTAINER(S) IMAGE(S) SELECTOR

rc/mysql 1 1 1 1m mysql 10.0.0.11:5000/mysql:5.7 app=mysql

rc/myweb 1 1 1 1m myweb 10.0.0.11:5000/tomcat-app:v2 app=myweb

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/mysql 10.254.65.150 3306/TCP 1m app=mysql

svc/tomcat 10.254.15.40 8080:30008/TCP 1m app=myweb

NAME READY STATUS RESTARTS AGE IP NODE

po/mysql-8n9qt 1/1 Running 0 1m 172.18.40.2 k8s-node-1

po/myweb-vgrp2 1/1 Running 0 1m 172.18.40.3 k8s-node-1

Browser access: http://10.0.0.12:30008/demo/

Browser access: http://10.0.0.13:30008/demo/

Page content shown:

Congratulations!!

myweb v2, pod name=myweb-vgrp2

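6.3 Health checks

6.3.1 Types of probe

livenessProbe: health check. Periodically checks whether the service is alive; if the check fails, the container is restarted.

readinessProbe: availability check. Periodically checks whether the service is ready; if it is not, the pod is removed from the Service's endpoints.

6.3.2 Probe detection methods

- exec: run a command; exit code 0 means success, non-zero means failure

- httpGet: check the status code of an HTTP request; 2xx and 3xx are healthy, 4xx and 5xx are failures

- tcpSocket: test whether a port accepts connections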
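The success rules for the first two methods can be written down directly. The sketch below only encodes the rules stated in the list above (exit code 0, and a status code from 200 up to but not including 400); the function names are illustrative assumptions.

```python
def exec_probe_ok(exit_code):
    """An exec probe succeeds only when the command exits with 0."""
    return exit_code == 0


def http_probe_ok(status_code):
    """An httpGet probe succeeds for 2xx and 3xx responses."""
    return 200 <= status_code < 400


for code in (200, 301, 404, 500):
    print(code, http_probe_ok(code))
```

The 404 case is exactly what the httpGet example in 6.3.5 triggers by deleting index.html.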

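6.3.3 Appendix: a container's initial command

Initial command of a container in k8s:

args: (overrides the image's CMD)

command: (overrides the image's ENTRYPOINT)

Initial command of a container in a Dockerfile:

CMD:

ENTRYPOINT: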
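How the four fields combine into the process that is actually started can be sketched as follows. The rules are the documented Kubernetes behaviour (`command` replaces ENTRYPOINT, `args` replaces CMD, and setting `command` alone discards the image's CMD); the function name and the example image values are illustrative assumptions.

```python
def effective_argv(entrypoint, cmd, command=None, args=None):
    """Return the argv a container runs with.

    entrypoint, cmd: ENTRYPOINT and CMD from the image (lists of strings).
    command, args:   the optional fields from the pod spec.
    """
    if command is not None:
        # command replaces ENTRYPOINT; the image's CMD is ignored
        return list(command) + list(args or [])
    if args is not None:
        # only args given: image ENTRYPOINT is kept, CMD is replaced
        return list(entrypoint) + list(args)
    return list(entrypoint) + list(cmd)


# Assumption for illustration: an image with no ENTRYPOINT whose CMD starts nginx.
image_entrypoint = []
image_cmd = ["nginx", "-g", "daemon off;"]

print(effective_argv(image_entrypoint, image_cmd))
print(effective_argv(image_entrypoint, image_cmd,
                     args=["/bin/sh", "-c", "sleep 600"]))
```

Under that assumption, supplying only `args` replaces the nginx start-up command with the shell command.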

6.3.4 Using the liveness probe with exec (rarely used)

On the master node (11):

[root@k8s-master health]# pwd

/root/k8s_yaml/health

[root@k8s-master health]# vim nginx_pod_exec.yaml

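Create nginx_pod_exec.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: exec
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
    args:
    - /bin/sh  # run a command through sh
    - -c
    - touch /tmp/healthy; sleep 30; rm -rf /tmp/healthy; sleep 600  # create the file, sleep 30 s, delete it, sleep 600 s
    livenessProbe:  # start of the health check
      exec:  # run a command
        command:
        - cat  # checks whether the file exists: exit code 0 if it does, non-zero if not
        - /tmp/healthy
      initialDelaySeconds: 5  # seconds to wait after the container starts before the first probe
      periodSeconds: 5  # interval between probes, in seconds
      timeoutSeconds: 5  # seconds before a probe times out
      successThreshold: 1  # consecutive successes needed to count as healthy
      failureThreshold: 1  # consecutive failures needed to count as unhealthy

Create the pod and check it: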

[root@k8s-master health]# kubectl create -f nginx_pod_exec.yaml

pod "exec" created

[root@k8s-master health]# kubectl get pod

NAME READY STATUS RESTARTS AGE

exec 1/1 Running 0 9s

[root@k8s-master health]# kubectl describe pod exec

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

1m 1m 1 {default-scheduler } Normal Scheduled Successfully assigned exec to k8s-node-1

1m 1m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Pulled Container image "10.0.0.11:5000/nginx:1.13" already present on machine

1m 1m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Created Created container with docker id 8a4ded7a1084; Security:[seccomp=unconfined]

1m 1m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Started Started container with docker id 8a4ded7a1084

30s 30s 1 {kubelet k8s-node-1} spec.containers{nginx} Warning Unhealthy Liveness probe failed: cat: /tmp/healthy: No such file or directory

[root@k8s-master health]# kubectl describe pod exec

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

1m 1m 1 {default-scheduler } Normal Scheduled Successfully assigned exec to k8s-node-1

1m 1m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Created Created container with docker id 8a4ded7a1084; Security:[seccomp=unconfined]

1m 1m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Started Started container with docker id 8a4ded7a1084

42s 42s 1 {kubelet k8s-node-1} spec.containers{nginx} Warning Unhealthy Liveness probe failed: cat: /tmp/healthy: No such file or directory

1m 12s 2 {kubelet k8s-node-1} spec.containers{nginx} Normal Pulled Container image "10.0.0.11:5000/nginx:1.13" already present on machine

12s 12s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Killing Killing container with docker id 8a4ded7a1084: pod "exec_default(cadfdbb8-2450-11ea-b132-000c293c3b9a)" container "nginx" is unhealthy, it will be killed and re-created.

12s 12s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Created Created container with docker id 454e06efd711; Security:[seccomp=unconfined]

11s 11s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Started Started container with docker id 454e06efd711

[root@k8s-master health]# kubectl get pod

NAME READY STATUS RESTARTS AGE

exec 1/1 Running 4 4m
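The restart cycle follows from the probe timing parameters. The sketch below is an idealized model that ignores kubelet jitter and probe run time: probes fire at `initialDelaySeconds` and then every `periodSeconds`, and the container is restarted once `failureThreshold` consecutive probes have failed. The function name and the `healthy_until` parameter are illustrative assumptions.

```python
def first_restart_time(initial_delay, period, failure_threshold,
                       healthy_until, horizon=600):
    """Return the time (s after container start) of the probe that triggers
    a restart, or None if none happens within `horizon` seconds.

    healthy_until: the probe succeeds for t < healthy_until and fails after.
    """
    failures = 0
    t = initial_delay
    while t <= horizon:
        if t < healthy_until:
            failures = 0          # a success resets the failure counter
        else:
            failures += 1
            if failures >= failure_threshold:
                return t
        t += period
    return None


# Values from nginx_pod_exec.yaml: the file is removed 30 s after start.
print(first_restart_time(5, 5, 1, healthy_until=30))
# The same pod with failureThreshold: 3 would survive two more probes.
print(first_restart_time(5, 5, 3, healthy_until=30))
```

Since every new container starts the same script again, the pod keeps restarting at roughly this interval, which matches the growing RESTARTS count above.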

6.3.5 Using the liveness probe with httpGet (commonly used)

On the master node (11):

[root@k8s-master health]# pwd

/root/k8s_yaml/health

[root@k8s-master health]# vim nginx_pod_httpGet.yaml

Create nginx_pod_httpGet.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: httpget
spec:
  containers:
  - name: nginx
    image: 10.0.0.11:5000/nginx:1.13
    ports:
    - containerPort: 80
    livenessProbe:
      httpGet:
        path: /index.html
        port: 80
      initialDelaySeconds: 3
      periodSeconds: 3

Create the resource and check it:

[root@k8s-master health]# kubectl create -f nginx_pod_httpGet.yaml

pod "httpget" created

[root@k8s-master health]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

httpget 1/1 Running 0 17s 172.18.40.2 k8s-node-1

[root@k8s-master health]# curl -I 172.18.40.2

HTTP/1.1 200 OK

Simulate a failure:

[root@k8s-master health]# kubectl exec -it httpget /bin/bash

root@httpget:/# rm -f /usr/share/nginx/html/index.html

root@httpget:/# exit

exit

[root@k8s-master health]# kubectl describe pod httpget

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

3m 3m 1 {default-scheduler } Normal Scheduled Successfully assigned httpget to k8s-node-1

3m 3m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Created Created container with docker id 80d204a3bff1; Security:[seccomp=unconfined]

3m 3m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Started Started container with docker id 80d204a3bff1

3m 32s 2 {kubelet k8s-node-1} spec.containers{nginx} Normal Pulled Container image "10.0.0.11:5000/nginx:1.13" already present on machine

38s 32s 3 {kubelet k8s-node-1} spec.containers{nginx} Warning Unhealthy Liveness probe failed: HTTP probe failed with statuscode: 404

32s 32s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Killing Killing container with docker id 80d204a3bff1: pod "httpget_default(9edd48c7-2452-11ea-b132-000c293c3b9a)" container "nginx" is unhealthy, it will be killed and re-created.

31s 31s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Created Created container with docker id ae76dc4cd3a4; Security:[seccomp=unconfined]

31s 31s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Started Started container with docker id ae76dc4cd3a4

[root@k8s-master health]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

httpget 1/1 Running 1 3m 172.18.40.2 k8s-node-1

[root@k8s-master health]# curl -I 172.18.40.2

HTTP/1.1 200 OK

6.3.6 Using the liveness probe with tcpSocket

On the master node (11):

[root@k8s-master health]# pwd

/root/k8s_yaml/health

[root@k8s-master health]# vim nginx_pod_tcpSocket.yaml

创建nginx_pod_tcpSocket.yaml

apiVersion: v1
kind: Pod
metadata:
  name: tcpsocket
spec:
  containers:
    - name: nginx
      image: 10.0.0.11:5000/nginx:1.13
      ports:
        - containerPort: 80
      args:
        - /bin/sh
        - -c
        - tail -f /etc/hosts
      livenessProbe:
        tcpSocket:
          port: 80
        initialDelaySeconds: 10
        periodSeconds: 15
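The two timing fields in this manifest determine how quickly a closed port leads to a restart. As a rough sketch, assuming the Kubernetes default failureThreshold of 3 (the manifest does not set it) and ignoring probe jitter and timeouts, a lower bound can be estimated as follows. The function name earliest_restart is hypothetical.

```shell
# earliest_restart INITIAL_DELAY PERIOD [FAILURE_THRESHOLD]
# Lower bound, in seconds after container start, for a liveness restart
# when every probe fails: the first probe cannot run before INITIAL_DELAY,
# and FAILURE_THRESHOLD consecutive failures are spaced PERIOD apart.
earliest_restart() {
  initial="$1"
  period="$2"
  threshold="${3:-3}"   # assumption: default failureThreshold of 3
  echo $((initial + period * (threshold - 1)))
}

# Values from nginx_pod_tcpSocket.yaml: initialDelaySeconds=10, periodSeconds=15
earliest_restart 10 15
```

With these values the estimate is 40 seconds, so nginx has to be started by hand inside the container within that window to avoid the first restart.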

Create the resource:

[root@k8s-master health]# kubectl create -f nginx_pod_tcpSocket.yaml

pod "tcpsocket" created

[root@k8s-master health]# kubectl exec -it tcpsocket /bin/bash

root@tcpsocket:/# nginx

[root@k8s-master ~]# kubectl get pod tcpsocket -o wide

NAME READY STATUS RESTARTS AGE IP NODE

tcpsocket 1/1 Running 0 11s 172.18.40.2 k8s-node-1

[root@k8s-master ~]# curl -I 172.18.40.2

HTTP/1.1 200 OK

[root@k8s-master health]# kubectl exec -it tcpsocket /bin/bash

root@tcpsocket:/# nginx -s stop

[root@k8s-master ~]# kubectl describe pod tcpsocket

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

3m 3m 1 {default-scheduler } Normal Scheduled Successfully assigned tcpsocket to k8s-node-1

3m 3m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Created Created container with docker id 75110941bc2e; Security:[seccomp=unconfined]

3m 3m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Started Started container with docker id 75110941bc2e

2m 2m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Killing Killing container with docker id 75110941bc2e: pod "tcpsocket_default(dba143d6-2454-11ea-b132-000c293c3b9a)" container "nginx" is unhealthy, it will be killed and re-created.

2m 2m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Created Created container with docker id 38e4d048de68; Security:[seccomp=unconfined]

2m 2m 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Started Started container with docker id 38e4d048de68

3m 32s 6 {kubelet k8s-node-1} spec.containers{nginx} Warning Unhealthy Liveness probe failed: dial tcp 172.18.40.2:80: getsockopt: connection refused

3m 2s 3 {kubelet k8s-node-1} spec.containers{nginx} Normal Pulled Container image "10.0.0.11:5000/nginx:1.13" already present on machine

2s 2s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Killing Killing container with docker id 38e4d048de68: pod "tcpsocket_default(dba143d6-2454-11ea-b132-000c293c3b9a)" container "nginx" is unhealthy, it will be killed and re-created.

2s 2s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Created Created container with docker id 9c8e56259eb7; Security:[seccomp=unconfined]

1s 1s 1 {kubelet k8s-node-1} spec.containers{nginx} Normal Started Started container with docker id 9c8e56259eb7

[root@k8s-master ~]# kubectl get pod tcpsocket

NAME READY STATUS RESTARTS AGE

tcpsocket 1/1 Running 2 3m

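6.3.7 Using the readiness probe with httpGet

Useful for handling a hung Tomcat (the process is still alive but no longer serves requests).

On the master node (10.0.0.11):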

[root@k8s-master health]# pwd

/root/k8s_yaml/health

[root@k8s-master health]# vim nginx-rc-httpGet.yaml

Edit nginx-rc-httpGet.yaml:

apiVersion: v1
kind: ReplicationController   # RC resource
metadata:
  name: readiness
spec:
  replicas: 2
  selector:
    app: readiness
  template:
    metadata:
      labels:
        app: readiness
    spec:
      containers:
        - name: readiness
          image: 10.0.0.11:5000/nginx:1.13
          ports:
            - containerPort: 80
          readinessProbe:
            httpGet:
              path: /rock.html
              port: 80
            initialDelaySeconds: 3
            periodSeconds: 3

Create the resource:

[root@k8s-master health]# kubectl create -f nginx-rc-httpGet.yaml

replicationcontroller "readiness" created

[root@k8s-master health]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

readiness-3fhs8 0/1 Running 0 2s 172.18.2.3 k8s-node-2 # not ready yet (READY is 0/1)

readiness-w661f 0/1 Running 0 2s 172.18.40.2 k8s-node-1

Create a Service (svc) resource:

[root@k8s-master health]# kubectl expose rc readiness --port=80 --target-port=80 --type=NodePort

service "readiness" exposed

[root@k8s-master health]# kubectl get svc -o wide

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes 10.254.0.1 443/TCP 8d

readiness 10.254.205.136 80:32075/TCP 54s app=readiness

[root@k8s-master health]# kubectl describe svc readiness

Name: readiness

Namespace: default

Labels: app=readiness

Selector: app=readiness

Type: NodePort

IP: 10.254.205.136

Port: 80/TCP

NodePort: 32075/TCP

Endpoints: # no pods associated yet

Session Affinity: None

No events.

Enter both pod containers manually and create rock.html:

[root@k8s-master health]# kubectl exec -it readiness-3fhs8 /bin/bash

root@readiness-3fhs8:/# echo "web01" > /usr/share/nginx/html/rock.html

root@readiness-3fhs8:/# exit

exit

[root@k8s-master health]# kubectl exec -it readiness-w661f /bin/bash

root@readiness-w661f:/# echo "web02" > /usr/share/nginx/html/rock.html

root@readiness-w661f:/# exit

exit

[root@k8s-master health]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

readiness-3fhs8 1/1 Running 0 9m 172.18.2.3 k8s-node-2 # now ready

readiness-w661f 1/1 Running 0 9m 172.18.40.2 k8s-node-1

[root@k8s-master health]# kubectl describe svc readiness

Name: readiness

Namespace: default

Labels: app=readiness

Selector: app=readiness

Type: NodePort

IP: 10.254.205.136

Port: 80/TCP

NodePort: 32075/TCP

Endpoints: 172.18.2.3:80,172.18.40.2:80 # both pods are now associated

Session Affinity: None

No events.
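The two describe outputs show the effect of the readiness probe: a pod is listed under Endpoints only once its READY column reaches n/n. As a small sketch of checking this from the command line, the hypothetical helper ready_pods below filters the tabular output of kubectl get pod and prints the names of pods whose containers are all ready. It is run here on sample rows copied from the session above rather than on a live cluster.

```shell
# ready_pods
# Reads `kubectl get pod` style table rows on stdin and prints the NAME of
# every row whose READY column has the form n/n (all containers ready).
ready_pods() {
  awk 'NF >= 2 {
         n = split($2, r, "/")
         if (n == 2 && r[1] == r[2]) print $1
       }'
}

# Sample rows: one pod still failing its readiness probe, one passing.
printf '%s\n' \
  'NAME READY STATUS RESTARTS AGE' \
  'readiness-3fhs8 0/1 Running 0 2s' \
  'readiness-w661f 1/1 Running 0 9m' | ready_pods
```

On a real cluster the same filter could be fed with kubectl get pod -o wide | ready_pods; the names it prints should match the pod IPs shown under Endpoints.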

Open in a browser: http://10.0.0.12:32075/rock.html

Open in a browser: http://10.0.0.13:32075/rock.html

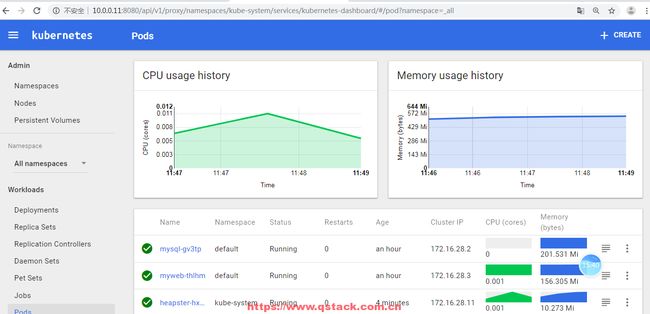

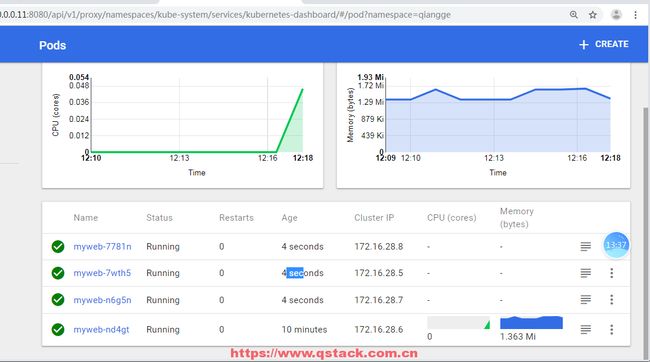

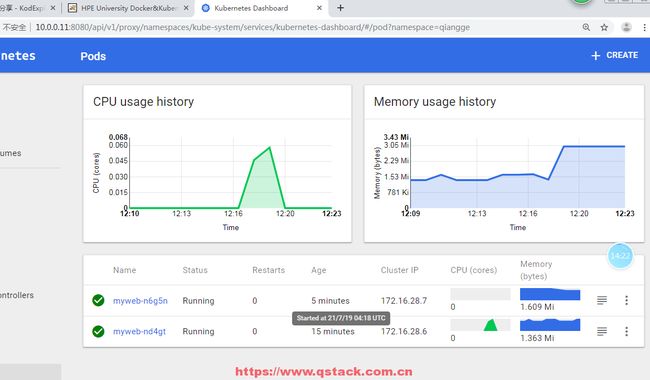

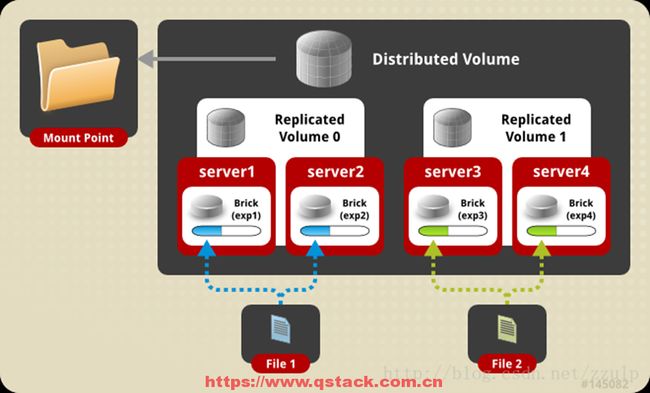

6.4 Dashboard service

1: Upload and load the image, then tag it

2: Create the dashboard Deployment and Service

3: Open http://10.0.0.11:8080/ui/

Example:

On the node (10.0.0.13):

# Upload and load the image, then tag it

[root@k8s-node-2 docker_image]# docker load -i kubernetes-dashboard-amd64_v1.4.1.tar.gz

5f70bf18a086: Loading layer [==================================================>] 1.024 kB/1.024 kB

2e350fa8cbdf: Loading layer [==================================================>] 86.96 MB/86.96 MB

Loaded image: index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1

[root@k8s-node-2 docker_image]# docker tag index.tenxcloud.com/google_containers/kubernetes-dashboard-amd64:v1.4.1 10.0.0.11:5000/kubernetes-dashboard-amd64:v1.4.1

On the master node (10.0.0.11):

[root@k8s-master dashboard]# pwd

/root/k8s_yaml/dashboard

[root@k8s-master dashboard]# vim dashboard-deploy.yaml

Edit dashboard-deploy.yaml:

apiVersion: extensions/v1beta1