K8s Project in Practice - Containerizing Business Services - Day 05

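1. Introduction

This session simulates how a company migrates its services from a non-k8s environment to k8s, and the preparation required before the migration.

(1) Understand the current business environment:

a. Which services the project contains, because a container image (Dockerfile) must be built for each of them.

b. The complete call chain: front end, back end, storage, and other middleware.

c. After deployment to k8s, run smoke tests, functional tests, and stress tests (owned by the QA team, not by operations).

(2) Ongoing operational support

a. Monitoring

b. Alerting

c. Log collection

d. CI/CD

e. Distributed tracing, and so on

(3) Once the points above are clear, draw up a migration plan: what gets done when, and when each item is due.

a. For example, if servers must be purchased, prepare a procurement list.

b. For example, plan the network addressing up front: create the VPC and divide the subnets.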

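2. Advantages of containerizing services

(1) Higher resource utilization and lower IT deployment cost.

a. Automated deployment: Kubernetes automates deployment, which greatly reduces the time and labor spent on manual deployment.

b. Elastic scaling: Kubernetes can adjust the number of application replicas automatically according to load, keeping resource usage efficient and avoiding waste.

c. Load balancing: Kubernetes distributes requests across an application's replicas automatically, so the load is spread evenly and resources are used more fully.

d. Resource isolation: Kubernetes isolates resources so that every application has enough available, avoiding contention and interference between applications.

e. Reliability and availability: Kubernetes keeps applications highly reliable and available so the business runs continuously, reducing downtime and losses caused by failures.

(2) Faster deployment: Kubernetes enables rapid deployment and delivery of microservices, batch scheduling of containers, and start-up within seconds.

(3) Support for horizontal scaling, canary releases, and rollback, plus a platform on which distributed tracing and service governance can be built.

(4) Automatic elastic scaling according to business load.

(5) Containers package the environment and the code in one image, which keeps the test and production runtime environments consistent.

(6) Keeps pace with the cloud native community, avoids leaving technical debt for the company, and lays the foundation for later upgrades.

(7) Builds personal experience with current technology and raises your own skill level.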

3. 业务容器化案例

3.1 案例一:业务规划及镜像分层构建

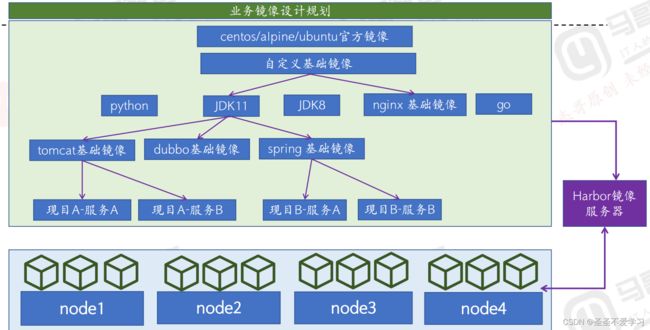

3.1.1 如何分层构建

如上图:

(1)首先我们准备好基础镜像,比如centos、Ubuntu、alpine,在里面安装好我们所需的基础命令,这样第一个基础镜像就打好了。

(2)基于自定义基础镜像,根据开发的需求来进行构建业务镜像,如上面有python、java、go、nginx。

(3)再根据基础业务镜像,来区分不同的业务场景,比如A项目使用tomcat基础镜像,B项目使用nginx基础镜像。

(4)最后就可以按照业务场景来选择使用什么镜像了。
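The layering above implies a build order: a parent image must exist in the registry before any image that uses it in FROM. The sketch below only prints the build directories of the Tomcat chain in that order. The directory names come from the later sections of this walkthrough, while `BUILD_ROOT` and `plan_builds` are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# Sketch only: list the build directories in dependency order, nothing is built.
# BUILD_ROOT and plan_builds are illustrative names, not part of the original scripts.
BUILD_ROOT=/opt/k8s-data/dockerfile

plan_builds() {
    # Each image is built FROM the image produced in the directory listed before it.
    for dir in \
        system/centos \
        web/pub-images/jdk-1.8.212 \
        web/pub-images/tomcat-base-8.5.43 \
        web/pub-images/tsk8s/tomcat-app1
    do
        echo "$BUILD_ROOT/$dir"
    done
}
```

Running `plan_builds` and then executing `build-image.sh` in each printed directory, top to bottom, would rebuild the whole chain after a change to the base image.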

3.1.2 Build the base image

3.1.2.1 Write the Dockerfile

[root@containerd-build-image ~]# mkdir -p /opt/k8s-data/dockerfile/system/centos

[root@containerd-build-image ~]# cd /opt/k8s-data/dockerfile/system/centos

[root@containerd-build-image centos]# cat Dockerfile

# Custom CentOS base image
FROM centos:7.9.2009

# Replace the yum repositories (with several sources, the destination must end with "/")
ADD CentOS-Base.repo epel.repo /etc/yum.repos.d/

# Add and install the required packages
ADD filebeat-7.12.1-x86_64.rpm /tmp
RUN yum install -y /tmp/filebeat-7.12.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && \
    rm -rf /etc/localtime /tmp/filebeat-7.12.1-x86_64.rpm && \
    ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime #&& useradd nginx -u 2088

# Copy the required files into the build directory

[root@containerd-build-image centos]# mv /root/filebeat-7.12.1-x86_64.rpm ./

[root@containerd-build-image centos]# cp /etc/yum.repos.d/CentOS-Base.repo ./

[root@containerd-build-image centos]# cp /etc/yum.repos.d/epel.repo ./

[root@containerd-build-image centos]# ll

-rw-r--r-- 1 root root 1923 6月 26 14:57 CentOS-Base.repo

-rw-r--r-- 1 root root 561 6月 26 14:57 Dockerfile

-rw-r--r-- 1 root root 237 6月 26 14:58 epel.repo

-rw-r--r-- 1 root root 32600353 6月 26 14:47 filebeat-7.12.1-x86_64.rpm

3.1.2.2 Write the build script

[root@containerd-build-image centos]# cat build-image.sh

#!/bin/bash

/usr/local/bin/nerdctl build -t tsk8s.top/baseimages/magedu-centos-base:7.9.2009 . && \

/usr/local/bin/nerdctl push tsk8s.top/baseimages/magedu-centos-base:7.9.2009

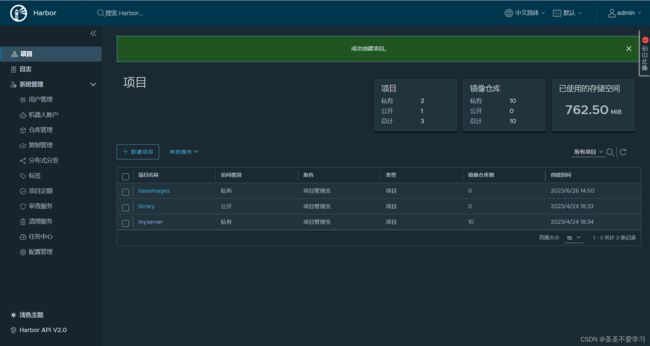

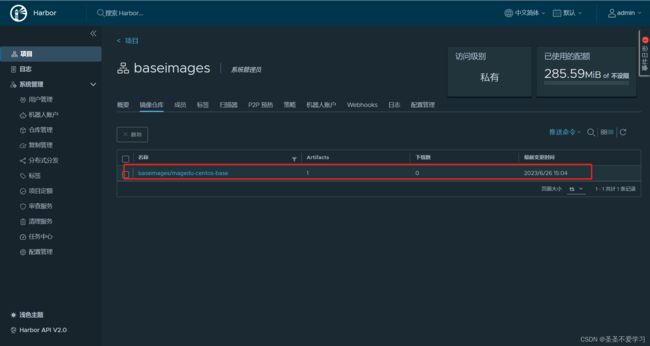

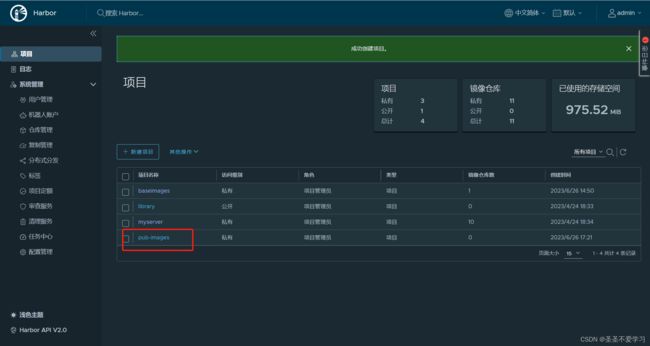

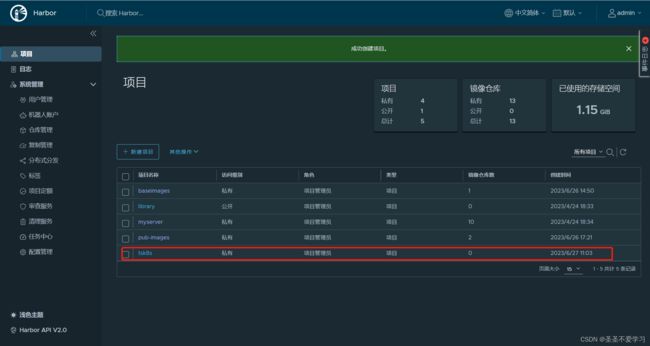

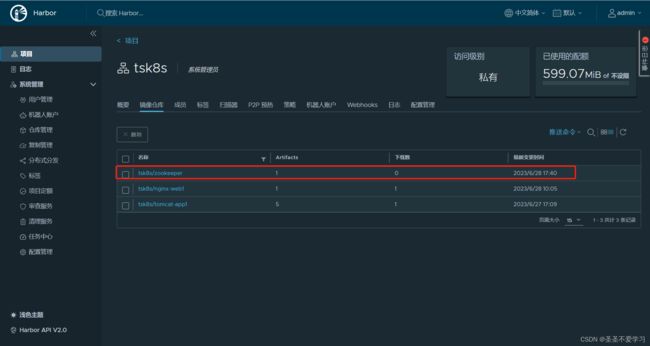

3.1.2.3 Create the project in Harbor

3.1.2.4 Build the image

[root@containerd-build-image centos]# sh build-image.sh

[+] Building 231.4s (9/9)

[+] Building 232.1s (9/9) FINISHED

... (part of the output omitted)

Loaded image: tsk8s.top/baseimages/magedu-centos-base:7.9.2009

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:4f8ce249d0db760721782e6331ae3145c3f4557b4645ad1a785da9b3b87481b4)

manifest-sha256:4f8ce249d0db760721782e6331ae3145c3f4557b4645ad1a785da9b3b87481b4: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:34073b4210e319aaedb179d2cb8c17157058943b1d7f9218d821f9195f7b3009: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 13.1s total: 3.8 Ki (295.0 B/s)

3.2 Case 2: Static/dynamic separation with Nginx + Tomcat + NFS

3.2.1 Build the JDK base image

3.2.1.1 Write the Dockerfile

[root@containerd-build-image ~]# cd /opt/k8s-data/dockerfile/

[root@containerd-build-image dockerfile]# ls

system

[root@containerd-build-image dockerfile]# mkdir web

[root@containerd-build-image dockerfile]# cd web

[root@containerd-build-image web]# mkdir pub-images

[root@containerd-build-image web]# cd pub-images

[root@containerd-build-image pub-images]# mkdir jdk-1.8.212

[root@containerd-build-image pub-images]# cd jdk-1.8.212

[root@containerd-build-image jdk-1.8.212]# cat Dockerfile

#JDK Base Image

FROM tsk8s.top/baseimages/magedu-centos-base:7.9.2009

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk

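# This profile is for non-root users; it is read by login shells such as "su - user"

ADD profile /etc/profile

# The variables declared below are for root, because /etc/profile is not loaded when the container starts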

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

3.2.1.2 Prepare the required files

[root@containerd-build-image jdk-1.8.212]# ll -rt

总用量 190452

-rw-r--r-- 1 root root 332 6月 26 16:47 Dockerfile

-rw-r--r-- 1 root root 195013152 6月 26 16:59 jdk-8u212-linux-x64.tar.gz

-rw-r--r-- 1 root root 2105 6月 26 16:59 profile

[root@containerd-build-image jdk-1.8.212]# cat profile

... (part of the file omitted)

export LANG=en_US.UTF-8

export HISTTIMEFORMAT="%F %T `whoami` "

export JAVA_HOME=/usr/local/jdk

export TOMCAT_HOME=/apps/tomcat

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$TOMCAT_HOME/bin:$PATH

export CLASSPATH=.$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar

3.2.1.3 Write the build script

[root@containerd-build-image jdk-1.8.212]# cat build-image.sh

#!/bin/bash

nerdctl build -t tsk8s.top/pub-images/jdk-base:v8.212 . && \

nerdctl push tsk8s.top/pub-images/jdk-base:v8.212

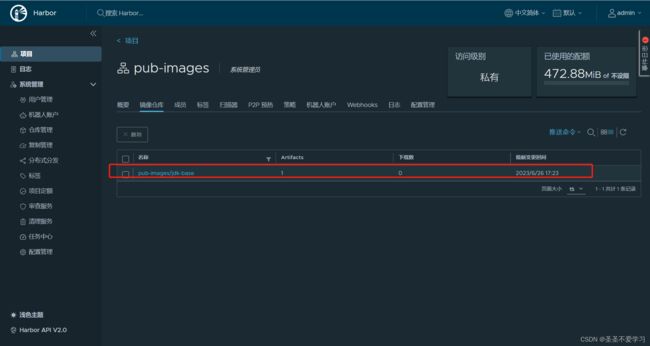

3.2.1.4 Create the project in Harbor

3.2.1.5 Build the image

[root@containerd-build-image jdk-1.8.212]# sh build-image.sh

3.2.1.6 Start-up test

[root@containerd-build-image jdk-1.8.212]# nerdctl run -d --name test tsk8s.top/pub-images/jdk-base:v8.212 tail -f /etc/hosts

0921ccf1a4b41e15038940fc92c9874469a6c7ea31eccf28870ae791c189b218

[root@containerd-build-image jdk-1.8.212]# nerdctl ps -l

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0921ccf1a4b4 tsk8s.top/pub-images/jdk-base:v8.212 "tail -f /etc/hosts" 5 seconds ago Up test

[root@containerd-build-image jdk-1.8.212]# nerdctl exec -it 0921ccf1a4b4 /bin/bash

[root@0921ccf1a4b4 /]# java -version

java version "1.8.0_212"

Java(TM) SE Runtime Environment (build 1.8.0_212-b10)

Java HotSpot(TM) 64-Bit Server VM (build 25.212-b10, mixed mode)

[root@0921ccf1a4b4 /]# useradd test

[root@0921ccf1a4b4 /]# su - test

[test@0921ccf1a4b4 ~]$ java -version

java version "1.8.0_212"

Java(TM) SE Runtime Environment (build 1.8.0_212-b10)

Java HotSpot(TM) 64-Bit Server VM (build 25.212-b10, mixed mode)

[test@0921ccf1a4b4 ~]$ exit

logout

[root@0921ccf1a4b4 /]# exit

exit

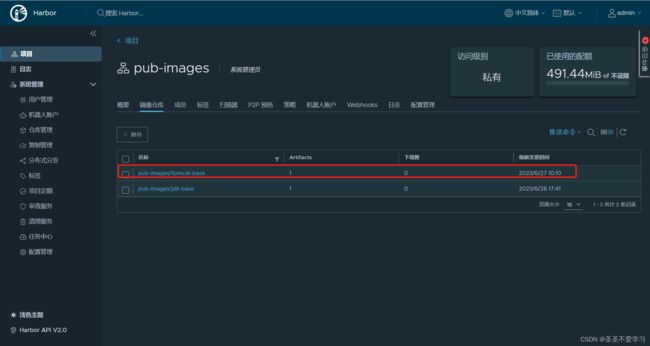

3.2.2 Build the Tomcat base image

3.2.2.1 Write the Dockerfile

[root@containerd-build-image pub-images]# mkdir tomcat-base-8.5.43

[root@containerd-build-image pub-images]# cd tomcat-base-8.5.43

[root@containerd-build-image tomcat-base-8.5.43]# vim Dockerfile

[root@containerd-build-image tomcat-base-8.5.43]# cat Dockerfile

# Tomcat 8.5.43 base image
FROM tsk8s.top/pub-images/jdk-base:v8.212

RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv
ADD apache-tomcat-8.5.43.tar.gz /apps

# Create the non-root user according to your own requirements
RUN useradd tomcat -u 2050 && \
    ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat

3.2.2.2 Prepare the required files

[root@ai-k8s-jump tmp]# ll apache-tomcat-8.5.43.tar.gz

-rw-rw-r-- 1 ops ops 9717059 6月 27 10:02 apache-tomcat-8.5.43.tar.gz

3.2.2.3 Write the build script

[root@containerd-build-image tomcat-base-8.5.43]# cat build-image.sh

#!/bin/bash

nerdctl build -t tsk8s.top/pub-images/tomcat-base:v8.5.43 . && \

nerdctl push tsk8s.top/pub-images/tomcat-base:v8.5.43

3.2.2.4 Build the image

[root@containerd-build-image tomcat-base-8.5.43]# sh build-image.sh

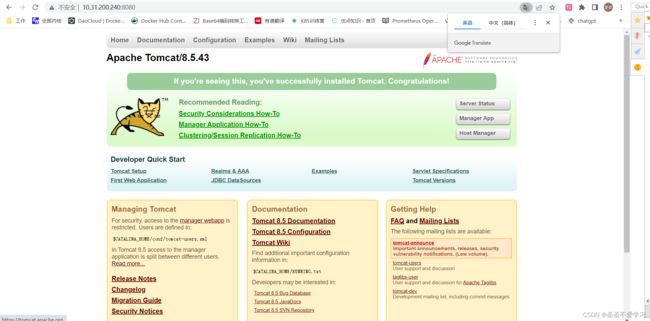

3.2.2.5 Start-up test

# Create a temporary container

[root@containerd-build-image tomcat-base-8.5.43]# nerdctl run -it --rm -p 8080:8080 tsk8s.top/pub-images/tomcat-base:v8.5.43 bash

# Start Tomcat

[root@6434c11984b4 ~]# cd /apps/tomcat/bin/

[root@6434c11984b4 bin]# ./catalina.sh run

... (part of the output omitted)

27-Jun-2023 10:19:57.997 INFO [main] org.apache.catalina.startup.Catalina.start Server startup in 1237 ms

3.2.2.6 Access test

3.2.3 Build the Tomcat application image

3.2.3.1 Write the Dockerfile

[root@containerd-build-image tomcat-base-8.5.43]# cd ..

[root@containerd-build-image pub-images]# ls

jdk-1.8.212 tomcat-base-8.5.43

[root@containerd-build-image pub-images]# mkdir tsk8s

[root@containerd-build-image pub-images]# cd tsk8s

[root@containerd-build-image tsk8s]# mkdir tomcat-app1

[root@containerd-build-image tsk8s]# cd tomcat-app1

[root@containerd-build-image tomcat-app1]# cat Dockerfile

# tomcat web1
FROM tsk8s.top/pub-images/tomcat-base:v8.5.43

# Copy the start-up script; in production, adjust its settings to your environment
ADD catalina.sh /apps/tomcat/bin/catalina.sh
# Copy the Tomcat configuration file; in production, adjust its settings to your environment
ADD server.xml /apps/tomcat/conf/server.xml
# Copy the application code
ADD app1.tar.gz /data/tomcat/webapps/app1/
# Copy the container start-up script (usually provided by the developers)
ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh
# Hand the relevant directories to the non-root user created earlier; Tomcat runs as that user, which improves container security
RUN chown -R tomcat:tomcat /data/ /apps/ && \
    chmod u+x /apps/tomcat/bin/catalina.sh /apps/tomcat/bin/run_tomcat.sh

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

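3.2.3.2 Write the container start-up script

[root@containerd-build-image tomcat-app1]# cat run_tomcat.sh

#!/bin/bash

# Run the service as the non-root user

su - tomcat -c "/apps/tomcat/bin/catalina.sh start"

# "catalina.sh start" returns immediately, so keep a foreground process running to stop the container from exiting

tail -f /etc/hosts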

3.2.3.3 Write the build script

[root@containerd-build-image tomcat-app1]# cat build-image.sh

#!/bin/bash

# Pass the image tag as the first argument when running this script

TAG=$1

# Push only if the build succeeded

nerdctl build -t tsk8s.top/tsk8s/tomcat-app1:${TAG} . && \

nerdctl push tsk8s.top/tsk8s/tomcat-app1:${TAG}
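Because the script takes the tag from `$1`, running it without an argument produces an image reference that ends in a bare colon. A minimal sketch of a guard, assuming we only want to reject an empty tag or one containing a space or a colon. `valid_tag` and `image_ref` are hypothetical helpers, not part of the original script.

```shell
#!/bin/sh
# Hypothetical helper: succeed only for a non-empty tag without spaces or ':'.
valid_tag() {
    case "$1" in
        ""|*" "*|*:*) return 1 ;;
        *) return 0 ;;
    esac
}

# Hypothetical helper: compose the image reference used by the build script,
# or print a usage hint and fail when the tag is not acceptable.
image_ref() {
    valid_tag "$1" || { echo "usage: build-image.sh <tag>" >&2; return 1; }
    echo "tsk8s.top/tsk8s/tomcat-app1:$1"
}
```

In the build script this could be used as `IMAGE=$(image_ref "$1") || exit 1` before calling `nerdctl build -t "$IMAGE" .`.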

3.2.3.4 Prepare the required files

[root@containerd-build-image tomcat-app1]# ll catalina.sh server.xml app1.tar.gz run_tomcat.sh

-rw-rw-r-- 1 root root 144 6月 27 10:37 app1.tar.gz

-rw-rw-r-- 1 root root 23611 6月 27 10:37 catalina.sh

-rw-rw-r-- 1 root root 142 6月 27 10:55 run_tomcat.sh

-rw-rw-r-- 1 root root 6462 6月 27 10:37 server.xml

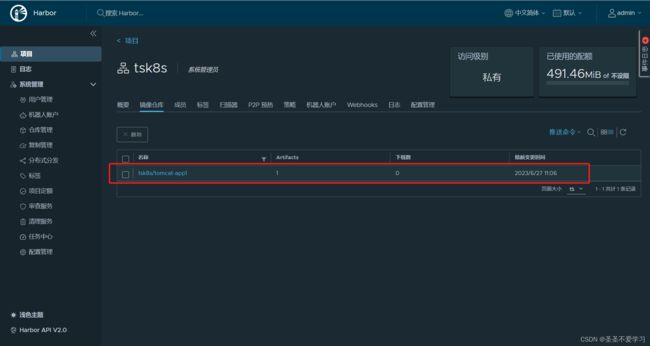

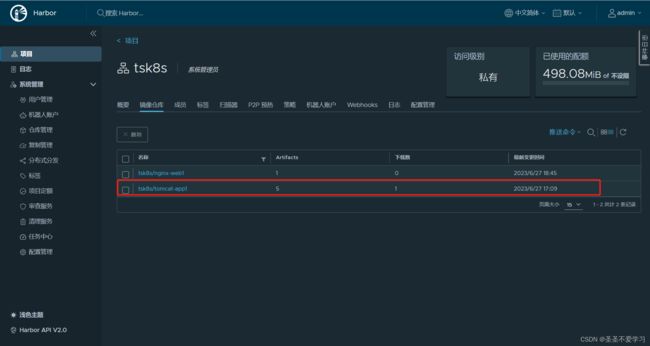

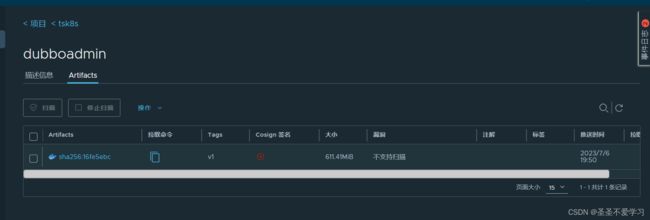

3.2.3.5 Create the project in Harbor

3.2.3.6 Build the image

[root@containerd-build-image tomcat-app1]# sh build-image.sh v1

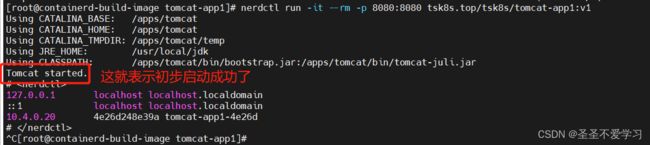

3.2.3.7 Start-up test

[root@containerd-build-image tomcat-app1]# nerdctl run -it --rm -p 8080:8080 tsk8s.top/tsk8s/tomcat-app1:v1

3.2.4 Deploy the tomcat-app1 application image to k8s

3.2.4.1 Write the YAML

[root@k8s-harbor01 deployment]# cat tomcat-app1.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: tomcat-app1
  name: tomcat-app1
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat-app1
  template:
    metadata:
      labels:
        app: tomcat-app1
    spec:
      containers:
      - name: tomcat-app1
        image: tsk8s.top/tsk8s/tomcat-app1:v1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        volumeMounts:
        - name: tsk8s-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: tsk8s-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      # Note: the NFS directories that back these volumes must be created first
      volumes:
      - name: tsk8s-images
        nfs:
          server: 10.31.200.104
          path: /data/k8s-data/tsk8s/images
      - name: tsk8s-static
        nfs:
          server: 10.31.200.104
          path: /data/k8s-data/tsk8s/static
      # Note: Harbor was first accessed by IP and later by domain name. To avoid
      # logging in again on every node, an image pull Secret is used; it must be created too.
      imagePullSecrets:
      - name: dockerhub-image-pull-key
---
# Two Services: the NodePort one is for verification after deployment,
# the ClusterIP one is called by the nginx pod deployed later
kind: Service
apiVersion: v1
metadata:
  labels:
    app: tomcat-app1
  name: tomcat-app1-nodeport
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat-app1
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: tomcat-app1
  name: tomcat-app1
  namespace: myserver
spec:
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat-app1

3.2.4.2 Create the NFS data directories

# Create the tsk8s directory and its images and static subdirectories

[root@k8s-harbor01 tsk8s]# ls

images static

[root@k8s-harbor01 tsk8s]# pwd

/data/k8s-data/tsk8s

3.2.4.3 Create a Secret of type dockerconfigjson

[root@k8s-harbor01 k8s-data]# docker login tsk8s.top # log in again

[root@k8s-harbor01 k8s-data]# cat /root/.docker/config.json

{

"auths": {

"tsk8s.top": {

"auth": "YWRtaW46MTIzNDU2"

}

}

}

[root@k8s-harbor01 k8s-data]# kubectl create secret generic dockerhub-image-pull-key --from-file=.dockerconfigjson=/root/.docker/config.json --type=kubernetes.io/dockerconfigjson -n myserver
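The `auth` field in `config.json` is only the Base64 encoding of `user:password`, so both the file and the Secret created from it should be treated as plaintext credentials. The sketch below reproduces the value shown above. `encode_auth` and `decode_auth` are hypothetical helper names, and the `-d` flag assumes a GNU coreutils or BusyBox `base64`.

```shell
#!/bin/sh
# Hypothetical helper: build the "auth" value the way it is stored in config.json.
encode_auth() {
    printf '%s:%s' "$1" "$2" | base64
}

# Hypothetical helper: recover "user:password" from an "auth" value.
# Assumes a base64 implementation that accepts -d (GNU coreutils, BusyBox).
decode_auth() {
    printf '%s' "$1" | base64 -d
}
```

For example, `decode_auth YWRtaW46MTIzNDU2` prints `admin:123456`, which is why anyone who can read the Secret can read the registry password.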

3.2.4.4 Create the pod

[root@k8s-harbor01 deployment]# kubectl get po,svc -n myserver

NAME READY STATUS RESTARTS AGE

pod/tomcat-app1-6844b69cb5-scfb4 1/1 Running 0 16h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/tomcat-app1 ClusterIP 10.100.107.208 <none> 80/TCP 16h

service/tomcat-app1-nodeport NodePort 10.100.215.180 <none> 80:30929/TCP 14h

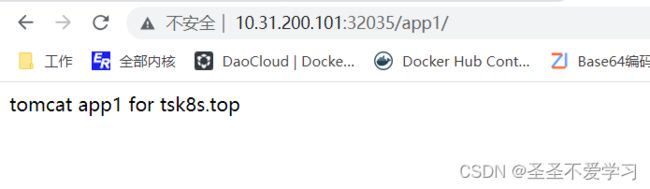

3.2.4.5 Access test

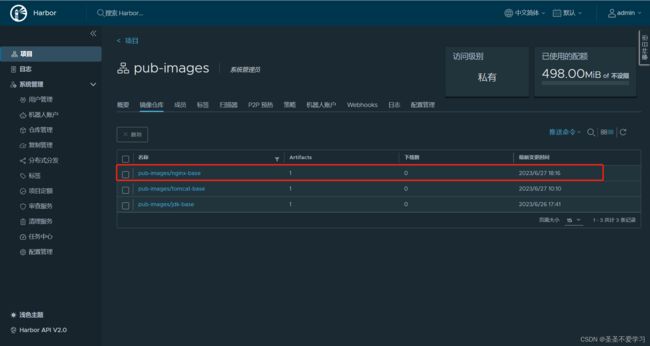

3.2.5 Build the Nginx base image

3.2.5.1 Write the Dockerfile

[root@containerd-build-image ~]# cd /opt/k8s-data/dockerfile/web/

[root@containerd-build-image web]# ls

pub-images

[root@containerd-build-image web]# mkdir nginx-base

[root@containerd-build-image web]# cd nginx-base

[root@containerd-build-image nginx-base]# cat Dockerfile

# Nginx base image
FROM tsk8s.top/baseimages/magedu-centos-base:7.9.2009

# ADD unpacks the tarball into /usr/local/src/nginx-1.22.0, so no .tar.gz is left in the image
ADD nginx-1.22.0.tar.gz /usr/local/src/
RUN cd /usr/local/src/nginx-1.22.0 && \
    ./configure && \
    make && \
    make install && \
    ln -sv /usr/local/nginx/sbin/nginx /usr/sbin/nginx && \
    rm -rf /usr/local/src/nginx-1.22.0 && \
    useradd nginx -M -s /sbin/nologin

3.2.5.2 Prepare the Nginx source package

[root@containerd-build-image nginx-base]# wget http://nginx.p2hp.com/download/nginx-1.22.0.tar.gz

[root@containerd-build-image nginx-base]# ll -rt

总用量 1056

-rw-r--r-- 1 root root 1073322 6月 16 2022 nginx-1.22.0.tar.gz

-rw-r--r-- 1 root root 306 6月 27 18:11 Dockerfile

3.2.5.3 Write the build script

[root@containerd-build-image nginx-base]# cat build-image.sh

#!/bin/bash

nerdctl build -t tsk8s.top/pub-images/nginx-base:v1.22.0 . && \

nerdctl push tsk8s.top/pub-images/nginx-base:v1.22.0

3.2.5.4 Build the image

[root@containerd-build-image nginx-base]# sh build-image.sh

3.2.6 Build the Nginx application image

3.2.6.1 Write the Dockerfile

[root@containerd-build-image tsk8s]# mkdir nginx

[root@containerd-build-image tsk8s]# cd nginx

[root@containerd-build-image nginx]# ls

[root@containerd-build-image nginx]# cat Dockerfile

#Nginx 1.22.0

FROM tsk8s.top/pub-images/nginx-base:v1.22.0

ADD nginx.conf /usr/local/nginx/conf/nginx.conf

ADD app1.tar.gz /usr/local/nginx/html/webapp/

ADD index.html /usr/local/nginx/html/index.html

# Mount points for the static resources

RUN mkdir -p /usr/local/nginx/html/webapp/static /usr/local/nginx/html/webapp/images

EXPOSE 80 443

CMD ["nginx"]

3.2.6.2 Prepare the required files

[root@containerd-build-image nginx]# ll -rt

总用量 20

-rw-r--r-- 1 root root 349 6月 27 18:37 Dockerfile

drwxrwxr-x 2 root root 24 6月 27 18:39 webapp

-rw-rw-r-- 1 root root 3127 6月 27 18:39 nginx.conf # adjust the nginx configuration to your actual needs

-rw-rw-r-- 1 root root 25 6月 27 18:39 index.html

-rw-rw-r-- 1 root root 381 6月 27 18:39 build-command.sh

-rw-rw-r-- 1 root root 234 6月 27 18:39 app1.tar.gz

# nginx.conf was changed in a few places

[root@containerd-build-image nginx]# cat nginx.conf

user nginx nginx;
worker_processes auto;
daemon off;

events {
    worker_connections 1024;
}

http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;

    upstream tomcat_webserver {
        # Inside the same k8s cluster, a Service can be reached directly through its internal DNS name
        server tomcat-app1.myserver:80;
    }

    server {
        listen 80;
        server_name localhost;

        location / {
            root html;
            index index.html index.htm;
        }

        location /webapp {
            root html;
            index index.html index.htm;
        }

        location /app1 {
            proxy_pass http://tomcat_webserver;
            proxy_set_header Host $host;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Real-IP $remote_addr;
        }

        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}
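The upstream above uses the short form `<service>.<namespace>`, which resolves through the DNS search path that k8s configures in each pod. A minimal sketch of how the fully qualified name is composed, assuming the cluster domain is the common default `cluster.local` (a cluster can be configured with a different one). `svc_fqdn` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: compose the fully qualified DNS name of a Service.
# $1 = Service name, $2 = namespace, $3 = cluster domain (assumed default: cluster.local)
svc_fqdn() {
    echo "$1.$2.svc.${3:-cluster.local}"
}
```

`svc_fqdn tomcat-app1 myserver` prints `tomcat-app1.myserver.svc.cluster.local`, which is a name that could be used in the upstream block instead of the short form when the search path cannot be relied on.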

3.2.6.3 Write the build script

[root@containerd-build-image nginx]# cat build-image.sh

#!/bin/bash

TAG=$1

nerdctl build -t tsk8s.top/tsk8s/nginx-web1:${TAG} . && \

nerdctl push tsk8s.top/tsk8s/nginx-web1:${TAG}

3.2.6.4 Build the image

[root@containerd-build-image nginx]# sh build-image.sh v1

3.2.7 Deploy the Nginx application image to k8s

3.2.7.1 Write the YAML

[root@k8s-harbor01 deployment]# cat nginx.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: nginx-deployment
  name: nginx-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-deployment
  template:
    metadata:
      labels:
        app: nginx-deployment
    spec:
      containers:
      - name: nginx-deployment
        image: tsk8s.top/tsk8s/nginx-web1:v1
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "20"
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 500m
            memory: 256Mi
        volumeMounts:
        - name: nginx-images
          mountPath: /usr/local/nginx/html/webapp/images
          readOnly: false
        - name: nginx-static
          mountPath: /usr/local/nginx/html/webapp/static
          readOnly: false
      volumes:
      - name: nginx-images
        nfs:
          server: 10.31.200.104
          path: /data/k8s-data/tsk8s/images
      - name: nginx-static
        nfs:
          server: 10.31.200.104
          path: /data/k8s-data/tsk8s/static
      # Note: Harbor was first accessed by IP and later by domain name. To avoid
      # logging in again on every node, an image pull Secret is used; it must be created too.
      imagePullSecrets:
      - name: dockerhub-image-pull-key
---
kind: Service
apiVersion: v1
metadata:
  name: nginx-deployment-nodeport
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
  selector:
    app: nginx-deployment

3.2.7.2 Create the pod

[root@k8s-harbor01 deployment]# kubectl apply -f nginx.yaml

[root@k8s-harbor01 deployment]# kubectl get po,svc -n myserver|grep nginx

pod/nginx-deployment-5b8fd687b9-jvk6f 1/1 Running 0 63s

service/nginx-deployment-nodeport NodePort 10.100.59.116 <none> 80:32035/TCP,443:32524/TCP 3m38s

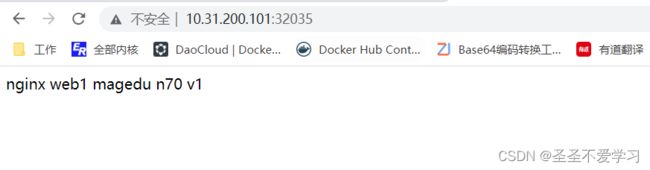

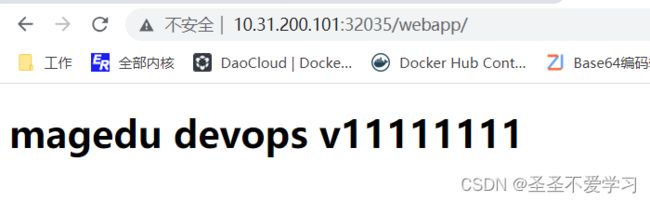

3.2.7.3 Access test

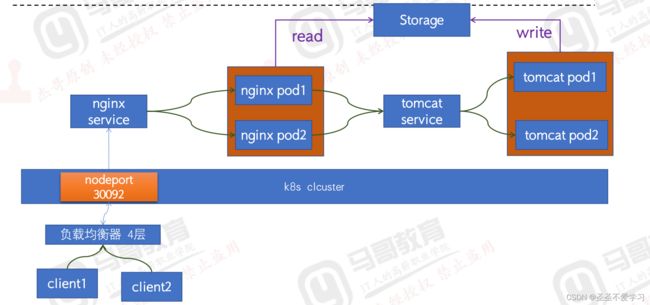

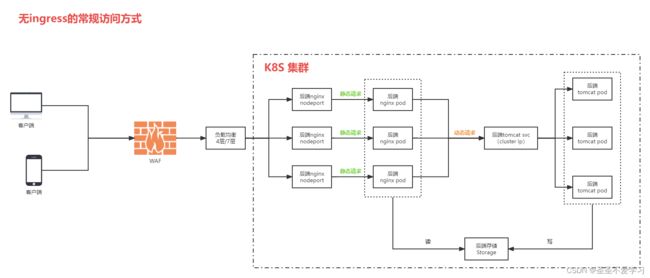

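3.2.8 Common access patterns in production

This is only a rough picture of the request path. A real deployment also includes common middleware such as a service registry, databases, and message queues.

3.3 Case 3: PV/PVC and ZooKeeper

This case covers migrating stateful services such as ZooKeeper, which have the following characteristics:

(1) The service runs as a cluster.

(2) Every pod has its own storage, so each pod's data is independent (unlike Nginx, where the data is shared).

3.3.1 Download the base image for ZooKeeper

You can build your own or use an existing image from Docker Hub, as long as it provides JDK 8.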

[root@containerd-build-image ~]# nerdctl pull elevy/slim_java:8

[root@containerd-build-image ~]# nerdctl tag elevy/slim_java:8 tsk8s.top/baseimages/slim_java:8

[root@containerd-build-image ~]# nerdctl push tsk8s.top/baseimages/slim_java:8

3.3.2 Start-up test

[root@containerd-build-image ~]# nerdctl run -it --rm tsk8s.top/baseimages/slim_java:8 sh

/ # java -version

java version "1.8.0_144"

Java(TM) SE Runtime Environment (build 1.8.0_144-b01)

Java HotSpot(TM) 64-Bit Server VM (build 25.144-b01, mixed mode)

/ # exit

3.3.3 Write the Dockerfile

Official Dockerfile: https://github.com/31z4/zookeeper-docker/blob/5cf119d9c5d61024fdba66f7be707413513a8b0d/3.7.1/Dockerfile

[root@containerd-build-image tsk8s]# mkdir zookeeper

[root@containerd-build-image tsk8s]# cd zookeeper

[root@containerd-build-image zookeeper]# vim Dockerfile

[root@containerd-build-image zookeeper]# cat Dockerfile

FROM tsk8s.top/baseimages/slim_java:8

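# Set an environment variable so the version is easy to check

ENV ZK_VERSION 3.4.14

# Replace the package repositories

ADD repositories /etc/apk/repositories

# Download ZooKeeper. The binary package is copied into the image directly, which is faster.

# Installation package

COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz

# Signature file used for verification

COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc

# Public keys used to verify the signature

COPY KEYS /tmp/KEYS

# Install common tools and set up the required environment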

RUN apk add --no-cache --virtual .build-deps \

ca-certificates \

gnupg \

tar \

wget && \

#

# Install dependencies

apk add --no-cache \

bash && \

#

#

# Verify the signature

export GNUPGHOME="$(mktemp -d)" && \

gpg -q --batch --import /tmp/KEYS && \

gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \

#

# Set up directories

#

mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \

#

# Install

tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \

#

# Slim down

cd /zookeeper && \

cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \

rm -rf \

*.txt \

*.xml \

bin/README.txt \

bin/*.cmd \

conf/* \

contrib \

dist-maven \

docs \

lib/*.txt \

lib/cobertura \

lib/jdiff \

recipes \

src \

zookeeper-*.asc \

zookeeper-*.md5 \

zookeeper-*.sha1 && \

#

# Clean up

apk del .build-deps && \

rm -rf /tmp/* "$GNUPGHOME"

# Copy the related files

# Configuration files

COPY conf /zookeeper/conf/

# Readiness check script

COPY bin/zkReady.sh /zookeeper/bin/

# Script executed when the container starts

COPY entrypoint.sh /

# Declare environment variables

ENV PATH=/zookeeper/bin:${PATH} \

ZOO_LOG_DIR=/zookeeper/log \

ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \

JMXPORT=9010

# Start-up command

ENTRYPOINT [ "/entrypoint.sh" ]

# Arguments passed to the start-up command

CMD [ "zkServer.sh", "start-foreground" ]

# Declare the ports to expose

EXPOSE 2181 2888 3888 9010

3.3.4 Key files

3.3.4.1 entrypoint.sh

This script relies on several environment variables set on the container, so read it together with zookeeper.yaml.

#!/bin/bash
# Write MYID to the myid file, defaulting to 1 when the variable is empty.
# Every node in a ZooKeeper ensemble needs a unique ID. MYID is injected as a
# container environment variable by zookeeper.yaml; the myid file only stores it.
echo ${MYID:-1} > /zookeeper/data/myid

# Continue only if SERVERS is non-empty. SERVERS also comes from zookeeper.yaml.
# Ensemble members must reach each other for leader election and data sync,
# so every member is listed in zoo.cfg.
if [ -n "$SERVERS" ]; then
    # Split SERVERS on commas into the array "servers" (IFS is the shell's field separator)
    IFS=\, read -a servers <<<"$SERVERS"
    # ${!servers[@]} expands to the array indexes; index + 1 becomes the server ID
    for i in "${!servers[@]}"; do
        # Append "server.<id>=<host>:2888:3888" to zoo.cfg; %i and %s are filled
        # from "$((1 + $i))" and "${servers[$i]}"
        printf "\nserver.%i=%s:2888:3888" "$((1 + $i))" "${servers[$i]}" >> /zookeeper/conf/zoo.cfg
    done
fi

cd /zookeeper
# "$@" expands to all arguments passed to the script. exec replaces the current
# shell with that command in the same process (the PID is kept), here the image's
# CMD [ "zkServer.sh", "start-foreground" ].
exec "$@"
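To see what the loop in entrypoint.sh appends to zoo.cfg, the sketch below renders the same `server.N=host:2888:3888` lines to standard output instead of the file. It is a POSIX sh rewrite for illustration, not the script from the image. `render_servers` is a hypothetical name, and unlike the original `printf` it ends each line with a newline rather than starting with one.

```shell
#!/bin/sh
# Hypothetical helper: print one "server.<id>=<host>:2888:3888" line per entry
# of a comma-separated host list. The body runs in a subshell so the IFS
# change does not leak into the caller.
render_servers() (
    IFS=,
    id=1
    for host in $1; do
        printf 'server.%d=%s:2888:3888\n' "$id" "$host"
        id=$((id + 1))
    done
)
```

With the value used in zookeeper.yaml, `render_servers "zookeeper1,zookeeper2,zookeeper3"` prints three lines, `server.1=zookeeper1:2888:3888` through `server.3=zookeeper3:2888:3888`. The host names are the per-pod Service names, which is why each ZooKeeper pod gets its own Service.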

3.3.4.2 zookeeper.yaml

[root@k8s-harbor01 deployment]# cat zookeerper.yaml

apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  namespace: myserver
spec:
  ports:
  - name: client
    port: 2181
  selector:
    app: zookeeper
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper1
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 32181
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper2
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 32182
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "2"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper3
  namespace: myserver
spec:
  type: NodePort
  ports:
  # Client port, exposed for services outside the k8s cluster that need to reach the ZooKeeper ensemble
  - name: client
    port: 2181
    nodePort: 32183
  - name: followers
    port: 2888
  - name: election
    port: 3888
  # Two labels are selected here; only pods that carry both labels receive traffic
  selector:
    app: zookeeper
    server-id: "3"
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper1
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "1"
    spec:
      # All volumes are declared in one list, because a YAML mapping must not repeat the "volumes" key.
      # "data" and "wal" are declared but not mounted; only the PVC volume is used.
      volumes:
      - name: data
        emptyDir: {}
      - name: wal
        emptyDir:
          medium: Memory
      - name: zookeeper-datadir-pvc-1
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-1
      containers:
      - name: server
        image: harbor.linuxarchitect.io/magedu/zookeeper:v3.4.14
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "1"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-1
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper2
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "2"
    spec:
      volumes:
      - name: data
        emptyDir: {}
      - name: wal
        emptyDir:
          medium: Memory
      - name: zookeeper-datadir-pvc-2
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-2
      containers:
      - name: server
        image: harbor.linuxarchitect.io/magedu/zookeeper:v3.4.14
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "2"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-2
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper3
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "3"
    spec:
      volumes:
      - name: data
        emptyDir: {}
      - name: wal
        emptyDir:
          medium: Memory
      - name: zookeeper-datadir-pvc-3
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-3
      containers:
      - name: server
        image: harbor.linuxarchitect.io/magedu/zookeeper:v3.4.14
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "3"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-3

3.3.5 Write the Dockerfile

[root@containerd-build-image zookeeper]# cat Dockerfile

FROM tsk8s.top/baseimages/slim_java:8

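# Set an environment variable so the version is easy to check

ENV ZK_VERSION 3.4.14

# Replace the package repositories

ADD repositories /etc/apk/repositories

# Download ZooKeeper. The binary package is copied into the image directly, which is faster.

COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz

COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc

COPY KEYS /tmp/KEYS

# Install common tools and set up the required environment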

RUN apk add --no-cache --virtual .build-deps \

ca-certificates \

gnupg \

tar \

wget && \

#

# Install dependencies

apk add --no-cache \

bash && \

#

#

# Verify the signature

export GNUPGHOME="$(mktemp -d)" && \

gpg -q --batch --import /tmp/KEYS && \

gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \

#

# Set up directories

#

mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \

#

# Install

tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \

#

# Slim down

cd /zookeeper && \

cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \

rm -rf \

*.txt \

*.xml \

bin/README.txt \

bin/*.cmd \

conf/* \

contrib \

dist-maven \

docs \

lib/*.txt \

lib/cobertura \

lib/jdiff \

recipes \

src \

zookeeper-*.asc \

zookeeper-*.md5 \

zookeeper-*.sha1 && \

#

# Clean up

apk del .build-deps && \

rm -rf /tmp/* "$GNUPGHOME"

# Copy the related files

COPY conf /zookeeper/conf/

COPY bin/zkReady.sh /zookeeper/bin/

COPY entrypoint.sh /

RUN chmod u+x /entrypoint.sh

# Declare environment variables

ENV PATH=/zookeeper/bin:${PATH} \

ZOO_LOG_DIR=/zookeeper/log \

ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \

JMXPORT=9010

# Start-up command

ENTRYPOINT ["/entrypoint.sh"]

# Arguments passed to the start-up command

CMD [ "zkServer.sh", "start-foreground" ]

# Declare the ports to expose

EXPOSE 2181 2888 3888 9010

3.3.6 Prepare the required files

[root@containerd-build-image zookeeper]# ll -rt

总用量 36888

-rw-r--r-- 1 root root 2086 6月 28 14:54 Dockerfile

-rw-r--r-- 1 root root 125 6月 28 17:00 build-image.sh

-rw-rw-r-- 1 root root 2270 6月 28 17:01 zookeeper-3.12-Dockerfile.tar.gz

-rw-rw-r-- 1 root root 91 6月 28 17:01 repositories

-rw-rw-r-- 1 root root 63587 6月 28 17:01 KEYS

-rw-rw-r-- 1 root root 1015 6月 28 17:01 entrypoint.sh

-rw-rw-r-- 1 root root 836 6月 28 17:02 zookeeper-3.4.14.tar.gz.asc

-rw-rw-r-- 1 root root 37676320 6月 28 17:02 zookeeper-3.4.14.tar.gz

drwxrwxr-x 2 root root 45 6月 28 17:02 conf

drwxrwxr-x 2 root root 24 6月 28 17:02 bin

3.3.7 Write the build script

[root@containerd-build-image zookeeper]# cat build-image.sh

#!/bin/bash

TAG=$1

nerdctl build -t tsk8s.top/tsk8s/zookeeper:${TAG} . && \

nerdctl push tsk8s.top/tsk8s/zookeeper:${TAG}

3.3.8 Build the image

[root@containerd-build-image zookeeper]# sh build-image.sh v1

3.3.9 Start-up test

[root@containerd-build-image zookeeper]# nerdctl run -it --rm tsk8s.top/tsk8s/zookeeper:v1

... (part of the output omitted)

2023-06-28 10:17:18,708 [myid:] - INFO [main:NIOServerCnxnFactory@89] - binding to port 0.0.0.0/0.0.0.0:2181 # started successfully

3.3.10 Create static PVs and PVCs

3.3.10.1 Write the PV manifest

[root@k8s-harbor01 volume]# cat zookeeper-persistentvolume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-1
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 10.31.200.104
    path: /data/k8s-data/tsk8s/zookeeper-datadir-1
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-2
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 10.31.200.104
    path: /data/k8s-data/tsk8s/zookeeper-datadir-2
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: zookeeper-datadir-pv-3
spec:
  capacity:
    storage: 20Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 10.31.200.104
    path: /data/k8s-data/tsk8s/zookeeper-datadir-3

3.3.10.2 Write the PVC manifest

[root@k8s-harbor01 volume]# cat zookeeper-persistentvolumeclaim.yaml

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-1
  namespace: myserver
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-1 # bind explicitly to this PV
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-2
  namespace: myserver
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-2 # bind explicitly to this PV
  resources:
    requests:
      storage: 10Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: zookeeper-datadir-pvc-3
  namespace: myserver
spec:
  accessModes:
  - ReadWriteOnce
  volumeName: zookeeper-datadir-pv-3 # bind explicitly to this PV
  resources:
    requests:
      storage: 10Gi

3.3.10.3 Create the NFS directories

[root@k8s-harbor01 tsk8s]# mkdir zookeeper-datadir-{1..3}

[root@k8s-harbor01 tsk8s]# ll -rt

总用量 0

drwxr-xr-x 2 root root 6 6月 27 15:38 images

drwxr-xr-x 2 root root 6 6月 27 15:38 static

drwxr-xr-x 2 root root 6 6月 28 18:35 zookeeper-datadir-3

drwxr-xr-x 2 root root 6 6月 28 18:35 zookeeper-datadir-2

drwxr-xr-x 2 root root 6 6月 28 18:35 zookeeper-datadir-1

3.3.10.4 Create the PVs and PVCs

[root@k8s-harbor01 volume]# kubectl apply -f zookeeper-persistentvolume.yaml

persistentvolume/zookeeper-datadir-pv-1 created

persistentvolume/zookeeper-datadir-pv-2 created

persistentvolume/zookeeper-datadir-pv-3 created

[root@k8s-harbor01 volume]# kubectl get pv |grep zookeeper

zookeeper-datadir-pv-1 20Gi RWO Retain Available 27s

zookeeper-datadir-pv-2 20Gi RWO Retain Available 27s

zookeeper-datadir-pv-3 20Gi RWO Retain Available

[root@k8s-harbor01 volume]# kubectl apply -f zookeeper-persistentvolumeclaim.yaml

persistentvolumeclaim/zookeeper-datadir-pvc-1 created

persistentvolumeclaim/zookeeper-datadir-pvc-2 created

persistentvolumeclaim/zookeeper-datadir-pvc-3 created

[root@k8s-harbor01 volume]# kubectl get pvc -n myserver

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

zookeeper-datadir-pvc-1 Bound zookeeper-datadir-pv-1 20Gi RWO 118s

zookeeper-datadir-pvc-2 Bound zookeeper-datadir-pv-2 20Gi RWO 118s

zookeeper-datadir-pvc-3 Bound zookeeper-datadir-pv-3 20Gi RWO 118s
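Before creating the ZooKeeper pods it is worth confirming that every claim reached the Bound state, as in the output above. A minimal sketch that filters the table printed by `kubectl get pvc`, assuming the default column layout with NAME first and STATUS second. `unbound_pvcs` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: read the table printed by "kubectl get pvc" on stdin and
# print the names of claims whose STATUS column is not "Bound".
# Assumes the default layout: a header line, NAME in column 1, STATUS in column 2.
unbound_pvcs() {
    awk 'NR > 1 && $2 != "Bound" { print $1 }'
}
```

It could be used as `kubectl get pvc -n myserver | unbound_pvcs`; empty output means all claims are bound.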

3.3.11 Create the ZooKeeper cluster

[root@k8s-harbor01 deployment]# cat zookeerper.yaml

apiVersion: v1
kind: Service
metadata:
  name: zookeeper
  namespace: myserver
spec:
  ports:
  - name: client
    port: 2181
  selector:
    app: zookeeper
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper1
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 32181
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper2
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: client
    port: 2181
    nodePort: 32182
  - name: followers
    port: 2888
  - name: election
    port: 3888
  selector:
    app: zookeeper
    server-id: "2"
---
apiVersion: v1
kind: Service
metadata:
  name: zookeeper3
  namespace: myserver
spec:
  type: NodePort
  ports:
  # Client port, exposed for services outside the k8s cluster that need to reach the ZooKeeper ensemble
  - name: client
    port: 2181
    nodePort: 32183
  - name: followers
    port: 2888
  - name: election
    port: 3888
  # Two labels are selected here; only pods that carry both labels receive traffic
  selector:
    app: zookeeper
    server-id: "3"
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper1
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "1"
    spec:
      imagePullSecrets:
      - name: dockerhub-image-pull-key
      # All volumes are declared in one list, because a YAML mapping must not repeat the "volumes" key.
      # "data" and "wal" are declared but not mounted; only the PVC volume is used.
      volumes:
      - name: data
        emptyDir: {}
      - name: wal
        emptyDir:
          medium: Memory
      - name: zookeeper-datadir-pvc-1
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-1
      containers:
      - name: server
        image: tsk8s.top/tsk8s/zookeeper:v1
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "1"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-1
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper2
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "2"
    spec:
      imagePullSecrets:
      - name: dockerhub-image-pull-key
      volumes:
      - name: data
        emptyDir: {}
      - name: wal
        emptyDir:
          medium: Memory
      - name: zookeeper-datadir-pvc-2
        persistentVolumeClaim:
          claimName: zookeeper-datadir-pvc-2
      containers:
      - name: server
        image: tsk8s.top/tsk8s/zookeeper:v1
        imagePullPolicy: Always
        env:
        - name: MYID
          value: "2"
        - name: SERVERS
          value: "zookeeper1,zookeeper2,zookeeper3"
        - name: JVMFLAGS
          value: "-Xmx2G"
        ports:
        - containerPort: 2181
        - containerPort: 2888
        - containerPort: 3888
        volumeMounts:
        - mountPath: "/zookeeper/data"
          name: zookeeper-datadir-pvc-2
---
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  name: zookeeper3
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: zookeeper
  template:
    metadata:
      labels:
        app: zookeeper
        server-id: "3"
    spec:
      imagePullSecrets:
      - name: dockerhub-image-pull-key

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: tsk8s.top/tsk8s/zookeeper:v1

imagePullPolicy: Always

env:

- name: MYID

value: "3"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-datadir-pvc-3

volumes:

- name: zookeeper-datadir-pvc-3

persistentVolumeClaim:

claimName: zookeeper-datadir-pvc-3

[root@k8s-harbor01 deployment]# kubectl apply -f zookeerper.yaml

service/zookeeper created

service/zookeeper1 created

service/zookeeper2 created

service/zookeeper3 created

deployment.apps/zookeeper1 created

deployment.apps/zookeeper2 created

deployment.apps/zookeeper3 created

[root@k8s-harbor01 deployment]# kubectl get po -n myserver |grep zook

zookeeper1-54fcfb896c-nr2d7 1/1 Running 1 (47s ago) 63s

zookeeper2-65789d49d8-z5n6r 1/1 Running 1 (49s ago) 63s

zookeeper3-5b94f474c7-7vbmb 1/1 Running 0 62s
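The per-node Services in the manifest (zookeeper1, zookeeper2, zookeeper3) select on two labels at once, while the shared `zookeeper` Service selects on `app` only. A label selector is a logical AND: a pod matches only if it carries every listed key=value pair. The sketch below imitates that rule on comma-separated strings. `selector_matches` is a hypothetical helper written for this illustration and is not how kube-proxy is implemented.

```shell
# selector_matches SELECTOR LABELS
# Succeeds only if every key=value pair in SELECTOR (comma-separated) also
# appears in LABELS (comma-separated). Hypothetical helper for illustration.
selector_matches() (
  selector=$1
  labels=",$2,"
  IFS=,
  for pair in $selector; do
    case "$labels" in
      *",$pair,"*) ;;      # this pair is present, keep checking
      *) exit 1 ;;         # one missing pair is enough to fail
    esac
  done
  exit 0
)

# The zookeeper3 Service selector against a zookeeper3 pod's labels:
selector_matches "app=zookeeper,server-id=3" \
  "app=zookeeper,server-id=3,pod-template-hash=5b94f474c7" && echo "match"
```

This is why client traffic sent to `zookeeper` can land on any of the three pods, while quorum and election traffic sent to `zookeeper3` reaches exactly one.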

3.3.12 Verification

3.3.12.1 Verify the ensemble is running in cluster mode

[root@k8s-harbor01 deployment]# kubectl exec -it -n myserver zookeeper1-54fcfb896c-nr2d7 -- /bin/sh

/ # /zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: follower # running in cluster (replicated) mode

/ # cat /zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/zookeeper/data

dataLogDir=/zookeeper/wal

#snapCount=100000

autopurge.purgeInterval=1

clientPort=2181

quorumListenOnAllIPs=true

server.1=zookeeper1:2888:3888

server.2=zookeeper2:2888:3888

server.3=zookeeper3:2888:3888

/ # exit
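Checking each pod by hand gets tedious, so the `Mode:` line can be extracted from the status output instead. This is a minimal sketch, assuming the `zkServer.sh status` text is piped in on stdin. `zk_mode` is a hypothetical helper name.

```shell
# zk_mode: read `zkServer.sh status` output on stdin and print the mode
# (leader, follower or standalone). Returns non-zero if no "Mode:" line is
# found, e.g. when the server is not running. Hypothetical helper.
zk_mode() {
  awk '$1 == "Mode:" { print $2; found = 1 } END { exit !found }'
}

# Possible usage against the pod shown above:
#   kubectl exec -n myserver zookeeper1-54fcfb896c-nr2d7 -- \
#     /zookeeper/bin/zkServer.sh status 2>/dev/null | zk_mode
```

A healthy three-node ensemble should report one `leader` and two `follower` values. `standalone` would indicate that the server list in zoo.cfg was not picked up.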

3.3.12.2 Verify that a new leader is elected when the leader fails

[root@k8s-harbor01 deployment]# kubectl exec -it -n myserver zookeeper3-5b94f474c7-7vbmb -- /bin/sh

/ # /zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: leader # this node is currently the leader

/ # exit

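# Stop Harbor first: with imagePullPolicy: Always the replacement pod cannot pull its image, so zookeeper3 stays down long enough to observe the election

[root@k8s-harbor01 deployment]# cd /root/app/harbor/harbor/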

[root@k8s-harbor01 harbor]# docker-compose down

Stopping nginx ... done

Stopping harbor-jobservice ... done

Stopping harbor-core ... done

Stopping registry ... done

Stopping redis ... done

Stopping harbor-db ... done

Stopping registryctl ... done

Stopping harbor-portal ... done

Stopping harbor-log ... done

Removing nginx ... done

Removing harbor-jobservice ... done

Removing harbor-core ... done

Removing registry ... done

Removing redis ... done

Removing harbor-db ... done

Removing registryctl ... done

Removing harbor-portal ... done

Removing harbor-log ... done

Removing network harbor_harbor

[root@k8s-harbor01 harbor]# kubectl delete po -n myserver zookeeper3-5b94f474c7-7vbmb

pod "zookeeper3-5b94f474c7-7vbmb" deleted

[root@k8s-harbor01 harbor]# kubectl get po -n myserver |grep zoo

zookeeper1-54fcfb896c-nr2d7 1/1 Running 1 (16m ago) 16m

zookeeper2-65789d49d8-z5n6r 1/1 Running 1 (16m ago) 16m

zookeeper3-5b94f474c7-56mfb 0/1 ImagePullBackOff 0 19s

[root@k8s-harbor01 harbor]# kubectl exec -it -n myserver zookeeper1-54fcfb896c-nr2d7 -- sh

/ # /zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: follower

/ # exit

[root@k8s-harbor01 harbor]# kubectl exec -it -n myserver zookeeper2-65789d49d8-z5n6r -- sh

/ # /zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: leader # a new leader has been elected

/ # exit

# Bring Harbor back up so that the zookeeper3 pod can pull its image and rejoin the ensemble

[root@k8s-harbor01 harbor]# docker-compose up -d

Creating network "harbor_harbor" with the default driver

Creating harbor-log ... done

Creating registry ... done

Creating harbor-portal ... done

Creating registryctl ... done

Creating harbor-db ... done

Creating redis ... done

Creating harbor-core ... done

Creating nginx ... done

Creating harbor-jobservice ... done

[root@k8s-harbor01 harbor]# kubectl get po -n myserver |grep zoo

zookeeper1-54fcfb896c-nr2d7 1/1 Running 1 (18m ago) 18m

zookeeper2-65789d49d8-z5n6r 1/1 Running 1 (18m ago) 18m

zookeeper3-5b94f474c7-56mfb 0/1 ImagePullBackOff 0 2m29s

[root@k8s-harbor01 harbor]# kubectl delete po -n myserver zookeeper3-5b94f474c7-56mfb

pod "zookeeper3-5b94f474c7-56mfb" deleted

[root@k8s-harbor01 harbor]# kubectl get po -n myserver |grep zoo

zookeeper1-54fcfb896c-nr2d7 1/1 Running 1 (18m ago) 19m

zookeeper2-65789d49d8-z5n6r 1/1 Running 1 (18m ago) 19m

zookeeper3-5b94f474c7-l5ftp 1/1 Running 0 9s
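The election above succeeds because two of the three servers are still running, and ZooKeeper only needs a strict majority of the ensemble to elect a leader. The arithmetic can be sketched as follows. The function names are hypothetical and exist only for this illustration.

```shell
# zk_quorum N: majority needed for an ensemble of N voting servers.
zk_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# zk_tolerated_failures N: how many servers may fail while a majority remains.
zk_tolerated_failures() {
  echo $(( $1 - ($1 / 2 + 1) ))
}

zk_quorum 3              # 2
zk_tolerated_failures 3  # 1
```

A four-node ensemble still tolerates only one failure, which is why ensembles are normally sized with an odd number of servers.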

3.4 Case 4: PV/PVC and standalone Redis

3.4.1 Build the Redis application image

3.4.1.1 Write the Dockerfile

[root@containerd-build-image ~]# cd /opt/k8s-data/dockerfile/web/

[root@containerd-build-image web]# mkdir redis

[root@containerd-build-image web]# cd redis

[root@containerd-build-image redis]#

[root@containerd-build-image redis]# cat Dockerfile

#Redis Image

FROM tsk8s.top/baseimages/magedu-centos-base:7.9.2009

ADD redis-4.0.14.tar.gz /usr/local/src

RUN ln -sv /usr/local/src/redis-4.0.14 /usr/local/redis && \

cd /usr/local/redis && \

make && \

cp src/redis-cli /usr/sbin/ && \

cp src/redis-server /usr/sbin/ && \

mkdir -pv /data/redis-data

ADD redis.conf /usr/local/redis/redis.conf

EXPOSE 6379

#ADD run_redis.sh /usr/local/redis/run_redis.sh

#CMD ["/usr/local/redis/run_redis.sh"]

ADD run_redis.sh /usr/local/redis/entrypoint.sh

ENTRYPOINT ["/bin/sh", "/usr/local/redis/entrypoint.sh"]

3.4.1.2 Write the Redis startup script

[root@containerd-build-image redis]# cat run_redis.sh

#!/bin/bash

/usr/sbin/redis-server /usr/local/redis/redis.conf

tail -f /etc/hosts

3.4.1.3 Write the build script

[root@containerd-build-image redis]# cat build-image.sh

#!/bin/bash

TAG=$1

nerdctl build -t tsk8s.top/tsk8s/redis:${TAG} . && \

nerdctl push tsk8s.top/tsk8s/redis:${TAG}
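The build script above takes the tag from `$1` without checking it, so running it with no argument would try to build `tsk8s.top/tsk8s/redis:`. One way to guard against that is sketched below. `image_ref` is a hypothetical helper and not part of the original script.

```shell
# image_ref REPO TAG: print "REPO:TAG", or fail with a usage hint when the
# tag is empty. Hypothetical helper for illustration.
image_ref() {
  if [ -z "$2" ]; then
    echo "usage: build-image.sh <tag>" >&2
    return 1
  fi
  printf '%s:%s\n' "$1" "$2"
}

# Possible use inside build-image.sh:
#   IMAGE=$(image_ref tsk8s.top/tsk8s/redis "$1") || exit 1
#   nerdctl build -t "$IMAGE" . && nerdctl push "$IMAGE"
```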

3.4.1.4 Prepare the other required files

[root@containerd-build-image redis]# ll

total 1776

-rw-rw-r-- 1 root root 115 Jun 29 09:57 build-image.sh

-rw-r--r-- 1 root root 550 Jun 29 09:53 Dockerfile

-rw-rw-r-- 1 root root 1740967 Jun 29 09:55 redis-4.0.14.tar.gz

-rw-rw-r-- 1 root root 58783 Jun 29 09:55 redis.conf

-rw-rw-r-- 1 root root 85 Jun 29 09:55 run_redis.sh

3.4.1.5 Build the image

[root@containerd-build-image redis]# sh build-image.sh v1

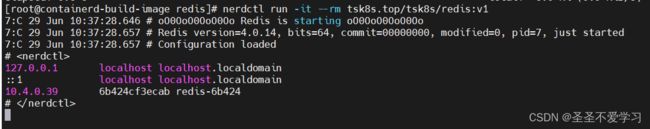

3.4.1.6 Startup test

3.4.2 Create the pod

3.4.2.1 Write the YAML

[root@k8s-harbor01 deployment]# cat redis.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

labels:

app: devops-redis

name: deploy-devops-redis

namespace: myserver

spec:

replicas: 1

selector:

matchLabels:

app: devops-redis

template:

metadata:

labels:

app: devops-redis

spec:

containers:

- name: redis-container

image: tsk8s.top/tsk8s/redis:v1

imagePullPolicy: Always

volumeMounts:

- mountPath: "/data/redis-data/"

name: redis-datadir

volumes:

- name: redis-datadir

persistentVolumeClaim:

claimName: redis-datadir-pvc-1

imagePullSecrets:

- name: dockerhub-image-pull-key

---

kind: Service

apiVersion: v1

metadata:

labels:

app: devops-redis

name: srv-devops-redis

namespace: myserver

spec:

type: NodePort

ports:

- name: http

port: 6379

targetPort: 6379

selector:

app: devops-redis

sessionAffinity: ClientIP

sessionAffinityConfig:

clientIP:

timeoutSeconds: 10800

3.4.2.2 Create the PV

[root@k8s-harbor01 volume]# cat redis-persistentvolume.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-datadir-pv-1

spec:

capacity:

storage: 10Gi

accessModes:

- ReadWriteOnce

nfs:

path: /data/k8s-data/tsk8s/redis-datadir-1

server: 10.31.200.104

[root@k8s-harbor01 volume]# mkdir /data/k8s-data/tsk8s/redis-datadir-1

[root@k8s-harbor01 volume]# kubectl apply -f redis-persistentvolume.yaml

persistentvolume/redis-datadir-pv-1 created

[root@k8s-harbor01 volume]# kubectl get pv |grep redis

redis-datadir-pv-1 10Gi RWO Retain Available 4s

3.4.2.3 Create the PVC

[root@k8s-harbor01 volume]# cat redis-persistentvolumeclaim.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: redis-datadir-pvc-1

namespace: myserver

spec:

volumeName: redis-datadir-pv-1

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 10Gi

[root@k8s-harbor01 volume]# kubectl apply -f redis-persistentvolumeclaim.yaml

persistentvolumeclaim/redis-datadir-pvc-1 created

[root@k8s-harbor01 volume]# kubectl get pvc -n myserver|grep redis

redis-datadir-pvc-1 Bound redis-datadir-pv-1 10Gi RWO 13s

3.4.2.4 Create the pod

[root@k8s-harbor01 ~]# kubectl get po,svc -n myserver |grep redis

pod/deploy-devops-redis-78cdf68d4d-kd7kk 1/1 Running 0 63m

service/srv-devops-redis NodePort 10.100.64.188 <none> 6379:32159/TCP 66m

3.4.3 Verify Redis reads, writes, and persistence

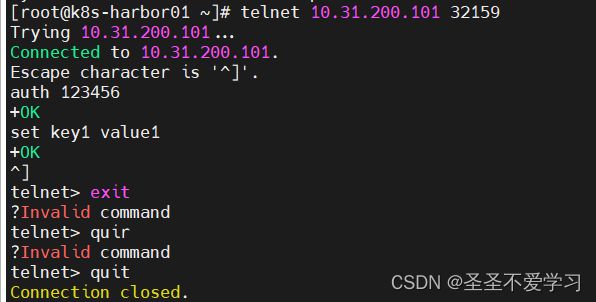

3.4.3.1 Write test data

3.4.3.2 Verify data persistence

[root@k8s-harbor01 ~]# ll /data/k8s-data/tsk8s/redis-datadir-1/

total 4

-rw-r--r-- 1 root root 111 Jun 29 13:57 dump.rdb
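Seeing a `dump.rdb` file in the NFS directory is a good sign. A slightly stronger check is to confirm that it really is an RDB snapshot, since RDB files begin with the ASCII magic string `REDIS`. This is a minimal sketch. `is_rdb_file` is a hypothetical helper name.

```shell
# is_rdb_file PATH: succeed if the file starts with the RDB magic "REDIS".
# Hypothetical helper for illustration; it does not validate the rest of
# the snapshot.
is_rdb_file() {
  [ "$(head -c 5 "$1" 2>/dev/null)" = "REDIS" ]
}

# Possible usage on the NFS server:
#   is_rdb_file /data/k8s-data/tsk8s/redis-datadir-1/dump.rdb && echo "looks like an RDB snapshot"
```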

3.5 Case 4: PV/PVC and Redis Cluster

3.5.1 Create the PVs and PVCs

3.5.1.1 Write the PV YAML

[root@k8s-harbor01 volume]# cat redis-cluster-pv.yaml

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv0

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.31.200.104

path: /data/k8s-data/tsk8s/redis0

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv1

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.31.200.104

path: /data/k8s-data/tsk8s/redis1

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv2

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.31.200.104

path: /data/k8s-data/tsk8s/redis2

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv3

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.31.200.104

path: /data/k8s-data/tsk8s/redis3

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv4

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.31.200.104

path: /data/k8s-data/tsk8s/redis4

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: redis-cluster-pv5

spec:

capacity:

storage: 5Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.31.200.104

path: /data/k8s-data/tsk8s/redis5

3.5.1.2 Create the data directories and the PVs

[root@k8s-harbor01 ~]# mkdir /data/k8s-data/tsk8s/redis{0..5}

[root@k8s-harbor01 ~]# ll -rt /data/k8s-data/tsk8s/|grep redis

drwxr-xr-x 2 root root 22 Jun 29 13:57 redis-datadir-1

drwxr-xr-x 2 root root 6 Jun 29 14:36 redis5

drwxr-xr-x 2 root root 6 Jun 29 14:36 redis4

drwxr-xr-x 2 root root 6 Jun 29 14:36 redis3

drwxr-xr-x 2 root root 6 Jun 29 14:36 redis2

drwxr-xr-x 2 root root 6 Jun 29 14:36 redis1

drwxr-xr-x 2 root root 6 Jun 29 14:36 redis0

# Create the PVs in k8s

[root@k8s-harbor01 volume]# kubectl apply -f redis-cluster-pv.yaml

persistentvolume/redis-cluster-pv0 created

persistentvolume/redis-cluster-pv1 created

persistentvolume/redis-cluster-pv2 created

persistentvolume/redis-cluster-pv3 created

persistentvolume/redis-cluster-pv4 created

persistentvolume/redis-cluster-pv5 created

[root@k8s-harbor01 volume]# kubectl get pv|grep redis-cluster

redis-cluster-pv0 5Gi RWO Retain Available 23s

redis-cluster-pv1 5Gi RWO Retain Available 23s

redis-cluster-pv2 5Gi RWO Retain Available 23s

redis-cluster-pv3 5Gi RWO Retain Available 23s

redis-cluster-pv4 5Gi RWO Retain Available 23s

redis-cluster-pv5 5Gi RWO Retain Available 23s

3.5.2 Create the ConfigMap

[root@k8s-harbor01 configmap]# cat redis.conf

appendonly yes

cluster-enabled yes

cluster-config-file /var/lib/redis/nodes.conf

cluster-node-timeout 5000

dir /var/lib/redis

port 6379

[root@k8s-harbor01 configmap]# kubectl create cm redis-conf --from-file=redis.conf -n myserver

configmap/redis-conf created

[root@k8s-harbor01 configmap]# kubectl get cm -n myserver|grep redis

redis-conf 1 8s

[root@k8s-harbor01 configmap]# kubectl get cm -o yaml -n myserver redis-conf

apiVersion: v1

data:

redis.conf: |+

appendonly yes

cluster-enabled yes

cluster-config-file /var/lib/redis/nodes.conf

cluster-node-timeout 5000

dir /var/lib/redis

port 6379

kind: ConfigMap

metadata:

creationTimestamp: "2023-06-29T07:11:43Z"

name: redis-conf

namespace: myserver

resourceVersion: "13219950"

uid: a41e1d2c-8505-4f61-a621-b76e91c42e10

3.5.3 Create the pods

3.5.3.1 Write the YAML

[root@k8s-harbor01 sts]# cat redis-cluster.yaml

apiVersion: v1

kind: Service

metadata:

name: redis

namespace: myserver

labels:

app: redis

spec:

selector:

app: redis

appCluster: redis-cluster

ports:

- name: redis

port: 6379

clusterIP: None

---

apiVersion: v1

kind: Service

metadata:

name: redis-access

namespace: myserver

labels:

app: redis

spec:

type: NodePort

selector:

app: redis

appCluster: redis-cluster

ports:

- name: redis-access

protocol: TCP

port: 6379

targetPort: 6379

---

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: redis

namespace: myserver

spec:

serviceName: redis

replicas: 6

selector:

matchLabels:

app: redis

appCluster: redis-cluster

template:

metadata:

labels:

app: redis

appCluster: redis-cluster

spec:

terminationGracePeriodSeconds: 20 # grace period for graceful pod termination

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: app

operator: In

values:

- redis

topologyKey: kubernetes.io/hostname

containers:

- name: redis

image: tsk8s.top/tsk8s/redis:v1

command:

- "redis-server"

args:

- "/etc/redis/redis.conf"

- "--protected-mode"

- "no"

resources:

requests:

cpu: "500m"

memory: "500Mi"

ports:

- containerPort: 6379

name: redis

protocol: TCP

- containerPort: 16379

name: cluster

protocol: TCP

volumeMounts:

- name: conf

mountPath: /etc/redis

- name: data

mountPath: /var/lib/redis

volumes:

- name: conf

configMap:

name: redis-conf

items:

- key: redis.conf

path: redis.conf

imagePullSecrets:

- name: dockerhub-image-pull-key

volumeClaimTemplates: # a StatefulSet can declare a PVC template; one PVC is created per pod and bound to an available PV, so no PVCs have to be created by hand

- metadata:

name: data

namespace: myserver

spec:

accessModes: [ "ReadWriteOnce" ]

resources:

requests:

storage: 5Gi

3.5.3.2 Create the pods

[root@k8s-harbor01 sts]# kubectl apply -f redis-cluster.yaml

service/redis created

service/redis-access created

statefulset.apps/redis created

[root@k8s-harbor01 sts]# kubectl get po -n myserver|grep redis

deploy-devops-redis-78cdf68d4d-kd7kk 1/1 Running 0 6h25m

redis-0 1/1 Running 0 88s

redis-1 1/1 Running 0 30s

redis-2 1/1 Running 0 26s

redis-3 1/1 Running 0 21s

redis-4 1/1 Running 0 17s

redis-5 1/1 Running 0 14s

[root@k8s-harbor01 sts]# kubectl get pvc -n myserver |grep redis-cluster

data-redis-0 Bound redis-cluster-pv1 5Gi RWO 141m

data-redis-1 Bound redis-cluster-pv3 5Gi RWO 25m

data-redis-2 Bound redis-cluster-pv4 5Gi RWO 25m

data-redis-3 Bound redis-cluster-pv0 5Gi RWO 25m

data-redis-4 Bound redis-cluster-pv2 5Gi RWO 24m

data-redis-5 Bound redis-cluster-pv5 5Gi RWO 24m

# The data-redis-x and redis-cluster-pvx numbers above do not have to line up; any binding is fine as long as the data is stored correctly
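The claim names in the listing are not arbitrary: a StatefulSet names each generated PVC `<template name>-<StatefulSet name>-<ordinal>`, which is why a pod always gets the same claim back after a restart, whichever PV that claim happened to bind to. The naming rule can be sketched as follows. `sts_pvc_names` is a hypothetical helper name.

```shell
# sts_pvc_names TEMPLATE STATEFULSET REPLICAS
# Print the PVC names a StatefulSet creates from one volumeClaimTemplate.
# Hypothetical helper for illustration.
sts_pvc_names() (
  i=0
  while [ "$i" -lt "$3" ]; do
    echo "$1-$2-$i"
    i=$(( $i + 1 ))
  done
)

sts_pvc_names data redis 6   # data-redis-0 ... data-redis-5
```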

3.5.4 Initialize the Redis cluster

3.5.4.1 Create a temporary pod

[root@k8s-harbor01 yaml]# cat temp-unubtu.yaml

apiVersion: v1

kind: Pod

metadata:

name: temp-ubuntu

namespace: myserver

spec:

containers:

- name: temp-ubuntu

image: tsk8s.top/myserver/ubuntu:22.04

command: ['tail', '-f', '/etc/hosts']

imagePullSecrets:

- name: dockerhub-image-pull-key

[root@k8s-harbor01 yaml]# kubectl apply -f temp-unubtu.yaml

[root@k8s-harbor01 yaml]# kubectl get po -n myserver|grep temp

temp-ubuntu 1/1 Running 0 37s

[root@k8s-harbor01 yaml]# kubectl exec -it -n myserver temp-ubuntu -- /bin/bash

root@temp-ubuntu:/# apt update

root@temp-ubuntu:/# apt install python2.7 python-pip redis-tools dnsutils iputils-ping net-tools -y

root@temp-ubuntu:/# pip2 install --upgrade pip

root@temp-ubuntu:/# pip2 install redis-trib==0.5.1

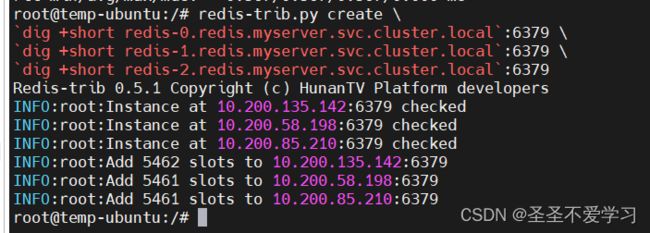

3.5.4.2 Create the cluster

root@temp-ubuntu:/# redis-trib.py create \

`dig +short redis-0.redis.myserver.svc.cluster.local`:6379 \

`dig +short redis-1.redis.myserver.svc.cluster.local`:6379 \

`dig +short redis-2.redis.myserver.svc.cluster.local`:6379
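The `dig` lookups above work because the headless `redis` Service gives every StatefulSet pod a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster domain>`. A small sketch of how those names are put together, assuming the default cluster domain `cluster.local`. `sts_pod_fqdn` is a hypothetical helper name.

```shell
# sts_pod_fqdn STATEFULSET ORDINAL SERVICE NAMESPACE
# Print the stable DNS name of one StatefulSet pod behind a headless Service.
# Assumes the default cluster domain "cluster.local". Hypothetical helper.
sts_pod_fqdn() {
  echo "$1-$2.$3.$4.svc.cluster.local"
}

sts_pod_fqdn redis 0 redis myserver
```

The pod IP behind such a name changes when the pod is rescheduled, but the name itself does not, which is why the commands resolve it at run time instead of hard-coding addresses.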

3.5.4.3 Make redis-3 a replica of redis-0

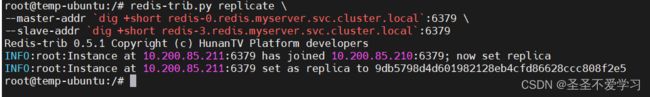

root@temp-ubuntu:/# redis-trib.py replicate \

--master-addr `dig +short redis-0.redis.myserver.svc.cluster.local`:6379 \

--slave-addr `dig +short redis-3.redis.myserver.svc.cluster.local`:6379

3.5.4.4 Make redis-4 a replica of redis-1

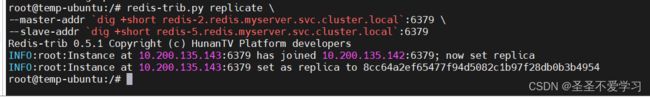

root@temp-ubuntu:/# redis-trib.py replicate --master-addr `dig +short redis-1.redis.myserver.svc.cluster.local`:6379 --slave-addr `dig +short redis-4.redis.myserver.svc.cluster.local`:6379

3.5.4.5 Make redis-5 a replica of redis-2

root@temp-ubuntu:/# redis-trib.py replicate \

--master-addr `dig +short redis-2.redis.myserver.svc.cluster.local`:6379 \

--slave-addr `dig +short redis-5.redis.myserver.svc.cluster.local`:6379
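The three `replicate` commands follow one pattern: with three masters, pod `i + 3` becomes the replica of pod `i`. That pairing can be generated rather than typed by hand. The sketch below only prints the pairs and does not call `redis-trib.py`. `replica_pairs` is a hypothetical helper name.

```shell
# replica_pairs NAME MASTERS
# Print "replica master" pod-name pairs, pairing pod MASTERS+i with pod i.
# Hypothetical helper for illustration; it only prints names.
replica_pairs() (
  i=0
  while [ "$i" -lt "$2" ]; do
    echo "$1-$(( $i + $2 )) $1-$i"
    i=$(( $i + 1 ))
  done
)

replica_pairs redis 3
# redis-3 redis-0
# redis-4 redis-1
# redis-5 redis-2
```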

3.5.5 Verify the Redis cluster state

3.5.5.1 Log in and check

[root@k8s-harbor01 sts]# kubectl exec -it -n myserver redis-0 -- /bin/bash # any pod will do

[root@redis-0 /]# redis-cli

127.0.0.1:6379> cluster info

cluster_state:ok # cluster state

cluster_slots_assigned:16384 # number of hash slots assigned

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:3

cluster_my_epoch:3

cluster_stats_messages_ping_sent:1804

cluster_stats_messages_pong_sent:1764

cluster_stats_messages_meet_sent:4

cluster_stats_messages_sent:3572

cluster_stats_messages_ping_received:1762

cluster_stats_messages_pong_received:1808

cluster_stats_messages_meet_received:2

cluster_stats_messages_received:3572

127.0.0.1:6379> cluster nodes

3541ad028af35947ff89ae3c56612d89b02824fa 10.200.135.143:6379@16379 slave 8cc64a2ef65477f94d5082c1b97f28db0b3b4954 0 1688365731693 2 connected

acf789c7ae98c98acd907f6602df23911a23b4cd 10.200.85.211:6379@16379 slave 9db5798d4d601982128eb4cfd86628ccc808f2e5 0 1688365733195 3 connected

9db5798d4d601982128eb4cfd86628ccc808f2e5 10.200.85.210:6379@16379 myself,master - 0 1688365731000 3 connected 10923-16383

8cc64a2ef65477f94d5082c1b97f28db0b3b4954 10.200.135.142:6379@16379 master - 0 1688365732000 2 connected 0-5461

f8fa6fe15857f994aae91ba11e096141605baa64 10.200.58.199:6379@16379 slave 60d5dbcea2b38b6a0606d6a03ef02f42e22560a1

3.5.5.2 Read/write test

# Write test data

127.0.0.1:6379> set k1 v1

OK

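127.0.0.1:6379> get k1 # in cluster mode keys are stored by hash slot, so a key is not necessarily served by the node you are connected to; here the node answers with a MOVED redirect to 10.200.85.210

(error) MOVED 12706 10.200.85.210:6379

# Query the test data (on 10.200.85.210 the key is found)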

127.0.0.1:6379> KEYS k1

1) "k1"

127.0.0.1:6379> get k1

"v1"
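The slot number in the `MOVED 12706` reply can be reproduced by hand. Redis Cluster maps a key to a slot with CRC16 (the XMODEM variant) of the key, modulo 16384. The sketch below implements that bit by bit. It is an illustration only: it ignores hash tags (`{...}`), and `keyslot` is a hypothetical helper name. On a real cluster, `CLUSTER KEYSLOT <key>` gives the authoritative answer.

```shell
# keyslot KEY: print the Redis Cluster hash slot (0-16383) for KEY.
# CRC16/XMODEM: polynomial 0x1021, initial value 0, no reflection.
# Assumption: hash tags ({...}) are NOT handled. Hypothetical helper.
keyslot() (
  crc=0
  # od prints the key's bytes as unsigned decimal numbers
  for byte in $(printf '%s' "$1" | od -An -v -t u1); do
    crc=$(( $crc ^ ($byte << 8) ))
    j=0
    while [ "$j" -lt 8 ]; do
      if [ $(( $crc & 0x8000 )) -ne 0 ]; then
        crc=$(( (($crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( ($crc << 1) & 0xFFFF ))
      fi
      j=$(( $j + 1 ))
    done
  done
  echo $(( $crc % 16384 ))
)

keyslot k1   # 12706, the slot named in the MOVED reply above
```

Slot 12706 falls in the 10923-16383 range owned by 10.200.85.210 in the `cluster nodes` output. Starting the client with `redis-cli -c` makes it follow such redirects automatically.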

3.5 Case 5: MySQL with one master and multiple slaves, read/write splitting

3.5.1 Create the PVs

3.5.1.1 Write the PV YAML

[root@k8s-harbor01 volume]# cat mysql-persistentvolume.yaml

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-1

namespace: myserver

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /data/k8s-data/myserver/mysql-datadir-1

server: 10.31.200.104

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-2

namespace: myserver

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /data/k8s-data/myserver/mysql-datadir-2

server: 10.31.200.104

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-3

namespace: myserver

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /data/k8s-data/myserver/mysql-datadir-3

server: 10.31.200.104

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-4

namespace: myserver

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /data/k8s-data/myserver/mysql-datadir-4

server: 10.31.200.104

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-5

namespace: myserver

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /data/k8s-data/myserver/mysql-datadir-5

server: 10.31.200.104

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-6

namespace: myserver

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /data/k8s-data/myserver/mysql-datadir-6

server: 10.31.200.104

3.5.1.2 Create the persistent data directories

[root@k8s-harbor01 volume]# mkdir /data/k8s-data/myserver/mysql-datadir-{1..6}

[root@k8s-harbor01 volume]# ls /data/k8s-data/myserver/mysql-datadir-*

/data/k8s-data/myserver/mysql-datadir-1:

/data/k8s-data/myserver/mysql-datadir-2:

/data/k8s-data/myserver/mysql-datadir-3:

/data/k8s-data/myserver/mysql-datadir-4:

/data/k8s-data/myserver/mysql-datadir-5:

/data/k8s-data/myserver/mysql-datadir-6:

3.5.1.3 Create the PVs

[root@k8s-harbor01 volume]# kubectl apply -f mysql-persistentvolume.yaml

persistentvolume/mysql-datadir-1 created

persistentvolume/mysql-datadir-2 created

persistentvolume/mysql-datadir-3 created

persistentvolume/mysql-datadir-4 created

persistentvolume/mysql-datadir-5 created

persistentvolume/mysql-datadir-6 created

[root@k8s-harbor01 volume]# kubectl get pv |grep mysql

mysql-datadir-1 50Gi RWO Retain Available 3m45s

mysql-datadir-2 50Gi RWO Retain Available 3m45s

mysql-datadir-3 50Gi RWO Retain Available 3m45s

mysql-datadir-4 50Gi RWO Retain Available 3m45s

mysql-datadir-5 50Gi RWO Retain Available 3m45s

mysql-datadir-6 50Gi RWO Retain Available 3m45s

3.5.2 Create the ConfigMap

3.5.2.1 Write the YAML

[root@k8s-harbor01 configmap]# cat mysql-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  namespace: myserver
  labels:
    app: mysql
data:
  master.cnf: | # this key is used when the pod is the master
    # Apply this config only on the master.
    [mysqld]
    log-bin
    log_bin_trust_function_creators=1
    lower_case_table_names=1
  slave.cnf: | # this key is used when the pod is a slave
    # Apply this config only on slaves.
    [mysqld]
    super-read-only
    log_bin_trust_function_creators=1

3.5.2.2 Apply the YAML

[root@k8s-harbor01 configmap]# kubectl apply -f mysql-configmap.yaml

configmap/mysql created

[root@k8s-harbor01 configmap]# kubectl get cm -n myserver |grep mysql

mysql 2 10s

3.5.3 Create the Services

3.5.3.1 Write the YAML

[root@k8s-harbor01 yaml]# mkdir svc

[root@k8s-harbor01 yaml]# cd svc

[root@k8s-harbor01 svc]# vi mysql-services.yaml

[root@k8s-harbor01 svc]# cat mysql-services.yaml

apiVersion: v1
kind: Service
metadata:
  namespace: myserver
  name: mysql
  labels:
    app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  clusterIP: None # headless Service
  selector:
    app: mysql
---
# Client service for connecting to any MySQL instance for reads.
# For writes, you must instead connect to the master: mysql-0.mysql.
apiVersion: v1
kind: Service
metadata:
  name: mysql-read # this Service is mainly for application reads
  namespace: myserver
  labels:
    app: mysql
spec:
  ports:
  - name: mysql
    port: 3306
  selector:
    app: mysql

3.5.3.2 Apply the YAML

[root@k8s-harbor01 svc]# kubectl apply -f mysql-services.yaml

service/mysql created

service/mysql-read created

[root@k8s-harbor01 svc]# kubectl get svc -nmyserver |grep mysql

mysql ClusterIP None <none> 3306/TCP 8s

mysql-read ClusterIP 10.100.236.134 <none> 3306/TCP 8s
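These two Services are what make read/write splitting possible. Reads can go to `mysql-read`, which balances across every pod, while writes have to target the master through its stable name `mysql-0.mysql`. An application-side sketch of that routing decision follows. `mysql_host_for` is a hypothetical helper name, and the short host names assume the client runs in the `myserver` namespace.

```shell
# mysql_host_for OPERATION: print the host a client should connect to.
# "write" -> the master pod via the headless Service,
# "read"  -> the load-balanced mysql-read Service.
# Hypothetical helper for illustration.
mysql_host_for() {
  case "$1" in
    write) echo "mysql-0.mysql" ;;
    read)  echo "mysql-read" ;;
    *)     echo "unknown operation: $1" >&2; return 1 ;;
  esac
}

# Possible usage from a pod in the myserver namespace:
#   mysql -h "$(mysql_host_for read)" -e 'SELECT 1'
```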

3.5.4 Create the StatefulSet

3.5.4.1 Write the YAML

[root@k8s-harbor01 sts]# cat mysql-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  namespace: myserver
spec:
  selector:
    matchLabels:
      app: mysql
  serviceName: mysql
  replicas: 2
  template:
    metadata:
      labels:
        app: mysql
    spec:
      initContainers:
      - name: init-mysql # init container 1: decides from the pod name whether this pod is the master or a slave and generates the matching config files
        image: tsk8s.top/tsk8s/mysql:5.7.36
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Generate mysql server-id from pod ordinal index.
          [[ `hostname` =~ -([0-9]+)$ ]] || exit 1 # match the trailing ordinal of the hostname, an integer that increases per pod
          ordinal=${BASH_REMATCH[1]}
          echo [mysqld] > /mnt/conf.d/server-id.cnf
          # Add an offset to avoid reserved server-id=0 value.
          echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf
          # Copy appropriate conf.d files from config-map to emptyDir.
          if [[ $ordinal -eq 0 ]]; then # master: copy the master config file
            cp /mnt/config-map/master.cnf /mnt/conf.d/
          else # otherwise copy the slave config file
            cp /mnt/config-map/slave.cnf /mnt/conf.d/
          fi
        volumeMounts:
        - name: conf # temporary volume (emptyDir)
          mountPath: /mnt/conf.d
        - name: config-map
          mountPath: /mnt/config-map
      - name: clone-mysql # init container 2: performs the initial full data clone from the previous pod (slave 3 clones from slave 2 rather than every slave cloning from the master); afterwards every slave replicates incrementally from the master
        image: tsk8s.top/tsk8s/xtrabackup:1.0
        command:
        - bash
        - "-c"
        - |
          set -ex
          # Skip the clone if data already exists.
          [[ -d /var/lib/mysql/mysql ]] && exit 0
          # Skip the clone on master (ordinal index 0).
          [[ `hostname` =~ -([0-9]+)$ ]] || exit 1
          ordinal=${BASH_REMATCH[1]}
          [[ $ordinal -eq 0 ]] && exit 0 # ordinal 0 is the master: nothing to clone
          # Clone data from previous peer.
          ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql # stream the full backup from the previous pod; xbstream -x unpacks it
          # Prepare the backup.
          xtrabackup --prepare --target-dir=/var/lib/mysql # make the copied data consistent before mysqld starts
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
      containers:
      - name: mysql # container 1: the MySQL server itself
        image: tsk8s.top/tsk8s/mysql:5.7.36
        env:
        - name: MYSQL_ALLOW_EMPTY_PASSWORD
          value: "1"
        ports:
        - name: mysql
          containerPort: 3306
        volumeMounts:
        - name: data # data directory mounted at /var/lib/mysql
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf # config files mounted at /etc/mysql/conf.d
          mountPath: /etc/mysql/conf.d
        resources: # resource requests
          requests:
            cpu: 500m
            memory: 1Gi
        livenessProbe: # liveness probe
          exec:
            command: ["mysqladmin", "ping"]
          initialDelaySeconds: 30
          periodSeconds: 10
          timeoutSeconds: 5
        readinessProbe: # readiness probe
          exec:
            # Check we can execute queries over TCP (skip-networking is off).
            command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]
          initialDelaySeconds: 5
          periodSeconds: 2
          timeoutSeconds: 1
      - name: xtrabackup # container 2 (xtrabackup sidecar): starts replication from the cloned binlog position and serves backups to the next pod
        image: tsk8s.top/tsk8s/xtrabackup:1.0
        ports:
        - name: xtrabackup
          containerPort: 3307
        command:
        - bash
        - "-c"
        - |
          set -ex
          cd /var/lib/mysql
          # Determine binlog position of cloned data, if any.
          if [[ -f xtrabackup_slave_info ]]; then
            # XtraBackup already generated a partial "CHANGE MASTER TO" query
            # because we're cloning from an existing slave.
            mv xtrabackup_slave_info change_master_to.sql.in
            # Ignore xtrabackup_binlog_info in this case (it's useless).
            rm -f xtrabackup_binlog_info
          elif [[ -f xtrabackup_binlog_info ]]; then
            # We're cloning directly from master. Parse binlog position.
            [[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1
            rm xtrabackup_binlog_info
            # Generate the CHANGE MASTER statement.
            echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\
                  MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in
          fi
          # Check if we need to complete a clone by starting replication.
          if [[ -f change_master_to.sql.in ]]; then
            echo "Waiting for mysqld to be ready (accepting connections)"
            until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done
            echo "Initializing replication from clone position"
            # In case of container restart, attempt this at-most-once.
            mv change_master_to.sql.in change_master_to.sql.orig
            # Run CHANGE MASTER and start the slave threads.
            mysql -h 127.0.0.1 <<EOF
          $(<change_master_to.sql.orig),
            MASTER_HOST='mysql-0.mysql',
            MASTER_USER='root',
            MASTER_PASSWORD='',
            MASTER_CONNECT_RETRY=10;
          START SLAVE;
          EOF
          fi
          # Start a server to send backups when requested by peers.
          # It listens on port 3307 and provides the full data set to the next pod.
          exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \
            "xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
          subPath: mysql
        - name: conf
          mountPath: /etc/mysql/conf.d
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
      volumes:
      - name: conf
        emptyDir: {}
      - name: config-map
        configMap:
          name: mysql
      imagePullSecrets:
      - name: dockerhub-image-pull-key
  volumeClaimTemplates:
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 10Gi
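Everything the two init containers decide is derived from the ordinal at the end of the pod's hostname: the `server-id`, which config file to copy, and which peer to clone from. That logic is restated below as small standalone functions so it can be tried outside a cluster. This is a sketch for illustration, and the function names are hypothetical. The StatefulSet itself uses the inline bash shown in the manifest.

```shell
# pod_ordinal HOSTNAME: print the trailing integer of a StatefulSet pod name,
# or fail if the name does not end in "-<digits>". Hypothetical helper.
pod_ordinal() (
  case "$1" in *-*) ;; *) exit 1 ;; esac
  ord=${1##*-}
  case "$ord" in ''|*[!0-9]*) exit 1 ;; esac
  echo "$ord"
)

# mysql_server_id HOSTNAME: 100 + ordinal, avoiding the reserved value 0.
mysql_server_id() (
  ord=$(pod_ordinal "$1") || exit 1
  echo $(( 100 + $ord ))
)

# mysql_conf_file HOSTNAME: ordinal 0 is the master, every other pod a slave.
mysql_conf_file() (
  ord=$(pod_ordinal "$1") || exit 1
  if [ "$ord" -eq 0 ]; then echo master.cnf; else echo slave.cnf; fi
)

# clone_source HOSTNAME: the peer to clone from (previous ordinal), reached
# through the headless Service "mysql". Fails for the master.
clone_source() (
  ord=$(pod_ordinal "$1") || exit 1
  [ "$ord" -gt 0 ] || exit 1
  echo "${1%-*}-$(( $ord - 1 )).mysql"
)

mysql_server_id mysql-2   # 102
clone_source mysql-2      # mysql-1.mysql
```

Cloning from the previous peer instead of from the master keeps the initial copy load off `mysql-0` as the StatefulSet grows.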

3.5.4.2 Pull the images and push them to Harbor

[root@k8s-harbor01 sts]# docker pull mysql:5.7.36

[root@k8s-harbor01 sts]# docker tag mysql:5.7.36 tsk8s.top/tsk8s/mysql:5.7.36

[root@k8s-harbor01 sts]# docker push tsk8s.top/tsk8s/mysql:5.7.36

[root@k8s-harbor01 sts]# docker pull yizhiyong/xtrabackup

[root@k8s-harbor01 sts]# docker tag yizhiyong/xtrabackup:latest tsk8s.top/tsk8s/xtrabackup:1.0

[root@k8s-harbor01 sts]# docker push tsk8s.top/tsk8s/xtrabackup:1.0

3.5.4.3 Apply the YAML

[root@k8s-harbor01 sts]# kubectl apply -f mysql-statefulset.yaml

[root@k8s-harbor01 sts]# kubectl get po -n myserver |grep mysql

mysql-0 2/2 Running 0 8m49s

mysql-1 2/2 Running 0 7m35s

3.5.4.4 Scale to 3 replicas

[root@k8s-harbor01 ~]# kubectl scale sts -n myserver mysql --replicas=3

statefulset.apps/mysql scaled

[root@k8s-harbor01 ~]# kubectl get po -A|grep mysql

myserver mysql-0 2/2 Running 0 4h55m

myserver mysql-1 2/2 Running 0 4h53m

myserver mysql-2 2/2 Running 0 77s # takes roughly 60s to come up

3.5.4.5 Check replication status

[root@k8s-harbor01 ~]# kubectl exec -it -n myserver mysql-2 -- /bin/bash

root@mysql-2:/# mysql

mysql> show slave status\G

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: mysql-0.mysql

Master_User: root

Master_Port: 3306

Connect_Retry: 10

Master_Log_File: mysql-0-bin.000003

Read_Master_Log_Pos: 154

Relay_Log_File: mysql-2-relay-bin.000002

Relay_Log_Pos: 322

Relay_Master_Log_File: mysql-0-bin.000003

Slave_IO_Running: Yes # replication is healthy when both the IO thread and the SQL thread report Yes

Slave_SQL_Running: Yes

... (remaining output omitted)
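The two thread flags are the part of this output worth automating. The sketch below reads `show slave status\G` output from stdin and succeeds only when both `Slave_IO_Running` and `Slave_SQL_Running` are `Yes`. `replication_ok` is a hypothetical helper name, and replication lag is not checked.

```shell
# replication_ok: read `show slave status\G` output on stdin; succeed only
# if both replication threads report "Yes". Hypothetical helper.
replication_ok() {
  awk '
    $1 == "Slave_IO_Running:"  { io  = $2 }
    $1 == "Slave_SQL_Running:" { sql = $2 }
    END { exit !(io == "Yes" && sql == "Yes") }
  '
}

# Possible usage against a slave pod:
#   kubectl exec -n myserver mysql-2 -c mysql -- mysql -e 'show slave status\G' \
#     | replication_ok && echo "replication healthy"
```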

3.5.5 Test data replication

3.5.5.1 Write data on the master

[root@k8s-harbor01 ~]# kubectl exec -it -n myserver mysql-0 -- /bin/bash

root@mysql-0:/# mysql

mysql> show master status\G

*************************** 1. row ***************************

File: mysql-0-bin.000003

Position: 154

Binlog_Do_DB:

Binlog_Ignore_DB:

Executed_Gtid_Set:

1 row in set (0.00 sec)

mysql> create database tsk8s;

Query OK, 1 row affected (0.05 sec)

mysql> show databases;

+------------------------+
| Database               |
+------------------------+
| information_schema     |
| mysql                  |
| performance_schema     |
| sys                    |
| tsk8s                  |
| xtrabackup_backupfiles |
+------------------------+

6 rows in set (0.07 sec)

3.5.5.2 Query the data on a slave

[root@k8s-harbor01 ~]# kubectl exec -it -n myserver mysql-1 -- /bin/bash

root@mysql-1:/# mysql

mysql> show databases;

+------------------------+
| Database               |
+------------------------+
| information_schema     |
| mysql                  |
| performance_schema     |
| sys                    |
| tsk8s                  |
| xtrabackup_backupfiles |
+------------------------+

6 rows in set (0.01 sec)

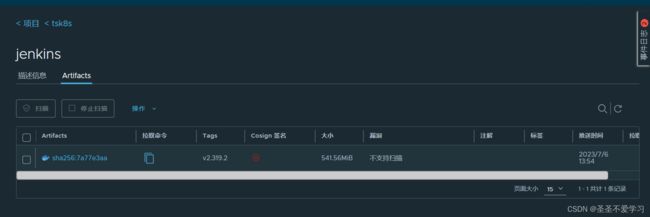

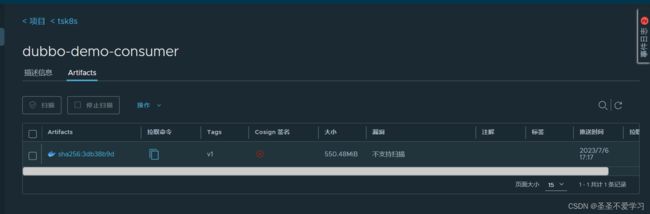
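With more than a couple of replicas, logging in to each pod to run `show databases` gets tedious. The sketch below loops over the pods instead. `has_database` and `check_pods` are hypothetical helpers, `NAMESPACE` and `CONTAINER` are illustrative variables, and the container name `mysql` is an assumption about the StatefulSet.

```shell
#!/bin/bash
NAMESPACE="${NAMESPACE:-myserver}"
CONTAINER="${CONTAINER:-mysql}"   # assumed name of the database container

# has_database <name>
# Reads one database name per line on stdin; succeeds if <name> is listed.
has_database() {
  grep -qxF -- "$1"
}

# check_pods <database> <pod>...
# Reports for every pod whether the database exists; returns 1 if any lacks it.
check_pods() {
  local db="$1" pod rc=0
  shift
  for pod in "$@"; do
    if kubectl exec -n "$NAMESPACE" "$pod" -c "$CONTAINER" -- \
         mysql -N -B -e 'show databases' | has_database "$db"; then
      echo "$pod: $db present"
    else
      echo "$pod: $db MISSING"
      rc=1
    fi
  done
  return "$rc"
}
```

For the test above, `check_pods tsk8s mysql-0 mysql-1 mysql-2` would confirm that the database created on the master has reached both slaves.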

3.5 Case 6: Jenkins

3.5.1 Build the Jenkins image

3.5.1.1 Write the Dockerfile

[root@containerd-build-image jenkins]# cat Dockerfile

# Jenkins version 2.319.2

FROM tsk8s.top/pub-images/jdk-base:v8.212

ADD jenkins-2.319.2.war /apps/jenkins/jenkins.war

ADD run_jenkins.sh /usr/bin/

RUN chmod u+x /usr/bin/run_jenkins.sh

EXPOSE 8080

CMD ["/usr/bin/run_jenkins.sh"]

3.5.1.2 Write the Jenkins startup script

[root@containerd-build-image jenkins]# cat run_jenkins.sh

#!/bin/bash

cd /apps/jenkins && java -server -Xms1024m -Xmx1024m -Xss512k -jar jenkins.war --webroot=/apps/jenkins/jenkins-data --httpPort=8080

3.5.1.3 Prepare the war package

[root@containerd-build-image jenkins]# ll jenkins-2.319.2.war

-rw-r--r-- 1 root root 72248203 Jul 6 13:51 jenkins-2.319.2.war

3.5.1.4 Build the image

# Write the build script

[root@containerd-build-image jenkins]# cat build-image.sh

#!/bin/bash

echo "Image build starting, please wait!" && echo 3 && sleep 1 && echo 2 && sleep 1 && echo 1

nerdctl build -t tsk8s.top/tsk8s/jenkins:v2.319.2 .

if [ $? -eq 0 ];then
    echo "Image push starting, please wait!" && echo 3 && sleep 1 && echo 2 && sleep 1 && echo 1
    nerdctl push tsk8s.top/tsk8s/jenkins:v2.319.2
    if [ $? -eq 0 ];then
        echo "Image pushed successfully!"
    else
        echo "Image push failed"
    fi
else
    echo "Image build failed, please check the build output!"
fi

# Build the image

[root@containerd-build-image jenkins]# sh build-image.sh
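The version 2.319.2 is hard-coded in the Dockerfile, in the build script and later in the Deployment, so it is easy for them to drift apart. One option is to derive the image reference from the war file name. This is only a sketch: `war_to_image` and `IMAGE_REPO` are illustrative names, and it assumes the war file is always named `jenkins-<version>.war`.

```shell
#!/bin/bash
# war_to_image <war-file>
# Prints the image reference for a war file named jenkins-<version>.war,
# e.g. jenkins-2.319.2.war -> tsk8s.top/tsk8s/jenkins:v2.319.2
war_to_image() {
  local version="${1##*/}"        # strip any directory part
  version="${version#jenkins-}"   # strip the "jenkins-" prefix
  version="${version%.war}"       # strip the ".war" suffix
  echo "${IMAGE_REPO:-tsk8s.top/tsk8s/jenkins}:v${version}"
}
```

In the build script this could be used as `IMAGE="$(war_to_image jenkins-2.319.2.war)"` followed by `nerdctl build -t "$IMAGE" .` and `nerdctl push "$IMAGE"`, so that upgrading Jenkins only means swapping the war file.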

3.5.2 Deploy Jenkins to k8s

3.5.2.1 Write the YAML

[root@k8s-harbor01 deployment]# cat jenkins.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: jenkins
  name: jenkins
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      containers:
      - name: jenkins
        image: tsk8s.top/tsk8s/jenkins:v2.319.2
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        volumeMounts:
        - mountPath: "/apps/jenkins/jenkins-data/"
          name: jenkins-datadir
        - mountPath: "/root/.jenkins"
          name: jenkins-root-datadir
      volumes:
      - name: jenkins-datadir
        persistentVolumeClaim:
          claimName: jenkins-datadir-pvc
      - name: jenkins-root-datadir
        persistentVolumeClaim:
          claimName: jenkins-root-data-pvc
      imagePullSecrets:
      - name: dockerhub-image-pull-key

---

kind: Service

apiVersion: v1

metadata:

labels:

app: jenkins

name: jenkins-service

namespace: myserver

spec:

type: NodePort

ports:

- name: http