【Review: configuring Hadoop on a Mac】


Starting Hadoop on a Mac

- Preface

- 1. Check and set up the SSH connection

- 2. Install Hadoop

- 3. Start

Preface

Note: I already learned Hadoop in a course; this is a refresher.

If you have never installed it, I suggest following:

https://blog.csdn.net/han_yankun2009/article/details/78138953

https://blog.csdn.net/chongdutuo9831/article/details/100939395

1. Check and set up the SSH connection

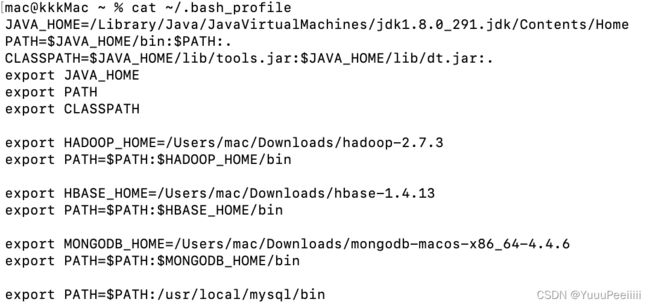

First, check the environment:

cat ~/.bash_profile

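As you can see, hadoop-2.7.3 had in fact been configured before, but running hadoop version showed that Hadoop was not working. On inspection, the profile just needed to be reloaded with source: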

mac@kkkMac ~ % source ~/.bash_profile
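For reference, a minimal sketch of the ~/.bash_profile entries this setup relies on. The install path comes from the hadoop version output below; putting sbin on PATH as well is an assumption, not necessarily what my profile contains.

```shell
# Assumed ~/.bash_profile entries (adjust the path if Hadoop lives elsewhere)
export HADOOP_HOME="$HOME/Downloads/hadoop-2.7.3"
# bin holds hadoop/hdfs/yarn; sbin holds start-dfs.sh, start-yarn.sh, stop-*.sh
export PATH="$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin"
```

The % prompt suggests zsh, the default shell on recent macOS, which reads ~/.zprofile and ~/.zshrc but not ~/.bash_profile. That would explain why hadoop only worked after sourcing the file by hand; adding source ~/.bash_profile to ~/.zshrc is one common fix.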

Then check again:

mac@kkkMac ~ % hadoop version

If you see output like the following, Hadoop is installed correctly:

Hadoop 2.7.3

Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r baa91f7c6bc9cb92be5982de4719c1c8af91ccff

Compiled by root on 2016-08-18T01:41Z

Compiled with protoc 2.5.0

From source with checksum 2e4ce5f957ea4db193bce3734ff29ff4

This command was run using /Users/mac/Downloads/hadoop-2.7.3/share/hadoop/common/hadoop-common-2.7.3.jar

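When I first learned this, I put Hadoop under Downloads to keep things simple. Now I'll create a hadoop folder on the Desktop and move it there.

First, regenerate the SSH key: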

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

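- SSH is a protocol that secures remote login sessions and other network services.

- ssh-keygen generates a key pair; -t sets the key type (rsa is the algorithm, not the private key); -P supplies the passphrase (empty here, so logins won't prompt for one); -f sets the output file; id_rsa is the private-key file.

Since a key had been configured before, the old files are overwritten here. As the output shows, id_rsa stores the private key and id_rsa.pub stores the public key.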

Generating public/private rsa key pair.

/Users/mac/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Your identification has been saved in /Users/mac/.ssh/id_rsa

Your public key has been saved in /Users/mac/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:dQj9p8f2EwHy/e4sXNCJv3e0/BtJcfngiKy2uhPtEsc [email protected]

The key’s randomart image is:

[randomart image: RSA 3072, SHA256]
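Overwriting the key on every run means any machine that trusted the old public key no longer accepts this one. A hedged alternative, sketched with a hypothetical ensure_ssh_key function, runs the same ssh-keygen command only when no key file exists yet.

```shell
# Generate a passphrase-less RSA key only if the key file does not exist yet,
# so an existing key (and every authorized_keys entry matching it) is kept.
ensure_ssh_key() {
  key_file="${1:-$HOME/.ssh/id_rsa}"
  if [ -f "$key_file" ]; then
    echo "keeping existing key: $key_file"
    return 0
  fi
  # ~/.ssh should be private to the user
  mkdir -p "$(dirname "$key_file")"
  chmod 700 "$(dirname "$key_file")"
  ssh-keygen -t rsa -P '' -f "$key_file"
}
```

Usage: ensure_ssh_key (defaults to ~/.ssh/id_rsa).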

Append the generated public key in id_rsa.pub to authorized_keys:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys
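Running the >> command again appends the same key a second time. A minimal sketch of an idempotent version (add_authorized_key is a hypothetical name) that also sets the file to mode 600, since sshd may refuse keys from a file that others can write to:

```shell
# Append a public key to authorized_keys only if that exact line is not already present.
add_authorized_key() {
  pub_file="$1"    # e.g. ~/.ssh/id_rsa.pub
  auth_file="$2"   # e.g. ~/.ssh/authorized_keys
  key=$(cat "$pub_file") || return 1
  touch "$auth_file"
  chmod 600 "$auth_file"
  # -x: whole-line match, -F: fixed string (the key is not a regex)
  if ! grep -qxF -- "$key" "$auth_file"; then
    printf '%s\n' "$key" >> "$auth_file"
  fi
}
```

Usage: add_authorized_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys. To test the login without an interactive prompt, ssh -o BatchMode=yes localhost true exits non-zero instead of asking for a password when key authentication fails. On macOS, ssh localhost also needs Remote Login enabled in the Sharing settings.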

Finally, verify with ssh localhost (or your hostname). If it logs in without errors and without asking for a password, the setup works.

mac@kkkMac ~ % ssh localhost

Last login: Fri Apr 1 19:10:25 2022

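2. Install Hadoop

If you don't have it yet, download version 2.7.3.

A note to myself: because it felt like a hassle during the course, I put the installation under Downloads. I should move it later.

Take a look at the configuration of these files: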
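When the move happens, both the directory and the paths inside the *-site.xml files have to change. A hedged sketch with a hypothetical relocate_hadoop helper: the install lives at /Users/mac/Downloads/hadoop-2.7.3, but the config files spell it /users/mac/downloads/..., which works only because the macOS filesystem is case-insensitive by default, so the optional third argument takes the prefix exactly as written in the files. Stop Hadoop before moving it.

```shell
relocate_hadoop() {
  old_dir="$1"                 # current install dir
  new_dir="$2"                 # target install dir
  old_prefix="${3:-$old_dir}"  # old path exactly as written in the *-site.xml files
  [ -d "$old_dir" ] || { echo "not found: $old_dir" >&2; return 1; }
  [ -e "$new_dir" ] && { echo "already exists: $new_dir" >&2; return 1; }
  mkdir -p "$(dirname "$new_dir")" || return 1
  mv "$old_dir" "$new_dir" || return 1
  # Rewrite the old prefix in the config files; sed + temp file avoids the
  # BSD (macOS) vs GNU difference in `sed -i` syntax. The prefix is used as a
  # regex, so its dots match any character, which is harmless for these paths.
  for f in "$new_dir"/etc/hadoop/*-site.xml; do
    [ -f "$f" ] || continue
    sed "s|$old_prefix|$new_dir|g" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
  done
  # Print the line to put into ~/.bash_profile
  echo "export HADOOP_HOME=\"$new_dir\""
}
```

Example: relocate_hadoop /Users/mac/Downloads/hadoop-2.7.3 /Users/mac/Desktop/hadoop/hadoop-2.7.3 /users/mac/downloads/hadoop-2.7.3, then replace HADOOP_HOME in ~/.bash_profile with the printed line.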

cd $HADOOP_HOME/etc/hadoop

core-site.xml

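If you changed the path earlier, change it here too.

This file holds core settings used by all Hadoop components, such as the default filesystem URI (including its port), the base temporary directory, and the read/write buffer size.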

- hadoop.tmp.dir: file:/users/mac/downloads/hadoop-2.7.3/tmp (description: A base for other temporary directories.)

- fs.defaultFS: hdfs://localhost:9000

hdfs-site.xml

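It holds settings such as the replication factor, the NameNode directory, and the DataNode directory on the local filesystem.

All property values are user-defined and can be changed to fit your own Hadoop setup.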

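- dfs.replication: 1

- dfs.namenode.name.dir: file:/users/mac/downloads/hadoop-2.7.3/tmp/dfs/name

- dfs.datanode.data.dir: file:/users/mac/downloads/hadoop-2.7.3/tmp/dfs/data

- dfs.block.size: 512000000 (block size in bytes, about 488 MiB; in Hadoop 2.x dfs.block.size is the deprecated name of dfs.blocksize)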
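The properties above go inside Hadoop's standard configuration/property XML layout. A minimal sketch that regenerates core-site.xml and hdfs-site.xml from those values; write_site and write_hdfs_configs are hypothetical helper names, and the install path is a parameter so it can follow a later move. It keeps the author's dfs.block.size name.

```shell
# write_site FILE NAME VALUE [NAME VALUE ...] writes one <property> per pair.
# Values are inserted verbatim (no XML escaping), which is fine for these paths and numbers.
write_site() {
  out="$1"; shift
  {
    printf '<?xml version="1.0" encoding="UTF-8"?>\n<configuration>\n'
    while [ "$#" -ge 2 ]; do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    printf '</configuration>\n'
  } > "$out"
}

# $1 = config dir (normally $HADOOP_HOME/etc/hadoop), $2 = install dir used in the paths
write_hdfs_configs() {
  conf="$1"; base="$2"
  write_site "$conf/core-site.xml" \
    hadoop.tmp.dir "file:$base/tmp" \
    fs.defaultFS "hdfs://localhost:9000"
  write_site "$conf/hdfs-site.xml" \
    dfs.replication 1 \
    dfs.namenode.name.dir "file:$base/tmp/dfs/name" \
    dfs.datanode.data.dir "file:$base/tmp/dfs/data" \
    dfs.block.size 512000000
}
```

This overwrites both files, so back them up first; for the current setup the call would be write_hdfs_configs "$HADOOP_HOME/etc/hadoop" /users/mac/downloads/hadoop-2.7.3.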

yarn-site.xml

Job scheduling and resource management.

- yarn.nodemanager.aux-services: mapreduce_shuffle

- yarn.scheduler.minimum-allocation-mb: 2048

- yarn.scheduler.maximum-allocation-mb: 8192

mapred-site.xml

- mapreduce.framework.name: yarn
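A similar sketch for the YARN side, using heredocs (write_yarn_configs is a hypothetical name). Hadoop 2.x ships etc/hadoop/mapred-site.xml.template rather than mapred-site.xml, so that file may need to be created in the first place; writing it fresh is one way to do that.

```shell
# $1 = config dir, normally $HADOOP_HOME/etc/hadoop; overwrites both files
write_yarn_configs() {
  conf="$1"
  cat > "$conf/yarn-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>2048</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>8192</value>
  </property>
</configuration>
EOF
  cat > "$conf/mapred-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}
```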

3. Start

1. NameNode setup

Go back to the home directory:

cd ~

Then format the NameNode (needed only the first time; re-formatting wipes the existing HDFS metadata):

hdfs namenode -format
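Formatting assigns the NameNode a fresh clusterID, while a DataNode directory left over from an earlier format keeps the old one, and the DataNode then fails to start. A hedged sketch (check_cluster_id is a hypothetical name) that compares the clusterID lines in the two current/VERSION files; pass the dfs.namenode.name.dir and dfs.datanode.data.dir paths without the file: prefix.

```shell
check_cluster_id() {
  name_dir="$1"
  data_dir="$2"
  # VERSION files contain a line like clusterID=CID-...
  nn=$(grep '^clusterID=' "$name_dir/current/VERSION" 2>/dev/null | cut -d= -f2)
  dn=$(grep '^clusterID=' "$data_dir/current/VERSION" 2>/dev/null | cut -d= -f2)
  if [ -z "$dn" ]; then
    echo "no DataNode VERSION yet (fresh data dir)"
  elif [ "$nn" = "$dn" ]; then
    echo "clusterID match: $nn"
  else
    echo "clusterID MISMATCH: namenode=$nn datanode=$dn"
    return 1
  fi
}
```

For this setup: check_cluster_id /users/mac/downloads/hadoop-2.7.3/tmp/dfs/name /users/mac/downloads/hadoop-2.7.3/tmp/dfs/data. The "no datanode to stop" line in the stop output below would be consistent with a DataNode that never started, though the DataNode log under $HADOOP_HOME/logs is the place to confirm the cause. On a throwaway single-node setup, a common remedy is to stop HDFS and empty the data directory before starting again, which deletes any data stored in HDFS.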

Then start HDFS:

cd $HADOOP_HOME/sbin

Note: unless $HADOOP_HOME/sbin is on your PATH, cd into that directory first (or call the script by its full path) before running the command below. The sbin directory holds many other scripts as well.

start-dfs.sh

If it started successfully, you can access the NameNode web UI at:

http://localhost:50070/

Likewise, start YARN:

start-yarn.sh

and then access the ResourceManager web UI at http://localhost:8088/
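Besides the web pages, jps (shipped with the JDK) lists the running Java daemons. A small sketch with a hypothetical missing_daemons function that reads jps output on stdin and prints which of the five daemons of a pseudo-distributed setup are absent:

```shell
missing_daemons() {
  out=$(cat)
  missing=""
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClass>"; the leading space keeps NameNode from
    # matching SecondaryNameNode
    if ! printf '%s\n' "$out" | grep -q " $d\$"; then
      missing="$missing $d"
    fi
  done
  echo "${missing# }"
}
```

Usage: jps | missing_daemons prints an empty line when everything is up.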

You can also use 'stop-all.sh' to stop everything:

mac@kkkMac sbin % stop-all.sh

This script is Deprecated. Instead use stop-dfs.sh and stop-yarn.sh

22/04/01 21:45:53 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Stopping namenodes on [localhost]

localhost: stopping namenode

localhost: no datanode to stop

Stopping secondary namenodes [0.0.0.0]

0.0.0.0: stopping secondarynamenode

22/04/01 21:46:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

stopping yarn daemons

stopping resourcemanager

localhost: stopping nodemanager

no proxyserver to stop
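As the deprecation message says, stop-dfs.sh and stop-yarn.sh replace stop-all.sh. A minimal sketch of start/stop wrappers (start_hadoop and stop_hadoop are hypothetical names, and HADOOP_HOME is assumed to be set) that stop in the reverse of the start order:

```shell
start_hadoop() {
  sbin="${HADOOP_HOME:?HADOOP_HOME is not set}/sbin"
  # HDFS first, then YARN
  "$sbin/start-dfs.sh" && "$sbin/start-yarn.sh"
}

stop_hadoop() {
  sbin="${HADOOP_HOME:?HADOOP_HOME is not set}/sbin"
  # YARN first, then HDFS
  "$sbin/stop-yarn.sh" && "$sbin/stop-dfs.sh"
}
```

Calling the scripts by full path also removes the need to cd into sbin first.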

Then disconnect the SSH session:

mac@kkkMac hadoop % logout

Saving session...

...copying shared history...

...saving history...truncating history files...

...completed.