Kafka基础——消费者和生产者实现

1. 准备

首先创建一个名为kafka-basis的springboot项目,添加kafka的依赖

<dependency>

<groupId>org.springframework.bootgroupId>

<artifactId>spring-boot-starterartifactId>

dependency>

<dependency>

<groupId>org.springframework.bootgroupId>

<artifactId>spring-boot-starter-testartifactId>

<scope>testscope>

dependency>

<dependency>

<groupId>org.springframework.bootgroupId>

<artifactId>spring-boot-starter-webartifactId>

dependency>

<dependency>

<groupId>org.springframework.kafkagroupId>

<artifactId>spring-kafkaartifactId>

dependency>

<dependency>

<groupId>org.projectlombokgroupId>

<artifactId>lombokartifactId>

dependency>

2. 消息发送(生产者)

发送流程

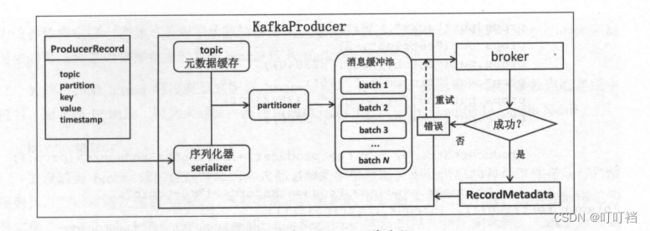

Kafka producer就是负责向Kafka写入数据的应用程序,在Kafka多种使用场景中,producer都是必要的组件。

- producer首先需要确认到底要向topic的哪个分区写入消息,Kafka producer提供了一个默认的分区器。对于每条待发迭的消息,如果该消息指定了key,那么该partitioner会根据 key的哈希值来选择目标分区;若这条消息没有指定key,则partitioner使用轮询的方式确认目标分区一一这样可以最大限度地确保消息在所有分区上的均匀性。producer 也允许用户实现自定义的分区策略而非使用默认的partitioner,这样可以很灵活地根据自身的业务需求确定不同的分区策略。

- 在确认了目标分区后,producer要做的第一件事情就是要寻找这个分区对应的leader,也就是该分区 leader副本所在的Kafka broker。

- leader响应producer发送过来的请求,而剩下的副本中有一部分副本会与leader副本保持同步即所谓的ISR。

- producer可以选择不等待任何副本的响应便返回成功,或者只是等待leader副本响应写入操作之后再返回成功等来实现消息发送。

配置

server:

port: 8080

spring:

kafka:

bootstrap-servers: xxx:9092

# 生产者

producer:

acks: 1

retries: 0

batch-size: 16384

buffer-memory: 33554432

key-serializer: org.apache.kafka.common.serialization.StringSerializer

value-serializer: org.apache.kafka.common.serialization.StringSerializer

properties:

linger:

ms: 0

mq:

kafka:

topic: topic

partition: 3

replica: 1

其中常用的配置如下:

- bootstrap-servers:Kafka服务集群或单机配置

- acks:可选0、1、all/-1。0 :表示producer完全不理睬leader broker端的处理结果;all或者-1::表示当发送消息时,leader broker不仅会将消息写入本地日志,同时还会等待 ISR 中所有其他副本都成功写入它们各自的本地日志后,才发送响应结果给producer;1:默认值,表示producer发送消息后,leader broker仅将该消息写入本地日志,然后便发送响应结果producer,而无须等待 ISR中其他副本写入该消息。

- retries:消息发送失败,producer重试发送次数。

- batch-size:配置batch的大小,producer会将发往同一分区的多条消息封装进batch中,当batch满了或者延迟时间超时就会

发送改batch。 - linger.ms:消息的延迟发送的时间,该参数默认值是0,表示消息需要被立即发送,无须关心batch是否己被填满。

- buffer-memory:指定了producer端用于缓存消息的缓冲区大小,单位是字节,默认值是 33554432。

- key-serializer/value-serializer:Kafka提供的序列化和反序列化类

初始化

创建Topic并设置分区数和副本数

@Configuration

public class KafkaInitialConfiguration {

//名称

@Value("${mq.kafka.topic}")

private String topic;

//分区数

@Value("${mq.kafka.partition}")

private Integer partition;

//副本数

@Value("${mq.kafka.replica}")

private short replica;

@Bean

public NewTopic initialTopic() {

return new NewTopic(topic, partition, replica);

}

}

消息发送

KafkaTemplate调用send时默认采用异步发送,源码如下:

protected ListenableFuture<SendResult<K, V>> doSend(final ProducerRecord<K, V> producerRecord) {

final Producer<K, V> producer = getTheProducer(producerRecord.topic());

this.logger.trace(() -> "Sending: " + KafkaUtils.format(producerRecord));

final SettableListenableFuture<SendResult<K, V>> future = new SettableListenableFuture<>();

Object sample = null;

if (this.micrometerEnabled && this.micrometerHolder == null) {

this.micrometerHolder = obtainMicrometerHolder();

}

if (this.micrometerHolder != null) {

sample = this.micrometerHolder.start();

}

Future<RecordMetadata> sendFuture =

producer.send(producerRecord, buildCallback(producerRecord, producer, future, sample));

// May be an immediate failure

if (sendFuture.isDone()) {

try {

sendFuture.get();

}

catch (InterruptedException e) {

Thread.currentThread().interrupt();

throw new KafkaException("Interrupted", e);

}

catch (ExecutionException e) {

throw new KafkaException("Send failed", e.getCause()); // NOSONAR, stack trace

}

}

if (this.autoFlush) {

flush();

}

this.logger.trace(() -> "Sent: " + KafkaUtils.format(producerRecord));

return future;

}

private Callback buildCallback(final ProducerRecord<K, V> producerRecord, final Producer<K, V> producer,

final SettableListenableFuture<SendResult<K, V>> future, @Nullable Object sample) {

return (metadata, exception) -> {

try {

if (exception == null) {

if (sample != null) {

this.micrometerHolder.success(sample);

}

future.set(new SendResult<>(producerRecord, metadata));

if (KafkaTemplate.this.producerListener != null) {

KafkaTemplate.this.producerListener.onSuccess(producerRecord, metadata);

}

KafkaTemplate.this.logger.trace(() -> "Sent ok: " + KafkaUtils.format(producerRecord)

+ ", metadata: " + metadata);

}

else {

if (sample != null) {

this.micrometerHolder.failure(sample, exception.getClass().getSimpleName());

}

future.setException(new KafkaProducerException(producerRecord, "Failed to send", exception));

if (KafkaTemplate.this.producerListener != null) {

KafkaTemplate.this.producerListener.onError(producerRecord, metadata, exception);

}

KafkaTemplate.this.logger.debug(exception, () -> "Failed to send: "

+ KafkaUtils.format(producerRecord));

}

}

finally {

if (!KafkaTemplate.this.transactional) {

closeProducer(producer, false);

}

}

};

}

send方法会返回一个回调方法,如果我们不调用get方法,当消息发送成功时候,发送结果会被回调给注册过的listener进行通知,默认实现是LoggingProducerListener,我们也可以自定义实现。我们实现一个简单的发送示例:

@RestController

public class KafkaProducerController {

private final static Logger logger = LoggerFactory.getLogger(KafkaProducerController.class);

@Resource

private KafkaTemplate<String, Object> kafkaTemplate;

/**

* 默认异步发送,带有回调方法

*

* @param message

*/

@PostMapping("/sendMessage")

public void sendMessage(String message) {

kafkaTemplate.send("topic", message).addCallback(success -> logger.info("发送成功----内容:" + success.getProducerRecord().value() + ",topic:"

+ success.getRecordMetadata().topic() + ",partition:" + success.getRecordMetadata().partition()),

fail -> logger.info("发送失败----" + fail.getMessage()));

}

/**

* 同步发送:get方法会一直阻塞,直到结果返回

*

* @param message

* @throws Exception

*/

@PostMapping("/sendSyncMessage")

public void sendSyncMessage(String message) throws Exception {

ListenableFuture<SendResult<String, Object>> future = kafkaTemplate.send("topic", message);

//获取发送结果,会一直等待,指导达到设置的超时时间

SendResult<String, Object> result = future.get(3, TimeUnit.SECONDS);

logger.info("发送成功----内容:" + result.getProducerRecord().value() + ",topic:"

+ result.getRecordMetadata().topic() + ",partition:" + result.getRecordMetadata().partition());

}

}

自定义生产者Listener

public class KafkaProducerListener<K, V> implements ProducerListener<K, V> {

private final static Logger logger = LoggerFactory.getLogger(KafkaProducerListener.class);

/**

* 成功处理

*

* @param producerRecord

* @param recordMetadata

*/

@Override

public void onSuccess(ProducerRecord<K, V> producerRecord, RecordMetadata recordMetadata) {

logger.info(producerRecord.value() + ",发送成功");

}

/**

* 失败处理

*

* @param producerRecord

* @param recordMetadata

* @param exception

*/

@Override

public void onError(ProducerRecord<K, V> producerRecord, RecordMetadata recordMetadata, Exception exception) {

logger.error(producerRecord.value() + ",发送失败,异常为:" + exception.getMessage());

}

}

@Configuration

public class KafkaConfiguration {

/**

* 配置producerListener

*

* @return

*/

@Bean

public ProducerListener<Object, Object> producerListener(){

return new KafkaProducerListener<>();

}

}

消息分区机制

- 给定分区号,将消息发送到指定的topic的哪个分区中;

- 没有给定分区号,给定数据的key值,producer使用默认分区策略以及对应的分区器(partitioner)。默认的partitioner会将通过key值取hashCode进行分区;

- 既没有给定分区号,也没有给定key值,则partitioner会选择轮询的方式来确保消息在topic的所有分区上均匀分配。

- 自定实现分区策略

自定义分区示例如下:

spring:

kafka:

bootstrap-servers: xxx:9092

# 生产者

producer:

acks: 1

retries: 0

batch-size: 16384

buffer-memory: 33554432

key-serializer: org.apache.kafka.common.serialization.StringSerializer

value-serializer: org.apache.kafka.common.serialization.StringSerializer

properties:

linger:

ms: 0

#自定义分区器

partitioner:

class: com.fengfan.kafkbasis.config.MyKafkaPartitioner

public class MyKafkaPartitioner implements Partitioner {

@Override

public int partition(String s, Object o, byte[] bytes, Object o1, byte[] bytes1, Cluster cluster) {

//全部发送到1这个分区

return 1;

}

@Override

public void close() {

}

@Override

public void configure(Map<String, ?> map) {

}

}

序列化

Kafka默认提供了十几种序列化器,其中常用的serializer如下,反序列化也相似:

- ByteArraySerializer:本质上什么都不用做,因为己经是字节数组了

- ByteBufferSerializer:序列化ByteBuffer

- BytesSerializer:序列化Kafka自定义的Bytes

- DoubleSerializer:序列化Double类型

- IntegerSerializer:序列化Integer类型

- LongSerializer:序列化Long类型

- StringSerializer:序列化String类型

自定义序列化

spring:

kafka:

bootstrap-servers: xx:9092

# 生产者

producer:

acks: 1

retries: 0

batch-size: 16384

buffer-memory: 33554432

key-serializer: org.apache.kafka.common.serialization.StringSerializer

# value-serializer: org.apache.kafka.common.serialization.StringSerializer

value-serializer: com.fengfan.kafkbasis.config.MySerializer

properties:

linger:

ms: 0

#自定义分区器

partitioner:

class: com.fengfan.kafkbasis.config.MyKafkaPartitioner

/**

* 自定义序列化发送

*

* @param message

*/

@PostMapping("/sendSerializerMessage")

public void sendSerializercMessage(String message) {

KafkaMessageEntity<String> kafkaMessageEntity = new KafkaMessageEntity<>();

kafkaMessageEntity.setData(message);

kafkaMessageEntity.setId(UUID.randomUUID().toString());

kafkaTemplate.send("topic", kafkaMessageEntity);

}

![]()

3. 消息消费(消费者)

概念

Kafka消费者(consumer)是从Kafka读取数据的应用,若干个consumer订阅Kafka集群中的若干个topic并从Kafka接收属于这些topic的消息。

消费者使用一个消费者组名(即group.id)来标记自己,topic的每条消息都只会被发送到每个订阅它的消费者组的一个消费者实例上。其中含义如下:

- 一个consumer group可能有若干个consumer实例(一个group只有一个实例也是允许的)

- 对于同一个 group而言,topic的每条消息只能被发送到group下的一个consumer实例上

- topic消息可以被发送到多个group中

Kafka是通过consumer group实现的对队列和发布/订阅模式的支持:

- 所有 consumer 实例都属于相同 group一一实现基于队列的模型。每条消息只会被一个 consumer 实例处理

- consumer 实例都属于不同 group一一实现基于发布/订阅的模型。极端的情况是每个 consumer 实例都设置完全不同的 group,这样 Kafka 消息就会被广播到所有 consumer 实例上

配置

spring:

kafka:

bootstrap-servers: 192.168.159.135:9092

consumer:

group-id: defaul_group

enable-auto-commit: true

auto-commit-interval: 100

auto-offset-reset: latest

key-deserializer: org.apache.kafka.common.serialization.StringDeserializer

value-deserializer: org.apache.kafka.common.serialization.StringDeserializer

- group-id:消费组ID

- enable-auto-commit:是否自动提交offset

- auto-commit-interval:提交offset延时(接收到消息后多久提交offset)

- auto-offset-reset:earliest:当各分区下有已提交的offset时,从提交的offset开始消费;无提交的offset时,从头开始消费;latest:当各分区下有已提交的offset时,从提交的offset开始消费;无提交的offset时,消费新产生的该分区下的数据;none:topic各分区都存在已提交的offset时,从offset后开始消费;只要有一个分区不存在已提交的offset,则抛出异常;

- key-deserializer/value-deserializer:反序列化

消息消费

@KafkaListener(topics = "topic")

public void onMessage(ConsumerRecord<?, ?> record){

System.out.println("消费者消费,record:"+record.topic()+"-"+record.partition()+"-"+record.value());

}

客户端 consumer 接收消息特别简单,直接用 @KafkaListener 注解即可,并在监听中设置监听的 topic。属性如下:

- id:消费者ID;

- groupId:消费组ID;

- topics:监听的topic,可监听多个;

- topicPartitions:可配置更加详细的监听信息,可指定topic、parition、offset监听。

位移管理

- consumer 端需要为每个它要读取的分区保存消费进度,即分区中当前最新消费消息的位置

该位置就被称为位移(offset),consumer 需要定期地向 Kafka 提交自己的位置信息。 - consumer 会在 Kafka 集群的所有 broker 中选择一个 broker 作为 consumer group的 coordinator,用于实现组成员管理、消费分配方案制定以及提交位移等 为每个组选择对应 coordinator 的依据就是, Kafka内部的一个 topic (_consumer offsets)和普通的 Kafka topic 相同,该 topic 配置有多个分区,每个分区有多个副本。它存在的唯一目的就是保存 consumer提交的位移。

- 提交位移的主要机制是通过向所属的 coordinator 发送位移提交请求来实现的每个位移提交请求来实现的。每个位移都会往_consumer_offsets 对应分区上追加写入一条消息。 消息的 key 是 group.id、topic 和分区的元组,而 value 就是位移值。如果 consumer 为同一个 group 的同一个 topic 分区提交了多次位移,那么_consumer_offsets 对应的分区上就会有若干条 key 相同但value 不同的消息。

重平衡(rebalance)

consumer group rebalance 本质上是一组协议,它规定了一个 consumer group 是如何达成一致来分配订阅 topic 的所有分区的。假设某个组下有 20 consumer 实例,该组订阅了有着 100 个分区的 topic 正常情况下, Kafka 会为每个 consumer 平均分配 个分区。这个分配过程就被称为 rebalance,。对于每个组而言, Kafka 的某个broker 会被选举为组协调者( group coordinator) o。coordinator 负责对组的状态进行管理,它的主要职责就是当新成员到达时促成组内所有成员达成新的分区分配方案,即coordinator 负责对组执行 rebalance 操作。

触发条件

rebalance 触发的条件有以下3个:

- 组成员发生变更,比如新 consumer 加入组,或己有 consumer 主动离开组,或是己有consumer 崩溃时则触发 rebalance。

- 组订阅 topic 数发生变更,比如使用基于正则表达式的订阅,当匹配正则表达式的新topic 被创建时则会触发 rebalance。

- 组订阅 topic 的分区数发生变更,比如使用命令行脚本增加了订阅 topic 的分区数。

rebalance 流程

consumer group 在执行 rebalance 之前必须首先确定 coordinator 所在的 broker ,并创建与该

broker 相互通信的 Socket 连接。确定 coordinator 的算法与确定 offset 被提交到 _consumer_offsets 目标分区的算法是相同的。

- 计算 Math.abs(groupID.hashCode) % offsets. topic.num. partitions 参数值(默认是 50) ,假设是 10

- 寻找_consumer_offsets 分区 10 的 leader 副本所在的 broker ,该 broker 即为这个 group 的coordinator

- 加入组: 这一步中组内所有 consumer(即 group.id 相同的所有 consumer 实例)向 coordinator 发送 JoinGroup 请求。当收集全 JoinGroup 请求后, coordinator 从中选择一个 consumer 担任 group leader ,并把所有成员信息以及它们的订阅信息发送给 leader。特别需要注意的是, group 的 leader 和coordinator 不是一个概念。leader 是某个 consumer 例, coordinator 通常是 Kafka 集群中的 broker 。另外 leader 为整个 group 的所有成员制定分配方案而非 coordinator

- 同步更新分配方案:这一步中 leader 开始制定分配方案,根据分配策略决定每个 consumer 都负责哪些 topic 的哪些分区。一旦分配完成, leader 会把这个分配方案封装进 SyncGroup 请求并发送给 coordinator。比较有意思的是,组内所有成员都会发送 SyncGroup 请求,不过只有 leader 发送的 SyncGroup 请求中包含了分配方案 coordinator 接收到分配方案后把属于每个 consumer 的方案单独抽取出来作为SyncGroup 请求的 response 返还给各自的 consumer