A First Look at Flink CDC

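I. Introduction to CDC

1.1 What is CDC?

CDC stands for Change Data Capture. The core idea is to monitor and capture changes in a database (inserts, updates, and deletes of data or tables), record those changes completely and in the order they occur, and write them to a message broker so that other services can subscribe to and consume them.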

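1.2 Types of CDC

1.3 Differences between Canal, Maxwell, Debezium, and Flink CDC

1.4 What is Flink CDC?

Flink CDC is a data integration framework built on database-log-based CDC that reads full (snapshot) and incremental data in one unified flow. Combined with Flink's strong pipeline capabilities and its rich upstream and downstream ecosystem, Flink CDC can integrate massive volumes of data in real time efficiently. As a new-generation real-time data integration framework, its technical advantages include unified full and incremental reading, lock-free reading, parallel reading, automatic synchronization of schema changes, and a distributed architecture.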

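Flink CDC use cases

1. Data synchronization: backup and disaster recovery.

2. Data distribution: one data source fanned out to multiple downstream systems.

3. Data collection: ETL data integration for data warehouses and data lakes, where CDC is an important data source.

Traditional real-time data capture vs. real-time data capture with Flink CDC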

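Advantages of Flink CDC:

1. Flink's operators and SQL module are mature and easy to use.

2. A Flink job scales by adjusting operator parallelism, so processing capacity is easy to extend.

3. Flink supports advanced state backends, which allow access to large amounts of state.

4. Flink offers more sources and sinks.

5. Flink has a larger user base and an active community, so problems are easier to resolve.

6. Flink's open-source license lets cloud vendors offer fully managed, deeply customized services, whereas Kafka Streams can only be deployed and operated by the user.

7. The Flink Table/SQL module unifies database tables and change streams (such as a CDC stream) as two sides of the same thing. The result is an upsert-style message structure: +I marks an insert, -U the value of a record before an update, +U the value after the update, and -D a delete.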
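The four row kinds in item 7 can be made concrete with a toy replay. The sketch below is plain Java with no Flink dependency; the class `ChangelogReplay`, its `Row` type, and the sample names are all made up for illustration. It only shows how a stream of +I/-U/+U/-D rows materializes into a table keyed by id.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy changelog replay: applies +I/-U/+U/-D rows to an in-memory table keyed by id. */
final class ChangelogReplay {

    /** One changelog row; the kind strings follow the notation used in the text. */
    static final class Row {
        final String kind;
        final int id;
        final String name;

        Row(String kind, int id, String name) {
            this.kind = kind;
            this.id = id;
            this.name = name;
        }
    }

    /** Replays the rows in order and returns the resulting table. */
    static Map<Integer, String> apply(List<Row> changelog) {
        Map<Integer, String> table = new LinkedHashMap<>();
        for (Row row : changelog) {
            switch (row.kind) {
                case "+I": // insert
                case "+U": // value of a row after an update
                    table.put(row.id, row.name);
                    break;
                case "-U": // value of a row before an update: retract it
                case "-D": // delete
                    table.remove(row.id);
                    break;
                default:
                    throw new IllegalArgumentException("Unknown row kind: " + row.kind);
            }
        }
        return table;
    }

    public static void main(String[] args) {
        List<Row> changelog = new ArrayList<>();
        changelog.add(new Row("+I", 1, "Tom"));
        changelog.add(new Row("+I", 2, "Jim"));
        changelog.add(new Row("-U", 2, "Jim"));
        changelog.add(new Row("+U", 2, "Jack"));
        changelog.add(new Row("-D", 1, "Tom"));
        System.out.println(apply(changelog)); // prints {2=Jack}
    }
}
```

An update always arrives as a -U/+U pair, which is why the table and the stream carry the same information.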

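Two ways to implement Flink CDC

1. The DataStream approach (FlinkDataStream_CDC):

Use the connector that ships with Flink CDC, such as MySqlSource, configured with hostname, port, username, password, database, table, deserializer, startupOptions, and similar parameters,

to capture the change log of CRUD operations.

2. The Flink SQL approach (FlinkSQL_CDC):

Create a virtual table in Flink SQL over the fields of interest and configure hostname, port, username, password, database, table, and similar parameters to observe how the data in a specific table changes.

Note: FlinkSQL_CDC 2.0 supports only Flink 1.13 and later.

Comparison of the two approaches:

1. FlinkDataStream_CDC can work across multiple databases and tables (advantage).

2. FlinkDataStream_CDC requires a custom deserializer (drawback).

3. FlinkSQL_CDC works on a single table only (drawback).

4. FlinkSQL_CDC deserializes automatically (advantage).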

1.5 Testing Flink CDC with the DataStream API

1.5.1 Add the dependencies

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>org.example</groupId>
    <artifactId>Flink-CDC</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>8</maven.compiler.source>
        <maven.compiler.target>8</maven.compiler.target>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-java</artifactId>
            <version>1.13.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-java_2.12</artifactId>
            <version>1.13.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-clients_2.12</artifactId>
            <version>1.13.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.1.3</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.47</version>
        </dependency>
        <dependency>
            <groupId>com.ververica</groupId>
            <artifactId>flink-connector-mysql-cdc</artifactId>
            <version>2.0.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-planner-blink_2.12</artifactId>
            <version>1.13.0</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.28</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-assembly-plugin</artifactId>
                <version>3.0.0</version>
                <configuration>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>

1.5.2 Create the Flink CDC test main class

package com.lzl;

import com.ververica.cdc.connectors.mysql.MySqlSource;
import com.ververica.cdc.connectors.mysql.table.StartupOptions;
import com.ververica.cdc.debezium.DebeziumSourceFunction;
import com.ververica.cdc.debezium.StringDebeziumDeserializationSchema;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

/**
 * @author lzl
 * @create 2023-05-04 11:21
 * @name FlinkCDC
 */
public class FlinkCDC {
    public static void main(String[] args) throws Exception {
        // 1. Get the Flink execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 2. Build the SourceFunction with Flink CDC
        DebeziumSourceFunction<String> sourceFunction = MySqlSource.<String>builder()
                .hostname("ch01")
                .port(3306)
                .username("root")
                .password("xxb@5196")
                .databaseList("cdc_test")
                .tableList("cdc_test.user_info") // the table name must be prefixed with the database name
                .deserializer(new StringDebeziumDeserializationSchema()) // stock deserializer from the official docs; a custom one is used later
                .startupOptions(StartupOptions.initial())
                .build();

        // 3. Read from MySQL through the CDC source
        DataStreamSource<String> dataStreamSource = env.addSource(sourceFunction);

        // 4. Print the records
        dataStreamSource.print();

        // 5. Start the job
        env.execute("FlinkCDC");
    }
}

1.5.3 Enable the binlog, set the monitored database and the binlog format, and create the database

[root@ch01 ~]# vim /etc/my.cnf

[root@ch01 ~]# systemctl restart mysqld
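The entries added to /etc/my.cnf are not shown in this walkthrough. A fragment consistent with the query output in this section (binlog base name mysql-bin, Binlog_Do_DB set to cdc_test, Server_id 1) might look like the following. Treat it as an assumption rather than the author's actual file; binlog_format=ROW is included because Debezium-based connectors read row-level events.

```ini
[mysqld]
# Unique id of this server in a replication topology (assumed value)
server-id     = 1
# Enable the binary log with this file base name
log-bin       = mysql-bin
# Row-level events, needed for CDC
binlog_format = ROW
# Only log changes made in this database
binlog-do-db  = cdc_test
```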

The files mysql-bin.000001 and mysql-bin.index can then be found under /var/mysql (typically).

Alternatively, open the client and run the following commands:

mysql> show variables like 'log_%';
+----------------------------------------+-----------------------------+
| Variable_name                          | Value                       |
+----------------------------------------+-----------------------------+
| log_bin                                | ON                          |
| log_bin_basename                       | /data/mysql/mysql-bin       |
| log_bin_index                          | /data/mysql/mysql-bin.index |
| log_bin_trust_function_creators        | OFF                         |
| log_bin_use_v1_row_events              | OFF                         |
| log_builtin_as_identified_by_password  | OFF                         |
| log_error                              | /var/log/mysql/mysql.log    |
| log_error_verbosity                    | 3                           |
| log_output                             | FILE                        |
| log_queries_not_using_indexes          | OFF                         |
| log_slave_updates                      | OFF                         |
| log_slow_admin_statements              | OFF                         |
| log_slow_slave_statements              | OFF                         |
| log_statements_unsafe_for_binlog       | ON                          |
| log_syslog                             | OFF                         |
| log_syslog_facility                    | daemon                      |
| log_syslog_include_pid                 | ON                          |
| log_syslog_tag                         |                             |
| log_throttle_queries_not_using_indexes | 0                           |
| log_timestamps                         | UTC                         |
| log_warnings                           | 2                           |
+----------------------------------------+-----------------------------+
21 rows in set (0.00 sec)

mysql> show binary logs;
+------------------+-----------+
| Log_name         | File_size |
+------------------+-----------+
| mysql-bin.000001 |       154 |
+------------------+-----------+
1 row in set (0.00 sec)

mysql> show master status;
+------------------+----------+--------------+------------------+-------------------+
| File             | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |
+------------------+----------+--------------+------------------+-------------------+
| mysql-bin.000001 |      154 | cdc_test     |                  |                   |
+------------------+----------+--------------+------------------+-------------------+
1 row in set (0.00 sec)

mysql> show binlog events;
+------------------+-----+----------------+-----------+-------------+---------------------------------------+
| Log_name         | Pos | Event_type     | Server_id | End_log_pos | Info                                  |
+------------------+-----+----------------+-----------+-------------+---------------------------------------+
| mysql-bin.000001 |   4 | Format_desc    |         1 |         123 | Server ver: 5.7.11-log, Binlog ver: 4 |
| mysql-bin.000001 | 123 | Previous_gtids |         1 |         154 |                                       |
+------------------+-----+----------------+-----------+-------------+---------------------------------------+
2 rows in set (0.00 sec)

show binary logs; # list the binlog files

show master status; # show the binlog file currently being written

show binlog events; # show the contents of the first binlog file only

show binlog events in 'mysql-bin.000002'; # show the contents of a specific binlog file

Create the MySQL database cdc_test:

mysql> create database cdc_test;

Query OK, 1 row affected (0.00 sec)

1.5.5 Create the user table user_info

mysql> use cdc_test;

Database changed

mysql> CREATE TABLE user_info (

`id` int(2) primary key comment 'id',

`name` varchar(255) comment '姓名',

`sex` varchar(255) comment '性别'

)ENGINE=InnoDB DEFAULT CHARSET=utf8 ROW_FORMAT=DYNAMIC COMMENT='用户信息表';

Query OK, 0 rows affected (0.01 sec)

Before the insert, the position in mysql-bin.000001 was 695.

After the insert, the position became 983.

This shows that the binlog is being monitored.

SourceRecord{sourcePartition={server=mysql_binlog_source}, sourceOffset={ts_sec=1683182998, file=mysql-bin.000001, pos=983}}

ConnectRecord{topic='mysql_binlog_source.cdc_test.user_info', kafkaPartition=null, key=Struct{id=1}, keySchema=Schema{mysql_binlog_source.cdc_test.user_info.Key:STRUCT},

value=Struct{after=Struct{id=1,name=关羽,sex=male},source=Struct{version=1.5.2.Final,connector=mysql,name=mysql_binlog_source,ts_ms=1683182998091,snapshot=last,db=cdc_test,table=user_info,server_id=0,file=mysql-bin.000001,pos=983,row=0},op=r,ts_ms=1683182998099},

valueSchema=Schema{mysql_binlog_source.cdc_test.user_info.Envelope:STRUCT}, timestamp=null, headers=ConnectHeaders(headers=)}

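Insert:

Update: change the name 赵云 to 张飞.

op becomes u, that is, op=u.

The record now carries both before and after: the row before the update and the row after it.

Delete:

Only before remains, and op=d.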
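The relationship between op and the before/after images can be summarized in a few lines. This is a plain-Java sketch, not connector code: `ChangeKind` and both method names are invented here, and `Map` stands in for the Kafka Connect `Struct` that the real record carries. The op codes are the ones visible in the records in this article (r for a snapshot read, c for an insert, u for an update, d for a delete).

```java
import java.util.Collections;
import java.util.Map;

/** Toy classifier for change records; Map stands in for the connector's Struct type. */
final class ChangeKind {

    /** Translates the one-letter op code of a change record. */
    static String describeOp(String op) {
        switch (op) {
            case "r": return "snapshot read";
            case "c": return "insert";
            case "u": return "update";
            case "d": return "delete";
            default:  return "unknown";
        }
    }

    /** Infers the operation from which row images are present. */
    static String fromImages(Map<String, Object> before, Map<String, Object> after) {
        if (before != null && after != null) {
            return "update";
        }
        if (after != null) {
            return "insert"; // also covers snapshot reads, which carry only 'after'
        }
        if (before != null) {
            return "delete";
        }
        return "unknown";
    }

    public static void main(String[] args) {
        Map<String, Object> row = Collections.<String, Object>singletonMap("id", 1);
        System.out.println(describeOp("r"));       // prints snapshot read
        System.out.println(fromImages(null, row)); // prints insert
        System.out.println(fromImages(row, row));  // prints update
        System.out.println(fromImages(row, null)); // prints delete
    }
}
```

Checking the images alone cannot tell a snapshot read from a live insert; when that distinction matters, the op field is the one to read.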

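1.6 Enable checkpointing (CK)

What is CK? It is short for Checkpoint. Checkpoints let a job resume from where it stopped and restore its data after a failure. In this test they are stored locally.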
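The idea of resuming from a checkpoint can be shown without Flink. The sketch below is a toy model only, not Flink's checkpoint mechanism: `CheckpointResumeDemo` and all of its members are hypothetical. It saves an offset and the accumulated state every few events, discards uncommitted work on a simulated crash, and continues from the saved offset on the next run.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Toy model of resume-from-checkpoint; it illustrates the idea, not Flink's implementation. */
final class CheckpointResumeDemo {
    private int committedOffset = 0;                         // offset saved by the last checkpoint
    private List<String> committedState = new ArrayList<>(); // state saved by the last checkpoint

    /**
     * Reads events starting at the last committed offset and checkpoints every
     * checkpointEvery events. Returns false when the simulated crash offset is
     * reached; work done since the last checkpoint is then discarded.
     */
    boolean run(List<String> events, int crashAtOffset, int checkpointEvery) {
        List<String> working = new ArrayList<>(committedState);
        for (int offset = committedOffset; offset < events.size(); offset++) {
            if (offset == crashAtOffset) {
                return false; // crash: 'working' is lost, the committed fields are untouched
            }
            working.add(events.get(offset));
            if ((offset + 1) % checkpointEvery == 0) {
                committedState = new ArrayList<>(working);
                committedOffset = offset + 1;
            }
        }
        committedState = working;
        committedOffset = events.size();
        return true;
    }

    int committedOffset() {
        return committedOffset;
    }

    List<String> committedState() {
        return committedState;
    }

    public static void main(String[] args) {
        List<String> events = Arrays.asList("a", "b", "c", "d", "e");
        CheckpointResumeDemo job = new CheckpointResumeDemo();
        job.run(events, 3, 2);                     // crashes before offset 3
        System.out.println(job.committedOffset()); // prints 2
        job.run(events, -1, 2);                    // restart without a crash
        System.out.println(job.committedState());  // prints [a, b, c, d, e]
    }
}
```

After the restart every event appears exactly once, because the work done between the last checkpoint and the crash was thrown away and redone.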

1.7 Synchronize MySQL data (from one MySQL instance to another; incremental data only)

1.7.1 Create three classes

1.7.2 Custom deserializer CustomDebeziumDeserializationSchema

package com.sgd;

import com.alibaba.fastjson.JSONObject;
import com.ververica.cdc.debezium.DebeziumDeserializationSchema;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.util.Collector;
import org.apache.kafka.connect.data.Field;
import org.apache.kafka.connect.data.Struct;
import org.apache.kafka.connect.source.SourceRecord;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.util.List;

/**
 * @author lzl
 * @create 2023-05-12 18:14
 * @name CustomDebeziumDeserializationSchema
 */
public class CustomDebeziumDeserializationSchema implements DebeziumDeserializationSchema<JSONObject> {
    private static final Logger LOGGER = LoggerFactory.getLogger(CustomDebeziumDeserializationSchema.class);
    private static final long serialVersionUID = 7906905121308228264L;

    public CustomDebeziumDeserializationSchema() {
    }

    /**
     * Insert: SourceRecord{sourcePartition={server=mysql_binlog_source}, sourceOffset={file=mysql-bin.000220, pos=16692, row=1, snapshot=true}} ConnectRecord{topic='mysql_binlog_source.test_hqh.flink_cdc', kafkaPartition=null, key=Struct{id=2}, keySchema=Schema{mysql_binlog_source.test_hqh.mysql_cdc_person.Key:STRUCT}, value=Struct{after=Struct{id=2,name=JIM,sex=male},source=Struct{version=1.2.1.Final,connector=mysql,name=mysql_binlog_source,ts_ms=0,snapshot=true,db=test_hqh,table=mysql_cdc_person,server_id=0,file=mysql-bin.000220,pos=16692,row=0},op=c,ts_ms=1603357255749}, valueSchema=Schema{mysql_binlog_source.test_hqh.mysql_cdc_person.Envelope:STRUCT}, timestamp=null, headers=ConnectHeaders(headers=)}
     *
     * @param sourceRecord sourceRecord
     * @param collector    out
     */
    @Override
    public void deserialize(SourceRecord sourceRecord, Collector<JSONObject> collector) {
        JSONObject resJson = new JSONObject();
        try {
            Struct valueStruct = (Struct) sourceRecord.value();
            Struct afterStruct = valueStruct.getStruct("after");
            Struct beforeStruct = valueStruct.getStruct("before");
            // Only "after" present: insert. Only "before" present: delete. Both present: update.
            if (afterStruct != null && beforeStruct != null) {
                // Update
                System.out.println("Updating >>>>>>>");
                LOGGER.info("Updated, ignored ...");
            } else if (afterStruct != null) {
                // Insert: copy every column of the new row into the JSON object
                System.out.println("Inserting >>>>>>>");
                List<Field> fields = afterStruct.schema().fields();
                for (Field field : fields) {
                    String name = field.name();
                    Object value = afterStruct.get(name);
                    resJson.put(name, value);
                }
            } else if (beforeStruct != null) {
                // Delete
                System.out.println("Deleting >>>>>>>");
                LOGGER.info("Deleted, ignored ...");
            } else {
                System.out.println("No this operation ...");
                LOGGER.warn("No this operation ...");
            }
        } catch (Exception e) {
            System.out.println("Deserialize throws exception:");
            LOGGER.error("Deserialize throws exception:", e);
        }
        // Note: updates and deletes are only logged above, so an empty JSONObject is emitted for them
        collector.collect(resJson);
    }

    @Override
    public TypeInformation<JSONObject> getProducedType() {
        return TypeInformation.of(JSONObject.class);
    }
}

A custom deserializer exists simply to assemble the data into the format you want (see: https://blog.csdn.net/m0_48830183/article/details/130718138)

1.7.3 The MySQL writer class

package com.sgd;

import com.alibaba.fastjson.JSONObject;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;

/**
 * @author lzl
 * @create 2023-05-12 18:24
 * @name Writer
 */
public class MysqlBinlogWriter extends RichSinkFunction<JSONObject> {
    private static final Logger LOGGER = LoggerFactory.getLogger(MysqlBinlogWriter.class);
    private Connection connection = null;
    private PreparedStatement insertStatement = null;

    // Connect to the target database
    @Override
    public void open(Configuration parameters) throws Exception {
        super.open(parameters);
        if (connection == null) {
            Class.forName("com.mysql.jdbc.Driver"); // load the JDBC driver
            connection = DriverManager.getConnection(
                    "jdbc:mysql://10.110.17.37:3306/flink_cdc?serverTimezone=GMT%2B8&useUnicode=true&characterEncoding=UTF-8",
                    "root",
                    "xxb@5196"); // open the connection
        }
        insertStatement = connection.prepareStatement(
                "insert into flink_cdc.student_2 values (?,?,?,?)"); // insert statement
    }

    // Run the insert statement
    @Override
    public void invoke(JSONObject value, Context context) throws Exception {
        // Read the columns of the captured row.
        // This assumes a populated insert record: the empty object that the
        // deserializer emits for updates and deletes has no id and fails at setInt below.
        Integer id = (Integer) value.get("id");
        String name = (String) value.get("name");
        Integer age = (Integer) value.get("age");
        String dt = (String) value.get("dt");
        // Insert each record as it arrives. The indexes 1 to 4 must match the
        // placeholders (?) in order: id comes first and dt last.
        insertStatement.setInt(1, id);
        insertStatement.setString(2, name);
        insertStatement.setInt(3, age);
        insertStatement.setString(4, dt);
        insertStatement.execute();
        LOGGER.info(insertStatement.toString());
    }

    // Close the statement first, then the connection
    @Override
    public void close() throws Exception {
        super.close();
        if (insertStatement != null) {
            insertStatement.close();
        }
        if (connection != null) {
            connection.close();
        }
    }
}

1.7.4 Main class MySqlBinlogCdcMySql

Read the MySQL binlog and write it to another MySQL database.

package com.sgd;

import com.alibaba.fastjson.JSONObject;
import com.ververica.cdc.connectors.mysql.MySqlSource;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.source.SourceFunction;

/**
 * @author lzl
 * @create 2023-05-12 18:34
 * @name MySqlBinlogCdcMySql
 */
public class MySqlBinlogCdcMySql {
    public static void main(String[] args) throws Exception {
        // 1. Get the Flink execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(1);

        // 2. Build the CDC source
        SourceFunction<JSONObject> sourceFunction = MySqlSource.<JSONObject>builder()
                .hostname("10.110.17.52")
                .port(3306)
                .databaseList("flink_cdc") // database to subscribe to
                .tableList("flink_cdc.student") // table to monitor; without the database prefix nothing is captured
                .username("root")
                .password("xxb@5196")
                .deserializer(new CustomDebeziumDeserializationSchema())
                .build();

        // 3. Read from MySQL through the CDC source
        DataStreamSource<JSONObject> dataStream = env.addSource(sourceFunction);

        // 4. Print the records
        dataStream.print();

        // 5. Write the records to the other MySQL instance
        dataStream.addSink(new MysqlBinlogWriter());

        // Printed while the job graph is being built, before any record is written
        System.out.println("MySQL sink added!");

        // 6. Start the job
        env.execute();
    }
}

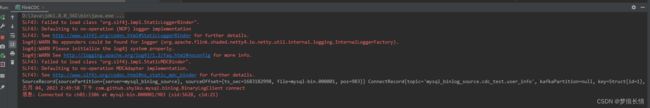

The run fails! The cause is a missing dependency. Add the following dependency to the POM.

<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-table-planner-blink_2.12</artifactId>
    <version>1.13.0</version>
</dependency>

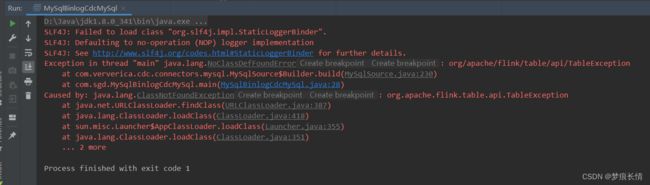

Restart the program. It starts successfully, and the binlog position is 154.

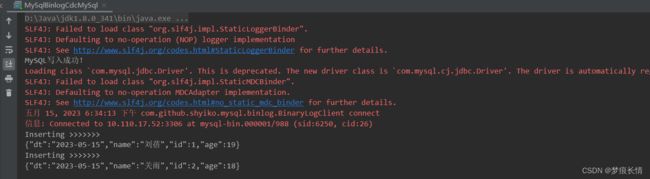

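1.7.5 Test:

Create the tables student and student_2 in the source and target databases respectively. Write 2 rows into the source table:

Both rows are synchronized to the target.

Insert one more row: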

mysql> insert into student values(3,'张菲',18,'2023-05-15');

Query OK, 1 row affected (0.00 sec)

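Updating data raises an error: when testing the custom Flink CDC deserializer, the program terminates after a primary key is modified and reports "Recovery is suppressed by NoRestartBackoffTimeStrategy".

The cause: the update statement adds records through the add method, which may duplicate rows already written by the snapshot and trigger a primary key conflict. (This has reportedly been changed in version 2.2.1, so switching versions is worth a try. Version 2.2.1 was not used here because another workaround had already been found.)

- FlinkCDC_DataStream: testing synchronization of inserts, deletes, updates, and reads to MySQL.

Because of the primary key conflict, the target table is designed without a primary key. Since this is data synchronization and the source table uses an auto-increment primary key, keys do not repeat, so duplicate keys are not a concern once the program is running. The only concern is the state before it starts: the target table must be emptied before every run, because the strategy is full synchronization. A second option is to have the program read from the latest position, that is, .startupOptions(StartupOptions.latest()), in which case the target table does not need to be emptied.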
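A third option, not used in this article, is to keep the primary key and make the write idempotent, so that replaying the snapshot overwrites rows instead of colliding with them. In MySQL that would typically be an `INSERT ... ON DUPLICATE KEY UPDATE` statement. The sketch below only models the difference with an in-memory map; `IdempotentWriteDemo` and its methods are hypothetical names, and the error text is an imitation of MySQL's message.

```java
import java.util.HashMap;
import java.util.Map;

/** Toy target table keyed by primary key, contrasting plain insert with upsert. */
final class IdempotentWriteDemo {
    private final Map<Integer, String> table = new HashMap<>();

    /** Mimics a plain INSERT on a table with a primary key: a duplicate id is rejected. */
    void insert(int id, String name) {
        if (table.containsKey(id)) {
            throw new IllegalStateException("Duplicate entry '" + id + "' for key 'PRIMARY'");
        }
        table.put(id, name);
    }

    /** Mimics an upsert: the row is written whether or not the id already exists. */
    void upsert(int id, String name) {
        table.put(id, name);
    }

    int size() {
        return table.size();
    }

    public static void main(String[] args) {
        IdempotentWriteDemo target = new IdempotentWriteDemo();
        // First run: the snapshot writes two rows
        target.upsert(1, "Tom");
        target.upsert(2, "Jim");
        // Restart: the same snapshot is replayed
        target.upsert(1, "Tom");
        target.upsert(2, "Jim");
        System.out.println(target.size()); // prints 2
        try {
            target.insert(1, "Tom"); // a plain insert of the replayed row collides
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // prints Duplicate entry '1' for key 'PRIMARY'
        }
    }
}
```

With an idempotent write the target table would not need to be emptied before each run, at the cost of a slightly more complex statement.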

18.1 Exploring the data with a custom deserializer (see: https://blog.csdn.net/m0_48830183/article/details/130718138)

package com.sgd;

import com.alibaba.fastjson.JSONObject;
import com.ververica.cdc.debezium.DebeziumDeserializationSchema;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.util.Collector;
import org.apache.kafka.connect.data.Field;
import org.apache.kafka.connect.data.Struct;
import org.apache.kafka.connect.source.SourceRecord;

/**
 * @author lzl
 * @create 2023-05-16 18:11
 * @name CustomerDeserializationSchema
 */
public class CustomerDeserializationSchema implements DebeziumDeserializationSchema<JSONObject> {
    private static final long serialVersionUID = -4278848963265670888L;

    public CustomerDeserializationSchema() {
    }

    @Override
    public void deserialize(SourceRecord record, Collector<JSONObject> out) {
        Struct dataRecord = (Struct) record.value();
        Struct afterStruct = dataRecord.getStruct("after");
        Struct beforeStruct = dataRecord.getStruct("before");
        /*
         * 1. beforeStruct and afterStruct both present: update
         * 2. only beforeStruct present: delete
         * 3. only afterStruct present: insert
         */
        JSONObject logJson = new JSONObject();
        String canal_type = "";
        if (afterStruct != null && beforeStruct != null) {
            System.out.println("This is an update");
            canal_type = "update";
            copyFields(afterStruct, logJson);
        } else if (afterStruct != null) {
            System.out.println("This is an insert");
            canal_type = "insert";
            copyFields(afterStruct, logJson);
        } else if (beforeStruct != null) {
            System.out.println("This is a delete");
            canal_type = "delete";
            copyFields(beforeStruct, logJson);
        } else {
            System.out.println("Unrecognized operation");
        }

        // Database, table, and timestamp come from the "source" block
        Struct source = dataRecord.getStruct("source");
        Object db = source.get("db");
        Object table = source.get("table");
        Object ts_ms = source.get("ts_ms");
        logJson.put("canal_database", db);
        logJson.put("canal_table", table);
        logJson.put("canal_ts", ts_ms);
        logJson.put("canal_type", canal_type);

        // Topic
        String topic = record.topic();
        System.out.println("topic = " + topic);

        // Primary-key fields: sum their hash codes and map the sum to one of 3 buckets
        Struct pk = (Struct) record.key();
        int partitionerNum = 0;
        if (pk != null) { // the key is null for a table without a primary key
            for (Field field : pk.schema().fields()) {
                Object pkValue = pk.get(field.name());
                partitionerNum += pkValue.hashCode();
            }
        }
        // Take the remainder before the absolute value: Math.abs(Integer.MIN_VALUE) is negative
        int hash = Math.abs(partitionerNum % 3);
        logJson.put("pk_hashcode", hash);
        out.collect(logJson);
    }

    // Copies every column name and value of a row image into the JSON object
    private static void copyFields(Struct struct, JSONObject target) {
        for (Field field : struct.schema().fields()) {
            String fieldName = field.name();
            Object fieldValue = struct.get(fieldName);
            target.put(fieldName, fieldValue);
        }
    }

    @Override
    public TypeInformation<JSONObject> getProducedType() {
        return TypeInformation.of(JSONObject.class);
    }
}
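Mapping a hash sum to a fixed number of buckets, as the pk_hashcode field does, has an edge case worth knowing: Math.abs(Integer.MIN_VALUE) is still negative, so taking the absolute value before the remainder can produce a negative bucket. The sketch below compares three ways of doing the mapping; `PkBucket` and its method names are invented for this illustration.

```java
/** Compares three ways of mapping a hash sum to a bucket index. */
final class PkBucket {

    /** Absolute value first: breaks for Integer.MIN_VALUE, whose absolute value overflows. */
    static int absThenMod(int hashSum, int buckets) {
        return Math.abs(hashSum) % buckets;
    }

    /** Remainder first: the remainder is small, so Math.abs cannot overflow. */
    static int modThenAbs(int hashSum, int buckets) {
        return Math.abs(hashSum % buckets);
    }

    /** Math.floorMod returns a result in [0, buckets) for a positive bucket count. */
    static int floorMod(int hashSum, int buckets) {
        return Math.floorMod(hashSum, buckets);
    }

    public static void main(String[] args) {
        int worstCase = Integer.MIN_VALUE;
        System.out.println(absThenMod(worstCase, 3)); // prints -2
        System.out.println(modThenAbs(worstCase, 3)); // prints 2
        System.out.println(floorMod(worstCase, 3));   // prints 1
    }
}
```

modThenAbs gives the same bucket as absThenMod for every input except Integer.MIN_VALUE, so it is the drop-in fix; floorMod is equally safe but assigns negative sums to different buckets.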

18.2 MySQLSink

package com.sgd;

import com.alibaba.fastjson.JSONObject;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;

/**
 * @author lzl
 * @create 2023-05-12 18:24
 * @name Writer
 */
public class MysqlWriter extends RichSinkFunction<JSONObject> {
    private static final Logger LOGGER = LoggerFactory.getLogger(MysqlWriter.class);
    private Connection connection = null;
    private PreparedStatement insertStatement = null;
    private PreparedStatement updateStatement = null;
    private PreparedStatement deleteStatement = null;

    // Connect to the target database and prepare the three statements
    @Override
    public void open(Configuration parameters) throws Exception {
        super.open(parameters);
        if (connection == null) {
            Class.forName("com.mysql.jdbc.Driver"); // load the JDBC driver
            connection = DriverManager.getConnection(
                    "jdbc:mysql://10.110.17.37:3306/flink_cdc?serverTimezone=GMT%2B8&useUnicode=true&characterEncoding=UTF-8",
                    "root",
                    "xxb@5196"); // open the connection
        }
        insertStatement = connection.prepareStatement(
                "insert into flink_cdc.student_2 values (?,?,?,?)"); // insert
        updateStatement = connection.prepareStatement(
                "update flink_cdc.student_2 set name=?,age=?,dt=? where id=?"); // update
        deleteStatement = connection.prepareStatement(
                "delete from flink_cdc.student_2 where id=?"); // delete
    }

    // Run the insert, update, or delete statement
    @Override
    public void invoke(JSONObject value, Context context) throws Exception {
        // Read the columns of the captured row
        Integer id = (Integer) value.get("id");
        String name = (String) value.get("name");
        Integer age = (Integer) value.get("age");
        String dt = (String) value.get("dt");
        String canal_type = (String) value.get("canal_type");
        // Compare strings with equals(); == only compares object references
        if ("insert".equals(canal_type)) { // insert
            insertStatement.setInt(1, id);
            insertStatement.setString(2, name);
            insertStatement.setInt(3, age);
            insertStatement.setString(4, dt);
            insertStatement.execute();
        } else if ("update".equals(canal_type)) { // update
            // The indexes 1 to 4 must match the placeholders (?) in order:
            // name comes first and id last.
            updateStatement.setString(1, name);
            updateStatement.setInt(2, age);
            updateStatement.setString(3, dt);
            updateStatement.setInt(4, id);
            updateStatement.execute();
        } else if ("delete".equals(canal_type)) { // delete
            deleteStatement.setInt(1, id);
            deleteStatement.execute();
        }
    }

    // Close the statements first, then the connection
    @Override
    public void close() throws Exception {
        super.close();
        if (updateStatement != null) {
            updateStatement.close();
        }
        if (insertStatement != null) {
            insertStatement.close();
        }
        if (deleteStatement != null) {
            deleteStatement.close();
        }
        if (connection != null) {
            connection.close();
        }
    }
}
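String checks such as the canal_type tests in invoke are only reliable with equals(). The == operator compares references, which happens to succeed when both sides are the same interned literal inside one JVM, but not once a record has been serialized and rebuilt between operators. The sketch below simulates that round trip with a byte copy; `StringEqualityDemo` and its methods are names made up for this example.

```java
import java.nio.charset.StandardCharsets;

/** Shows why string content must be compared with equals() rather than ==. */
final class StringEqualityDemo {

    /** Rebuilds a string from its bytes, as happens when a record is serialized and read back. */
    static String roundTrip(String s) {
        return new String(s.getBytes(StandardCharsets.UTF_8), StandardCharsets.UTF_8);
    }

    /** Reference comparison: true only if both variables point to the same object. */
    static boolean sameReference(String a, String b) {
        return a == b;
    }

    /** Content comparison: true if both strings hold the same characters. */
    static boolean sameContent(String a, String b) {
        return a.equals(b);
    }

    public static void main(String[] args) {
        String type = roundTrip("insert");
        System.out.println(sameReference(type, "insert")); // prints false
        System.out.println(sameContent(type, "insert"));   // prints true
    }
}
```

Writing the literal first, as in "insert".equals(type), also avoids a NullPointerException when the field is missing.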

18.3 Main class

package com.sgd;

import com.alibaba.fastjson.JSONObject;
import com.ververica.cdc.connectors.mysql.MySqlSource;
import com.ververica.cdc.connectors.mysql.table.StartupOptions;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.source.SourceFunction;

import java.util.Properties;

/**
 * @author lzl
 * @create 2023-05-12 18:34
 * @name MySqlBinlogCdcMySql
 */
public class MySqlBinlogCdcMySql {
    public static void main(String[] args) throws Exception {
        // 1. Get the Flink execution environment
        Configuration configuration = new Configuration();
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(configuration);
        env.setParallelism(1);

        // 2. Enable checkpointing (not configured in this test)

        // 3. Create the Flink MySQL CDC source
        // Note: scan.startup.mode is the option name used by the Flink SQL connector;
        // here the startup mode is set by startupOptions() below, so this property is likely redundant.
        Properties props = new Properties();
        props.setProperty("scan.startup.mode", "initial");
        SourceFunction<JSONObject> sourceFunction = MySqlSource.<JSONObject>builder()
                .hostname("10.110.17.52")
                .port(3306)
                .databaseList("flink_cdc") // database to subscribe to
                .tableList("flink_cdc.student") // table to monitor; the table name must be prefixed with the database name
                .username("root")
                .password("xxb@5196")
                .startupOptions(StartupOptions.initial()) // start with a full snapshot
                .debeziumProperties(props)
                .deserializer(new CustomerDeserializationSchema())
                .build();

        // 4. Read from MySQL through the CDC source
        DataStreamSource<JSONObject> dataStream = env.addSource(sourceFunction);

        // 5. Print the records
        dataStream.print("===>");

        // 6. Write the records to the other MySQL instance
        dataStream.addSink(new MysqlWriter());

        // Printed while the job graph is being built, before any record is written
        System.out.println("MySQL sink added!");

        // 7. Start the job
        env.execute();
    }
}

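18.4 Test:

Insert:

Update: (change 赵芸's age to 18)

Changes in the target database after synchronization:

Delete: (delete the row for 赵芸)

Changes in the target database after synchronization:

The row has been deleted!

- MySQLSink code optimization

Stay tuned!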