10. Advanced: Kubernetes Advanced Scheduling


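CronJob

Concepts

A CronJob adds time-based scheduling on top of a Job.

With a CronJob you can run a task once at a given point in time or repeatedly on a schedule.

A CronJob is similar to crontab on Linux.

Notes

- CronJob schedule format: * * * * *, that is, minute, hour, day of month, month, day of week.

- A CronJob may need to call application interfaces or depend on a particular environment.

- A CronJob may need to pull an image first, which can delay the actual start time.

- Keep the host and container clocks consistent so that jobs do not fire at the wrong time.

- A CronJob name must not exceed 52 characters: the controller appends 11 characters when it names each Job, and a Job name is limited to 63 characters.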
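The 52-character limit on CronJob names can be checked before a manifest is applied. The following is a minimal sketch; `check_cronjob_name` is a hypothetical helper written for illustration and is not part of kubectl.

```shell
# Longest CronJob name that still leaves room for the 11 characters
# the controller appends when it names each Job (63 - 11 = 52).
MAX_CRONJOB_NAME_LEN=52

# check_cronjob_name NAME (hypothetical helper)
# Prints "ok" or how many characters the name is over the limit.
check_cronjob_name() {
  name=$1
  if [ "${#name}" -le "$MAX_CRONJOB_NAME_LEN" ]; then
    echo "ok"
  else
    echo "too long by $(( ${#name} - MAX_CRONJOB_NAME_LEN ))"
  fi
}

check_cronjob_name "nginx-cronjob"
```

The check only looks at length; it does not validate the other naming rules Kubernetes applies to object names.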

Creating a CronJob

Creating a CronJob from the command line

kubectl create cronjob cronjob-nginx --schedule="*/1 * * * *" --restart=OnFailure --image=nginx -- date

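create: creates a resource

cronjob: the resource type to create is a CronJob

cronjob-nginx: the name of the CronJob

--schedule=: the schedule on which the job runs

--restart=: the restart policy of the job's pods (OnFailure or Never)

--image=: the image the container uses

Creating a CronJob from YAML

- Edit the YAML file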

apiVersion: batch/v1beta1   # use batch/v1 on Kubernetes 1.21+; batch/v1beta1 was removed in 1.25
kind: CronJob
metadata:
  name: nginx-cronjob
  namespace: default
spec:
  jobTemplate:
    spec:
      template:
        spec:
          nodeName: k8s-master-1
          restartPolicy: OnFailure
          containers:
          - name: nginx-web
            image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /temp/script
              name: nginx-script
            - mountPath: /temp/logs
              name: nginx-cronjob-logs
            command:
            - /bin/sh
            - -c
            - /temp/script/echo.sh
          volumes:
          - name: nginx-script
            hostPath:
              path: /data/nginx/script
          - name: nginx-cronjob-logs
            hostPath:
              path: /data/nginx/logs
  schedule: '*/1 * * * *'

- Create the CronJob

kubectl apply -f /root/cronjob-nginx.yaml

- View the CronJob

kubectl get cronjobs.batch

- Check the files written by the job

ll /data/nginx/logs

Configuration

Schedule syntax

# ┌───────────── minute (0 - 59)
# │ ┌───────────── hour (0 - 23)
# │ │ ┌───────────── day of the month (1 - 31)
# │ │ │ ┌───────────── month (1 - 12)
# │ │ │ │ ┌───────────── day of the week (0 - 6) (Sunday to Saturday; on some systems 7 is also Sunday)
# │ │ │ │ │                                   or sun, mon, tue, wed, thu, fri, sat
# │ │ │ │ │
# │ │ │ │ │
# * * * * *
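To make the field syntax concrete, here is a small sketch of how the minute field of a schedule could be evaluated. `minute_matches` is a hypothetical helper that handles only `*`, `*/N`, single numbers, and comma lists; it is not the parser Kubernetes uses.

```shell
# minute_matches FIELD MINUTE (hypothetical helper)
# Succeeds when the minute field of a schedule selects MINUTE.
minute_matches() {
  rest=$1
  minute=$2
  while [ -n "$rest" ]; do
    part=${rest%%,*}                 # text before the first comma
    if [ "$part" = "$rest" ]; then
      rest=""                        # that was the last list item
    else
      rest=${rest#*,}                # drop the item just handled
    fi
    case $part in
      '*')
        return 0 ;;
      '*/'*)
        step=${part#'*/'}
        if [ $(( minute % step )) -eq 0 ]; then return 0; fi ;;
      *)
        if [ "$part" -eq "$minute" ]; then return 0; fi ;;
    esac
  done
  return 1
}

for m in 0 7 10; do
  if minute_matches '*/5' "$m"; then
    echo "minute $m: run"
  else
    echo "minute $m: wait"
  fi
done
```

With the schedule `*/1 * * * *` used in this document, every minute matches, so a Job is created once per minute.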

Configuration details

- Reference configuration file

cronjob.yaml

cronjob.spec.concurrencyPolicy

Defines how concurrent runs are handled.

- Help output

kubectl explain cronjob.spec.concurrencyPolicy

KIND: CronJob

VERSION: batch/v1beta1

FIELD: concurrencyPolicy <string>

DESCRIPTION:

Specifies how to treat concurrent executions of a Job. Valid values are: -

"Allow" (default): allows CronJobs to run concurrently; - "Forbid": forbids

concurrent runs, skipping next run if previous run hasn't finished yet; -

"Replace": cancels currently running job and replaces it with a new one

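- Parameters

Allow: multiple jobs may run at the same time (default);

Forbid: concurrent runs are not allowed; if the previous run has not finished, the next run is skipped;

Replace: the currently running job is cancelled and replaced with a new one;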
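The three concurrencyPolicy values differ only in what happens when a new run is due while the previous Job is still running. The sketch below models that decision; `decide_run` and its output strings are illustrative assumptions, not controller code.

```shell
# decide_run POLICY PREVIOUS_RUNNING (hypothetical helper)
# POLICY is Allow, Forbid, or Replace; PREVIOUS_RUNNING is yes or no.
decide_run() {
  policy=${1:-Allow}                 # Allow is the default policy
  previous_running=$2
  if [ "$previous_running" != "yes" ]; then
    echo "start new job"
    return 0
  fi
  case $policy in
    Allow)   echo "start new job alongside the running one" ;;
    Forbid)  echo "skip this run" ;;
    Replace) echo "cancel running job, then start new job" ;;
    *)       echo "unknown policy: $policy"; return 1 ;;
  esac
}

decide_run Forbid yes
decide_run Replace yes
```

For a backup job that must never overlap with itself, Forbid is usually the value this decision table points to.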

cronjob.spec.schedule

Sets the schedule on which the job runs.

- Help output

kubectl explain cronjob.spec.schedule

KIND: CronJob

VERSION: batch/v1beta1

FIELD: schedule <string>

DESCRIPTION:

The schedule in Cron format, see https://en.wikipedia.org/wiki/Cron.

- Parameters

'*/1 * * * *' (the value must be quoted)

cronjob.spec.failedJobsHistoryLimit

Number of failed job records to keep.

- Help output

kubectl explain cronjob.spec.failedJobsHistoryLimit

KIND: CronJob

VERSION: batch/v1beta1

FIELD: failedJobsHistoryLimit <integer>

DESCRIPTION:

The number of failed finished jobs to retain. This is a pointer to

distinguish between explicit zero and not specified. Defaults to 1.

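- Parameters

One record is kept by default; in production you can raise this, for example to around 70, to make troubleshooting easier.

If it is set to 3, the records of the last 3 failed runs are kept.

cronjob.spec.jobTemplate.spec.template.spec.restartPolicy

Restart policy

- Help output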

kubectl explain cronjob.spec.jobTemplate.spec.template.spec.restartPolicy

KIND: CronJob

VERSION: batch/v1beta1

FIELD: restartPolicy <string>

DESCRIPTION:

Restart policy for all containers within the pod. One of Always, OnFailure,

Never. Default to Always. More info:

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#restart-policy

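- Parameters

Always: restart the container whenever it exits. This is the default for ordinary pods, but it is not allowed in a Job or CronJob pod template, which accepts only OnFailure or Never;

OnFailure: restart the container automatically when it exits with a non-zero status code;

Never: never restart the container;

cronjob.spec.successfulJobsHistoryLimit

Number of successful job records to keep.

- Help output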

kubectl explain cronjob.spec.successfulJobsHistoryLimit

KIND: CronJob

VERSION: batch/v1beta1

FIELD: successfulJobsHistoryLimit <integer>

DESCRIPTION:

The number of successful finished jobs to retain. This is a pointer to

distinguish between explicit zero and not specified. Defaults to 3.

- Parameters

Three records are kept by default;

If it is set to 5, the records of the last 5 successful runs are kept;
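The two history limits (failedJobsHistoryLimit and successfulJobsHistoryLimit) both mean "keep the newest N finished Jobs and delete the rest". A minimal sketch of that retention rule follows; `jobs_to_prune` and the job names are hypothetical.

```shell
# jobs_to_prune LIMIT JOB... (hypothetical helper)
# JOB names are given oldest first. Prints the jobs that fall outside
# the newest LIMIT entries, one per line.
jobs_to_prune() {
  limit=$1
  shift
  excess=$(( $# - limit ))
  while [ "$excess" -gt 0 ]; do
    echo "$1"
    shift
    excess=$(( excess - 1 ))
  done
}

# With the default successfulJobsHistoryLimit of 3, the two oldest go.
jobs_to_prune 3 job-1 job-2 job-3 job-4 job-5
```

Setting a limit to 0 in this model prints every job, which corresponds to keeping no history at all.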

cronjob.spec.suspend

Whether to suspend the scheduled job.

- Help output

kubectl explain cronjob.spec.suspend

KIND: CronJob

VERSION: batch/v1beta1

FIELD: suspend <boolean>

DESCRIPTION:

This flag tells the controller to suspend subsequent executions, it does

not apply to already started executions. Defaults to false.

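- Parameters

false: the default; the CronJob is not suspended and runs on schedule;

true: the CronJob is suspended and no new runs are started (runs that have already started are not affected);

cronjob.spec.startingDeadlineSeconds

Deadline, in seconds, for starting a job that missed its scheduled time.

- Help output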

kubectl explain cronjob.spec.startingDeadlineSeconds

KIND: CronJob

VERSION: batch/v1beta1

FIELD: startingDeadlineSeconds <integer>

DESCRIPTION:

Optional deadline in seconds for starting the job if it misses scheduled

time for any reason. Missed jobs executions will be counted as failed ones.

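- Parameters

Unit: seconds.

It is the number of seconds after the scheduled time within which the job may still be started if it missed that time for any reason.

The controller measures the time between when the job was expected to be created and now.

If the difference exceeds the limit, this run is skipped.

For example, if it is set to 60, the job may be created up to 60 seconds after its scheduled time.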
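The startingDeadlineSeconds rule is a single comparison between the lateness of a run and the configured limit. The sketch below works on epoch seconds; `may_start` is a hypothetical helper and the timestamps are made-up values.

```shell
# may_start SCHEDULED_EPOCH NOW_EPOCH DEADLINE_SECONDS (hypothetical helper)
# Reports whether a run that missed its scheduled time may still start.
may_start() {
  scheduled=$1
  now=$2
  deadline=$3
  delay=$(( now - scheduled ))
  if [ "$delay" -le "$deadline" ]; then
    echo "start (late by ${delay}s)"
  else
    echo "skip (late by ${delay}s, limit ${deadline}s)"
  fi
}

may_start 1000 1045 60
may_start 1000 1075 60
```

With a limit of 60, a run that is 45 seconds late still starts, while one that is 75 seconds late is skipped.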

Case study: backing up MySQL with a CronJob

- Prerequisites

MySQL is already installed;

MySQL allows remote logins;

MySQL backup script

vim /data/mysql/script/mysql_backup.sh

#!/bin/bash

# Database connection settings
DB_HOST="192.169.1.213"
DB_PORT="3306"
DB_USER="root"
DB_PASS="Yl5t@mt123"
DB_BAK_PATH="/data/backup"
#DB_BAK_PATH="/data/mysql/data"

## Off-site backup host settings
#BAK_HOST="192.169.1.215"
#BAK_PATH="/data/mysql"
#BAK_USER="root"

# Current timestamp
TIME=$(date +%F-%H-%M-%S)

# List the databases
OUT_PUT=$(mysql -h"$DB_HOST" -P"$DB_PORT" -u"$DB_USER" -p"$DB_PASS" -N -s -e 'show databases;')

# Back up each database
for DB_NAME in $OUT_PUT; do
    # Skip the built-in system databases
    if [ "$DB_NAME" != "information_schema" ] && [ "$DB_NAME" != "performance_schema" ] && [ "$DB_NAME" != "mysql" ] && [ "$DB_NAME" != "sys" ]; then
        echo "$DB_NAME"
        mysqldump -h"$DB_HOST" -P"$DB_PORT" -u"$DB_USER" -p"$DB_PASS" "$DB_NAME" > "$DB_BAK_PATH"/"$DB_NAME"_"$TIME".sql
        if [ "$?" == "0" ]; then
            # Compress the dump
            tar -zcvf "$DB_BAK_PATH"/"$DB_NAME"_"$TIME".sql.tgz "$DB_BAK_PATH"/"$DB_NAME"_"$TIME".sql > /dev/null 2>&1
        else
            echo "$DB_NAME backup failed" >> "$DB_BAK_PATH"/mysql_"$TIME".log
        fi
        # Remove the uncompressed dump
        rm "$DB_BAK_PATH"/"$DB_NAME"_"$TIME".sql
    fi
done

## Sync the backups to the off-site host
## The image has no rsync command, so a different image is needed. A custom image will be built later; noted here for now.
# rsync -avp "$DB_BAK_PATH" "$BAK_USER"@"$BAK_HOST":"$BAK_PATH"
# if [ "$?" != "0" ]; then
#     echo "rsync fail" > "$DB_BAK_PATH"/mysql_"$TIME".log
# fi

# Keep local backups for 5 days
find "$DB_BAK_PATH" -mtime +5 -name "*.tgz" | xargs rm > /dev/null 2>&1
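The retention step at the end of the script pipes `find` into `xargs rm`, which complains when nothing matches and can mis-handle unusual file names. One possible alternative, shown as a sketch, lets `find` run `rm` itself; `prune_old_backups` is a hypothetical helper name, and the 5-day default mirrors the script.

```shell
# prune_old_backups DIR [DAYS] (hypothetical helper)
# Deletes *.tgz files under DIR whose modification time is more than
# DAYS days ago (default 5) and prints each path it removes.
prune_old_backups() {
  dir=$1
  days=${2:-5}
  find "$dir" -type f -name '*.tgz' -mtime +"$days" -print -exec rm -f -- {} +
}

# Example call with the backup path used in the script:
# prune_old_backups /data/backup 5
```

Because `-exec ... {} +` is only invoked when at least one file matches, an empty result is not an error.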

CronJob YAML manifest

apiVersion: batch/v1beta1   # use batch/v1 on Kubernetes 1.21+; batch/v1beta1 was removed in 1.25
kind: CronJob
metadata:
  name: mysql-cronjob
  namespace: default
spec:
  jobTemplate:
    spec:
      template:
        spec:
          nodeName: k8s-master-1
          restartPolicy: OnFailure
          containers:
          - name: mysql
            image: registry.cn-beijing.aliyuncs.com/publicspaces/mysql:8.0.29-debian
            imagePullPolicy: IfNotPresent
            volumeMounts:
            - mountPath: /data/backup
              name: cronjob-mysql-backup
            - mountPath: /data/script
              name: cronjob-mysql-script
            command:
            - /bin/sh
            - -c
            - /data/script/mysql_backup.sh
          volumes:
          - name: cronjob-mysql-backup
            hostPath:
              path: /data/mysql/data
          - name: cronjob-mysql-script
            hostPath:
              path: /data/mysql/script
  schedule: '*/1 * * * *'

Start the CronJob

kubectl create -f /data/mysql/cronjob/cronjob-mysql.yaml

Check the backups

ll /data/mysql/data

Taint&Toleration

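Concepts

Taint

Taint: applied to a node to repel pods from that node.

Taint a class of servers so that pods that do not tolerate the taint cannot be deployed on them.

Taint structure

Each taint has a key and a value that label it (the value may be empty) and an effect that describes what the taint does.

key=value:effect

key=[any value]:[NoSchedule|NoExecute|PreferNoSchedule]

A taint supports three effects:

- NoSchedule: Kubernetes does not schedule pods onto a node with this taint;

- PreferNoSchedule: Kubernetes tries to avoid scheduling pods onto a node with this taint;

- NoExecute: Kubernetes does not schedule pods onto a node with this taint and also evicts pods already running on the node that do not tolerate it;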
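The key=value:effect notation can be split into its three parts with plain parameter expansion. This is an illustrative sketch; `parse_taint` is a hypothetical helper, and the taint strings are the ones used in this document.

```shell
# parse_taint TAINT (hypothetical helper)
# Splits key=value:effect (or key:effect when the value is empty).
parse_taint() {
  taint=$1
  effect=${taint##*:}                # text after the last colon
  key_value=${taint%:*}              # text before the last colon
  case $key_value in
    *=*)
      key=${key_value%%=*}
      value=${key_value#*=} ;;
    *)
      key=$key_value
      value="" ;;
  esac
  echo "key=$key value=$value effect=$effect"
}

parse_taint "host-name=linux:NoSchedule"
parse_taint "nginx:NoExecute"
```

The second call shows a taint with an empty value, which is the form a toleration with operator Exists is meant to cover.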

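Toleration

Toleration: applied to a pod to allow it to be scheduled onto nodes with matching taints.

A pod that tolerates the taints configured on a node can be scheduled onto it, which lets specially configured pods land on tainted, specially configured nodes.

Toleration structure

Tolerations are defined in the pod under tolerations.

tolerations:                 # add tolerations
- key: "key1"                # key of the taint set on the node
  operator: "Equal"          # operator
  value: "value"             # tolerated value; corresponds to key1=value
  effect: NoExecute          # effect to tolerate; must be the same as the effect of the taint
  tolerationSeconds: 3600    # how long the pod may keep running on the node once it is due for eviction, like a grace period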

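- key: must match the key of the taint on the node;

- value: must match the value of the taint on the node;

- effect: must match the effect of the taint on the node;

The operator has two matching modes:

- Exists: the toleration must not specify a value;

- Equal: the toleration's value must equal the taint's value;

- Not specified: defaults to Equal;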
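The matching rules for key, value, effect, and operator can be summarized as one predicate. The sketch below follows the field descriptions printed by `kubectl explain pod.spec.tolerations`; `tolerates` is a hypothetical helper and not the scheduler's implementation.

```shell
# tolerates TOL_KEY TOL_OPERATOR TOL_VALUE TOL_EFFECT TAINT_KEY TAINT_VALUE TAINT_EFFECT
# (hypothetical helper) Succeeds when the toleration matches the taint.
tolerates() {
  tol_key=$1
  tol_op=${2:-Equal}                 # the operator defaults to Equal
  tol_value=$3
  tol_effect=$4
  taint_key=$5
  taint_value=$6
  taint_effect=$7

  # An empty toleration effect matches every taint effect.
  if [ -n "$tol_effect" ] && [ "$tol_effect" != "$taint_effect" ]; then
    return 1
  fi
  # An empty key is valid only with Exists and then matches every key.
  if [ -z "$tol_key" ]; then
    if [ "$tol_op" = "Exists" ]; then return 0; else return 1; fi
  fi
  if [ "$tol_key" != "$taint_key" ]; then
    return 1
  fi
  case $tol_op in
    Exists) return 0 ;;
    Equal)  if [ "$tol_value" = "$taint_value" ]; then return 0; else return 1; fi ;;
    *)      return 1 ;;
  esac
}

if tolerates nginx Equal master NoExecute nginx master NoExecute; then
  echo "nginx=master:NoExecute is tolerated"
fi
if ! tolerates nginx Equal master NoExecute nginx worker NoExecute; then
  echo "nginx=worker:NoExecute is not tolerated"
fi
```

A pod is only schedulable on a node when every NoSchedule or NoExecute taint on that node passes a check like this against at least one of the pod's tolerations.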

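What taints and tolerations do

Taints are set on nodes; tolerations are set on pods.

Taints work together with tolerations to keep pods away from unsuitable nodes.

Once one or more taints are set on a node, a pod cannot run on that node unless it explicitly declares that it tolerates them.

A toleration is a property of the pod. It only allows the pod to run on a tainted node; it does not force the pod onto that node.

Once a node is tainted, it and the pods are mutually exclusive: the node can refuse to have pods scheduled onto it and can even evict pods that are already running there.

Setting a toleration on a pod means that the pod can tolerate the taint and can be scheduled onto the tainted node.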

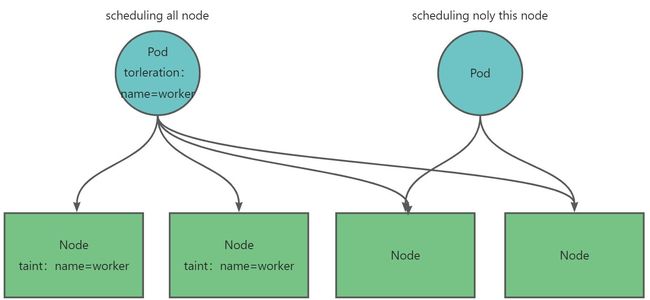

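Summary of taints and tolerations

- Diagram

As the figure above shows, only pods that tolerate a node's taints can be scheduled onto that node; pods that do not tolerate them can never be scheduled there (unless the taint effect is PreferNoSchedule);

- Note

A node can have multiple taints. For a pod to be guaranteed to run on such a node, it should tolerate every taint on that node.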

Taint

Set a taint

kubectl taint node k8s-worker-1 host-name=linux:NoSchedule

View taints

kubectl describe nodes | grep -i taint

kubectl describe nodes k8s-worker-1 | grep -i taint

Remove a taint

kubectl taint node k8s-worker-1 host-name:NoSchedule-

Using taints

- Add a taint

kubectl taint node k8s-worker-1 name=worker:NoSchedule

- Create the YAML for an nginx Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: default
  name: nginx-dep
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-web
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent

- Create the pods and check which nodes they run on

kubectl apply -f /root/nginx-dep.yaml

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-58bdf797fc-h48q6 1/1 Running 0 11s 83.12.140.4 k8s-worker-2 <none> <none>

nginx-dep-58bdf797fc-sbn4t 1/1 Running 0 11s 83.12.140.6 k8s-worker-2 <none> <none>

nginx-dep-58bdf797fc-wvkfp 1/1 Running 0 11s 83.12.140.5 k8s-worker-2 <none> <none>

- Remove the taint

kubectl taint node k8s-worker-1 name:NoSchedule-

- Recreate the pods and check which nodes they run on

kubectl delete -f nginx-dep.yaml

kubectl create -f nginx-dep.yaml

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-58bdf797fc-7fvnb 1/1 Running 0 25s 83.12.230.10 k8s-worker-1 <none> <none>

nginx-dep-58bdf797fc-gk9rv 1/1 Running 0 25s 83.12.140.7 k8s-worker-2 <none> <none>

nginx-dep-58bdf797fc-gvpb4 1/1 Running 0 25s 83.12.230.11 k8s-worker-1 <none> <none>

Toleration

Toleration parameters

pod.spec.tolerations

- Help output

kubectl explain pod.spec.tolerations

KIND: Pod

VERSION: v1

RESOURCE: tolerations <[]Object>

DESCRIPTION:

If specified, the pod's tolerations.

The pod this Toleration is attached to tolerates any taint that matches the

triple <key,value,effect> using the matching operator <operator>.

FIELDS:

effect <string>

Effect indicates the taint effect to match. Empty means match all taint

effects. When specified, allowed values are NoSchedule, PreferNoSchedule

and NoExecute.

key <string>

Key is the taint key that the toleration applies to. Empty means match all

taint keys. If the key is empty, operator must be Exists; this combination

means to match all values and all keys.

operator <string>

Operator represents a key's relationship to the value. Valid operators are

Exists and Equal. Defaults to Equal. Exists is equivalent to wildcard for

value, so that a pod can tolerate all taints of a particular category.

tolerationSeconds <integer>

TolerationSeconds represents the period of time the toleration (which must

be of effect NoExecute, otherwise this field is ignored) tolerates the

taint. By default, it is not set, which means tolerate the taint forever

(do not evict). Zero and negative values will be treated as 0 (evict

immediately) by the system.

value <string>

Value is the taint value the toleration matches to. If the operator is

Exists, the value should be empty, otherwise just a regular string.

- Details

Defines the pod's tolerations. The available fields are effect, key, operator, tolerationSeconds, and value;

pod.spec.tolerations.effect

- Help output

kubectl explain pod.spec.tolerations.effect

KIND: Pod

VERSION: v1

FIELD: effect <string>

DESCRIPTION:

Effect indicates the taint effect to match. Empty means match all taint

effects. When specified, allowed values are NoSchedule, PreferNoSchedule

and NoExecute.

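- Details

Defines the level of repulsion between the pod and the node;

It must match the effect of the taint on the node;

NoSchedule: not tolerated, but it affects scheduling only. Pods already on the node are unaffected; it applies only to newly created pods. (Kubernetes does not schedule pods onto the tainted node.)

PreferNoSchedule: a soft constraint. Existing pods on the node are unaffected, and if no other node qualifies, pods can still be scheduled here. (Kubernetes tries not to schedule pods onto the tainted node.)

NoExecute: not tolerated; when the taint is added or changed, pods are evicted. (Kubernetes does not schedule pods onto the tainted node, and pods already on the node are evicted as well.)

pod.spec.tolerations.key

- Help output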

kubectl explain pod.spec.tolerations.key

KIND: Pod

VERSION: v1

FIELD: key <string>

DESCRIPTION:

Key is the taint key that the toleration applies to. Empty means match all

taint keys. If the key is empty, operator must be Exists; this combination

means to match all values and all keys.

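- Details

key is the taint key that the toleration applies to.

If key is empty, it matches every taint key on the node. The operator must then be Exists; this combination matches all keys and all values.

Otherwise it must match the key of the taint on the node;

pod.spec.tolerations.operator

- Help output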

kubectl explain pod.spec.tolerations.operator

KIND: Pod

VERSION: v1

FIELD: operator <string>

DESCRIPTION:

Operator represents a key's relationship to the value. Valid operators are

Exists and Equal. Defaults to Equal. Exists is equivalent to wildcard for

value, so that a pod can tolerate all taints of a particular category.

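- Details

operator defines the relationship between the key and the value. There are two operators, Exists and Equal; the default is Equal.

Exists acts as a wildcard for the value; Equal is an exact match.

With Exists, a pod can tolerate every taint of a particular category.

pod.spec.tolerations.tolerationSeconds

- Help output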

kubectl explain pod.spec.tolerations.tolerationSeconds

KIND: Pod

VERSION: v1

FIELD: tolerationSeconds <integer>

DESCRIPTION:

TolerationSeconds represents the period of time the toleration (which must

be of effect NoExecute, otherwise this field is ignored) tolerates the

taint. By default, it is not set, which means tolerate the taint forever

(do not evict). Zero and negative values will be treated as 0 (evict

immediately) by the system.

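- Details

Sets how long the taint is tolerated. It is not set by default, which means the taint is tolerated forever and the pod is not evicted.

Zero and negative values are treated as 0, which means the pod is evicted immediately.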
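The three cases for tolerationSeconds (unset, zero or negative, positive) can be written down as a small lookup. `eviction_delay` is a hypothetical helper used only to illustrate the rule from the help output above.

```shell
# eviction_delay TOLERATION_SECONDS (hypothetical helper)
# Pass an empty string when tolerationSeconds is not set.
# Prints how long a pod that tolerates a NoExecute taint stays on the node.
eviction_delay() {
  seconds=$1
  if [ -z "$seconds" ]; then
    echo "never"                     # tolerated forever, no eviction
  elif [ "$seconds" -le 0 ]; then
    echo "0"                         # evicted immediately
  else
    echo "$seconds"
  fi
}

eviction_delay ""
eviction_delay -3
eviction_delay 3600
```

The value only has meaning for tolerations whose effect is NoExecute; for other effects the field is ignored.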

pod.spec.tolerations.value

- Help output

kubectl explain pod.spec.tolerations.value

KIND: Pod

VERSION: v1

FIELD: value <string>

DESCRIPTION:

Value is the taint value the toleration matches to. If the operator is

Exists, the value should be empty, otherwise just a regular string.

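- Details

Sets the taint value that the toleration matches.

If the operator is Exists, the value should be empty; otherwise it is a regular string.

It must match the value of the taint on the node;

Using tolerations

- Create the YAML file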

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent

- Start the pods and check which nodes they run on

# start the pods

kubectl create -f /root/nginx-pod.yaml

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-785457fbb8-5kpwk 1/1 Running 0 8s 83.12.230.18 k8s-worker-1 <none> <none>

nginx-dep-785457fbb8-cvftk 1/1 Running 0 8s 83.12.140.35 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-jkjrw 1/1 Running 0 8s 83.12.230.20 k8s-worker-1 <none> <none>

nginx-dep-785457fbb8-kkz6x 1/1 Running 0 8s 83.12.230.17 k8s-worker-1 <none> <none>

nginx-dep-785457fbb8-pb4g8 1/1 Running 0 8s 83.12.230.22 k8s-worker-1 <none> <none>

nginx-dep-785457fbb8-rntrx 1/1 Running 0 8s 83.12.230.21 k8s-worker-1 <none> <none>

nginx-dep-785457fbb8-szgs2 1/1 Running 0 8s 83.12.140.33 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-x6phs 1/1 Running 0 8s 83.12.230.19 k8s-worker-1 <none> <none>

nginx-dep-785457fbb8-zl4h9 1/1 Running 0 8s 83.12.140.36 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-zsjph 1/1 Running 0 8s 83.12.140.34 k8s-worker-2 <none> <none>

- Create the taint

kubectl taint node k8s-worker-1 nginx=master:NoExecute

- Check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-785457fbb8-5wwdr 1/1 Running 0 20s 83.12.140.37 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-cvftk 1/1 Running 0 97s 83.12.140.35 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-d744r 1/1 Running 0 20s 83.12.140.39 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-df64z 1/1 Running 0 19s 83.12.140.41 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-gprvw 1/1 Running 0 19s 83.12.140.42 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-szgs2 1/1 Running 0 97s 83.12.140.33 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-xkwcn 1/1 Running 0 19s 83.12.140.38 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-zl4h9 1/1 Running 0 97s 83.12.140.36 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-zmtx8 1/1 Running 0 19s 83.12.140.40 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-zsjph 1/1 Running 0 97s 83.12.140.34 k8s-worker-2 <none> <none>

- Add a toleration to the pods

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
      tolerations:
      - effect: NoExecute
        key: nginx
        value: master
        operator: Equal
        tolerationSeconds: 5

- Update the pods and check which nodes they run on

# update the pods

kubectl replace -f nginx-pod.yaml

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-5665764cd7-2rt65 1/1 Running 0 47s 83.12.140.47 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-6xl8s 1/1 Running 0 47s 83.12.140.45 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-b2shh 1/1 Running 0 46s 83.12.140.51 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-c4xlr 1/1 Running 0 46s 83.12.140.49 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-dwj5l 1/1 Running 0 47s 83.12.140.44 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-l8f66 1/1 Running 0 47s 83.12.140.43 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-lvg8b 1/1 Running 0 47s 83.12.140.46 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-tvl47 1/1 Running 0 46s 83.12.140.52 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-vjc4l 1/1 Running 0 46s 83.12.140.50 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-wqlmj 1/1 Running 0 46s 83.12.140.48 k8s-worker-2 <none> <none>

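Using tolerations: exact match

When operator is Equal, the key, value, and effect must all match for the pod to tolerate the taint on the node.

Note: nodes without taints can still receive the pods. A toleration only permits scheduling onto a matching tainted node; it does not confine the pods to that node.

- Create the YAML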

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
      tolerations:
      - effect: NoExecute
        key: nginx
        value: master
        operator: Equal
        tolerationSeconds: 5

- Create the pods and check which nodes they run on

# create the pods

kubectl create -f /root/nginx-pod.yaml

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-5665764cd7-2l7qf 1/1 Running 0 7s 83.12.230.28 k8s-worker-1 <none> <none>

nginx-dep-5665764cd7-d65kg 1/1 Running 0 7s 83.12.230.31 k8s-worker-1 <none> <none>

nginx-dep-5665764cd7-dj7cx 1/1 Running 0 7s 83.12.230.33 k8s-worker-1 <none> <none>

nginx-dep-5665764cd7-drt5x 1/1 Running 0 7s 83.12.140.54 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-f5n2v 1/1 Running 0 7s 83.12.140.55 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-k725m 1/1 Running 0 7s 83.12.230.30 k8s-worker-1 <none> <none>

nginx-dep-5665764cd7-l4x96 1/1 Running 0 7s 83.12.230.29 k8s-worker-1 <none> <none>

nginx-dep-5665764cd7-rdsdp 1/1 Running 0 7s 83.12.140.53 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-sb2xx 1/1 Running 0 7s 83.12.230.32 k8s-worker-1 <none> <none>

nginx-dep-5665764cd7-zgkbl 1/1 Running 0 7s 83.12.140.56 k8s-worker-2 <none> <none>

- Set the taint

kubectl taint node k8s-worker-1 nginx=master:NoExecute

- Verify which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-5665764cd7-96zkd 1/1 Running 0 9s 83.12.140.61 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-9rsrt 1/1 Running 0 9s 83.12.140.57 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-dfgb6 1/1 Running 0 9s 83.12.140.60 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-drt5x 1/1 Running 0 113s 83.12.140.54 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-f5n2v 1/1 Running 0 113s 83.12.140.55 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-h6fnt 1/1 Running 0 9s 83.12.140.58 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-rdsdp 1/1 Running 0 113s 83.12.140.53 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-sxbrc 1/1 Running 0 9s 83.12.140.59 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-z6lsv 1/1 Running 0 9s 83.12.140.62 k8s-worker-2 <none> <none>

nginx-dep-5665764cd7-zgkbl 1/1 Running 0 113s 83.12.140.56 k8s-worker-2 <none> <none>

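Using tolerations: partial match

When operator is Exists, the pod tolerates the taint on any node whose taint has this key and the same effect, whatever the value.

Note: when operator is Exists, value must be empty, so do not set the value field. Avoid NoExecute with a partial match in this experiment; with tolerationSeconds set, evicted pods can be scheduled back onto the tainted node and keep drifting.

- Create the YAML file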

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
      tolerations:
      - effect: NoSchedule
        key: nginx
        operator: Exists

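- Create the pods and check which nodes they run on

# create the pods (replaces the existing Deployment)

kubectl replace -f /root/nginx-pod.yaml

# check which nodes the pods run on

kubectl get pod -owide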

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-c7854867f-7tdph 1/1 Running 0 7s 83.12.230.4 k8s-worker-1 <none> <none>

nginx-dep-c7854867f-7v22m 1/1 Running 0 7s 83.12.140.26 k8s-worker-2 <none> <none>

nginx-dep-c7854867f-9njtb 1/1 Running 0 7s 83.12.230.33 k8s-worker-1 <none> <none>

nginx-dep-c7854867f-jgpgj 1/1 Running 0 7s 83.12.140.30 k8s-worker-2 <none> <none>

nginx-dep-c7854867f-jsc4b 1/1 Running 0 7s 83.12.230.60 k8s-worker-1 <none> <none>

nginx-dep-c7854867f-ml2q6 1/1 Running 0 7s 83.12.140.32 k8s-worker-2 <none> <none>

nginx-dep-c7854867f-pcvqb 1/1 Running 0 7s 83.12.140.31 k8s-worker-2 <none> <none>

nginx-dep-c7854867f-rrrwj 1/1 Running 0 7s 83.12.140.25 k8s-worker-2 <none> <none>

nginx-dep-c7854867f-rtnj4 1/1 Running 0 7s 83.12.230.1 k8s-worker-1 <none> <none>

nginx-dep-c7854867f-tzmn2 1/1 Running 0 7s 83.12.140.24 k8s-worker-2 <none> <none>

- Set the taints

kubectl taint node k8s-worker-1 nginx=master:NoSchedule

kubectl taint node k8s-worker-2 nginx=worker:NoSchedule

- Verify which nodes the pods run on

kubectl delete -f nginx-pod.yaml

kubectl create -f nginx-pod.yaml

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-75f9d649cf-6fs94 1/1 Running 0 61s 83.12.140.38 k8s-worker-2 <none> <none>

nginx-dep-75f9d649cf-gwp9v 1/1 Running 0 61s 83.12.140.27 k8s-worker-2 <none> <none>

nginx-dep-75f9d649cf-h2mhl 1/1 Running 0 61s 83.12.140.13 k8s-worker-2 <none> <none>

nginx-dep-75f9d649cf-jh4lx 1/1 Running 0 61s 83.12.140.21 k8s-worker-2 <none> <none>

nginx-dep-75f9d649cf-jt7qv 1/1 Running 0 61s 83.12.140.36 k8s-worker-2 <none> <none>

nginx-dep-75f9d649cf-jtfgl 1/1 Running 0 61s 83.12.140.19 k8s-worker-2 <none> <none>

nginx-dep-75f9d649cf-kbsl9 1/1 Running 0 61s 83.12.230.16 k8s-worker-1 <none> <none>

nginx-dep-75f9d649cf-sx8m7 1/1 Running 0 61s 83.12.140.14 k8s-worker-2 <none> <none>

nginx-dep-75f9d649cf-tpkwm 1/1 Running 0 61s 83.12.140.37 k8s-worker-2 <none> <none>

nginx-dep-75f9d649cf-wbtfn 1/1 Running 0 61s 83.12.140.18 k8s-worker-2 <none> <none>

Using tolerations: broad match

Note: do not use a built-in taint key as the key here.

- Cluster adjustment

There are not enough worker nodes for this experiment, so k8s-master-3 is converted into a worker node. (After the experiment, turn k8s-master-3 back into a master node for the later exercises.)

Convert k8s-master-3 into a worker node

# run on k8s-master-1

kubectl delete node k8s-master-3

# run on k8s-master-3

kubeadm reset

# run on k8s-master-1

kubeadm token create --print-join-command

# run on k8s-master-3

kubeadm join k8s-master-1:6443 --token 4cnol7.5f82u8falevp4jjn --discovery-token-ca-cert-hash sha256:9101bef649c13caf336108d4cc3696cb67dcc191db80e9b3249012f0351900fd

# run on k8s-master-1

kubectl get nodes

# verify that k8s-master-3 is now a worker node

Convert k8s-master-3 back into a master node

# run on k8s-master-1

kubectl delete node k8s-master-3

# run on k8s-master-3

kubeadm reset

# run on k8s-master-1

kubeadm token create --print-join-command

kubeadm init phase upload-certs --upload-certs

# run the combined command on k8s-master-3

kubeadm join k8s-master-1:6443 --token 6v0l4p.3voh9mvg5f3rmixe --discovery-token-ca-cert-hash sha256:9101bef649c13caf336108d4cc3696cb67dcc191db80e9b3249012f0351900fd \
  --control-plane --certificate-key 1bb2c54ac7317968a1f2224db6ae32bdf17a26833008c4014cfaed6eb6a78ac9

- Create the YAML

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep
  namespace: default
spec:
  replicas: 10
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
      tolerations:
      - key: nginx
        operator: Exists

- Create the pods and check which nodes they run on

kubectl create -f nginx-pod.yaml

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-868496bf49-4j2pn 1/1 Running 0 29s 83.12.140.58 k8s-worker-2 <none> <none>

nginx-dep-868496bf49-6nj5b 1/1 Running 0 29s 83.12.230.61 k8s-worker-1 <none> <none>

nginx-dep-868496bf49-c67ql 1/1 Running 0 29s 83.12.168.2 k8s-master-3 <none> <none>

nginx-dep-868496bf49-ck49d 1/1 Running 0 29s 83.12.140.34 k8s-worker-2 <none> <none>

nginx-dep-868496bf49-gq5k9 1/1 Running 0 29s 83.12.230.30 k8s-worker-1 <none> <none>

nginx-dep-868496bf49-k9p97 1/1 Running 0 29s 83.12.230.44 k8s-worker-1 <none> <none>

nginx-dep-868496bf49-ks55k 1/1 Running 0 29s 83.12.168.1 k8s-master-3 <none> <none>

nginx-dep-868496bf49-ktbct 1/1 Running 0 29s 83.12.140.47 k8s-worker-2 <none> <none>

nginx-dep-868496bf49-nnwhn 1/1 Running 0 29s 83.12.168.3 k8s-master-3 <none> <none>

nginx-dep-868496bf49-x4md7 1/1 Running 0 29s 83.12.140.52 k8s-worker-2 <none> <none>

- Set the taints

kubectl taint node k8s-worker-1 nginx=master:NoSchedule

kubectl taint node k8s-worker-2 nginx=worker:NoSchedule

- Check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-868496bf49-25l2c 1/1 Running 0 9s 83.12.168.5 k8s-master-3 <none> <none>

nginx-dep-868496bf49-2xxns 1/1 Running 0 9s 83.12.230.12 k8s-worker-1 <none> <none>

nginx-dep-868496bf49-94mgs 1/1 Running 0 9s 83.12.168.7 k8s-master-3 <none> <none>

nginx-dep-868496bf49-g64fh 1/1 Running 0 9s 83.12.140.50 k8s-worker-2 <none> <none>

nginx-dep-868496bf49-lkc94 1/1 Running 0 9s 83.12.140.51 k8s-worker-2 <none> <none>

nginx-dep-868496bf49-mfjxv 1/1 Running 0 9s 83.12.168.6 k8s-master-3 <none> <none>

nginx-dep-868496bf49-sff9c 1/1 Running 0 9s 83.12.140.33 k8s-worker-2 <none> <none>

nginx-dep-868496bf49-vqrqx 1/1 Running 0 9s 83.12.230.23 k8s-worker-1 <none> <none>

nginx-dep-868496bf49-wpxm4 1/1 Running 0 9s 83.12.140.48 k8s-worker-2 <none> <none>

nginx-dep-868496bf49-zznkw 1/1 Running 0 9s 83.12.168.4 k8s-master-3 <none> <none>

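k8s-master-3 still has pods running on it, so a broad-match toleration does not keep pods off nodes that have no taint.

Using tolerations: built-in taints

Note: when tolerating a built-in taint with operator Exists, value must be empty, so do not set the value field.

- The built-in taints

node.kubernetes.io/not-ready: the node is not ready; the node condition Ready is False.

node.kubernetes.io/unreachable: the node controller cannot reach the node; the node condition Ready is Unknown.

node.kubernetes.io/out-of-disk: the node has run out of disk space.

node.kubernetes.io/memory-pressure: the node is under memory pressure.

node.kubernetes.io/disk-pressure: the node is under disk pressure.

node.kubernetes.io/network-unavailable: the node's network is unavailable.

node.kubernetes.io/unschedulable: the node is unschedulable.

- Create the YAML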

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
      tolerations:
      - effect: NoExecute
        key: node.kubernetes.io/unreachable
        operator: Exists

- Create the pods and check which nodes they run on

# create the pods

kubectl create -f /root/nginx-pod.yaml

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-5df4b6f78-6wx27 1/1 Running 0 13s 83.12.230.38 k8s-worker-1 <none> <none>

nginx-dep-5df4b6f78-bvvxm 1/1 Running 0 13s 83.12.168.8 k8s-master-3 <none> <none>

nginx-dep-5df4b6f78-fsg5x 1/1 Running 0 13s 83.12.140.2 k8s-worker-2 <none> <none>

- Take k8s-master-3 offline and check which nodes the pods run on

# shut down k8s-master-3

poweroff

# check which nodes the pods run on

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-785457fbb8-4l46w 1/1 Running 0 4s 83.12.140.4 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-fnqfs 1/1 Running 0 4s 83.12.140.43 k8s-worker-2 <none> <none>

nginx-dep-785457fbb8-pczqw 1/1 Running 0 4s 83.12.230.49 k8s-worker-1 <none> <none>

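If tolerationSeconds is not set, the pods stay on the unreachable node, but the Deployment starts additional pods elsewhere to reach the desired replica count.

- Use case

Taints and tolerations can use the Kubernetes built-in taints to make sure applications recover quickly after a node goes down.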

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dep
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
      tolerations:
      - effect: NoExecute
        key: node.kubernetes.io/unreachable
        operator: Exists
        tolerationSeconds: 10
      - effect: NoExecute
        key: node.kubernetes.io/not-ready
        operator: Exists
        tolerationSeconds: 10

InitContainer

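Concepts

An init container is a special container: one or more of them run before the application containers start and set up the preconditions the application containers need.

An init container is essentially the same as an application container, except that it is a task that runs once and then exits, and it must finish successfully before the system moves on to the next container.

What happens when an init container fails depends on the pod's restart policy: with restartPolicy=Never the pod fails to start, and with restartPolicy=Always the system restarts the pod automatically.

Notes

Uses of init containers:

- An init container can include utilities or custom setup code that are not present in the application image;

- An init container can run these tools safely, so they do not have to be added to the application image and weaken its security;

- An init container can run as root and execute privileged commands;

- An init container exits once its work is done, so it poses no security risk to the application containers.

Differences between init containers and PostStart

PostStart: depends on the main application's environment and is not guaranteed to run before the container's command.

InitContainer: does not depend on the main application's environment, can have higher privileges and more tools, and always finishes before the main application starts.

Differences between init containers and regular containers

- They always run to completion;

- Each one must finish before the next one starts;

- If a pod's init container fails, Kubernetes restarts the pod repeatedly until the init container succeeds; if the pod's restartPolicy is Never, however, Kubernetes does not restart the pod.

- Init containers do not support lifecycle, livenessProbe, readinessProbe, or startupProbe.

Notes

Configuration that an init container modifies or generates must be shared with the application containers through a mounted volume.

Init containers run one by one from top to bottom, and the next one starts only after the previous one succeeds.

Init containers run once for each replica (pod).

Init containers support most application container settings but not health checks.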
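The ordering rules for init containers (run in sequence, stop at the first failure, start the application containers only when all have succeeded) can be modeled with ordinary commands. In this sketch, `run_pod` is a hypothetical helper and `true` and `false` stand in for init containers that succeed or fail.

```shell
# run_pod INIT_STEP... (hypothetical helper)
# Runs each init step in order and stops at the first failure.
# The application containers start only if every init step succeeded.
run_pod() {
  for step in "$@"; do
    if $step; then
      echo "init ok: $step"
    else
      echo "init failed: $step"
      return 1
    fi
  done
  echo "app containers started"
}

run_pod true true
run_pod true false true || echo "pod did not start"
```

In the second call the third step never runs, which mirrors how a failed init container blocks every container after it.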

Common parameters

pod.spec.initContainers

Defines the init containers.

- Help output

kubectl explain pod.spec.initContainers

KIND: Pod

VERSION: v1

RESOURCE: initContainers <[]Object>

DESCRIPTION:

List of initialization containers belonging to the pod. Init containers are

executed in order prior to containers being started. If any init container

fails, the pod is considered to have failed and is handled according to its

restartPolicy. The name for an init container or normal container must be

unique among all containers. Init containers may not have Lifecycle

actions, Readiness probes, Liveness probes, or Startup probes. The

resourceRequirements of an init container are taken into account during

scheduling by finding the highest request/limit for each resource type, and

then using the max of of that value or the sum of the normal containers.

Limits are applied to init containers in a similar fashion. Init containers

cannot currently be added or removed. Cannot be updated. More info:

https://kubernetes.io/docs/concepts/workloads/pods/init-containers/

A single application container that you want to run within a pod.

FIELDS:

args <[]string>

Arguments to the entrypoint. The docker image's CMD is used if this is not

provided. Variable references $(VAR_NAME) are expanded using the

container's environment. If a variable cannot be resolved, the reference in

the input string will be unchanged. The $(VAR_NAME) syntax can be escaped

with a double $$, ie: $$(VAR_NAME). Escaped references will never be

expanded, regardless of whether the variable exists or not. Cannot be

updated. More info:

https://kubernetes.io/docs/tasks/inject-data-application/define-command-argument-container/#running-a-command-in-a-shell

command <[]string>

Entrypoint array. Not executed within a shell. The docker image's

ENTRYPOINT is used if this is not provided. Variable references $(VAR_NAME)

are expanded using the container's environment. If a variable cannot be

resolved, the reference in the input string will be unchanged. The

$(VAR_NAME) syntax can be escaped with a double $$, ie: $$(VAR_NAME).

Escaped references will never be expanded, regardless of whether the

variable exists or not. Cannot be updated. More info:

https://kubernetes.io/docs/tasks/inject-data-application/define-command-argument-container/#running-a-command-in-a-shell

env <[]Object>

List of environment variables to set in the container. Cannot be updated.

envFrom <[]Object>

List of sources to populate environment variables in the container. The

keys defined within a source must be a C_IDENTIFIER. All invalid keys will

be reported as an event when the container is starting. When a key exists

in multiple sources, the value associated with the last source will take

precedence. Values defined by an Env with a duplicate key will take

precedence. Cannot be updated.

image <string>

Docker image name. More info:

https://kubernetes.io/docs/concepts/containers/images This field is

optional to allow higher level config management to default or override

container images in workload controllers like Deployments and StatefulSets.

imagePullPolicy <string>

Image pull policy. One of Always, Never, IfNotPresent. Defaults to Always

if :latest tag is specified, or IfNotPresent otherwise. Cannot be updated.

More info:

https://kubernetes.io/docs/concepts/containers/images#updating-images

lifecycle <Object>

Actions that the management system should take in response to container

lifecycle events. Cannot be updated.

livenessProbe <Object>

Periodic probe of container liveness. Container will be restarted if the

probe fails. Cannot be updated. More info:

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#container-probes

name <string> -required-

Name of the container specified as a DNS_LABEL. Each container in a pod

must have a unique name (DNS_LABEL). Cannot be updated.

ports <[]Object>

List of ports to expose from the container. Exposing a port here gives the

system additional information about the network connections a container

uses, but is primarily informational. Not specifying a port here DOES NOT

prevent that port from being exposed. Any port which is listening on the

default "0.0.0.0" address inside a container will be accessible from the

network. Cannot be updated.

readinessProbe <Object>

Periodic probe of container service readiness. Container will be removed

from service endpoints if the probe fails. Cannot be updated. More info:

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#container-probes

resources <Object>

Compute Resources required by this container. Cannot be updated. More info:

https://kubernetes.io/docs/concepts/configuration/manage-compute-resources-container/

securityContext <Object>

Security options the pod should run with. More info:

https://kubernetes.io/docs/concepts/policy/security-context/ More info:

https://kubernetes.io/docs/tasks/configure-pod-container/security-context/

startupProbe <Object>

StartupProbe indicates that the Pod has successfully initialized. If

specified, no other probes are executed until this completes successfully.

If this probe fails, the Pod will be restarted, just as if the

livenessProbe failed. This can be used to provide different probe

parameters at the beginning of a Pod's lifecycle, when it might take a long

time to load data or warm a cache, than during steady-state operation. This

cannot be updated. More info:

https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#container-probes

stdin <boolean>

Whether this container should allocate a buffer for stdin in the container

runtime. If this is not set, reads from stdin in the container will always

result in EOF. Default is false.

stdinOnce <boolean>

Whether the container runtime should close the stdin channel after it has

been opened by a single attach. When stdin is true the stdin stream will

remain open across multiple attach sessions. If stdinOnce is set to true,

stdin is opened on container start, is empty until the first client

attaches to stdin, and then remains open and accepts data until the client

disconnects, at which time stdin is closed and remains closed until the

container is restarted. If this flag is false, a container processes that

reads from stdin will never receive an EOF. Default is false

terminationMessagePath <string>

Optional: Path at which the file to which the container's termination

message will be written is mounted into the container's filesystem. Message

written is intended to be brief final status, such as an assertion failure

message. Will be truncated by the node if greater than 4096 bytes. The

total message length across all containers will be limited to 12kb.

Defaults to /dev/termination-log. Cannot be updated.

terminationMessagePolicy <string>

Indicate how the termination message should be populated. File will use the

contents of terminationMessagePath to populate the container status message

on both success and failure. FallbackToLogsOnError will use the last chunk

of container log output if the termination message file is empty and the

container exited with an error. The log output is limited to 2048 bytes or

80 lines, whichever is smaller. Defaults to File. Cannot be updated.

tty <boolean>

Whether this container should allocate a TTY for itself, also requires

'stdin' to be true. Default is false.

volumeDevices <[]Object>

volumeDevices is the list of block devices to be used by the container.

volumeMounts <[]Object>

Pod volumes to mount into the container's filesystem. Cannot be updated.

workingDir <string>

Container's working directory. If not specified, the container runtime's

default will be used, which might be configured in the container image.

Cannot be updated.

pod.spec.initContainers.name

- Purpose

Defines the name of the init container.

- Help documentation

kubectl explain pod.spec.initContainers.name

KIND: Pod

VERSION: v1

FIELD: name <string>

DESCRIPTION:

Name of the container specified as a DNS_LABEL. Each container in a pod

must have a unique name (DNS_LABEL). Cannot be updated.

pod.spec.initContainers.image

- Purpose

Defines the image used by the init container.

- Help documentation

kubectl explain pod.spec.initContainers.image

KIND: Pod

VERSION: v1

FIELD: image <string>

DESCRIPTION:

Docker image name. More info:

https://kubernetes.io/docs/concepts/containers/images This field is

optional to allow higher level config management to default or override

container images in workload controllers like Deployments and StatefulSets.

pod.spec.initContainers.command

- Purpose

Defines the command run by the init container.

- Help documentation

kubectl explain pod.spec.initContainers.command

KIND: Pod

VERSION: v1

FIELD: command <[]string>

DESCRIPTION:

Entrypoint array. Not executed within a shell. The docker image's

ENTRYPOINT is used if this is not provided. Variable references $(VAR_NAME)

are expanded using the container's environment. If a variable cannot be

resolved, the reference in the input string will be unchanged. The

$(VAR_NAME) syntax can be escaped with a double $$, ie: $$(VAR_NAME).

Escaped references will never be expanded, regardless of whether the

variable exists or not. Cannot be updated. More info:

https://kubernetes.io/docs/tasks/inject-data-application/define-command-argument-container/#running-a-command-in-a-shell

pod.spec.initContainers.volumeMounts

- Purpose

Defines the volumes mounted into the init container.

- Help documentation

kubectl explain pod.spec.initContainers.volumeMounts

KIND: Pod

VERSION: v1

RESOURCE: volumeMounts <[]Object>

DESCRIPTION:

Pod volumes to mount into the container's filesystem. Cannot be updated.

VolumeMount describes a mounting of a Volume within a container.

FIELDS:

mountPath <string> -required-

Path within the container at which the volume should be mounted. Must not

contain ':'.

mountPropagation <string>

mountPropagation determines how mounts are propagated from the host to

container and the other way around. When not set, MountPropagationNone is

used. This field is beta in 1.10.

name <string> -required-

This must match the Name of a Volume.

readOnly <boolean>

Mounted read-only if true, read-write otherwise (false or unspecified).

Defaults to false.

subPath <string>

Path within the volume from which the container's volume should be mounted.

Defaults to "" (volume's root).

subPathExpr <string>

Expanded path within the volume from which the container's volume should be

mounted. Behaves similarly to SubPath but environment variable references

$(VAR_NAME) are expanded using the container's environment. Defaults to ""

(volume's root). SubPathExpr and SubPath are mutually exclusive.

pod.spec.initContainers.volumeMounts.name

- Purpose

Defines the name of the volume mounted into the init container.

- Help documentation

kubectl explain pod.spec.initContainers.volumeMounts.name

KIND: Pod

VERSION: v1

FIELD: name <string>

DESCRIPTION:

This must match the Name of a Volume.

pod.spec.initContainers.volumeMounts.mountPath

- Purpose

Defines the path at which the volume is mounted inside the init container.

- Help documentation

kubectl explain pod.spec.initContainers.volumeMounts.mountPath

KIND: Pod

VERSION: v1

FIELD: mountPath <string>

DESCRIPTION:

Path within the container at which the volume should be mounted. Must not

contain ':'.

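Using InitContainer

Simple example

Deploy a web site whose content is not baked into the image. Instead, the content is pulled dynamically (for example from a code repository) and handed to the application container.

- Create the YAML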

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: default
  name: nginx-dep
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      # Define the init container
      initContainers:
      - name: init-nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/busybox:1.28.0
        imagePullPolicy: IfNotPresent
        # Fetch an HTML page for testing
        command:
        - wget
        - -O
        - /opt/html/index.html
        - https://www.baidu.com/index.php?tn=monline_3_dg
        # The init container and the application container must mount the same volume
        volumeMounts:
        - mountPath: /opt/html
          name: init-storage
      containers:
      - name: nginx-web
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        # The init container and the application container must mount the same volume
        volumeMounts:
        - mountPath: /usr/share/nginx/html
          name: init-storage
      # Shared volume used by both the init container and the application container
      volumes:
      - name: init-storage
        emptyDir: {}

- Create the pod

kubectl create -f /root/nginx-init.yaml

- Verify the page

# Look up the pod IP

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-7f975bfb94-gl528 1/1 Running 0 112s 83.12.140.23 k8s-worker-2 <none> <none>

# Request the page

curl 83.12.140.23:80
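The help text above states that init containers run in order before the application containers start. The following is a minimal sketch of that ordering, not part of the original walkthrough: the pod name, the public image tags, and the service name my-db are hypothetical and would need to be adapted.

```yaml
# Sketch only: all names and images below are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: init-order-demo
spec:
  initContainers:
  # Runs first: blocks until the (hypothetical) service my-db resolves in DNS
  - name: wait-for-db
    image: busybox:1.28
    command: ["sh", "-c", "until nslookup my-db; do echo waiting for my-db; sleep 2; done"]
  # Runs second, only after wait-for-db has exited successfully
  - name: write-page
    image: busybox:1.28
    command: ["sh", "-c", "echo ready > /work/index.html"]
    volumeMounts:
    - name: workdir
      mountPath: /work
  containers:
  # Starts only after every init container has completed
  - name: web
    image: nginx:1.22.1
    volumeMounts:
    - name: workdir
      mountPath: /usr/share/nginx/html
  volumes:
  - name: workdir
    emptyDir: {}
```

If the first init container never succeeds, the pod should stay in an Init state and the nginx container is not started, which makes this pattern a simple way to gate startup on a dependency.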

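Use case

When deploying Elasticsearch as part of ELK, init containers can prepare the configuration for the Elasticsearch container. (The YAML below only illustrates the configuration approach; the containers will not run as-is. For the full ELK deployment, see Chapter 24, "24. Deployment: ELK Cluster".)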

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      volumes:
      - name: data
        emptyDir: {}
      initContainers:
      - name: fix-permissions
        image: busybox
        command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
      - name: increase-vm-max-map
        image: busybox
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: increase-fd-ulimit
        image: busybox
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
      containers:
      - name: elasticsearch
        #image: docker.elastic.co/elasticsearch/elasticsearch-oss:6.4.3
        image: dotbalo/es:2.4.6-cluster
        imagePullPolicy: Always
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: rest
          protocol: TCP
        - containerPort: 9300
          name: inter-node
          protocol: TCP
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        env:
        - name: "cluster.name"
          value: "pscm-cluster"
        - name: "CLUSTER_NAME"
          value: "pscm-cluster"
        - name: "discovery.zen.minimum_master_nodes"
          value: "2"
        - name: "MINIMUM_MASTER_NODES"
          value: "2"
        - name: "node.name"
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: "NODE_NAME"
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: "discovery.zen.ping.unicast.hosts"
          value: "es-cluster-0.elasticsearch, es-cluster-1.elasticsearch, es-cluster-2.elasticsearch"
        #- name: ES_JAVA_OPTS
        #  value: "-Xms512m -Xmx512m"

Affinity&AntiAffinity

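Concepts

Kubernetes scheduling policies fall roughly into two groups. The first is global policy, configured when the scheduler starts, such as predicates and priorities. The second is runtime policy declared on the workload, such as nodeAffinity, podAffinity, and podAntiAffinity.

Compared with nodeSelector and taints, affinity rules are more flexible and expressive, and they support more kinds of match expressions.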

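Affinity and anti-affinity

- What is affinity?

When application A and application B interact frequently, affinity can place them as close together as possible, even on the same node, to reduce the performance cost of network communication.

- What is anti-affinity?

When an application runs with multiple replicas, anti-affinity spreads the instances across different nodes to improve high availability (HA).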

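- Which expressions are supported?

| Policy | Match target | Supported operators | Topology domain support | Purpose |
| --- | --- | --- | --- | --- |
| nodeAffinity | Node labels | In, NotIn, Exists, DoesNotExist, Gt, Lt | No | Decides which nodes a pod may be placed on |
| podAffinity | Pod labels | In, NotIn, Exists, DoesNotExist | Yes | Decides which pods a pod may share a topology domain with |
| podAntiAffinity | Pod labels | In, NotIn, Exists, DoesNotExist | Yes | Decides which pods a pod must not share a topology domain with |

- What kinds of affinity are there?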

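There are three kinds of affinity:

- NodeAffinity: node affinity. It decides which nodes a pod may or may not be placed on, and governs the relationship between pods and nodes.

- PodAffinity: pod affinity. It decides which pods a pod may share a topology domain with, and governs the relationship between pods.

- PodAntiAffinity: pod anti-affinity. It decides which pods a pod must not share a topology domain with, and also governs the relationship between pods.

Each kind comes in two types:

- requiredDuringSchedulingIgnoredDuringExecution: hard affinity. If no node currently satisfies the rule, the pod stays Pending and the scheduler keeps retrying until one does.

- preferredDuringSchedulingIgnoredDuringExecution: soft affinity. If no node currently satisfies the rule, the scheduler ignores the preference and schedules the pod anyway.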

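- What do the operators mean?

In: the label value must be one of the entries in the values list

NotIn: the label value must not be any of the entries in the values list (an object without the label also matches)

Exists: the label key must be present; no values are given

DoesNotExist: the label key must not be present; no values are given

Gt: the label value must be greater than the given value (integers only)

Lt: the label value must be less than the given value (integers only)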
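The operators that do not appear in the later examples can be sketched as follows. This is an illustrative fragment for a pod template, not taken from the original notes: the label keys disktype and cpu-cores are hypothetical, while nginx-run is the label used in the scenarios of this chapter.

```yaml
# Sketch only: disktype and cpu-cores are assumed label keys.
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        # Exists: the node only needs to carry the key; values must be omitted
        - key: disktype
          operator: Exists
        # Gt: exactly one value, written as a string and compared as an integer
        - key: cpu-cores
          operator: Gt
          values:
          - "8"
        # NotIn: nodes labeled nginx-run=worker are excluded;
        # nodes without the nginx-run label still match
        - key: nginx-run
          operator: NotIn
          values:
          - worker
```

Gt and Lt are available for node affinity only; pod affinity and anti-affinity label selectors accept In, NotIn, Exists, and DoesNotExist.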

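The two types of NodeAffinity

requiredDuringSchedulingIgnoredDuringExecution: hard affinity. It can require that a pod be placed on matching nodes, or require that it not be placed on them.

preferredDuringSchedulingIgnoredDuringExecution: soft affinity. The scheduler tries to place the pod on matching nodes, or tries to keep it off them.

The two types of PodAffinity

Suppose there are three applications, a, b, and c, and application a should be co-located with another application according to some rule.

requiredDuringSchedulingIgnoredDuringExecution: application a must be placed together with application b.

preferredDuringSchedulingIgnoredDuringExecution: application a should be placed together with application b whenever possible.

The two types of PodAntiAffinity

requiredDuringSchedulingIgnoredDuringExecution: application a must not be placed together with the applications it matches.

preferredDuringSchedulingIgnoredDuringExecution: application a should avoid being placed together with the applications it matches whenever possible.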

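Applying affinity and anti-affinity

Before each experiment, remove all custom labels, taints, and tolerations left over from earlier ones so that they do not affect the result.

Note: to make testing easier, the cluster has been changed from 3 masters + 2 workers to 1 master + 4 workers. k8s-master-2 and k8s-master-3 keep their host names but now act as worker nodes.

Note: pod affinity requires a topology domain (topologyKey) setting; topology domains are explained in their own section of this document.

Host names: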

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master-1 Ready control-plane,master 5d1h v1.20.9

k8s-master-2 Ready <none> 15s v1.20.9

k8s-master-3 Ready <none> 12s v1.20.9

k8s-worker-1 Ready <none> 5d1h v1.20.9

k8s-worker-2 Ready <none> 5d1h v1.20.9

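Scenario 1 (nodeAffinity)

A Kubernetes cluster has many nodes running containers; node affinity decides which of them a pod may be placed on.

Hard nodeAffinity policy

Force the pods to run on the k8s-worker-2 node.

- Define the YAML file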

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: default
  name: nginx-dep
spec:
  replicas: 6
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-web
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: nginx-run
                operator: In
                values:
                - worker

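affinity: defines the affinity scheduling settings.

nodeAffinity: selects node affinity as the scheduling policy.

requiredDuringSchedulingIgnoredDuringExecution: defines the hard node affinity policy.

nodeSelectorTerms: used here in place of nodeSelector; it allows relatively simple logical combinations of match rules.

matchExpressions: defines the match expressions of the hard policy.

key and values: define the label the hard policy matches. Together they describe the label, so the node must be labeled nginx-run=worker.

operator: defines how the hard policy matches; here it is In.

- Create the pods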

kubectl create -f /root/nodeAffinity.yaml

- Check the pods

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-fb5fc7fc6-6sbms 0/1 Pending 0 89s <none> <none> <none> <none>

nginx-dep-fb5fc7fc6-rlv5c 0/1 Pending 0 89s <none> <none> <none> <none>

nginx-dep-fb5fc7fc6-rrb92 0/1 Pending 0 89s <none> <none> <none> <none>

nginx-dep-fb5fc7fc6-vq65r 0/1 Pending 0 89s <none> <none> <none> <none>

nginx-dep-fb5fc7fc6-vvl5m 0/1 Pending 0 89s <none> <none> <none> <none>

nginx-dep-fb5fc7fc6-xvb49 0/1 Pending 0 89s <none> <none> <none> <none>

Because k8s-worker-2 has not been labeled yet, no node carries nginx-run=worker, so the pods cannot find a suitable node and stay Pending.

- Set the label

kubectl label node k8s-worker-2 nginx-run=worker

- Recreate the pods and check again

kubectl delete -f /root/nodeAffinity.yaml

kubectl create -f /root/nodeAffinity.yaml

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-fb5fc7fc6-2pcjw 1/1 Running 0 46s 83.12.140.17 k8s-worker-2 <none> <none>

nginx-dep-fb5fc7fc6-8fqsw 1/1 Running 0 46s 83.12.140.9 k8s-worker-2 <none> <none>

nginx-dep-fb5fc7fc6-bnzmq 1/1 Running 0 46s 83.12.140.10 k8s-worker-2 <none> <none>

nginx-dep-fb5fc7fc6-fg4vn 1/1 Running 0 46s 83.12.140.16 k8s-worker-2 <none> <none>

nginx-dep-fb5fc7fc6-ggzwz 1/1 Running 0 46s 83.12.140.24 k8s-worker-2 <none> <none>

nginx-dep-fb5fc7fc6-hh96g 1/1 Running 0 46s 83.12.140.7 k8s-worker-2 <none> <none>

Soft nodeAffinity policy

There are 4 worker nodes, and the pods should run only on k8s-master-2 and k8s-master-3.

- Define the YAML file

apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: default
  name: nginx-dep
spec:
  replicas: 6
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-web
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: nginx-run
                operator: NotIn
                values:
                - worker
          preferredDuringSchedulingIgnoredDuringExecution:
          - preference:
              matchExpressions:
              - key: nginx-run
                operator: In
                values:
                - master
            weight: 5

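preferredDuringSchedulingIgnoredDuringExecution: defines the soft node affinity policy.

preference: defines a scheduling preference for node affinity rather than a hard requirement.

matchExpressions: defines the match expressions of the soft policy.

key and values: define the label the soft policy matches. Together they describe the label, so the preferred node must be labeled nginx-run=master.

operator: defines how the soft policy matches; here it is In.

- Create the pods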

kubectl create -f /root/nodeAffinity.yaml

- Check the pods

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-85c785c668-9hjv7 1/1 Running 0 14s 83.12.168.11 k8s-master-3 <none> <none>

nginx-dep-85c785c668-9lss5 1/1 Running 0 14s 83.12.140.31 k8s-worker-2 <none> <none>

nginx-dep-85c785c668-9vw57 1/1 Running 0 14s 83.12.182.75 k8s-master-2 <none> <none>

nginx-dep-85c785c668-ddnhf 1/1 Running 0 14s 83.12.182.76 k8s-master-2 <none> <none>

nginx-dep-85c785c668-k7mw2 1/1 Running 0 14s 83.12.230.58 k8s-worker-1 <none> <none>

nginx-dep-85c785c668-sn8rm 1/1 Running 0 14s 83.12.230.26 k8s-worker-1 <none> <none>

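None of the 4 nodes has the nginx-run label yet. NotIn also matches nodes that lack the label, so the hard policy excludes nothing and pods run on all 4 nodes.

- Set the labels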

kubectl label node k8s-worker-1 nginx-run=worker

kubectl label node k8s-worker-2 nginx-run=worker

kubectl label node k8s-master-2 nginx-run=master

- Recreate the pods and check again

kubectl delete -f /root/nodeAffinity.yaml

kubectl create -f /root/nodeAffinity.yaml

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-dep-85c785c668-7ctv8 1/1 Running 0 7s 83.12.182.79 k8s-master-2 <none> <none>

nginx-dep-85c785c668-fm4zp 1/1 Running 0 7s 83.12.168.13 k8s-master-3 <none> <none>

nginx-dep-85c785c668-swb6k 1/1 Running 0 7s 83.12.168.12 k8s-master-3 <none> <none>

nginx-dep-85c785c668-tth74 1/1 Running 0 7s 83.12.182.78 k8s-master-2 <none> <none>

nginx-dep-85c785c668-w5s7f 1/1 Running 0 7s 83.12.182.77 k8s-master-2 <none> <none>

nginx-dep-85c785c668-xrxxl 1/1 Running 0 7s 83.12.168.14 k8s-master-3 <none> <none>

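The hard policy (NotIn worker) now keeps the pods off k8s-worker-1 and k8s-worker-2. The soft policy only prefers k8s-master-2, so not every pod has to run there and some land on k8s-master-3, which has no nginx-run label. This matches the nature of a soft policy: place pods on matching nodes whenever possible, without making it mandatory.

Summary

Node affinity is similar to nodeSelector and extends it. The hard and soft policies can also be written separately.

When nodeAffinity lists several nodeSelectorTerms, a node only has to satisfy one of them to match.

When a single nodeSelectorTerms entry lists several matchExpressions, a node has to satisfy all of them to match.

When both nodeSelector and nodeAffinity are specified, a node has to satisfy both to match.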
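The three combination rules in the summary can be put side by side in one fragment. This is a sketch for a pod template spec, assuming the ssd, type, and nginx-run labels used elsewhere in this chapter are present on the nodes; kubernetes.io/os is the standard operating-system label that the kubelet sets on each node.

```yaml
# Sketch only: combines nodeSelector with two nodeSelectorTerms.
nodeSelector:
  kubernetes.io/os: linux          # must hold in addition to nodeAffinity (AND)
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      # Term 1: both expressions must match (ssd=true AND type=physical)
      - matchExpressions:
        - key: ssd
          operator: In
          values: ["true"]
        - key: type
          operator: In
          values: ["physical"]
      # Term 2: an alternative; a node matching either term is accepted (OR)
      - matchExpressions:
        - key: nginx-run
          operator: In
          values: ["worker"]
```

Read as a whole, a node qualifies when it runs Linux and is either a physical SSD machine or labeled nginx-run=worker.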

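Scenario 2 (podAffinity)

A cluster runs many pods. Placing two pods that talk to each other on the same node reduces the impact of the network between them.

Hard podAffinity policy

A project uses three applications, MySQL, Redis, and Nginx, and Nginx and Redis should be placed together.

- Define the YAML file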

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: my-app-nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchLabels:
                app: nginx
      containers:
      - name: my-app-redis
        image: registry.cn-beijing.aliyuncs.com/publicspaces/redis:6.2.6
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: my-app-mysql
        image: registry.cn-beijing.aliyuncs.com/publicspaces/mysql:8.0.27
        imagePullPolicy: IfNotPresent
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "1qaz!QAZ"

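affinity: defines the affinity scheduling settings.

podAffinity: selects pod affinity as the scheduling policy.

requiredDuringSchedulingIgnoredDuringExecution: defines the hard pod affinity policy.

topologyKey: a pod affinity rule must name a topology domain; here it is kubernetes.io/hostname.

labelSelector: defines the labels that pod affinity selects on.

matchLabels: defines the label matching rule of the hard policy.

app: nginx: note that this label must be identical to the label defined on the other pod (labels.app: nginx); that is what places the two pods together.

- Create the pods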

kubectl create -f /root/podAffinity.yaml

- Check the pods

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-app-mysql-846468b577-k24rx 1/1 Running 0 6s 83.12.182.94 k8s-master-2 <none> <none>

my-app-nginx-59f96f45ff-jm8tw 1/1 Running 0 7s 83.12.168.30 k8s-master-3 <none> <none>

my-app-redis-85bfd98cbf-zvz86 1/1 Running 0 7s 83.12.168.31 k8s-master-3 <none> <none>

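Only the affinity between Nginx and Redis is specified here, so Redis is guaranteed to land on the same node as Nginx, while MySQL, which has no rule, is placed wherever the scheduler chooses.

Soft podAffinity policy

A project uses three applications, MySQL, Redis, and Nginx, and Nginx and Redis should be placed together whenever possible.

- Define the YAML file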

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: my-app-nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      affinity:
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - podAffinityTerm:
              topologyKey: kubernetes.io/hostname
              labelSelector:
                matchLabels:
                  app: nginx
            weight: 60
      containers:
      - name: my-app-redis
        image: registry.cn-beijing.aliyuncs.com/publicspaces/redis:6.2.6
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: my-app-mysql
        image: registry.cn-beijing.aliyuncs.com/publicspaces/mysql:8.0.27
        imagePullPolicy: IfNotPresent
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "1qaz!QAZ"

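preferredDuringSchedulingIgnoredDuringExecution: defines the soft pod affinity policy.

podAffinityTerm: defines the pod affinity rule.

topologyKey: a pod affinity rule must name a topology domain; here it is kubernetes.io/hostname.

labelSelector: defines the labels that pod affinity selects on.

matchLabels: defines the label matching rule of the soft policy.

app: nginx: note that this label must be identical to the label defined on the other pod (labels.app: nginx); that is what places the two pods together whenever possible.

weight: defines the weight of the soft policy. The larger the weight, the higher the priority. The valid range is 1 to 100.

- Create the pods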

kubectl create -f /root/podAffinity.yaml

- Check the pods

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-app-mysql-846468b577-9nwmv 1/1 Running 0 5s 83.12.182.95 k8s-master-2 <none> <none>

my-app-nginx-59f96f45ff-kpdxd 1/1 Running 0 5s 83.12.168.32 k8s-master-3 <none> <none>

my-app-redis-75589b4884-fv9zf 1/1 Running 0 5s 83.12.168.33 k8s-master-3 <none> <none>

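The output shows that Nginx and Redis were placed together as preferred, while MySQL, which has no rule, is still placed wherever the scheduler chooses.

Summary

Pod affinity can match pods in other namespaces through the namespaces field.

- If the namespaces field lists specific namespaces, the rule matches the labels of pods in those namespaces;

- If the namespaces field is omitted or left as an empty list, the rule matches the labels of pods in the namespace of the pod that declares it (the API reference describes a null or empty list as "this pod's namespace");

- The namespaces field is set at the position shown below, and several namespaces can be listed.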

affinity:
  podAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
    - podAffinityTerm:
        namespaces:
        - default
        topologyKey: kubernetes.io/hostname
        labelSelector:
          matchLabels:
            app: nginx
      weight: 60
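To match pods in every namespace, newer Kubernetes releases add a namespaceSelector field next to namespaces. The fragment below is only a sketch under the assumption that the cluster is newer than the v1.20.9 nodes shown in this chapter; as far as I know the field is not available in v1.20, so check `kubectl explain` on the target cluster before relying on it.

```yaml
# Sketch only: namespaceSelector is assumed to be supported by the cluster.
affinity:
  podAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 60
      podAffinityTerm:
        # An empty selector selects all namespaces
        namespaceSelector: {}
        topologyKey: kubernetes.io/hostname
        labelSelector:
          matchLabels:
            app: nginx
```

A non-empty namespaceSelector works like any label selector, but it is evaluated against the labels of the namespaces themselves rather than against pod labels.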

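Scenario 3 (podAntiAffinity)

Hard podAntiAffinity policy

A cluster has many nodes running containers. Pod anti-affinity can place replicas of the same application on different nodes for high availability, avoiding the risk of running them all on one host.

- Define the YAML file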

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-nginx
spec:
  replicas: 5
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: my-app-nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx

- Create the pods

kubectl create -f /root/podAntiAffinity.yaml

- Check the pods

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-app-nginx-6c94554699-c7wqb 1/1 Running 0 12s 83.12.168.34 k8s-master-3 <none> <none>

my-app-nginx-6c94554699-lw7rs 1/1 Running 0 49s 83.12.182.96 k8s-master-2 <none> <none>

my-app-nginx-6c94554699-nq26p 1/1 Running 0 12s 83.12.230.20 k8s-worker-1 <none> <none>

my-app-nginx-6c94554699-vlvr5 1/1 Running 0 12s 83.12.140.15 k8s-worker-2 <none> <none>

my-app-nginx-6c94554699-vsfmj 0/1 Pending 0 12s <none> <none> <none> <none>

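One pod stays Pending. The hard pod anti-affinity policy forbids two of these pods on the same node, so once each of the 4 worker nodes holds one pod, the fifth cannot satisfy the rule and is not scheduled.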
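If every replica must run even when there are more replicas than nodes, the same rule can be written as a soft policy. The fragment below is a sketch of that variant for the my-app-nginx pod template; the weight of 100 is an illustrative choice, not a value from the original notes.

```yaml
# Sketch only: soft version of the anti-affinity rule above.
affinity:
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 100                  # illustrative; valid range is 1-100
      podAffinityTerm:
        topologyKey: kubernetes.io/hostname
        labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - nginx
```

With this variant the scheduler still tries to spread the replicas, but a fifth replica is allowed to share a node instead of staying Pending. The trade-off is that the spread is no longer guaranteed.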

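Soft podAntiAffinity policy

Two applications, Nginx and Redis: the Nginx replicas are kept apart, and the Redis replicas are kept together as far as possible. In the YAML below, Nginx uses a hard anti-affinity rule against its own label, while Redis uses a soft anti-affinity rule against pods whose app label is not redis, which steers the Redis replicas toward nodes that run no Nginx pod.

- Define the YAML file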

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: my-app-nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app-redis
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
      - name: my-app-redis
        image: registry.cn-beijing.aliyuncs.com/publicspaces/redis:6.2.6
        imagePullPolicy: IfNotPresent
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - podAffinityTerm:
              topologyKey: kubernetes.io/hostname
              labelSelector:
                matchExpressions:
                - key: app
                  operator: NotIn
                  values:
                  - redis
            weight: 10

- Check the pods

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-app-nginx-6c94554699-76gbr 1/1 Running 0 6s 83.12.168.35 k8s-master-3 <none> <none>

my-app-nginx-6c94554699-chx7s 1/1 Running 0 6s 83.12.140.20 k8s-worker-2 <none> <none>

my-app-nginx-6c94554699-jvfdk 1/1 Running 0 6s 83.12.182.97 k8s-master-2 <none> <none>

my-app-redis-7d5bff96dd-bxg9r 1/1 Running 0 6s 83.12.230.2 k8s-worker-1 <none> <none>

my-app-redis-7d5bff96dd-p46hd 1/1 Running 0 6s 83.12.230.6 k8s-worker-1 <none> <none>
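In the output above the two Redis pods share k8s-worker-1 because that is the only node without an Nginx pod, so the grouping is a side effect of avoiding other applications. A more direct way to express "keep the Redis replicas together" is a soft podAffinity rule on Redis's own label. The fragment below is a sketch that would replace the affinity block of my-app-redis; the weight is an illustrative value.

```yaml
# Sketch only: alternative affinity block for the my-app-redis pod template.
affinity:
  podAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 50                   # illustrative; valid range is 1-100
      podAffinityTerm:
        topologyKey: kubernetes.io/hostname
        labelSelector:
          matchLabels:
            app: redis             # prefer nodes that already run a Redis pod
```

The two rules are not equivalent: this one attracts Redis pods to each other regardless of what else runs on the node, whereas the original rule repels Redis from nodes hosting any non-Redis pod.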

Scenario 4 (prefer higher-spec servers)

- Create labels (tag the servers)

kubectl label node k8s-master-2 k8s-master-3 ssd=true

kubectl label node k8s-master-2 gpu=true

kubectl label node k8s-worker-1 type=physical

These labels mark k8s-master-2 and k8s-master-3 as having SSD disks, k8s-master-2 as a GPU server, and k8s-worker-1 as a physical machine.

- Define the YAML file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: label-nginx
  template:
    metadata:
      labels:
        app: label-nginx
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - preference:
              matchExpressions:
              - key: ssd
                operator: In
                values:
                - "true"
              - key: gpu
                operator: NotIn
                values:
                - "true"
            weight: 100
          - preference:
              matchExpressions:
              - key: type
                operator: In
                values:
                - physical
            weight: 10
      containers:
      - image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
        imagePullPolicy: IfNotPresent
        name: nginx-web

- Create the pods

kubectl create -f /root/nodeAffinity.yaml

- Check the pods

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-65647868cf-dckzn 1/1 Running 0 8s 83.12.168.37 k8s-master-3 <none> <none>

my-nginx-65647868cf-lrsmt 1/1 Running 0 8s 83.12.168.38 k8s-master-3 <none> <none>

my-nginx-65647868cf-vsknk 1/1 Running 0 105s 83.12.168.36 k8s-master-3 <none> <none>

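The first preference (weight 100) asks for a node with ssd=true whose gpu label is not true. k8s-master-2 has an SSD but is also a GPU server, so only k8s-master-3 matches, and all pods were placed there.

- Change the labels and redeploy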

kubectl label node k8s-master-3 ssd-

kubectl delete -f /root/nodeAffinity.yaml

kubectl create -f /root/nodeAffinity.yaml

- Check the pods

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-65647868cf-6jxgm 1/1 Running 0 52s 83.12.230.53 k8s-worker-1 <none> <none>

my-nginx-65647868cf-dwbc2 1/1 Running 0 52s 83.12.230.3 k8s-worker-1 <none> <none>

my-nginx-65647868cf-j9g27 1/1 Running 0 52s 83.12.230.15 k8s-worker-1 <none> <none>

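With the ssd label removed from k8s-master-3, no node matches the first preference any more: k8s-master-2 still has ssd=true, but it also has gpu=true. Only the second preference (type=physical, weight 10) still applies, so all pods ran on k8s-worker-1, the node that carries that label.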

Topology

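Concepts

- What is a topology?

A topology domain is an advanced Kubernetes feature for scheduling and placing containers. It lets scheduling take the physical or logical relationship between nodes into account to meet needs such as performance, resource allocation, and data locality.

Topology is the mechanism Kubernetes uses to express relationships and constraints between cluster nodes. It helps users distribute workloads across nodes while respecting the resource limits and constraints between them.

A topology applies to hosts: it effectively divides hosts into zones by putting a label on them, and the key of that label is called the topologyKey.

Topology is generally used together with affinity.

- How are topology domains divided?

Topology domains are a logical concept. They can be divided by region, data center, rack, server, business application, and so on.

- Notes

In Kubernetes, two nodes are in the same topology domain only when both carry the topologyKey label and its value is the same on both.

A topology domain is defined by a label. For example, "kubernetes.io/hostname" used above is simply the key of a label on each node; because its value differs on every node, each node forms its own domain.

# Find "kubernetes.io/hostname" with this command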

kubectl get node --show-labels | grep kubernetes.io/hostname
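Choosing a different topologyKey changes what "together" or "apart" means. As a sketch, the fragment below spreads pods across zones rather than across individual hosts. It assumes the nodes carry the well-known topology.kubernetes.io/zone label, which cloud providers usually set and which would have to be added by hand on a cluster like the one in these notes.

```yaml
# Sketch only: assumes nodes are labeled with topology.kubernetes.io/zone.
affinity:
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    # Nodes sharing the same zone value count as one domain,
    # so at most one app=nginx pod is allowed per zone
    - topologyKey: topology.kubernetes.io/zone
      labelSelector:
        matchLabels:
          app: nginx
```

With kubernetes.io/hostname the same rule would allow one pod per node; with a zone key it allows one pod per zone, however many nodes the zone contains.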

Defining and applying custom topology domains

Defining a custom topology domain simply means labeling nodes.

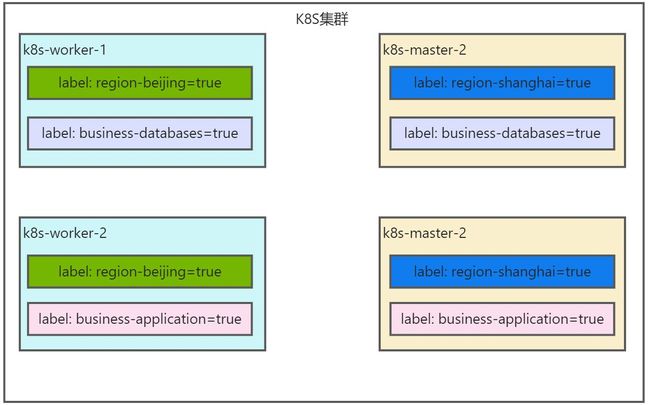

Define the topology domains

Set the region labels. Beijing: k8s-worker-1, k8s-worker-2; Shanghai: k8s-master-2, k8s-master-3.

kubectl label node k8s-worker-1 k8s-worker-2 region-beijing=true

kubectl label node k8s-master-2 k8s-master-3 region-sahnghai=true

Set the business zones. As labeled by the commands below, the database zone is k8s-worker-1 and k8s-master-2, and the application zone is k8s-worker-2 and k8s-master-3.

kubectl label node k8s-worker-1 k8s-master-2 business-databases=true

kubectl label node k8s-worker-2 k8s-master-3 business-application=true

Apply the topology domains

mysql-yjszs may only be deployed in the Beijing database zone.

- Create the YAML file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql-yjszs
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: registry.cn-beijing.aliyuncs.com/publicspaces/mysql:8.0.27
        imagePullPolicy: IfNotPresent
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: '1qaz!QAZ'
        ports:
        - containerPort: 3306
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: region-beijing
            labelSelector:
              matchLabels:
                app: mysql
          - topologyKey: business-databases
            labelSelector:
              matchLabels:
                app: mysql

- Create the pods

kubectl create -f /root/topology.yaml

- Check the pods

kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mysql-yjszs-7895594578-45s6s 1/1 Running 0 6s 83.12.230.22 k8s-worker-1 <none> <none>

mysql-yjszs-7895594578-m6jt6 1/1 Running 0 6s 83.12.230.14 k8s-worker-1 <none> <none>
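Both pods land on k8s-worker-1 because it is the only node carrying both the region-beijing and business-databases labels. The same placement can also be stated directly against node labels instead of through pod affinity on the pods' own label. The fragment below is a sketch of that alternative for the mysql-yjszs pod template, using the labels defined earlier in this section.

```yaml
# Sketch only: nodeAffinity alternative to the podAffinity block above.
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      # Both expressions are in one term, so a node must satisfy both
      - matchExpressions:
        - key: region-beijing
          operator: In
          values:
          - "true"
        - key: business-databases
          operator: In
          values:
          - "true"
```

This form does not depend on another matching pod already being scheduled, which can make the intent easier to read when the goal is only to restrict pods to a set of nodes.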

my-nginx is deployed across multiple nodes in Shanghai

- Define the YAML file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
  namespace: default
spec:
  replicas: 6
  selector:
    matchLabels:
      app: label-nginx
  template:
    metadata:
      labels:
        app: label-nginx
    spec:
      containers:
      - name: nginx
        image: registry.cn-beijing.aliyuncs.com/publicspaces/nginx:1.22.1
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: region-sahnghai
            labelSelector:
              matchLabels:
                app: label-nginx

- Create the pods

kubectl create -f /root/topology.yaml

- Check the pods

kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx-55b6bd5c7c-cwqc9 1/1 Running 0 2m40s 83.12.168.41 k8s-master-3 <none> <none>

my-nginx-55b6bd5c7c-ln2hr 1/1 Running 0 2m40s 83.12.182.100 k8s-master-2 <none> <none>

my-nginx-55b6bd5c7c-lrp55 1/1 Running 0 2m40s 83.12.182.99 k8s-master-2 <none> <none>

my-nginx-55b6bd5c7c-n7v28 1/1 Running 0 2m40s 83.12.168.40 k8s-master-3 <none> <none>

my-nginx-55b6bd5c7c-pf7dk 1/1 Running 0 2m40s 83.12.168.39 k8s-master-3 <none> <none>

my-nginx-55b6bd5c7c-qbq6w 1/1 Running 0 2m40s 83.12.182.98 k8s-master-2 <none> <none>

Both k8s-master-2 and k8s-master-3 carry the region-sahnghai label and therefore satisfy the hard policy at scheduling time, so the pods were placed on k8s-master-2 and k8s-master-3.