Notes on using open-mmlab's MMDetection

Tutorial source: the Bilibili video "5 MMDetection 代码教学 - 1.5" (MMDetection code tutorial).

My notes are as follows:

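For installing and configuring the environment, follow the official installation guide. In a Jupyter notebook, prefix the `mim` commands with `!`. The code in these notes uses the MMDetection 2.x API (with mmcv 1.x); `show_result_pyplot` and `mmcv.Config`, for example, are not available in MMDetection 3.x.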

1. Single-image inference with MMDetection

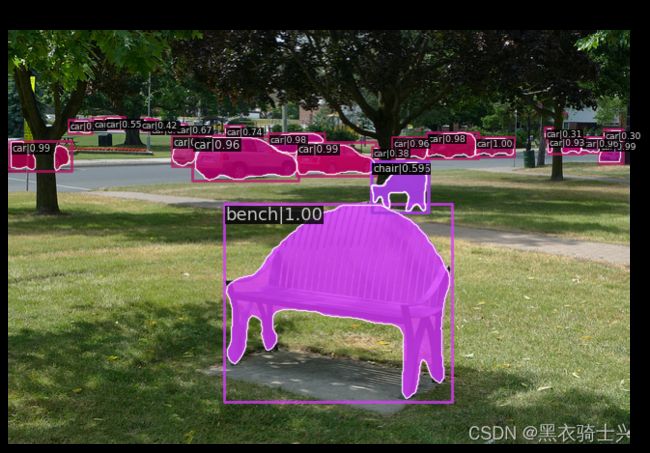

Segment demo.jpg with Mask R-CNN:

```shell
# search model
mim search mmdet --model "mask r-cnn"

# download config file (.py) and weight file (.pth)
# you can also download from https://github.com/open-mmlab/mmdetection/tree/main/configs
mim download mmdet --config mask_rcnn_r50_fpn_2x_coco --dest .
```

```python
# infer with mmdet
from mmdet.apis import init_detector, inference_detector, show_result_pyplot

config_file = "mask_rcnn_r50_fpn_2x_coco.py"
checkpoint_file = "mask_rcnn_r50_fpn_2x_coco_bbox_mAP-0.392__segm_mAP-0.354_20200505_003907-3e542a40.pth"

# build the model from the config and load the downloaded weights (device defaults to 'cuda:0')
model = init_detector(config_file, checkpoint_file)
# run inference on a single image
result = inference_detector(model, 'demo.jpg')
# draw the predicted boxes and masks with matplotlib
show_result_pyplot(model, 'demo.jpg', result)
```
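`show_result_pyplot` only draws the result. To use the detections in code, it helps to know the layout MMDetection 2.x returns: for Mask R-CNN, `result` is a tuple `(bbox_result, segm_result)`, and `bbox_result[i]` holds rows `[x1, y1, x2, y2, score]` for class `i`. The helper `detections_above` below is a hypothetical name, a minimal sketch under that assumption; the 0.3 threshold mirrors the default `score_thr` of `show_result_pyplot`. The fake result uses plain lists in place of numpy arrays.

```python
# Sketch: list detections from an MMDetection 2.x result above a score threshold.
# Assumption: bbox_result[i] holds rows [x1, y1, x2, y2, score] for class i.


def detections_above(result, class_names, score_thr=0.3):
    """Return (class_name, score, box) tuples with score >= score_thr, best first."""
    # Mask R-CNN returns (bbox_result, segm_result); box-only models return bbox_result directly
    bbox_result = result[0] if isinstance(result, tuple) else result
    found = []
    for class_id, boxes in enumerate(bbox_result):
        for row in boxes:
            score = float(row[4])
            if score >= score_thr:
                box = [float(v) for v in row[:4]]
                found.append((class_names[class_id], score, box))
    # highest-confidence detections first
    found.sort(key=lambda item: item[1], reverse=True)
    return found


# fake two-class result in the same nested layout (lists instead of numpy arrays)
fake_bbox = [
    [[10, 20, 110, 220, 0.95], [5, 5, 15, 15, 0.10]],  # class 0
    [[50, 60, 70, 90, 0.45]],                           # class 1
]
fake_segm = [[None, None], [None]]
for name, score, box in detections_above((fake_bbox, fake_segm), ("person", "car")):
    print(f"{name}: {score:.2f} at {box}")
```

With a real result, `detections_above(result, model.CLASSES)` uses the class names that `init_detector` attaches to the model.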

2. Train a model on your own data

0). Dataset

We use the fruit dataset.

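Download: from the OpenBayes console (register an account, save the dataset to your own data repository, then download it)

or

Baidu Netdisk link: https://pan.baidu.com/s/1zVl-6Jk2lCQjfSVjUPx3DQ

Extraction code: akbz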
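Before training, it is worth checking the annotation file. The training config in step 2) reads `fruit/annotations/train.json` through YOLOv3's COCO-style dataset, so the file is assumed to be COCO-format JSON with `images`, `annotations` and `categories` lists. A minimal sketch with hypothetical helper names that counts images and annotations per category name; the names it reports should match the `classes` tuple used in the config.

```python
# Sketch: summarise a COCO-format annotation file (assumed format, hypothetical helpers).
import json


def summarize_coco(coco):
    """Return (number of images, {category name: number of annotations})."""
    id_to_name = {cat["id"]: cat["name"] for cat in coco["categories"]}
    # start every category at 0 so empty categories still show up
    counts = {cat["name"]: 0 for cat in coco["categories"]}
    for ann in coco["annotations"]:
        counts[id_to_name[ann["category_id"]]] += 1
    return len(coco["images"]), counts


def summarize_coco_file(path):
    with open(path, encoding="utf-8") as f:
        return summarize_coco(json.load(f))


# tiny in-memory example; for the real data try
# summarize_coco_file("fruit/annotations/train.json")
toy = {
    "images": [{"id": 1, "file_name": "a.jpg"}, {"id": 2, "file_name": "b.jpg"}],
    "categories": [{"id": 1, "name": "lemon"}],
    "annotations": [
        {"id": 1, "image_id": 1, "category_id": 1},
        {"id": 2, "image_id": 2, "category_id": 1},
        {"id": 3, "image_id": 2, "category_id": 1},
    ],
}
print(summarize_coco(toy))  # (2, {'lemon': 3})
```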

1). Choose a model

```shell
# search model
mim search mmdet --model "yolov3"

# download config file and pth
mim download mmdet --config yolov3_mobilenetv2_mstrain-416_300e_coco --dest .
```

2). Modify the config file (by inheritance) and check that the changes took effect

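Change the model from the original 80 COCO classes to a single class, lemon.

Some older versions required adding +1 for the background class; this is no longer needed.

This way of changing the number of classes follows the official documentation. There is no need to edit mmdet/datasets/coco.py or mmdet/evaluation/functional/class_names.py, as some blog posts suggest.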
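Two details of the class setup are easy to get wrong. The class tuple needs a trailing comma: `("lemon")` is just the string `"lemon"`, and MMDetection 2.x treats a string `classes` as the path of a text file listing class names. Also, since mmcv config files are ordinary Python, `num_classes` can be derived from the tuple instead of being hard-coded. A quick check in plain Python:

```python
# Sketch: why a one-class tuple needs a trailing comma.
classes_wrong = ("lemon")   # parentheses alone do not make a tuple: this is the str "lemon"
classes_right = ("lemon",)  # a one-element tuple

print(type(classes_wrong).__name__, len(classes_wrong))  # str 5
print(type(classes_right).__name__, len(classes_right))  # tuple 1

# deriving the head size from the tuple keeps the two settings in sync
num_classes = len(classes_right)
model = dict(bbox_head=dict(num_classes=num_classes))
print(model)  # {'bbox_head': {'num_classes': 1}}
```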

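```python
# fruit.py
_base_ = ['yolov3_mobilenetv2_mstrain-416_300e_coco.py']

# note the trailing comma: this is a one-element tuple
classes = ("lemon",)

data = dict(
    train=dict(
        # the YOLOv3 base config wraps the training set in a RepeatDataset,
        # so the COCO settings live one level down under `dataset`
        dataset=dict(
            ann_file='/data/code/mmdetection_example/fruit/annotations/train.json',
            img_prefix='/data/code/mmdetection_example/fruit/train',
            classes=classes)),
    # no train/val split here: val and test reuse the training annotations
    val=dict(
        ann_file='/data/code/mmdetection_example/fruit/annotations/train.json',
        img_prefix='/data/code/mmdetection_example/fruit/train',
        classes=classes),
    test=dict(
        ann_file='/data/code/mmdetection_example/fruit/annotations/train.json',
        img_prefix='/data/code/mmdetection_example/fruit/train',
        classes=classes))

# 1 class instead of COCO's 80; no extra background class is needed
model = dict(bbox_head=dict(num_classes=1))

# start from the COCO-pretrained weights downloaded above
load_from = 'yolov3_mobilenetv2_mstrain-416_300e_coco_20210718_010823-f68a07b3.pth'

# epoch
runner = dict(type='EpochBasedRunner', max_epochs=30)

# learning_rate
optimizer = dict(type='SGD', lr=0.001)
# None disables the LR schedule, so lr stays at 0.001
lr_config = None
# lr_config = dict(
#     policy='step',
#     warmup='linear',
#     warmup_iters=4000,
#     warmup_ratio=0.0001,
#     step=[24, 28])

# print the training log every 25 iterations
log_config = dict(interval=25, hooks=[dict(type='TextLoggerHook')])
```

To see how the inherited config is assembled, find mmcv/utils/config.py in the mmcv version you have installed and read the source of `Config.fromfile`.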
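`Config.fromfile` resolves `_base_` by merging the child file into the base config. The simplified re-implementation below is hypothetical (not mmcv's actual code, and without its type checks), but it follows the same rule as I understand it: nested dicts are merged key by key, any other value replaces the base value, and a child dict with `_delete_=True` replaces the base dict instead of merging into it. It shows why `optimizer = dict(type='SGD', lr=0.001)` keeps the base optimizer's other keys while `lr_config = None` drops the base schedule entirely. The base values are illustrative, not copied from the real YOLOv3 config.

```python
# Sketch of _base_ merging (simplified, hypothetical; not mmcv's implementation).
import copy


def merge_config(base, child):
    """Merge child into a copy of base: dicts merge recursively, other values replace."""
    merged = copy.deepcopy(base)
    for key, value in child.items():
        value = copy.deepcopy(value)
        # a child dict carrying _delete_=True replaces the base dict instead of merging into it
        delete = isinstance(value, dict) and value.pop("_delete_", False)
        if isinstance(value, dict) and isinstance(merged.get(key), dict) and not delete:
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = value
    return merged


# illustrative base values, not copied from the real YOLOv3 config
base = dict(
    optimizer=dict(type="SGD", lr=0.003, momentum=0.9, weight_decay=0.0005),
    lr_config=dict(policy="step", step=[24, 28]),
    model=dict(bbox_head=dict(type="YOLOV3Head", num_classes=80)),
)
# the overrides made in fruit.py
child = dict(
    optimizer=dict(type="SGD", lr=0.001),
    lr_config=None,
    model=dict(bbox_head=dict(num_classes=1)),
)

cfg = merge_config(base, child)
print(cfg["optimizer"])  # {'type': 'SGD', 'lr': 0.001, 'momentum': 0.9, 'weight_decay': 0.0005}
print(cfg["lr_config"])  # None
print(cfg["model"])      # {'bbox_head': {'type': 'YOLOV3Head', 'num_classes': 1}}
```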

```python
# load the merged config and print it to check that the changes took effect
from mmcv import Config

config = Config.fromfile('fruit.py')
print(config.pretty_text)
```
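With `lr_config = None`, the printed config shows no schedule, and the learning rate stays at 0.001 for the whole run. For comparison, the commented-out `step` policy with linear warmup would vary it over time. The sketch below follows my understanding of mmcv 1.x's rules: the step policy multiplies by `gamma` (assumed default 0.1) once per milestone in `step`, using the 0-based epoch index, and linear warmup scales that value over the first `warmup_iters` iterations. `step_lr` and `lr_at` are hypothetical helper names.

```python
# Sketch: step LR policy with linear warmup (hypothetical helpers, modelled on mmcv 1.x).


def step_lr(base_lr, epoch, steps, gamma=0.1):
    """Step policy: multiply by gamma once per milestone already reached (0-based epochs)."""
    passed = sum(1 for s in steps if epoch >= s)
    return base_lr * gamma ** passed


def lr_at(base_lr, epoch, global_iter, steps, warmup_iters, warmup_ratio, gamma=0.1):
    """Learning rate at a given epoch and global iteration, including linear warmup."""
    regular = step_lr(base_lr, epoch, steps, gamma)
    if global_iter < warmup_iters:
        # linear warmup: ramps from regular * warmup_ratio up to regular
        k = (1 - global_iter / warmup_iters) * (1 - warmup_ratio)
        return regular * (1 - k)
    return regular


# values from the commented-out lr_config; the (epoch, iteration) pairs are arbitrary
for epoch, it in [(0, 0), (0, 2000), (10, 5000), (24, 20000), (28, 25000)]:
    lr = lr_at(0.001, epoch, it, steps=[24, 28], warmup_iters=4000, warmup_ratio=0.0001)
    print(f"epoch {epoch:2d}, iter {it:5d}: lr = {lr:.2e}")
```

Under these assumptions the schedule starts near zero, reaches 0.001 after 4000 iterations, and drops 10x at epoch indices 24 and 28.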

3). Train the model

```shell
# train model
mim train mmdet fruit.py
```

4). Validate the model you trained

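```python
# test single picture
from mmdet.apis import init_detector, inference_detector, show_result_pyplot

config_file = "fruit.py"
# checkpoint written by `mim train` (latest.pth points to the newest epoch)
checkpoint_file = "work_dirs/fruit/latest.pth"
# note: this image comes from the training set
img = 'fruit/train/lemon_bg_0_aug_0.jpg'

model = init_detector(config_file, checkpoint_file)
result = inference_detector(model, img)
show_result_pyplot(model, img, result)
```

After 30 epochs of training the loss was still above 71, for reasons I did not work out, yet the results looked acceptable when tested. (Note that we did not split the data here, so the test image comes from the training set.)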
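A single loss value says little on its own; the per-epoch trend is more telling. Assuming mmcv 1.x's `TextLoggerHook`, which besides the text log writes a `<timestamp>.log.json` file in `work_dirs/fruit/` with one JSON object per line, a minimal sketch can average the training `loss` of each epoch. The field names are assumptions about that format, `mean_loss_per_epoch` is a hypothetical name, and the sample lines and loss numbers are made up.

```python
# Sketch: average the training loss per epoch from an mmcv 1.x JSON log (assumed format).
import json
from collections import defaultdict


def mean_loss_per_epoch(lines):
    """Average the "loss" field of training records, grouped by epoch."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        # skip the metadata line and any validation records
        if record.get("mode") != "train" or "loss" not in record:
            continue
        sums[record["epoch"]] += record["loss"]
        counts[record["epoch"]] += 1
    return {epoch: sums[epoch] / counts[epoch] for epoch in sorted(sums)}


def mean_loss_from_file(path):
    with open(path, encoding="utf-8") as f:
        return mean_loss_per_epoch(f)


# made-up log lines in the assumed format
sample = [
    '{"env_info": "...", "seed": 0}',
    '{"mode": "train", "epoch": 1, "iter": 25, "lr": 0.001, "loss": 90.0}',
    '{"mode": "train", "epoch": 1, "iter": 50, "lr": 0.001, "loss": 80.0}',
    '{"mode": "train", "epoch": 2, "iter": 25, "lr": 0.001, "loss": 72.0}',
]
print(mean_loss_per_epoch(sample))  # {1: 85.0, 2: 72.0}
```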

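```shell
# batch testing: run the model on the test set and save the visualized results to work_dirs/fruit/imgs
mim test mmdet fruit.py --checkpoint work_dirs/fruit/latest.pth --show-dir work_dirs/fruit/imgs
```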
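Because `val` and `test` in `fruit.py` point at the same `train.json` as training, both the loss and the batch test measure the model on images it was trained on. A minimal sketch that splits one COCO-format file into train and val files by image, so all boxes of an image stay in the same split. `split_coco`, `split_coco_file`, the 20% fraction and the output file names are assumptions for illustration.

```python
# Sketch: split a COCO-format annotation file into train/val by image (hypothetical helpers).
import json
import random


def split_coco(coco, val_fraction=0.2, seed=0):
    """Split a COCO dict into (train, val) dicts, keeping each image's annotations together."""
    images = list(coco["images"])
    random.Random(seed).shuffle(images)
    n_val = round(len(images) * val_fraction)
    val_ids = {img["id"] for img in images[:n_val]}

    def subset(use_val):
        # copy other top-level keys such as "categories" unchanged
        out = {k: v for k, v in coco.items() if k not in ("images", "annotations")}
        out["images"] = [img for img in coco["images"] if (img["id"] in val_ids) == use_val]
        out["annotations"] = [a for a in coco["annotations"] if (a["image_id"] in val_ids) == use_val]
        return out

    return subset(False), subset(True)


def split_coco_file(src, train_out, val_out, val_fraction=0.2, seed=0):
    with open(src, encoding="utf-8") as f:
        coco = json.load(f)
    train, val = split_coco(coco, val_fraction, seed)
    for path, data in ((train_out, train), (val_out, val)):
        with open(path, "w", encoding="utf-8") as f:
            json.dump(data, f)


# toy dataset: 10 images with one lemon each
toy = {
    "categories": [{"id": 1, "name": "lemon"}],
    "images": [{"id": i, "file_name": f"img_{i}.jpg"} for i in range(10)],
    "annotations": [{"id": i, "image_id": i, "category_id": 1} for i in range(10)],
}
train, val = split_coco(toy, val_fraction=0.2)
print(len(train["images"]), len(val["images"]))  # 8 2
```

For the real data, something like `split_coco_file("fruit/annotations/train.json", "fruit/annotations/train_split.json", "fruit/annotations/val.json")` would write two new files; the training `ann_file` would then point at the train split and `val`/`test` at the val file. The images do not move, so `img_prefix` can stay `fruit/train`.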