NLP algorithms: text vectorization

This article is an overview of tokenization algorithms, covering word level, character level and subword level tokenization, with emphasis on BPE, Unigram LM, WordPiece and SentencePiece. It is meant to be readable by experts and beginners alike. If any concept or explanation is unclear, please contact me and I will be happy to clarify it.

What is tokenization?

Tokenization is one of the first steps in NLP: it is the task of splitting a sequence of text into units with semantic meaning. These units are called tokens, and the difficulty in tokenization lies in finding the ideal split, so that every token in the text has the correct meaning and no tokens are left out.

In most languages, text is composed of words divided by whitespace, where individual words have a semantic meaning. We will see later what happens with languages that use symbols, where a symbol has a much more complex meaning than a word. For now we can work with English. As an example:

- Raw text: I ate a burger, and it was good.

- Tokenized text: ['I', 'ate', 'a', 'burger', ',', 'and', 'it', 'was', 'good', '.']

‘Burger’ is a type of food, ‘and’ is a conjunction, ‘good’ is a positive adjective, and so on. By tokenizing this way, each element has a meaning, and by joining all the meanings of each token we can understand the meaning of the whole sentence. The punctuation marks get their own tokens as well, the comma to separate clauses and the period to signal the end of the sentence. Here is an alternate tokenization:

- Tokenized text: ['I ate', 'a', 'bur', 'ger', 'an', 'd it', 'wa', 's good', '.']

The multiword unit ‘I ate’ is easy: we can just add the meanings of ‘I’ and ‘ate’. The subword units ‘bur’ and ‘ger’ have no meaning separately, but by joining them we arrive at the familiar word and can understand what it means.

But what do we do with ‘d it’? What meaning does this have? As humans and speakers of English, we can deduce that ‘it’ is a pronoun and that the letter ‘d’ belongs to the previous word. But under this tokenization the previous token ‘an’ already has a meaning in English: the article ‘an’, which is very different from ‘and’. How do we deal with this? You might be thinking: stick with words, and give punctuation marks their own tokens. This is the most common way of tokenizing, called word level tokenization.

Word level tokenization

Word level tokenization consists only of splitting a sentence on whitespace and punctuation marks. Plenty of Python libraries do this, including NLTK, spaCy, Keras and Gensim, or you can write a custom regular expression.
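
As a minimal sketch of the custom regular expression option, the snippet below uses only Python's standard `re` module. The function name `word_tokenize` and the pattern are illustrative choices, not taken from any of the libraries named above.

```python
import re

def word_tokenize(text):
    """Split text into word tokens and single punctuation tokens."""
    # \w+ matches a run of letters, digits or underscores (a "word").
    # [^\w\s] matches one character that is neither a word character
    # nor whitespace, so each punctuation mark becomes its own token.
    return re.findall(r"\w+|[^\w\s]", text)

tokens = word_tokenize("I ate a burger, and it was good.")
print(tokens)
# ['I', 'ate', 'a', 'burger', ',', 'and', 'it', 'was', 'good', '.']
```

Whitespace is simply discarded here, which is fine for English but already hints at the problems discussed next.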

Splitting on whitespace can also break up an element that should be regarded as a single token, for example ‘New York’. This mostly happens with names, borrowed foreign phrases, and compounds that are sometimes written as multiple words.

What about contractions like ‘don’t’, or possessives like ‘John’s’? Is it better to obtain the token ‘don’t’, or ‘do’ and ‘n’t’? What if there is a typo in the text, and ‘burger’ turns into ‘birger’? We as humans can see that it was a typo, replace the word with ‘burger’ and continue, but a machine working from a fixed vocabulary cannot. Should the typo affect the complete NLP pipeline?
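
These failure cases are easy to reproduce. The sketch below assumes a simple regex tokenizer and a vocabulary built from a single training sentence; the helper names `word_tokenize` and `lookup` and the `<unk>` placeholder are hypothetical, chosen only for illustration.

```python
import re

def word_tokenize(text):
    # Words, or single punctuation characters.
    return re.findall(r"\w+|[^\w\s]", text)

# A multiword name is split into two unrelated tokens.
print(word_tokenize("New York"))  # ['New', 'York']

# A contraction is cut at the apostrophe.
print(word_tokenize("don't"))     # ['don', "'", 't']

# Vocabulary built from the "training" text only.
vocab = set(word_tokenize("I ate a burger, and it was good."))

def lookup(tokens, vocab, unk="<unk>"):
    # Any token that is not in the vocabulary is replaced by the unknown marker.
    return [tok if tok in vocab else unk for tok in tokens]

print(lookup(word_tokenize("I ate a birger."), vocab))
# ['I', 'ate', 'a', '<unk>', '.']
```

The typo ‘birger’ shares almost every character with ‘burger’, yet at word level it carries no information at all once it is mapped to the unknown marker.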

Another drawback of word level tokenization is the huge vocabulary it creates. Each token is saved into a token vocabulary, and if that vocabulary is built from all the unique words found in the input text, it grows very large, which causes memory and performance problems later on. A current state-of-the-art deep learning architecture, Transformer-XL, has a vocabulary size of 267,735. To solve the problem of the big vocabulary size, we can think of creating tokens from characters instead of words, which is called character level tokenization.

Character level tokenization

Popularized by Karpathy in 2015, character level tokenization splits a text into characters instead of words; for example, ‘smarter’ becomes s-m-a-r-t-e-r. The vocabulary size is dramatically reduced to the number of characters in the language: 26 letters for English, plus the special characters. Misspellings and rare words are handled better because they are broken down into characters, and these characters are already in the vocabulary.
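
A small sketch of the idea, using a made-up four-word corpus (the words and the helper name `char_tokenize` are assumptions for illustration):

```python
def char_tokenize(text):
    # Every character becomes its own token.
    return list(text)

print(char_tokenize("smarter"))
# ['s', 'm', 'a', 'r', 't', 'e', 'r']

# Toy corpus: four distinct words, but only six distinct characters.
corpus = ["smarter", "smartest", "start", "master"]
char_vocab = sorted({ch for word in corpus for ch in word})
print(char_vocab)  # ['a', 'e', 'm', 'r', 's', 't']

# A misspelling is still fully covered by the character vocabulary.
print(all(ch in char_vocab for ch in "smrater"))  # True
```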

Tokenizing sequences at the character level has shown some impressive results. Radford et al. (2017) from OpenAI showed that character level models can capture the semantic properties of text. Kalchbrenner et al. (2016) from DeepMind and Lee et al. (2017) both demonstrated translation at the character level. These results are particularly impressive because translation requires a semantic understanding of the underlying text.

Reducing the vocabulary size comes at the cost of sequence length. With each word split into all its characters, the tokenized sequence is much longer than the initial text: the word ‘smarter’ alone becomes 7 tokens. Additionally, the main goal of tokenization is not achieved, because characters, at least in English, have no semantic meaning. Only when joined together do they acquire a meaning. As a middle ground between word and character tokenization, subword tokenization produces subword units, smaller than words but bigger than single characters.
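
To make the tradeoff concrete, here is a quick comparison of sequence lengths for the example sentence used earlier. The regex word tokenizer is an illustrative assumption; spaces count as tokens at the character level.

```python
import re

sentence = "I ate a burger, and it was good."

word_tokens = re.findall(r"\w+|[^\w\s]", sentence)  # words and punctuation
char_tokens = list(sentence)                         # every character, spaces included

print(len(word_tokens))  # 10
print(len(char_tokens))  # 32
print(len("smarter"))    # 7
```

The same sentence is more than three times longer at the character level, and a model has to process every one of those positions.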

Subword level tokenization

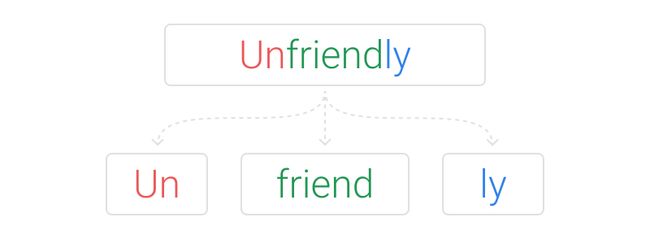

Example of subword tokenization

Subword level tokenization leaves the most common words intact and decomposes rare words into meaningful subword units. If ‘unfriendly’ were labelled as a rare word, it would be decomposed into ‘un-friend-ly’, which are all meaningful units: ‘un’ means the opposite, ‘friend’ is a noun, and ‘ly’ is a suffix that here forms an adjective. The challenge is how to make that segmentation: how do we get ‘un-friend-ly’ and not ‘unfr-ien-dly’?

As of 2020, the state-of-the-art deep learning architectures, based on Transformers, use subword level tokenization. BERT makes the following tokenization for this example:

- Raw text: I have a new GPU.

- Tokenized text: ['i', 'have', 'a', 'new', 'gp', '##u', '.']

Words present in the vocabulary are tokenized as the words themselves, but ‘GPU’ is not found in the vocabulary and is treated as a rare word. The tokenization algorithm segments it into ‘gp-u’. The ## before ‘u’ shows that this subword belongs to the same word as the previous subword. BPE, Unigram LM, WordPiece and SentencePiece are the most common subword tokenization algorithms. They are explained only briefly because this is an introductory post. If you are interested in deeper descriptions, let me know and I will write more detailed posts for each of them.
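
The segmentation of ‘GPU’ can be reproduced with a greedy longest-match-first lookup, which is the strategy BERT's tokenizer applies once a vocabulary is available. The sketch below assumes a tiny hypothetical vocabulary that contains only the pieces from the example; the function name `wordpiece_segment` is illustrative, and a real BERT vocabulary has tens of thousands of entries.

```python
import re

def wordpiece_segment(word, vocab, unk="[UNK]"):
    """Greedy longest-match-first split of one word into vocabulary pieces."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a continuation piece
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits: the whole word is unknown
        pieces.append(match)
        start = end
    return pieces

# Hypothetical toy vocabulary, just large enough for the example.
toy_vocab = {"i", "have", "a", "new", "gp", "##u", "."}

words = re.findall(r"\w+|[^\w\s]", "I have a new GPU.".lower())
result = [piece for w in words for piece in wordpiece_segment(w, toy_vocab)]
print(result)
# ['i', 'have', 'a', 'new', 'gp', '##u', '.']
```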

BPE

Byte Pair Encoding (BPE) was introduced for subword tokenization by Sennrich et al. in 2015. It iteratively merges the most frequently occurring pair of characters or character sequences. This is roughly how the algorithm works:

- Get a large enough corpus.

- Define a desired subword vocabulary size.

- Split each word into a sequence of characters and add a special token marking the beginning or the end of the word.

- Count the pairs of adjacent sequences in the text and their frequencies. For example, ('t', 'h') has frequency X and ('h', 'e') has frequency Y.

- Generate a new subword from the pair that occurs most frequently. For example, if ('t', 'h') has the highest frequency in the set of pairs, the new subword unit would become 'th'.

- Repeat from step 4 until reaching the subword vocabulary size (defined in step 2) or until the highest remaining pair frequency is 1. Following the example, ('t', 'h') would be replaced by 'th' in the corpus, the pairs counted again, the most frequent pair obtained again, and merged again.
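
The steps above can be sketched in a few lines of Python. This is a simplified illustration, not a reference implementation: the word counts are a toy corpus, `</w>` is assumed as the end-of-word marker, the number of merges stands in for the target vocabulary size, and ties are broken in favour of the pair seen first.

```python
from collections import Counter

def get_pair_counts(word_freqs):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in word_freqs.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs

def merge_pair(pair, word_freqs):
    """Replace every occurrence of the pair with the merged symbol."""
    merged = {}
    for symbols, freq in word_freqs.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(word_counts, num_merges):
    # Step 3: split words into characters and append the end-of-word marker.
    word_freqs = {tuple(w) + ("</w>",): c for w, c in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(word_freqs)          # step 4
        if not pairs:
            break
        best = max(pairs, key=pairs.get)             # first pair wins ties
        if pairs[best] < 2:                          # stop when the top frequency is 1
            break
        merges.append(best)                          # step 5
        word_freqs = merge_pair(best, word_freqs)    # step 6: update and repeat
    return merges

toy_counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
print(learn_bpe(toy_counts, 4))
# [('e', 's'), ('es', 't'), ('est', '</w>'), ('l', 'o')]
```

The learned merge list, in order, is all that has to be stored to tokenize new text later.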

BPE is a greedy and deterministic algorithm and cannot provide multiple segmentations. That is, for a given text, the tokenized text is always the same. A more detailed explanation of how BPE works will follow in a later article, and you can also find it in many other articles.
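
The determinism is easy to see when tokenizing a new word: the learned merges are simply replayed in order. The sketch below assumes a hypothetical merge list and `</w>` as the end-of-word marker; `apply_bpe` is an illustrative name.

```python
def apply_bpe(word, merges):
    """Segment a word by replaying the merges in the order they were learned."""
    symbols = list(word) + ["</w>"]
    for left, right in merges:
        i = 0
        while i < len(symbols) - 1:
            if symbols[i] == left and symbols[i + 1] == right:
                symbols[i:i + 2] = [left + right]  # merge in place
            else:
                i += 1
    return symbols

# Hypothetical merge list, as it might come out of BPE training.
merges = [("e", "s"), ("es", "t"), ("est", "</w>"), ("l", "o")]

print(apply_bpe("lowest", merges))
# ['lo', 'w', 'est</w>']
```

Calling `apply_bpe` again with the same word and merge list always returns the same pieces; there is no second candidate segmentation to choose from.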

Unigram LM

Unigram language modelling (Kudo, 2018) is based on the assumption that all subword occurrences are independent, so the probability of a subword sequence is the product of the occurrence probabilities of its subwords. These are the steps of the algorithm:

- Get a large enough corpus.

- Define a desired subword vocabulary size.

- With the current vocabulary fixed, optimize the subword occurrence probabilities on the corpus (Kudo uses the EM algorithm).

- Compute the loss of each subword, that is, how much the likelihood of the corpus drops if that subword is removed from the vocabulary.

- Sort the subwords by loss and keep the top X% (for example, X = 80). To avoid out-of-vocabulary cases, it is recommended to always keep the single characters in the subword vocabulary.

- Repeat steps 3 to 5 until reaching the subword vocabulary size (defined in step 2) or until step 5 produces no changes.
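
The independence assumption behind these steps can be written compactly. As a sketch in the notation of Kudo (2018), let $\mathbf{x} = (x_1, \dots, x_M)$ be a subword sequence, $\mathcal{V}$ the vocabulary, and $S(X)$ the set of candidate segmentations of an input $X$:

```latex
P(\mathbf{x}) = \prod_{i=1}^{M} p(x_i),
\qquad \sum_{x \in \mathcal{V}} p(x) = 1,
\qquad \mathbf{x}^{*} = \operatorname*{arg\,max}_{\mathbf{x} \in S(X)} P(\mathbf{x})
```

The first expression is the product of subword probabilities, the second says those probabilities form a distribution over the vocabulary, and the third picks the most probable segmentation.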

Kudo argues that the unigram LM model is more flexible than BPE because it is based on a probabilistic LM and can output multiple segmentations with their probabilities. Instead of starting with a group of base symbols and learning merge rules, like BPE or WordPiece, it starts from a large vocabulary (for instance, all pretokenized words and the most common substrings) that it progressively reduces.
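
To illustrate what "multiple segmentations with their probabilities" means, the sketch below enumerates every segmentation of one word under a toy unigram model and ranks them by the product of their subword probabilities. The probability values are invented for illustration and are not normalized over a full vocabulary; a real implementation would also use dynamic programming rather than brute-force enumeration.

```python
import math

# Hypothetical subword probabilities (illustrative values only).
probs = {
    "un": 0.10, "friend": 0.05, "ly": 0.08,
    "friendly": 0.003, "unfriend": 0.002,
    "u": 0.02, "n": 0.03,
}

def segmentations(word, vocab):
    """Yield every split of `word` whose pieces are all in `vocab`."""
    if not word:
        yield []
        return
    for end in range(1, len(word) + 1):
        piece = word[:end]
        if piece in vocab:
            for rest in segmentations(word[end:], vocab):
                yield [piece] + rest

def score(pieces):
    # Independence assumption: multiply the subword probabilities.
    return math.prod(probs[p] for p in pieces)

ranked = sorted(segmentations("unfriendly", probs), key=score, reverse=True)
for pieces in ranked:
    print(pieces, score(pieces))

print(ranked[0])  # ['un', 'friend', 'ly']
```

With these toy numbers there are five valid segmentations, and ‘un-friend-ly’ comes out on top. Because every candidate has a probability, a sampler could also pick one of the lower-ranked segmentations, which BPE cannot do.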

WordPiece

WordPiece (Schuster and Nakajima, 2012) was initially developed for Japanese and Korean voice search, and is currently known for being used in BERT, although the code used to train BERT’s vocabulary has not been made public. It is similar to BPE in many ways, except that it forms a new subword based on likelihood rather than on the most frequent pair. These are the steps of the algorithm:

- Get a large enough corpus.

- Define a desired subword vocabulary size.

- Split each word into a sequence of characters.

- Initialize the vocabulary with all the characters in the text.

- Build a language model based on the vocabulary.

- Generate a new subword unit by combining two units from the current vocabulary, which increases the vocabulary size by one. Out of all the possible combinations, choose the one that increases the likelihood of the training data the most when added to the model.

- Repeat steps 5 and 6 until reaching the subword vocabulary size (defined in step 2) or until the likelihood increase falls below a certain threshold.
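
The steps above do not spell out how the likelihood gain is computed. One commonly described approximation, used here as an assumption and not confirmed to be what was used for BERT, scores a candidate pair as count(ab) / (count(a) × count(b)). The sketch below compares that score with BPE's plain frequency criterion on a toy corpus of already-split words.

```python
from collections import Counter

# Hypothetical corpus: each word split into its current units, with its count.
word_freqs = {
    ("h", "u", "g"): 10,
    ("p", "u", "g"): 5,
    ("p", "u", "n"): 12,
    ("b", "u", "n"): 4,
    ("h", "u", "g", "s"): 5,
}

unit_counts = Counter()
pair_counts = Counter()
for units, freq in word_freqs.items():
    for unit in units:
        unit_counts[unit] += freq
    for a, b in zip(units, units[1:]):
        pair_counts[(a, b)] += freq

def likelihood_score(pair):
    # Assumed approximation of the likelihood gain from merging the pair.
    a, b = pair
    return pair_counts[pair] / (unit_counts[a] * unit_counts[b])

most_frequent = max(pair_counts, key=pair_counts.get)
best_scoring = max(pair_counts, key=likelihood_score)

print(most_frequent)  # ('u', 'g')  what BPE would merge
print(best_scoring)   # ('g', 's')  what the likelihood-style score prefers
```

The pair ('u', 'g') is the most frequent, but 'u' appears in almost every word, so merging it says little. The pair ('g', 's') is rarer, yet 's' never appears without 'g', so the score favours it.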

SentencePiece

All the tokenization methods so far require some form of pretokenization, which is a problem because not all languages use spaces to separate words, and some languages are written with symbols. SentencePiece avoids this by working directly on raw text and treating whitespace as an ordinary symbol, so it needs no language-specific pretokenizer. The open source software is available on GitHub. By contrast, XLM relies on specific pretokenizers for Chinese, Japanese and Thai.

SentencePiece is not a new segmentation algorithm in itself: it implements both BPE and the unigram LM. With the unigram model it does not have to commit to a single greedy encoding. Ambiguity in how characters are grouped is treated as a source of regularization, and different segmentations of the same text can be sampled during training. This can make training slower, and it reportedly discouraged Google from using it in BERT, which opted for WordPiece instead.
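
One way SentencePiece sidesteps the pretokenization issue is by treating whitespace as an ordinary symbol, written as ‘▁’ (U+2581), so that the original text can be restored exactly from the pieces. The sketch below imitates only that convention in plain Python; it does not call the SentencePiece library, the helper names are hypothetical, and the character-level split stands in for the longer pieces a trained model would produce.

```python
SPACE_MARK = "\u2581"  # the visible whitespace symbol

def to_pieces(text):
    # Escape spaces (plus one leading marker), then split into characters.
    return list(SPACE_MARK + text.replace(" ", SPACE_MARK))

def from_pieces(pieces):
    # Concatenate, turn markers back into spaces, drop the leading one.
    return "".join(pieces).replace(SPACE_MARK, " ")[1:]

english = "I ate a burger"
chinese = "我吃了一个汉堡"  # no spaces between words

for text in (english, chinese):
    pieces = to_pieces(text)
    assert from_pieces(pieces) == text  # lossless round trip
    print(pieces)

# Pieces as a trained model might emit them (hypothetical segmentation).
print(from_pieces(["\u2581I", "\u2581ate", "\u2581a", "\u2581bur", "ger"]))
# I ate a burger
```

Because spaces are just another symbol, the same procedure works for text with and without word boundaries, and no language-specific splitting rule is needed.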

Conclusion

Historically, tokenization methods have evolved from word level to character level, and lately to subword level. This is a quick overview of tokenization methods, and I hope the text is readable and understandable. Follow me on Twitter for more NLP information, or ask me any questions there :)

Translated from: https://towardsdatascience.com/overview-of-nlp-tokenization-algorithms-c41a7d5ec4f9
