PyTorch: implementing softmax regression from scratch

- Data
- Basic setup
- The softmax function
- Defining the model
- Defining the loss function
- Accuracy
- Training and prediction

Data

import torch
import torchvision
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
import time
import sys
import d21  # helper module used throughout this post (use_svg_display, load_data_fashion_mnist, sgd, ...)
import numpy as np

# Download Fashion-MNIST if it is not cached yet and convert every image to a tensor.
mnist_train = torchvision.datasets.FashionMNIST(root='~/Datasets/FashionMNIST', train=True, download=True, transform=transforms.ToTensor())
mnist_test = torchvision.datasets.FashionMNIST(root='~/Datasets/FashionMNIST', train=False, download=True, transform=transforms.ToTensor())

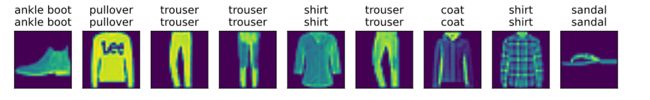

def get_fashion_mnist_labels(labels):
    # Map numeric class indices (0-9) to their text labels.
    text_labels = ['t-shirt', 'trouser', 'pullover', 'dress', 'coat', 'sandal', 'shirt', 'sneaker', 'bag', 'ankle boot']
    return [text_labels[int(i)] for i in labels]

def show_fashion_mnist(images, labels):
    d21.use_svg_display()
    # The _ here is a variable we ignore (do not use)
    _, figs = plt.subplots(1, len(images), figsize=(12, 12))
    for f, img, lbl in zip(figs, images, labels):
        f.imshow(img.view((28, 28)).numpy())
        f.set_title(lbl)
        f.axes.get_xaxis().set_visible(False)
        f.axes.get_yaxis().set_visible(False)
    plt.show()

# Show the first 10 training images together with their labels.
X, y = [], []
for i in range(10):
    X.append(mnist_train[i][0])
    y.append(mnist_train[i][1])
show_fashion_mnist(X, get_fashion_mnist_labels(y))

Basic setup

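batch_size = 256
train_iter, test_iter = d21.load_data_fashion_mnist(batch_size)

num_inputs = 784  # each 28 x 28 image is flattened into a vector of 784 pixels
num_outputs = 10  # one output per clothing class

# Draw the weights from a normal distribution (mean 0, std 0.01) and set the biases to zero.
W = torch.tensor(np.random.normal(0, 0.01, (num_inputs, num_outputs)), dtype=torch.float)
b = torch.zeros(num_outputs, dtype=torch.float)

# Ask autograd to track gradients for both parameters.
W.requires_grad_(requires_grad=True)
b.requires_grad_(requires_grad=True)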

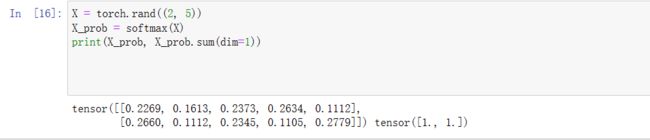

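The softmax function

def softmax(X):
    # Exponentiate every entry, then divide each row by the sum of that row.
    X_exp = X.exp()
    partition = X_exp.sum(dim=1, keepdim=True)
    return X_exp / partition  # broadcasting divides each row by its own sum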

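Example: softmax turns each row of the input into non-negative numbers that sum to 1, so the entries of a row can be read as the predicted probability of each class.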
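To make the row-wise behaviour concrete, here is a minimal sketch that applies the same `softmax` to a small hand-written matrix. The input values are made up for illustration only.

```python
import torch

def softmax(X):
    # Same definition as in the post: exponentiate, then normalise each row.
    X_exp = X.exp()
    partition = X_exp.sum(dim=1, keepdim=True)
    return X_exp / partition

# Two rows of arbitrary scores (illustrative values, not from the dataset).
X = torch.tensor([[1.0, 2.0, 3.0],
                  [0.0, 0.0, 0.0]])
X_prob = softmax(X)
row_sums = X_prob.sum(dim=1)

print(X_prob)    # every entry is non-negative
print(row_sums)  # every row sums to 1
```

The second row has three equal scores, so softmax assigns each class the same probability of 1/3. Larger scores in the first row receive larger probabilities, and the ordering of the scores is preserved.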

Defining the model

def net(X):
    # Flatten each image into a row vector, apply the linear map, then softmax.
    return softmax(torch.mm(X.view((-1, num_inputs)), W) + b)
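The shapes flowing through `net` can be checked with a fake batch. This is only a sketch: the random batch stands in for real Fashion-MNIST images, and `W` and `b` are re-created here (with `torch.randn` instead of NumPy) so that the snippet runs on its own.

```python
import torch

num_inputs, num_outputs = 784, 10

# Stand-ins for the post's parameters, freshly initialised for this sketch.
W = torch.randn(num_inputs, num_outputs) * 0.01
b = torch.zeros(num_outputs)

def softmax(X):
    X_exp = X.exp()
    return X_exp / X_exp.sum(dim=1, keepdim=True)

def net(X):
    return softmax(torch.mm(X.view((-1, num_inputs)), W) + b)

X = torch.rand(4, 1, 28, 28)     # fake batch: 4 grayscale 28 x 28 images
flat = X.view((-1, num_inputs))  # each image becomes one row of 784 pixels
y_hat = net(X)

print(flat.shape)   # torch.Size([4, 784])
print(y_hat.shape)  # torch.Size([4, 10])
```

Each row of `y_hat` holds the 10 class probabilities for one image, which is why the later code takes `argmax(dim=1)` to get a prediction.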

Defining the loss function

def cross_entropy(y_hat, y):
    # gather picks, for every sample, the predicted probability of its true class.
    return - torch.log(y_hat.gather(1, y.view(-1, 1)))
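The `gather` call is the least obvious part of `cross_entropy`, so here is a small worked sketch. The probabilities and labels are invented for illustration.

```python
import torch

def cross_entropy(y_hat, y):
    return -torch.log(y_hat.gather(1, y.view(-1, 1)))

# Predicted probabilities for 2 samples over 3 classes (illustrative values).
y_hat = torch.tensor([[0.1, 0.3, 0.6],
                      [0.3, 0.2, 0.5]])
y = torch.tensor([0, 2])  # true classes: class 0 for sample 0, class 2 for sample 1

# Row i of `picked` is y_hat[i, y[i]], i.e. 0.1 and 0.5 here.
picked = y_hat.gather(1, y.view(-1, 1))
loss = cross_entropy(y_hat, y)

print(picked)
print(loss)  # -log(0.1) and -log(0.5), one loss value per sample
```

The first sample gets the larger loss because the model assigned only 0.1 to its true class. The result has shape (2, 1), one loss per sample, which is why the training loop calls `.sum()` on it.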

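Accuracy

def accuracy(y_hat, y):
    # Fraction of samples whose highest-probability class equals the label.
    return (y_hat.argmax(dim=1) == y).float().mean().item()

def evaluate_accuracy(data_iter, net):
    # Accuracy of net over a whole data iterator, weighted by batch size.
    acc_sum, n = 0.0, 0
    for X, y in data_iter:
        acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
        n += y.shape[0]
    return acc_sum / n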
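A quick sketch of both functions on toy data. The predictions and labels are made up, and a plain list of batches plus an identity function stand in for the real data iterator and model.

```python
import torch

def accuracy(y_hat, y):
    return (y_hat.argmax(dim=1) == y).float().mean().item()

def evaluate_accuracy(data_iter, net):
    acc_sum, n = 0.0, 0
    for X, y in data_iter:
        acc_sum += (net(X).argmax(dim=1) == y).float().sum().item()
        n += y.shape[0]
    return acc_sum / n

y_hat = torch.tensor([[0.1, 0.3, 0.6],
                      [0.3, 0.2, 0.5]])
y = torch.tensor([0, 2])

pred = y_hat.argmax(dim=1)  # predicted classes: 2 and 2
acc = accuracy(y_hat, y)    # only the second prediction is correct

# Hypothetical stand-ins: the "model" returns its input unchanged, and the
# "iterator" is a list with one batch of 2 samples and one batch of 1 sample.
identity_net = lambda X: X
batches = [(y_hat, y), (y_hat[:1], y[:1])]
total_acc = evaluate_accuracy(batches, identity_net)

print(acc)        # 0.5
print(total_acc)  # 1 correct out of 3 samples
```

Because `evaluate_accuracy` sums correct predictions and divides by the total sample count, a smaller final batch does not distort the result the way averaging per-batch accuracies would.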

Training and prediction

Training

num_epochs, lr = 5, 0.1

# This function is saved in the d2lzh package for later use
def train_ch3(net, train_iter, test_iter, loss, num_epochs, batch_size, params=None, lr=None, optimizer=None):
    for epoch in range(num_epochs):
        train_l_sum, train_acc_sum, n = 0.0, 0.0, 0
        for X, y in train_iter:
            y_hat = net(X)
            l = loss(y_hat, y).sum()

            # Reset the gradients left over from the previous step
            if optimizer is not None:
                optimizer.zero_grad()
            elif params is not None and params[0].grad is not None:
                for param in params:
                    param.grad.data.zero_()

            l.backward()
            if optimizer is None:
                d21.sgd(params, lr, batch_size)
            else:
                optimizer.step()  # used in the section "Concise implementation of softmax regression"

            train_l_sum += l.item()
            train_acc_sum += (y_hat.argmax(dim=1) == y).sum().item()
            n += y.shape[0]
        test_acc = evaluate_accuracy(test_iter, net)
        print('epoch %d, loss %.4f, train acc %.3f, test acc %.3f' % (epoch + 1, train_l_sum / n, train_acc_sum / n, test_acc))

train_ch3(net, train_iter, test_iter, cross_entropy, num_epochs, batch_size, [W, b], lr)
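The post does not show the body of `d21.sgd`. The sketch below assumes it performs a plain minibatch SGD step that divides the gradient by `batch_size`, which would match the training loop summing (rather than averaging) the per-sample losses. The name `sgd_sketch` and the toy loss are hypothetical and only illustrate that assumed update rule.

```python
import torch

def sgd_sketch(params, lr, batch_size):
    # Assumed update rule: param <- param - lr * grad / batch_size.
    # The gradient comes from a loss summed over the batch, so dividing by
    # batch_size turns it into the gradient of the mean loss.
    with torch.no_grad():
        for param in params:
            param -= lr * param.grad / batch_size

# Toy parameter and a toy summed loss (illustrative, not the softmax model).
w = torch.tensor([1.0, 2.0], requires_grad=True)
batch_size = 2
loss = (w ** 2).sum()
loss.backward()  # w.grad is now 2 * w = [2., 4.]

sgd_sketch([w], lr=0.1, batch_size=batch_size)
print(w)  # [1 - 0.1 * 2 / 2, 2 - 0.1 * 4 / 2] = [0.9, 1.8]

# Gradients accumulate across backward() calls, so clear them before the next step.
w.grad.zero_()
```

This also shows why the training loop zeroes `param.grad` on every iteration: without it, each `backward()` call would add to the gradient from the previous batch.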

Prediction

X, y = next(iter(test_iter))
true_labels = d21.get_fashion_mnist_labels(y.numpy())
pred_labels = d21.get_fashion_mnist_labels(net(X).argmax(dim=1).numpy())
# Title each image with the true label on the first line and the prediction on the second.
titles = [true + '\n' + pred for true, pred in zip(true_labels, pred_labels)]
show_fashion_mnist(X[0:9], titles[0:9])