【Python机器学习】决策树ID3算法结果可视化附源代码 对UCI数据集Caesarian Section进行分类

决策树

- 实现所用到的库

- 实现

-

- 经验熵计算

-

- 经验熵计算公式

- 条件熵

- 信息增益

- ID3

-

- 选择信息增益最大的属性

- 过程

- 拟合

- 预测

- 评估

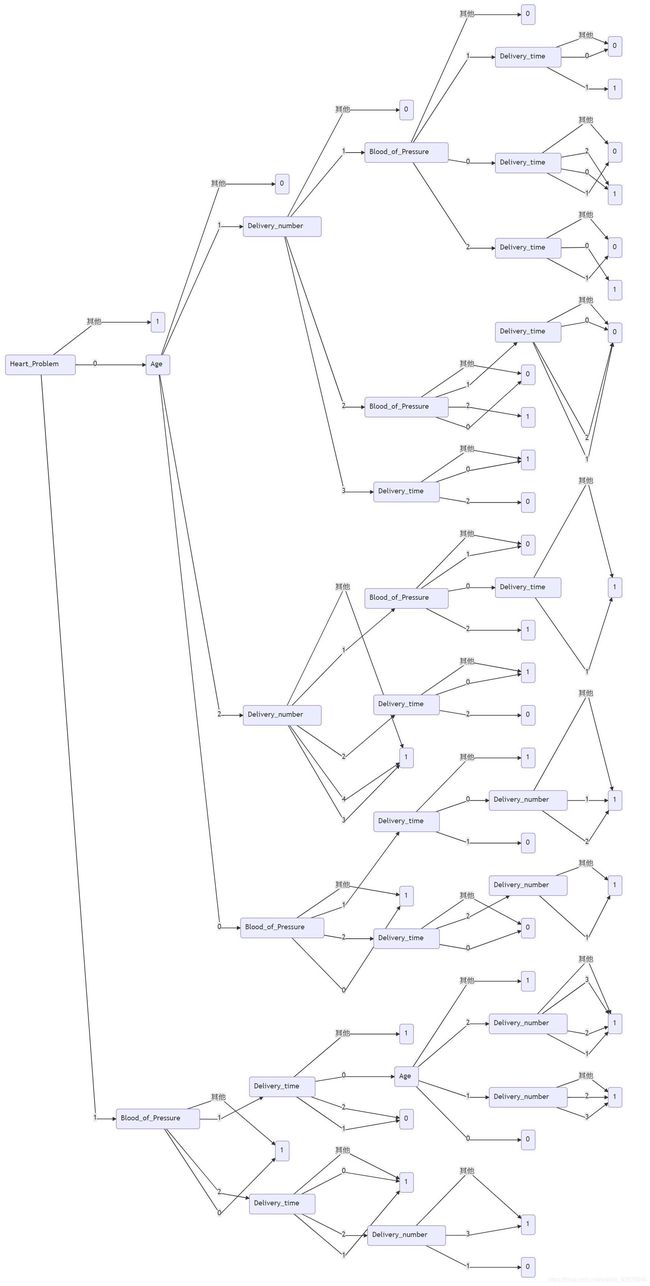

- 决策树可视化

-

- 决策树保存

- 决策树读取

- 效果图

- 总代码

-

- 如何获得每一步计算结果

- 实验结果(决策树)

-

- debug模式

决策树(Decision Tree)是在已知各种情况发生概率的基础上,通过构成决策树来求取净现值的期望值大于等于零的概率,评价项目风险,判断其可行性的决策分析方法,是直观运用概率分析的一种图解法。由于这种决策分支画成图形很像一棵树的枝干,故称决策树。 来源:决策树_百度百科

数据集使用UCI数据集 Caesarian Section Classification Dataset Data Set

【与数据集相关的详细信息和下载地址】

- 本代码实现了决策树ID3算法,并使用决策树ID3算法进行预测。

- 决策树算法写到类中,实现代码复用,并在使用过程中降低复杂度。

- 将logging日志等级调整为DEBUG,可以输出决策树每一步的详细过程。

- 通过使用mermaid的文本绘图格式对决策树进行了可视化。

实现所用到的库

- Python 3

- Pandas

- sklearn(仅用于切分数据集)

- numpy

实现

经验熵计算

熵中的概率由数据估计(特别是最大似然估计)得到时,所对应的熵称为经验熵

经验熵计算公式

H = − ∑ i = 1 n p ( x i ) l o g 2 ( p ( x i ) ) H = -\sum^n_{i=1}p(x_i)log_2(p(x_i)) H=−i=1∑np(xi)log2(p(xi))

def empirical_entropy(self, dataset=None):

"""

求经验熵

$$H = -\sum^n_{i=1}p(x_i)log_2(p(x_i))$$

:return: Float 经验熵

"""

if dataset is None:

dataset = self.DataSet

columns_count = dataset.iloc[:, -1].value_counts()

entropy = 0

total_count = columns_count.sum()

for count in columns_count:

p = count / total_count

entropy -= p * np.log2(p)

return entropy

条件熵

条件熵 H(Y∣X)H(Y|X)H(Y∣X)表示在已知随机变量X的条件下随机变量Y的不确定性。

定义X给定条件下Y的条件概率分布的熵对X的数学期望:

H ( Y ∣ X ) = ∑ i = 1 n p ( i ) H ( Y ∣ X = x i ) H(Y|X) = \sum_{i=1}^np(i)H(Y|X=x_i) H(Y∣X)=i=1∑np(i)H(Y∣X=xi)

信息增益

信息增益表示得知特征X的信息而使得类Y的信息不确定性减少的程度。

即:选择该特征对分类的帮助程度。

在分类问题困难时,也就是说在训练数据集经验熵大的时候,信息增益值会偏大,反之信息增益值会偏小。

使用信息增益比可以对这个问题进行校正,这是特征选择的另一个标准。

特征A对训练数据集D的信息增益g(D,A),定义为集合D的经验熵H(D)与特征A给定条件下D的经验条件熵H(D|A)之差:

g ( D , A ) = H ( D ) − H ( D ∣ A ) g(D,A) = H(D)-H(D|A) g(D,A)=H(D)−H(D∣A)

ID3

简单来说,就是不断选取能够对分类提供最大效果的属性,然后根据属性的各个值选取接下来的最佳属性

选择信息增益最大的属性

因为条件经验熵越小(表示该分类的结果比较统一,即信息增益越大)表示该属性对于分类重要性越大。

其中extract_dataset 相当于在符合指定条件下数据集,用于接下来计算条件经验熵,并获得信息增益。

def extract_dataset(self, dataset: pd.DataFrame, column, label):

"""

根据column和label筛选出指定的数据集

:return: pd.DataFrame 筛选后的数据集

"""

if type(column) == int:

split_dataset = dataset[dataset.iloc[:, column] == label].drop(dataset.columns[column], axis=1)

else:

split_dataset = dataset[dataset.loc[:, column] == label].drop(column, axis=1)

return split_dataset

def best_empirical_entropy(self, dataset: pd.DataFrame = None):

"""

选取数据集中的columns中,最好的column(经验熵最大)

:param dataset: 带选取的数据集

:return: 返回column

"""

if dataset is None:

dataset = self.DataSet

columns = dataset.columns[:-1]

total_count = dataset.shape[0]

empirical_entropy = self.empirical_entropy(dataset)

logging.debug(f"now dataset shape is {dataset.shape}, column is {dataset.columns.tolist()}")

logging.debug(f"empirical_entropy is {empirical_entropy}")

informationGain_max = -1

best_column = None

for column in columns:

entropy_tmp = 0

data_counts = dataset.loc[:, column].value_counts()

data_labels = data_counts.index

logging.debug(f"now is {column}")

for label in data_labels:

split_dataset = self.extract_dataset(dataset, column, label)

count = split_dataset.shape[0]

p = count / total_count

entropy_tmp += p * self.empirical_entropy(split_dataset)

logging.debug(f"now label is {label}, chooseData shape is {split_dataset.shape}, "

f"Ans count: {split_dataset.iloc[:, -1].value_counts().tolist()}, "

f"entropy: {self.empirical_entropy(split_dataset)}")

informationGain = empirical_entropy - entropy_tmp

logging.debug(f"entropy: {entropy_tmp}, {column} informationGain:{informationGain}")

if informationGain > informationGain_max:

best_column = column

informationGain_max = informationGain

logging.debug(f"Choose {best_column}:{informationGain_max}")

return best_column

过程

- 选取信息增益最大的属性。

- 如果各个属性的最大的信息增益不够大,即对分类帮助有限,此时直接设定为结果分类中,数量最多的一个值。

- 如果没有可以选取的属性(因为属性在之前已经选择完了),此时同样选取结果数量最多的一个值。

造成没有可以选取的原因:因为可能同一个属性,可能有不同结果。

- 选取当前属性的各个值,然后分别执行1;

- 当递归完毕,即每个属性的值最终都有一个值,即为决策树,如果在测试过程出现训练阶段没有出现的结果,可以为每一个属性单独设置一个

其他值用于表示决策树中没有该属性的值时决策树的输出结果,这个值可以设置为当前属性数量最多的结果值。

def id3(self, dataset: pd.DataFrame = None):

'''

实现决策树的ID3算法

:param dataset: 输入的数据集

:return: dict 决策树节点

'''

if dataset is None:

dataset = self.DataSet

next_tree = {}

result_count = dataset.iloc[:, -1].value_counts()

result_max = result_count.idxmax()

next_tree["其他"] = result_max

if result_count.shape[0] == 1 or dataset.shape[1] < 2 or self.empirical_entropy(dataset) < self._threshold:

self._leafCount += 1

logging.debug(f"select decision {result_max}, result_type:{result_count.tolist()}, dataset column:{dataset.shape}, lower than threshold:{self.empirical_entropy(dataset) < self._threshold}")

tree = {"next": next_tree}

else:

best_column = self.best_empirical_entropy(dataset)

value_counts = dataset[best_column].value_counts()

labels = value_counts.index

for label in labels:

logging.debug(f"now choose_column:{best_column}, label: {label}")

split_dataset = self.extract_dataset(dataset, best_column, label)

next_decision = self.id3(split_dataset)

next_tree[label] = next_decision

tree = {"column": best_column, "next": next_tree}

return tree

拟合

def fit(self, x: pd.DataFrame, y=None, algorithm: str = "id3", threshold=0.1):

'''

拟合函数,输入数据集进行拟合,其中如果y没有输入,则x的最后一列应包含分类结果

:param x: pd.DataFrame数据集的属性(当y为None时,为整个数据集-包含结果)

:param y: list like,shape=(-1,)数据集的结果

:param algorithm: 选择算法(目前仅有ID3)

:param threshold: 选择信息增益的阈值

:return: 决策树的根节点

'''

self.check_dataset(x, dimension=2)

self.check_dataset(y, dimension=1)

self._threshold = threshold

dataset = x

if y is not None:

dataset.insert(dataset.shape[1], 'DECISION_tempADD', y)

self.decision_tree = eval("self." + algorithm)(dataset)

logging.info(f"decision_tree leaf:{self._leafCount}")

return self.decision_tree

预测

def predict(self, x: pd.DataFrame):

'''

预测数据

:param x:pd.DataFrame 输入的数据集

:return: 分类结果

'''

self.y_predict = x.apply(self._predict_line, axis=1)

return self.y_predict

def _predict_line(self, line):

"""

私有函数,用于在predict中,对每一行数据进行预测

:param line: 输入的数据集的某一行数据

:return: 该一行的分类结果

"""

tree = self.decision_tree

while True:

try:

if len(tree["next"]) == 1:

return tree["next"]["其他"]

else:

value = line[tree["column"]]

tree = tree["next"][value]

except:

return tree["next"]["其他"]

评估

评估结果的准确度,精确度,召回率。

- score评估函数:仅适用于二分类,对于多分类该算法不适用(但是决策树代码可以predict预测)

- 同时score判断正例需要结果为1,反例结果为0。

def score(self, y):

'''

评估函数,用于评估结果

:param y: 输入实际的结果

:return: None

'''

if self.y_predict is None:

raise Exception("before score should predict first!")

y_acutalTrue = y[(y == 1) & (self.y_predict == 1)].shape[0]

y_acutalFalse = y[(y == 0) & (self.y_predict == 0)].shape[0]

y_predictTrue = self.y_predict[self.y_predict == 1].shape[0]

y_true = y[y == 1].shape[0]

y_total = y.shape[0]

logging.debug(f"y_acutalTrue:{y_acutalTrue}, y_acutalFalse:{y_acutalFalse}, y_predictTrue:{y_predictTrue}, "

f"y_true:{y_true}, y_total:{y_total}")

Accuracy = (y_acutalTrue + y_acutalFalse) / y_total

Precision = y_acutalTrue / y_predictTrue

Recall = y_acutalTrue / y_true

print("Accuracy: ", Accuracy,

"Precision: ", Precision,

"Recall: ", Recall

)

决策树可视化

利用mermaid文本绘图,将预测的值做了合并,同一属性的不同值但是分类结果相同,则可视化时都指向同一个输出节点。

- 可视化函数提供了两种输出格式

- markdown格式

- html格式(推荐,使用浏览器即可查看决策树)

决策树保存

def save(self, savePath: str):

open(savePath, "w").write(str(decisionTree.decision_tree))

logging.info(f"决策树已保存,位置:{savePath}")

决策树读取

def load(self, savePath: str):

tree = eval(open(savePath, "r").read())

if type(tree) == dict:

self.decision_tree = tree

else:

raise Exception("Load Faild!")

效果图

def visualOutput(self, savePath="", outputFormat="html", direction="TD"):

'''

将决策树可视化输出,格式为‘md'或’html'

:param outputFormat: 设置输出格式

:return: 对应输出格式的文本

'''

if self.decision_tree is None:

raise Exception("should fit first!")

text = ""

if outputFormat == "md":

text = self._format_md(direction=direction)

elif outputFormat == "html":

text = self._format_html(direction=direction)

if savePath != "":

open(savePath, "w", encoding="utf-8").write(text)

return text

def _format_html(self, direction="TD"):

'''

决策树的可视化为html格式

:return: html代码

'''

html_start = '' \

'' \

'' \

'' \

'DecisionTree ' \

'{}'

html_end = ''

mermaid = self._format_md(end=";", direction=direction)

mermaid = mermaid.replace("```mermaid\n", "").replace("```", "")

html = html_start.replace("{}", mermaid)+html_end

return html

def _format_md(self, direction="TD", end="\n"):

'''

决策树的可视化为md代码(mermaid代码)

:param end: 设置每行结尾符号

:param direction: 设置方向

:return:

'''

md = "```mermaid\n"

md += f"graph {direction}{end}"

total_node = 1

current_nodeID = 0

if len(self.decision_tree) != 2:

code_line = f"{current_nodeID}(start)-->{self.decision_tree['next']['其他']}"

return md + code_line + "\n```"

queue = [self.decision_tree]

while len(queue) > 0:

node = queue.pop(0)

ans_node = []

for key in node["next"].keys():

if type(node['next'][key]) == dict:

if len(node['next'][key]) == 1:

decision = node['next'][key]['next']['其他']

if decision not in ans_node:

ans_node.append(decision)

nodeID_ans = ans_node.index(decision)

code_line = f"{current_nodeID}({node['column']})--{key}-->" \

f"L{current_nodeID}_{nodeID_ans}({decision})"

else:

code_line = f"{current_nodeID}({node['column']})--{key}-->{total_node}"

queue.append(node["next"][key])

total_node += 1

else:

decision = node['next'][key]

if decision not in ans_node:

ans_node.append(decision)

nodeID_ans = ans_node.index(decision)

code_line = f"{current_nodeID}({node['column']})--{key}-->" \

f"L{current_nodeID}_{nodeID_ans}({decision})"

# code_line_b = str(code_line.encode("utf-8")).lstrip("b'").rstrip("'")

md += code_line+end

current_nodeID += 1

return md + "```"

总代码

如何获得每一步计算结果

不想要那么多过程,可以将开头的logging.basicConfig中的level设置为INFO即可。

即:

logging.basicConfig(level=logging.DEBUG, format="%(asctime)s-[%(name)s]\t[%(levelname)s]\t[%(funcName)s]: %(message)s")

修改为:

logging.basicConfig(level=logging.INFO, format="%(asctime)s-[%(name)s]\t[%(levelname)s]\t[%(funcName)s]: %(message)s")

如果需要导出日志:

参数filename为输出日志位置。

参数filemode为输出日志写入模式。

logging.basicConfig(level=logging.DEBUG, filename='DecisionTree.log', filemode='w', format="%(asctime)s-[%(name)s]\t[%(levelname)s]\t[%(funcName)s]: %(message)s")

运行代码可能存在问题

- 数据集不对:Caesarian Section Classification Dataset下载后为arff格式,该代码使用的数据集格式为csv,需要将arff中的数据提取出来,可以使用记事本,将arff的数据部分保存为csv格式即可。

- 此外本代码提供一个demo,无需外部数据集亦可运行。

- score评估函数:仅适用于二分类,对于多分类该算法不适用(决策树可以predict),同时score判断正例需要结果为1,反例结果为0。

import pandas as pd

import numpy as np

import logging

from sklearn.model_selection import train_test_split

logging.basicConfig(level=logging.DEBUG,

format="%(asctime)s-[%(name)s]\t[%(levelname)s]\t[%(funcName)s]: %(message)s")

"""

application: Decision_tree-ID3

writer: Flysky

Date: 2020年10月14日

"""

class DecisionTree:

def __init__(self):

self.DataSet = None

self._threshold = 0.1

self._leafCount = 0

self.decision_tree = None

self.y_predict = None

def check_dataset(self, dataset: pd.DataFrame, dimension=2):

if len(dataset.shape) != dimension:

raise ValueError(f"data dimension not {dimension} but {len(dataset.shape)}")

def empirical_entropy(self, dataset=None):

"""

求经验熵

$$H = -\sum^n_{i=1}p(x_i)log_2(p(x_i))$$

:return: Float 经验熵

"""

if dataset is None:

dataset = self.DataSet

columns_count = dataset.iloc[:, -1].value_counts()

entropy = 0

total_count = columns_count.sum()

for count in columns_count:

p = count / total_count

entropy -= p * np.log2(p)

return entropy

def extract_dataset(self, dataset: pd.DataFrame, column, label):

"""

根据column和label筛选出指定的数据集

:return: pd.DataFrame 筛选后的数据集

"""

if type(column) == int:

split_dataset = dataset[dataset.iloc[:, column] == label].drop(dataset.columns[column], axis=1)

else:

split_dataset = dataset[dataset.loc[:, column] == label].drop(column, axis=1)

return split_dataset

def best_empirical_entropy(self, dataset: pd.DataFrame = None):

"""

选取数据集中的columns中,最好的column(经验熵最大)

:param dataset: 带选取的数据集

:return: 返回column

"""

if dataset is None:

dataset = self.DataSet

columns = dataset.columns[:-1]

total_count = dataset.shape[0]

empirical_entropy = self.empirical_entropy(dataset)

logging.debug(f"now dataset shape is {dataset.shape}, column is {dataset.columns.tolist()}")

logging.debug(f"empirical_entropy is {empirical_entropy}")

informationGain_max = -1

best_column = None

for column in columns:

entropy_tmp = 0

data_counts = dataset.loc[:, column].value_counts()

data_labels = data_counts.index

logging.debug(f"now is {column}")

for label in data_labels:

split_dataset = self.extract_dataset(dataset, column, label)

count = split_dataset.shape[0]

p = count / total_count

entropy_tmp += p * self.empirical_entropy(split_dataset)

logging.debug(f"now label is {label}, chooseData shape is {split_dataset.shape}, "

f"Ans count: {split_dataset.iloc[:, -1].value_counts().tolist()}, "

f"entropy: {self.empirical_entropy(split_dataset)}")

informationGain = empirical_entropy - entropy_tmp

logging.debug(f"entropy: {entropy_tmp}, {column} informationGain:{informationGain}")

if informationGain > informationGain_max:

best_column = column

informationGain_max = informationGain

logging.debug(f"Choose {best_column}:{informationGain_max}")

return best_column

def id3(self, dataset: pd.DataFrame = None):

'''

实现决策树的ID3算法

:param dataset: 输入的数据集

:return: dict 决策树节点

'''

if dataset is None:

dataset = self.DataSet

next_tree = {}

result_count = dataset.iloc[:, -1].value_counts()

result_max = result_count.idxmax()

next_tree["其他"] = result_max

if result_count.shape[0] == 1 or dataset.shape[1] < 2 or self.empirical_entropy(dataset) < self._threshold:

self._leafCount += 1

logging.debug(

f"select decision {result_max}, result_type:{result_count.tolist()}, dataset column:{dataset.shape}, lower than threshold:{self.empirical_entropy(dataset) < self._threshold}")

tree = {"next": next_tree}

else:

best_column = self.best_empirical_entropy(dataset)

value_counts = dataset[best_column].value_counts()

labels = value_counts.index

for label in labels:

logging.debug(f"now choose_column:{best_column}, label: {label}")

split_dataset = self.extract_dataset(dataset, best_column, label)

next_decision = self.id3(split_dataset)

next_tree[label] = next_decision

tree = {"column": best_column, "next": next_tree}

return tree

def fit(self, x: pd.DataFrame, y=None, algorithm: str = "id3", threshold=0.1):

'''

拟合函数,输入数据集进行拟合,其中如果y没有输入,则x的最后一列应包含分类结果

:param x: pd.DataFrame数据集的属性(当y为None时,为整个数据集-包含结果)

:param y: list like,shape=(-1,)数据集的结果

:param algorithm: 选择算法(目前仅有ID3)

:param threshold: 选择信息增益的阈值

:return: 决策树的根节点

'''

self.check_dataset(x, dimension=2)

self.check_dataset(y, dimension=1)

self._threshold = threshold

dataset = x

if y is not None:

dataset.insert(dataset.shape[1], 'DECISION_tempADD', y)

self.decision_tree = eval("self." + algorithm)(dataset)

logging.info(f"decision_tree leaf:{self._leafCount}")

return self.decision_tree

def leaf_count(self):

'''

统计叶子节点个数(此处的叶子节点即能确定分类的属性值所对应的分类结果值

:return: 叶子节点个数

'''

return self._leafCount

def predict(self, x: pd.DataFrame):

'''

预测数据

:param x:pd.DataFrame 输入的数据集

:return: 分类结果

'''

self.y_predict = x.apply(self._predict_line, axis=1)

return self.y_predict

def _predict_line(self, line):

"""

私有函数,用于在predict中,对每一行数据进行预测

:param line: 输入的数据集的某一行数据

:return: 该一行的分类结果

"""

tree = self.decision_tree

while True:

try:

if len(tree["next"]) == 1:

return tree["next"]["其他"]

else:

value = line[tree["column"]]

tree = tree["next"][value]

except:

return tree["next"]["其他"]

def score(self, y):

'''

评估函数,用于评估结果

:param y: 输入实际的结果

:return: None

'''

if self.y_predict is None:

raise Exception("before score should predict first!")

y_acutalTrue = y[(y == 1) & (self.y_predict == 1)].shape[0]

y_acutalFalse = y[(y == 0) & (self.y_predict == 0)].shape[0]

y_predictTrue = self.y_predict[self.y_predict == 1].shape[0]

y_true = y[y == 1].shape[0]

y_total = y.shape[0]

logging.debug(f"y_acutalTrue:{y_acutalTrue}, y_acutalFalse:{y_acutalFalse}, y_predictTrue:{y_predictTrue}, "

f"y_true:{y_true}, y_total:{y_total}")

Accuracy = (y_acutalTrue + y_acutalFalse) / y_total

Precision = y_acutalTrue / y_predictTrue

Recall = y_acutalTrue / y_true

print("Accuracy: ", Accuracy,

"Precision: ", Precision,

"Recall: ", Recall

)

def visualOutput(self, savePath="", outputFormat="html", direction="TD"):

'''

将决策树可视化输出,格式为‘md'或’html'

:param outputFormat: 设置输出格式

:return: 对应输出格式的文本

'''

if self.decision_tree is None:

raise Exception("should fit first!")

text = ""

if outputFormat == "md":

text = self._format_md(direction=direction)

elif outputFormat == "html":

text = self._format_html(direction=direction)

if savePath != "":

open(savePath, "w", encoding="utf-8").write(text)

return text

def _format_html(self, direction="TD"):

'''

决策树的可视化为html格式

:return: html代码

'''

html_start = '' \

'' \

'' \

'' \

'DecisionTree ' \

'{}'

html_end = ''

mermaid = self._format_md(end=";", direction=direction)

mermaid = mermaid.replace("```mermaid\n", "").replace("```", "")

html = html_start.replace("{}", mermaid) + html_end

return html

def _format_md(self, direction="TD", end="\n"):

'''

决策树的可视化为md代码(mermaid代码)

:param end: 设置每行结尾符号

:param direction: 设置方向

:return:

'''

md = "```mermaid\n"

md += f"graph {direction}{end}"

total_node = 1

current_nodeID = 0

if len(self.decision_tree) != 2:

code_line = f"{current_nodeID}(start)-->{self.decision_tree['next']['其他']}"

return md + code_line + "\n```"

queue = [self.decision_tree]

while len(queue) > 0:

node = queue.pop(0)

ans_node = []

for key in node["next"].keys():

if type(node['next'][key]) == dict:

if len(node['next'][key]) == 1:

decision = node['next'][key]['next']['其他']

if decision not in ans_node:

ans_node.append(decision)

nodeID_ans = ans_node.index(decision)

code_line = f"{current_nodeID}({node['column']})--{key}-->" \

f"L{current_nodeID}_{nodeID_ans}({decision})"

else:

code_line = f"{current_nodeID}({node['column']})--{key}-->{total_node}"

queue.append(node["next"][key])

total_node += 1

else:

decision = node['next'][key]

if decision not in ans_node:

ans_node.append(decision)

nodeID_ans = ans_node.index(decision)

code_line = f"{current_nodeID}({node['column']})--{key}-->" \

f"L{current_nodeID}_{nodeID_ans}({decision})"

# code_line_b = str(code_line.encode("utf-8")).lstrip("b'").rstrip("'")

md += code_line + end

current_nodeID += 1

return md + "```"

def load(self, savePath: str):

tree = eval(open(savePath, "r").read())

if type(tree) == dict:

self.decision_tree = tree

else:

raise Exception("Load Faild!")

def save(self, savePath: str):

open(savePath, "w").write(str(decisionTree.decision_tree))

logging.info(f"决策树已保存,位置:{savePath}")

if __name__ == '__main__':

# 初始化决策树

decisionTree = DecisionTree()

# 不需要外部数据集的demo

demo_data = [[0, 2, 0, 0, 0],

[0, 2, 0, 1, 0],

[1, 2, 0, 0, 1],

[2, 1, 0, 0, 1],

[2, 0, 1, 0, 1],

[2, 0, 1, 1, 0],

[1, 0, 1, 1, 1],

[0, 1, 0, 0, 0],

[0, 0, 1, 0, 1],

[2, 1, 1, 0, 1],

[0, 1, 1, 1, 1],

[1, 1, 0, 1, 1],

[1, 2, 1, 0, 1],

[2, 1, 0, 1, 0]]

dataset = pd.DataFrame(demo_data)

dataset.columns = ['年龄', '有工作', '是学生', '信贷情况', "借贷"]

# UCI数据集Caesarian Section Classification

# dataset = pd.read_csv("caesarian.csv", header=None)

# dataset.columns = ["Age", "Delivery_number", "Delivery_time", "Blood_of_Pressure", "Heart_Problem", "Caesarian"]

# age = dataset["Age"].value_counts().sort_index() # 将Age分为三层,低于24岁,低于31岁,高于30岁

# dataset["Age"][dataset["Age"] < 24] = 0

# dataset["Age"][(dataset["Age"] > 23) & (dataset["Age"] < 31)] = 1

# dataset["Age"][30 < dataset["Age"]] = 2

# print(dataset.info())

# 将数据集的属性和结果分开

X = dataset.iloc[:, :-1]

Y = dataset.iloc[:, -1]

# 使用skleran切分数据集

# X_train, X_test, Y_train, Y_test = train_test_split(X, Y, train_size=0.7, shuffle=True)

# else直接使用数据集作为测试集

X_train = X_test = X

Y_train = Y_test = Y

# 拟合

e = decisionTree.fit(X_train, Y_train, threshold=-1)

# 保存决策树

decisionTree.save("decisionTree.txt")

# 加载决策树

decisionTree.load("decisionTree.txt")

# 预测

predict_y = decisionTree.predict(X_test)

# 评估

decisionTree.score(Y_test)

# 可视化输出(html格式)

# visualOutput可选参数outputFormat=["md", "html"],direction方向,设置决策树的方向=["LR","RL","TD","DT"],默认TD,从上到下

decisionTree.visualOutput(savePath="decisionTree.html", outputFormat="html")

实验结果(决策树)

debug模式

使用demo数据集运行

2020-10-14 00:47:19,827-[root] [DEBUG] [best_empirical_entropy]: now dataset shape is (14, 5), column is ['年龄', '有工作', '是学生', '信贷情况', 'DECISION_tempADD']

2020-10-14 00:47:19,827-[root] [DEBUG] [best_empirical_entropy]: empirical_entropy is 0.9402859586706311

2020-10-14 00:47:19,831-[root] [DEBUG] [best_empirical_entropy]: now is 年龄

2020-10-14 00:47:19,849-[root] [DEBUG] [best_empirical_entropy]: now label is 2, chooseData shape is (5, 4), Ans count: [3, 2], entropy: 0.9709505944546686

2020-10-14 00:47:19,859-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (5, 4), Ans count: [3, 2], entropy: 0.9709505944546686

2020-10-14 00:47:19,865-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (4, 4), Ans count: [4], entropy: 0.0

2020-10-14 00:47:19,865-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.6935361388961918, 年龄 informationGain:0.24674981977443933

2020-10-14 00:47:19,868-[root] [DEBUG] [best_empirical_entropy]: now is 有工作

2020-10-14 00:47:19,880-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (6, 4), Ans count: [4, 2], entropy: 0.9182958340544896

2020-10-14 00:47:19,889-[root] [DEBUG] [best_empirical_entropy]: now label is 2, chooseData shape is (4, 4), Ans count: [2, 2], entropy: 1.0

2020-10-14 00:47:19,896-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (4, 4), Ans count: [3, 1], entropy: 0.8112781244591328

2020-10-14 00:47:19,897-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.9110633930116763, 有工作 informationGain:0.02922256565895487

2020-10-14 00:47:19,898-[root] [DEBUG] [best_empirical_entropy]: now is 是学生

2020-10-14 00:47:19,909-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (7, 4), Ans count: [6, 1], entropy: 0.5916727785823275

2020-10-14 00:47:19,917-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (7, 4), Ans count: [4, 3], entropy: 0.9852281360342515

2020-10-14 00:47:19,918-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.7884504573082896, 是学生 informationGain:0.15183550136234159

2020-10-14 00:47:19,920-[root] [DEBUG] [best_empirical_entropy]: now is 信贷情况

2020-10-14 00:47:19,927-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (8, 4), Ans count: [6, 2], entropy: 0.8112781244591328

2020-10-14 00:47:19,937-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (6, 4), Ans count: [3, 3], entropy: 1.0

2020-10-14 00:47:19,937-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.8921589282623617, 信贷情况 informationGain:0.04812703040826949

2020-10-14 00:47:19,937-[root] [DEBUG] [best_empirical_entropy]: Choose 年龄:0.24674981977443933

2020-10-14 00:47:19,940-[root] [DEBUG] [id3]: now choose_column:年龄, label: 2

2020-10-14 00:47:19,950-[root] [DEBUG] [best_empirical_entropy]: now dataset shape is (5, 4), column is ['有工作', '是学生', '信贷情况', 'DECISION_tempADD']

2020-10-14 00:47:19,950-[root] [DEBUG] [best_empirical_entropy]: empirical_entropy is 0.9709505944546686

2020-10-14 00:47:19,953-[root] [DEBUG] [best_empirical_entropy]: now is 有工作

2020-10-14 00:47:19,964-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (3, 3), Ans count: [2, 1], entropy: 0.9182958340544896

2020-10-14 00:47:19,974-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (2, 3), Ans count: [1, 1], entropy: 1.0

2020-10-14 00:47:19,974-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.9509775004326937, 有工作 informationGain:0.01997309402197489

2020-10-14 00:47:19,976-[root] [DEBUG] [best_empirical_entropy]: now is 是学生

2020-10-14 00:47:19,983-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (3, 3), Ans count: [2, 1], entropy: 0.9182958340544896

2020-10-14 00:47:19,992-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (2, 3), Ans count: [1, 1], entropy: 1.0

2020-10-14 00:47:19,992-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.9509775004326937, 是学生 informationGain:0.01997309402197489

2020-10-14 00:47:19,995-[root] [DEBUG] [best_empirical_entropy]: now is 信贷情况

2020-10-14 00:47:20,004-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (3, 3), Ans count: [3], entropy: 0.0

2020-10-14 00:47:20,013-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (2, 3), Ans count: [2], entropy: 0.0

2020-10-14 00:47:20,013-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.0, 信贷情况 informationGain:0.9709505944546686

2020-10-14 00:47:20,013-[root] [DEBUG] [best_empirical_entropy]: Choose 信贷情况:0.9709505944546686

2020-10-14 00:47:20,015-[root] [DEBUG] [id3]: now choose_column:信贷情况, label: 0

2020-10-14 00:47:20,021-[root] [DEBUG] [id3]: select decision 1, result_type:[3], dataset column:(3, 3), lower than threshold:False

2020-10-14 00:47:20,021-[root] [DEBUG] [id3]: now choose_column:信贷情况, label: 1

2020-10-14 00:47:20,027-[root] [DEBUG] [id3]: select decision 0, result_type:[2], dataset column:(2, 3), lower than threshold:False

2020-10-14 00:47:20,028-[root] [DEBUG] [id3]: now choose_column:年龄, label: 0

2020-10-14 00:47:20,037-[root] [DEBUG] [best_empirical_entropy]: now dataset shape is (5, 4), column is ['有工作', '是学生', '信贷情况', 'DECISION_tempADD']

2020-10-14 00:47:20,037-[root] [DEBUG] [best_empirical_entropy]: empirical_entropy is 0.9709505944546686

2020-10-14 00:47:20,038-[root] [DEBUG] [best_empirical_entropy]: now is 有工作

2020-10-14 00:47:20,046-[root] [DEBUG] [best_empirical_entropy]: now label is 2, chooseData shape is (2, 3), Ans count: [2], entropy: 0.0

2020-10-14 00:47:20,052-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (2, 3), Ans count: [1, 1], entropy: 1.0

2020-10-14 00:47:20,060-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (1, 3), Ans count: [1], entropy: 0.0

2020-10-14 00:47:20,060-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.4, 有工作 informationGain:0.5709505944546686

2020-10-14 00:47:20,061-[root] [DEBUG] [best_empirical_entropy]: now is 是学生

2020-10-14 00:47:20,068-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (3, 3), Ans count: [3], entropy: 0.0

2020-10-14 00:47:20,076-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (2, 3), Ans count: [2], entropy: 0.0

2020-10-14 00:47:20,076-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.0, 是学生 informationGain:0.9709505944546686

2020-10-14 00:47:20,077-[root] [DEBUG] [best_empirical_entropy]: now is 信贷情况

2020-10-14 00:47:20,085-[root] [DEBUG] [best_empirical_entropy]: now label is 0, chooseData shape is (3, 3), Ans count: [2, 1], entropy: 0.9182958340544896

2020-10-14 00:47:20,092-[root] [DEBUG] [best_empirical_entropy]: now label is 1, chooseData shape is (2, 3), Ans count: [1, 1], entropy: 1.0

2020-10-14 00:47:20,092-[root] [DEBUG] [best_empirical_entropy]: entropy: 0.9509775004326937, 信贷情况 informationGain:0.01997309402197489

2020-10-14 00:47:20,092-[root] [DEBUG] [best_empirical_entropy]: Choose 是学生:0.9709505944546686

2020-10-14 00:47:20,094-[root] [DEBUG] [id3]: now choose_column:是学生, label: 0

2020-10-14 00:47:20,100-[root] [DEBUG] [id3]: select decision 0, result_type:[3], dataset column:(3, 3), lower than threshold:False

2020-10-14 00:47:20,100-[root] [DEBUG] [id3]: now choose_column:是学生, label: 1

2020-10-14 00:47:20,106-[root] [DEBUG] [id3]: select decision 1, result_type:[2], dataset column:(2, 3), lower than threshold:False

2020-10-14 00:47:20,106-[root] [DEBUG] [id3]: now choose_column:年龄, label: 1

2020-10-14 00:47:20,112-[root] [DEBUG] [id3]: select decision 1, result_type:[4], dataset column:(4, 4), lower than threshold:False

2020-10-14 00:47:20,112-[root] [INFO] [fit]: decision_tree leaf:5

2020-10-14 00:47:20,113-[root] [INFO] [save]: 决策树已保存,位置:decisionTree.txt

2020-10-14 00:47:20,123-[root] [DEBUG] [score]: y_acutalTrue:9, y_acutalFalse:5, y_predictTrue:9, y_true:9, y_total:14