QEMU Code Explained

Table of Contents

Overview

QOM

QM.1 Class & Object

QM.2 Initialization

QM.3 Property

QM.4 QOM List

Options

MemoryRegion

MR.1 Hierarchy

MR.2 Listeners

Qemu Task Model

TM.1 Overview

TM.2 Coroutine

TM.2.1 Overview

TM.2.2 Coroutine implementation

TM.3 glib

TM.4 AIO

TM.4.1 AIO driving model

TM.4.2 aio bh

TM.4.3 aio coroutine schedule

Device Emulation

DE.0 Overview

DE.1 CPU and chipset

DE.1.1 Chipset

DE.1.2 PCIE

DE.2 Network devices

DE.2.1 Configuration parameters

DE.2.2 netdev parameters

DE.2.3 NetQueue

DE.2.4 Backend

DE.3 Storage devices

DE.3.1 Configuration parameters

DE.3.2 BlockBackend

Migration

MG.0 Overview

MG.1 VMState

MG.2 Flow overview

MG.3 Memory migration

MG.3.1 Send path

MG.3.2 Dirty Pages

MG.3.2.1 Tracking

MG.3.2.2 Output

MG.3.2.3 Dirty log QEMU code

MG.3.2.4 Manual Dirty Log Protect

MG.3.2.5 libvirt migrate --timeout

MG.4 Stopping the guest

MG.4.1 Stopping vCPUs

MG.4.2 Stopping peripherals

MG.4.2.1 net

MG.4.2.2 blk

Appendix

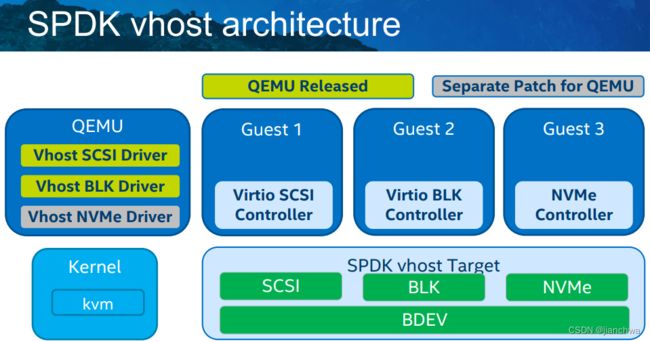

vhost-blk

Overview

Code organization:

- vl.c: the file that contains QEMU's main() function.

- tcg/tcg.c: see Documentation/TCG - QEMU: "The Tiny Code Generator (TCG) exists to transform target insns (the processor being emulated) via the TCG frontend to TCG ops which are then transformed into host insns (the processor executing QEMU itself) via the TCG backend." tcg.c is the core file of the whole TCG mechanism.

- Guest CPU: directories named qemu/target-xxx hold the CPU emulation code; in each of them, translate.c converts guest insns into TCG ops.

- tcg/xxx: the TCG backend code, which converts TCG ops into host insns.

- qemu/cpu-exec.c: the core execution code of CPU emulation.

- Emulated Hardware: qemu/hw holds the emulated hardware code. Its first-level directory layout is:

    9pfs   block  cris     i386   isa            mips   openrisc    ppc    sparc    unicore32  xtensa
    acpi   bt     display  ide    lm32           misc   pci         s390x  sparc64  usb
    alpha  char   dma      input  m68k           moxie  pci-bridge  scsi   ssi      virtio
    arm    core   gpio     intc   Makefile.objs  net    pci-host    sd     timer    watchdog
    audio  cpu    i2c      ipack  microblaze     nvram  pcmcia      sh4    tpm      xen

- qom: the code for the QEMU Object Model.

QOM

QOM (QEMU Object Model) is the framework QEMU uses to model devices. See the official QEMU documentation page "The QEMU Object Model (QOM)" (QEMU 7.1.50 documentation): https://qemu.readthedocs.io/en/latest/devel/qom.html

The QEMU Object Model provides a framework for registering user creatable types and instantiating objects from those types. QOM provides the following features:

- System for dynamically registering types

- Support for single-inheritance of types

- Multiple inheritance of stateless interfaces

QM.1 Class & Object

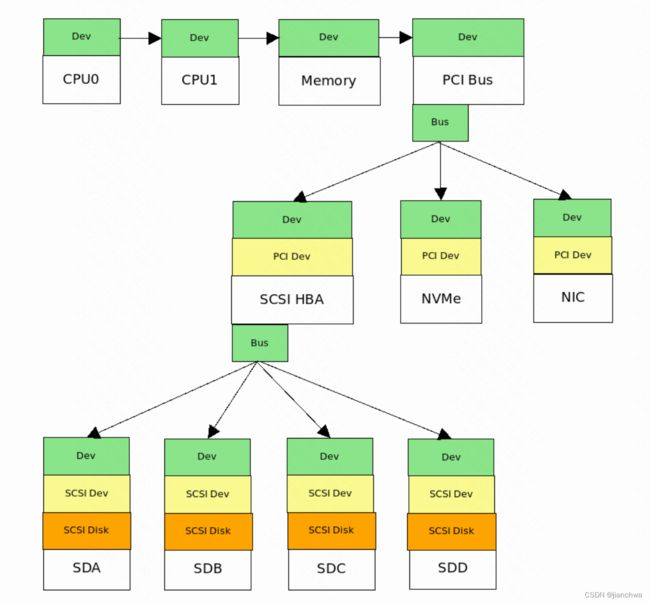

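QOM implements a subset of C++ features in C, such as class inheritance. As a thought experiment, how would we use class inheritance to model devices?

Note: the figure above is not how QEMU actually organizes things, and its top-level layout is not quite accurate either.

In the figure above:

- devices are organized by dev and bus, forming a hierarchy;

- classes such as PCI Dev or SCSI Dev hold the attributes and interfaces common to that type of device;

- PCI Dev holds the config space and BARs, while the actual register usage is implemented in the concrete device classes, such as NVMe and NICs;

- SCSI Dev holds information such as vendor, rotational, wbc and ncq.

So class inheritance mirrors the way devices are organized, and it makes the device code considerably easier to write, because common behavior is written once in the parent class.

Next, how does QOM implement class inheritance?

QOM introduces two concepts:

- class: corresponds to the methods and static members of a C++ class; both are global;

- object: corresponds to the per-instance part of a C++ class.

Both class and object implement inheritance by embedding the parent class or object as the first member, which also allows type conversion with a plain pointer cast. For example: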

typedef struct X86CPUClass {

CPUClass parent_class;

...

} X86CPUClass;

typedef struct CPUClass {

DeviceClass parent_class;

...

} CPUClass;

X86CPUClass -> CPUClass -> DeviceClass -> ObjectClass

X86CPU -> CPUState -> DeviceState -> Object
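The first-member embedding described above can be tried out in isolation. The following is a minimal sketch, not QEMU code: BaseClass, DerivedClass and the helper functions are hypothetical names chosen for illustration. It only shows why a pointer to the child structure can be used as a pointer to the parent, and the other way round.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical miniature of the QOM layout; none of these names are QEMU's. */
typedef struct BaseClass {
    const char *(*name)(void);      /* a "virtual method" slot */
} BaseClass;

typedef struct DerivedClass {
    BaseClass parent_class;         /* must be the first member */
    int (*extra)(int);              /* method that only the child has */
} DerivedClass;

static const char *derived_name(void) { return "derived"; }
static int derived_extra(int x) { return x + 1; }

/* Upcast: a pointer to a struct is also a pointer to its first member. */
static BaseClass *to_base(DerivedClass *dc) { return (BaseClass *)dc; }

/* Downcast: only valid when bc really points into a DerivedClass. */
static DerivedClass *to_derived(BaseClass *bc) { return (DerivedClass *)bc; }

/* Goes child -> parent -> child and calls a child-only method. */
static int embedding_demo(void)
{
    DerivedClass dc = {
        .parent_class = { .name = derived_name },
        .extra = derived_extra,
    };
    BaseClass *bc = to_base(&dc);

    if (bc->name() == NULL) {
        return -1;
    }
    return to_derived(bc)->extra(41);
}
```

QEMU wraps such casts in checked macros (for example X86_CPU_CLASS() in the code above) instead of using raw casts; the sketch leaves that checking out.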

QOM introduces TypeImpl (also called Type) to store the sizes of the class and object structures and their init/exit methods, which play the role of constructors and destructors. It also stores the pointer to the globally unique class structure. All TypeImpls are kept in type_table; see type_table_lookup and type_table_add for details.

Now let's look at the code:

- class initialization. Pay attention to two points: (1) the child class and the parent class are separate structures, and (2) the child class can override the parent's methods. Reference code:

static void type_initialize(TypeImpl *ti)
{
    TypeImpl *parent;

    // the class has already been initialized
    if (ti->class) {
        return;
    }

    ti->class_size = type_class_get_size(ti);
    ti->instance_size = type_object_get_size(ti);

    // allocate the globally unique class structure
    ti->class = g_malloc0(ti->class_size);

    parent = type_get_parent(ti);
    if (parent) {
        type_initialize(parent);
        // Copy the parent's class structure into this class.
        // Note that child and parent are separate structures, and the
        // child contains its own copy of the parent.
        memcpy(ti->class, parent->class, parent->class_size);
        ...
    }

    ti->class->type = ti;
    ...
    // Call the child's class_init, which may override the parent's
    // functions; see the function below.
    if (ti->class_init) {
        ti->class_init(ti->class, ti->class_data);
    }
}

x86_cpu_common_class_init()
---
    X86CPUClass *xcc = X86_CPU_CLASS(oc);
    CPUClass *cc = CPU_CLASS(oc);
    DeviceClass *dc = DEVICE_CLASS(oc);

    // xcc -> cc -> dc
    xcc->parent_realize = dc->realize;
    dc->realize = x86_cpu_realizefn;
    dc->bus_type = TYPE_ICC_BUS;
    dc->props = x86_cpu_properties;

    xcc->parent_reset = cc->reset;
    cc->reset = x86_cpu_reset;
    ...
---

- object initialization. Pay attention to how the object is tied to its class. Reference functions:

object_new_with_type()
---
    // build the object according to the TypeImpl
    obj = g_malloc(type->instance_size);
    object_initialize_with_type(obj, type->instance_size, type);
---

object_initialize_with_type()
---
    // the top-level Object stores the class pointer
    obj->class = type->class;
    object_init_with_type(obj, type);
---

// run instance_init starting from the topmost parent
object_init_with_type()
---
    if (type_has_parent(ti)) {
        object_init_with_type(obj, type_get_parent(ti));
    }

    if (ti->instance_init) {
        ti->instance_init(obj);
    }
---

The class is initialized before the object is; see object_new_with_type() again:

object_new_with_type()

---

type_initialize(type);

obj = g_malloc(type->instance_size);

object_initialize_with_type(obj, type->instance_size, type);

---
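The copy-then-override step of class initialization can be reproduced in a few lines. The sketch below is an illustration under assumptions, not QEMU's implementation: MiniType, MiniClass, MiniCpuClass and all function names are hypothetical, and error handling is reduced to an assert. It shows that the child class gets its own copy of the parent's method table, that overriding a method in the child leaves the parent untouched, and that the child can still keep a pointer to the inherited method.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

typedef struct MiniClass {              /* parent class (hypothetical) */
    int (*reset)(void);
} MiniClass;

typedef struct MiniCpuClass {           /* child class (hypothetical) */
    MiniClass parent_class;             /* first member: embedded parent */
    int (*parent_reset)(void);          /* saved copy of the inherited method */
} MiniCpuClass;

typedef struct MiniType {
    struct MiniType *parent;
    size_t class_size;
    void (*class_init)(void *klass);
    void *klass;                        /* created lazily, one per type */
} MiniType;

static int base_reset(void) { return 1; }
static int cpu_reset(void) { return 2; }

static void base_class_init(void *klass)
{
    ((MiniClass *)klass)->reset = base_reset;
}

static void cpu_class_init(void *klass)
{
    MiniCpuClass *cc = klass;

    cc->parent_reset = cc->parent_class.reset;  /* remember inherited method */
    cc->parent_class.reset = cpu_reset;         /* override in the child's copy */
}

static void mini_type_initialize(MiniType *t)
{
    if (t->klass) {
        return;                                 /* already initialized */
    }
    t->klass = calloc(1, t->class_size);
    assert(t->klass != NULL);
    if (t->parent) {
        mini_type_initialize(t->parent);        /* parents first */
        memcpy(t->klass, t->parent->klass, t->parent->class_size);
    }
    if (t->class_init) {
        t->class_init(t->klass);
    }
}

/* Encodes parent reset, child reset and saved parent reset as three digits. */
static int class_init_demo(void)
{
    MiniType base = { NULL, sizeof(MiniClass), base_class_init, NULL };
    MiniType cpu = { &base, sizeof(MiniCpuClass), cpu_class_init, NULL };
    MiniClass *bc;
    MiniCpuClass *cc;
    int result;

    mini_type_initialize(&cpu);
    bc = base.klass;
    cc = cpu.klass;
    result = bc->reset() * 100 + cc->parent_class.reset() * 10 + cc->parent_reset();
    free(cpu.klass);
    free(base.klass);
    return result;
}
```

Initializing only the child type is enough here, because the recursion takes care of the parent, which matches the behavior of type_initialize() described above.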

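QM.2 Initialization

We now know about the class and object in QOM, and the TypeImpl (Type) that guides their construction:

- TypeImpl: equivalent to the class definition in C++, i.e. class xxx { xxx };

- class: equivalent to the methods and static members of a C++ class; both are global;

- object: equivalent to the per-instance part of a C++ class.

In the QEMU code, however, we only find definitions of TypeInfo structures. When are the three elements above initialized? This subsection walks through QOM's construction framework.

Take target-i386/cpu.c as an example: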

static const TypeInfo x86_cpu_type_info = {

.name = TYPE_X86_CPU,

.parent = TYPE_CPU,

.instance_size = sizeof(X86CPU),

.instance_init = x86_cpu_initfn,

.abstract = true,

.class_size = sizeof(X86CPUClass),

.class_init = x86_cpu_common_class_init,

};

static void x86_cpu_register_types(void)

{

int i;

type_register_static(&x86_cpu_type_info);

for (i = 0; i < ARRAY_SIZE(builtin_x86_defs); i++) {

x86_register_cpudef_type(&builtin_x86_defs[i]);

}

#ifdef CONFIG_KVM

type_register_static(&host_x86_cpu_type_info);

#endif

}

type_init(x86_cpu_register_types)

Two key functions appear here:

- type_register_static: this is where the TypeImpl is registered. Reference code:

type_register_static()
  -> type_register()
    -> type_register_internal()
    ---
        TypeImpl *ti;
        ti = type_new(info);
        type_table_add(ti);
    ---

- type_init: this macro ensures that x86_cpu_register_types gets called automatically. It relies on GCC's constructor attribute; see Function Attributes - Using the GNU Compiler Collection (GCC): https://gcc.gnu.org/onlinedocs/gcc-4.7.0/gcc/Function-Attributes.html

The constructor attribute causes the function to be called automatically before execution enters main ().

#define type_init(function) module_init(function, MODULE_INIT_QOM)

#define module_init(function, type) \
static void __attribute__((constructor)) do_qemu_init_ ## function(void) \
{ \
    register_module_init(function, type); \
}

void register_module_init(void (*fn)(void), module_init_type type)
{
    ModuleEntry *e;
    ModuleTypeList *l;

    e = g_malloc0(sizeof(*e));
    e->init = fn;
    e->type = type;

    l = find_type(type);

    QTAILQ_INSERT_TAIL(l, e, node);
}

vl.c main()
  -> module_call_init(MODULE_INIT_QOM);
  ---
      l = find_type(type);

      QTAILQ_FOREACH(e, l, node) {
          e->init();
      }
  ---

The constructor only registers the function; the actual call is made from main().

At this point, the TypeImpl has been registered with the system through type_init().
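The two-phase scheme (constructors only record the init functions, main() calls them later) can be sketched as follows. This is a simplified illustration that assumes a GCC-compatible compiler; my_type_init, register_init and the other names are hypothetical, and a fixed-size array stands in for QEMU's QTAILQ list.

```c
#include <assert.h>

#define MAX_INIT 8

static void (*init_fns[MAX_INIT])(void);   /* registered init functions */
static int n_init;                          /* how many were registered */
static int types_registered;                /* bumped by the init functions */

/* Phase 1 helper: remember the function, do not call it yet. */
static void register_init(void (*fn)(void))
{
    assert(n_init < MAX_INIT);
    init_fns[n_init++] = fn;
}

/* Hypothetical counterpart of type_init(): runs before main() is entered. */
#define my_type_init(fn)                                              \
    static void __attribute__((constructor)) do_init_##fn(void)      \
    {                                                                 \
        register_init(fn);                                            \
    }

static void register_cpu_types(void) { types_registered++; }
static void register_nic_types(void) { types_registered++; }

my_type_init(register_cpu_types)
my_type_init(register_nic_types)

/* Phase 2: what main() does later, similar in spirit to module_call_init(). */
static int call_all_init(void)
{
    for (int i = 0; i < n_init; i++) {
        init_fns[i]();
    }
    return types_registered;
}
```

Before call_all_init() runs, both functions are already recorded but neither has executed, which is the same split between registration and invocation that the text describes.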

Class initialization does not happen at one fixed place; type_initialize() is executed right before the class is first used. For example:

cpu_x86_create()

---

oc = x86_cpu_class_by_name(name);

-> object_class_by_name();

...

cpu = X86_CPU(object_new(object_class_get_name(oc)));

---

object_class_by_name()

---

TypeImpl *type = type_get_by_name(typename);

if (!type) {

return NULL;

}

type_initialize(type);

return type->class;

---

Along the way, type_initialize() also initializes all parent classes.

Objects are allocated on demand. For example, this is how the default CPU objects get allocated:

main()

---

machine_class = find_default_machine(); // find the machine with "is_default = true"

...

current_machine = MACHINE(object_new(object_class_get_name(

OBJECT_CLASS(machine_class))));

object_property_add_child(object_get_root(), "machine",

OBJECT(current_machine), &error_abort);

machine = machine_class->qemu_machine;

...

machine->init(&current_machine->init_args);

...

cpu_synchronize_all_post_init();

---

static QEMUMachine pc_i440fx_machine_v2_0 = {

PC_I440FX_2_0_MACHINE_OPTIONS,

.name = "pc-i440fx-2.0",

.alias = "pc",

.init = pc_init_pci,

.is_default = 1,

};

pc_init_pci()

-> pc_init1()

-> pc_cpus_init()

---

/* init CPUs */

if (cpu_model == NULL)

cpu_model = "qemu64";

...

for (i = 0; i < smp_cpus; i++) {

cpu = pc_new_cpu(cpu_model, x86_cpu_apic_id_from_index(i),

icc_bridge, &error);

}

---

-> cpu_x86_create()

---

model_pieces = g_strsplit(cpu_model, ",", 2);

name = model_pieces[0];

oc = x86_cpu_class_by_name(name);

xcc = X86_CPU_CLASS(oc);

cpu = X86_CPU(object_new(object_class_get_name(oc)));

---

QM.3 Property

For background on properties, see these two links:

[Qemu-devel] qdev properties vs qom object properties: https://qemu-devel.nongnu.narkive.com/e31JZTzZ/qdev-properties-vs-qom-object-properties

Features/QOM - QEMU, Properties in QOM: https://wiki.qemu.org/Features/QOM#Device_Properties

In summary:

qdev properties are just a wrapper around QOM object properties, taking care of:

- not allowing to set the property after realize

- providing default values

- letting people use the familiar DEFINE_PROP_* array syntax

- pretty printing for "info qtree" (which right now is only used by PCI devfn and vlan properties)

More concretely, a property is a piece of configuration of an object such as a virtual NIC; the MAC address of a virtual NIC is one of its properties. Some properties also carry a side effect, for example:

realized/unrealize. The official QEMU description is: "Devices support the notion of "realize" which roughly corresponds to construction. More accurately, it corresponds to the moment before a device will be first consumed by a guest. "unrealize" roughly corresponds to reset. A device may be realized and unrealized many times during its lifecycle." See the following call stack:

#0 x86_cpu_reset (s=0x555556204a60) at /usr/src/debug/qemu-2.0.0/target-i386/cpu.c:2404

#1 0x00005555557f7141 in x86_cpu_realizefn (dev=0x555556204a60, errp=0x7fffffffdf20)

at /usr/src/debug/qemu-2.0.0/target-i386/cpu.c:2630

#2 0x0000555555685ea8 in device_set_realized (obj=<optimized out>, value=<optimized out>,

err=0x7fffffffe010) at hw/core/qdev.c:757

#3 0x000055555575bede in property_set_bool (obj=0x555556204a60, v=<optimized out>,

opaque=0x555556215050, name=<optimized out>, errp=0x7fffffffe010) at qom/object.c:1420

#4 0x000055555575e4a7 in object_property_set_qobject (obj=0x555556204a60, value=<optimized out>,

name=0x5555558aac3a "realized", errp=0x7fffffffe010) at qom/qom-qobject.c:24

#5 0x000055555575d380 in object_property_set_bool (obj=obj@entry=0x555556204a60,

value=value@entry=true, name=name@entry=0x5555558aac3a "realized", errp=errp@entry=0x7fffffffe010)

at qom/object.c:883

#6 0x00005555557c4e7e in pc_new_cpu (cpu_model=cpu_model@entry=0x5555558ec002 "qemu64", apic_id=0,

icc_bridge=icc_bridge@entry=0x555556201aa0, errp=errp@entry=0x7fffffffe050)

at /usr/src/debug/qemu-2.0.0/hw/i386/pc.c:944

#7 0x00005555557c5f7f in pc_cpus_init (cpu_model=0x5555558ec002 "qemu64",

icc_bridge=icc_bridge@entry=0x555556201aa0) at /usr/src/debug/qemu-2.0.0/hw/i386/pc.c:1015

#8 0x00005555557c71bf in pc_init1 (args=0x5555561eab68, pci_enabled=1, kvmclock_enabled=1)

at /usr/src/debug/qemu-2.0.0/hw/i386/pc_piix.c:108

#9 0x00005555555ee7d3 in main (argc=<optimized out>, argv=<optimized out>, envp=<optimized out>)

at vl.c:4383

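Device initialization therefore invokes the setter callback of the "realized" property.

Properties are also used when processing device parameters. Reference code: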

qdev_device_add()

---

/* set properties */

if (qemu_opt_foreach(opts, set_property, dev, 1) != 0) {

...

}

dev->opts = opts;

object_property_set_bool(OBJECT(dev), true, "realized", &err);

---

set_property()

-> object_property_parse()

-> object_property_set()

---

ObjectProperty *prop = object_property_find(obj, name, errp);

if (prop == NULL) {

return;

}

if (!prop->set) {

error_set(errp, QERR_PERMISSION_DENIED);

} else {

prop->set(obj, v, prop->opaque, name, errp);

}

---

static Property nvme_props[] = {

DEFINE_BLOCK_PROPERTIES(NvmeCtrl, conf),

DEFINE_PROP_STRING("serial", NvmeCtrl, serial),

DEFINE_PROP_END_OF_LIST(),

};

#define DEFINE_BLOCK_PROPERTIES(_state, _conf) \

DEFINE_PROP_DRIVE("drive", _state, _conf.bs), \

DEFINE_PROP_BLOCKSIZE("logical_block_size", _state, \

_conf.logical_block_size, 512), \

DEFINE_PROP_BLOCKSIZE("physical_block_size", _state, \

_conf.physical_block_size, 512), \

DEFINE_PROP_UINT16("min_io_size", _state, _conf.min_io_size, 0), \

DEFINE_PROP_UINT32("opt_io_size", _state, _conf.opt_io_size, 0), \

DEFINE_PROP_INT32("bootindex", _state, _conf.bootindex, -1), \

DEFINE_PROP_UINT32("discard_granularity", _state, \

_conf.discard_granularity, -1)
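To make the lookup-and-dispatch pattern of object_property_set() concrete, here is a small self-contained sketch. It is an assumption-laden illustration rather than QEMU's API: MiniDev, MiniProp and the mini_* functions are hypothetical, values are passed as plain strings, and the table plays the role that a DEFINE_PROP_* array plays in QEMU. It also mimics two behaviors mentioned above: ordinary properties cannot be changed after realize, and "realized" is a property whose setter has a side effect.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical device state; the field names are illustrative only. */
typedef struct MiniDev {
    int bootindex;
    int queues;
    int realized;
} MiniDev;

typedef struct MiniProp MiniProp;
struct MiniProp {
    const char *name;
    int (*set)(MiniDev *dev, const MiniProp *prop, const char *value);
    size_t offset;              /* where the backing int lives in MiniDev */
};

/* Generic int setter: refuses changes once the device is realized. */
static int mini_set_int(MiniDev *dev, const MiniProp *prop, const char *value)
{
    if (dev->realized) {
        return -1;
    }
    *(int *)((char *)dev + prop->offset) = atoi(value);
    return 0;
}

/* A property with a side effect: setting it to "true" brings the device up. */
static int mini_set_realized(MiniDev *dev, const MiniProp *prop, const char *value)
{
    (void)prop;
    if (strcmp(value, "true") == 0) {
        dev->realized = 1;      /* a real device would run its realize hook here */
    }
    return 0;
}

static const MiniProp mini_props[] = {
    { "bootindex", mini_set_int, offsetof(MiniDev, bootindex) },
    { "queues",    mini_set_int, offsetof(MiniDev, queues) },
    { "realized",  mini_set_realized, 0 },
    { NULL, NULL, 0 },          /* end of list */
};

/* Look the property up by name and dispatch to its setter. */
static int mini_property_set(MiniDev *dev, const char *name, const char *value)
{
    for (const MiniProp *p = mini_props; p->name; p++) {
        if (strcmp(p->name, name) == 0) {
            return p->set(dev, p, value);
        }
    }
    return -1;                  /* unknown property */
}
```

Setting "bootindex" before "realized" succeeds, while the same call afterwards is rejected, which is the ordering that qdev_device_add() relies on when it applies all options first and sets "realized" last.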

QM.4 QOM List

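Through QMP commands we can see the tree formed by all QOM objects and their property values, and use it to understand the device topology and configuration. The following script helps: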

#!/bin/bash

opt=$1

obj=$2

path=$3

prop=$4

case $opt in

list)

virsh qemu-monitor-command $obj --pretty "{\"execute\": \"qom-list\", \"arguments\": { \"path\": \"$path\" }}"

;;

get)

virsh qemu-monitor-command $obj --pretty "{\"execute\": \"qom-get\", \"arguments\": { \"path\": \"$path\", \"property\":\"$prop\"}}"

;;

*)

;;

esac

The following output is based on QEMU 7.0.0:

[root@dceff7e73f37 libvirt]# sh qmp.sh list will1 /

{

"return": [

{

"name": "type",

"type": "string"

},

{

"name": "objects",

"type": "child"

},

{

"name": "machine",

"type": "child"

},

{

"name": "chardevs",

"type": "child"

}

],

"id": "libvirt-487"

}

Let's first look at /chardevs:

[root@dceff7e73f37 libvirt]# sh qmp.sh list will1 /chardevs

{

"return": [

{

"name": "type",

"type": "string"

},

{

"name": "charchannel0",

"type": "child"

},

{

"name": "charserial0",

"type": "child"

},

{

"name": "charmonitor",

"type": "child"

}

],

"id": "libvirt-489"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh list will1 /chardevs/charmonitor

{

"return": [

{

"name": "type",

"type": "string"

},

{

"name": "connected",

"type": "bool"

},

{

"name": "addr",

"type": "SocketAddress"

}

],

"id": "libvirt-490"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /chardevs/charmonitor addr

{

"return": {

"path": "/var/lib/libvirt/qemu/domain-1-will1/monitor.sock",

"type": "unix"

},

"id": "libvirt-491"

}

From the above we can see that the object behind the QEMU monitor sits at the same level as the virtual machine's machine object.

Next, let's look at machine:

[root@dceff7e73f37 libvirt]# sh qmp.sh list will1 /machine

{

"return": [

{

"name": "type",

"type": "string"

},

...

{

"name": "smp",

"type": "SMPConfiguration"

},

...

{

"name": "memory-backend",

"type": "string"

},

...

{

"name": "q35",

"type": "child"

},

...

{

"name": "unattached",

"type": "child"

},

{

"name": "peripheral",

"type": "child"

},

...

],

"id": "libvirt-492"

}

There is a lot of output, so part of it is omitted. We can see that machine contains two kinds of objects:

- container: holds other objects, for example:

- 'unattached': holds devices that have no parent device; the most typical example is the CPU. Reference code:

device_set_realized()
---
    ...
    if (value && !dev->realized) {
        ...
        if (!obj->parent) {
            gchar *name = g_strdup_printf("device[%d]", unattached_count++);

            object_property_add_child(container_get(qdev_get_machine(),
                                                    "/unattached"),
                                      name, obj);
            unattached_parent = true;
            g_free(name);
        }
        ...
    }
---

So '/machine/unattached' means no parent...

- 'peripheral': holds all peripheral devices. Reference code:

device_init_func()
  -> qdev_device_add()
    -> qdev_device_add_from_qdict()
    ---
        driver = qdict_get_try_str(opts, "driver");
        ...
        dev = qdev_new(driver);
        ...
        id = g_strdup(qdict_get_try_str(opts, "id"));
        qdev_set_id(dev, id, errp);
        ...
        object_set_properties_from_keyval(&dev->parent_obj, dev->opts, from_json, errp);
        qdev_realize(DEVICE(dev), bus, errp);
    ---

Here we can look at the configuration of a CPU and of a device:

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /machine/unattached/device[6] kvm

{

"return": true,

"id": "libvirt-496"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /machine/unattached/device[6] type

{

"return": "host-x86_64-cpu",

"id": "libvirt-497"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /machine/unattached/device[6] kvm_pv_eoi

{

"return": true,

"id": "libvirt-498"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /machine/unattached/device[6] hv-apicv

{

"return": false,

"id": "libvirt-499"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh list will1 /machine/peripheral/net0/virtio-pci[0]

{

"return": [

{

"name": "type",

"type": "string"

},

{

"name": "container",

"type": "link"

},

{

"name": "addr",

"type": "uint64"

},

{

"name": "size",

"type": "uint64"

},

{

"name": "priority",

"type": "uint32"

}

],

"id": "libvirt-509"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /machine/peripheral/net0/virtio-pci[0] addr

{

"return": 4271898624,

"id": "libvirt-510"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /machine/peripheral/net0/virtio-pci[0] size

{

"return": 16384,

"id": "libvirt-511"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /machine/peripheral/net0 rx_queue_size

{

"return": 256,

"id": "libvirt-512"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh list will1 /machine/peripheral/net0/msix-table[0]

{

"return": [

{

"name": "type",

"type": "string"

},

{

"name": "container",

"type": "link"

},

{

"name": "addr",

"type": "uint64"

},

{

"name": "size",

"type": "uint64"

},

{

"name": "priority",

"type": "uint32"

}

],

"id": "libvirt-513"

}

[root@dceff7e73f37 libvirt]# sh qmp.sh get will1 /machine/peripheral/net0 mac

{

"return": "52:54:00:3b:87:a1",

"id": "libvirt-514"

}

The output above gives a more direct view of how QOM exists in QEMU and what role it plays.

Options

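In this section we look at how QEMU processes its command-line arguments.

First, a few key structures.

The relationship between QemuOptsList, QemuOpts and QemuOpt is like that between a directory, a page, and the key-value pairs on each page.

Reference code: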

vl.c main()

---

qemu_add_opts(&qemu_drive_opts);

...

qemu_add_opts(&qemu_device_opts);

qemu_add_opts(&qemu_netdev_opts);

...

qemu_add_opts(&qemu_machine_opts);

qemu_add_opts(&qemu_smp_opts);

...

---

QemuOptsList qemu_device_opts = {

.name = "device",

.implied_opt_name = "driver",

.head = QTAILQ_HEAD_INITIALIZER(qemu_device_opts.head),

.desc = {

/*

* no elements => accept any

* sanity checking will happen later

* when setting device properties

*/

{ /* end of list */ }

},

};

This is how a QemuOptsList is defined and registered. Take -device as an example, using the following sample from the "NVMe Emulation" page of the QEMU documentation: https://qemu-project.gitlab.io/qemu/system/devices/nvme.html

-drive file=nvm.img,if=none,id=nvm

-device nvme,serial=deadbeef,drive=nvm

The parsing code is:

vl.c main()

---

case QEMU_OPTION_drive:

if (qemu_opts_parse(qemu_find_opts("drive"), optstr, 0) == NULL) {

exit(1);

}

break;

...

case QEMU_OPTION_device:

if (!qemu_opts_parse(qemu_find_opts("device"), optarg, 1)) {

exit(1);

}

break;

---
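How an option string such as "nvme,serial=deadbeef,drive=nvm" turns into key-value pairs can be illustrated with a small parser. This is a deliberately reduced sketch and not QEMU's parser: MiniOpts, mini_opts_parse and mini_opt_get are hypothetical names, the ",," escape is not handled, and a bare word in a non-first position is simply rejected. The one feature it does model is the implied name for the first token, so a leading "nvme" is stored as driver=nvme.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MINI_MAX_OPTS 8

typedef struct MiniOpt {
    char name[32];
    char value[64];
} MiniOpt;

typedef struct MiniOpts {
    MiniOpt opt[MINI_MAX_OPTS];
    int n;
} MiniOpts;

/*
 * Split "a=b,c=d" into pairs. If the first token has no '=', it is stored
 * under firstname (pass NULL to disable this abbreviation).
 * Returns 0 on success, -1 on a malformed string or too many options.
 */
static int mini_opts_parse(MiniOpts *opts, const char *params,
                           const char *firstname)
{
    const char *p = params;

    opts->n = 0;
    while (*p != '\0') {
        size_t len = strcspn(p, ",");           /* length of this token */
        const char *eq = memchr(p, '=', len);   /* '=' inside the token? */
        MiniOpt *o;

        if (opts->n == MINI_MAX_OPTS) {
            return -1;
        }
        o = &opts->opt[opts->n];
        if (eq != NULL) {
            size_t klen = (size_t)(eq - p);

            snprintf(o->name, sizeof(o->name), "%.*s", (int)klen, p);
            snprintf(o->value, sizeof(o->value), "%.*s",
                     (int)(len - klen - 1), eq + 1);
        } else if (p == params && firstname != NULL) {
            /* implicitly named first option */
            snprintf(o->name, sizeof(o->name), "%s", firstname);
            snprintf(o->value, sizeof(o->value), "%.*s", (int)len, p);
        } else {
            return -1;
        }
        opts->n++;
        p += len;
        if (*p == ',') {
            p++;
        }
    }
    return 0;
}

/* Return the value stored for name, or NULL if it is absent. */
static const char *mini_opt_get(const MiniOpts *opts, const char *name)
{
    for (int i = 0; i < opts->n; i++) {
        if (strcmp(opts->opt[i].name, name) == 0) {
            return opts->opt[i].value;
        }
    }
    return NULL;
}
```

With firstname set to "driver", the -device example above yields three entries: driver=nvme, serial=deadbeef and drive=nvm.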

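We won't dig into these two functions here. The end result is a QemuOpts holding the parsed options.

The special entry in it is driver=nvme. Reference code:

The implied_opt_name of the "device" QemuOptsList is "driver",

and the permit_abbrev argument passed in is 1.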

opts_parse()

---

firstname = permit_abbrev ? list->implied_opt_name : NULL;

...

if (opts_do_parse(opts, params, firstname, defaults) != 0) {

qemu_opts_del(opts);

return NULL;

}

---

opts_do_parse()

---

for (p = params; *p != '\0'; p++) {

pe = strchr(p, '=');

pc = strchr(p, ',');

if (!pe || (pc && pc < pe)) {

/* found "foo,more" */

if (p == params && firstname) {

/* implicitly named first option */

pstrcpy(option, sizeof(option), firstname);

p = get_opt_value(value, sizeof(value), p);

} else {

...

}

} ....

if (strcmp(option, "id") != 0) {

/* store and parse */

opt_set(opts, option, value, prepend, &local_err);

if (local_err) {

qerror_report_err(local_err);

error_free(local_err);

return -1;

}

}

if (*p != ',') {

break;

}

}

---

After parsing the arguments, QEMU builds the devices according to them. Again taking the device part as an example:

vl.c main()

-> qemu_opts_foreach(qemu_find_opts("device"), device_init_func, NULL, 1)

-> device_init_func()

-> qdev_device_add()

qdev_device_add()

---

ObjectClass *oc;

DeviceClass *dc;

driver = qemu_opt_get(opts, "driver");

oc = object_class_by_name(driver);

...

dc = DEVICE_CLASS(oc);

...

path = qemu_opt_get(opts, "bus");

if (path != NULL) {

...

} else if (dc->bus_type != NULL) {

bus = qbus_find_recursive(sysbus_get_default(), NULL, dc->bus_type);

..

}

/* create device */

dev = DEVICE(object_new(driver));

if (bus) {

qdev_set_parent_bus(dev, bus);

}

...

dev->opts = opts;

object_property_set_bool(OBJECT(dev), true, "realized", &err);

...

---

static const TypeInfo nvme_info = {

.name = "nvme",

.parent = TYPE_PCI_DEVICE,

.instance_size = sizeof(NvmeCtrl),

.class_init = nvme_class_init,

};

MemoryRegion

MR.1 Hierarchy

(To be continued)

MR.2 Listeners

When MemoryRegion information is updated, the following functions are called:

memory_region_transaction_commit()

-> address_space_update_topology()

-> generate_memory_topology()

-> render_memory_region()

-> flatview_simplify()

-> address_space_update_topology_pass() // pass 0

-> address_space_update_topology_pass() // pass 1

render_memory_region() converts the MemoryRegion hierarchy into a FlatView:

struct AddrRange {

Int128 start;

Int128 size;

};

struct FlatRange {

MemoryRegion *mr;

hwaddr offset_in_region;

AddrRange addr;

...

};

/* Flattened global view of current active memory hierarchy. Kept in sorted

* order.

*/

struct FlatView {

unsigned ref;

FlatRange *ranges;

unsigned nr;

unsigned nr_allocated;

};

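A FlatView is simply an array of FlatRanges sorted in ascending order; see render_memory_region() for details.

flatview_simplify() merges the FlatRanges in a FlatView that can be merged.

address_space_update_topology_pass() compares the old and new FlatViews and then calls the region_add/region_del callbacks of the registered memory listeners.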
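The comparison of two sorted range arrays can be sketched as a single merge-style walk. The code below is a simplified illustration modeled on that idea; MiniRange, MiniListener and update_pass are hypothetical names, ranges carry only a start, a size and a region id, and the listener merely counts callbacks. As in the call chain shown earlier, the walk is meant to be run twice: once with adding set to false to report deletions, then with adding set to true to report additions.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct MiniRange {
    uint64_t start;
    uint64_t size;
    int region_id;              /* stands in for the MemoryRegion pointer */
} MiniRange;

typedef struct MiniListener {
    int added;                  /* number of region_add-style callbacks */
    int deleted;                /* number of region_del-style callbacks */
} MiniListener;

static bool range_equal(const MiniRange *a, const MiniRange *b)
{
    return a->start == b->start && a->size == b->size &&
           a->region_id == b->region_id;
}

/* One pass over two views that are both sorted by start address. */
static void update_pass(const MiniRange *old, size_t nold,
                        const MiniRange *new_, size_t nnew,
                        bool adding, MiniListener *l)
{
    size_t io = 0, in = 0;

    while (io < nold || in < nnew) {
        const MiniRange *o = io < nold ? &old[io] : NULL;
        const MiniRange *n = in < nnew ? &new_[in] : NULL;

        if (o && (!n || o->start < n->start ||
                  (o->start == n->start && !range_equal(o, n)))) {
            /* in old but not in new, or present in both but changed */
            if (!adding) {
                l->deleted++;
            }
            io++;
        } else if (o && n && range_equal(o, n)) {
            /* unchanged: no callback */
            io++;
            in++;
        } else {
            /* only in new */
            if (adding) {
                l->added++;
            }
            in++;
        }
    }
}

/* Runs both passes on a small example; returns added * 10 + deleted. */
static int flatview_diff_demo(void)
{
    const MiniRange old[] = {
        { 0x0000, 0x1000, 1 }, { 0x1000, 0x1000, 2 }, { 0x3000, 0x1000, 3 },
    };
    const MiniRange new_[] = {
        { 0x0000, 0x1000, 1 }, { 0x1000, 0x2000, 2 }, { 0x4000, 0x1000, 4 },
    };
    MiniListener l = { 0, 0 };

    update_pass(old, 3, new_, 3, false, &l);    /* pass 0: deletions */
    update_pass(old, 3, new_, 3, true, &l);     /* pass 1: additions */
    return l.added * 10 + l.deleted;
}
```

In the example, the first range is unchanged, the second changed size (one delete plus one add), the third disappeared, and a fourth appeared, so each pass reports two events. Doing all deletions before any additions means a listener never sees two overlapping ranges at the same time.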

Next, by following memory_listener_register(), let's see which listeners exist in QEMU:

- address_space_memory: used to handle PIO and MMIO requests coming from the kernel. Reference code:

vl.c main()
  -> cpu_exec_init_all()
    -> memory_map_init()
    ---
        memory_region_init(system_memory, NULL, "system", UINT64_MAX);
        address_space_init(&address_space_memory, system_memory, "memory");

        system_io = g_malloc(sizeof(*system_io));
        memory_region_init_io(system_io, NULL, &unassigned_io_ops, NULL, "io",
                              65536);
        address_space_init(&address_space_io, system_io, "I/O");
    ---

address_space_init()
  -> address_space_init_dispatch()
    -> memory_listener_register()

kvm_cpu_exec()
  -> cpu_physical_memory_rw()
    -> address_space_rw(&address_space_memory, addr, buf, len, is_write);

- memory slot: used to update the kernel's memslot information. Reference code:

kvm_init()
  -> memory_listener_register(&kvm_memory_listener, &address_space_memory);

kvm_region_add()
  -> kvm_set_phys_mem()
    -> kvm_set_user_memory_region()
      -> ioctl of KVM_SET_USER_MEMORY_REGION

Qemu Task Model

TM.1 Overview

QEMU itself is an event-driven framework. For details see Improving the QEMU Event Loop: http://events17.linuxfoundation.org/sites/events/files/slides/Improving%20the%20QEMU%20Event%20Loop%20-%203.pdf

The data there may be somewhat out of date, but it points us in the right general direction. After studying the code in depth, we will revise that data at the end.

All code in this section is based on QEMU 7.0.0.

TM.2 Coroutine

TM.2.1 Overview

Why did QEMU introduce coroutines?

Here we refer to two sources.

The first is the commit that introduced coroutines into QEMU:

commit 00dccaf1f848290d979a4b1e6248281ce1b32aaa

Author: Kevin Wolf

Date: Mon Jan 17 16:08:14 2011 +0000

coroutine: introduce coroutines

Asynchronous code is becoming very complex. At the same time

synchronous code is growing because it is convenient to write.

Sometimes duplicate code paths are even added, one synchronous and the

other asynchronous. This patch introduces coroutines which allow code

that looks synchronous but is asynchronous under the covers.

A coroutine has its own stack and is therefore able to preserve state

across blocking operations, which traditionally require callback

functions and manual marshalling of parameters.

...

Coroutines were introduced so that code can be written in the more convenient and simpler synchronous style while still running, under the covers, in the better-performing asynchronous way.

The second is a blog post by one of the developers, Stefan Hajnoczi: Coroutines in QEMU: The basics: http://blog.vmsplice.net/2014/01/coroutines-in-qemu-basics.html

An excerpt:

QEMU is an event-driven program with a main loop that invokes callback functions when file descriptors or timers become ready. Callbacks become hard to manage when multiple steps are needed as part of a single high-level operation:

With coroutines, complex multi-step asynchronous code becomes simple and easy to follow. See the example from the post:

/* 3-step process written using callbacks */

void start(void)

{

send("Hi, what's your name? ", step1);

}

void step1(void)

{

read_line(step2);

}

void step2(const char *name)

{

send("Hello, %s\n", name, step3);

}

void step3(void)

{

/* done! */

}

|\/|

| |

\| |/

\ /

\/

/* 3-step process using coroutines */

void coroutine_fn say_hello(void)

{

const char *name;

co_send("Hi, what's your name? ");

name = co_read_line();

co_send("Hello, %s\n", name);

/* done! */

}

In summary, the main purpose of QEMU coroutines is to make programming simpler and the code more readable without sacrificing performance.

TM.2.2 Coroutine implementation

On Linux, the coroutine implementation is built on two functions:

- sigsetjmp: a call to sigsetjmp() saves the calling environment in its env parameter for later use by siglongjmp();

- siglongjmp: the siglongjmp() function restores the environment saved by the most recent invocation of sigsetjmp() in the same thread, with the corresponding env argument.

Let's see how the two functions are used in QEMU coroutines, starting with a few core pieces:

- current: the context that is currently running. Compare with the kernel's current macro; QEMU uses a thread-local variable:

static __thread Coroutine *current;

- switch: switches from one context to another. Compare with the kernel's context_switch():

qemu_coroutine_switch()
---
    current = to_;
    ret = sigsetjmp(from->env, 0);
    if (ret == 0)
        siglongjmp(to->env, action);
---

- qemu_coroutine_enter(): switches to the next coroutine. Compare with the kernel's schedule() function:

qemu_aio_coroutine_enter()
---
    QSIMPLEQ_INSERT_TAIL(&pending, co, co_queue_next);

    /* Run co and any queued coroutines */
    while (!QSIMPLEQ_EMPTY(&pending)) {
        Coroutine *to = QSIMPLEQ_FIRST(&pending);
        ...
        QSIMPLEQ_REMOVE_HEAD(&pending, co_queue_next);
        ...
        to->caller = from;
        to->ctx = ctx;
        ...
        ret = qemu_coroutine_switch(from, to, COROUTINE_ENTER);
        ...
        QSIMPLEQ_PREPEND(&pending, &to->co_queue_wakeup);
        ...
    }
---

co_queue_wakeup holds the coroutines that were woken up while running in the context of to; see:

aio_co_enter()
---
    if (qemu_in_coroutine()) {
        Coroutine *self = qemu_coroutine_self();
        assert(self != co);
        QSIMPLEQ_INSERT_TAIL(&self->co_queue_wakeup, co, co_queue_next);
    }
---

- qemu_coroutine_yield(): switches back to the caller, i.e. whoever called qemu_coroutine_enter(). Reference code:

qemu_coroutine_yield()
---
    Coroutine *self = qemu_coroutine_self();
    Coroutine *to = self->caller;
    ...
    self->caller = NULL;
    qemu_coroutine_switch(self, to, COROUTINE_YIELD);
---
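The enter/yield pairing can be tried out with a tiny stackful coroutine. The sketch below is not QEMU's implementation: it uses getcontext/makecontext/swapcontext from <ucontext.h> for every switch instead of sigsetjmp/siglongjmp, all mini_* names are hypothetical, and the "current" pointer is a plain global rather than a thread-local. It assumes a POSIX system with glibc-style ucontext support. What it does preserve is the control flow: enter switches into the coroutine, yield switches back to whoever entered it, and local state survives across the switches because the coroutine has its own stack.

```c
#include <assert.h>
#include <stdlib.h>
#include <ucontext.h>

#define MINI_STACK_SIZE (64 * 1024)

typedef struct MiniCo {
    ucontext_t ctx;                 /* saved context of the coroutine */
    ucontext_t caller;              /* saved context of whoever entered it */
    void (*fn)(struct MiniCo *);    /* coroutine body */
    char *stack;                    /* the coroutine's private stack */
    int done;
    int value;                      /* scratch slot used by the demo */
} MiniCo;

static MiniCo *mini_current;        /* which coroutine is being entered */

static void mini_trampoline(void)
{
    MiniCo *co = mini_current;

    co->fn(co);
    co->done = 1;
    /* Returning resumes uc_link, i.e. the context saved in co->caller. */
}

static int mini_co_create(MiniCo *co, void (*fn)(MiniCo *))
{
    co->stack = malloc(MINI_STACK_SIZE);
    if (co->stack == NULL) {
        return -1;
    }
    if (getcontext(&co->ctx) < 0) {
        free(co->stack);
        return -1;
    }
    co->ctx.uc_stack.ss_sp = co->stack;
    co->ctx.uc_stack.ss_size = MINI_STACK_SIZE;
    co->ctx.uc_link = &co->caller;
    co->fn = fn;
    co->done = 0;
    co->value = 0;
    makecontext(&co->ctx, mini_trampoline, 0);
    return 0;
}

/* Switch into the coroutine; returns when it yields or finishes. */
static void mini_co_enter(MiniCo *co)
{
    MiniCo *prev = mini_current;

    mini_current = co;
    swapcontext(&co->caller, &co->ctx);
    mini_current = prev;
}

/* Switch back to the caller of mini_co_enter(). */
static void mini_co_yield(MiniCo *co)
{
    swapcontext(&co->ctx, &co->caller);
}

/* A three-step body that reads like straight-line code. */
static void three_steps(MiniCo *co)
{
    co->value = 1;
    mini_co_yield(co);
    co->value = 2;
    mini_co_yield(co);
    co->value = 3;
}

/* Re-enters the coroutine until it finishes, recording each step as a digit. */
static int coroutine_demo(void)
{
    MiniCo co;
    int trace = 0;

    if (mini_co_create(&co, three_steps) < 0) {
        return -1;
    }
    while (!co.done) {
        mini_co_enter(&co);
        trace = trace * 10 + co.value;
    }
    free(co.stack);
    return trace;
}
```

The caller regains control after every step, much like the main loop regains control whenever a QEMU coroutine yields while waiting for I/O.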

Now that we understand QEMU's coroutine implementation, let's look at a few simple examples.

mutex implementation:

qemu_co_mutex_wake()

---

mutex->ctx = co->ctx;

aio_co_wake(co);

-> aio_co_enter();

---

qemu_co_mutex_lock()

-> qemu_coroutine_yield()

laio implementation:

laio_co_submit()

---

struct qemu_laiocb laiocb = {

.co = qemu_coroutine_self(),

.nbytes = qiov->size,

.ctx = s,

.ret = -EINPROGRESS,

.is_read = (type == QEMU_AIO_READ),

.qiov = qiov,

};

ret = laio_do_submit(fd, &laiocb, offset, type, dev_max_batch);

if (ret < 0) {

return ret;

}

if (laiocb.ret == -EINPROGRESS) {

qemu_coroutine_yield();

}

return laiocb.ret;

---

qemu_laio_process_completions()

-> qemu_laio_process_completion()

---

if (!qemu_coroutine_entered(laiocb->co)) {

aio_co_wake(laiocb->co);

}

---

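laio is built on Linux libaio, whose interface is asynchronous by nature; with coroutines, however, the code reads like synchronous code.

All of the above could also be implemented with Linux processes or threads, but the overhead would be very high. Moreover, because tasks keep going to sleep, new tasks would have to be created continuously to keep other work going, which would greatly increase the number of threads. The kernel's io_uring makes buffered I/O asynchronous in exactly this way, through kernel threads called io-wq. From this perspective, io-wq does deliver the expected functionality, but it is not the optimal solution in terms of system performance.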

TM.3 glib

This part uses many glib functions; see The Main Event Loop: http://tux.iar.unlp.edu.ar/~fede/manuales/glib/glib-The-Main-Event-Loop.html

TM.4 AIO

TM.4.1 AIO driving model

What drives aio?

QEMU has one basic aio instance, qemu_aio_context. Let's see how it runs.

Initialization; see the following code:

qemu_init_main_loop()

---

qemu_aio_context = aio_context_new(errp);

-> ctx = (AioContext *) g_source_new(&aio_source_funcs, sizeof(AioContext));

...

src = aio_get_g_source(qemu_aio_context);

g_source_set_name(src, "aio-context");

g_source_attach(src, NULL);

---

qemu_main_loop()

---

while (!main_loop_should_exit()) {

...

main_loop_wait(false);

-> os_host_main_loop_wait()

-> qemu_poll_ns()

-> glib_pollfds_poll()

-> g_main_context_dispatch()

...

}

---

qemu_aio_context is one of the sources of the glib context of the QEMU main loop.

New fds are added to qemu_aio_context through the following interface:

aio_set_fd_handler()

---

new_node = g_new0(AioHandler, 1);

...

new_node->pfd.fd = fd;

...

g_source_add_poll(&ctx->source, &new_node->pfd);

new_node->pfd.events = (io_read ? G_IO_IN | G_IO_HUP | G_IO_ERR : 0);

new_node->pfd.events |= (io_write ? G_IO_OUT | G_IO_ERR : 0);

QLIST_INSERT_HEAD_RCU(&ctx->aio_handlers, new_node, node);

---

The fd is added to qemu_aio_context (or another aio ctx), its handlers are set, and the node is linked onto ctx->aio_handlers. When an event arrives on the fd, the dispatch callback is invoked:

aio_source_funcs.aio_ctx_dispatch()

-> aio_dispatch()

-> aio_dispatch_handlers() // iterate ctx->aio_handlers

-> aio_dispatch_handler()

---

revents = node->pfd.revents & node->pfd.events;

node->pfd.revents = 0;

...

if (!QLIST_IS_INSERTED(node, node_deleted) &&

(revents & (G_IO_IN | G_IO_HUP | G_IO_ERR)) &&

aio_node_check(ctx, node->is_external) &&

node->io_read) {

node->io_read(node->opaque);

...

}

if (!QLIST_IS_INSERTED(node, node_deleted) &&

(revents & (G_IO_OUT | G_IO_ERR)) &&

aio_node_check(ctx, node->is_external) &&

node->io_write) {

node->io_write(node->opaque);

...

}

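---

It iterates over all aio_handlers, checks whether the corresponding fd has events, and calls the matching handler.

In summary, at the lowest level aio relies on fd multiplexing mechanisms such as poll/epoll.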

TM.4.2 aio bh

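BH stands for bottom half. It inserts an operation that must run inside a given aio context into that context, similar to how a Linux kernel interrupt handler defers part of its work to the bottom half, for example moving operations that may sleep into a kworker.

aio bh relies on the aio notifier mechanism. Look at the code: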

aio_context_new()

---

ret = event_notifier_init(&ctx->notifier, false);

---

ret = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);

...

e->rfd = e->wfd = ret;

---

...

aio_set_event_notifier(ctx, &ctx->notifier,

false,

aio_context_notifier_cb,

aio_context_notifier_poll,

aio_context_notifier_poll_ready);

-> aio_set_fd_handler(ctx, event_notifier_get_fd(notifier) ...); // event_notifier_get_fd() is 'e->rfd'

...

---

The aio notifier is simply an eventfd built into the aio context:

aio_notify()

-> event_notifier_set()

-> ret = write(e->wfd, &value, sizeof(value)); // value = 1

aio_context_notifier_cb()

-> event_notifier_test_and_clear()

-> len = read(e->rfd, buffer, sizeof(buffer));

aio_notify() merely writes a 1 to the eventfd. Clearly the point is not to pass data but to make the main loop enter the aio dispatch function.
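The wake-up behavior of an eventfd is easy to observe directly. The sketch below is a standalone Linux example, not QEMU code; notifier_pending and eventfd_demo are hypothetical names. It shows that a write of a nonzero 8-byte value makes the fd readable for poll(), that several writes accumulate into one counter, and that a single read both returns the counter and clears the readable state.

```c
#include <assert.h>
#include <poll.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Non-blocking check: would a poll-based main loop wake up for this fd? */
static int notifier_pending(int efd)
{
    struct pollfd pfd = { .fd = efd, .events = POLLIN };

    return poll(&pfd, 1, 0) == 1 && (pfd.revents & POLLIN) != 0;
}

/* Returns 0 if the eventfd behaved as described above, -1 otherwise. */
static int eventfd_demo(void)
{
    int efd = eventfd(0, EFD_NONBLOCK | EFD_CLOEXEC);
    uint64_t one = 1;
    uint64_t counter = 0;
    int before, after_set, after_clear;

    if (efd < 0) {
        return -1;
    }
    before = notifier_pending(efd);             /* nothing written yet */

    /* "notify" twice: each write adds its value to the kernel counter */
    if (write(efd, &one, sizeof(one)) != (ssize_t)sizeof(one) ||
        write(efd, &one, sizeof(one)) != (ssize_t)sizeof(one)) {
        close(efd);
        return -1;
    }
    after_set = notifier_pending(efd);          /* fd is now readable */

    /* "test and clear": one read returns the sum and resets the counter */
    if (read(efd, &counter, sizeof(counter)) != (ssize_t)sizeof(counter)) {
        close(efd);
        return -1;
    }
    after_clear = notifier_pending(efd);        /* no longer readable */

    close(efd);
    return (before == 0 && after_set == 1 && after_clear == 0 &&
            counter == 2) ? 0 : -1;
}
```

Because repeated notifications collapse into one readable event, any number of notify calls before the loop wakes up costs only a single dispatch.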

aio bh is built on this mechanism. Look at the code:

aio_bh_schedule_oneshot()

-> aio_bh_schedule_oneshot_full()

-> aio_bh_enqueue()

---

old_flags = qatomic_fetch_or(&bh->flags, BH_PENDING | new_flags);

if (!(old_flags & BH_PENDING)) {

QSLIST_INSERT_HEAD_ATOMIC(&ctx->bh_list, bh, next);

}

aio_notify(ctx);

---

aio_ctx_dispatch()

-> aio_dispatch()

-> aio_bh_poll()

---

QSLIST_MOVE_ATOMIC(&slice.bh_list, &ctx->bh_list);

QSIMPLEQ_INSERT_TAIL(&ctx->bh_slice_list, &slice, next);

while ((s = QSIMPLEQ_FIRST(&ctx->bh_slice_list))) {

...

bh = aio_bh_dequeue(&s->bh_list, &flags);

...

if ((flags & (BH_SCHEDULED | BH_DELETED)) == BH_SCHEDULED) {

...

aio_bh_call(bh);

}

...

}

---

aio_bh_schedule_oneshot() atomically inserts a bh into the ctx and notifies it; the ctx then runs aio_bh_poll(), which invokes the callback.

TM.4.3 aio coroutine schedule

We have covered the low-level implementation of QEMU coroutines, but how does QEMU use them?

Consider the following scenario:

virtio_mmio_write()

-> virtio_queue_notify()

-> vq->handle_output()

virtio_blk_handle_output()

-> virtio_blk_handle_vq()

-> virtio_blk_handle_request()

-> virtio_blk_submit_multireq()

-> submit_requests()

-> blk_aio_pwritev() // virtio_blk_rw_complete()

-> blk_aio_prwv()

blk_aio_prwv()

---

acb = blk_aio_get(&blk_aio_em_aiocb_info, blk, cb, opaque);

acb->rwco = (BlkRwCo) {

.blk = blk,

.offset = offset,

.iobuf = iobuf,

.flags = flags,

.ret = NOT_DONE,

};

acb->bytes = bytes;

acb->has_returned = false;

co = qemu_coroutine_create(co_entry, acb);

bdrv_coroutine_enter(blk_bs(blk), co);

acb->has_returned = true;

if (acb->rwco.ret != NOT_DONE) {

replay_bh_schedule_oneshot_event(blk_get_aio_context(blk),

blk_aio_complete_bh, acb);

}

---

bdrv_coroutine_enter()

-> aio_co_enter()

---

if (ctx != qemu_get_current_aio_context()) {

aio_co_schedule(ctx, co);

return;

}

if (qemu_in_coroutine()) {

Coroutine *self = qemu_coroutine_self();

assert(self != co);

QSIMPLEQ_INSERT_TAIL(&self->co_queue_wakeup, co, co_queue_next);

} else {

aio_context_acquire(ctx);

qemu_aio_coroutine_enter(ctx, co);

aio_context_release(ctx);

}

---

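In the end, the vCPU thread calls aio_co_enter(). What happens next? The vCPU thread should not start running a coroutine that belongs to another thread's AioContext; the deciding factor is qemu_get_current_aio_context(). Reference code: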

AioContext *qemu_get_current_aio_context(void)

{

AioContext *ctx = get_my_aiocontext();

if (ctx) {

return ctx;

}

...

}

void qemu_set_current_aio_context(AioContext *ctx)

{

assert(!get_my_aiocontext());

set_my_aiocontext(ctx);

}

iothread_run()

qemu_init_main_loop()

The current aio context is stored in a thread-local variable, and only two functions set it: iothread_run() and qemu_init_main_loop(). The vcpu thread never sets it, so aio_co_enter() takes the aio_co_schedule() path. See the code:

aio_co_schedule()

---

QSLIST_INSERT_HEAD_ATOMIC(&ctx->scheduled_coroutines,

co, co_scheduled_next);

qemu_bh_schedule(ctx->co_schedule_bh);

---

This triggers the bh, which is created here:

aio_context_new()

-> ctx->co_schedule_bh = aio_bh_new(ctx, co_schedule_bh_cb, ctx);

The bh handler, which triggers coroutine scheduling:

co_schedule_bh_cb()

---

AioContext *ctx = opaque;

QSLIST_HEAD(, Coroutine) straight, reversed;

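/*

 * The atomic list always inserts new members at the head, so

 * scheduled_coroutines holds its tasks in last-in-first-out order;

 * reversed and straight exist to invert the list and restore

 * first-in-first-out order.

 */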

QSLIST_MOVE_ATOMIC(&reversed, &ctx->scheduled_coroutines);

QSLIST_INIT(&straight);

while (!QSLIST_EMPTY(&reversed)) {

Coroutine *co = QSLIST_FIRST(&reversed);

QSLIST_REMOVE_HEAD(&reversed, co_scheduled_next);

QSLIST_INSERT_HEAD(&straight, co, co_scheduled_next);

}

while (!QSLIST_EMPTY(&straight)) {

Coroutine *co = QSLIST_FIRST(&straight);

QSLIST_REMOVE_HEAD(&straight, co_scheduled_next);

...

qemu_aio_coroutine_enter(ctx, co);

...

}

---
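The push-at-head, then reverse-on-drain pattern described in the comment above can be sketched in isolation. This is a minimal illustration using C11 atomics, not QEMU's QSLIST macros; Task, TaskList, task_push() and task_take_all_fifo() are hypothetical names introduced for this example only.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

/* Hypothetical stand-in for a Coroutine: an id plus the list link. */
typedef struct Task {
    int id;
    struct Task *next;
} Task;

/* Shared list head: many producers push, one consumer drains. */
typedef struct {
    _Atomic(Task *) head;
} TaskList;

/* Push at the head with a compare-and-swap loop; insertion order is LIFO. */
static void task_push(TaskList *list, Task *t)
{
    assert(t != NULL);
    Task *old = atomic_load(&list->head);
    do {
        t->next = old;
    } while (!atomic_compare_exchange_weak(&list->head, &old, t));
}

/* Detach the whole list in one atomic step, then reverse the detached
 * chain so that the task pushed first is returned first (FIFO). */
static Task *task_take_all_fifo(TaskList *list)
{
    Task *reversed = atomic_exchange(&list->head, (Task *)NULL);
    Task *straight = NULL;

    while (reversed) {
        Task *t = reversed;
        reversed = t->next;
        t->next = straight;
        straight = t;
    }
    return straight;
}
```

Only the head pointer is touched atomically; the reversal runs on a private chain, so the consumer needs no further synchronization with producers.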

Device Emulation

DE.0 Overview

QEMU is device emulation software; this is its core function.

Device emulation is split into a front-end and a back-end.

DE.1 CPU和chipset

DE.1.1 Chipset

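Consider the q35 chipset:

Here we ask two questions:

- Do we need every function shown in the figure?

- Does QEMU need to move to a newer chipset?

On the first question: when we boot a virtual machine used purely for computation, USB, graphics, sound and similar functions are all unnecessary. Now look at the command line of a running virtual machine: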

-name guest=will1,debug-threads=on -S -object {"qom-type":"secret","id":"masterKey0","format":"raw","file":"/var/lib/libvirt/qemu/domain-1-will1/master-key.aes"} -machine pc-q35-rhel9.0.0,usb=off,dump-guest-core=off,memory-backend=pc.ram -accel kvm -cpu host,migratable=on -m 16384 -object {"qom-type":"memory-backend-ram","id":"pc.ram","size":17179869184} -overcommit mem-lock=off -smp 8,sockets=8,cores=1,threads=1 -uuid 48fd510f-4c9e-4761-b37c-5dfe0af49a63 -display none -no-user-config -nodefaults -chardev socket,id=charmonitor,fd=23,server=on,wait=off -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc,driftfix=slew -global kvm-pit.lost_tick_policy=delay -no-hpet -no-shutdown -global ICH9-LPC.disable_s3=1 -global ICH9-LPC.disable_s4=1 -boot strict=on -device {"driver":"pcie-root-port","port":8,"chassis":1,"id":"pci.1","bus":"pcie.0","multifunction":true,"addr":"0x1"} -device {"driver":"pcie-root-port","port":9,"chassis":2,"id":"pci.2","bus":"pcie.0","addr":"0x1.0x1"} -device {"driver":"pcie-root-port","port":10,"chassis":3,"id":"pci.3","bus":"pcie.0","addr":"0x1.0x2"} -device {"driver":"pcie-root-port","port":11,"chassis":4,"id":"pci.4","bus":"pcie.0","addr":"0x1.0x3"} -device {"driver":"pcie-root-port","port":12,"chassis":5,"id":"pci.5","bus":"pcie.0","addr":"0x1.0x4"} -device {"driver":"pcie-root-port","port":13,"chassis":6,"id":"pci.6","bus":"pcie.0","addr":"0x1.0x5"} -device {"driver":"pcie-root-port","port":14,"chassis":7,"id":"pci.7","bus":"pcie.0","addr":"0x1.0x6"} -device {"driver":"pcie-root-port","port":15,"chassis":8,"id":"pci.8","bus":"pcie.0","addr":"0x1.0x7"} -device {"driver":"pcie-root-port","port":16,"chassis":9,"id":"pci.9","bus":"pcie.0","multifunction":true,"addr":"0x2"} -device {"driver":"pcie-root-port","port":17,"chassis":10,"id":"pci.10","bus":"pcie.0","addr":"0x2.0x1"} -device {"driver":"pcie-root-port","port":18,"chassis":11,"id":"pci.11","bus":"pcie.0","addr":"0x2.0x2"} -device 
{"driver":"pcie-root-port","port":19,"chassis":12,"id":"pci.12","bus":"pcie.0","addr":"0x2.0x3"} -device {"driver":"pcie-root-port","port":20,"chassis":13,"id":"pci.13","bus":"pcie.0","addr":"0x2.0x4"} -device {"driver":"pcie-root-port","port":21,"chassis":14,"id":"pci.14","bus":"pcie.0","addr":"0x2.0x5"} -device {"driver":"qemu-xhci","p2":15,"p3":15,"id":"usb","bus":"pci.3","addr":"0x0"} -device {"driver":"virtio-serial-pci","id":"virtio-serial0","bus":"pci.4","addr":"0x0"} -blockdev {"driver":"file","filename":"/vm/will1_sda.qcow2","node-name":"libvirt-2-storage","auto-read-only":true,"discard":"unmap"} -blockdev {"node-name":"libvirt-2-format","read-only":false,"driver":"qcow2","file":"libvirt-2-storage","backing":null} -device {"driver":"virtio-blk-pci","bus":"pci.5","addr":"0x0","drive":"libvirt-2-format","id":"virtio-disk0","bootindex":1} -device {"driver":"ide-cd","bus":"ide.0","id":"sata0-0-0"} -netdev tap,fd=24,vhost=on,vhostfd=26,id=hostnet0 -device {"driver":"virtio-net-pci","netdev":"hostnet0","id":"net0","mac":"52:54:00:3b:87:a1","bus":"pci.1","addr":"0x0"} -netdev user,id=hostnet1 -device {"driver":"virtio-net-pci","netdev":"hostnet1","id":"net1","mac":"52:54:00:c2:53:da","bus":"pci.2","addr":"0x0"} -chardev pty,id=charserial0 -device {"driver":"isa-serial","chardev":"charserial0","id":"serial0","index":0} -chardev socket,id=charchannel0,fd=22,server=on,wait=off -device {"driver":"virtserialport","bus":"virtio-serial0.0","nr":1,"chardev":"charchannel0","id":"channel0","name":"org.qemu.guest_agent.0"} -audiodev {"id":"audio1","driver":"none"} -device {"driver":"virtio-balloon-pci","id":"balloon0","bus":"pci.6","addr":"0x0"} -object {"qom-type":"rng-random","id":"objrng0","filename":"/dev/urandom"} -device {"driver":"virtio-rng-pci","rng":"objrng0","id":"rng0","bus":"pci.7","addr":"0x0"} -sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny -msg timestamp=on

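Its corresponding devices:

The virtual NICs and storage we use are all virtio devices, not the chipset's native SATA and integrated NIC.

What q35 really brings to users is not q35 itself but PCIe. Leaving aside that a large share of devices in recent years are PCIe, even in device emulation PCIe's MSI performs better than the IOAPIC.

This also answers the second question: a newer chipset does not improve performance, because most devices today are still PCIe, so upgrading the chipset is unnecessary.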

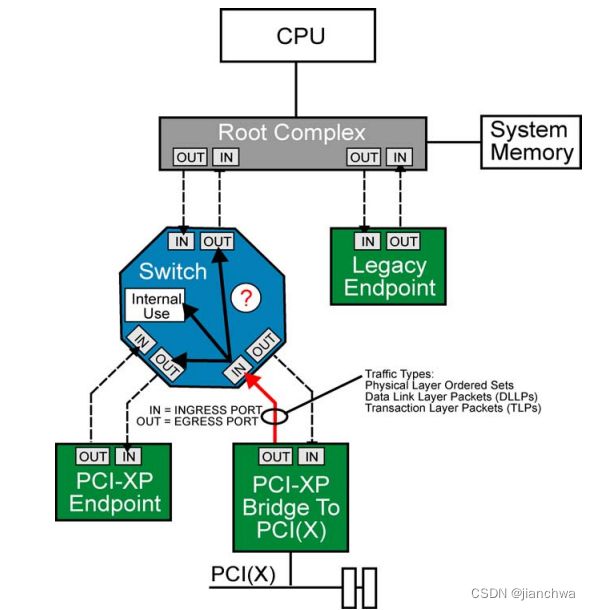

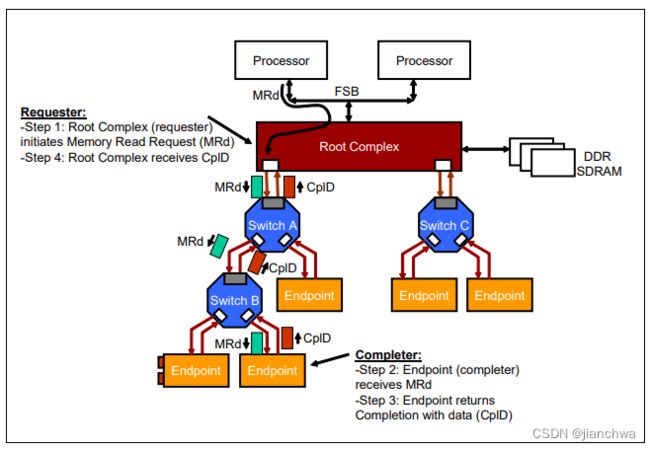

DE.1.2 PCIE

We need to know more about PCIe to understand why it is emulated the way it is.

First, look at the PCIe topology: PCI Express System Architecture 200 (abridged edition) https://www.mindshare.com/files/ebooks/pci%20express%20system%20architecture.pdf

PCI Express System Architecture, Chapter 3 (complete) https://www.pearsonhighered.com/assets/samplechapter/0/3/2/1/0321156307.pdf

The key passage is quoted below:

Unlike shared-bus architectures such as PCI and PCI-X, where traffic is visible to each device and routing is mainly a concern of bridges, PCI Express devices are dependent on each other to accept traffic or forward it in the direction of the ultimate recipient.

The links between PCIe Endpoints are dedicated point-to-point links. There is one exception, the PCIe Switch; its working principle is shown below:

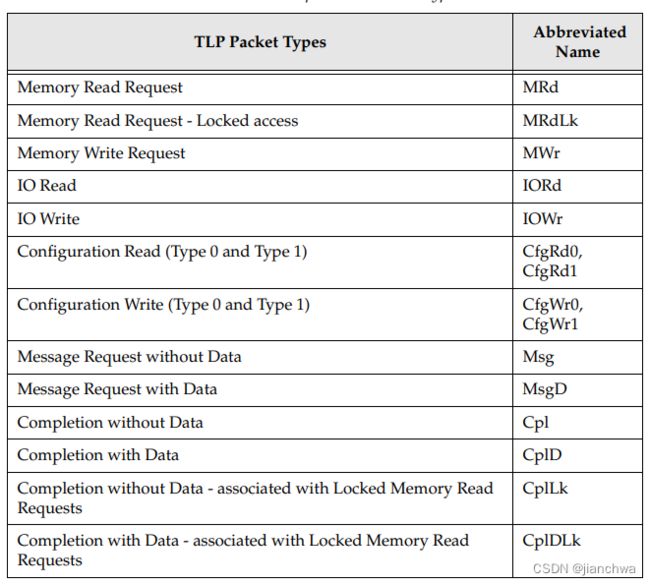

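The link between a PCIe Switch's upstream port and the root complex is shared by the devices on its downstream ports. The PCIe switch routes each TLP (Transaction Layer Packet) arriving at the upstream port by comparing the address information in the TLP with the configuration held in the type 1 configuration space of each downstream port.

Note: every downstream port has its own type 1 configuration space.

An ordinary PCIe Endpoint only needs to compare the address against its own BARs.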
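The address comparison described above can be sketched as follows. This is a deliberately simplified model, not QEMU or hardware code: only a single memory window per downstream port is modeled, and DownstreamPort, Bar, switch_route() and endpoint_claims() are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified view of a downstream port's type 1 header:
 * only one memory window (base and limit, both inclusive) is modeled. */
typedef struct {
    uint64_t mem_base;
    uint64_t mem_limit;
} DownstreamPort;

/* Return the index of the downstream port whose window contains addr,
 * or -1 when no window matches. */
static int switch_route(const DownstreamPort *ports, size_t n, uint64_t addr)
{
    assert(ports != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        if (addr >= ports[i].mem_base && addr <= ports[i].mem_limit) {
            return (int)i;
        }
    }
    return -1;
}

/* Hypothetical BAR: a base address and a size in bytes. */
typedef struct {
    uint64_t base;
    uint64_t size;
} Bar;

/* An endpoint only checks whether addr falls inside one of its own BARs. */
static int endpoint_claims(const Bar *bars, size_t n, uint64_t addr)
{
    assert(bars != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        if (addr >= bars[i].base && addr - bars[i].base < bars[i].size) {
            return 1;
        }
    }
    return 0;
}
```

The switch decides where to forward by window, while the endpoint decides whether to accept by BAR; the two checks are independent.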

PCIe is a packet-based protocol.

For the PCI root port, see: PCI EXPRESS GUIDELINES https://raw.githubusercontent.com/qemu/qemu/master/docs/pcie.txt

Root Bus (pcie.0)

=====================

Place only the following kinds of devices directly on the Root Complex:

- PCI Devices (e.g. network card, graphics card, IDE controller), not controllers. Place only legacy PCI devices on the Root Complex. These will be considered Integrated Endpoints. Note: Integrated Endpoints are not hot-pluggable. Although the PCI Express spec does not forbid PCI Express devices as Integrated Endpoints, existing hardware mostly integrates legacy PCI devices with the Root Complex. Guest OSes are suspected to behave strangely when PCI Express devices are integrated with the Root Complex.

- PCI Express Root Ports (pcie-root-port), for starting exclusively PCI Express hierarchies.

- PCI Express to PCI Bridge (pcie-pci-bridge), for starting legacy PCI hierarchies.

- Extra Root Complexes (pxb-pcie), if multiple PCI Express Root Buses are needed.

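What we learn from this is that PCIe devices are not attached directly to the Root Complex, that is, the pcie.0 bus; they are attached to PCIe root ports instead. The specification does not forbid direct attachment; it is more of a historical convention. The QEMU command line shown earlier follows the same pattern.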

(To Be Continued)

PCIe(一) —— 基础概念与设备树 | Soul Orbit

DE.2 Network Devices

DE.2.1 Configuration Parameters

For the network device configuration parameters, see:

QEMU's new -nic command line option - QEMU https://www.qemu.org/2018/05/31/nic-parameter/

That post is very detailed; we excerpt part of it here.

The original form of network device configuration is -net, for example:

-net nic,model=e1000 -net user

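Each -net option attaches its net client to a net hub, so the front-end and back-end above are connected to each other and forward traffic to each other. This approach has a problem, though: in the configuration below, all four clients end up on the same hub, so traffic from either NIC reaches both back-ends: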

-net nic,model=e1000 -net user -net nic,model=virtio -net tap

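This can be solved with the vlan parameter, as follows:

-net nic,model=e1000,vlan=0 -net user,vlan=0 -net nic,model=virtio,vlan=1 -net tap,vlan=1

The vlan here has nothing to do with IEEE 802.1Q, and it caused many misconfigurations, so it was removed.

Once the more sensible -netdev option was introduced, the problem above no longer existed:

-netdev user,id=n1 -device e1000,netdev=n1 -netdev tap,id=n2 -device virtio-net,netdev=n2

Later the -nic option was added as well, with these goals: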

- is easier to use (and shorter to type) than -netdev <backend>,id=<id> -device <dev>,netdev=<id>

- can be used to configure on-board / non-pluggable NICs, too

- does not place a hub between the NIC and the host back-end

Instead of -netdev tap,id=n1 -device e1000,netdev=n1, you can simply type -nic tap,model=e1000.

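DE.2.2 netdev Parameter

This subsection looks at how netdev works in the code. First, note that netdev appears in two places:

-netdev tap,id=n1

-device e1000,netdev=n1

- -netdev, which creates a network back-end and names it through id

- the netdev sub-option of -device, which selects the back-end for that network front-end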

qemu_init()

-> qemu_create_late_backends()

-> net_init_clients()

---

qemu_opts_foreach(qemu_find_opts("netdev"), net_init_netdev, NULL, errp);

qemu_opts_foreach(qemu_find_opts("nic"), net_param_nic, NULL, errp);

qemu_opts_foreach(qemu_find_opts("net"), net_init_client, NULL, errp);

---

-> qmp_x_exit_preconfig()

-> qemu_create_cli_devices()

-> qemu_opts_foreach(qemu_find_opts("device"),

device_init_func, NULL, &error_fatal);

-> device_init_func()

-> qdev_device_add()

-> qdev_device_add_from_qdict()

-> qdev_new()

-> object_set_properties_from_keyval(&dev->parent_obj, dev->opts, from_json, errp)

-> qdev_realize()

- net_init_clients() walks all -netdev options, initializes every network back-end and creates a net client for each; see the tap code:

net_init_netdev()

-> net_client_init()

-> net_client_init1()

-> net_client_init_fun[type]()

-> net_init_tap()

-> net_init_tap_one()

-> net_tap_fd_init()

-> qemu_new_net_client() //net_tap_info

- qemu_create_cli_devices() then creates all devices from the -device options, network devices included; see the code:

static Property rtl8139_properties[] = {

DEFINE_NIC_PROPERTIES(RTL8139State, conf),

DEFINE_PROP_END_OF_LIST(),

};

#define DEFINE_NIC_PROPERTIES(_state, _conf) \

DEFINE_PROP_MACADDR("mac", _state, _conf.macaddr), \

DEFINE_PROP_NETDEV("netdev", _state, _conf.peers)

#define DEFINE_PROP_NETDEV(_n, _s, _f) \

DEFINE_PROP(_n, _s, _f, qdev_prop_netdev, NICPeers)

const PropertyInfo qdev_prop_netdev = {

.name = "str",

.description = "ID of a netdev to use as a backend",

.get = get_netdev,

.set = set_netdev,

};

set_netdev()

---

queues = qemu_find_net_clients_except(str, peers, NET_CLIENT_DRIVER_NIC, MAX_QUEUE_NUM)

...

for (i = 0; i < queues; i++) {

...

ncs[i] = peers[i];

ncs[i]->queue_index = i;

}

---

pci_rtl8139_realize()

---

s->nic = qemu_new_nic(&net_rtl8139_info, &s->conf, object_get_typename(OBJECT(dev)), d->id, s);

---

---

NetClientState **peers = conf->peers.ncs;

...

for (i = 0; i < queues; i++) {

qemu_net_client_setup(&nic->ncs[i], info, peers[i], model, name, NULL, true);

nic->ncs[i].queue_index = i;

}

---

The conf->peers.ncs used in pci_rtl8139_realize() comes from set_netdev(), and by the time set_netdev() runs, the -netdev options have already been initialized; qemu_net_client_setup() makes the rtl8139 and tap net clients each other's peer:

qemu_net_client_setup()

---

if (peer) {

assert(!peer->peer);

nc->peer = peer;

peer->peer = nc;

}

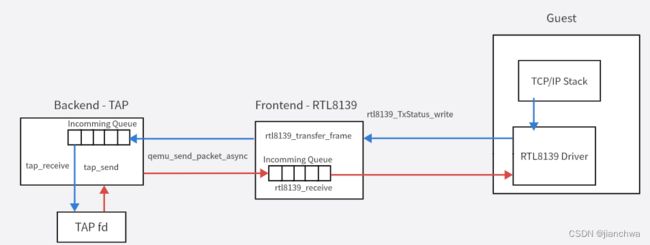

---DE.2.3 NetQueue

NetQueue是一个简单的用于连接Net前后端的数据结构,参考下图:

上图中的关键代码路径为:

net_init_tap()

-> net_init_tap_one()

-> qemu_new_net_client() // net_tap_info

-> qemu_net_client_setup()

---

if (peer) {

nc->peer = peer;

peer->peer = nc;

}

nc->incoming_queue = qemu_new_net_queue(qemu_deliver_packet_iov, nc);

---

-> nc->incoming_queue = qemu_new_net_queue(qemu_deliver_packet_iov, nc);

-> tap_read_poll()

-> tap_update_fd_handler() // read_poll == tap_send, write_poll == tap_writable

-> qemu_set_fd_handler()

tap_send()

-> qemu_send_packet_async()

-> qemu_send_packet_async_with_flags()

---

queue = sender->peer->incoming_queue;

return qemu_net_queue_send(queue, sender, flags, buf, size, sent_cb);

---

-> qemu_net_queue_deliver()

qemu_deliver_packet_iov()

-> nc->info->receive()

rtl8139_receive()

-> rtl8139_do_receive()

rtl8139_io_writel()

-> rtl8139_TxStatus_write()

-> rtl8139_transmit()

-> rtl8139_transmit_one()

-> rtl8139_transfer_frame()

-> qemu_send_packet()

-> qemu_sendv_packet_async()

-> qemu_net_queue_send_iov()

-> qemu_net_queue_deliver_iov()

-> tap_receive()

-> tap_write_packet() // write to fd of tap directly

NetQueue has two main functions:

- qemu_net_queue_receive(), used mainly for loopback

- qemu_send_packet_async_with_flags(), used to send a packet to the peer; see the code:

qemu_send_packet_async_with_flags()

---

queue = sender->peer->incoming_queue;

return qemu_net_queue_send(queue, sender, flags, buf, size, sent_cb);

---

DE.2.4 Backend

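The most common back-end combination in production is tap + vhost. A tap (terminal access port) lets us inject traffic into, or read traffic from, the tap NIC through a char device (a socket in the kernel). Unlike a physical NIC, whose data goes to or comes from a NIC device on PCIe and a network cable, a tap's data comes from user space or vhost, as shown in the figure below:

Between the tap device and the network protocol stack there may be a layer of stacked netdevs, that is, stacked network devices.

I do not know the formal name of this layer. It can be compared to storage, where device stacking also exists; the most common examples are the device mapper that lvm relies on and md-raid. As virtual storage devices, they copy, split or redirect a bio before sending it to the underlying storage device. The network stack has the same pattern, and the most typical example is the bridge.

The tap fd is passed in through the QEMU command line, for example: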

-netdev tap,fd=27,id=hostnet0

-device rtl8139,netdev=hostnet0,id=net0,mac=52:54:00:b9:a7:5d,bus=pci.0,addr=0x3

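This fd is created by libvirtd; libvirtd forks the qemu child process, which inherits the fd.

A parent can hand an fd to its child simply by inheritance, but how does a child hand an fd to its parent? The answer is a UNIX socket, whose sendmsg/recvmsg can pass fds between processes.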
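Passing an fd over a UNIX socket uses an SCM_RIGHTS control message. The following is a minimal sketch of both directions using the standard sendmsg()/recvmsg() interfaces; send_fd() and recv_fd() are helper names made up for this example and are not taken from QEMU or libvirt.

```c
#include <assert.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/* Control buffer sized for exactly one fd; the union forces alignment. */
typedef union {
    char buf[CMSG_SPACE(sizeof(int))];
    struct cmsghdr align;
} FdControl;

/* Send one file descriptor over a connected UNIX socket; 0 on success. */
static int send_fd(int sock, int fd)
{
    char dummy = 'x';                 /* one data byte carries the message */
    struct iovec iov = { .iov_base = &dummy, .iov_len = 1 };
    FdControl ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;

    assert(sock >= 0 && fd >= 0);
    memset(&ctrl, 0, sizeof(ctrl));
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;     /* payload is an array of fds */
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(cmsg), &fd, sizeof(int));

    return sendmsg(sock, &msg, 0) == 1 ? 0 : -1;
}

/* Receive one file descriptor; returns the new fd, or -1 on failure. */
static int recv_fd(int sock)
{
    char dummy;
    struct iovec iov = { .iov_base = &dummy, .iov_len = 1 };
    FdControl ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;
    int fd = -1;

    assert(sock >= 0);
    memset(&ctrl, 0, sizeof(ctrl));
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    if (recvmsg(sock, &msg, 0) <= 0) {
        return -1;
    }
    cmsg = CMSG_FIRSTHDR(&msg);
    if (!cmsg || cmsg->cmsg_level != SOL_SOCKET ||
        cmsg->cmsg_type != SCM_RIGHTS) {
        return -1;
    }
    memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
    return fd;
}
```

The receiver gets a new descriptor number that refers to the same open file, much like the result of dup() across processes.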

For the vhost part, see: KVM/QEMU IO Virtualization (jianchwa's blog on CSDN) https://blog.csdn.net/home19900111/article/details/128610752?spm=1001.2014.3001.5501

For what happens to traffic after it leaves the tap or before it enters the tap, see these two links:

KVM Virtual Networking Concepts - NovaOrdis Knowledge Base https://kb.novaordis.com/index.php/KVM_Virtual_Networking_Concepts

What is OpenVSwitch https://nsrc.org/workshops/2014/nznog-sdn/raw-attachment/wiki/Agenda/OpenVSwitch.pdf

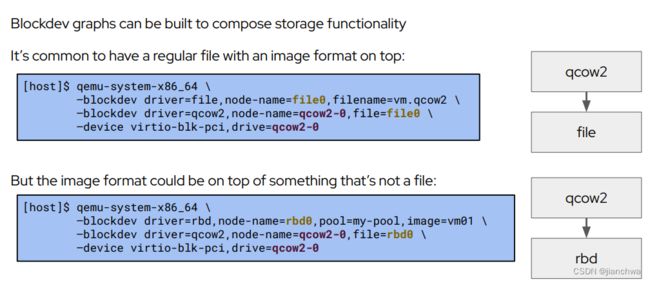

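DE.3 Storage Devices

DE.3.1 Configuration Parameters

For the storage device configuration parameters, see the document below; note that this subsection is based on the qemu 7.0.0 code.

QEMU Block Layer Concepts & Features https://vmsplice.net/~stefan/qemu-block-layer-features-and-concepts.pdf

As with network devices, storage configuration parameters come in two kinds:

- -device, which configures the front-end and links to the back-end through drive=xxx

- -blockdev, which configures the back-end and defines its own name through node-name so that the front-end can reference it

What is special about -blockdev is that nodes can be stacked, each pointing to the next layer down through the 'file' parameter. In the figure, for example, qcow2 describes the image format, while file and rbd are what actually hold the image. A further layer can also be added: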

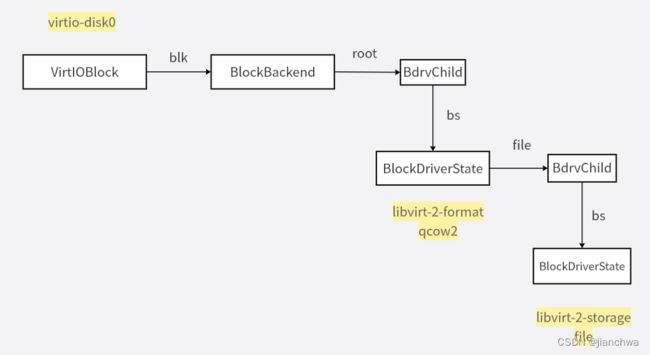

DE.3.2 BlockBackend

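Three data structures are related to BlockBackend:

- BlockBackend, which the storage front-end references directly

- BlockDriver, for example raw, qcow2, file, rbd and nbd are all BlockDrivers

- BlockDriverState, an instance of a BlockDriver

Consider the following configuration parameters: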

-blockdev {"driver":"file","filename":"/vm/will1_sda.qcow2","node-name":"libvirt-2-storage","auto-read-only":true,"discard":"unmap"}

-blockdev {"node-name":"libvirt-2-format","read-only":false,"driver":"qcow2","file":"libvirt-2-storage","backing":null}

-device {"driver":"virtio-blk-pci","bus":"pci.5","addr":"0x0","drive":"libvirt-2-format","id":"virtio-disk0","bootindex":1}

The resulting relationship between the data structures is:

The stacking of BlockDriverStates shows up at creation time as follows:

configure_blockdev()

-> qmp_blockdev_add()

-> bds_tree_init()

-> bdrv_open()

-> bdrv_open_inherit()

bdrv_open_inherit()

---

bs = bdrv_new();

...

ret = bdrv_fill_options(&options, filename, &flags, &local_err);

---

drvname = qdict_get_try_str(*options, "driver");

if (drvname) {

drv = bdrv_find_format(drvname); // iterate bdrv_drivers

...

protocol = drv->bdrv_file_open;

}

if (protocol) {

*flags |= BDRV_O_PROTOCOL;

} else {

*flags &= ~BDRV_O_PROTOCOL;

}

---

...

if ((flags & BDRV_O_PROTOCOL) == 0) {

BlockDriverState *file_bs;

file_bs = bdrv_open_child_bs(filename, options, "file", bs,

&child_of_bds, BDRV_CHILD_IMAGE,

true, &local_err);

---

reference = qdict_get_try_str(options, bdref_key); //bdref_key is "file"

...

bs = bdrv_open_inherit(filename, reference, image_options, 0,

parent, child_class, child_role, errp);

---

...

}

---

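Whether the nested open continues depends on the bdrv_file_open callback: the recursion into the "file" child happens only when the BlockDriver does not provide it. In the code, the drivers that do provide this callback are all low-level storage drivers such as rbd, gluster, file-posix, nbd and iscsi.

The stacking itself is established in the BlockDriver's bdrv_open callback, for example: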

raw_open()

---

bs->file = bdrv_open_child(NULL, options, "file", bs, &child_of_bds,

file_role, false, errp);

---

qcow2_open()

---

bs->file = bdrv_open_child(NULL, options, "file", bs, &child_of_bds,

BDRV_CHILD_IMAGE, false, errp);

---

In the I/O path, the stacking shows up as follows:

blk_aio_preadv() // BlockBackend

-> blk_aio_read_entry()

-> blk_co_do_preadv()

---

bdrv_inc_in_flight(bs);

...

ret = bdrv_co_preadv(blk->root, offset, bytes, qiov, flags);

^^^^^^^^^^

bdrv_dec_in_flight(bs);

---

The raw here is the raw format driver; its code is fairly simple:

raw_co_preadv()

---

ret = raw_adjust_offset(bs, &offset, bytes, false);

...

return bdrv_co_preadv(bs->file, offset, bytes, qiov, flags);

^^^^^^^^

---

The raw here is the raw of file storage (file-posix):

bdrv_file.bdrv_co_preadv()

raw_co_preadv()

-> raw_co_prw()

-> laio_co_submit()
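The way a read travels down the stack, with each layer adjusting the request and forwarding it to its file child, can be sketched in miniature. This is not the QEMU block layer; Node, Driver, node_read() and the two toy drivers are hypothetical and exist only to show the forwarding pattern.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

typedef struct Node Node;

/* Hypothetical miniature of a driver: a name and a single read callback. */
typedef struct {
    const char *name;
    int (*read)(Node *n, uint64_t offset, void *buf, size_t len);
} Driver;

struct Node {
    const Driver *drv;
    Node *file;            /* next layer down, NULL for the bottom node */
    uint64_t data_offset;  /* format layer: where guest data starts in the child */
    const uint8_t *image;  /* protocol layer: the backing bytes */
    size_t image_len;
};

/* Common entry point for every layer; dispatches to the node's driver. */
static int node_read(Node *n, uint64_t offset, void *buf, size_t len)
{
    assert(n && n->drv && n->drv->read);
    return n->drv->read(n, offset, buf, len);
}

/* Format layer: translate the offset, then forward to n->file. */
static int format_read(Node *n, uint64_t offset, void *buf, size_t len)
{
    return node_read(n->file, offset + n->data_offset, buf, len);
}

/* Protocol layer: bottom of the stack, copies from the backing bytes. */
static int protocol_read(Node *n, uint64_t offset, void *buf, size_t len)
{
    if (offset > n->image_len || len > n->image_len - offset) {
        return -1;
    }
    memcpy(buf, n->image + offset, len);
    return 0;
}

static const Driver format_driver = { "format", format_read };
static const Driver protocol_driver = { "protocol", protocol_read };
```

A front-end would hold only the top node; adding another layer means inserting one more Node whose read callback forwards to its own file child.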

The storage front-end is linked to its back-end during option processing, through the drive property of -device:

set_drive()

-> set_drive_helper()

---

blk = blk_by_name(str);

if (!blk) {

bs = bdrv_lookup_bs(NULL, str, NULL);

if (bs) {

...

ctx = iothread ? bdrv_get_aio_context(bs) : qemu_get_aio_context();

blk = blk_new(ctx, 0, BLK_PERM_ALL);

blk_created = true;

ret = blk_insert_bs(blk, bs, errp);

...

}

}

...

*ptr = blk;

---

virtio_blk_device_realize()

---

s->blk = conf->conf.blk;

---

Migration

MG.0 Overview

This section draws on the following links:

Live Migrating QEMU-KVM Virtual Machines | Red Hat Developer https://developers.redhat.com/blog/2015/03/24/live-migrating-qemu-kvm-virtual-machines#vmstate_example__before_

Introduction To Kvm Migration | Linux Technical Blogs https://balamuruhans.github.io/2018/11/13/introduction-to-kvm-migration.html

Purposes of migration:

- Load balancing – If host gets overloaded, guests can be moved to other host which are not utilized much

- Energy saving – guests can be moved from multiple host to single host and power off hosts which are not used

- Maintenance – If host have to be shut-off for maintenance or to upgrade etc.,

Types of migration:

- Live migration – guest remains to in running state in source, guest is booted and remains to be in paused state in destination host while migration is in progress. Once the migration is completed guest starts its execution from destination host without downtime.

- Online migration – guest remains in paused state in source and in destination host, once migration completes it resumes in destination host. It takes comparatively less time to migrate as the there is no memory dirtying during migration as guest remains to be in paused state but there will be downtime equal to the migration time.

- Offline migration – guest can be in running or in shutoff state in source, offline migration would just define a guest in destination in the destination host and it would remain in shutoff state.

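This section focuses mainly on the live migration flow.

Before diving into the code, we must first be clear about which information of a virtual machine needs to be migrated.

A virtual machine holds roughly two kinds of information:

- Configuration information, that is, information that can be initialized from the configuration parameters, such as the number of vcpus, the amount of memory and the disk capacity. This does not need to be migrated; it is enough to send the configuration xml as a whole.

- Runtime information, that is, information produced while the components of the virtual machine are running.

What does the runtime information consist of? In other words, which components of a computer change continuously while it runs?

- Memory, which can be further divided into:

  - Memory used by peripherals; some peripherals need the OS to allocate memory for them, such as a NIC's ring buffers or NVMe's sq/cq;

  - Memory used by the CPU;

- Registers; every cpu has a set of registers that hold the running state of the program;

- Internal runtime state of peripherals; besides accessing the memory the OS gives them, peripherals also hold internal state.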

MG.1 VMState

vmstate is the helper structure for virtual machine migration; it tells qemu what needs to be migrated and how. At present there are two interfaces:

- vmsd, which most devices use:

static const VMStateDescription vmstate_virtio_blk = {

.name = "virtio-blk",

.minimum_version_id = 2,

.version_id = 2,

.fields = (VMStateField[]) {

VMSTATE_VIRTIO_DEVICE,

VMSTATE_END_OF_LIST()

},

};

#define VMSTATE_VIRTIO_DEVICE \

{ \

.name = "virtio", \

.info = &virtio_vmstate_info, \

.flags = VMS_SINGLE, \

}

const VMStateInfo virtio_vmstate_info = {

.name = "virtio",

.get = virtio_device_get,

.put = virtio_device_put,

};

virtio_blk_class_init()

---

dc->vmsd = &vmstate_virtio_blk;

---

device_set_realized()

-> vmstate_register_with_alias_id() // qdev_get_vmsd()

---

se = g_new0(SaveStateEntry, 1);

...

se->opaque = opaque;

se->vmsd = vmsd;

se->alias_id = alias_id;

...

savevm_state_handler_insert(se);

---

vmstate_save()

-> vmstate_save_state()

-> vmstate_save_state_v()

---

while (field->name) {

if ((field->field_exists && field->field_exists(opaque, version_id)) ||

(!field->field_exists && field->version_id <= version_id)) {

void *first_elem = opaque + field->offset;

int i, n_elems = vmstate_n_elems(opaque, field);

int size = vmstate_size(opaque, field);

...

for (i = 0; i < n_elems; i++) {

void *curr_elem = first_elem + size * i;

vmsd_desc_field_start(vmsd, vmdesc_loop, field, i, n_elems);

if (!curr_elem && size) {

...

} else if (field->flags & VMS_STRUCT) {

ret = vmstate_save_state(f, field->vmsd, curr_elem, vmdesc_loop);

} else if (field->flags & VMS_VSTRUCT) {

ret = vmstate_save_state_v(f, field->vmsd, curr_elem, vmdesc_loop, field->struct_version_id);

} else {

ret = field->info->put(f, curr_elem, size, field, vmdesc_loop);

}

...

written_bytes = qemu_ftell_fast(f) - old_offset;

vmsd_desc_field_end(vmsd, vmdesc_loop, field, written_bytes, i);

...

}

}

...

field++;

}

---

- old style, with ram as the typical example; see the code:

ram_mig_init()

-> register_savevm_live("ram", 0, 4, &savevm_ram_handlers, &ram_state);

---

se = g_new0(SaveStateEntry, 1);

...

se->ops = ops;

se->opaque = opaque;

se->vmsd = NULL;

...

savevm_state_handler_insert(se);

---

static SaveVMHandlers savevm_ram_handlers = {

.save_setup = ram_save_setup,

.save_live_iterate = ram_save_iterate,

...

.load_state = ram_load,

.save_cleanup = ram_save_cleanup,

.load_setup = ram_load_setup,

.load_cleanup = ram_load_cleanup,

.resume_prepare = ram_resume_prepare,

};

vmstate_save()

-> vmstate_save_old_style() // se->vmsd is NULL

-> se->ops->save_state(f, se->opaque);

ram uses this kind of vmstate because copying memory during migration takes multiple rounds, which is what save_live_iterate is for.
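The idea behind the vmsd style, a table of fields that a generic loop walks to save and load a device, can be illustrated with a much smaller model. This sketch is an assumption-laden simplification: Field, DevState, state_save() and state_load() are hypothetical, there is no versioning or nesting, and bytes are copied in host byte order rather than in QEMU's wire format.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* One entry of the description table; a NULL name ends the table. */
typedef struct {
    const char *name;
    size_t offset;   /* offset of the field inside the state struct */
    size_t size;     /* number of bytes to transfer */
} Field;

/* Hypothetical device state; scratch is runtime-only and not migrated. */
typedef struct {
    uint32_t status;
    uint8_t  scratch[64];
    uint16_t queue_sel;
} DevState;

static const Field dev_fields[] = {
    { "status",    offsetof(DevState, status),    sizeof(uint32_t) },
    { "queue_sel", offsetof(DevState, queue_sel), sizeof(uint16_t) },
    { NULL, 0, 0 },
};

/* Walk the table and append each field's bytes to out.
 * Returns the number of bytes written, or 0 if out is too small. */
static size_t state_save(const Field *f, const void *opaque,
                         uint8_t *out, size_t cap)
{
    size_t written = 0;

    assert(f && opaque && out);
    for (; f->name; f++) {
        if (f->size > cap - written) {
            return 0;
        }
        memcpy(out + written, (const uint8_t *)opaque + f->offset, f->size);
        written += f->size;
    }
    return written;
}

/* Walk the same table and fill the destination state from the stream.
 * Returns the number of bytes consumed, or 0 if the stream is too short. */
static size_t state_load(const Field *f, void *opaque,
                         const uint8_t *in, size_t len)
{
    size_t consumed = 0;

    assert(f && opaque && in);
    for (; f->name; f++) {
        if (f->size > len - consumed) {
            return 0;
        }
        memcpy((uint8_t *)opaque + f->offset, in + consumed, f->size);
        consumed += f->size;
    }
    return consumed;
}
```

Because the device only declares what to transfer, the same generic loop serves every device, and fields left out of the table are simply not migrated.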

MG.2 Flow Overview

The sender-side code flow is roughly as follows:

Migration starts with the qmp migrate command, whose handler is qmp_migrate():

qmp_migrate()

-> fd_start_outgoing_migration()

-> migration_channel_connect()

-> migrate_fd_connect()

---

qemu_thread_create(&s->thread, "live_migration",

migration_thread, s, QEMU_THREAD_JOINABLE);

---

migration_thread()

---

qemu_savevm_state_setup(s->to_dst_file);

...

while (migration_is_active(s)) {

if (urgent || !qemu_file_rate_limit(s->to_dst_file)) {

MigIterateState iter_state = migration_iteration_run(s);

if (iter_state == MIG_ITERATE_SKIP) {

continue;

} else if (iter_state == MIG_ITERATE_BREAK) {

break;

}

}

...

urgent = migration_rate_limit();

}

---

migration_iteration_run()

---

qemu_savevm_state_pending(s->to_dst_file, s->threshold_size, &pend_pre,

&pend_compat, &pend_post);

pending_size = pend_pre + pend_compat + pend_post;

if (pending_size && pending_size >= s->threshold_size) {

...

qemu_savevm_state_iterate(s->to_dst_file, in_postcopy);

} else {

migration_completion(s);

return MIG_ITERATE_BREAK;

}

---

First, all memory has to be copied:

- qemu_savevm_state_setup() marks all RAM as dirty; see ram_save_setup();

- qemu_savevm_state_iterate() keeps sending dirty RAM; see ram_save_iterate();

- when sending is finished, or a condition forces it to end, migration_completion() is entered

migration_completion()

-> vm_stop_force_state()

-> vm_stop()

-> do_vm_stop()

-> pause_all_vcpus()

---

CPU_FOREACH(cpu) {

if (qemu_cpu_is_self(cpu)) {

qemu_cpu_stop(cpu, true);

} else {

cpu->stop = true;

qemu_cpu_kick(cpu);

}

...

while (!all_vcpus_paused()) {

qemu_cond_wait(&qemu_pause_cond, &qemu_global_mutex);

CPU_FOREACH(cpu) {

qemu_cpu_kick(cpu);

-> cpus_kick_thread()

-> pthread_kill(cpu->thread->thread, SIG_IPI)

}

}

}

---

-> bdrv_drain_all()

-> bdrv_drain_all_begin()

---

/* Now poll the in-flight requests */

AIO_WAIT_WHILE(NULL, bdrv_drain_all_poll());

---

-> bdrv_flush_all()

-> qemu_savevm_state_complete_precopy()

-> cpu_synchronize_all_states()

-> cpu_synchronize_state()

kvm_cpu_synchronize_state()

-> kvm_arch_get_registers() // cpu->vcpu_dirty = true, means need to put cpu registers

---

ret = kvm_get_vcpu_events(cpu);

ret = kvm_get_mp_state(cpu);

ret = kvm_getput_regs(cpu, 0);

ret = kvm_get_xsave(cpu);

ret = kvm_get_xcrs(cpu);

ret = has_sregs2 ? kvm_get_sregs2(cpu) : kvm_get_sregs(cpu);

ret = kvm_get_msrs(cpu);

ret = kvm_get_apic(cpu);

---

-> qemu_savevm_state_complete_precopy_iterable()

---

QTAILQ_FOREACH(se, &savevm_state.handlers, entry) {

if (!se->ops ||

(in_postcopy && se->ops->has_postcopy &&

se->ops->has_postcopy(se->opaque)) ||

!se->ops->save_live_complete_precopy) {

continue;

}

...

ret = se->ops->save_live_complete_precopy(f, se->opaque);

...

}

---

-> qemu_savevm_state_complete_precopy_non_iterable()

---

QTAILQ_FOREACH(se, &savevm_state.handlers, entry) {

if ((!se->ops || !se->ops->save_state) && !se->vmsd) {

continue;

}

...

ret = vmstate_save(f, se, vmdesc);

...

}

---

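The code shows that migration_completion() consists of four main steps:

- Stop all vcpu threads

- Wait for in-flight IO to complete

  - Because the vcpu threads are stopped, no new IO is generated

  - When IO that was already issued completes, an interrupt is raised to the vcpu, and the interrupt information is recorded

- Save the register state, including the interrupt information from the previous step

- Save the runtime state of all devices, in two steps

  - Entries with a save_live_complete_precopy() callback, mainly RAM

  - Entries with a vmsd, that is, the various devices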

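MG.3 Memory Migration

MG.3.1 Send Flow

The sender side of memory migration works roughly as follows:

- The first round copies all memory;

- From the second round on, only dirty pages are copied;

- Once the number of dirty pages has converged, the vcpus are stopped and the last round of copying is done

For the condition that triggers the last round, see the code: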

migration_iteration_run()

---

qemu_savevm_state_pending(s->to_dst_file, s->threshold_size, &pend_pre,

&pend_compat, &pend_post);

pending_size = pend_pre + pend_compat + pend_post;

...

if (pending_size && pending_size >= s->threshold_size) {

...

qemu_savevm_state_iterate(s->to_dst_file, in_postcopy);

} else {

migration_completion(s);

return MIG_ITERATE_BREAK;

}

---

Here pending_size comes from migration_dirty_pages; the code below shows where it is increased and decreased:

migration_iteration_run()

-> qemu_savevm_state_pending()

-> .save_live_pending()

ram_save_pending()

-> migration_bitmap_sync_precopy()

-> migration_bitmap_sync()

-> ramblock_sync_dirty_bitmap()

---

uint64_t new_dirty_pages =

cpu_physical_memory_sync_dirty_bitmap(rb, 0, rb->used_length);

rs->migration_dirty_pages += new_dirty_pages;

---

-> qemu_savevm_state_iterate()

-> ram_save_iterate()

-> ram_find_and_save_block()

-> ram_save_host_page()

-> ram_save_target_page()

-> migration_bitmap_clear_dirty()

---

ret = test_and_clear_bit(page, rb->bmap);

if (ret) {

rs->migration_dirty_pages--;

^^^^^^^^^^^^^^^^^^^^^^^^

}

---

threshold_size comes from this function:

migration_rate_limit()

-> migration_update_counters()

---

current_bytes = migration_total_bytes(s);

transferred = current_bytes - s->iteration_initial_bytes;

time_spent = current_time - s->iteration_start_time;

bandwidth = (double)transferred / time_spent;

s->threshold_size = bandwidth * s->parameters.downtime_limit;

---

It is computed from the estimated sending bandwidth and the user-configured downtime_limit.
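The relationship between bandwidth, downtime_limit and the decision to enter the last round can be worked through with concrete numbers. The sketch below assumes bytes and milliseconds as units; threshold_size() and should_keep_iterating() are hypothetical helpers that mirror the arithmetic and the branch quoted above, not QEMU functions.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes that can still be sent within the allowed downtime:
 * measured bandwidth (bytes per ms) times downtime_limit (ms). */
static uint64_t threshold_size(uint64_t transferred_bytes,
                               uint64_t time_spent_ms,
                               uint64_t downtime_limit_ms)
{
    assert(time_spent_ms > 0);
    double bandwidth = (double)transferred_bytes / (double)time_spent_ms;
    return (uint64_t)(bandwidth * (double)downtime_limit_ms);
}

/* Same shape as the branch in migration_iteration_run():
 * nonzero means another iteration, zero means stop the guest and finish. */
static int should_keep_iterating(uint64_t pending_bytes, uint64_t threshold)
{
    return pending_bytes && pending_bytes >= threshold;
}
```

For example, if 100 MiB were sent in the last 100 ms and downtime_limit is 300 ms, the threshold is 300 MiB: with 1 GiB still pending the loop keeps iterating, and with 100 MiB pending it moves on to completion.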

MG.3.2 Dirty Pages

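The previous subsection outlined the sender side of memory migration, but one key step was left out: how the dirty pages are obtained. There are two key points:

- how dirty pages are tracked

- how the dirty page information is passed to qemu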

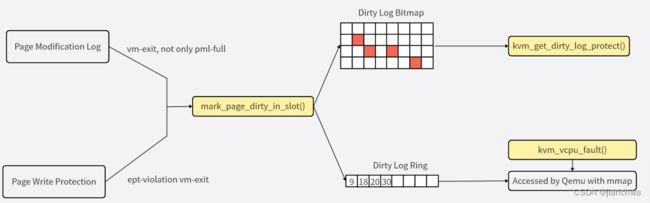

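As the figure above shows, dirty page tracking uses two mechanisms:

- Page Modification Log, a mechanism provided by Intel VMX;

- Page Write Protection, a software mechanism that tracks writes by clearing the writable bit of the EPT spte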

MG.3.2.1 Tracking

For the PML mechanism, see the Intel manual, 28.2.5 Page-Modification Logging; it works as follows:

When accessed and dirty flags for EPT are enabled, software can track writes to guest-physical addresses using a feature called page-modification logging.

Before allowing a guest-physical access, the processor may determine that it first needs to set an accessed or dirty flag for EPT (see Section 28.2.4). When this happens, the processor examines the PML index. If the PML index is not in the range 0–511, there is a page-modification log-full event and a VM exit occurs. In this case, the accessed or dirty flag is not set, and the guest-physical access that triggered the event does not occur.

If instead the PML index is in the range 0–511, the processor proceeds to update accessed or dirty flags for EPT as described in Section 28.2.4. If the processor updated a dirty flag for EPT (changing it from 0 to 1), it then operates as follows:

- The guest-physical address of the access is written to the page-modification log. Specifically, the guest-physical address is written to physical address determined by adding 8 times the PML index to the PML address. Bits 11:0 of the value written are always 0 (the guest-physical address written is thus 4-KByte aligned).

- The PML index is decremented by 1 (this may cause the value to transition from 0 to FFFFH)

The kernel-side code for PML is as follows:

__vmx_handle_exit()

---

/*

* Flush logged GPAs PML buffer, this will make dirty_bitmap more

* updated. Another good is, in kvm_vm_ioctl_get_dirty_log, before

* querying dirty_bitmap, we only need to kick all vcpus out of guest

* mode as if vcpus is in root mode, the PML buffer must has been

* flushed already. Note, PML is never enabled in hardware while

* running L2.

*/

if (enable_pml && !is_guest_mode(vcpu))

vmx_flush_pml_buffer(vcpu);

---

handle_pml_full()

---

/*

* PML buffer already flushed at beginning of VMEXIT. Nothing to do

* here.., and there's no userspace involvement needed for PML.

*/

---

vmx_flush_pml_buffer()

---

pml_idx = vmcs_read16(GUEST_PML_INDEX);

/* Do nothing if PML buffer is empty */

if (pml_idx == (PML_ENTITY_NUM - 1))

return;

/* PML index always points to next available PML buffer entity */

if (pml_idx >= PML_ENTITY_NUM)

pml_idx = 0;

else

pml_idx++;

pml_buf = page_address(vmx->pml_pg);

for (; pml_idx < PML_ENTITY_NUM; pml_idx++) {

u64 gpa;

gpa = pml_buf[pml_idx];

WARN_ON(gpa & (PAGE_SIZE - 1));

kvm_vcpu_mark_page_dirty(vcpu, gpa >> PAGE_SHIFT);

}

/* reset PML index */

vmcs_write16(GUEST_PML_INDEX, PML_ENTITY_NUM - 1);

---

In the end, it reports the dirty pages through kvm_vcpu_mark_page_dirty().
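The index arithmetic of the PML buffer (it starts at 511, counts down, and wraps to 0xFFFF when full) is easy to get wrong, so here is a small user-space model of it. PmlLog, pml_log_write() and pml_flush() are hypothetical; the write side stands in for what the processor does and the flush side follows the shape of vmx_flush_pml_buffer() quoted above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PML_ENTRIES 512

/* Model of the log: 512 slots and a 16-bit index of the next free slot. */
typedef struct {
    uint64_t buf[PML_ENTRIES];
    uint16_t index;
} PmlLog;

/* An empty log has its index at the last slot. */
static void pml_reset(PmlLog *log)
{
    log->index = PML_ENTRIES - 1;
}

/* Model of the processor side: log a 4-KByte aligned GPA and decrement
 * the index. Returns -1 when the index is out of range, which on real
 * hardware is the log-full event that causes a VM exit. */
static int pml_log_write(PmlLog *log, uint64_t gpa)
{
    assert(log != NULL);
    if (log->index >= PML_ENTRIES) {
        return -1;
    }
    log->buf[log->index] = gpa & ~0xfffULL;
    log->index--;                 /* 0 wraps to 0xFFFF in a uint16_t */
    return 0;
}

/* Model of the flush: copy the gfn of every used slot to gfns, reset the
 * index, and return how many entries were flushed. */
static size_t pml_flush(PmlLog *log, uint64_t gfns[PML_ENTRIES])
{
    size_t n = 0;
    uint16_t idx = log->index;

    if (idx == PML_ENTRIES - 1) {
        return 0;                 /* nothing was logged */
    }
    /* index points at the next free slot, so used slots start one above;
     * an out-of-range index means the whole buffer is in use. */
    idx = (idx >= PML_ENTRIES) ? 0 : (uint16_t)(idx + 1);
    for (; idx < PML_ENTRIES; idx++) {
        gfns[n++] = log->buf[idx] >> 12;
    }
    pml_reset(log);
    return n;
}
```

Note that entries come out in reverse order of logging, because the most recent write sits at the lowest used slot.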

With the Page Write Protection mechanism, the code is as follows:

direct_page_fault()

-> fast_page_fault()

-> fast_pf_fix_direct_spte()

---

if (cmpxchg64(sptep, old_spte, new_spte) != old_spte)

return false;

if (is_writable_pte(new_spte) && !is_writable_pte(old_spte)) {

gfn = kvm_mmu_page_get_gfn(sp, sptep - sp->spt);

kvm_vcpu_mark_page_dirty(vcpu, gfn);

}

---

-> mark_page_dirty_in_slot()

Likewise, the result ends up in mark_page_dirty_in_slot().

MG.3.2.2 Output

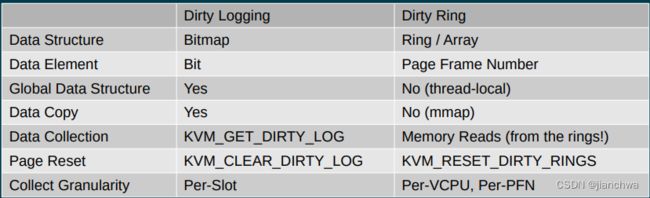

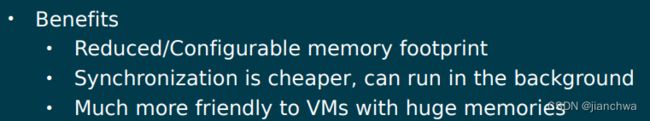

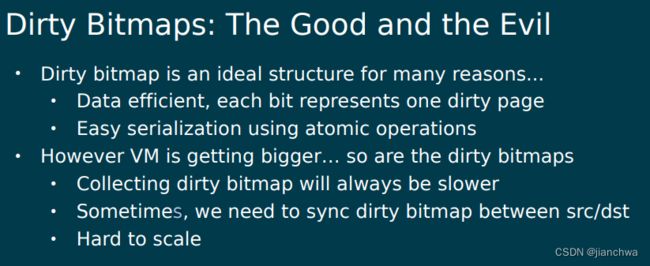

How is the dirty log delivered to qemu in user space? There are currently two ways:

- Dirty Log Bitmap, the traditional way in Qemu

- Dirty Log Ring, the newly added way

For a comparison of the two, see:

KVM Dirty Ring Interface https://static.sched.com/hosted_files/kvmforum2020/97/kvm_dirty_ring_peter.pdf?_gl=1*1rs0obh*_ga*ODM3MDA3NzIwLjE2OTUwMDcyNDc.*_ga_XH5XM35VHB*MTY5NTAwNzI0Ny4xLjAuMTY5NTAwNzI0Ny42MC4wLjA.

See its comparison of the Dirty Log Ring and the Bitmap:

Performance data from the author's commit message: [PATCH RFC v3 00/11] KVM: Dirty ring support (QEMU part) - Peter Xu https://lore.kernel.org/qemu-devel/[email protected]/

I gave it a shot with a 24G guest, 8 vcpus, using 10g NIC as migration channel. When idle or dirty workload small, I don't observe major difference on total migration time. When with higher random dirty workload (800MB/s dirty rate upon 20G memory, worse for kvm dirty ring). Total migration time is (ping pong migrate for 6 times, in seconds):

|-------------------------+---------------|
| dirty ring (4k entries) | dirty logging |
|-------------------------+---------------|
|                      70 |            58 |
|                      78 |            70 |
|                      72 |            48 |
|                      74 |            52 |
|                      83 |            49 |
|                      65 |            54 |
|-------------------------+---------------|

Summary:

dirty ring average: 73s

dirty logging average: 55s

The KVM dirty ring will be slower in above case. The number may show that the dirty logging is still preferred as a default value because small/medium VMs are still major cases, and high dirty workload happens frequently too. And that's what this series did.

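So the dirty ring does not appear to deliver a performance gain.

Looking at the design, the dirty ring differs from the dirty bitmap in two ways:

- The system call is replaced by an mmap'ed shared region. However, KVM_GET_DIRTY_LOG is not invoked at high frequency, so the gain here is unlikely to be noticeable.

- On each sync the dirty ring copies out only one kvm_dirty_gfn (16 bytes) per dirty page, whereas the bitmap copies out the whole bitmap. For a large-memory guest with a low dirty rate the ring is the more efficient of the two, yet even then no measurable gain shows up. With a high dirty rate the ring overflows and its poor storage density becomes the problem: the ring needs 16 bytes per dirty page while the bitmap needs 1 bit, a factor of 128. A full ring also forces the vCPU back to userspace, which costs further performance.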
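To put numbers on the storage argument, here is a minimal C sketch. It assumes 4 KiB guest pages and the 16-byte entry size mentioned above; the function and macro names are illustrative and do not exist in KVM or QEMU. It computes how many bytes one bitmap sync copies for a given guest size, how many bytes the ring carries for a given number of dirty pages, and the dirty page count at which the two are equal.

```c
#include <assert.h>
#include <stdint.h>

/* Assumptions for this sketch: 4 KiB pages, 16-byte ring entries. */
#define TOY_PAGE_SIZE        4096ULL
#define TOY_RING_ENTRY_SIZE  16ULL

/* Bytes copied by one bitmap sync: one bit per guest page,
 * independent of how many pages are actually dirty. */
uint64_t bitmap_sync_bytes(uint64_t guest_mem_bytes)
{
    assert(guest_mem_bytes % TOY_PAGE_SIZE == 0);
    uint64_t pages = guest_mem_bytes / TOY_PAGE_SIZE;
    return (pages + 7) / 8;
}

/* Bytes carried by the ring: one entry per dirty page. */
uint64_t ring_sync_bytes(uint64_t dirty_pages)
{
    return dirty_pages * TOY_RING_ENTRY_SIZE;
}

/* Dirty page count at which the ring carries as much data as the bitmap. */
uint64_t break_even_dirty_pages(uint64_t guest_mem_bytes)
{
    return bitmap_sync_bytes(guest_mem_bytes) / TOY_RING_ENTRY_SIZE;
}
```

Under these assumptions a 24 GiB guest has a 768 KiB bitmap, so the ring moves less data only while fewer than 49152 pages (192 MiB) are dirty per sync. This ignores the ring capacity itself, which limits how many entries can accumulate before the vCPU is forced out.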

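From the above, the dirty ring by itself does not bring a performance gain.

However, the return to userspace on dirty-ring-full offers a hook for a mechanism that speeds up convergence. See the code: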

kernel side:

------------

vcpu_enter_guest()

---
    /* Forbid vmenter if vcpu dirty ring is soft-full */
    if (unlikely(vcpu->kvm->dirty_ring_size &&
                 kvm_dirty_ring_soft_full(&vcpu->dirty_ring))) {
        vcpu->run->exit_reason = KVM_EXIT_DIRTY_RING_FULL;
        r = 0;
        goto out;
    }
---
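The soft-full check can be illustrated with a toy ring in C. This is a simplified model, not the kernel's dirty ring: the struct layout, the ring size of 8, the reserved headroom of 2 and every toy_* name are assumptions made for the example. It shows the idea that the vCPU is refused entry once usage reaches a threshold below the ring's capacity, and that userspace has to reap entries before the vCPU can run again.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_RING_SIZE      8u  /* hypothetical capacity */
#define TOY_RING_RESERVED  2u  /* hypothetical headroom below hard-full */

struct toy_dirty_ring {
    uint32_t dirty_index;  /* producer: next slot the "vCPU" fills */
    uint32_t reset_index;  /* consumer: entries below this were reaped */
    uint64_t gfns[TOY_RING_SIZE];
};

uint32_t toy_ring_used(const struct toy_dirty_ring *r)
{
    return r->dirty_index - r->reset_index;
}

/* Soft-full: usage has reached capacity minus the reserved headroom. */
bool toy_ring_soft_full(const struct toy_dirty_ring *r)
{
    return toy_ring_used(r) >= TOY_RING_SIZE - TOY_RING_RESERVED;
}

/* Record one dirty guest frame number. */
void toy_ring_push(struct toy_dirty_ring *r, uint64_t gfn)
{
    assert(toy_ring_used(r) < TOY_RING_SIZE);
    r->gfns[r->dirty_index % TOY_RING_SIZE] = gfn;
    r->dirty_index++;
}

/* Userspace "reap": consume all entries and return how many there were. */
uint32_t toy_ring_reap(struct toy_dirty_ring *r)
{
    uint32_t n = toy_ring_used(r);
    r->reset_index = r->dirty_index;
    return n;
}

/* Let a "vCPU" dirty `writes` pages; returns the number of forced exits. */
uint32_t toy_run_vcpu(struct toy_dirty_ring *r, uint32_t writes)
{
    uint32_t exits = 0;
    for (uint32_t i = 0; i < writes; i++) {
        if (toy_ring_soft_full(r)) {  /* entry refused: exit to userspace */
            exits++;
            toy_ring_reap(r);
        }
        toy_ring_push(r, i);
    }
    return exits;
}
```

Each forced exit is the point where userspace gets control. In the QEMU code that follows, that is where the ring is reaped and where a per-vCPU sleep can be applied.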

qemu side:

----------

kvm_cpu_exec()

---
    ...
    run_ret = kvm_vcpu_ioctl(cpu, KVM_RUN, 0);
    ...
    case KVM_EXIT_DIRTY_RING_FULL:
        qemu_mutex_lock_iothread();
        if (dirtylimit_in_service()) {
            kvm_dirty_ring_reap(kvm_state, cpu);
        } else {
            kvm_dirty_ring_reap(kvm_state, NULL);
        }
        qemu_mutex_unlock_iothread();
        dirtylimit_vcpu_execute(cpu);
    ...
---

dirtylimit_vcpu_execute()

---
    if (dirtylimit_in_service() &&
        dirtylimit_vcpu_get_state(cpu->cpu_index)->enabled &&
        cpu->throttle_us_per_full) {
        usleep(cpu->throttle_us_per_full);
    }
---

MG.3.2.3 Dirty Log QEMU Code

Enabling the dirty log:

Reference code:

ram_save_setup()

-> ram_init_all()

-> ram_init_bitmaps()

-> memory_global_dirty_log_start() //GLOBAL_DIRTY_MIGRATION

---
    global_dirty_tracking |= flags;
    if (!old_flags) {
        MEMORY_LISTENER_CALL_GLOBAL(log_global_start, Forward);
        memory_region_transaction_begin();
        memory_region_update_pending = true;
        memory_region_transaction_commit();
    }
---

render_memory_region()

---

fr.dirty_log_mask = memory_region_get_dirty_log_mask(mr);

---

address_space_update_topology_pass()

---
    if (frold && frnew && flatrange_equal(frold, frnew)) {
        /* In both and unchanged (except logging may have changed) */
        if (adding) {
            MEMORY_LISTENER_UPDATE_REGION(frnew, as, Forward, region_nop);
            if (frnew->dirty_log_mask & ~frold->dirty_log_mask) {
                MEMORY_LISTENER_UPDATE_REGION(frnew, as, Forward, log_start,
                                              frold->dirty_log_mask,
                                              frnew->dirty_log_mask);
            }
            if (frold->dirty_log_mask & ~frnew->dirty_log_mask) {
                MEMORY_LISTENER_UPDATE_REGION(frnew, as, Reverse, log_stop,
                                              frold->dirty_log_mask,
                                              frnew->dirty_log_mask);
            }
        }
        ++iold;
        ++inew;
    }
---
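The two mask tests in address_space_update_topology_pass() are set differences on bitmasks: bits present only in the new mask trigger log_start, bits present only in the old mask trigger log_stop. A minimal C sketch of that decision follows. The client bits TOY_CLIENT_VGA and TOY_CLIENT_MIGRATION and the toy_* names are hypothetical stand-ins chosen for the example, not QEMU's definitions.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical client bits for this sketch. */
#define TOY_CLIENT_VGA        (1u << 0)
#define TOY_CLIENT_MIGRATION  (1u << 1)
#define TOY_ALL_CLIENTS       (TOY_CLIENT_VGA | TOY_CLIENT_MIGRATION)

enum toy_log_action {
    TOY_LOG_NONE  = 0,
    TOY_LOG_START = 1 << 0,  /* at least one client bit was newly set */
    TOY_LOG_STOP  = 1 << 1,  /* at least one client bit was newly cleared */
};

/* Decide which listener callbacks a mask transition would trigger. */
unsigned toy_log_actions(uint8_t old_mask, uint8_t new_mask)
{
    unsigned actions = TOY_LOG_NONE;

    assert((old_mask & ~TOY_ALL_CLIENTS) == 0);
    assert((new_mask & ~TOY_ALL_CLIENTS) == 0);

    if (new_mask & ~old_mask) {
        actions |= TOY_LOG_START;
    }
    if (old_mask & ~new_mask) {
        actions |= TOY_LOG_STOP;
    }
    return actions;
}
```

When migration starts on a region that is not yet logged, the transition is 0 to TOY_CLIENT_MIGRATION and only the start action fires. A region whose mask is unchanged triggers neither.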

kvm_log_start()

-> kvm_section_update_flags()

-> kvm_slot_update_flags()

---
    mem->flags = kvm_mem_flags(mr);
    ---
        if (memory_region_get_dirty_log_mask(mr) != 0) {
            flags |= KVM_MEM_LOG_DIRTY_PAGES;
        }
    ---
    ..
    kvm_slot_init_dirty_bitmap(mem);
    return kvm_set_user_memory_region(kml, mem, false);
---

On the kernel side, KVM_MEM_LOG_DIRTY_PAGES affects the memslot as follows:

KVM_SET_USER_MEMORY_REGION

kvm_vm_ioctl_set_memory_region()

-> kvm_set_memory_region()

-> __kvm_set_memory_region()

-> kvm_alloc_dirty_bitmap() // KVM_MEM_LOG_DIRTY_PAGES

-> kvm_set_memslot()

-> kvm_arch_commit_memory_region()

-> kvm_mmu_slot_apply_flags()

---
    bool log_dirty_pages = new->flags & KVM_MEM_LOG_DIRTY_PAGES;
    ...
    if (!log_dirty_pages) {
        ...
    } else {
        ...
        /*
         * Size of the CPU's dirty log buffer, i.e. VMX's PML buffer. A zero
         * value indicates CPU dirty logging is unsupported or disabled.
         */
        if (kvm_x86_ops.cpu_dirty_log_size) {
            kvm_mmu_slot_leaf_clear_dirty(kvm, new);
            kvm_mmu_slot_remove_write_access(kvm, new, PG_LEVEL_2M);
        } else {
            kvm_mmu_slot_remove_write_access(kvm, new, PG_LEVEL_4K);
        }
    }
---

Reading the dirty log:

Reference code:

migration_iteration_run()

-> qemu_savevm_state_pending()

-> .save_live_pending()

ram_save_pending()

-> migration_bitmap_sync_precopy()

-> migration_bitmap_sync()

-> memory_global_dirty_log_sync()

-> memory_region_sync_dirty_bitmap()

-> .log_sync()

kvm_log_sync()

-> kvm_physical_sync_dirty_bitmap()

-> kvm_slot_get_dirty_log()

-> ret = kvm_vm_ioctl(s, KVM_GET_DIRTY_LOG, &d)

Kernel-side code:

KVM_GET_DIRTY_LOG

kvm_vm_ioctl_get_dirty_log()

-> kvm_get_dirty_log_protect()

1. Take a snapshot of the bit and clear it if needed.

2. Write protect the corresponding page.

3. Copy the snapshot to the userspace.

4. Upon return caller flushes TLB's if needed.

kvm_get_dirty_log_protect()

---
    n = kvm_dirty_bitmap_bytes(memslot);
    flush = false;
    if (kvm->manual_dirty_log_protect) {
        ...
    } else {
        ...
        KVM_MMU_LOCK(kvm);
        for (i = 0; i < n / sizeof(long); i++) {
            unsigned long mask;
            gfn_t offset;

            if (!dirty_bitmap[i])
                continue;

            flush = true;
            mask = xchg(&dirty_bitmap[i], 0);
            dirty_bitmap_buffer[i] = mask;

            offset = i * BITS_PER_LONG;
            kvm_arch_mmu_enable_log_dirty_pt_masked(kvm, memslot,
                                                    offset, mask);
        }
        KVM_MMU_UNLOCK(kvm);
    }

    if (flush)
        kvm_arch_flush_remote_tlbs_memslot(kvm, memslot);

    if (copy_to_user(log->dirty_bitmap, dirty_bitmap_buffer, n))
        return -EFAULT;
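---

This path does more than read the dirty log. When dirty tracking relies on write protection, it also re-arms write protection on the pages it reports. A write to a write-protected page triggers a VM exit; the handler restores write permission and records the page in the dirty bitmap.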
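The snapshot-and-clear loop can be sketched in plain C as follows. This is a single-threaded model: a plain load followed by a store stands in for the kernel's atomic xchg(), locking, re-protection and TLB flushing are left out, and the function name and parameters are made up for the example. It shows how each 64-bit word is captured, zeroed, and expanded into page offsets.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_BITS_PER_WORD (sizeof(uint64_t) * CHAR_BIT)

/*
 * Copy `nwords` words of `dirty` into `snapshot` and zero `dirty`.
 * The page offset of every set bit is appended to `gfns`.
 * Returns the number of dirty pages found.
 */
size_t toy_snapshot_and_clear(uint64_t *dirty, uint64_t *snapshot,
                              size_t nwords, uint64_t *gfns, size_t max_gfns)
{
    size_t found = 0;

    for (size_t i = 0; i < nwords; i++) {
        uint64_t mask = dirty[i];  /* the kernel does this atomically */
        dirty[i] = 0;
        snapshot[i] = mask;

        /* Walk the set bits; bit b of word i is page i * 64 + b. */
        for (unsigned bit = 0; mask != 0; bit++, mask >>= 1) {
            if (mask & 1) {
                assert(found < max_gfns);
                gfns[found++] = (uint64_t)i * TOY_BITS_PER_WORD + bit;
            }
        }
    }
    return found;
}
```

In the kernel loop the per-word mask is handed to kvm_arch_mmu_enable_log_dirty_pt_masked() together with the word's base offset instead of being expanded into a list. The list here only makes the bit-to-page mapping visible.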

MG.3.2.4 Manual Dirty Log Protect

kvm_get_dirty_log_protect() branches on manual_dirty_log_protect. The patch that introduced it is:

[3/3] kvm: introduce manual dirty log reprotect - Patchwork https://patchwork.kernel.org/project/kvm/patch/[email protected]/

The scenario it describes is:

1. KVM_GET_DIRTY_LOG returns a set of dirty pages and write protects them.

2. The guest modifies the pages, causing them to be marked dirty.

3. Userspace actually copies the pages.

4. KVM_GET_DIRTY_LOG returns those pages as dirty again, even though they were not written to since (3).

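In this scenario the copy in step 3 belongs to round N, while the dirty mark raised in step 2 is consumed in round N + 1. The same page is therefore copied again in round N + 1.

With manual_dirty_log_protect, userspace can explicitly write-protect a dirty page right before copying it, which shrinks the window that causes the double copy to a minimum. The relevant QEMU code is: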

kvm_init()

---
    if (!s->kvm_dirty_ring_size) {
        dirty_log_manual_caps = kvm_check_extension(s, KVM_CAP_MANUAL_DIRTY_LOG_PROTECT2);
        dirty_log_manual_caps &= (KVM_DIRTY_LOG_MANUAL_PROTECT_ENABLE |
                                  KVM_DIRTY_LOG_INITIALLY_SET);
        s->manual_dirty_log_protect = dirty_log_manual_caps;
        if (dirty_log_manual_caps) {
            ret = kvm_vm_enable_cap(s, KVM_CAP_MANUAL_DIRTY_LOG_PROTECT2, 0,
                                    dirty_log_manual_caps);
            if (ret) {
                warn_report("Trying to enable capability %"PRIu64" of "
                            "KVM_CAP_MANUAL_DIRTY_LOG_PROTECT2 but failed. "
                            "Falling back to the legacy mode. ",
                            dirty_log_manual_caps);
                s->manual_dirty_log_protect = 0;
            }
        }
    }
---

The code shown is from QEMU 7.0.0, where this feature is enabled by default.
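The difference between the legacy and the manual mode can be modeled for a single page in C. This is a toy state machine under simplifying assumptions, not KVM code: one page, one guest write that lands between the log read and the copy, and hypothetical toy_* names throughout. It counts how often the page is copied over two rounds.

```c
#include <assert.h>
#include <stdbool.h>

struct toy_page {
    bool kvm_dirty;        /* bit in the kernel's dirty bitmap */
    bool write_protected;  /* next guest write faults and sets kvm_dirty */
};

/* Guest write: only a write-protected page faults and gets marked dirty. */
void toy_guest_write(struct toy_page *p)
{
    assert(p);
    if (p->write_protected) {
        p->write_protected = false;  /* fault handler restores write access */
        p->kvm_dirty = true;
    }
}

/* Model of the log read: legacy mode also clears and re-protects. */
bool toy_get_dirty_log(struct toy_page *p, bool manual)
{
    bool reported = p->kvm_dirty;
    if (!manual && reported) {
        p->kvm_dirty = false;
        p->write_protected = true;
    }
    return reported;
}

/* Model of the explicit clear used in manual mode, right before the copy. */
void toy_clear_dirty_log(struct toy_page *p)
{
    p->kvm_dirty = false;
    p->write_protected = true;
}

/* Two migration rounds; returns how many times the page is copied. */
unsigned toy_two_rounds(bool manual)
{
    struct toy_page p = { .kvm_dirty = false, .write_protected = true };
    unsigned copies = 0;

    toy_guest_write(&p);  /* page is dirty before round N starts */
    for (int round = 0; round < 2; round++) {
        if (!toy_get_dirty_log(&p, manual)) {  /* step 1 */
            continue;
        }
        if (round == 0) {
            toy_guest_write(&p);  /* step 2: write lands before the copy */
        }
        if (manual) {
            toy_clear_dirty_log(&p);  /* protect just before copying */
        }
        copies++;  /* step 3: userspace copies the page */
    }
    return copies;
}
```

In this model the legacy mode copies the page twice and the manual mode copies it once, because the write in step 2 hits a page that is still writable and already dirty. A write that arrives after the explicit clear would still be caught and copied in the next round in both modes.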

ram_save_host_page()

-> migration_bitmap_clear_dirty()

-> migration_clear_memory_region_dirty_bitmap()

-> listener->log_clear()

kvm_log_clear()

-> kvm_physical_log_clear()

---

if (!s->manual_dirty_log_protect) {

/* No need to do explicit clear */

return ret;

}

...

kvm_slots_lock();