Ubuntu 20.04 + CUDA 11.8 + cuDNN 8.6 + TensorRT 8.6

1. Install the GPU driver

Reference: ubuntu20.04 + cuda10.0 + cudnn7.6.4 (我是谁??'s blog, CSDN)

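Check which driver versions are supported. The following command lists the drivers that can be installed for this machine's GPU: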

```
lu@host:/usr/local$ ubuntu-drivers devices
== /sys/devices/pci0000:00/0000:00:01.0/0000:01:00.0 ==
modalias : pci:v000010DEd000021C4sv000010DEsd000021C4bc03sc00i00
vendor : NVIDIA Corporation
model : TU116 [GeForce GTX 1660 SUPER]
driver : nvidia-driver-525-open - distro non-free
driver : nvidia-driver-450-server - distro non-free
driver : nvidia-driver-525 - distro non-free
driver : nvidia-driver-525-server - distro non-free
driver : nvidia-driver-535-open - distro non-free
driver : nvidia-driver-470-server - distro non-free
driver : nvidia-driver-535-server-open - distro non-free recommended
driver : nvidia-driver-535-server - distro non-free
driver : nvidia-driver-535 - distro non-free
driver : nvidia-driver-470 - distro non-free
driver : xserver-xorg-video-nouveau - distro free builtin
lu@host:/usr/local$
```
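If you want to pick out the package that Ubuntu marks as recommended without scanning the list by eye, a small filter over this output is enough. This is a minimal sketch assuming the `driver : <package> - ... recommended` line format shown above; `recommended_driver` is a hypothetical helper name, not part of ubuntu-drivers.

```shell
#!/usr/bin/env bash
# Print the package that `ubuntu-drivers devices` marks as "recommended".
# Reads the command's output on stdin; a matching line looks like:
#   driver : nvidia-driver-535-server-open - distro non-free recommended
# Field 1 is "driver", field 2 is ":", field 3 is the package name.
recommended_driver() {
  awk '$1 == "driver" && /recommended/ { print $3 }'
}

# Usage on a real machine:
#   ubuntu-drivers devices | recommended_driver
```

Note that the recommended package is only a default; below, the author installs nvidia-driver-535 instead.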

Install the driver. I installed nvidia-driver-535:

```shell
sudo apt install nvidia-driver-535
```

Reboot the computer, then check the NVIDIA driver:

```
lu@host:/usr/local$ nvidia-smi
Fri Nov 3 00:26:46 2023
+---------------------------------------------------------------------------------------+
| NVIDIA-SMI 535.113.01 Driver Version: 535.113.01 CUDA Version: 12.2 |
|-----------------------------------------+----------------------+----------------------+
| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |
| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |
| | | MIG M. |
|=========================================+======================+======================|
| 0 NVIDIA GeForce GTX 1660 ... Off | 00000000:01:00.0 Off | N/A |
| 29% 34C P8 N/A / N/A | 348MiB / 6144MiB | 33% Default |
| | | N/A |
+-----------------------------------------+----------------------+----------------------+
+---------------------------------------------------------------------------------------+
| Processes: |
| GPU GI CI PID Type Process name GPU Memory |
| ID ID Usage |
|=======================================================================================|
| 0 N/A N/A 890 G /usr/lib/xorg/Xorg 45MiB |
| 0 N/A N/A 1432 G /usr/lib/xorg/Xorg 129MiB |
| 0 N/A N/A 1667 G /usr/bin/gnome-shell 30MiB |
| 0 N/A N/A 2352 G ...3584735,16244303988823860755,262144 131MiB |
+---------------------------------------------------------------------------------------+
lu@host:/usr/local$
```

The header shows that driver 535 supports CUDA versions up to 12.2. CUDA 11.8, which is installed here, is below that limit, so the requirement is met: the driver only has to support at least the CUDA version you install, and it may be newer.
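To do the same check in a script, the sketch below extracts the `CUDA Version` field from the nvidia-smi header and compares it with the toolkit version you plan to install. It assumes the header format shown above and GNU `sort -V` (available on Ubuntu); `max_cuda_from_smi` and `version_ge` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Extract the "CUDA Version" value from the nvidia-smi header line, i.e. the
# newest CUDA version supported by the installed driver.
max_cuda_from_smi() {
  sed -n 's/.*CUDA Version: *\([0-9][0-9.]*\).*/\1/p'
}

# Succeed (exit status 0) if dotted version $1 >= $2.
# sort -V orders version strings numerically (so 12.10 > 12.2);
# the larger of the two ends up on the last line.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}

# Usage on a real machine:
#   max_cuda=$(nvidia-smi | max_cuda_from_smi)
#   version_ge "$max_cuda" 11.8 && echo "driver supports CUDA 11.8"
```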

2. Install CUDA

Download CUDA from:

CUDA Toolkit Archive | NVIDIA Developer

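For CUDA, the .run (runfile) installer is recommended, because you can install it by following the on-screen prompts. Run:

```shell
sudo sh cuda_11.8.0_520.61.05_linux.run
```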

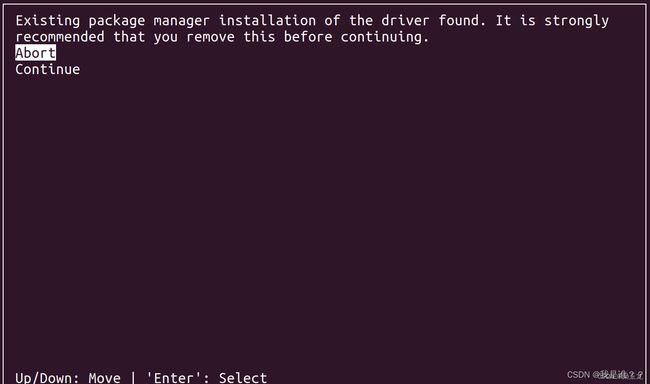

When the prompt appears, choose Continue.

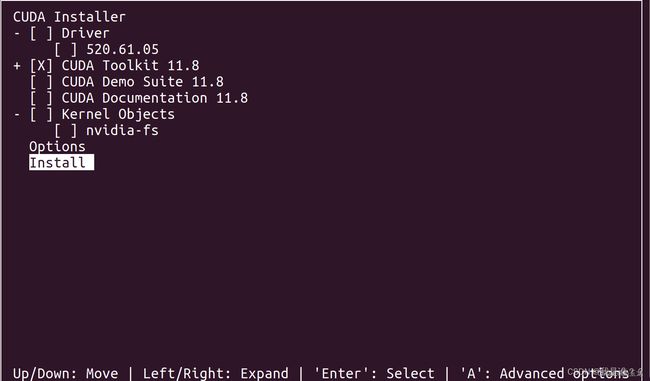

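Move the cursor to Driver, CUDA Demo Suite 11.8 and CUDA Documentation 11.8 in turn and press Space on each to deselect it, as in the screenshot, so that only CUDA Toolkit 11.8 is installed. The driver is deselected because it was already installed in step 1.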

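Set the CUDA environment variables:

Open the .bashrc file in your home directory (mine is in /home/lu/) and add the following lines. CUDA_HOME must point to a single directory, so it is set directly rather than appended to its old value.

```shell
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda-11.8/lib64
export PATH=$PATH:/usr/local/cuda-11.8/bin
export CUDA_HOME=/usr/local/cuda-11.8
```

Then reload it in the terminal:

```shell
source ~/.bashrc
```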
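Each time ~/.bashrc is re-sourced, the lines above append the CUDA paths again, and if LD_LIBRARY_PATH was empty beforehand the result starts with an empty entry (a leading `:`). A minimal sketch of an idempotent alternative, assuming bash (it relies on the indirect expansion `${!var}`); `path_append` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Append a directory to a colon-separated variable (PATH, LD_LIBRARY_PATH, ...)
# only if it is not already present, and without a leading ':' when the
# variable starts out empty.
path_append() {
  local var=$1 dir=$2
  local cur=${!var}                         # current value of the named variable
  case ":$cur:" in
    *":$dir:"*) ;;                          # already present: leave it alone
    *) export "$var=${cur:+$cur:}$dir" ;;   # add ':' only if cur is non-empty
  esac
}

# Example ~/.bashrc usage for CUDA 11.8:
#   path_append PATH /usr/local/cuda-11.8/bin
#   path_append LD_LIBRARY_PATH /usr/local/cuda-11.8/lib64
#   export CUDA_HOME=/usr/local/cuda-11.8
```

Matching against `":$cur:"` with the directory wrapped in colons avoids false hits on prefixes, e.g. /usr/local/cuda inside /usr/local/cuda-11.8.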

Check the installation (nvcc should report release 11.8):

```shell
nvcc --version
```

3. Install cuDNN

Download the installation file:

Download the cuDNN package that matches your CUDA version from cuDNN Archive | NVIDIA Developer.

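Install cuDNN:

The file I downloaded is cudnn-linux-x86_64-8.6.0.163_cuda11-archive.tar.xz.

Extract it. In this .tar.xz package the headers and libraries are in the include/ and lib/ folders under cudnn-linux-x86_64-8.6.0.163_cuda11-archive/ (older cuDNN packages used a cuda/ folder containing include/ and lib64/ instead). Copy them into the CUDA installation; `cp -P` keeps the libcudnn symlinks as symlinks instead of copying each library several times:

```shell
tar -xvf cudnn-linux-x86_64-8.6.0.163_cuda11-archive.tar.xz
cd cudnn-linux-x86_64-8.6.0.163_cuda11-archive
sudo cp include/cudnn*.h /usr/local/cuda/include/
sudo cp -P lib/libcudnn* /usr/local/cuda/lib64/
sudo chmod a+r /usr/local/cuda/include/cudnn*
sudo chmod a+r /usr/local/cuda/lib64/libcudnn*
```

Verify the installation: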

```shell
grep CUDNN_MAJOR -A 2 /usr/local/cuda/include/cudnn_version.h
```

For this package the output should show CUDNN_MAJOR 8, CUDNN_MINOR 6 and CUDNN_PATCHLEVEL 0.
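If you only want the version number instead of the three raw `#define` lines, a small parser over the same header works. This is a sketch assuming the cuDNN 8.x header layout (`#define CUDNN_MAJOR 8` and so on) and the /usr/local/cuda install location used above; `cudnn_version` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the cuDNN version as MAJOR.MINOR.PATCHLEVEL by reading the
# CUDNN_MAJOR / CUDNN_MINOR / CUDNN_PATCHLEVEL macros from cudnn_version.h.
# Optional $1 overrides the header path.
cudnn_version() {
  local header=${1:-/usr/local/cuda/include/cudnn_version.h}
  awk '$1 == "#define" && $2 == "CUDNN_MAJOR"      { maj = $3 }
       $1 == "#define" && $2 == "CUDNN_MINOR"      { min = $3 }
       $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { pat = $3 }
       END { if (maj != "") print maj "." min "." pat }' "$header"
}

# Usage on a real machine:
#   cudnn_version
```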

4. Install TensorRT

Download:

Website: Log in | NVIDIA Developer (downloading requires an NVIDIA Developer account)

Choose TensorRT 8.6 GA. The package I downloaded is TensorRT-8.6.1.6.Linux.x86_64-gnu.cuda-11.8.tar.gz.

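Install:

1. Extract the archive:

   ```shell
   tar -xzvf TensorRT-8.6.1.6.Linux.x86_64-gnu.cuda-11.8.tar.gz
   ```

2. Copy the extracted directory to the install location (I used /usr/local/):

   ```shell
   sudo cp -rf TensorRT-8.6.1.6 /usr/local/
   ```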

Configure TensorRT:

Open the .bashrc file in your home directory (mine is in /home/lu/) and add the following lines:

```shell
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/TensorRT-8.6.1.6/lib
export C_INCLUDE_PATH=$C_INCLUDE_PATH:/usr/local/TensorRT-8.6.1.6/include
export CPLUS_INCLUDE_PATH=$CPLUS_INCLUDE_PATH:/usr/local/TensorRT-8.6.1.6/include
```

Then reload it in the terminal:

```shell
source ~/.bashrc
```

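Note: alternatively, as with cuDNN, you can copy the TensorRT headers and libraries directly into the CUDA directories, in which case these environment variables are not needed.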
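To confirm that the headers under /usr/local/TensorRT-8.6.1.6/include are the expected release, you can read the version macros from NvInferVersion.h. This sketch assumes the NV_TENSORRT_MAJOR / MINOR / PATCH / BUILD macros used by TensorRT 8.x headers; `trt_version` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the TensorRT version (MAJOR.MINOR.PATCH.BUILD) from NvInferVersion.h.
# Only field 3 of each matching #define is read, so trailing comments such as
# "//!< TensorRT major version." are ignored. Optional $1 overrides the path.
trt_version() {
  local header=${1:-/usr/local/TensorRT-8.6.1.6/include/NvInferVersion.h}
  awk '$1 == "#define" && $2 == "NV_TENSORRT_MAJOR" { v[1] = $3 }
       $1 == "#define" && $2 == "NV_TENSORRT_MINOR" { v[2] = $3 }
       $1 == "#define" && $2 == "NV_TENSORRT_PATCH" { v[3] = $3 }
       $1 == "#define" && $2 == "NV_TENSORRT_BUILD" { v[4] = $3 }
       END { if (v[1] != "") print v[1] "." v[2] "." v[3] "." v[4] }' "$header"
}

# Usage on a real machine (should print 8.6.1.6 for this package):
#   trt_version
```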

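Test whether TensorRT was installed successfully:

```shell
# Go to the sampleOnnxMNIST sample directory
cd /usr/local/TensorRT-8.6.1.6/samples/sampleOnnxMNIST
# Build the sample (the directory was copied with sudo and is owned by root,
# so you may need `sudo make` here)
make
# The build produces the sample_onnx_mnist binary in /usr/local/TensorRT-8.6.1.6/bin/
/usr/local/TensorRT-8.6.1.6/bin/sample_onnx_mnist
```

On success, the output looks like this: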

```
lu@host:/usr/local/TensorRT-8.6.1.6/samples/sampleOnnxMNIST$ /usr/local/TensorRT-8.6.1.6/bin/sample_onnx_mnist
&&&& RUNNING TensorRT.sample_onnx_mnist [TensorRT v8601] # /usr/local/TensorRT-8.6.1.6/bin/sample_onnx_mnist
[11/03/2023-00:57:21] [I] Building and running a GPU inference engine for Onnx MNIST
[11/03/2023-00:57:22] [I] [TRT] [MemUsageChange] Init CUDA: CPU +14, GPU +0, now: CPU 19, GPU 448 (MiB)
[11/03/2023-00:57:28] [I] [TRT] [MemUsageChange] Init builder kernel library: CPU +897, GPU +174, now: CPU 992, GPU 586 (MiB)
[11/03/2023-00:57:28] [I] [TRT] ----------------------------------------------------------------
[11/03/2023-00:57:28] [I] [TRT] Input filename: ../../data/mnist/mnist.onnx
[11/03/2023-00:57:28] [I] [TRT] ONNX IR version: 0.0.3
[11/03/2023-00:57:28] [I] [TRT] Opset version: 8
[11/03/2023-00:57:28] [I] [TRT] Producer name: CNTK
[11/03/2023-00:57:28] [I] [TRT] Producer version: 2.5.1
[11/03/2023-00:57:28] [I] [TRT] Domain: ai.cntk
[11/03/2023-00:57:28] [I] [TRT] Model version: 1
[11/03/2023-00:57:28] [I] [TRT] Doc string:
[11/03/2023-00:57:28] [I] [TRT] ----------------------------------------------------------------
[11/03/2023-00:57:28] [W] [TRT] onnx2trt_utils.cpp:374: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.
[11/03/2023-00:57:28] [I] [TRT] BuilderFlag::kTF32 is set but hardware does not support TF32. Disabling TF32.
[11/03/2023-00:57:28] [I] [TRT] Graph optimization time: 0.0039157 seconds.
[11/03/2023-00:57:28] [I] [TRT] BuilderFlag::kTF32 is set but hardware does not support TF32. Disabling TF32.
[11/03/2023-00:57:28] [I] [TRT] Local timing cache in use. Profiling results in this builder pass will not be stored.
[11/03/2023-00:57:29] [I] [TRT] Detected 1 inputs and 1 output network tensors.
[11/03/2023-00:57:29] [I] [TRT] Total Host Persistent Memory: 24224
[11/03/2023-00:57:29] [I] [TRT] Total Device Persistent Memory: 0
[11/03/2023-00:57:29] [I] [TRT] Total Scratch Memory: 0
[11/03/2023-00:57:29] [I] [TRT] [MemUsageStats] Peak memory usage of TRT CPU/GPU memory allocators: CPU 0 MiB, GPU 4 MiB
[11/03/2023-00:57:29] [I] [TRT] [BlockAssignment] Started assigning block shifts. This will take 6 steps to complete.
[11/03/2023-00:57:29] [I] [TRT] [BlockAssignment] Algorithm ShiftNTopDown took 0.017708ms to assign 3 blocks to 6 nodes requiring 32256 bytes.
[11/03/2023-00:57:29] [I] [TRT] Total Activation Memory: 31744
[11/03/2023-00:57:29] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in building engine: CPU +0, GPU +4, now: CPU 0, GPU 4 (MiB)
[11/03/2023-00:57:29] [I] [TRT] Loaded engine size: 0 MiB
[11/03/2023-00:57:29] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in engine deserialization: CPU +0, GPU +0, now: CPU 0, GPU 0 (MiB)
[11/03/2023-00:57:30] [I] [TRT] [MemUsageChange] TensorRT-managed allocation in IExecutionContext creation: CPU +0, GPU +0, now: CPU 0, GPU 0 (MiB)
[11/03/2023-00:57:30] [I] Input:
[11/03/2023-00:57:30] [I] @@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@%.:@@@@@@@@@@@@
@@@@@@@@@@@@@: *@@@@@@@@@@@@
@@@@@@@@@@@@* =@@@@@@@@@@@@@
@@@@@@@@@@@% :@@@@@@@@@@@@@@
@@@@@@@@@@@- *@@@@@@@@@@@@@@
@@@@@@@@@@# .@@@@@@@@@@@@@@@
@@@@@@@@@@: #@@@@@@@@@@@@@@@
@@@@@@@@@+ -@@@@@@@@@@@@@@@@
@@@@@@@@@: %@@@@@@@@@@@@@@@@
@@@@@@@@+ +@@@@@@@@@@@@@@@@@
@@@@@@@@:.%@@@@@@@@@@@@@@@@@
@@@@@@@% -@@@@@@@@@@@@@@@@@@
@@@@@@@% -@@@@@@#..:@@@@@@@@
@@@@@@@% +@@@@@- :@@@@@@@
@@@@@@@% =@@@@%.#@@- +@@@@@@
@@@@@@@@..%@@@*+@@@@ :@@@@@@
@@@@@@@@= -%@@@@@@@@ :@@@@@@
@@@@@@@@@- .*@@@@@@+ +@@@@@@
@@@@@@@@@@+ .:-+-: .@@@@@@@
@@@@@@@@@@@@+: :*@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@@@@@@@@@@@@@@@@@@@@@@@@@@@@
[11/03/2023-00:57:30] [I] Output:
[11/03/2023-00:57:30] [I] Prob 0 0.0000 Class 0:
[11/03/2023-00:57:30] [I] Prob 1 0.0000 Class 1:
[11/03/2023-00:57:30] [I] Prob 2 0.0000 Class 2:
[11/03/2023-00:57:30] [I] Prob 3 0.0000 Class 3:
[11/03/2023-00:57:30] [I] Prob 4 0.0000 Class 4:
[11/03/2023-00:57:30] [I] Prob 5 0.0000 Class 5:
[11/03/2023-00:57:30] [I] Prob 6 1.0000 Class 6: **********
[11/03/2023-00:57:30] [I] Prob 7 0.0000 Class 7:
[11/03/2023-00:57:30] [I] Prob 8 0.0000 Class 8:
[11/03/2023-00:57:30] [I] Prob 9 0.0000 Class 9:
[11/03/2023-00:57:30] [I]
&&&& PASSED TensorRT.sample_onnx_mnist [TensorRT v8601] # /usr/local/TensorRT-8.6.1.6/bin/sample_onnx_mnist
lu@host:/usr/local/TensorRT-8.6.1.6/samples/sampleOnnxMNIST$
```
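The line that matters in this log is `&&&& PASSED TensorRT.sample_onnx_mnist ...`. To turn that into a scriptable yes/no check (for example after reinstalling), a sketch like the following can scan the sample's output. It assumes only that a successful run prints a line starting with `&&&& PASSED`, as above; `trt_sample_passed` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Return success only if a TensorRT sample log (read on stdin) contains a
# line starting with "&&&& PASSED".
trt_sample_passed() {
  grep -q '^&&&& PASSED'
}

# Usage on a real machine, run from the sample directory as above so that
# ../../data/mnist/mnist.onnx resolves:
#   cd /usr/local/TensorRT-8.6.1.6/samples/sampleOnnxMNIST
#   ../../bin/sample_onnx_mnist 2>&1 | trt_sample_passed && echo "TensorRT OK"
```

The pipeline's exit status is that of `grep -q`, so it is zero only when the PASSED line was found.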