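K8s Scheduling: Affinity and Anti-Affinity, Taints and Tolerations (Day 06)

1. Scheduling

1.1 What is scheduling

In K8s, scheduling means matching each Pod to a suitable node so that the kubelet on that node can run it.

The scheduler uses the K8s watch mechanism to discover newly created Pods that have not yet been assigned to a node, and then assigns each of them to a suitable node.

Which component does the scheduling? kube-scheduler.

kube-scheduler is the default scheduler of a K8s cluster. It is a control plane component that runs on the master nodes.

In production, one kube-scheduler usually runs on every master node for high availability. Only one instance is active at a time, chosen through leader election.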

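1.2 Pod scheduling process

Sometimes the default scheduling rules are not what we want. In that case we configure scheduling rules so that Pods are placed where we expect.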

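1.3 Scheduling Pods to specific nodes

1.3.1 Overview

You can constrain a Pod so that it can only run on particular nodes, or so that it prefers particular nodes. There are several ways to do this, and the recommended ones all use labels and selectors to make the choice.

Why schedule Pods to specific nodes? Because the need comes up all the time at work. For example:

(1) Schedule IO-heavy pods to nodes with better disk IO performance.

(2) Schedule memory-heavy pods to nodes with more memory.

(3) Schedule pods that need GPUs to nodes that have GPUs.

(4) If nodes are labeled by project, schedule pods to the nodes of a given project.

1.3.2 Common ways to pin scheduling

(1) nodeSelector

(2) Affinity and anti-affinity

(3) nodeName

1.4 Node labels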

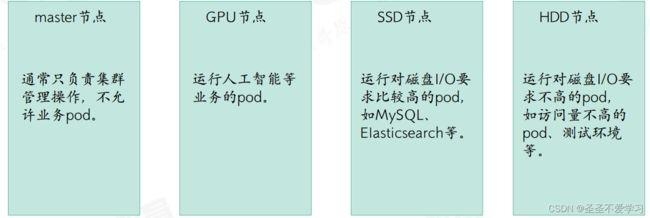

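Like many other K8s objects, nodes carry labels. Some are set by default, and you can add more manually.

Default labels such as kubernetes.io/hostname, kubernetes.io/os and kubernetes.io/arch are populated by the kubelet on each node when the node registers.

1.4.1 Viewing node labels

1.4.1.1 Viewing the labels of one node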

[root@k8s-harbor01 ~]# kubectl describe no k8s-node01

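…… (part of the output omitted)

Labels: beta.kubernetes.io/arch=amd64 # CPU architecture of the node is x86_64, i.e. 64-bit Intel or AMD.

beta.kubernetes.io/os=linux # Operating system of the node is Linux.

kubernetes.io/arch=amd64 # Same meaning: CPU architecture is x86_64.

kubernetes.io/hostname=k8s-node01 # Hostname of the node is k8s-node01.

kubernetes.io/os=linux # Same meaning: operating system is Linux.

kubernetes.io/role=node # Role of the node is an ordinary worker node.

1.4.1.2 Viewing the labels of all nodes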

[root@k8s-harbor01 ~]# kubectl get nodes --show-labels

NAME STATUS ROLES AGE VERSION LABELS

k8s-master01 Ready,SchedulingDisabled master 92d v1.26.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master01,kubernetes.io/os=linux,kubernetes.io/role=master

k8s-master02 Ready,SchedulingDisabled master 92d v1.26.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master02,kubernetes.io/os=linux,kubernetes.io/role=master

k8s-master03 Ready,SchedulingDisabled master 92d v1.26.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master03,kubernetes.io/os=linux,kubernetes.io/role=master

k8s-node01 Ready node 92d v1.26.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node01,kubernetes.io/os=linux,kubernetes.io/role=node

k8s-node02 Ready node 92d v1.26.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node02,kubernetes.io/os=linux,kubernetes.io/role=node

k8s-node03 Ready node 92d v1.26.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node03,kubernetes.io/os=linux,kubernetes.io/role=node

1.4.2 Filtering nodes by label

[root@k8s-harbor01 ~]# kubectl get nodes -l kubernetes.io/role=master

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready,SchedulingDisabled master 92d v1.26.1

k8s-master02 Ready,SchedulingDisabled master 92d v1.26.1

k8s-master03 Ready,SchedulingDisabled master 92d v1.26.1

1.4.3 Adding a node label

[root@k8s-harbor01 ~]# kubectl label nodes k8s-node03 k1=v1

node/k8s-node03 labeled

[root@k8s-harbor01 ~]# kubectl get nodes -l k1=v1

NAME STATUS ROLES AGE VERSION

k8s-node03 Ready node 92d v1.26.1

1.4.4 Removing a node label

[root@k8s-harbor01 ~]# kubectl label nodes k8s-node03 k1-

[root@k8s-harbor01 ~]# kubectl get nodes -l k1

No resources found

1.4.5 Modifying a label

[root@k8s-harbor01 nodeAffinity]# kubectl label no k8s-node03 disktype=ssd

error: 'disktype' already has a value (xxx), and --overwrite is false

[root@k8s-harbor01 nodeAffinity]# kubectl label no k8s-node03 disktype=ssd --overwrite

node/k8s-node03 labeled

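1.5 nodeSelector

1.5.1 About nodeSelector

nodeSelector is the simplest recommended form of node selection: add the field to the Pod spec in the yaml file and list the labels the target node must carry. (Once nodeSelector is set, nodes are filtered by it during the filtering phase of scheduling.)

Key point: nodeSelector only works together with labels on the nodes.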
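A minimal sketch of the field on a bare Pod, with the indentation written out. The Pod name nodeselector-demo and the nginx image are placeholders chosen for illustration, and the labels project=test and disktype=ssd are assumed to exist on at least one node already.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nodeselector-demo        # hypothetical name
spec:
  nodeSelector:                  # every label listed here must be present on the node
    project: test
    disktype: ssd
  containers:
  - name: app
    image: nginx:1.20.2-alpine   # placeholder image
```

If no node carries both labels, the Pod stays Pending until a node is labeled accordingly.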

1.5.2 nodeSelector example: scheduling a pod to a specific node

1.5.2.1 Label the node

# Add 2 labels here

[root@k8s-harbor01 ~]# kubectl label no k8s-node01 project=test

node/k8s-node01 labeled

[root@k8s-harbor01 ~]# kubectl label no k8s-node01 disktype=ssd

node/k8s-node01 labeled

[root@k8s-harbor01 ~]# kubectl get no -l disktype=ssd,project=test

NAME STATUS ROLES AGE VERSION

k8s-node01 Ready node 93d v1.26.1

1.5.2.2 Edit the yaml and set nodeSelector

[root@k8s-harbor01 deployment]# cat case1-nodeSelector.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      containers:
      - name: test-deploy
        image: tsk8s.top/baseimages/debian:7
        imagePullPolicy: Always
        args: ["tail", "-f", "/etc/hosts"]
        resources:
          limits:
            cpu: "3"
            memory: "2Gi"
          requests:
            cpu: "400m"
            memory: "2Gi"
      imagePullSecrets:
      - name: dockerhub-image-pull-key
      nodeSelector: # configured here
        project: test
        # disktype: ssd # with several labels, a node must carry all of them to be eligible

1.5.2.3 Create the pod and check

[root@k8s-harbor01 deployment]# kubectl apply -f case1-nodeSelector.yaml

deployment.apps/test-deployment created

[root@k8s-harbor01 deployment]# kubectl get po -A -owide |grep test- # the pod was scheduled to the selected node

myserver test-deployment-69ffc55679-hpmht 1/1 Running 0 23s 10.200.85.249 k8s-node01 <none> <none>

# Scale up the replicas

[root@k8s-harbor01 deployment]# kubectl scale deploy -n myserver test-deployment --replicas=2

deployment.apps/test-deployment scaled

[root@k8s-harbor01 deployment]# kubectl get po -A -owide |grep test- # even after scaling up, the new pod is still on the node with the selected label

myserver test-deployment-775c56cd5b-cgsmm 1/1 Running 0 7s 10.200.85.252 k8s-node01 <none> <none>

myserver test-deployment-775c56cd5b-hs469 1/1 Running 0 67s 10.200.85.250 k8s-node01 <none> <none>

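1.6 nodeName

1.6.1 About nodeName

nodeName is a more direct form of node selection than affinity or nodeSelector.

nodeName is a field in the Pod spec. If it is set, the scheduler ignores the Pod, and the kubelet on the named node tries to run the Pod on that node.

nodeName takes precedence over nodeSelector and over affinity and anti-affinity rules.

1.6.2 Caveats when using nodeName

(1) If the named node does not exist, the Pod will not run, and in some cases it may be deleted automatically.

(2) If the Pod has been placed on the named node and that node later goes down, the controller will not move it to another node, because every replacement Pod carries the same nodeName.

(3) If the named node cannot provide the resources the Pod needs, the Pod fails, and the failure reason states whether it was short of memory or CPU.

(4) Node names in cloud environments are not always predictable or stable.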
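A minimal sketch of nodeName on a bare Pod. The Pod name and the image are placeholders for illustration; k8s-node03 is one of the node names used in this article.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nodename-demo            # hypothetical name
spec:
  nodeName: k8s-node03           # must exactly match a name listed by `kubectl get nodes`
  containers:
  - name: app
    image: nginx:1.20.2-alpine   # placeholder image
```

Because the scheduler is bypassed, no filtering or scoring happens for this Pod; the kubelet on k8s-node03 simply tries to run it.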

1.6.3 nodeName example

1.6.3.1 Find the node name

[root@k8s-harbor01 deployment]# kubectl get no|awk '{print $1}'

NAME # this is the column to look at

k8s-master01

k8s-master02

k8s-master03

k8s-node01

k8s-node02

k8s-node03

1.6.3.2 Edit the yaml and set nodeName

[root@k8s-harbor01 deployment]# cat deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      nodeName: k8s-node03 # set nodeName (a single value only, not a list)
      containers:
      - name: test-deploy
        image: tsk8s.top/baseimages/debian:7
        imagePullPolicy: Always
        args: ["tail", "-f", "/etc/hosts"]
        resources:
          limits:
            cpu: "3"
            memory: "2Gi"
          requests:
            cpu: "400m"
            memory: "2Gi"
      imagePullSecrets:
      - name: dockerhub-image-pull-key

1.6.3.3 Create the pod and check

[root@k8s-harbor01 deployment]# kubectl apply -f deploy.yaml

deployment.apps/test-deployment created

[root@k8s-harbor01 deployment]# kubectl get po -A -o wide |grep test # the new pod was placed directly on node3

myserver test-deployment-5c489b9c9-6hv8n 1/1 Running 0 67s 10.200.135.156 k8s-node03 <none> <none>

# Scale up

[root@k8s-harbor01 deployment]# kubectl scale deploy -n myserver test-deployment --replicas=4

deployment.apps/test-deployment scaled

# The added pods are all on node3 as well

[root@k8s-harbor01 deployment]# kubectl get po -A -o wide |grep test

myserver test-deployment-6667cc8dd-54kwq 1/1 Running 0 7s 10.200.135.161 k8s-node03 <none> <none>

myserver test-deployment-6667cc8dd-7kw59 1/1 Running 0 7s 10.200.135.160 k8s-node03 <none> <none>

myserver test-deployment-6667cc8dd-g825f 1/1 Running 0 7s 10.200.135.158 k8s-node03 <none> <none>

myserver test-deployment-6667cc8dd-l4wsx 1/1 Running 0 48s 10.200.135.162 k8s-node03 <none> <none>

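2. Affinity and anti-affinity

2.1 Node affinity and anti-affinity

Affinity and anti-affinity also control where pods are scheduled, but they are more expressive than nodeSelector.

If both nodeSelector and nodeAffinity are set, both must be satisfied for a Pod to be scheduled onto a candidate node.

2.1.1 About node affinity

Node affinity (a feature introduced after k8s 1.2) is conceptually similar to nodeSelector: it constrains which nodes a Pod can be scheduled on, based on node labels.

2.1.2 Types of node affinity

(1) requiredDuringSchedulingIgnoredDuringExecution (hard affinity, must be satisfied)

The scheduler can only schedule the Pod if the rule is met. This works like nodeSelector but with a more expressive syntax.

(2) preferredDuringSchedulingIgnoredDuringExecution (soft affinity, preferred but not required)

The scheduler tries to find a node that meets the rule. If no matching node is available, it still schedules the Pod.

Note: in both types, IgnoredDuringExecution means that if node labels change after K8s has scheduled the Pod, the Pod keeps running.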
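A minimal sketch that puts the two types side by side on a bare Pod. The Pod name and image are placeholders for illustration, and the disktype=ssd label is assumed to have been added to some node by hand.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: node-affinity-demo       # hypothetical name
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:   # hard: nodes that fail this are filtered out
        nodeSelectorTerms:
        - matchExpressions:
          - key: kubernetes.io/os
            operator: In
            values:
            - linux
      preferredDuringSchedulingIgnoredDuringExecution:  # soft: matching nodes only score higher
      - weight: 50
        preference:
          matchExpressions:
          - key: disktype
            operator: In
            values:
            - ssd
  containers:
  - name: app
    image: nginx:1.20.2-alpine   # placeholder image
```

With this spec the Pod never lands on a non-Linux node, and among Linux nodes it favors those labeled disktype=ssd without requiring them.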

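2.1.3 Affinity compared with nodeSelector

(1) nodeSelector only supports exact label matches that are ANDed together. Affinity and anti-affinity rules also support the operators In, NotIn, Exists, DoesNotExist, Gt and Lt.

In: the label value is in the given list (node affinity: a matching node is eligible).

NotIn: the label value is not in the given list (node anti-affinity: a matching node is excluded).

Gt: the label value is greater than the given value. The value is written as a string but compared as an integer.

Lt: the label value is less than the given value, with the same integer comparison.

Exists: the label key exists on the node.

DoesNotExist: the label key does not exist on the node.

(2) Rules can be soft or hard. With a soft rule, if the scheduler finds no matching node, it still schedules the pod to a node that does not match.

(3) Affinity can also be defined between pods, for example which pods may or may not be scheduled onto the same node.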
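The operators that the later examples do not exercise can be sketched as the fragment below, which would sit under spec of a Pod. The label keys gpu, cpu-count and maintenance are hypothetical and only serve to illustrate each operator; kubernetes.io/role=master is the label shown on the master nodes earlier.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:          # all expressions inside one term are ANDed
        - key: gpu                 # hypothetical label: key must exist, any value
          operator: Exists
        - key: cpu-count           # hypothetical label holding an integer, e.g. cpu-count=16
          operator: Gt
          values:
          - "8"                    # exactly one value, compared as an integer
        - key: kubernetes.io/role
          operator: NotIn          # exclude nodes whose role is master
          values:
          - master
        - key: maintenance         # hypothetical label: key must be absent
          operator: DoesNotExist
```

Exists and DoesNotExist take no values list, while Gt and Lt take exactly one value.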

2.1.4 Hard affinity example 1: several matchExpressions terms

2.1.4.1 Edit the yaml

[root@k8s-harbor01 nodeAffinity]# cat case3-1.1-nodeAffinity-requiredDuring-matchExpressions.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      affinity: # affinity configuration
        nodeAffinity: # node affinity configuration
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms: # terms are ORed: a node qualifies if any one term matches
            - matchExpressions: # term 1: one key with several values, matching any one value is enough
              - key: disktype # label key on the node
                operator: In # operator field
                values: # matching either ssd or xxx is enough
                - ssd
                - xxx
            - matchExpressions: # term 2: again one key with several values, matching any one value is enough
              - key: project
                operator: In
                values: # it does not matter if neither value matches, as long as term 1 matches: only one term has to match
                - mmm
                - nnn
      containers:
      - name: test-deploy
        image: tsk8s.top/baseimages/debian:7
        imagePullPolicy: Always
        args: ["tail", "-f", "/etc/hosts"]
        resources:
          limits:
            cpu: "1"
            memory: "1Gi"
          requests:
            cpu: "400m"
            memory: "200Mi"
      imagePullSecrets:
      - name: dockerhub-image-pull-key

2.1.4.2 Create the pod and check

[root@k8s-harbor01 nodeAffinity]# kubectl get po -A|grep test

myserver test-deployment-5d8cd9cfcf-zg8pl 0/1 Pending 0 86s

[root@k8s-harbor01 nodeAffinity]# kubectl describe po -n myserver test-deployment-5d8cd9cfcf-zg8pl # the pod was not scheduled. Reason: 3 node(s) didn't match Pod's node affinity/selector, meaning the Pod's affinity or selector matched none of the schedulable nodes.

…… (part of the output omitted)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 3m50s default-scheduler 0/6 nodes are available: 3 node(s) didn't match Pod's node affinity/selector, 3 node(s) were unschedulable. preemption: 0/6 nodes are available: 6 Preemption is not helpful for scheduling..

2.1.4.3 Add a node label and check the pod

[root@k8s-harbor01 nodeAffinity]# kubectl label nodes k8s-node03 disktype=xxx

node/k8s-node03 labeled

[root@k8s-harbor01 nodeAffinity]# kubectl get po -A|grep test # as soon as the label is added, the pod is scheduled

myserver test-deployment-5d8cd9cfcf-zg8pl 1/1 Running 0 11m 10.200.135.155 k8s-node03 <none> <none>

2.1.5 Hard affinity example 2: a single matchExpressions term

2.1.5.1 Edit the yaml

[root@k8s-harbor01 nodeAffinity]# cat case3-1.2-nodeAffinity-requiredDuring-matchExpressions.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms: # with a single term, every expression inside it must match
            - matchExpressions: # expression 1: the key matches if any one of its values matches
              - key: disktype
                operator: In
                values:
                - ssd
                - hdd
              - key: project # expression 2: this key must also match one value; expressions 1 and 2 must both match or scheduling fails
                operator: In
                values:
                - myserver
      containers:
      - name: test-deploy
        image: tsk8s.top/baseimages/debian:7
        imagePullPolicy: Always
        args: ["tail", "-f", "/etc/hosts"]
        resources:
          limits:
            cpu: "1"
            memory: "1Gi"
          requests:
            cpu: "400m"
            memory: "200Mi"
      imagePullSecrets:
      - name: dockerhub-image-pull-key

2.1.5.2 Create the pod and check

[root@k8s-harbor01 nodeAffinity]# kubectl apply -f case3-1.2-nodeAffinity-requiredDuring-matchExpressions.yaml

deployment.apps/test-deployment created

[root@k8s-harbor01 nodeAffinity]# kubectl get po -A |grep test # the pod is Pending

myserver test-deployment-5cb7b9599d-qgbhg 0/1 Pending 0 5s

[root@k8s-harbor01 nodeAffinity]# kubectl describe po -n myserver test-deployment-5cb7b9599d-qgbhg # the affinity rule is not satisfied, so scheduling failed

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 29s default-scheduler 0/6 nodes are available: 3 node(s) didn't match Pod's node affinity/selector, 3 node(s) were unschedulable. preemption: 0/6 nodes are available: 6 Preemption is not helpful for scheduling..

2.1.5.3 Check the node labels

# Only one node has the disktype key, and its value does not match; no node has the project label

[root@k8s-harbor01 nodeAffinity]# kubectl get nodes --show-labels |grep disktype

k8s-node03 Ready node 93d v1.26.1 beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,disktype=xxx,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node03,kubernetes.io/os=linux,kubernetes.io/role=node

[root@k8s-harbor01 nodeAffinity]# kubectl get nodes --show-labels |grep project

2.1.5.4 Label the node and check the pod

[root@k8s-harbor01 nodeAffinity]# kubectl label no k8s-node03 disktype=ssd project=myserver

node/k8s-node03 labeled

[root@k8s-harbor01 nodeAffinity]# kubectl get po -A -o wide |grep test # the pod is scheduled

myserver test-deployment-5cb7b9599d-qgbhg 1/1 Running 0 8m1s 10.200.135.157 k8s-node03 <none> <none>

2.1.6 Soft affinity example

2.1.6.1 Edit the yaml (one condition satisfied)

[root@k8s-harbor01 nodeAffinity]# cat case3-2.1-nodeAffinity-preferredDuring.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 80 # soft affinity weight (1-100): a node's score grows by the weight of every preference it matches
            preference: # node selector term tied to the weight above
              matchExpressions: # preference 1
              - key: project
                operator: In
                values:
                - myserver
          - weight: 60 # lower weight, so matching this preference adds less to the node's score
            preference:
              matchExpressions: # preference 2, scored independently of preference 1 (here only node3 has the label disktype=ssd)
              - key: disktype
                operator: In
                values:
                - ssd
      containers:
      - name: test-deploy
        image: tsk8s.top/baseimages/debian:7
        imagePullPolicy: Always
        args: ["tail", "-f", "/etc/hosts"]
        resources:
          limits:
            cpu: "1"
            memory: "1Gi"
          requests:
            cpu: "400m"
            memory: "200Mi"
      imagePullSecrets:
      - name: dockerhub-image-pull-key

2.1.6.2 Create the pod and check

[root@k8s-harbor01 nodeAffinity]# kubectl apply -f case3-2.1-nodeAffinity-preferredDuring.yaml

deployment.apps/test-deployment configured

[root@k8s-harbor01 nodeAffinity]# kubectl get po -A -owide |grep test

myserver test-deployment-855f77696-9bpnd 1/1 Running 0 13s 10.200.135.159 k8s-node03 <none> <none>

2.1.6.3 Edit the yaml (no condition satisfied)

# Clean up the environment

[root@k8s-harbor01 nodeAffinity]# kubectl delete -f case3-2.1-nodeAffinity-preferredDuring.yaml

deployment.apps "test-deployment" deleted

[root@k8s-harbor01 nodeAffinity]# cat case3-2.1-nodeAffinity-preferredDuring.yaml

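…… (part of the file omitted)
          - weight: 80 # soft affinity weight: a node's score grows by the weight of every preference it matches
            preference: # node selector term tied to the weight above
              matchExpressions: # preference 1
              - key: project
                operator: In
                values:
                - yserver # changed to a label value that does not exist
          - weight: 60
            preference:
              matchExpressions: # preference 2, scored independently of preference 1
              - key: disktype
                operator: In
                values:
                - sd # changed to a label value that does not exist
…… (part of the file omitted)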

2.1.6.4 Create the pod and check

[root@k8s-harbor01 nodeAffinity]# kubectl apply -f case3-2.1-nodeAffinity-preferredDuring.yaml

deployment.apps/test-deployment created

[root@k8s-harbor01 nodeAffinity]# kubectl scale deploy -n myserver test-deployment --replicas=4 # scale up so the effect is easier to see

deployment.apps/test-deployment scaled

[root@k8s-harbor01 nodeAffinity]# kubectl get po -A -owide |grep test # even with no preference satisfied, the pods still get scheduled by the default rules

myserver test-deployment-5c4999897d-2ctwh 1/1 Running 0 60s 10.200.58.252 k8s-node02 <none> <none>

myserver test-deployment-5c4999897d-45l7x 1/1 Running 0 77s 10.200.135.168 k8s-node03 <none> <none>

myserver test-deployment-5c4999897d-d9pwg 1/1 Running 0 60s 10.200.85.253 k8s-node01 <none> <none>

myserver test-deployment-5c4999897d-kcf95 1/1 Running 0 60s 10.200.135.170 k8s-node03 <none> <none>

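2.1.7 Combining hard and soft affinity

Evaluation order: hard first, then soft.

As long as the hard affinity filter leaves at least one node, the pod is scheduled by the default rules even if the soft affinity rule matches none of the remaining nodes.

2.1.7.1 Edit the yaml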

[root@k8s-harbor01 nodeAffinity]# cat case3-2.2-nodeAffinity-requiredDuring-preferredDuring.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution: # hard affinity
            nodeSelectorTerms: # a single term, so it must match
            - matchExpressions: # hard expression 1
              - key: "kubernetes.io/role"
                operator: NotIn # negation: never schedule onto nodes that match
                values:
                - "master" # never schedule onto nodes labeled kubernetes.io/role=master
          preferredDuringSchedulingIgnoredDuringExecution: # soft affinity (ranks the nodes that passed the hard affinity filter; it does not filter any out)
          - weight: 80
            preference:
              matchExpressions:
              - key: project
                operator: In
                values:
                - myserver
      containers:
      - name: test-deploy
        image: tsk8s.top/baseimages/debian:7
        imagePullPolicy: Always
        args: ["tail", "-f", "/etc/hosts"]
        resources:
          limits:
            cpu: "1"
            memory: "1Gi"
          requests:
            cpu: "400m"
            memory: "200Mi"
      imagePullSecrets:
      - name: dockerhub-image-pull-key

2.1.7.2 Create the pod and check

[root@k8s-harbor01 nodeAffinity]# kubectl apply -f case3-2.2-nodeAffinity-requiredDuring-preferredDuring.yaml

deployment.apps/test-deployment created

[root@k8s-harbor01 nodeAffinity]# kubectl get po -A -owide |grep test

myserver test-deployment-75d5d77fd8-lrkm5 1/1 Running 0 11s 10.200.135.172 k8s-node03 <none> <none>

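2.2 Pod affinity and anti-affinity

2.2.1 Overview

(1) Pod affinity and anti-affinity constrain which nodes a new Pod can be scheduled on, based on the labels of Pods already running on those nodes. Note that the match is against the labels of pods already running on the node, not against node labels.

(2) A rule has the form: if node A already runs one or more Pods that satisfy rule B, this Pod should run on node A; with anti-affinity, it should not be scheduled on node A. (This is mainly used when deploying clustered services, to keep their pods off the same node.)

(3) The rule is a LabelSelector with an optional list of associated namespaces. Pods are namespaced while nodes belong to no namespace, so node affinity needs no namespace, but a label selector over Pod labels has to know which namespaces it applies to. If namespaces is omitted, the rule applies to the namespace of the Pod that defines it.

(4) Conceptually, the node in (2) can be any topology domain (a region with a topological structure, that is, a failure domain when something goes down): a single node in the k8s cluster, a rack, a cloud provider availability zone, a cloud provider region, and so on. topologyKey sets whether the granularity of affinity or anti-affinity is the node or, for example, the availability zone, so that the scheduler can identify and choose the right target topology domain.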
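A minimal sketch of zone-level granularity: a Deployment that prefers to spread its own replicas across availability zones. The name spread-demo and the image are placeholders for illustration. The sketch assumes the nodes carry the well-known label topology.kubernetes.io/zone, which cloud providers normally set; on a self-built cluster like the one in this article the label would have to be added by hand.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spread-demo              # hypothetical name
  namespace: myserver
spec:
  replicas: 3
  selector:
    matchLabels:
      app: spread-demo
  template:
    metadata:
      labels:
        app: spread-demo
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:       # matches this Deployment's own pods
                matchLabels:
                  app: spread-demo
              topologyKey: topology.kubernetes.io/zone   # domain = zone instead of single node
      containers:
      - name: app
        image: nginx:1.20.2-alpine # placeholder image
```

Swapping topologyKey to kubernetes.io/hostname turns the same rule into per-node spreading. Because namespaces is omitted, the selector only looks at pods in myserver.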

2.2.2 Operators

The valid operators for Pod affinity and anti-affinity are In, NotIn, Exists and DoesNotExist.

2.2.3 Caveats for pod affinity and anti-affinity

(1) For Pod affinity, topologyKey must not be empty in either requiredDuringSchedulingIgnoredDuringExecution or preferredDuringSchedulingIgnoredDuringExecution (Empty topologyKey is not allowed.).

(2) For Pod anti-affinity, topologyKey must not be empty in either requiredDuringSchedulingIgnoredDuringExecution or preferredDuringSchedulingIgnoredDuringExecution (Empty topologyKey is not allowed.).

(3) For requiredDuringSchedulingIgnoredDuringExecution Pod anti-affinity, the admission controller LimitPodHardAntiAffinityTopology restricts topologyKey to kubernetes.io/hostname. To use other topology keys, modify or disable that admission controller. (In other words, when this admission controller is enabled, hard anti-affinity can only use the topologyKey kubernetes.io/hostname.)

(4) Apart from the cases above, topologyKey can be any legal label key.

2.2.4 Deploy a test service

[root@k8s-harbor01 nodeAffinity]# cat case4-4.1-nginx.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: python-nginx
  name: python-nginx
  namespace: myserver
spec:
  replicas: 1
  selector:
    matchLabels:
      app: python-nginx
  template:
    metadata:
      labels:
        app: python-nginx
        project: python
    spec:
      containers:
      - name: python-nginx
        image: nginx:1.20.2-alpine
        #command: ["/apps/tomcat/bin/run_tomcat.sh"]
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
#        resources:
#          limits:
#            cpu: 2
#            memory: 2Gi
#          requests:
#            cpu: 500m
#            memory: 1Gi
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: python-nginx
  name: python-nginx
  namespace: myserver
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  - name: https
    port: 443
    protocol: TCP
    targetPort: 443
  selector: # one or more labels; a pod must carry all of them to be selected
    app: python-nginx
    project: python

[root@k8s-harbor01 nodeAffinity]# kubectl apply -f case4-4.1-nginx.yaml

deployment.apps/python-nginx created

service/python-nginx created

[root@k8s-harbor01 nodeAffinity]# kubectl get po -A -o wide |grep python

myserver python-nginx-677bcc5599-2ggbz 1/1 Running 0 2m46s 10.200.135.171 k8s-node03 <none> <none>

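2.2.5 Pod soft affinity example

Configure soft affinity so that the new pods land on the same node as nginx.

If nothing matches, default scheduling applies.

2.2.5.1 Edit the yaml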

[root@k8s-harbor01 podAffinity]# cat case4-4.2-podaffinity-preferredDuring.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: tomcat-app
  name: tomcat-app
  namespace: myserver
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat-app
  template:
    metadata:
      labels:
        app: tomcat-app
    spec:
      containers:
      - name: tomcat-app
        image: tomcat:7.0.94-alpine
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
      affinity:
        podAffinity: # Pod affinity
          preferredDuringSchedulingIgnoredDuringExecution: # soft affinity: on a match the pod goes to the same topology domain, otherwise kubernetes schedules it by the default rules
          - weight: 100
            podAffinityTerm: # the affinity rule
              labelSelector: # label selector (its job is to find pods by label)
                matchExpressions: # expression match, not a regex (looks in the myserver namespace for pods labeled project=python)
                - key: project
                  operator: In
                  values:
                  - python
              topologyKey: kubernetes.io/hostname # the pod is placed on the same node as the pods matched above
              namespaces:
              - myserver

2.2.5.2 Create the pod and check

[root@k8s-harbor01 podAffinity]# kubectl apply -f case4-4.2-podaffinity-preferredDuring.yaml

deployment.apps/tomcat-app created

[root@k8s-harbor01 podAffinity]# kubectl get po -A -owide |grep tomcat # all pods are on the same node as the python pod

myserver tomcat-app-d6c54dbb8-5sddh 1/1 Running 0 8s 10.200.135.175 k8s-node03 <none> <none>

myserver tomcat-app-d6c54dbb8-lx9dz 1/1 Running 0 8s 10.200.135.177 k8s-node03 <none> <none>

myserver tomcat-app-d6c54dbb8-twg2z 1/1 Running 0 8s 10.200.135.176 k8s-node03 <none> <none>

[root@k8s-harbor01 podAffinity]# kubectl get po -A -owide |grep pyt

myserver python-nginx-677bcc5599-2ggbz 1/1 Running 0 36m 10.200.135.171 k8s-node03 <none> <none>

2.2.5.3 Change the rule so that it cannot match

[root@k8s-harbor01 podAffinity]# kubectl delete -f case4-4.2-podaffinity-preferredDuring.yaml

deployment.apps "tomcat-app" deleted

[root@k8s-harbor01 podAffinity]# cat case4-4.2-podaffinity-preferredDuring.yaml

…… (part of the file omitted)
                - key: project
                  operator: In
                  values:
                  - pytho # changed here

2.2.5.4 Create the pod and check

[root@k8s-harbor01 podAffinity]# kubectl apply -f case4-4.2-podaffinity-preferredDuring.yaml

deployment.apps/tomcat-app created

[root@k8s-harbor01 podAffinity]# kubectl get po -A -owide |grep tomcat # each node now runs one pod

myserver tomcat-app-699f56c974-ntt47 1/1 Running 0 18s 10.200.135.179 k8s-node03 <none> <none>

myserver tomcat-app-699f56c974-tzmzt 0/1 ContainerCreating 0 18s <none> k8s-node02 <none> <none>

myserver tomcat-app-699f56c974-vcr9f 1/1 Running 0 18s 10.200.85.255 k8s-node01 <none> <none>

2.2.6 Hard affinity example

Use hard affinity to place several pods on one node.

If the rule does not match, the pods cannot be scheduled.

2.2.6.1 Edit the yaml

[root@k8s-harbor01 podAffinity]# cat case4-4.3-podaffinity-requiredDuring.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: tomcat-app
  name: tomcat-app
  namespace: myserver
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat-app
  template:
    metadata:
      labels:
        app: tomcat-app
    spec:
      containers:
      - name: tomcat-app
        image: tomcat:7.0.94-alpine
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution: # hard affinity
          - labelSelector:
              matchExpressions:
              - key: project
                operator: In
                values:
                - python
            topologyKey: "kubernetes.io/hostname"
            namespaces:
            - myserver

2.2.6.2 Create the pod and check

[root@k8s-harbor01 podAffinity]# kubectl apply -f case4-4.3-podaffinity-requiredDuring.yaml

deployment.apps/tomcat-app created

[root@k8s-harbor01 podAffinity]# kubectl get po -A -owide |grep tomcat # the new pods are all on the same node as nginx

myserver tomcat-app-6d74775c7b-jjt57 1/1 Running 0 16s 10.200.135.185 k8s-node03 <none> <none>

myserver tomcat-app-6d74775c7b-nn56d 1/1 Running 0 16s 10.200.135.184 k8s-node03 <none> <none>

myserver tomcat-app-6d74775c7b-xjwlv 1/1 Running 0 16s 10.200.135.180 k8s-node03 <none> <none>

2.2.6.3 Change the rule so that it cannot match

[root@k8s-harbor01 podAffinity]# kubectl delete -f case4-4.3-podaffinity-requiredDuring.yaml

deployment.apps "tomcat-app" deleted

[root@k8s-harbor01 podAffinity]# cat case4-4.3-podaffinity-requiredDuring.yaml

…… (part of the file omitted)
          - labelSelector:
              matchExpressions:
              - key: project
                operator: In
                values:
                - pytho # changed here

2.2.6.4 Create the pod and check

[root@k8s-harbor01 podAffinity]# kubectl apply -f case4-4.3-podaffinity-requiredDuring.yaml

deployment.apps/tomcat-app created

[root@k8s-harbor01 podAffinity]# kubectl get po -A -owide |grep tomcat # the hard affinity rule is not satisfied, so none of the pods can be scheduled

myserver tomcat-app-84fcfbf7dd-kjl8f 0/1 Pending 0 3s <none> <none> <none> <none>

myserver tomcat-app-84fcfbf7dd-zmvp8 0/1 Pending 0 3s <none> <none> <none> <none>

myserver tomcat-app-84fcfbf7dd-zwztm 0/1 Pending 0 3s <none> <none> <none> <none>

2.2.7 Hard anti-affinity example

Commonly used when deploying middleware clusters, to keep pods off the same node or availability zone.

2.2.7.1 Edit the yaml

[root@k8s-harbor01 podAffinity]# cat case4-4.4-podAntiAffinity-requiredDuring.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: tomcat-app
  name: tomcat-app
  namespace: myserver
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat-app
  template:
    metadata:
      labels:
        app: tomcat-app
    spec:
      containers:
      - name: tomcat-app
        image: tomcat:7.0.94-alpine
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
      affinity:
        podAntiAffinity: # keyword for pod anti-affinity
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: project
                operator: In
                values:
                - python
            topologyKey: "kubernetes.io/hostname"
            namespaces:
            - myserver

2.2.7.2 Create the pod and check

[root@k8s-harbor01 podAffinity]# kubectl apply -f case4-4.4-podAntiAffinity-requiredDuring.yaml

deployment.apps/tomcat-app created

[root@k8s-harbor01 podAffinity]# kubectl get po -A -owide |grep tomcat # none of the pods is on the same node as nginx

myserver tomcat-app-8594855dc4-5ckvj 1/1 Running 0 8s 10.200.58.255 k8s-node02 <none> <none>

myserver tomcat-app-8594855dc4-tq4kr 1/1 Running 0 8s 10.200.58.254 k8s-node02 <none> <none>

myserver tomcat-app-8594855dc4-xrm7k 1/1 Running 0 8s 10.200.85.193 k8s-node01 <none> <none>

2.2.8 Soft anti-affinity example

Pods stay apart when they can; if there is no other choice, they may share a node.

2.2.8.1 Edit the yaml

[root@k8s-harbor01 podAffinity]# cat case4-4.5-podAntiAffinity-preferredDuring.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    app: tomcat-app
  name: tomcat-app
  namespace: myserver
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat-app
  template:
    metadata:
      labels:
        app: tomcat-app
    spec:
      containers:
      - name: tomcat-app
        image: tomcat:7.0.94-alpine
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: project
                  operator: In
                  values:
                  - python
              topologyKey: kubernetes.io/hostname
              namespaces:
              - myserver

2.2.8.2 Turn off scheduling on the other nodes

[root@k8s-harbor01 podAffinity]# kubectl cordon k8s-node01 k8s-node02

node/k8s-node01 cordoned

node/k8s-node02 cordoned

[root@k8s-harbor01 podAffinity]# kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready,SchedulingDisabled master 93d v1.26.1

k8s-master02 Ready,SchedulingDisabled master 93d v1.26.1

k8s-master03 Ready,SchedulingDisabled master 93d v1.26.1

k8s-node01 Ready,SchedulingDisabled node 93d v1.26.1

k8s-node02 Ready,SchedulingDisabled node 93d v1.26.1

k8s-node03 Ready node 93d v1.26.1

2.2.8.3 Create the pod and check

[root@k8s-harbor01 podAffinity]# kubectl apply -f case4-4.5-podAntiAffinity-preferredDuring.yaml

deployment.apps/tomcat-app created

[root@k8s-harbor01 podAffinity]# kubectl get po -A -owide|grep tomcat # all pods still landed on node3: as said above, when no other node is schedulable, pods go to the node the anti-affinity rule tries to avoid

myserver tomcat-app-7764799758-59crh 1/1 Running 0 11s 10.200.135.186 k8s-node03 <none> <none>

myserver tomcat-app-7764799758-dvjk6 1/1 Running 0 11s 10.200.135.187 k8s-node03 <none> <none>

myserver tomcat-app-7764799758-q5bfz 1/1 Running 0 11s 10.200.135.181 k8s-node03 <none> <none>

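3. Taints and tolerations

Official docs: https://kubernetes.io/zh-cn/docs/concepts/scheduling-eviction/taint-and-toleration/

3.1 Overview

Taints let a node repel Pods, which is the opposite of affinity: Pods are normally not scheduled onto a tainted node.

Tolerations let a Pod tolerate a node's taints, so that a new pod can be scheduled onto the node even though it is tainted.

3.2 Use cases

3.3 Taints

3.3.1 Taint effects

(1) NoSchedule (hard restriction)

K8s does not schedule Pods onto a node with this taint.

(2) PreferNoSchedule (soft restriction)

K8s tries to avoid scheduling Pods onto a node with this taint.

(3) NoExecute (hard restriction plus eviction)

K8s does not schedule Pods onto a node with this taint, and it also evicts Pods already running on the node that do not tolerate the taint.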
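For orientation, a sketch of how taints appear on the Node object itself, under spec.taints. It is meant to be read, not applied; the second taint k2=v2 is hypothetical and only there to show a second effect.

```yaml
apiVersion: v1
kind: Node
metadata:
  name: k8s-node03
spec:
  taints:
  - key: k1
    value: v1
    effect: NoSchedule          # hard: pods without a matching toleration are not scheduled here
  - key: k2                     # hypothetical second taint
    value: v2
    effect: PreferNoSchedule    # soft: the scheduler tries to avoid this node
```

Each taint is a key, an optional value and one effect. A node can carry several taints, and a Pod has to tolerate every NoSchedule and NoExecute taint on the node to land there.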

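3.3.2 Usage

3.3.2.1 Setting a taint

kubectl taint nodes $node_name custom_key=custom_value:NoSchedule # the effect follows the colon; NoSchedule can also be PreferNoSchedule or NoExecute

kubectl cordon $node_name # this command also turns off scheduling, but it does so by marking the node unschedulable rather than through a taint you define

3.3.2.2 Viewing taints

kubectl describe no $node_name

…… (part of the output omitted)

Taints: xxxxx # has a value once a taint is set

3.3.2.3 Removing a taint

kubectl taint nodes $node_name custom_key:NoSchedule- # note the difference from setting a taint: no value, and a trailing "-". NoSchedule can also be PreferNoSchedule or NoExecute, depending on how the taint was set

If scheduling was turned off with kubectl cordon $node_name, use kubectl uncordon $node_name to mark the node schedulable again.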

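3.4 Tolerations

Tolerations define which node taints a Pod accepts. A Pod that tolerates a taint can be scheduled onto a node carrying it.

A toleration is matched against a taint according to its operator:

If operator is Exists, the toleration needs no value; it matches any taint with the same key (and the same effect, if an effect is given).

If operator is Equal, a value is required, and it must equal the value of the taint that has the same key.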
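The two operators can be sketched as the fragment below, which would sit under spec of a Pod. The taint k1=v1:NoSchedule is the one used in the examples of this section; the key k2 is hypothetical.

```yaml
tolerations:
- key: "k1"               # Equal: key, value and effect must all match the taint
  operator: "Equal"
  value: "v1"
  effect: "NoSchedule"
- key: "k2"               # Exists: any value of the (hypothetical) key k2 is tolerated
  operator: "Exists"
  effect: "NoSchedule"
- operator: "Exists"      # no key and no effect: tolerates every taint
```

The last form is very broad and is normally reserved for system components that have to run on every node.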

3.4.1 Toleration example 1: NoSchedule + tolerations

Commonly used at work.

3.4.1.1 Taint the node

[root@k8s-harbor01 podAffinity]# kubectl taint nodes k8s-node03 k1=v1:NoSchedule

node/k8s-node03 tainted

[root@k8s-harbor01 podAffinity]# kubectl describe no k8s-node03|grep Taints

Taints: k1=v1:NoSchedule

3.4.1.2 Test whether a pod that does not tolerate the taint can be scheduled

# Edit the yaml

[root@k8s-harbor01 deployment]# cat deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
  namespace: myserver
spec:
  replicas: 3
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      containers:
      - name: test-deploy
        image: tsk8s.top/baseimages/debian:7
        imagePullPolicy: Always
        args: ["tail", "-f", "/etc/hosts"]
        resources:
          limits:
            cpu: "3"
            memory: "2Gi"
          requests:
            cpu: "400m"
            memory: "200Mi"
      imagePullSecrets:
      - name: dockerhub-image-pull-key
      #tolerations: # toleration configuration
      #- key: "k1" # key of the taint
      #  operator: "Equal" # matching rule
      #  value: "v1" # value of the taint
      #  effect: "NoSchedule" # effect of the taint to tolerate

# Create the pod

[root@k8s-harbor01 deployment]# kubectl apply -f deploy.yaml

deployment.apps/test-deployment created

# Check whether the pods were scheduled

[root@k8s-harbor01 deployment]# kubectl get po -A -o wide |grep test # none of the pods is on k8s-node03

myserver test-deployment-6c7bc6b7c4-4rv7k 1/1 Running 0 9s 10.200.58.198 k8s-node02 <none> <none>

myserver test-deployment-6c7bc6b7c4-hhvbf 1/1 Running 0 9s 10.200.85.199 k8s-node01 <none> <none>

myserver test-deployment-6c7bc6b7c4-qr5wd 1/1 Running 0 9s 10.200.85.200 k8s-node01 <none> <none>

3.4.1.3 Test whether a pod that tolerates the taint can be scheduled

# Clean up the environment

[root@k8s-harbor01 deployment]# kubectl delete -f deploy.yaml

deployment.apps "test-deployment" deleted

# Edit the yaml

[root@k8s-harbor01 deployment]# cat deploy.yaml

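…… (part of the file omitted: it is the same as above, just uncomment the tolerations section)
      tolerations: # toleration configuration
      - key: "k1" # key of the taint
        operator: "Equal" # matching rule
        value: "v1" # value of the taint
        effect: "NoSchedule" # effect of the taint to tolerate

# Create the pod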

[root@k8s-harbor01 deployment]# kubectl apply -f deploy.yaml

deployment.apps/test-deployment created

# Check where the pods were scheduled

[root@k8s-harbor01 deployment]# kubectl get po -A -o wide |grep test # pods can now be scheduled onto the tainted node3

myserver test-deployment-fb5b7d54d-6nd7w 1/1 Running 0 4s 10.200.85.196 k8s-node01 <none> <none>

myserver test-deployment-fb5b7d54d-p9qww 1/1 Running 0 4s 10.200.135.183 k8s-node03 <none> <none>

myserver test-deployment-fb5b7d54d-xz25v 1/1 Running 0 4s 10.200.58.200 k8s-node02 <none> <none>

3.4.2 Toleration example 2: NoExecute + tolerations

3.4.2.1 Taint the node

# Remove the previous taint

[root@k8s-harbor01 deployment]# kubectl taint node k8s-node03 k1:NoSchedule-

node/k8s-node03 untainted

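# Add a new taint (note: once a NoExecute taint is added, every running pod on the node that does not tolerate it is evicted. DaemonSet pods are not exempt as such; they stay only if their tolerations cover the taint)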

[root@k8s-harbor01 deployment]# kubectl taint no k8s-node03 k1=v1:NoExecute

node/k8s-node03 tainted

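# Check the pods: 2 pods were evicted. calico-node, a DaemonSet pod, was not evicted, which is consistent with its pods carrying tolerations that cover NoExecute taints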
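A sketch of a toleration for the k1=v1:NoExecute taint set above, as a fragment that would sit under spec of a Pod. The 300 second delay is an arbitrary value picked for illustration.

```yaml
tolerations:
- key: "k1"
  operator: "Equal"
  value: "v1"
  effect: "NoExecute"
  tolerationSeconds: 300   # stay on the node for 300s after the taint appears, then get evicted
```

Without tolerationSeconds the Pod tolerates the taint indefinitely and is never evicted because of it. The field is only valid together with the NoExecute effect.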

3.4.2.2 Remove the taint

[root@k8s-harbor01 deployment]# kubectl taint no k8s-node03 k1:NoExecute-

node/k8s-node03 untainted