1. xpath选择器

1.1 xpath介绍

xpath: 是一门在xml/html文档中查找信息的语句.

安装:

pip install lxml

导入:

from lxml import etree

生成对象:

html = etree.HTML('html文档字符串')

html = etree.parse('.html文件路径', etree.HTMLParser())

1.2 选取节点表达式

查询节点:

/ : 从根节点选取 (值是一个对象)

// : 不管任何位置, 直接查找 (值是一个对象)

. : 从当前节点

.. : 从父节点

/@属性名: 获取属性值

/text(): 获取标签内容

1. 查找所有节点

* : 通配符表示所有

from lxml import etree

doc = '''

Example website

'''

html = etree.HTML(doc)

node = html.xpath('//*')

print(node)

"""

[, , ...]

"""

2. 指定节点

//标签名

node = html.xpath('//head')

print(node)

"""

[]

"""

3. 子节点

指定子标签:

1. //父标签名/子标签名

2. 标签名/child::子标签名

node = html.xpath('//div/a')

print(node)

node = html.xpath('//a[1]/child::img/@src')

print(node)

node = html.xpath('//a[1]/child::*')

print(node)

4. 子孙节点

子/孙标签:

1. //祖或父标签名//子或孙标签名

1. 标签名/child::*

node = html.xpath('//body/a')

print(node)

node = html.xpath('//body//a')

print(node)

node = html.xpath('//a[6]/descendant::*')

print(node)

"""

[, ,

, ]

"""

node = html.xpath('//a[6]/descendant::h5/text()')

print(node)

5. 父节点

子节点/.. 找到父节点

node = html.xpath('//body//a[@href="image1.html"]/..')

print(node)

node = html.xpath('//body//a[1]/..')

print(node)

node = html.xpath('//body//a[1]/parent::div')

print(node)

node = html.xpath('//body//a[1]/parent::*')

print(node)

6. 祖先节点

node = html.xpath('//a/ancestor::div')

print(node)

node = html.xpath('//a/ancestor::*')

print(node)

7. 属性匹配

单属性值匹配:

标签名[@属性名='属性值']

多属性值匹配:

标签有class属性有多个值, 直接匹配就不可以了, 需要用contains

标签名[contains@属性名='属性值']

node = html.xpath('//body//a[@href="image1.html"]')

print(node)

node = html.xpath('//body//a[@class="li"]')

print(node)

node = html.xpath('//body//a[contains(@class,"li")]')

print(node)

8. 文本内容获取

标签名/text() 取当前标签的文本内容

node = html.xpath('//body//a[@href="image1.html"]/text()')

print(node)

node = html.xpath('//body//a/text()')

print(node)

"""

['Name: My image 1 ', 'Name: My image 2 ', 'Name: My image 3 ',

'Name: My image 4 ', 'Name: My image 5 ', 'Name: My image 6 ']

"""

9. 属性值获取

标签名/@属性名 取当前标签的属性

标签名/attribute::* 获取所有属性值

node = html.xpath('//body//a/@href')

print(node)

node = html.xpath('//body//a[1]/@href')

print(node)

node = html.xpath('//a[1]/@aa')

print(node)

node = html.xpath('//a[1]/attribute::*')

print(node)

10. 按序选择

正序:

标签名[序号] 序号从1开始

倒序:

标签名[last()] 最后一个

标签名[last()-n] 倒数第n+1个

node = html.xpath('//a[2]/text()')

print(node)

node = html.xpath('//a[2]/@href')

print(node)

node = html.xpath('//a[last()]/@href')

print(node)

node = html.xpath('//a[last()-1]/@href')

print(node)

11. 位置条件

标签名[position()<序号]

node = html.xpath('//a[position()<3]/@href')

print(node)

12. 同级节点查找

/following:当前节点之后所有同级节点(包括同级节点的子孙节点)

following-sibling:当前节点之后同级节点(只找兄弟)

node = html.xpath('//a[3]/following-sibling::*')

print(node)

node = html.xpath('//a[3]/following-sibling::a')

print(node)

node = html.xpath('//a[1]/following-sibling::*[2]')

print(node)

node = html.xpath('//a[1]/following-sibling::*[2]/@href')

print(node)

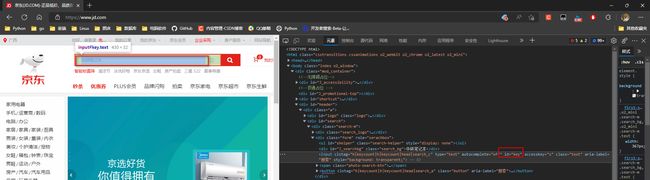

1.3 复制xpath路径

xpath路径: //*[@id="hotsearch-content-wrapper"]/li[1]/a/span[2]

完整xpath路径: /html/body/div[1]/div[1]/div[5]/div/div/div[3]/ul/li[1]/a/span[2]

2. Web应用测试工具

selenium: 是一个用于Web应用程序测试的工具.

使用requests速度快, 可以开启多线程, requests无法直接执行JavaScript代码.

爬虫中使用是为了解决requests无法直接执行JavaScript代码的问题, 但是速度慢.

2.1 安装selenium

安装selenium: pip3 install selenium==3.141.0

最新版本好多方法弃用了...

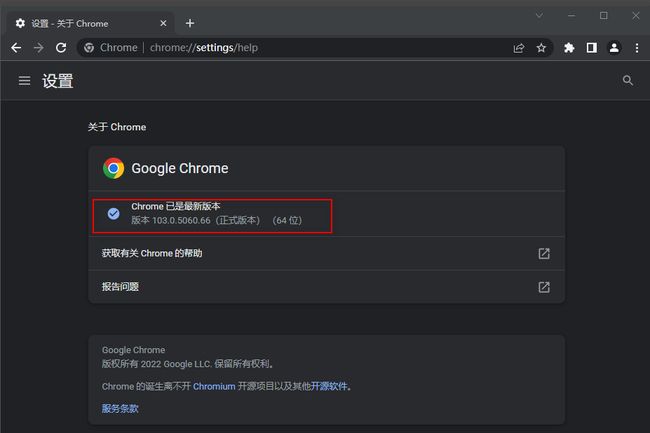

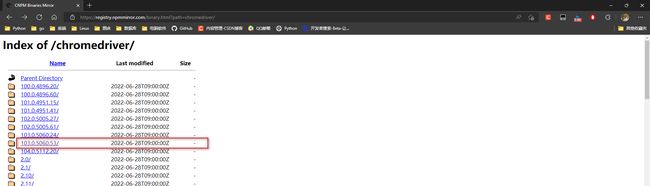

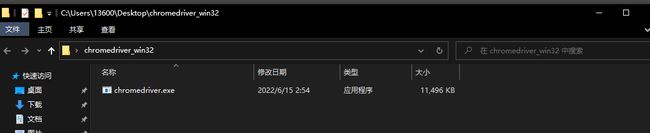

2.2 下载驱动

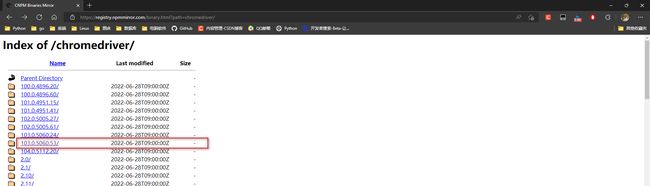

谷歌浏览器驱动网址: http://npm.taobao.org/mirrors/chromedriver/

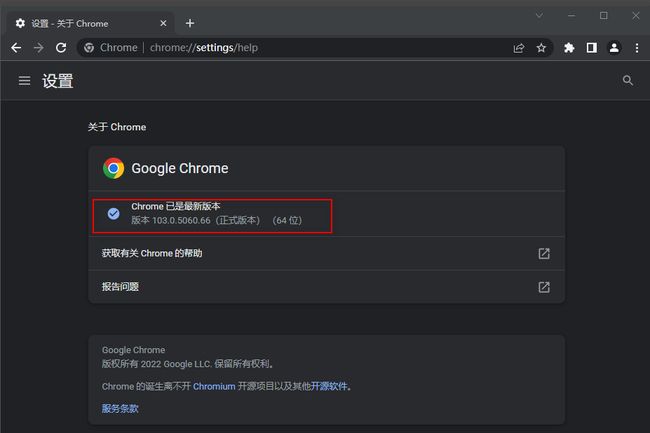

* 1. 找到chrome版本信息

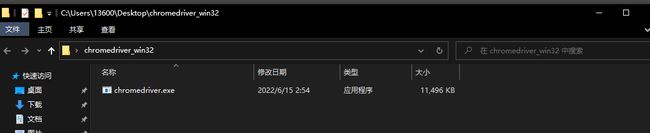

* 2. 下载对应版本的驱动(驱动器版本向下兼容)

* 3. 下载之后解压得到一个可执行文件(不需要安装)

2.3 等待元素加载

网页加载需要一定的时间, 通过代码去查找标签速度非常快, 可能标签还没加载完, 代码就查找了,

如果找不到会报错.

在执行代码查找标签之前先等待标签加载完毕.

两种方式:

1. 显示等待: 每个标签都要写等待逻辑.

2. 隐式等待: 写一个逻辑, 所有标签遵循这个规则.

元素对象.implicitly_wait(等待加载时间) 超时报错

from selenium import webdriver

bro=webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.jd.com/')

bro.implicitly_wait(10)

...

2.4 简单使用

生成对象:

浏览器对象 = webdriver.Chrome(executable_path='驱动器路径')

打开网页:

浏览器对象.get('网络地址')

打印文本信息:

浏览器对象.page_source

关闭当前页面:

浏览器对象.close()

退出浏览器:

浏览器对象.quit()

from selenium import webdriver

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.baidu.com/')

print(bro.page_source)

bro.close()

2.5 查找标签

(最新版本模块很多方法被弃用!)

# ===============find系列方法查找元素===================

不带s:

1. find_element_by_id 通过id查找

2. find_element_by_link_text 通过a标签的文本内容找

3. find_element_by_partial_link_text 通过a标签的文本内容找, 模糊匹配

4. find_element_by_tag_name 标签名

5. find_element_by_class_name 类名

6. find_element_by_name name属性

7. find_element_by_css_selector 通过css选择器

8. find_element_by_xpath 通过xpaht选择器

带s:

强调:find_elements_by_xxx的形式是查找到多个元素, 结果为列表

元素对象.send_keys('搜索关键字') 往控件中写入搜索关键字

元素对象.clear() 清空输入的内容

元素对象.click() 点击按钮

元素对象.get_attribute('属性名') 获取元素的属性

元素对象.text 获取元素的文本信息

css选择器的复制方法:

#app > div > div > div > div.el-col.el-col-24 > section > div >

div.scroll_main.el-scrollbar__wrap.el-scrollbar__wrap--hidden-default > div

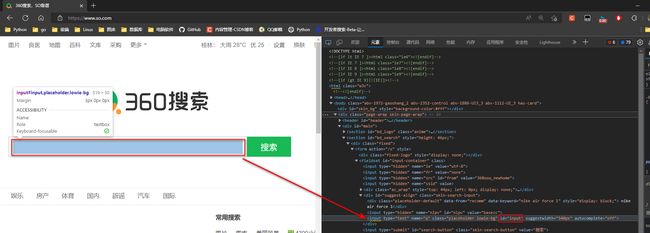

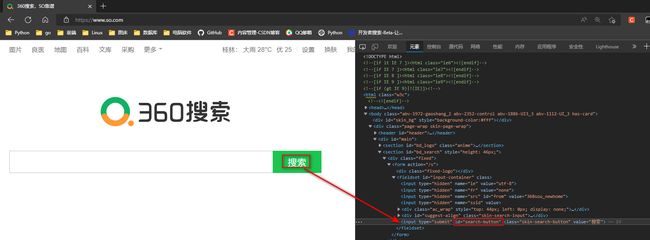

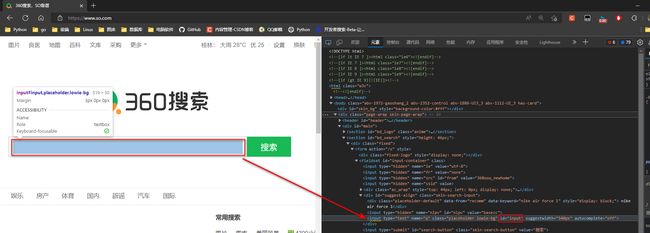

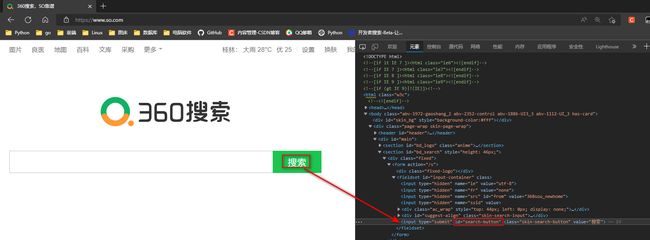

1. 自动搜索案例

* 1. 找到输入框

* 2. 找到搜索按键

from selenium import webdriver

bro = webdriver.Chrome(executable_path='chromedriver.exe')

bro.get('https://www.so.com/')

search = bro.find_element_by_id('input')

search.send_keys("美女")

button = bro.find_element_by_id('search-button')

button.click()

print(bro.page_source)

with open('baidu.html', 'w', encoding='utf-8') as f:

f.write(bro.page_source)

bro.close()

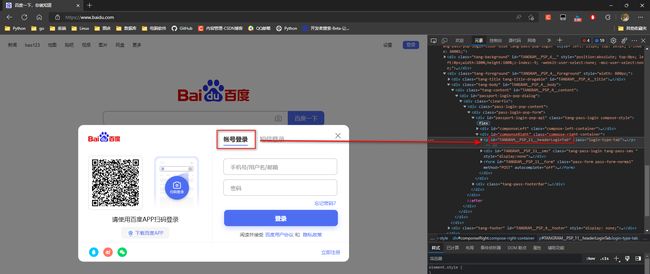

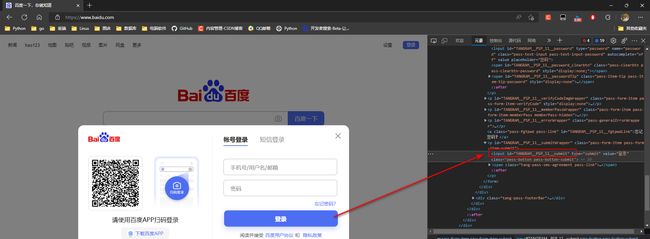

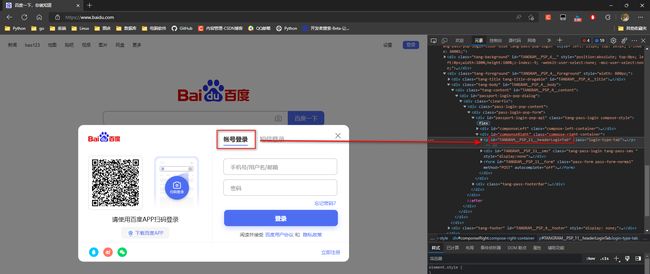

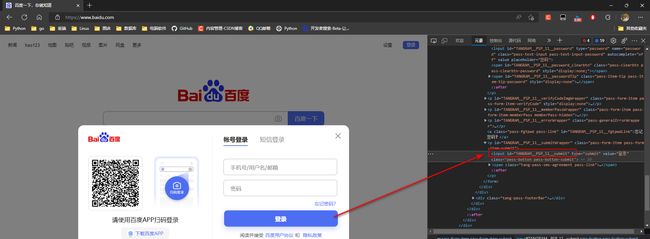

2.自动登入案例

全自定登入越来越难. 必要的时候验证手动验证.

* 1. 找到登入按键

* 2. 找打账户登入

* 3. 找到用户名与密码输入框

* 4. 找打登入按键

* 5. 登入代码(验证码手动)

from selenium import webdriver

import time

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.baidu.com/')

bro.implicitly_wait(10)

user_login = bro.find_element_by_id('s-top-loginbtn')

user_login.click()

account_login = bro.find_element_by_id('TANGRAM__PSP_11__changePwdCodeItem')

account_login.click()

username = bro.find_element_by_id('TANGRAM__PSP_11__userName')

username.send_keys('[email protected]')

password = bro.find_element_by_id('TANGRAM__PSP_11__password')

password.send_keys('1314.qqq')

button = bro.find_element_by_id('TANGRAM__PSP_11__submit')

button.click()

time.sleep(10)

bro.close()

2.6 无界面浏览器

selenium必须是打开浏览窗口, 爬虫不需要展示窗口, 则设置为无界面浏览器.

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

chrome_options = Options()

chrome_options.add_argument('window-size=1920x3000')

chrome_options.add_argument('--disable-gpu')

chrome_options.add_argument('--hide-scrollbars')

chrome_options.add_argument('blinfk-settings=imagesEnabled=alse')

chrome_options.add_argument('--headless')

bro = webdriver.Chrome(executable_path='./chromedriver.exe', options=chrome_options)

bro.get('https://www.baidu.com')

print(bro.page_source)

2.7 pillow扣图

安装pillow模块: pip install pillow

元素对象.save_screenshot('保存路径') 把整个页面保存成图片

元素对象.location 元素的左上角坐标.

元素对象.size 元素占用的大小

元素对象.id 元素id(selenium分配的)

元素对象.tag_name (元素的名称)

from selenium import webdriver

from PIL import Image

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.jd.com/')

img = bro.find_element_by_css_selector('a.logo_tit_lk')

print(img.location)

print(img.size)

print(img.id)

print(img.tag_name)

location = img.location

size = img.size

bro.save_screenshot('./main.png')

img_tu = (

int(location['x']), int(location['y']), int(location['x'] + size['width']), int(location['y'] + size['height']))

img = Image.open('./main.png')

code_img = img.crop(img_tu)

code_img.save('./code.png')

bro.close()

2.8 执行js

浏览器对象.execute_scripr('js代码')

常用操作:

1. 执行js代码

2. 使用页面的变量和函数

1. alert弹框

from selenium import webdriver

import time

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.csdn.net/')

bro.execute_script("alert('hello')")

time.sleep(3)

bro.switch_to.alert.accept()

bro.close()

2. 滑动页面

垂直滑动 window.scrollBy(起始坐标, 结束坐标)

document.body.scrollHeight 获取页面的高度

from selenium import webdriver

import time

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.csdn.net/')

bro.execute_script("window.scrollBy(0, 500)")

time.sleep(2)

bro.execute_script("window.scrollBy(0, document.body.scrollHeight )")

time.sleep(2)

bro.close()

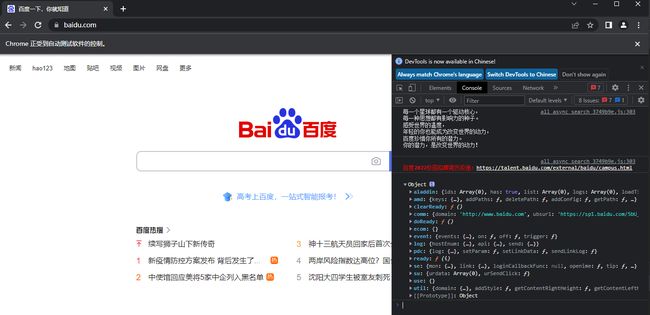

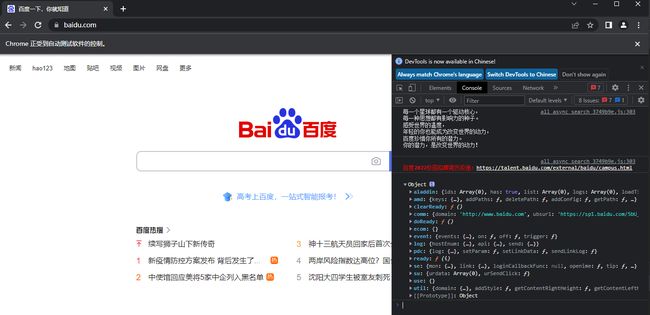

3. 使用变量

使用页面中定义的表量

from selenium import webdriver

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.baidu.com')

bro.execute_script('console.log(bds)')

2.9 选项卡操作

选项卡-->新开网页

新开选项卡: window.open()

获取虽有选项卡: 浏览器对象.window_handles

切换选项卡: 浏览器对象..switch_to.window(选项卡)

from selenium import webdriver

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.baidu.com')

bro.execute_script('window.open()')

all_window = bro.window_handles

bro.switch_to.window(all_window[0])

bro.get('https://www.cnblogs.com/')

bro.switch_to.window(all_window[1])

bro.get('https://www.csdn.net/')

bro.close()

bro.quit()

2.10 页面前进后退

后退: 浏览器对象.back()

前进: 浏览器对象.forward()

from selenium import webdriver

import time

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

bro.get('https://www.baidu.com')

bro.get('https://www.taobao.com')

bro.get('https://www.bilibili.com/')

time.sleep(1)

bro.back()

time.sleep(1)

bro.back()

time.sleep(1)

bro.forward()

time.sleep(1)

bro.forward()

time.sleep(1)

bro.quit()

2.11 异常处理

from selenium import webdriver

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

try:

bro.get('https://www.baidu.com')

bro.find_element_by_id('xxxx')

except Exception as e:

print(f'程序出错: {e}')

bro.quit()

2.12 半自动登入博客园

操作步骤:

1. 先半自动登入到博客园

2. 将cookice保存到本地

3. 携带cookice访问博客园

* 1. 获取登入标签

* 2. 获取账户密码表单按钮

* 3. 获取账户密码登入标签

* 4. 半自动登入获取cookie保存到本地

from selenium import webdriver

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

try:

bro.get('https://www.cnblogs.com/')

bro.implicitly_wait(10)

login_button = bro.find_element_by_link_text('登录')

login_button.click()

password_button = bro.find_element_by_class_name('mat-tab-label-content')

password_button.click()

username_input = bro.find_element_by_id('mat-input-0')

username_input.send_keys('你的账户')

password_input = bro.find_element_by_id('mat-input-1')

password_input.send_keys('你的密码')

button = bro.find_element_by_class_name('mat-button-wrapper')

button.click()

input()

import json

with open('cookie.json', mode='w') as wf:

json.dump(bro.get_cookies(), wf)

except Exception as e:

print(f'程序出错: {e}')

finally:

bro.quit()

* 5. cookie信息

[

{

"domain":"www.cnblogs.com",

"httpOnly":true,

"name":".AspNetCore.Antiforgery.b8-pDmTq1XM",

"path":"/",

"secure":false,

"value":"CfDJ8EOBBtWq0dNFoDS-ZHPSe53mEWd-ZGyjWftpCaA67Ju_PAmyKJdgIMJ6TQroItTC3KugfG1kyhlNdZx9twkZXOMpcOw8OMkPl0v3uajxTJTOJKtxX4sy1Az7e2VbFXcrcgff2l2J1QRpKn75hQ0ldtYSAD"

},

{

"domain":".cnblogs.com",

"expiry":1720163214,

"httpOnly":false,

"name":"_ga",

"path":"/",

"secure":false,

"value":"GA1.2.1702123200.1657091158"

},

{

"domain":".cnblogs.com",

"httpOnly":true,

"name":".CNBlogsCookie",

"path":"/",

"secure":false,

"value":"6AE367FDC883C9497C0965F5DCB0773D77C7B6E04AC8D3483B085CC7C8C7FD46E080F1CFF9028730A81B4781393E850814E684ABDFA2FFD7D01C0CAEB96C28EA39E26578AFF0E5355617C5C2A5191DB59937CC937D"

},

{

"domain":".cnblogs.com",

"expiry":1690787158,

"httpOnly":false,

"name":"__gpi",

"path":"/",

"secure":false,

"value":"UID=00000769a61d749c:T=1657091158:RT=1657091158:S=ALNI_MYpovhSSJNIllzFre6jRxKvDbXmXA"

},

{

"domain":".cnblogs.com",

"expiry":1690787158,

"httpOnly":false,

"name":"__gads",

"path":"/",

"secure":false,

"value":"ID=614211f6e18ef14e:T=1657091158:S=ALNI_MabIFcMdHavfJFTtGjdvxUNM6oWJA"

},

{

"domain":".cnblogs.com",

"expiry":1657177614,

"httpOnly":false,

"name":"_gid",

"path":"/",

"secure":false,

"value":"GA1.2.2027133757.1657091158"

},

{

"domain":".cnblogs.com",

"httpOnly":true,

"name":".Cnblogs.AspNetCore.Cookies",

"path":"/",

"secure":false,

"value":"CfDJ8EOBBtWq0dNFoDS-ZHPSe50ngXRAr8WvkjMPVK2CErFjHpfDDCUA5wWx_coJ_pBtFO5I5aDCaZKVAU3ENMhSzukVskoTcTgvCsxz6lBceGIdIGBAjpxkahkqzDHb323TpdV2X3KMcJUTH-Fzz5NDhvMzDBfrcgOuvhUiu67tqzJeweta9Ld_qo2d7zGzHcCQOhVZJAXsZYB6lERqnNx83pRWzwUbmeoxPjvpQiILl6Amab0RkkoGS4wP5K1l0_gn1XBdke5Vp2fXqVIAJoIpV12PC2AjcrV2ABKdYMts_qAZ6UrhK_Rk7cc8wrvyNPP63dvg8pqsceIPl45GS0XuqfPLg1K9nCydFp426a-2UUix2pIwyxKDsq3IpP6qgq4QlkzfZm9CvgF7Tq-14s4327l9uCJEYmrNyeghaBM-4WhHabI_FD6K-xweqaFVx_n5aN5vhXV9yFRiUOFD71kn5FcwOhnImFKDHnmRUaSSy4AyhawQ8hT6UTQcXcigkDStc4wkz-jXpsDdYYxED3fZAp9IwLQv63U9mEG51LlyM7jQ8"

},

{

"domain":".cnblogs.com",

"httpOnly":false,

"name":"Hm_lpvt_866c9be12d4a814454792b1fd0fed295",

"path":"/",

"secure":false,

"value":"1657091215"

},

{

"domain":".cnblogs.com",

"expiry":1720163214,

"httpOnly":false,

"name":"_ga_3Q0DVSGN10",

"path":"/",

"secure":false,

"value":"GS1.1.1657091159.1.1.1657091214.5"

},

{

"domain":".cnblogs.com",

"expiry":1688627214,

"httpOnly":false,

"name":"Hm_lvt_866c9be12d4a814454792b1fd0fed295",

"path":"/",

"secure":false,

"value":"1657091158"

}

]

* 6. 携带cookie访问博客园

from selenium import webdriver

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

try:

bro.get('https://www.cnblogs.com/')

with open('cookie.json', mode='r', encoding='utf8') as rf:

import json

cookie = json.load(rf)

for item in cookie:

bro.add_cookie(item)

bro.refresh()

import time

time.sleep(3)

except Exception as e:

print(f'程序出错: {e}')

finally:

bro.quit()

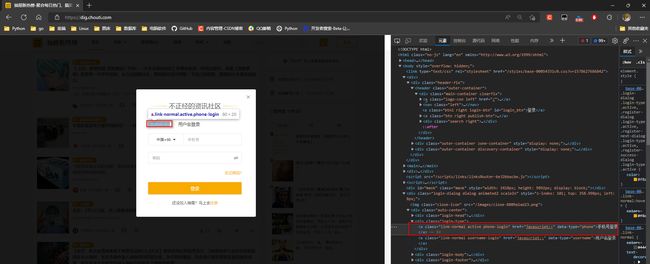

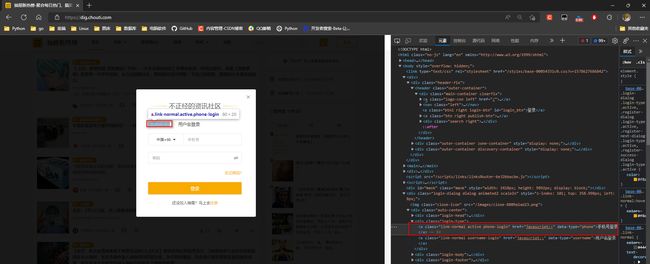

2.13 抽屉新闻自动点赞

操作步骤:

1. 使用selenium半自动登入到抽屉新闻网, 获取到cookie.

2. 使用request携带cookie访问抽屉新闻网, 批量点赞文章.

* 1. 获取登入按键

* 2. 获取到手机号码登入

* 3. 获取到手机号输入输入框, 密码输入框, 登入按钮

* 4. 自动登入代码

from selenium import webdriver

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

try:

bro.get('https://dig.chouti.com/')

bro.implicitly_wait(10)

login_button = bro.find_element_by_id('login_btn')

bro.execute_script('arguments[0].click()', login_button)

phone_login = bro.find_element_by_link_text('手机号登录')

phone_login.click()

phone_input = bro.find_element_by_name('phone')

phone_input.send_keys('账户')

import time

time.sleep(2)

password_input = bro.find_element_by_name('password')

password_input.send_keys('密码')

import time

time.sleep(2)

button_btn = password_input = bro.find_element_by_name('password')

button_btn.click()

input()

with open('chouti_cookie.json', mode='w') as wf:

import json

json.dump(bro.get_cookies(), wf)

except Exception as e:

print(f'程序出错: {e}')

finally:

bro.quit()

* 5. 获取到cookie

[

{

"domain":"dig.chouti.com",

"expiry":2147483647,

"httpOnly":false,

"name":"YD00000980905869%3AWM_NI",

"path":"/",

"secure":false,

"value":"hVmgjDuEehm%2F6tUcue5fPsyZBX4g%2BiVrsda5Y2A%2BAlPh5Q9JvDwOUT75TtZvqQSBAJT0GPwQDrndVOoDV6BF%2FM2FysGrBvko6XTGutmHh5yXaXVnRwGhFNF6B0E2IN3UpudUlU%3D"

},

{

"domain":"dig.chouti.com",

"expiry":1814786532,

"httpOnly":false,

"name":"_9755xjdesxxd_",

"path":"/",

"secure":false,

"value":"32"

},

{

"domain":"dig.chouti.com",

"expiry":1814786532,

"httpOnly":false,

"name":"gdxidpyhxdE",

"path":"/",

"secure":false,

"value":"XUz83Gg7sk4v6wKgX6oScjyLZD7IOVNSrpWzlqERCDA2o1hH1BbZYPc58ewHkCKaUMqoZyHX%2BNtoujYBmJlLnvPj1cg6yK2nlPDJDbWKGZo%2FICGr%5CLmiL2ZHNV9lEvGjnRsa%2B%5CArVE1PLTD7%2FnAD7Jbrm%2BKBV7V0IIg6eR%5CLUeRseNE6x6%3A1657106532758"

},

{

"domain":"dig.chouti.com",

"expiry":2147483647,

"httpOnly":false,

"name":"YD00000980905869%3AWM_TID",

"path":"/",

"secure":false,

"value":"wLMI2HmPOO5FVVQAQBfUBrdKG0JzBhyT"

},

{

"domain":"dig.chouti.com",

"expiry":1688641631,

"httpOnly":false,

"name":"__snaker__id",

"path":"/",

"secure":false,

"value":"akew1JdgZb6KMz9y"

},

{

"domain":".chouti.com",

"httpOnly":false,

"name":"Hm_lpvt_03b2668f8e8699e91d479d62bc7630f1",

"path":"/",

"secure":false,

"value":"1657105631"

},

{

"domain":".chouti.com",

"expiry":1688641631,

"httpOnly":false,

"name":"Hm_lvt_03b2668f8e8699e91d479d62bc7630f1",

"path":"/",

"secure":false,

"value":"1657105631"

},

{

"domain":"dig.chouti.com",

"expiry":2147483647,

"httpOnly":false,

"name":"YD00000980905869%3AWM_NIKE",

"path":"/",

"secure":false,

"value":"9ca17ae2e6ffcda170e2e6eed6e868fbf18dd8c569b4a88ba3c44e979b9facd55eedbc81a8b443ae9fa4d4d22af0fea7c3b92aac8aa388e950b79bab89f025f6ac9b84f86ff7baa094fc528288fc88aa6396aefbbab14be99fa4b1eb3f93e78697c77d8d8b9cd8b860b886ba92d4598sa29f86b5b34d94a99fa5f166a88a8190c75ef69e8ad0d03a86a7bda8f14ab6b5a1d7db6085abbc8ecb64f7a79882db5eae8eb9a8eb4e8e9afaa3b34ff29782d4d87cb7bc9b8dd837e2a3"

},

{

"domain":"dig.chouti.com",

"expiry":1688641630,

"httpOnly":false,

"name":"deviceId",

"path":"/",

"secure":false,

"value":"web.eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJqaWQisaOiI5ZmY2Nzk5Yy04NTdlLTQ3MGYtOGMzYS0yMTY1ZTE3MDBkZGMiLCJleHBpcmUiOiIxNjU5Njk3NjMwNDQzIn0.ZxRk1tBgdJ4EZraM_AnGOxvKNl6Mgv1x7FJqCfklTTg"

}

]

* 注意!!!

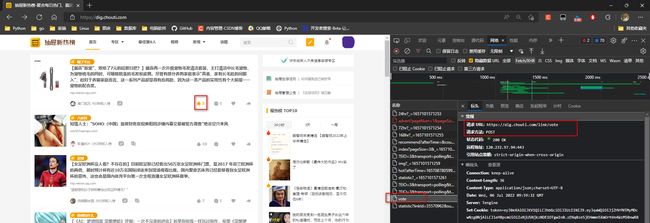

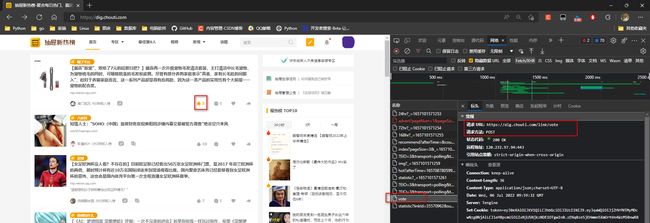

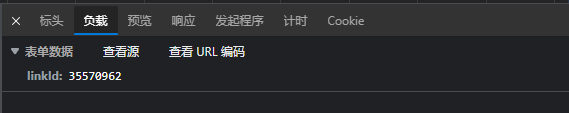

* 6. 点赞请求地址

发送请求地址: https://dig.chouti.com/link/vote

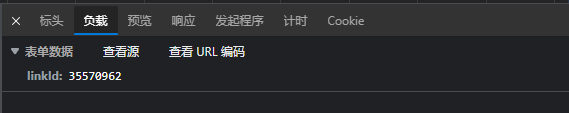

* 7. 点赞携带数据: linkId: 文章id

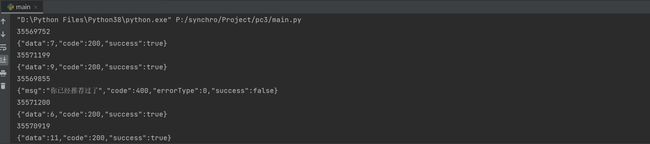

文章id 在div标签 或 div标签a标签的data-id属性中

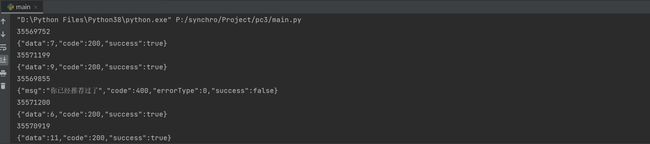

* 8. 点赞成功之后返回响应

* 9. request访问抽屉网 获取所有文章div标签节点

import requests

from bs4 import BeautifulSoup

header = {

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.54 Safari/537.36'

}

res=requests.get('https://dig.chouti.com/',headers=header)

soup=BeautifulSoup(res.text,'lxml')

div_list=soup.find_all(class_='link-item')

for div in div_list:

article_id=div.attrs.get('data-id')

print(article_id)

if article_id:

data = {

'linkId': article_id

}

cookie={}

with open('chouti.json', 'r') as f:

import json

res = json.load(f)

for item in res:

cookie[item['name']] = item['value']

res = requests.post('https://dig.chouti.com/link/vote', headers=header, data=data,cookies=cookie)

print(res.text)

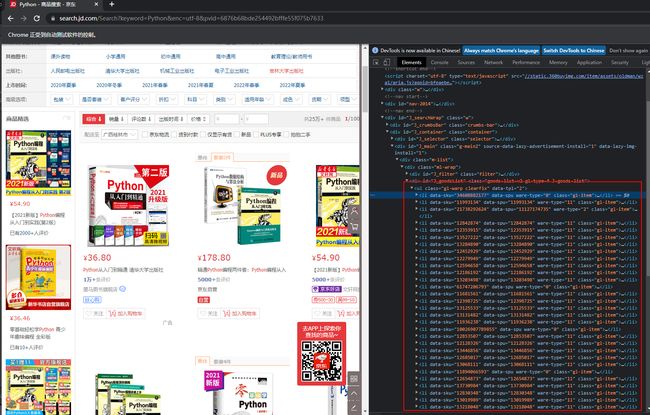

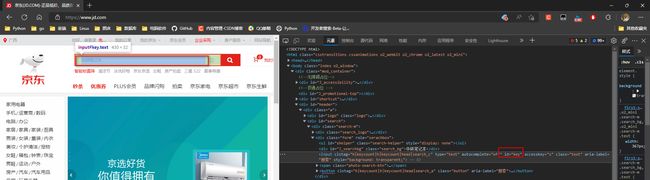

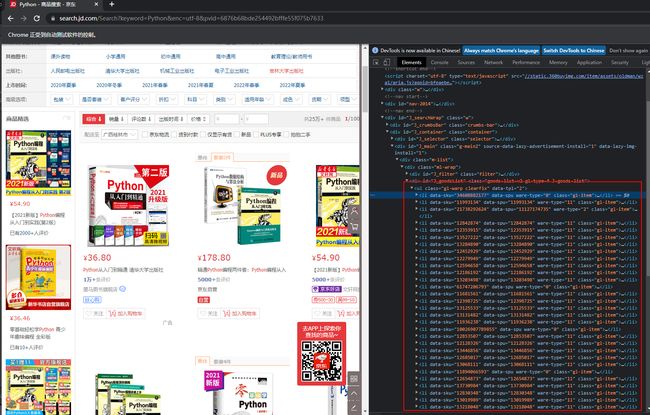

2.14 京东商品信息

* 1. 获取到搜索框

* 2. 获取搜索按键 或者使用 回车按键

* 3. 获取商品信息

* 获取图片就有一直出问题, 图片链接后缀一会是.jpg,

一会是.jpg.avif 只能拿到前四个商品的图片, 之后的都是None.

img的src数值值放在了data-lazy-img属性中

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

bro = webdriver.Chrome(executable_path='./chromedriver.exe')

def get_commodity(bro):

li_list = bro.find_elements_by_class_name('gl-item')

for commodity in li_list:

try:

name = commodity.find_element_by_css_selector('.p-name em').text

price = commodity.find_element_by_css_selector('.p-price i').text

url = commodity.find_element_by_css_selector('.p-img a').get_attribute('href')

commit = commodity.find_element_by_css_selector('.p-commit a').text

img = commodity.find_element_by_css_selector('.p-img img').get_attribute('src')

if not img:

img = 'https:' + commodity.find_element_by_css_selector('.p-img img').get_attribute('data-lazy-img')

img = img.strip('.avif')

print(f"""

商品名称: {name}

商品价格: {price}

商品链接: {url}

商品图片: {img}

商品评论数: {commit}

""")

except Exception:

continue

next_button = bro.find_element_by_class_name('pn-next')

import time

time.sleep(2)

next_button.click()

get_commodity(bro)

try:

bro.get('https://www.jd.com/')

bro.implicitly_wait(10)

search_input = bro.find_element_by_id('key')

search_input.send_keys('Python')

search_input.send_keys(Keys.ENTER)

get_commodity(bro)

except Exception as e:

print(f'出现异常: {e}')

finally:

bro.quit()

结果:

商品名称: 零基础学Python(Python3.9全彩版)(编程入门 项目实践 同步视频)

商品价格: 69.40

商品链接: https://item.jd.com/12353915.html

商品图片: https://img10.360buyimg.com/n1/s200x200_jfs/t1/192162/30/9469/137831/60cff716E24a6f3a9/f11a344fb18010fc.jpg

商品评论数: 20万+

...

2.15 动作链

from selenium import webdriver

import time

from PIL import Image

from chaojiying import Chaojiying_Client

from selenium.webdriver import ActionChains

bro=webdriver.Chrome(executable_path='./chromedriver.exe')

bro.implicitly_wait(10)

try:

bro.get('https://kyfw.12306.cn/otn/resources/login.html')

bro.maximize_window()

button_z=bro.find_element_by_css_selector('.login-hd-account a')

button_z.click()

time.sleep(2)

bro.save_screenshot('./main.png')

img_t=bro.find_element_by_id('J-loginImg')

print(img_t.size)

print(img_t.location)

size=img_t.size

location=img_t.location

img_tu = (int(location['x']), int(location['y']), int(location['x'] + size['width']), int(location['y'] + size['height']))

img = Image.open('./main.png')

fram = img.crop(img_tu)

fram.save('code.png')

chaojiying = Chaojiying_Client('用户名', '密码', '903641')

im = open('code.png', 'rb').read()

res=chaojiying.PostPic(im, 9004)

print(res)

result=res['pic_str']

all_list = []

if '|' in result:

list_1 = result.split('|')

count_1 = len(list_1)

for i in range(count_1):

xy_list = []

x = int(list_1[i].split(',')[0])

y = int(list_1[i].split(',')[1])

xy_list.append(x)

xy_list.append(y)

all_list.append(xy_list)

else:

x = int(result.split(',')[0])

y = int(result.split(',')[1])

xy_list = []

xy_list.append(x)

xy_list.append(y)

all_list.append(xy_list)

print(all_list)

for a in all_list:

x = a[0]

y = a[1]

ActionChains(bro).move_to_element_with_offset(img_t, x, y).click().perform()

time.sleep(1)

username=bro.find_element_by_id('J-userName')

username.send_keys('账户')

password=bro.find_element_by_id('J-password')

password.send_keys('密码')

time.sleep(3)

submit_login=bro.find_element_by_id('J-login')

submit_login.click()

time.sleep(3)

print(bro.get_cookies())

time.sleep(10)

bro.get('https://www.12306.cn/index/')

time.sleep(5)

except Exception as e:

print(e)

finally:

bro.close()