使用 kube-prometheus(release-0.6) 监控 Kubernetes v1.18.20

本文档是使用 kube-prometheus-stack[release-0.6] 来监控 kubernetes1.18.20,具体兼容性可以看这里:https://github.com/prometheus-operator/kube-prometheus/tree/release-0.6#kubernetes-compatibility-matrix

1 概述

1.1 在 k8s 中部署 Prometheus 监控的方法

通常在 k8s 中部署 prometheus 监控可以采用的方法有下面三种:

- 通过自定义yaml手动部署

- 通过 operator 部署

- 通过 helm chart 部署

1.2 什么是 Prometheus Operator

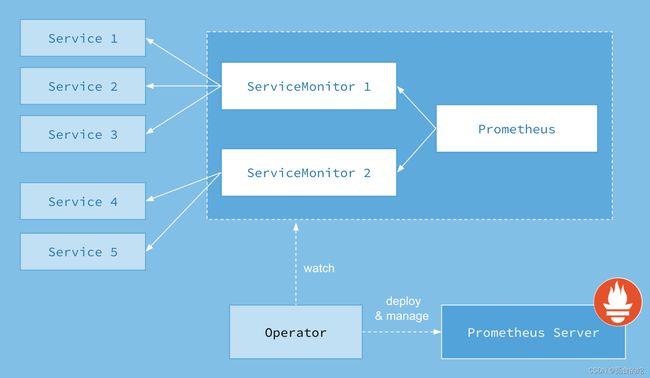

Prometheus Operator 的本职就是一组用户自定义的 CRD 资源以及 Controller 的实现,Prometheus Operator 负责监听这些自定义资源的变化,并且根据这些资源的定义自动化的完成如 Prometheus Server 自身以及配置的自动化管理工作。以下是 Prometheus Operator

的架构图。

1.3 我们为什么使用 Prometheus Operator?

由于 Prometheus 本身没有提供管理配置的 API 接口(尤其是管理监控目标和管理警报规则),也没有提供好用的多实例管理手段,因此这一块往往要自己写一写代码或者脚本。为了简化这类应用程序的管理复杂度,CoreOS 公司率先引入了 Operator的概念,并切首先推出了针对在 kubernetes 下运行和管理 Etcd 的 Eted Operator。并随后推出了 Prometheus Operator。

1.4 kube-prometheus 项目介绍

prometheus-operator官方地址:https://github.com/prometheus-operator/prometheus-operator

kube-prometheus官方地址:https://github.com/prometheus-operator/kube-prometheus

两个项目的关系:前者只包含了Prometheus Operator,后者既包含了Operator,又包含了Prometheus相关组件的部署及常用的Prometheus自定义监控,具体包含下面的组件

- The Prometheus Operator:创建CRD自定义的资源对象

- Highly available Prometheus:创建高可用的Prometheus

- Highly available Alertmanager:创建高可用的告警组件

- Prometheus node-exporter:创建主机的监控组件

- Prometheus Adapter for Kubernetes Metrics APIs:创建自定义监控的指标工具(例如可以通过nginx的request来进行应用的自动伸缩)

- kube-state-metrics:监控k8s相关资源对象的状态指标

- Grafana: 进行图像展示

2 环境介绍

我的环境中 kubernetes 是通过 kubeadm 搭建的 1.18.20 版本,由三个控制节点和三个计算节点组成,持久化存储选择的是默认的 nfs 存储 和 分布式存储 longhorn。

[[email protected] ~]$ kubectl version -oyaml

clientVersion:

buildDate: "2021-06-16T12:58:51Z"

compiler: gc

gitCommit: 1f3e19b7beb1cc0110255668c4238ed63dadb7ad

gitTreeState: clean

gitVersion: v1.18.20

goVersion: go1.13.15

major: "1"

minor: "18"

platform: linux/amd64

serverVersion:

buildDate: "2021-06-16T12:51:17Z"

compiler: gc

gitCommit: 1f3e19b7beb1cc0110255668c4238ed63dadb7ad

gitTreeState: clean

gitVersion: v1.18.20

goVersion: go1.13.15

major: "1"

minor: "18"

platform: linux/amd64

[[email protected] ~]$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

longhorn driver.longhorn.io Delete Immediate true 170d

managed-nfs-storage (default) fuseim.pri/ifs Retain Immediate false 197d

kube-prometheus 的兼容性说明:https://github.com/prometheus-operator/kube-prometheus/tree/release-0.6#kubernetes-compatibility-matrix

3 清单准备

从官方的地址获取 release-0.6 分支,或者直接下载该分支的包

[[email protected] ~]$ git clone https://github.com/prometheus-operator/kube-prometheus.git

[[email protected] ~]$ git checkout release-0.6

或者

[[email protected] ~]$ wget -c https://github.com/prometheus-operator/kube-prometheus/archive/v0.6.0.zip

默认下来的文件较多,建议把文件进行归类处理,将相关的 yaml 文件移动到对应目录下

[[email protected] ~]$ cd kube-prometheus/manifests

[[email protected] ~]$ mkdir -p serviceMonitor prometheus adapter node-exporter kube-state-metrics grafana alertmanager operator other

最终的目录结构如下:

[[email protected] ~]$ tree manifests/

manifests/

├── adapter

│ ├── prometheus-adapter-apiService.yaml

│ ├── prometheus-adapter-clusterRoleAggregatedMetricsReader.yaml

│ ├── prometheus-adapter-clusterRoleBindingDelegator.yaml

│ ├── prometheus-adapter-clusterRoleBinding.yaml

│ ├── prometheus-adapter-clusterRoleServerResources.yaml

│ ├── prometheus-adapter-clusterRole.yaml

│ ├── prometheus-adapter-configMap.yaml

│ ├── prometheus-adapter-deployment.yaml

│ ├── prometheus-adapter-roleBindingAuthReader.yaml

│ ├── prometheus-adapter-serviceAccount.yaml

│ └── prometheus-adapter-service.yaml

├── alertmanager

│ ├── alertmanager-alertmanager.yaml

│ ├── alertmanager-secret.yaml

│ ├── alertmanager-serviceAccount.yaml

│ └── alertmanager-service.yaml

├── grafana

│ ├── grafana-dashboardDatasources.yaml

│ ├── grafana-dashboardDefinitions.yaml

│ ├── grafana-dashboardSources.yaml

│ ├── grafana-deployment.yaml

│ ├── grafana-serviceAccount.yaml

│ └── grafana-service.yaml

├── kube-state-metrics

│ ├── kube-state-metrics-clusterRoleBinding.yaml

│ ├── kube-state-metrics-clusterRole.yaml

│ ├── kube-state-metrics-deployment.yaml

│ ├── kube-state-metrics-serviceAccount.yaml

│ └── kube-state-metrics-service.yaml

├── node-exporter

│ ├── node-exporter-clusterRoleBinding.yaml

│ ├── node-exporter-clusterRole.yaml

│ ├── node-exporter-daemonset.yaml

│ ├── node-exporter-serviceAccount.yaml

│ └── node-exporter-service.yaml

├── operator

│ ├── 0namespace-namespace.yaml

│ ├── prometheus-operator-0alertmanagerCustomResourceDefinition.yaml

│ ├── prometheus-operator-0podmonitorCustomResourceDefinition.yaml

│ ├── prometheus-operator-0prometheusCustomResourceDefinition.yaml

│ ├── prometheus-operator-0prometheusruleCustomResourceDefinition.yaml

│ ├── prometheus-operator-0servicemonitorCustomResourceDefinition.yaml

│ ├── prometheus-operator-0thanosrulerCustomResourceDefinition.yaml

│ ├── prometheus-operator-clusterRoleBinding.yaml

│ ├── prometheus-operator-clusterRole.yaml

│ ├── prometheus-operator-deployment.yaml

│ ├── prometheus-operator-serviceAccount.yaml

│ ├── prometheus-operator-serviceMonitor.yaml

│ └── prometheus-operator-service.yaml

├── other

│ └── grafana-pvc.yaml

├── prometheus

│ ├── prometheus-clusterRoleBinding.yaml

│ ├── prometheus-clusterRole.yaml

│ ├── prometheus-prometheus.yaml

│ ├── prometheus-roleBindingConfig.yaml

│ ├── prometheus-roleBindingSpecificNamespaces.yaml

│ ├── prometheus-roleConfig.yaml

│ ├── prometheus-roleSpecificNamespaces.yaml

│ ├── prometheus-rules.yaml

│ ├── prometheus-serviceAccount.yaml

│ └── prometheus-service.yaml

├── serviceMonitor

│ ├── alertmanager-serviceMonitor.yaml

│ ├── grafana-serviceMonitor.yaml

│ ├── kube-state-metrics-serviceMonitor.yaml

│ ├── node-exporter-serviceMonitor.yaml

│ ├── prometheus-adapter-serviceMonitor.yaml

│ ├── prometheus-serviceMonitorApiserver.yaml

│ ├── prometheus-serviceMonitorCoreDNS.yaml

│ ├── prometheus-serviceMonitorKubeControllerManager.yaml

│ ├── prometheus-serviceMonitorKubelet.yaml

│ ├── prometheus-serviceMonitorKubeScheduler.yaml

│ └── prometheus-serviceMonitor.yaml

└── setup

10 directories, 66 files

修改 yaml,增加 prometheus 和 grafana 的持久化存储

manifests/prometheus/prometheus-prometheus.yaml

...

serviceMonitorSelector: {}

version: v2.22.1

retention: 3d

storage:

volumeClaimTemplate:

spec:

storageClassName: dynamic-ceph-rbd

resources:

requests:

storage: 5Gi

manifests/grafana/grafana-deployment.yaml

...

serviceAccountName: grafana

volumes:

# - emptyDir: {}

# name: grafana-storage

- name: grafana-storage

persistentVolumeClaim:

claimName: grafana-data

新增 grafana 的 pvc,创建文件 manifests/other/grafana-pvc.yaml

kind: PersistentVolumeClaim

apiVersion: v1

metadata:

name: grafana-data

namespace: monitoring

annotations:

volume.beta.kubernetes.io/storage-class: "dynamic-ceph-rbd"

spec:

accessModes:

- ReadWriteMany

resources:

requests:

storage: 5Gi

需要把 prometheus、alertmanager、grafana 这三个的组件的 Service设置为 NodePort 类型

4 开始部署

执行部署

[[email protected] ~]$ kubectl create -f other/grafana-pvc.yaml

[[email protected] ~]$ kubectl create -f operator/

[[email protected] ~]$ kubectl create -f adapter/ -f alertmanager/ -f grafana/ -f kube-state-metrics/ -f node-exporter/ -f prometheus/ -f serviceMonitor/

查看状态

[[email protected] ~]$ kubectl get po,svc -n monitoring

NAME READY STATUS RESTARTS AGE

pod/alertmanager-main-0 2/2 Running 0 133m

pod/alertmanager-main-1 2/2 Running 0 133m

pod/alertmanager-main-2 2/2 Running 0 133m

pod/grafana-5cd74dc975-fthb7 1/1 Running 0 133m

pod/kube-state-metrics-69d4c7c69d-wbvlg 3/3 Running 0 133m

pod/node-exporter-h6z9l 2/2 Running 0 133m

pod/node-exporter-jvjh2 2/2 Running 0 133m

pod/node-exporter-qjs2s 2/2 Running 0 133m

pod/node-exporter-vkbxk 2/2 Running 0 133m

pod/node-exporter-w2vr9 2/2 Running 0 133m

pod/node-exporter-ztvjj 2/2 Running 0 133m

pod/prometheus-adapter-66b855f564-jl58t 1/1 Running 0 133m

pod/prometheus-k8s-0 3/3 Running 1 133m

pod/prometheus-k8s-1 3/3 Running 1 133m

pod/prometheus-operator-57859b8b59-92bbd 2/2 Running 0 134m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager-main NodePort 10.98.248.252 <none> 9093:40002/TCP 133m

service/alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 133m

service/grafana NodePort 10.109.127.152 <none> 3000:40003/TCP 133m

service/kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 133m

service/node-exporter ClusterIP None <none> 9100/TCP 133m

service/prometheus-adapter ClusterIP 10.106.95.130 <none> 443/TCP 133m

service/prometheus-k8s NodePort 10.111.134.68 <none> 9090:40001/TCP 133m

service/prometheus-operated ClusterIP None <none> 9090/TCP 133m

service/prometheus-operator ClusterIP None <none> 8443/TCP 134m

5 解决 ControllerManager、Scheduler 监控问题

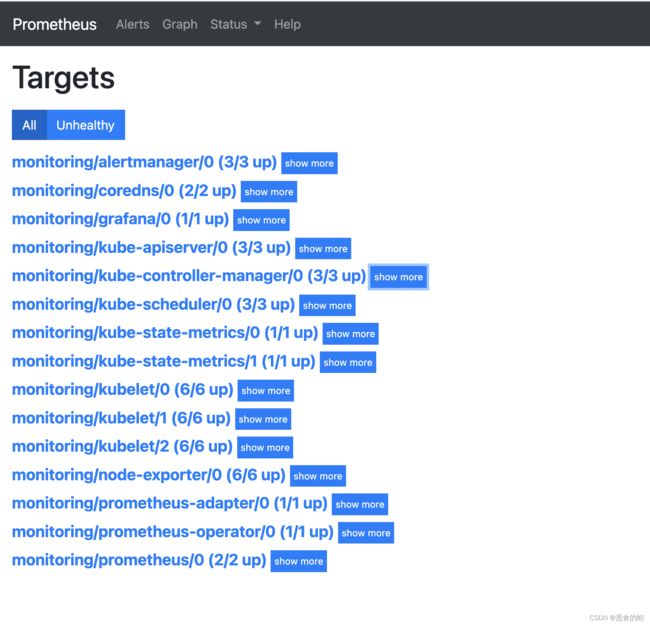

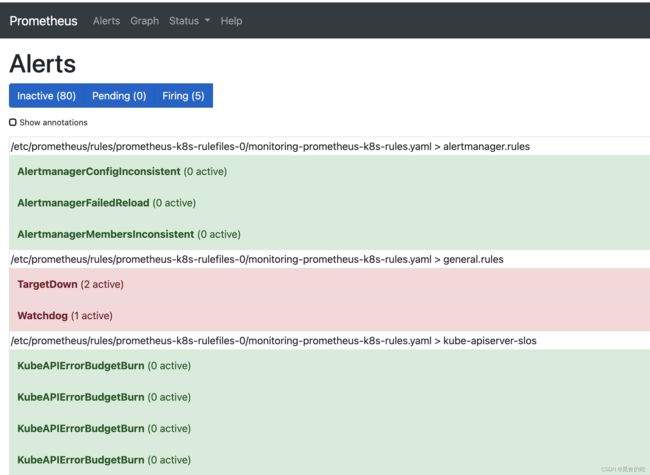

默认安装后访问 prometheus,我们可以看到有三个报警:Watchdog、KubeControllerManagerDown、KubeSchedulerDown

其中 Watchdog 是一个正常的报警,这个告警的作用是:如果alermanger或者prometheus本身挂掉了就发不出告警了,因此一般会采用另一个监控来监控prometheus,或者自定义一个持续不断的告警通知,哪一天这个告警通知不发了,说明监控出现问题了。prometheus operator已经考虑了这一点,本身携带一个watchdog,作为对自身的监控。

如果需要关闭,删除或注释掉Watchdog部分

prometheus-rules.yaml

...

- name: general.rules

rules:

- alert: TargetDown

annotations:

message: 'xxx'

expr: 100 * (count(up == 0) BY (job, namespace, service) / count(up) BY (job, namespace, service)) > 10

for: 10m

labels:

severity: warning

# - alert: Watchdog

# annotations:

# message: |

# This is an alert meant to ensure that the entire alerting pipeline is functional.

# This alert is always firing, therefore it should always be firing in Alertmanager

# and always fire against a receiver. There are integrations with various notification

# mechanisms that send a notification when this alert is not firing. For example the

# "DeadMansSnitch" integration in PagerDuty.

# expr: vector(1)

# labels:

# severity: none

KubeControllerManagerDown、KubeSchedulerDown 的解决

原因是因为默认安装的 controller-manager 和 scheduler 默认监听的是 127.0.0.1,需要修改 0.0.0.0。网上有的说是默认安装的集群并没有给系统 kube-controller-manager 组件创建 svc,我查看一下我自己的 kube-system下的 svc 资源对象是存在 kube-controller-manager 组件创建 svc 并且名字是 kube-controller-manager-svc。该 svc 有一个 labels 正好是 k8s-app=kube-controller-manager 和 文件 prometheus-serviceMonitorKubeControllerManager.yaml ServiceMonitor 的 labels 相同。

[21:38]:[[email protected]:~]# kubectl get svc -n kube-system --show-labels

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE LABELS

kube-controller-manager-svc ClusterIP None <none> 10257/TCP 260d k8s-app=kube-controller-manager

kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 261d k8s-app=kube-dns,kubernetes.io/cluster-service=true,kubernetes.io/name=KubeDNS

kube-scheduler-svc ClusterIP None <none> 10259/TCP 260d k8s-app=kube-scheduler

kubelet ClusterIP None <none> 10250/TCP,10255/TCP,4194/TCP 260d k8s-app=kubelet

prometheus-serviceMonitorKubeControllerManager.yaml 文件内容如下:

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

labels:

k8s-app: kube-controller-manager

name: kube-controller-manager

namespace: monitoring

spec:

endpoints:

- bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token

interval: 30s

metricRelabelings:

- action: drop

...

...

修改 kube-controller-manager 的监听地址

# vim /etc/kubernetes/manifests/kube-controller-manager.yaml

...

spec:

containers:

- command:

- kube-controller-manager

- --allocate-node-cidrs=true

- --authentication-kubeconfig=/etc/kubernetes/controller-manager.conf

- --authorization-kubeconfig=/etc/kubernetes/controller-manager.conf

- --bind-address=0.0.0.0

# netstat -lntup|grep kube-contro

tcp6 0 0 :::10257 :::* LISTEN 38818/kube-controll

kube-sheduler 同理,修改 kube-scheduler 的监听地址

# vim /etc/kubernetes/manifests/kube-scheduler.yaml

...

spec:

containers:

- command:

- kube-scheduler

- --authentication-kubeconfig=/etc/kubernetes/scheduler.conf

- --authorization-kubeconfig=/etc/kubernetes/scheduler.conf

- --bind-address=0.0.0.0

# netstat -lntup|grep kube-sched

tcp6 0 0 :::10259 :::* LISTEN 100095/kube-schedul