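ES7 Basics, Part 10: Using Elasticsearch with Spring Boot

Contents

- 0. Background

- 1. Setting up the environment and dependencies

- 1.1 Check the ES version in use

- 1.2 Configure the Maven dependency and environment

- 1.3 Configure the YAML

- 2. Index operations

- 2.1 Create an index

- 2.2 Get an index

- 2.3 Delete an index

- 2.4 Summary

- 3. Mapping operations

- 3.1 Create a mapping

- 3.2 Get a mapping

- 3.3 Summary

- 4. Document operations

- 4.1 Add documents

- 4.2 Delete a document

- 4.3 Get a document

- 4.4 Update a document

- 4.5 Summary

- 5. Search operations

- 5.1 Match all: `match_all`

- 5.2 Specific query: `match`

- 5.3 Range query: `range`

- 5.4 Source filtering: `_source`

- 5.5 Sorting: `sort`

- 5.6 Pagination: `from` and `size`

- 5.7 Aggregations (`aggs`): metrics

- 5.8 Aggregations (`aggs`): buckets

- 5.9 Highlighting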

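0. Background

This post walks through some simple examples of using Elasticsearch (ES) from Spring Boot. For the full details, see the official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/current/java-rest-high-supported-apis.html

1. Setting up the environment and dependencies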

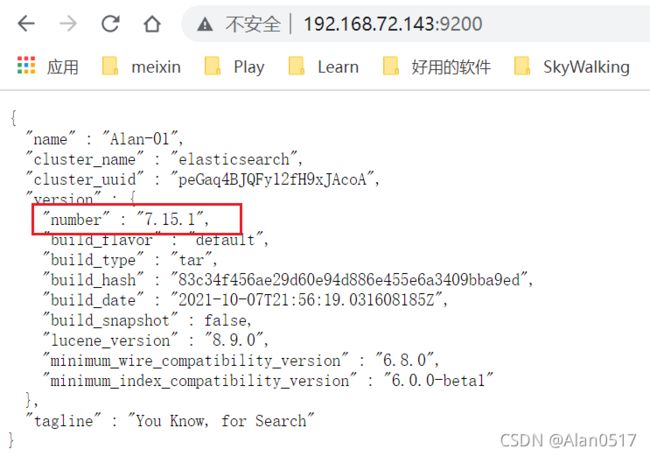

1.1 Check the ES version in use

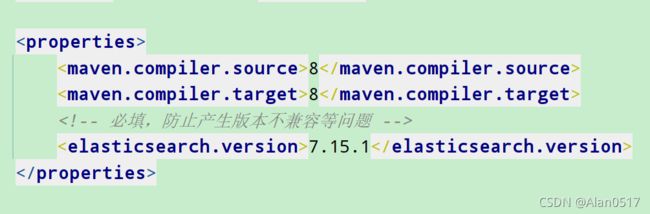

1.2 Configure the Maven dependency and environment

<dependency>

<groupId>org.elasticsearch.client</groupId>

<artifactId>elasticsearch-rest-high-level-client</artifactId>

<version>7.15.1</version>

</dependency>

1.3 Configure the YAML

spring:

  elasticsearch:

    rest:

      uris: http://192.168.72.143:9200

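I run a single node here, so uris points at its HTTP port, 9200. Note that the REST client always connects over HTTP (port 9200 by default), in cluster mode too; port 9300 is the transport port that nodes use to talk to each other, not a port for this client. For a cluster, which is what most companies run, list the HTTP addresses of several nodes in uris (comma-separated), or customize the client bean yourself.

2. Index operations

If you can do something with a Kibana command, the code works the same way; the commands simply become methods and classes. Below, each Kibana command is shown next to the corresponding Java code.

2.1 Create an index

- In Kibana, an index is created like this: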

PUT wang_index_01

{

"settings": {

"number_of_shards": 1,

"number_of_replicas": 1

}

}

- The corresponding Java code:

Inject the client:

@Autowired

private RestHighLevelClient client;

// Create the index request

CreateIndexRequest indexRequest = new CreateIndexRequest("wang_index_01");

// Index settings

indexRequest.settings(Settings.builder()

// Number of primary shards

.put("index.number_of_shards", 1)

// Number of replicas

.put("index.number_of_replicas", 1)

);

// Get the indices client

IndicesClient indices = client.indices();

// Send the request and get the response

CreateIndexResponse createIndexResponse = indices.create(indexRequest, RequestOptions.DEFAULT);

// Read the acknowledgement flag

boolean acknowledged = createIndexResponse.isAcknowledged();

System.out.println("acknowledged = " + acknowledged);

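2.2 Get an index

- In Kibana, an index is retrieved like this:

GET wang_index_01

- The corresponding Java code:

// org.elasticsearch.client.indices.GetIndexRequest takes the index names in its constructor

GetIndexRequest getIndexRequest = new GetIndexRequest("wang_index_01");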

GetIndexResponse getIndexResponse = client.indices().get(getIndexRequest, RequestOptions.DEFAULT);

System.out.println("getIndexResponse = " + getIndexResponse);

2.3 Delete an index

- In Kibana, an index is deleted like this:

DELETE wang_index_01

- The corresponding Java code:

DeleteIndexRequest deleteIndexRequest = new DeleteIndexRequest("wang_index_01");

AcknowledgedResponse delete = client.indices().delete(deleteIndexRequest, RequestOptions.DEFAULT);

boolean acknowledged = delete.isAcknowledged();

System.out.println("acknowledged = " + acknowledged);

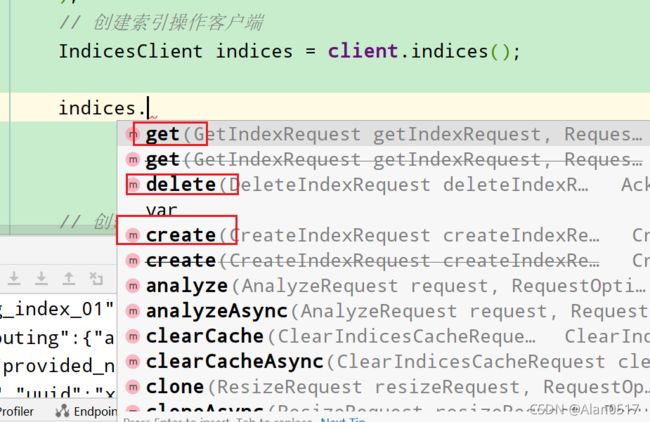

2.4 总结

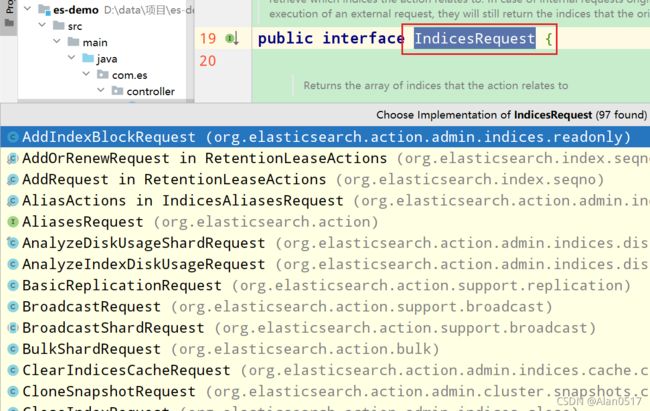

当使用es的客户端 RestHighLevelClient 时,对于索引库操作,不涉及映射,先获取他的索引库客户端

// 创建索引操作客户端

IndicesClient indices = client.indices();

然后借助idea的提示,会出现一系列API

每个API都可以同步或异步调用。 同步方法返回一个响应对象,而异步方法的名称以async后缀结尾,需要一个监听器参数,一旦收到响应或错误,就会被通知(由低级客户端管理的线程池)。

然后你就可以根据方法的提示,创建响应的api,做响应的操作,其他们都有一个共同的接口爸爸IndicesRequest ,有兴趣可以多了解一下

对于索引库的操作API:

- 创建索引库:

CreateIndexRequest - 查询索引库:

GetIndexRequest - 删除索引库:

DeleteIndexRequest

对于索引的操作是基于***IndexRequest来进行操作的。

常见操作中还有校验索引是否存在:exists

参考腾讯客户端配置:腾讯Es客户端配置

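3. Mapping operations

3.1 Create a mapping

Since the index was already created in section 2.1, we go straight to creating the mapping here.

- In Kibana, a mapping is added to an existing index like this: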

PUT /wang_index_01/_mapping

{

"properties": {

"address": {

"type": "text",

"analyzer": "ik_max_word"

},

"userName": {

"type": "keyword"

},

"userPhone": {

"type": "text",

"analyzer": "ik_max_word"

}

}

}

- The corresponding Java code:

PutMappingRequest putMappingRequest = new PutMappingRequest("wang_index_01");

XContentBuilder builder = XContentFactory.jsonBuilder()

.startObject()

.startObject("properties")

.startObject("address")

.field("type", "text")

.field("analyzer", "ik_max_word")

.endObject()

.startObject("userName")

.field("type", "keyword")

.endObject()

.startObject("userPhone")

.field("type", "text")

.field("analyzer", "ik_max_word")

.endObject()

.endObject()

.endObject();

PutMappingRequest source = putMappingRequest.source(builder);

AcknowledgedResponse acknowledgedResponse = client.indices().putMapping(source, RequestOptions.DEFAULT);

boolean acknowledged = acknowledgedResponse.isAcknowledged();

System.out.println("acknowledged = " + acknowledged);

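The code is really no different from the command: think of startObject as { and endObject as }. Read it next to the Kibana command and it looks very familiar, practically identical.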
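To make the brace correspondence concrete, here is a toy stand-in for the builder, written in plain Java so that it runs without the ES client. BraceSketch and its methods are hypothetical names invented for this illustration, not part of the Elasticsearch API; the sketch only handles string fields and does no escaping.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Toy stand-in for XContentBuilder (hypothetical, for illustration only). */
final class BraceSketch {
    private final StringBuilder out = new StringBuilder();
    // One flag per open object: true while that object has no members yet.
    private final Deque<Boolean> first = new ArrayDeque<>();

    // Emit a comma before every member except the first one of the current object.
    private void comma() {
        if (!first.isEmpty()) {
            if (!first.pop()) {
                out.append(',');
            }
            first.push(false);
        }
    }

    /** Opens an anonymous object: writes "{". */
    BraceSketch startObject() {
        comma();
        out.append('{');
        first.push(true);
        return this;
    }

    /** Opens a named object: writes "name":{ */
    BraceSketch startObject(String name) {
        comma();
        out.append('"').append(name).append("\":{");
        first.push(true);
        return this;
    }

    /** Writes a string member: "name":"value" */
    BraceSketch field(String name, String value) {
        comma();
        out.append('"').append(name).append("\":\"").append(value).append('"');
        return this;
    }

    /** Closes the current object: writes "}". */
    BraceSketch endObject() {
        first.pop();
        out.append('}');
        return this;
    }

    String json() {
        return out.toString();
    }
}
```

Calling startObject(), startObject("properties"), startObject("userName"), field("type", "keyword") and then endObject() three times yields {"properties":{"userName":{"type":"keyword"}}}, the same shape as the Kibana request body.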

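You can also derive the mapping from an annotated class, for example:

- Custom analyzer enum

@Getter

@SuppressWarnings("ALL")

public enum AnalyzerEnum {

NO("no analyzer"),

/**

* Standard analyzer, the default

*/

STANDARD("standard"),

/**

* ik_smart: coarsest-grained segmentation; text already assigned to one token is not reused by another

*/

IK_SMART("ik_smart"),

/**

* ik_max_word: finest-grained segmentation; produces as many tokens as possible

*/

IK_MAX_WORD("ik_max_word");

private String type;

AnalyzerEnum(String type) {

this.type = type;

}

}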

- Custom field type enum

@Getter

@SuppressWarnings("ALL")

public enum FieldTypeEnum {

TEXT("text"),

KEYWORD("keyword"),

INTEGER("integer"),

LONG("long"),

DOUBLE("double"),

DATE("date"),

/**

* A single object

*/

OBJECT("object"),

/**

* A nested array of objects

*/

NESTED("nested"),

;

FieldTypeEnum(String type) {

this.type = type;

}

private final String type;

}

- Custom annotation

@Retention(RetentionPolicy.RUNTIME)

@Target(ElementType.FIELD)

@Documented

@Inherited

public @interface EsField {

FieldTypeEnum type() default FieldTypeEnum.TEXT;

/**

* Analyzer to use

*/

AnalyzerEnum analyzer() default AnalyzerEnum.STANDARD;

}

- Entity class:

@Data

@Accessors(chain = true)

@SuppressWarnings("ALL")

public class EsStudent implements Serializable {

private static final long serialVersionUID = 7100225268368014590L;

@EsField(type = FieldTypeEnum.KEYWORD)

private String name;

@EsField(type = FieldTypeEnum.INTEGER)

private int age;

}

- Building the mapping from the entity class

private XContentBuilder generateBuilder(Class<?> clazz) {

XContentBuilder builder = null;

try {

builder = XContentFactory.jsonBuilder();

builder.startObject();

builder.startObject("properties");

java.lang.reflect.Field[] declaredFields = clazz.getDeclaredFields();

for (java.lang.reflect.Field f : declaredFields) {

if (f.isAnnotationPresent(EsField.class)) {

// Read the annotation

EsField declaredAnnotation = f.getDeclaredAnnotation(EsField.class);

if (declaredAnnotation.type() == FieldTypeEnum.OBJECT) {

// Get the field's declared class (the nested object type)

Class<?> type = f.getType();

java.lang.reflect.Field[] df2 = type.getDeclaredFields();

builder.startObject(f.getName());

builder.startObject("properties");

// Iterate over all fields of the nested object

for (java.lang.reflect.Field f2 : df2) {

if (f2.isAnnotationPresent(EsField.class)) {

// Read the annotation

EsField declaredAnnotation2 = f2.getDeclaredAnnotation(EsField.class);

builder.startObject(f2.getName());

builder.field("type", declaredAnnotation2.type().getType());

// Only text fields take an analyzer; keyword fields are not analyzed

if (declaredAnnotation2.type() == FieldTypeEnum.TEXT) {

builder.field("analyzer", declaredAnnotation2.analyzer().getType());

}

builder.endObject();

}

}

builder.endObject();

} else {

builder.startObject(f.getName());

builder.field("type", declaredAnnotation.type().getType());

// Only text fields take an analyzer; keyword fields are not analyzed

if (declaredAnnotation.type() == FieldTypeEnum.TEXT) {

builder.field("analyzer", declaredAnnotation.analyzer().getType());

}

}

builder.endObject();

}

}

// Close "properties", then the root object

builder.endObject();

builder.endObject();

} catch (IOException e) {

log.error("[ES] Failed to build the mapping definition", e);

}

return builder;

}

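- Method that creates the index together with its mapping:

public boolean createIndex(String indexName, Class<?> clazz) throws IOException {

// checkIndexExists is a helper that is not shown in this article

if (checkIndexExists(indexName)) {

log.info("Index {} already exists", indexName);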

return false;

}

CreateIndexRequest request = new CreateIndexRequest(indexName);

request.settings(Settings.builder()

.put("index.number_of_shards", 3)

.put("index.number_of_replicas", 3)

);

request.mapping(generateBuilder(clazz));

CreateIndexResponse response = client.indices().create(request, RequestOptions.DEFAULT);

boolean acknowledged = response.isAcknowledged();

boolean shardsAcknowledged = response.isShardsAcknowledged();

return acknowledged || shardsAcknowledged;

}

3.2 Get a mapping

- In Kibana, the mapping is retrieved like this:

GET wang_index_01/_mapping

- The corresponding Java code:

GetMappingsRequest getMappingsRequest = new GetMappingsRequest();

getMappingsRequest.indices("wang_index_01");

GetMappingsResponse mapping = client.indices().getMapping(getMappingsRequest, RequestOptions.DEFAULT);

Map<String, MappingMetadata> mappings = mapping.mappings();

MappingMetadata metadata = mappings.get("wang_index_01");

String s = metadata.getSourceAsMap().toString();

System.out.println("s = " + s);

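3.3 Summary

Everything related to indices and mappings can be found under client.indices(); the method names are self-explanatory.

- PutMappingRequest: add a mapping

- GetMappingsRequest: get a mapping

4. Document operations

4.1 Add documents

- In Kibana, a document is added like this: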

POST wang_index_01/_doc/1

{

"address":"江西宜春上高泗溪镇",

"userName":"张三",

"userPhone":"15727538286"

}

- The corresponding code:

Map<String, Object> jsonMap = new HashMap<>();

jsonMap.put("address", "江西宜春上高泗溪镇");

jsonMap.put("userName", "张三");

jsonMap.put("userPhone", "15727538286");

IndexRequest indexRequest = new IndexRequest("wang_index_01")

.id("1").source(jsonMap);

Map<String, Object> jsonMap2 = new HashMap<>();

jsonMap2.put("address", "江西宜春高安祥符镇");

jsonMap2.put("userName", "李四");

jsonMap2.put("userPhone", "15727538286");

IndexRequest indexRequest2 = new IndexRequest("wang_index_01")

.id("2").source(jsonMap2);

BulkRequest request = new BulkRequest();

request.add(indexRequest);

request.add(indexRequest2);

BulkResponse bulk = client.bulk(request, RequestOptions.DEFAULT);

RestStatus status = bulk.status();

System.out.println("status = " + status);

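Here I used a bulk request to add 2 documents. Note that bulk.status() describes the request as a whole; call bulk.hasFailures() to find out whether individual items failed.

For more details, see the official documentation: https://www.elastic.co/guide/en/elasticsearch/client/java-rest/current/java-rest-high-document-index.html

4.2 Delete a document

- In Kibana, a document is deleted like this:

DELETE wang_index_01/_doc/1

- The corresponding Java code: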

DeleteRequest deleteRequest = new DeleteRequest("wang_index_01");

deleteRequest.id("1");

DeleteResponse delete = client.delete(deleteRequest, RequestOptions.DEFAULT);

RestStatus status = delete.status();

System.out.println("status = " + status);

4.3 Get a document

- In Kibana, a document is retrieved like this:

GET wang_index_01/_doc/1

- The corresponding Java code:

GetRequest getRequest = new GetRequest("wang_index_01");

getRequest.id("1");

GetResponse documentFields = client.get(getRequest, RequestOptions.DEFAULT);

String sourceAsString = documentFields.getSourceAsString();

System.out.println("sourceAsString = " + sourceAsString);

4.4 Update a document

- In Kibana, a document is updated like this:

PUT wang_index_01/_doc/1

{

"address":"江西宜春上高泗溪镇",

"userName":"哈哈",

"userPhone":"15727538288"

}

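- The corresponding Java code (UpdateRequest.doc performs a partial update that merges the given fields into the stored document, whereas the PUT above replaces the whole document; every field is supplied here, so the result is the same):

Map<String, Object> jsonMap = new HashMap<>();

jsonMap.put("address", "江西宜春上高泗溪镇");

jsonMap.put("userName", "哈哈");

jsonMap.put("userPhone", "15727538288");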

UpdateRequest updateRequest = new UpdateRequest("wang_index_01","1");

updateRequest.doc(jsonMap);

UpdateResponse update = client.update(updateRequest, RequestOptions.DEFAULT);

RestStatus status = update.status();

System.out.println("status = " + status);
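One difference is worth spelling out: PUT wang_index_01/_doc/1 replaces the whole stored document, while UpdateRequest.doc(...) sends a partial document that is merged into the stored one. The plain-Java sketch below imitates both behaviours on maps, without calling ES. The class and method names are made up for illustration, and the merge shown is top-level only, whereas ES also merges nested objects recursively.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical illustration of replace vs. partial-update semantics on plain maps. */
final class UpdateSemanticsSketch {

    /** Full replacement, like indexing a document under an existing id. */
    static Map<String, Object> replace(Map<String, Object> stored, Map<String, Object> incoming) {
        // The stored document is discarded entirely.
        return new LinkedHashMap<>(incoming);
    }

    /** Partial update: incoming fields overwrite stored ones, other fields are kept. */
    static Map<String, Object> merge(Map<String, Object> stored, Map<String, Object> incoming) {
        Map<String, Object> result = new LinkedHashMap<>(stored);
        result.putAll(incoming);
        return result;
    }
}
```

With a stored document {userName=a, userPhone=1} and an incoming {userName=b}, replace drops userPhone while merge keeps it.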

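4.5 Summary

- BulkRequest (wrapping IndexRequest): add documents

- DeleteRequest: delete a document

- UpdateRequest: update a document

- GetRequest: get a document

5. Search operations

Search is the most important part of ES. The various search operations are listed below.

5.1 Match all: match_all

- Match all in Kibana: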

GET wang_index_01/_search

{

"query": {

"match_all": {}

}

}

- The corresponding code:

// Create the search request; several index names may be passed

SearchRequest searchRequest = new SearchRequest("wang_index_01");

// Create the search source builder

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

// Attach the source builder to the request

searchRequest.source(searchSourceBuilder);

// Execute the search

SearchResponse search = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

// Iterate over the hits

for (SearchHit hit : hits) {

String sourceAsString = hit.getSourceAsString();

System.out.println("sourceAsString = " + sourceAsString);

}

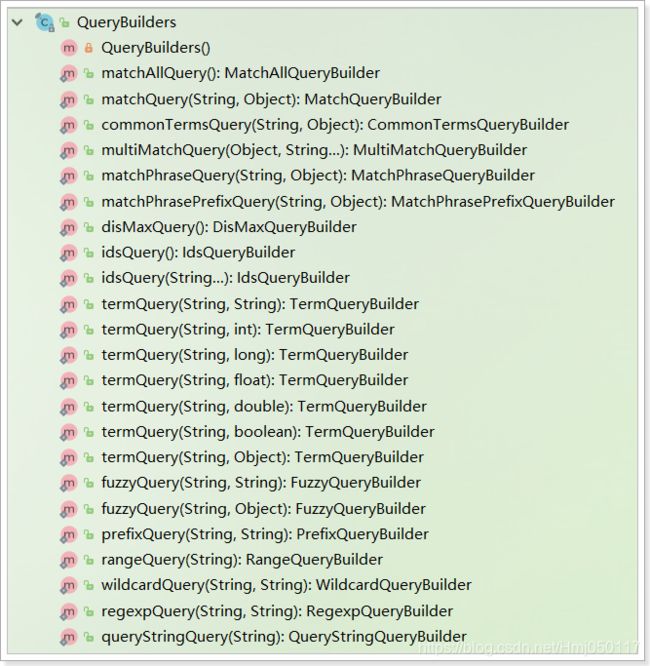

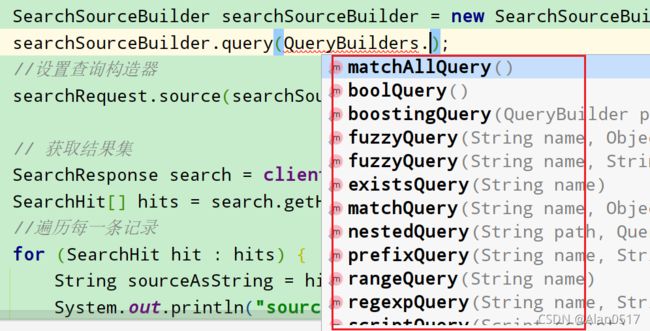

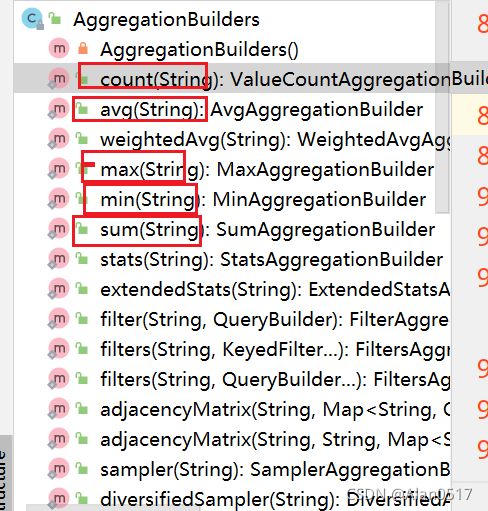

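Here, match_all in the Kibana command corresponds to QueryBuilders.matchAllQuery(). Open the QueryBuilders class and you will find many more query builders.

The client provides the QueryBuilders factory to build the various query implementations.

5.2 Specific query: match

- Kibana: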

GET wang_index_01/_search

{

"query": {

"match": {

"userName": "哈哈"

}

}

}

- java代码如下:

//创建搜索对象,入参可以为多个索引库参数

SearchRequest searchRequest = new SearchRequest("wang_index_01");

//创建查询构造器

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

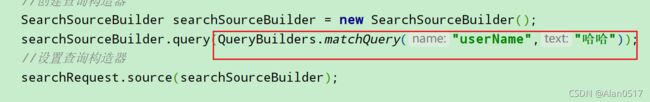

searchSourceBuilder.query(QueryBuilders.matchQuery("userName","哈哈"));

//设置查询构造器

searchRequest.source(searchSourceBuilder);

// 获取结果集

SearchResponse search = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

//遍历每一条记录

for (SearchHit hit : hits) {

String sourceAsString = hit.getSourceAsString();

System.out.println("sourceAsString = " + sourceAsString);

}

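Changing the search type only changes the query object built with QueryBuilders; the rest of the code stays essentially the same:

- Compound query, where the conditions are ANDed together

- Kibana: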

GET /wang_index_01/_search

{

"query": {

"bool": {

"must": [

{

"term": {

"userName": "张三"

}

},

{

"term": {

"passWord": "12345"

}

}

]

}

}

}

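Java implementation (a generic helper: list values become terms queries, other values become match queries, and all of them are combined with must):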

public SearchHit[] findByMap(String index, HashMap<String, Object> params, int size) {

SearchRequest searchRequest = new SearchRequest(index);

SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();

sourceBuilder.timeout(new TimeValue(1, TimeUnit.SECONDS));

sourceBuilder.size(size);

BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();

if (CollUtil.isNotEmpty(params)) {

for (String key : params.keySet()) {

if (ObjectUtils.isEmpty(params.get(key))) {

continue;

}

if (params.get(key) instanceof List) {

List<Object> param = (List<Object>) params.get(key);

boolQueryBuilder.must(QueryBuilders.termsQuery(key, param));

continue;

}

boolQueryBuilder.must(QueryBuilders.matchQuery(key, params.get(key)));

}

}

// Attach the bool query to the source builder

sourceBuilder.query(boolQueryBuilder);

searchRequest.source(sourceBuilder);

try {

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

return searchResponse.getHits().getHits();

} catch (Exception e) {

e.printStackTrace();

}

return new SearchHit[]{};

}
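To see what kind of request body a helper like findByMap ends up describing, the sketch below builds roughly the same bool/must structure as nested maps in plain Java, with no ES client involved. BoolQuerySketch and boolMust are hypothetical names, and the match clause is shown in its short form; the real builders serialize additional default parameters.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.Collections;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: describes the query as nested maps instead of calling the ES client. */
final class BoolQuerySketch {

    static Map<String, Object> boolMust(Map<String, Object> params) {
        List<Object> must = new ArrayList<>();
        for (Map.Entry<String, Object> e : params.entrySet()) {
            Object value = e.getValue();
            // Skip null values, empty strings and empty collections
            if (value == null || "".equals(value)
                    || (value instanceof Collection && ((Collection<?>) value).isEmpty())) {
                continue;
            }
            // A list becomes a terms clause, anything else a match clause
            String clause = (value instanceof List) ? "terms" : "match";
            Map<String, Object> field = Collections.singletonMap(e.getKey(), value);
            must.add(Collections.singletonMap(clause, field));
        }
        Map<String, Object> bool = Collections.singletonMap("bool",
                Collections.singletonMap("must", must));
        return Collections.singletonMap("query", bool);
    }
}
```

Every non-empty parameter adds one clause to must, so a document has to satisfy all of them, which is the AND relationship described above.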

5.3 Range query: range

- Kibana:

GET wang_index_01/_search

{

"query": {

"range": {

"userPhone": {

"gte": 15727538288,

"lte": 15727538289

}

}

}

}

- java代码如下:

//创建搜索对象,入参可以为多个索引库参数

SearchRequest searchRequest = new SearchRequest("wang_index_01");

//创建查询构造器

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

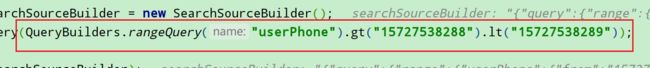

searchSourceBuilder.query(QueryBuilders.rangeQuery("userPhone").gt("15727538288").lt("15727538289"));

//设置查询构造器

searchRequest.source(searchSourceBuilder);

// 获取结果集

SearchResponse search = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

//遍历每一条记录

for (SearchHit hit : hits) {

String sourceAsString = hit.getSourceAsString();

System.out.println("sourceAsString = " + sourceAsString);

}

Likewise, the query builder here is QueryBuilders.rangeQuery.
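One caveat: userPhone is mapped as text rather than as a numeric type, so the range bounds are compared as strings (term order), not as numbers. For phone numbers of equal length the two orders agree, but in general they do not. The plain-Java sketch below, which does not touch ES and uses made-up names, shows the difference.

```java
/** Hypothetical illustration: string order vs. numeric order for an inclusive range. */
final class RangeOrderSketch {

    /** Inclusive range check in lexicographic order, as for terms of text/keyword fields. */
    static boolean inStringRange(String value, String gte, String lte) {
        return value.compareTo(gte) >= 0 && value.compareTo(lte) <= 0;
    }

    /** Inclusive range check in numeric order, as for a long field. */
    static boolean inNumericRange(long value, long gte, long lte) {
        return value >= gte && value <= lte;
    }
}
```

For example, the string "100" falls inside the string range "10" to "20", while the number 100 is outside the numeric range 10 to 20. If numeric range semantics matter, a numeric field type is the safer mapping.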

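5.4 Source filtering: _source

As mentioned earlier, the original value of every field is kept in _source, which is why store defaults to false.

So when we want to limit which fields are returned, _source is the natural place to do it.

- The Kibana command: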

GET wang_index_01/_search

{

"_source": [

"userPhone"

],

"query": {

"match_all": {}

}

}

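- The Java code:

// Create the search request; several index names may be passed

SearchRequest searchRequest = new SearchRequest("wang_index_01");

// Create the search source builder

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

// _source filtering: include only userPhone, exclude nothing

searchSourceBuilder.fetchSource(new String[]{"userPhone"}, null);

// Attach the source builder to the request

searchRequest.source(searchSourceBuilder);

// Execute the search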

SearchResponse search = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

String sourceAsString = hit.getSourceAsString();

System.out.println("sourceAsString = " + sourceAsString);

}
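The effect of an include list on _source can be pictured with a few lines of plain Java. This is only an illustration on a map with made-up names; it matches exact top-level field names, while ES itself also supports wildcards and excludes.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

/** Hypothetical illustration of an include-only _source filter. */
final class SourceFilterSketch {

    static Map<String, Object> include(Map<String, Object> source, String... includes) {
        Set<String> keep = new HashSet<>(Arrays.asList(includes));
        Map<String, Object> filtered = new LinkedHashMap<>();
        for (Map.Entry<String, Object> e : source.entrySet()) {
            // Keep a field only if its name is on the include list
            if (keep.contains(e.getKey())) {
                filtered.put(e.getKey(), e.getValue());
            }
        }
        return filtered;
    }
}
```

Applied to a document with address, userName and userPhone, include(doc, "userPhone") leaves only the userPhone entry, which is what each hit's _source looks like after filtering.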

5.5 Sorting: sort

- Sorting in Kibana:

GET wang_index_01/_search

{

"query": {

"match_all": {

"boost": 1

}

},

"fields": [

{

"field": "userPhone"

}

],

"sort": [

{

"userName": {

"order": "asc"

}

}

]

}

Note that the sort field must not be an analyzed (text) field, otherwise you get the following error:

Text fields are not optimised for operations that require per-document field data like aggregations and sorting, so these operations are disabled by default. Please use a keyword field instead

- The Java code:

// Create the search request; several index names may be passed

SearchRequest searchRequest = new SearchRequest("wang_index_01");

// Create the search source builder

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

searchSourceBuilder.sort(new FieldSortBuilder("userName").order(SortOrder.ASC));

searchSourceBuilder.fetchField("userPhone");

// Attach the source builder to the request

searchRequest.source(searchSourceBuilder);

// Execute the search

SearchResponse search = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

String sourceAsString = hit.getSourceAsString();

System.out.println("sourceAsString = " + sourceAsString);

}

5.6 Pagination: from and size

- The Kibana command:

GET wang_index_01/_search

{

"query": {

"match_all": {}

},

"from": 0,

"size": 3

}

- The Java code:

SearchRequest searchRequest = new SearchRequest("wang_index_01");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

searchRequest.source(searchSourceBuilder);

// Page number (1-based) and page size

int page = 1;

int size = 3;

int start = (page - 1) * size;

// Apply the pagination

searchSourceBuilder.from(start);

searchSourceBuilder.size(size);

// Execute the search

SearchResponse search = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

String sourceAsString = hit.getSourceAsString();

System.out.println("sourceAsString = " + sourceAsString);

}
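The offset arithmetic is easy to get wrong by one page, so here it is as a small plain-Java helper. PageSketch is a hypothetical name; the 10000 limit is the default value of index.max_result_window, beyond which from + size requests are rejected unless the setting is raised.

```java
/** Hypothetical helper for 1-based page numbers. */
final class PageSketch {

    /** Default value of index.max_result_window. */
    static final int DEFAULT_MAX_RESULT_WINDOW = 10_000;

    /** Offset of the first hit on the given 1-based page. */
    static int from(int page, int size) {
        if (page < 1 || size < 1) {
            throw new IllegalArgumentException("page and size must be >= 1");
        }
        return Math.multiplyExact(page - 1, size);
    }

    /** True if from + size stays within the default result window. */
    static boolean fitsDefaultWindow(int page, int size) {
        return (long) from(page, size) + size <= DEFAULT_MAX_RESULT_WINDOW;
    }
}
```

With size 3, page 1 starts at offset 0 and page 3 at offset 6. For deep pagination past the result window, from/size is not the right tool; search_after or scroll is the usual alternative.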

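5.7 Aggregations (aggs): metrics

The aggregations object holds all the aggregations of a request, keyed by names you choose; several aggregations are simply several named entries in that object. aggregations can also be abbreviated as aggs.

- The aggregation in Kibana: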

GET wang_index_01/_search

{

"query": {

"match_all": {}

},

"aggs": {

"countUseName": {

"value_count": {

"field": "userName"

}

}

}

}

- The Java code:

SearchRequest searchRequest = new SearchRequest("wang_index_01");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

// value_count aggregation on userName

searchSourceBuilder.aggregation(AggregationBuilders.count("countUseName").field("userName"));

searchRequest.source(searchSourceBuilder);

// Execute the search

SearchResponse search = client.search(searchRequest, RequestOptions.DEFAULT);

// The metric is returned in the aggregations, not in the hits

ValueCount countUseName = search.getAggregations().get("countUseName");

System.out.println("countUseName = " + countUseName.getValue());

Metrics are the equivalent of MAX, MIN, AVG, SUM and COUNT in MySQL; each is computed over a single field.
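As a plain-Java analogy (no ES involved, names are made up), the JDK's LongSummaryStatistics computes the same five metrics over a list of values that the max, min, avg, sum and value_count aggregations compute over a field:

```java
import java.util.LongSummaryStatistics;
import java.util.stream.LongStream;

/** Hypothetical analogy: the five basic metrics computed over in-memory values. */
final class MetricsSketch {

    static LongSummaryStatistics summarize(long... values) {
        // Collects count, min, max, sum and average in one pass
        return LongStream.of(values).summaryStatistics();
    }
}
```

For the values 18, 20 and 25 this gives a count of 3, a minimum of 18, a maximum of 25, a sum of 63 and an average of 21.0.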

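5.8 Aggregations (aggs): buckets

Unlike a metric, a bucket aggregation does not compute a value over a field. Each bucket is defined by a criterion, and that criterion decides whether a document falls into the bucket. The maximum number of buckets in a single response is capped by the search.max_buckets setting (10000 by default in early 7.x releases, higher in later ones), which can be adjusted.

A bucket aggregation is much like GROUP BY in a database.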
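The GROUP BY analogy can be sketched in plain Java: group values by key, count each group, then order the groups by count descending and key ascending, which is the ordering a terms aggregation request can ask for with _count desc and _key asc. BucketSketch is a hypothetical name and nothing here calls ES.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical analogy for a terms aggregation: one bucket per distinct value. */
final class BucketSketch {

    static Map<String, Long> countByKey(List<String> values) {
        // Group: one counter per distinct value
        Map<String, Long> counts = new HashMap<>();
        for (String v : values) {
            counts.merge(v, 1L, Long::sum);
        }
        // Order: doc count descending, ties broken by key ascending
        List<Map.Entry<String, Long>> entries = new ArrayList<>(counts.entrySet());
        entries.sort((a, b) -> {
            int byCount = Long.compare(b.getValue(), a.getValue());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        Map<String, Long> ordered = new LinkedHashMap<>();
        for (Map.Entry<String, Long> e : entries) {
            ordered.put(e.getKey(), e.getValue());
        }
        return ordered;
    }
}
```

Each map entry plays the role of one bucket: the key is the bucket key and the value is its doc_count.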

- Kibana example:

GET wang_index/_search

{

"query": {

"match_all": {

"boost": 1

}

},

"aggregations": {

"genderCount": {

"terms": {

"field": "gender",

"size": 10,

"min_doc_count": 1,

"shard_min_doc_count": 0,

"show_term_doc_count_error": false,

"order": [

{

"_count": "desc"

},

{

"_key": "asc"

}

]

}

},

"balanceAvg": {

"avg": {

"field": "balance"

}

}

}

}

Note that only non-analyzed fields (keyword or numeric fields, for example) can be aggregated on.

- The corresponding Java code:

// 1. Create the search request for the target index

SearchRequest request = new SearchRequest("wang_index");

// 2. Create the search source builder

SearchSourceBuilder builder = new SearchSourceBuilder();

// 3. Build the query

MatchAllQueryBuilder matchAllQueryBuilder = QueryBuilders.matchAllQuery();

builder.query(matchAllQueryBuilder);

// Bucket the documents by gender (terms aggregation)

TermsAggregationBuilder genderAgg = AggregationBuilders.terms("genderCount").field("gender");

builder.aggregation(genderAgg);

// Average balance (metric aggregation)

AvgAggregationBuilder balanceAvg = AggregationBuilders.avg("balanceAvg").field("balance");

builder.aggregation(balanceAvg);

// 4. Attach the source builder to the request

request.source(builder);

// 5. Execute the search

SearchResponse searchResponse = client.search(request, RequestOptions.DEFAULT);

// 6. Process the response

SearchHit[] hits = searchResponse.getHits().getHits();

List<String> list = new ArrayList<String>();

for (SearchHit hit : hits) {

String hitString = hit.getSourceAsString();

System.out.println(hitString);

list.add(hitString);

}

Map<String, Aggregation> asMap = searchResponse.getAggregations().getAsMap();

System.out.println("asMap = " + asMap);

5.9 Highlighting

- Kibana example:

GET wang_index_01/_search

{

"query": {

"match_all": {

"boost": 1

}

},

"highlight": {

"pre_tags": [

""

],

"post_tags": [

""

],

"fields": {

"userName": {}

}

}

}

- The corresponding Java code:

SearchRequest searchRequest = new SearchRequest("wang_index_01");

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

HighlightBuilder highlightBuilder = new HighlightBuilder();

highlightBuilder.field("userName")

.preTags("\"\"")

.postTags("");

searchSourceBuilder.highlighter(highlightBuilder);

searchRequest.source(searchSourceBuilder);

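// Execute the search

SearchResponse search = client.search(searchRequest, RequestOptions.DEFAULT);

SearchHit[] hits = search.getHits().getHits();

for (SearchHit hit : hits) {

String sourceAsString = hit.getSourceAsString();

System.out.println("sourceAsString = " + sourceAsString);

// Highlighted fragments are returned separately from _source

System.out.println("highlightFields = " + hit.getHighlightFields());

}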

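Highlighting only really pays off once the front end renders the returned fragments. Note that the fragments come back per hit through hit.getHighlightFields(), separate from _source, and that a match_all query has no search terms to highlight; use a query on the highlighted field (a match on userName, say) to actually see them.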
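What the front end receives is simply the field text with each match wrapped in the pre and post tags; when no tags are configured, ES uses <em> and </em>. The naive plain-Java sketch below imitates that wrapping with exact substring replacement. It is an illustration with made-up names, not how ES analyzes or highlights text.

```java
/** Hypothetical illustration of the shape of a highlighted fragment. */
final class HighlightSketch {

    static String wrap(String text, String term, String preTag, String postTag) {
        if (term.isEmpty()) {
            return text;
        }
        // Wrap every exact occurrence of the term in the given tags
        return text.replace(term, preTag + term + postTag);
    }
}
```

The front end can then style the tag, for instance by colouring em elements, to make the matched text stand out.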