Integrating Ceph with Kubernetes (k8s)

Two references were used:

Reference 1: comprehensive coverage

Reference 2: RBD, fairly detailed

Ceph configuration

Run the following commands on the Ceph cluster:

[root@node1 ~]# ceph -s

cluster:

id: 365b02aa-db0c-11ec-b243-525400ce981f

health: HEALTH_OK

services:

mon: 3 daemons, quorum node1,node2,node3 (age 41h)

mgr: node2.dqryin(active, since 2d), standbys: node1.umzcuv

osd: 12 osds: 10 up (since 2d), 10 in (since 2d)

data:

pools: 2 pools, 33 pgs

objects: 1 objects, 19 B

usage: 10 GiB used, 4.9 TiB / 4.9 TiB avail

pgs: 33 active+clean

[root@node1 ~]# ceph mon stat

e11: 3 mons at {node1=[v2:172.70.10.181:3300/0,v1:172.70.10.181:6789/0],node2=[v2:172.70.10.182:3300/0,v1:172.70.10.182:6789/0],node3=[v2:172.70.10.183:3300/0,v1:172.70.10.183:6789/0]}, election epoch 100, leader 0 node1, quorum 0,1,2 node1,node2,node3

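Choosing the ceph-csi version to deploy

Three versions are involved: Ceph (Octopus), Kubernetes (v1.24.0), and ceph-csi.

The ceph-csi releases and the Kubernetes versions they were tested against are currently: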

| Ceph CSI Version | Container Orchestrator Name | Version Tested |
|---|---|---|
| v3.6.1 | Kubernetes | v1.21, v1.22, v1.23 |
| v3.6.0 | Kubernetes | v1.21, v1.22, v1.23 |
| v3.5.1 | Kubernetes | v1.21, v1.22, v1.23 |
| v3.5.0 | Kubernetes | v1.21, v1.22, v1.23 |
| v3.4.0 | Kubernetes | v1.20, v1.21, v1.22 |

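The cluster here runs Kubernetes 1.24, which the table above does not list as tested, so the latest ceph-csi release, 3.6.1, is used.

The Ceph release in use is Octopus. The Ceph to ceph-csi compatibility matrix is too long to reproduce here; see the ceph-csi plugin website.

In summary, deploying ceph-csi v3.6.1 is sufficient.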

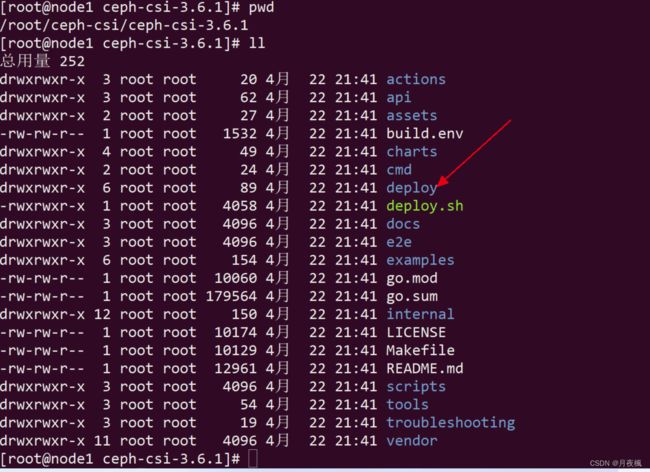

Downloading ceph-csi

Download the ceph-csi 3.6.1 source: download link

Contents of the deploy directory, including its rbd subdirectory:

[root@node1 ceph-csi-3.6.1]# tree -L 3 deploy

deploy

├── cephcsi

│ └── image

│ └── Dockerfile

├── cephfs

│ └── kubernetes

│ ├── csi-cephfsplugin-provisioner.yaml

│ ├── csi-cephfsplugin.yaml

│ ├── csi-config-map.yaml

│ ├── csidriver.yaml

│ ├── csi-nodeplugin-psp.yaml

│ ├── csi-nodeplugin-rbac.yaml

│ ├── csi-provisioner-psp.yaml

│ └── csi-provisioner-rbac.yaml

├── Makefile

├── nfs

│ └── kubernetes

│ ├── csi-config-map.yaml

│ ├── csidriver.yaml

│ ├── csi-nfsplugin-provisioner.yaml

│ ├── csi-nfsplugin.yaml

│ ├── csi-nodeplugin-psp.yaml

│ ├── csi-nodeplugin-rbac.yaml

│ ├── csi-provisioner-psp.yaml

│ └── csi-provisioner-rbac.yaml

├── rbd

│ └── kubernetes

│ ├── csi-config-map.yaml

│ ├── csidriver.yaml

│ ├── csi-nodeplugin-psp.yaml

│ ├── csi-nodeplugin-rbac.yaml

│ ├── csi-provisioner-psp.yaml

│ ├── csi-provisioner-rbac.yaml

│ ├── csi-rbdplugin-provisioner.yaml

│ └── csi-rbdplugin.yaml

└── scc.yaml

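Deploying RBD

- Copy all the YAML files under ceph-csi/deploy/rbd/kubernetes/ to a local working directory

- Create csi-config-map.yaml

clusterID is the Ceph cluster ID (the id field printed by ceph -s).

monitors lists the monitor addresses; they can be found in /var/lib/ceph/365b02aa-db0c-11ec-b243-525400ce981f/mon.node1/config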

---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    [
      {
        "clusterID": "365b02aa-db0c-11ec-b243-525400ce981f",
        "monitors": [
          "172.70.10.181:6789",
          "172.70.10.182:6789",
          "172.70.10.183:6789"
        ]
      }
    ]
metadata:
  name: ceph-csi-config
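The monitors list above can also be derived from the `ceph mon stat` output rather than typed by hand. The following is a minimal sketch, assuming the v1 (port 6789) endpoints are the ones wanted, as in the ConfigMap above. The variable names `mon_stat`, `monitors`, and `monitors_json` are illustrative, and the sample text is copied from the output shown earlier so the snippet runs without a cluster.

```shell
# Sample copied from the `ceph mon stat` output earlier in this write-up.
# On a live cluster this would be: mon_stat="$(ceph mon stat)"
mon_stat='e11: 3 mons at {node1=[v2:172.70.10.181:3300/0,v1:172.70.10.181:6789/0],node2=[v2:172.70.10.182:3300/0,v1:172.70.10.182:6789/0],node3=[v2:172.70.10.183:3300/0,v1:172.70.10.183:6789/0]}, election epoch 100, leader 0 node1, quorum 0,1,2 node1,node2,node3'

# Keep only the v1 endpoints, one per line, and drop the "v1:" prefix.
monitors="$(printf '%s\n' "$mon_stat" | grep -oE 'v1:[0-9.]+:[0-9]+' | sed 's/^v1://')"

# Quote each address and join with commas to form the JSON array body.
monitors_json="$(printf '%s\n' "$monitors" | sed 's/.*/"&"/' | paste -sd, -)"

printf '"monitors": [%s]\n' "$monitors_json"
```

The printed line can then be pasted into config.json; the clusterID still has to be taken from `ceph -s`.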

- Create csi-kms-config-map.yaml. This file is optional, but if it is skipped, the KMS-related sections in csi-rbdplugin-provisioner.yaml and csi-rbdplugin.yaml must be commented out.

---
apiVersion: v1
kind: ConfigMap
data:
  config.json: |-
    {}
metadata:
  name: ceph-csi-encryption-kms-config

- Create ceph-config-map.yaml

The ceph.conf key holds a copy of the Ceph cluster's configuration, that is, the corresponding content of /etc/ceph/ceph.conf

---
apiVersion: v1
kind: ConfigMap
data:
  ceph.conf: |
    [global]
    fsid = 365b02aa-db0c-11ec-b243-525400ce981f
    mon_host = [v2:172.70.10.181:3300/0,v1:172.70.10.181:6789/0] [v2:172.70.10.182:3300/0,v1:172.70.10.182:6789/0] [v2:172.70.10.183:3300/0,v1:172.70.10.183:6789/0]
    #public_network = 172.70.10.0/24
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx
  # keyring is a required key and its value should be empty
  keyring: |
metadata:
  name: ceph-config

- Create csi-rbd-secret.yaml

First get the admin key:

[root@node1 ~]# ceph auth get client.admin

exported keyring for client.admin

[client.admin]

key = AQDpR4xiIN/TJRAAIIv3DMRdm70RnsGs5/DW9g==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

Then create csi-rbd-secret.yaml:

---
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: default
stringData:
  # userID must name the Ceph user that owns userKey; the key below belongs to client.admin
  userID: admin
  userKey: AQDpR4xiIN/TJRAAIIv3DMRdm70RnsGs5/DW9g==
  encryptionPassphrase: test_passphrase

- Create the k8s_rbd block storage pool

[root@node1 ~]# ceph osd pool create k8s_rbd

pool 'k8s_rbd' created

[root@node1 ~]# rbd pool init k8s_rbd

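Create a dedicated client user for Kubernetes (in practice the admin account can be used instead). The pool named in the caps must be the pool created above:

ceph auth get-or-create client.kubernetes mon 'profile rbd' osd 'profile rbd pool=k8s_rbd' mgr 'profile rbd pool=k8s_rbd'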
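Because the pool name appears in the pool creation, in the user caps, and later in the StorageClass, a mismatch is easy to introduce. As a small sketch, the command can be assembled from a single variable; `pool`, `client`, and `cmd` are illustrative names, and the snippet only prints the command rather than running it.

```shell
# Single source of truth for the pool name used in this write-up.
pool="k8s_rbd"
client="client.kubernetes"

# Build the same get-or-create command as above, with the pool filled in consistently.
cmd="ceph auth get-or-create ${client} mon 'profile rbd' osd 'profile rbd pool=${pool}' mgr 'profile rbd pool=${pool}'"

# Print for review; run it on a Ceph admin node once it looks right.
printf '%s\n' "$cmd"
```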

- Apply all of the files above with kubectl apply -f

The files are:

csi-config-map.yaml

csi-kms-config-map.yaml (optional)

ceph-config-map.yaml

csi-rbd-secret.yaml

- Create the CSI plugin

kubectl apply -f csi-provisioner-rbac.yaml

kubectl apply -f csi-nodeplugin-rbac.yaml

When the plugin manifests are applied, the Google-hosted images cannot be pulled from this network. The affected images are mainly:

k8s.gcr.io/sig-storage/csi-provisioner:v3.1.0

k8s.gcr.io/sig-storage/csi-snapshotter:v5.0.1

k8s.gcr.io/sig-storage/csi-attacher:v3.4.0

k8s.gcr.io/sig-storage/csi-resizer:v1.4.0

k8s.gcr.io/sig-storage/csi-node-driver-registrar:v2.4.0

In csi-rbdplugin-provisioner.yaml and csi-rbdplugin.yaml, replace k8s.gcr.io/sig-storage with registry.aliyuncs.com/google_containers
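The registry substitution can be scripted with sed. The sketch below runs against a throwaway file holding two sample image lines, so it is safe to try anywhere; the temporary path and the variable names are illustrative, and on a real checkout the same sed expression would be pointed at csi-rbdplugin-provisioner.yaml and csi-rbdplugin.yaml.

```shell
# Work on a throwaway copy so the sketch is safe to run anywhere.
workdir="$(mktemp -d)"
manifest="$workdir/csi-rbdplugin-provisioner.yaml"

# Two sample image lines standing in for the real manifest content.
cat > "$manifest" <<'EOF'
image: k8s.gcr.io/sig-storage/csi-provisioner:v3.1.0
image: k8s.gcr.io/sig-storage/csi-attacher:v3.4.0
EOF

# Rewrite the registry prefix; '#' is the sed delimiter because the paths contain '/'.
sed 's#k8s.gcr.io/sig-storage#registry.aliyuncs.com/google_containers#g' \
  "$manifest" > "$manifest.new" && mv "$manifest.new" "$manifest"

# Show the result for review.
cat "$manifest"
```

Writing to a new file and moving it into place avoids relying on `sed -i`, whose syntax differs between GNU and BSD sed.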

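Then apply the plugin manifests (these are the files that reference the images; the RBAC files were already applied above):

kubectl apply -f csi-rbdplugin-provisioner.yaml

kubectl apply -f csi-rbdplugin.yaml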

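Kubernetes 1.24.0 removed the built-in Docker engine support (dockershim), so images are listed with crictl instead of docker. After the apply completes, the image list looks like this: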

[root@node1 kubernetes]# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/calico/cni v3.23.1 90d97aa939bbf 111MB

docker.io/calico/node v3.23.1 fbfd04bbb7f47 76.6MB

docker.io/calico/pod2daemon-flexvol v3.23.1 01dda8bd1b91e 8.67MB

docker.io/library/nginx latest de2543b9436b7 56.7MB

quay.io/tigera/operator v1.27.1 02245817b973b 60.3MB

registry.aliyuncs.com/google_containers/coredns v1.8.6 a4ca41631cc7a 13.6MB

registry.aliyuncs.com/google_containers/etcd 3.5.3-0 aebe758cef4cd 102MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.24.0 529072250ccc6 33.8MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.24.0 88784fb4ac2f6 31MB

registry.aliyuncs.com/google_containers/kube-proxy v1.24.0 77b49675beae1 39.5MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.24.0 e3ed7dee73e93 15.5MB

registry.aliyuncs.com/google_containers/pause 3.6 6270bb605e12e 302kB

registry.aliyuncs.com/google_containers/pause 3.7 221177c6082a8 311kB

[root@node1 kubernetes]#

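In this Kubernetes 1.24.0 setup kube-proxy uses ipvs rather than iptables, so it is best to stop the host's iptables service; otherwise the situation below can occur, where the controller container keeps restarting because it cannot reach the API server: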

[root@node5 ~]# crictl pods

POD ID CREATED STATE NAME NAMESPACE ATTEMPT RUNTIME

5d6dd91fd83e2 5 hours ago Ready csi-rbdplugin-provisioner-c6d7486dd-2jk5w default 0 (default)

354b1a8fd8b52 7 hours ago Ready csi-rbdplugin-wjpzd default 0 (default)

198dc5bf556df 5 days ago Ready calico-apiserver-6d4dd4bcf9-n8zgk calico-apiserver 0 (default)

244e05f6f9c67 5 days ago Ready calico-typha-b76b84965-bhsxn calico-system 0 (default)

4813ce009d806 5 days ago Ready calico-node-r6mv8 calico-system 0 (default)

2bca356d7a28a 5 days ago Ready kube-proxy-89lgc kube-system 0 (default)

[root@node5 ~]# crictl ps -a

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

b798b5f047428 89f8fb0f77c15 4 hours ago Running csi-rbdplugin-controller 19 5d6dd91fd83e2

ed133e74dec67 89f8fb0f77c15 4 hours ago Exited csi-rbdplugin-controller 18 5d6dd91fd83e2

8ceafecc7d9f8 89f8fb0f77c15 5 hours ago Running liveness-prometheus 0 5d6dd91fd83e2

7ede68a34c5ab 89f8fb0f77c15 5 hours ago Running csi-rbdplugin 0 5d6dd91fd83e2

a3afb73c6ed92 551fd931edd5e 5 hours ago Running csi-resizer 0 5d6dd91fd83e2

753ded0a3a413 03e115718d258 5 hours ago Running csi-attacher 0 5d6dd91fd83e2

825eaf4f07fa8 53ae5b88a3380 5 hours ago Running csi-snapshotter 0 5d6dd91fd83e2

2abb44295907a c3dfb4b04796b 5 hours ago Running csi-provisioner 0 5d6dd91fd83e2

a9e6846498a1b 89f8fb0f77c15 7 hours ago Running liveness-prometheus 0 354b1a8fd8b52

39638d5c0961a 89f8fb0f77c15 7 hours ago Running csi-rbdplugin 0 354b1a8fd8b52

1b12e9d273f68 f45c8a305a0bb 7 hours ago Running driver-registrar 0 354b1a8fd8b52

797228d6b31ed 3bcf34f7d7d8d 5 days ago Running calico-apiserver 0 198dc5bf556df

dc4bae329b42f fbfd04bbb7f47 5 days ago Running calico-node 0 4813ce009d806

d5d0be4a3ef2f 90d97aa939bbf 5 days ago Exited install-cni 0 4813ce009d806

8c5853e9a0905 4ac3a9100f349 5 days ago Running calico-typha 0 244e05f6f9c67

12f2be66fd320 01dda8bd1b91e 5 days ago Exited flexvol-driver 0 4813ce009d806

f4663a0650d73 77b49675beae1 5 days ago Running kube-proxy 0 2bca356d7a28a

[root@node5 ~]# crictl logs ed133e74dec67

I0531 08:37:48.420227 1 cephcsi.go:180] Driver version: v3.6.1 and Git version: 1bd6297ecbdf11f1ebe6a4b20f8963b4bcebe13b

I0531 08:37:48.420443 1 cephcsi.go:229] Starting driver type: controller with name: rbd.csi.ceph.com

E0531 08:37:48.422369 1 controller.go:70] failed to create manager Get "https://10.96.0.1:443/api?timeout=32s": dial tcp 10.96.0.1:443: connect: no route to host

E0531 08:37:48.422450 1 cephcsi.go:296] Get "https://10.96.0.1:443/api?timeout=32s": dial tcp 10.96.0.1:443: connect: no route to host

Stopping the iptables service resolves the problem above.

- Create the StorageClass

Create storage.class.yaml:

---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-rbd-sc
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: 365b02aa-db0c-11ec-b243-525400ce981f
  pool: k8s_rbd # name of the pool created earlier
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: default
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: default
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: default
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard

Create rbd-pvc.yaml:

---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: csi-rbd-sc

- Use the PVC

Create nginx.yaml:

[root@node1 ~]# cat nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          volumeMounts:
            - name: rbd
              mountPath: /usr/share/rbd
      volumes:
        - name: rbd
          persistentVolumeClaim: # the PVC to mount
            claimName: rbd-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: ngx-service
  labels:
    app: nginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
      nodePort: 32500

Deploying the file system (CephFS)

- Create the file system on the Ceph cluster

[root@node1 ~]# ceph osd pool create cephfs_metadata 32 32

pool 'cephfs_metadata' created

[root@node1 ~]# ceph osd pool create cephfs_data 32 32

pool 'cephfs_data' created

[root@node1 ~]# ceph fs new cephfs cephfs_metadata cephfs_data

new fs with metadata pool 3 and data pool 4

[root@node1 ~]# ceph -s

cluster:

id: 365b02aa-db0c-11ec-b243-525400ce981f

health: HEALTH_ERR

1 filesystem is offline

1 filesystem is online with fewer MDS than max_mds

services:

mon: 3 daemons, quorum node1,node2,node3 (age 2d)

mgr: node2.dqryin(active, since 6d), standbys: node1.umzcuv

mds: cephfs:0

osd: 12 osds: 10 up (since 6d), 10 in (since 6d)

data:

pools: 4 pools, 97 pgs

objects: 23 objects, 15 MiB

usage: 10 GiB used, 4.9 TiB / 4.9 TiB avail

pgs: 97 active+clean

[root@node1 ~]# ceph auth get client.admin

exported keyring for client.admin

[client.admin]

key = AQDpR4xiIN/TJRAAIIv3DMRdm70RnsGs5/DW9g==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

[root@node1 ~]# ceph mon stat

e11: 3 mons at {node1=[v2:172.70.10.181:3300/0,v1:172.70.10.181:6789/0],node2=[v2:172.70.10.182:3300/0,v1:172.70.10.182:6789/0],node3=[v2:172.70.10.183:3300/0,v1:172.70.10.183:6789/0]}, election epoch 104, leader 0 node1, quorum 0,1,2 node1,node2,node3

[root@node1 mon.node1]# cat config

# minimal ceph.conf for 365b02aa-db0c-11ec-b243-525400ce981f

[global]

fsid = 365b02aa-db0c-11ec-b243-525400ce981f

mon_host = [v2:172.70.10.181:3300/0,v1:172.70.10.181:6789/0] [v2:172.70.10.182:3300/0,v1:172.70.10.182:6789/0] [v2:172.70.10.183:3300/0,v1:172.70.10.183:6789/0]

# The next step is required: CephFS can only serve clients once its MDS daemons are running. It is generally best to run this from inside cephadm shell

[root@node1 kubernetes]# cephadm shell

Inferring fsid 365b02aa-db0c-11ec-b243-525400ce981f

Inferring config /var/lib/ceph/365b02aa-db0c-11ec-b243-525400ce981f/mon.node1/config

Using recent ceph image quay.io/ceph/ceph@sha256:f2822b57d72d07e6352962dc830d2fa93dd8558b725e2468ec0d07af7b14c95d

[ceph: root@node1 /]# ceph orch apply mds cephfs --placement="3 node1 node2 node3"

Scheduled mds.cephfs update...
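Before moving on to the Kubernetes side it helps to confirm that the cluster has left the HEALTH_ERR state shown above. The following sketch splits a `ceph health` line into its status word and detail; the sample string is the HEALTH_ERR line reported in this write-up, and `health`, `status`, and `verdict` are illustrative names.

```shell
# Sample line; on a live cluster this would be: health="$(ceph health)"
health='HEALTH_ERR 1 filesystem is offline; 1 filesystem is online with fewer MDS than max_mds'

# The first word is the status; everything after the first space is the detail.
status="${health%% *}"

case "$status" in
  HEALTH_OK) verdict="ready" ;;
  *)         verdict="not ready: ${health#* }" ;;
esac

printf '%s\n' "$verdict"
```

With the sample input the verdict stays "not ready" until the MDS daemons scheduled above are actually running.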

- The CephFS manifests are in deploy/cephfs/kubernetes of the downloaded source tree

- Reuse the ceph-csi-config ConfigMap that was created for RBD

- Install

In vim, rewrite the image registry in csi-cephfsplugin-provisioner.yaml and csi-cephfsplugin.yaml with the substitution

:%s#k8s.gcr.io/sig-storage#registry.aliyuncs.com/google_containers#g

kubectl apply -f deploy/cephfs/kubernetes/csi-provisioner-rbac.yaml

kubectl apply -f deploy/cephfs/kubernetes/csi-nodeplugin-rbac.yaml

kubectl apply -f deploy/cephfs/kubernetes/csi-cephfsplugin-provisioner.yaml

kubectl apply -f deploy/cephfs/kubernetes/csi-cephfsplugin.yaml

- Switch to the examples/cephfs/ directory and start deploying the client side

[root@node1 fs]# cat secret.yaml

---
apiVersion: v1
kind: Secret
metadata:
  name: csi-cephfs-secret
  namespace: default
stringData:
  # Required for statically provisioned volumes
  userID: admin
  userKey: AQDpR4xiIN/TJRAAIIv3DMRdm70RnsGs5/DW9g==
  # Required for dynamically provisioned volumes
  adminID: admin
  adminKey: AQDpR4xiIN/TJRAAIIv3DMRdm70RnsGs5/DW9g==

[root@node1 fs]# kubectl apply -f secret.yaml

secret/csi-cephfs-secret created

[root@node1 fs]# k get secret

NAME TYPE DATA AGE

csi-cephfs-secret Opaque 4 11s

csi-rbd-secret Opaque 2 28h

- Problems encountered

[root@node1 ~]# k get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

fs-pvc Pending csi-cephfs-sc 27m

rbd-pvc Bound pvc-80d393f0-8664-4d70-8e0d-d7a0550d4417 10Gi RWO csi-rbd-sc 7h22m

[root@node1 ~]# kd pvc/fs-pvc

Name: fs-pvc

Namespace: default

StorageClass: csi-cephfs-sc

Status: Pending

Volume:

Labels: <none>

Annotations: volume.beta.kubernetes.io/storage-provisioner: cephfs.csi.ceph.com

volume.kubernetes.io/storage-provisioner: cephfs.csi.ceph.com

Finalizers: [kubernetes.io/pvc-protection]

Capacity:

Access Modes:

VolumeMode: Filesystem

Used By: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Provisioning 3m55s (x14 over 27m) cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-794b8d9f95-jwmw4_ac816be6-acdb-4447-a41a-e034c43d1b2b External provisioner is provisioning volume for claim "default/fs-pvc"

Warning ProvisioningFailed 3m55s (x4 over 24m) cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-794b8d9f95-jwmw4_ac816be6-acdb-4447-a41a-e034c43d1b2b failed to provision volume with StorageClass "csi-cephfs-sc": rpc error: code = DeadlineExceeded desc = context deadline exceeded

Warning ProvisioningFailed 3m55s (x10 over 24m) cephfs.csi.ceph.com_csi-cephfsplugin-provisioner-794b8d9f95-jwmw4_ac816be6-acdb-4447-a41a-e034c43d1b2b failed to provision volume with StorageClass "csi-cephfs-sc": rpc error: code = Aborted desc = an operation with the given Volume ID pvc-aaed8aa7-c202-44c2-8def-f3479ea27ffe already exists

Normal ExternalProvisioning 2m26s (x102 over 27m) persistentvolume-controller waiting for a volume to be created, either by external provisioner "cephfs.csi.ceph.com" or manually created by system administrator

# On the Ceph cluster, check the cluster health

[root@node1 fs]# ceph health

HEALTH_ERR 1 filesystem is offline; 1 filesystem is online with fewer MDS than max_mds

# The problem occurs because no MDS has been started for CephFS; starting the MDS as shown below restores normal operation

[root@node1 kubernetes]# cephadm shell

Inferring fsid 365b02aa-db0c-11ec-b243-525400ce981f

Inferring config /var/lib/ceph/365b02aa-db0c-11ec-b243-525400ce981f/mon.node1/config

Using recent ceph image quay.io/ceph/ceph@sha256:f2822b57d72d07e6352962dc830d2fa93dd8558b725e2468ec0d07af7b14c95d

[ceph: root@node1 /]# ceph orch apply mds cephfs --placement="3 node1 node2 node3"

Scheduled mds.cephfs update...

# Back in the Kubernetes environment

[root@node1 ~]# k delete pvc/fs-pvc

persistentvolumeclaim "fs-pvc" deleted

[root@node1 fs]# k apply -f fs-pvc.yaml

persistentvolumeclaim/fs-pvc created

[root@node1 fs]# k get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

fs-pvc Bound pvc-01d43d98-3375-4642-9bd2-b4818ce59f77 11Gi RWX csi-cephfs-sc 6s

rbd-pvc Bound pvc-80d393f0-8664-4d70-8e0d-d7a0550d4417 10Gi RWO csi-rbd-sc 7h23m
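A claim stuck in Pending, as fs-pvc was before the MDS was started, is easy to miss in a longer listing. As a small sketch, the STATUS column of the `k get pvc` output can be filtered with awk; the sample table is copied from the failing state shown above, and `pvc_table` and `pending` are illustrative names.

```shell
# Sample copied from the failing state above; live: pvc_table="$(kubectl get pvc)"
pvc_table='NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
fs-pvc Pending csi-cephfs-sc 27m
rbd-pvc Bound pvc-80d393f0-8664-4d70-8e0d-d7a0550d4417 10Gi RWO csi-rbd-sc 7h22m'

# Skip the header row and print the name of every claim whose STATUS is Pending.
pending="$(printf '%s\n' "$pvc_table" | awk 'NR > 1 && $2 == "Pending" { print $1 }')"

printf 'pending claims: %s\n' "${pending:-none}"
```

Any name printed here is a candidate for the describe command used above to read its provisioning events.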

- Mount CephFS

[root@node1 fs]# cat fs-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: fs-nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          volumeMounts:
            - name: fs
              mountPath: /usr/share/rbd
      volumes:
        - name: fs
          persistentVolumeClaim: # the PVC to mount
            claimName: fs-pvc
---
apiVersion: v1
kind: Service
metadata:
  name: fs-nginx-service
  labels:
    app: nginx
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
      nodePort: 32511

Pod status:

[root@node1 fs]# pods

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-2wh9v 3/3 Running 0 85m

csi-cephfsplugin-dwswx 3/3 Running 0 85m

csi-cephfsplugin-n5js6 3/3 Running 0 85m

csi-cephfsplugin-provisioner-794b8d9f95-jwmw4 6/6 Running 0 85m

csi-cephfsplugin-provisioner-794b8d9f95-rprrp 6/6 Running 0 85m

csi-cephfsplugin-provisioner-794b8d9f95-sd848 6/6 Running 0 85m

csi-rbdplugin-provisioner-c6d7486dd-2jk5w 7/7 Running 19 (9h ago) 10h

csi-rbdplugin-provisioner-c6d7486dd-mk68w 7/7 Running 2 (8h ago) 12h

csi-rbdplugin-provisioner-c6d7486dd-qlgkf 7/7 Running 0 12h

csi-rbdplugin-tthrg 3/3 Running 0 12h

csi-rbdplugin-vtlbs 3/3 Running 0 12h

csi-rbdplugin-wjpzd 3/3 Running 0 12h

fs-nginx-6d86d5d84d-77gvt 1/1 Running 0 4m16s

fs-nginx-6d86d5d84d-b9twd 1/1 Running 0 4m16s

fs-nginx-6d86d5d84d-s4v85 1/1 Running 0 4m16s

my-nginx-549466b985-nzkxl 1/1 Running 0 7h29m

Enter one of the pods and create the file fs.txt in the shared directory:

[root@node1 fs]# k exec -it pod/fs-nginx-6d86d5d84d-77gvt -- /bin/bash

root@fs-nginx-6d86d5d84d-77gvt:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 50G 15G 36G 30% /

tmpfs 64M 0 64M 0% /dev

tmpfs 7.9G 0 7.9G 0% /sys/fs/cgroup

shm 64M 0 64M 0% /dev/shm

/dev/mapper/centos-root 50G 15G 36G 30% /etc/hosts

172.70.10.181:6789,172.70.10.182:6789,172.70.10.183:6789:/volumes/csi/csi-vol-194d8e9e-e108-11ec-a020-26b729f874ac/372a597b-867d-40e0-b246-6537208c8a9f 11G 0 11G 0% /usr/share/rbd

tmpfs 16G 12K 16G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 7.9G 0 7.9G 0% /proc/acpi

tmpfs 7.9G 0 7.9G 0% /proc/scsi

tmpfs 7.9G 0 7.9G 0% /sys/firmware

root@fs-nginx-6d86d5d84d-77gvt:/# cd /usr/share/rbd

root@fs-nginx-6d86d5d84d-77gvt:/usr/share/rbd# ls

root@fs-nginx-6d86d5d84d-77gvt:/usr/share/rbd# echo "cephfs" > fs.txt

root@fs-nginx-6d86d5d84d-77gvt:/usr/share/rbd# cat fs.txt

cephfs

root@fs-nginx-6d86d5d84d-77gvt:/usr/share/rbd# exit

exit

Enter another pod; the same fs.txt is visible in the shared directory:

[root@node1 fs]# k exec -it pod/fs-nginx-6d86d5d84d-b9twd -- /bin/bash

root@fs-nginx-6d86d5d84d-b9twd:/# cd /usr/share/rbd/

root@fs-nginx-6d86d5d84d-b9twd:/usr/share/rbd# ls

fs.txt

root@fs-nginx-6d86d5d84d-b9twd:/usr/share/rbd# cat fs.txt

cephfs

root@fs-nginx-6d86d5d84d-b9twd:/usr/share/rbd# exit

exit

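This completes the integration of RBD and CephFS.

Object storage

Ceph object storage already exposes a layer-7 (HTTP) interface, so it can be accessed directly over the S3 protocol and does not need to be integrated through CSI.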