Integrating Canal + RabbitMQ + Redis

1. Design

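When certain tables in the MySQL database change, canal parses the database's incremental log (binlog) and sends the change information to a RabbitMQ queue. A service listening on that queue parses each new message and, according to business rules, deletes the corresponding Redis cache.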
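To make the flow concrete, here is a minimal in-memory sketch of the same pipeline. It assumes plain JDK stand-ins: a `BlockingQueue` plays the RabbitMQ queue, a `ConcurrentHashMap` plays Redis, and the hypothetical `TableChange` event stands in for a parsed binlog entry. It illustrates only the invalidation idea, not the canal or RabbitMQ APIs.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical event: which schema/table changed (what the producer publishes)
final class TableChange {
    final String schema;
    final String table;

    TableChange(String schema, String table) {
        this.schema = schema;
        this.table = table;
    }
}

// Hypothetical in-memory stand-ins for the three moving parts of the design
final class InvalidationPipeline {
    // plays the role of the RabbitMQ queue between producer and consumer
    final BlockingQueue<TableChange> queue = new LinkedBlockingQueue<>();
    // plays the role of Redis: cache name -> cached payload
    final Map<String, String> cache = new ConcurrentHashMap<>();
    // business rule: which cache to clear when a given table changes
    final Map<String, String> tableToCache = new ConcurrentHashMap<>();

    // producer side: called for every change parsed from the binlog
    void onBinlogChange(String schema, String table) {
        queue.offer(new TableChange(schema, table));
    }

    // consumer side: drain pending messages and evict the mapped caches; returns evictions
    int consumeAll() {
        int evicted = 0;
        TableChange change;
        while ((change = queue.poll()) != null) {
            String cacheName = tableToCache.get(change.table);
            if (cacheName != null && cache.remove(cacheName) != null) {
                evicted++;
            }
        }
        return evicted;
    }
}
```

Keeping the table-to-cache mapping on the consumer side keeps the producer generic: it only reports which table changed, and each consumer decides what that means for its own caches.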

2. Preparation

MySQL configuration

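1. Edit the MySQL configuration file (mysql.cnf / my.cnf, or my.ini on Windows), add the following under the [mysqld] section, then restart MySQL:

[mysqld]
log-bin=mysql-bin   # enable binlog writing
binlog-format=ROW   # use ROW format, which canal requires
server_id=1         # must not be the same as canal's slaveId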

2. Check that binlog is enabled; the Value column should be ON:

SHOW VARIABLES LIKE 'log_bin';

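3. Create an account for canal and grant privileges

CREATE USER canal IDENTIFIED BY 'canal';

-- grants all privileges; canal's quick-start guide grants only SELECT, REPLICATION SLAVE, REPLICATION CLIENT
GRANT ALL PRIVILEGES ON *.* TO 'canal'@'%';

FLUSH PRIVILEGES;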

Canal configuration

1. Download canal

Download the canal.deployer package from https://github.com/alibaba/canal/releases

2. Edit the configuration file conf/example/instance.properties

## mysql serverId; since v1.0.26 the slaveId is generated automatically, so it can stay commented out
# canal.instance.mysql.slaveId=0

# database address
canal.instance.master.address=127.0.0.1:3306
# binlog file to start from (File column of SHOW MASTER STATUS)
canal.instance.master.journal.name=mysql-bin.000001
# binlog offset to start from when connecting to the master (Position column of SHOW MASTER STATUS)
canal.instance.master.position=154
# binlog timestamp to start from when connecting to the master
canal.instance.master.timestamp=
canal.instance.master.gtid=

# username/password of the account granted on the MySQL server (must match CREATE USER above)
canal.instance.dbUsername=canal
canal.instance.dbPassword=canal
# character set
canal.instance.connectionCharset = UTF-8
# enable druid Decrypt database password
canal.instance.enableDruid=false

# table regex: .*\\..* monitors all tables; specific tables can also be listed, separated by commas
canal.instance.filter.regex=.*\\..*
# blacklist of tables excluded from parsing, separated by commas
canal.instance.filter.black.regex=
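Both `canal.instance.filter.regex` and the client-side `canal.filter` used later are comma-separated lists of regular expressions matched against `schema.table`. The sketch below approximates that matching with `java.util.regex` to show which tables a given expression selects. `TableFilter` is a hypothetical simplification, not canal's own filter class, which may differ in details such as case handling.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical approximation of a comma-separated table filter such as "esp.user,esp.mail"
final class TableFilter {
    private final List<Pattern> patterns = new ArrayList<>();

    TableFilter(String filterExpression) {
        for (String part : filterExpression.split(",")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                patterns.add(Pattern.compile(trimmed));
            }
        }
    }

    // true if "schema.table" fully matches any configured pattern
    boolean accepts(String schema, String table) {
        String fullName = schema + "." + table;
        for (Pattern p : patterns) {
            if (p.matcher(fullName).matches()) {
                return true;
            }
        }
        return false;
    }
}
```

Note that in a properties file `\\` is an escape, so `.*\\..*` reaches the regex engine as `.*\..*`, exactly like the Java string literal `".*\\..*"`.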

3. Start canal

On Windows, run startup.bat in the bin directory (startup.sh on Linux/macOS).

3. Java producer

1. Add the Maven dependency

<dependency>
    <groupId>com.alibaba.otter</groupId>
    <artifactId>canal.client</artifactId>
    <version>1.1.4</version>
</dependency>

2. Create the canal project (producer) with Spring Boot

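3. Configuration (application.properties)

server.port=8001

# RabbitMQ connection
spring.rabbitmq.host=116.62.181.226
spring.rabbitmq.port=5672
spring.rabbitmq.username=admin
spring.rabbitmq.password=123456
spring.rabbitmq.virtual-host=/

# canal connection
canal.hostname=127.0.0.1
canal.port=11111
canal.destination=example
canal.username=
# the key is spelled "paswword" to match @Value("${canal.paswword}") in CanalConfig
canal.paswword=
# tables to subscribe to (schema.table regexes, comma-separated); overrides canal.instance.filter.regex
canal.filter=esp.user,esp.mail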

4. Classes in the canal package

Database class

package com.tc.canal;

import lombok.Data;
import lombok.NoArgsConstructor;

import java.io.Serializable;
import java.util.LinkedList;

@Data
@NoArgsConstructor
public class Database implements Serializable {
    // database (schema) name
    private String databaseName;
    // table name
    private String tableName;
    // columns of the changed row
    private LinkedList<Column> rowDate;

    public Database(String databaseName, String tableName) {
        this.databaseName = databaseName;
        this.tableName = tableName;
    }
}

Column class

package com.tc.canal;

import lombok.Data;
import lombok.NoArgsConstructor;

import java.io.Serializable;

@Data
@NoArgsConstructor
public class Column implements Serializable {
    // column name
    private String columnName;
    // column value
    private String rowDate;
    // whether the column is part of the primary key
    private Boolean isKey;
    // whether the column was changed by this statement
    private Boolean update;

    public Column(String columnName, String rowDate, Boolean isKey, Boolean update) {
        this.columnName = columnName;
        this.rowDate = rowDate;
        this.isKey = isKey;
        this.update = update;
    }
}

CanalConfig class

package com.tc.canal;

import com.alibaba.otter.canal.client.CanalConnector;
import com.alibaba.otter.canal.client.CanalConnectors;
import com.alibaba.otter.canal.protocol.Message;
import com.tc.sendDataInfo.RabbitService;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.DisposableBean;
import org.springframework.beans.factory.InitializingBean;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.stereotype.Component;

import java.net.InetSocketAddress;

@Component
@Slf4j
public class CanalConfig implements InitializingBean, DisposableBean {

    @Value("${canal.hostname}")
    private String hostname;

    @Value("${canal.port}")
    private Integer port;

    @Value("${canal.destination}")
    private String destination;

    @Value("${canal.username}")
    private String username;

    @Value("${canal.paswword}")
    private String password;

    @Value("${canal.filter}")
    private String filter;

    // maximum number of entries fetched per batch
    private static final int BATCH_SIZE = 100;

    @Autowired
    private RabbitService rabbitService;

    private Thread worker;

    @Override
    public void afterPropertiesSet() {
        // poll canal on its own thread: an endless loop here would block Spring startup
        worker = new Thread(this::listen, "canal-client");
        worker.start();
    }

    @Override
    public void destroy() {
        // stop the polling loop when the Spring context shuts down
        if (worker != null) {
            worker.interrupt();
        }
    }

    private void listen() {
        log.info("----------------- canal listener started -----------------");
        CanalConnector connector = CanalConnectors.newSingleConnector(
                new InetSocketAddress(hostname, port), destination, username, password);
        try {
            // open the connection
            connector.connect();
            // Subscribe to the configured tables. The expression passed here replaces
            // canal.instance.filter.regex from instance.properties, so calling
            // connector.subscribe(".*\\..*") would effectively discard the server-side filter.
            connector.subscribe(filter);
            // roll back to the last un-acked position so the next fetch resumes from there
            connector.rollback();
            while (!Thread.currentThread().isInterrupted()) {
                // fetch up to BATCH_SIZE entries without acknowledging them
                Message message = connector.getWithoutAck(BATCH_SIZE);
                long batchId = message.getId();
                int size = message.getEntries().size();
                if (batchId == -1 || size == 0) {
                    // no data: wait 2 seconds before polling again
                    Thread.sleep(2000);
                } else {
                    try {
                        rabbitService.sendData(message.getEntries());
                    } catch (Exception e) {
                        // processing failed: roll back so this batch is delivered again
                        log.error("failed to process batch {}, rolling back", batchId, e);
                        connector.rollback(batchId);
                        continue;
                    }
                }
                // acknowledge the batch; every message with id <= batchId is confirmed
                connector.ack(batchId);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } catch (Exception e) {
            log.error("canal client stopped", e);
        } finally {
            connector.disconnect();
        }
    }
}
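The loop relies on canal's get-without-ack / ack / rollback contract: a fetched batch stays pending until it is acknowledged, and a rollback makes it available again, which gives at-least-once delivery. `FakeBatchSource` and `PollingLoop` below are hypothetical stand-ins (not the canal client API) that mimic that contract in memory, so the retry behaviour can be seen without a running canal server.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical in-memory source mimicking getWithoutAck / ack / rollback
final class FakeBatchSource {
    private final List<String> events;
    private int acked = 0;    // position up to which events are confirmed
    private int fetched = 0;  // position up to which events were handed out

    FakeBatchSource(List<String> events) {
        this.events = new ArrayList<>(events);
    }

    // hands out up to batchSize events after the last fetch, without confirming them
    List<String> getWithoutAck(int batchSize) {
        int end = Math.min(events.size(), fetched + batchSize);
        List<String> batch = new ArrayList<>(events.subList(fetched, end));
        fetched = end;
        return batch;
    }

    // confirms everything handed out so far
    void ack() {
        acked = fetched;
    }

    // forgets un-acked fetches so the next call returns them again
    void rollback() {
        fetched = acked;
    }
}

final class PollingLoop {
    // Same shape as the canal loop: fetch, process, ack on success, rollback on failure.
    // Returns the events of successfully processed batches, in order.
    static List<String> drain(FakeBatchSource source, int batchSize, Predicate<String> handler) {
        List<String> processed = new ArrayList<>();
        while (true) {
            List<String> batch = source.getWithoutAck(batchSize);
            if (batch.isEmpty()) {
                return processed; // the real loop sleeps and polls again instead
            }
            boolean ok = true;
            for (String event : batch) {
                if (!handler.test(event)) {
                    ok = false;
                    break;
                }
            }
            if (ok) {
                processed.addAll(batch);
                source.ack();
            } else {
                source.rollback(); // the whole batch is delivered again
            }
        }
    }
}
```

Because a rollback redelivers the whole batch, events before the failing one are handled twice, so the consumer side should tolerate duplicates (deleting a cache key twice is harmless). An event that always fails would be retried forever; a real deployment would likely want a retry limit or dead-letter handling.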

5. Classes in the mq package

RabbitmqConfig

package com.tc.mq;

import org.springframework.amqp.core.Binding;
import org.springframework.amqp.core.BindingBuilder;
import org.springframework.amqp.core.Queue;
import org.springframework.amqp.core.TopicExchange;
import org.springframework.amqp.rabbit.config.SimpleRabbitListenerContainerFactory;
import org.springframework.amqp.rabbit.connection.ConnectionFactory;
import org.springframework.amqp.rabbit.core.RabbitTemplate;
import org.springframework.amqp.support.converter.Jackson2JsonMessageConverter;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class RabbitmqConfig {

    // queues
    // receives schema, table and row data
    public static final String QUEUE_TOPIC1 = "queue_topic1";
    // receives only schema and table names (used for cache invalidation)
    public static final String QUEUE_TOPIC2 = "queue_topic2";
    // exchange
    public static final String EXCHANGE_TOPIC = "exchange_topic";
    // routing keys
    public static final String FS_EVENT_ROUTING_KEY = "database_all";
    public static final String FS_EVENT_ROUTING_KEY_ONE = "database_one";

    @Bean
    public Queue queueTopic1() {
        return new Queue(QUEUE_TOPIC1);
    }

    @Bean
    public Queue queueTopic2() {
        return new Queue(QUEUE_TOPIC2);
    }

    // topic exchange model
    @Bean
    public TopicExchange exchangeTopic() {
        return new TopicExchange(EXCHANGE_TOPIC);
    }

    // queue_topic1 is bound to exchange_topic with routing key "database_all"
    @Bean
    public Binding bindingTopic1() {
        return BindingBuilder.bind(queueTopic1()).to(exchangeTopic()).with(FS_EVENT_ROUTING_KEY);
    }

    // queue_topic2 is bound to exchange_topic with routing key "database_one"
    @Bean
    public Binding bindingTopic2() {
        return BindingBuilder.bind(queueTopic2()).to(exchangeTopic()).with(FS_EVENT_ROUTING_KEY_ONE);
    }

    // serialize outgoing messages as JSON
    @Bean
    public RabbitTemplate rabbitTemplate(ConnectionFactory connectionFactory) {
        RabbitTemplate template = new RabbitTemplate(connectionFactory);
        template.setMessageConverter(new Jackson2JsonMessageConverter());
        return template;
    }

    // deserialize incoming JSON messages for @RabbitListener methods
    @Bean
    public SimpleRabbitListenerContainerFactory rabbitListenerContainerFactory(ConnectionFactory connectionFactory) {
        SimpleRabbitListenerContainerFactory factory = new SimpleRabbitListenerContainerFactory();
        factory.setConnectionFactory(connectionFactory);
        factory.setMessageConverter(new Jackson2JsonMessageConverter());
        return factory;
    }
}
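The exchange is a topic exchange, but both bindings use literal keys (`database_all`, `database_one`), so routing here is effectively exact matching. For reference, the sketch below implements the topic-matching rules as RabbitMQ documents them (routing keys are dot-separated words, `*` matches exactly one word, `#` matches zero or more). `TopicMatcher` is an illustrative helper, not RabbitMQ's own code.

```java
// Illustrative implementation of AMQP topic-exchange routing-key matching
final class TopicMatcher {
    // true if routingKey would be routed through a binding with bindingKey
    static boolean matches(String bindingKey, String routingKey) {
        String[] pattern = bindingKey.split("\\.", -1);
        String[] words = routingKey.split("\\.", -1);
        return match(pattern, 0, words, 0);
    }

    private static boolean match(String[] p, int i, String[] w, int j) {
        if (i == p.length) {
            return j == w.length; // pattern used up: all words must be used up too
        }
        if (p[i].equals("#")) {
            // '#' swallows zero words, or one word and stays active
            return match(p, i + 1, w, j) || (j < w.length && match(p, i, w, j + 1));
        }
        if (j == w.length) {
            return false; // words exhausted but the pattern still needs one
        }
        if (p[i].equals("*") || p[i].equals(w[j])) {
            return match(p, i + 1, w, j + 1);
        }
        return false;
    }
}
```

With dotted routing keys (a hypothetical extension such as `esp.user` bound with `esp.#`), each consumer could subscribe only to the schemas it caches.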

6. Classes in the sendDataInfo package

RabbitService

package com.tc.sendDataInfo;

import com.alibaba.otter.canal.protocol.CanalEntry;

import java.util.List;

public interface RabbitService {

    // send a plain message through the topic exchange
    void sendMsg(String msg);

    // receive the entries read by canal and forward the change information to MQ
    void sendData(List<CanalEntry.Entry> entrys);
}

RabbitServiceImpl

package com.tc.sendDataInfo;

import com.alibaba.otter.canal.protocol.CanalEntry;
import com.tc.canal.Column;
import com.tc.canal.Database;
import com.tc.util.JsonUtil;
import lombok.extern.slf4j.Slf4j;
import org.springframework.amqp.rabbit.core.RabbitTemplate;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import java.util.LinkedList;
import java.util.List;

import static com.tc.mq.RabbitmqConfig.*;

@Service("rabbitService")
@Slf4j
public class RabbitServiceImpl implements RabbitService {

    @Autowired
    private RabbitTemplate rabbitTemplate;

    @Override
    public void sendMsg(String msg) {
        log.info("-------------------- sending message to MQ --------------------");
        log.info(msg);
        // convertAndSend is fire-and-forget; convertSendAndReceive would block waiting for a reply
        rabbitTemplate.convertAndSend(EXCHANGE_TOPIC, FS_EVENT_ROUTING_KEY, msg);
    }

    @Override
    public void sendData(List<CanalEntry.Entry> entrys) {
        for (CanalEntry.Entry entry : entrys) {
            // skip transaction begin/end markers and heartbeats; only row data matters
            if (entry.getEntryType() != CanalEntry.EntryType.ROWDATA) {
                continue;
            }
            // RowChange holds every detail of a change: isDdl, the DDL sql,
            // the column values before and after the change, and so on
            CanalEntry.RowChange rowChange;
            try {
                rowChange = CanalEntry.RowChange.parseFrom(entry.getStoreValue());
            } catch (Exception e) {
                throw new RuntimeException("ERROR ## failed to parse row change, data: " + entry, e);
            }
            // operation type: INSERT / UPDATE / DELETE
            CanalEntry.EventType eventType = rowChange.getEventType();
            log.info("schema: [{}]\ttable: [{}]\tevent type: [{}]",
                    entry.getHeader().getSchemaName(), entry.getHeader().getTableName(), eventType);
            // DDL statements are logged and skipped
            if (rowChange.getIsDdl()) {
                log.info("---- DDL, skipped: {}", rowChange.getSql());
                continue;
            }
            // log every changed row
            for (CanalEntry.RowData rowData : rowChange.getRowDatasList()) {
                if (eventType == CanalEntry.EventType.DELETE) {
                    printColumn(rowData.getBeforeColumnsList());
                } else if (eventType == CanalEntry.EventType.INSERT) {
                    printColumn(rowData.getAfterColumnsList());
                    // to publish the inserted row as well:
                    // sendMqData(entry, rowData.getAfterColumnsList());
                } else {
                    log.info("-------> before");
                    printColumn(rowData.getBeforeColumnsList());
                    log.info("-------> after");
                    printColumn(rowData.getAfterColumnsList());
                }
            }
            // inserts, updates and deletes all make the cache stale:
            // send one invalidation message per entry instead of one per row
            if (eventType == CanalEntry.EventType.INSERT
                    || eventType == CanalEntry.EventType.UPDATE
                    || eventType == CanalEntry.EventType.DELETE) {
                sendMQTest(entry);
            }
        }
    }

    private static void printColumn(List<CanalEntry.Column> columns) {
        log.info("-------------- columns ----------------");
        for (CanalEntry.Column column : columns) {
            log.info("column: [{}]\tvalue: [{}]\tprimary key: [{}]\tupdated: [{}]",
                    column.getName(), column.getValue(), column.getIsKey(), column.getUpdated());
        }
    }

    // publish schema, table and row data to queue_topic1
    private void sendMqData(CanalEntry.Entry entry, List<CanalEntry.Column> columns) {
        log.info("-------------- sending row data to MQ ----------------");
        Database database = new Database(entry.getHeader().getSchemaName(), entry.getHeader().getTableName());
        LinkedList<Column> data = new LinkedList<>();
        for (CanalEntry.Column column : columns) {
            data.add(new Column(column.getName(), column.getValue(), column.getIsKey(), column.getUpdated()));
        }
        database.setRowDate(data);
        String databaseJson = JsonUtil.object2json(database);
        log.info(databaseJson);
        rabbitTemplate.convertAndSend(EXCHANGE_TOPIC, FS_EVENT_ROUTING_KEY, databaseJson);
    }

    // publish only schema and table name to queue_topic2 (cache invalidation)
    private void sendMQTest(CanalEntry.Entry entry) {
        log.info("-------------- sending table-change message to MQ ----------------");
        Database database = new Database(entry.getHeader().getSchemaName(), entry.getHeader().getTableName());
        String databaseJson = JsonUtil.object2json(database);
        rabbitTemplate.convertAndSend(EXCHANGE_TOPIC, FS_EVENT_ROUTING_KEY_ONE, databaseJson);
    }
}
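Cache invalidation only needs to know which tables changed, not how many rows or statements changed them, so publishing per row or per entry can send many identical messages for one table. One possible refinement, sketched below with a hypothetical `BatchDeduper` helper working on plain strings rather than canal's `CanalEntry` types, is to collect the distinct `schema.table` pairs of a batch and publish one message per pair.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper: reduce a batch of row changes to the distinct tables it touched
final class BatchDeduper {
    // each element is {schema, table} for one changed row, in binlog order;
    // returns the distinct "schema.table" names in first-seen order
    static List<String> changedTables(List<String[]> rowChanges) {
        Set<String> distinct = new LinkedHashSet<>();
        for (String[] change : rowChanges) {
            distinct.add(change[0] + "." + change[1]);
        }
        return new ArrayList<>(distinct);
    }
}
```

In RabbitServiceImpl this would mean calling sendMQTest once per distinct pair after the loop over the batch, rather than inside it.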

7. Classes in the util package

JsonUtil

package com.tc.util;

import com.fasterxml.jackson.core.JsonProcessingException;
import com.fasterxml.jackson.databind.ObjectMapper;

import java.io.IOException;

/**
 * JSON conversion utility.
 * Checked exceptions are rethrown as RuntimeException: if the application has a global
 * RuntimeException handler nothing more is needed, otherwise catch it at the call site.
 */
public class JsonUtil {

    // ObjectMapper is thread-safe once configured, so one shared instance is enough
    private static final ObjectMapper objectMapper = new ObjectMapper();

    /**
     * JSON string to object
     */
    public static <T> T json2object(String json, Class<T> type) {
        try {
            return objectMapper.readValue(json, type);
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

    /**
     * Object to JSON string
     */
    public static String object2json(Object object) {
        try {
            return objectMapper.writeValueAsString(object);
        } catch (JsonProcessingException e) {
            throw new RuntimeException(e);
        }
    }
}

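4. Java consumer

Create a new project and configure an MQ listener.

Copy the MQ configuration (RabbitmqConfig) and the classes of the canal package (Database, Column) into the new project. Do not copy CanalConfig: it would start a second canal client inside the consumer.

MQ listener: TopicReceiveListener class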

package com.tc.mq;

import com.tc.canal.Column;
import com.tc.canal.Database;
import com.tc.utils.JsonUtil;
import lombok.extern.slf4j.Slf4j;
import org.springframework.amqp.rabbit.annotation.RabbitListener;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Component;

import java.util.HashMap;
import java.util.LinkedList;
import java.util.Map;

import static com.tc.mq.RabbitmqConfig.QUEUE_TOPIC1;
import static com.tc.mq.RabbitmqConfig.QUEUE_TOPIC2;

@Component
@Slf4j
public class TopicReceiveListener {

    // Relationship between monitored tables and caches, initialized in the static block:
    // key = monitored table name, value = name of the related Redis cache
    private static final Map<String, String> CACHE_INFO = new HashMap<>();

    static {
        CACHE_INFO.put("user", "com.tc.mapper.UserMapper");
        CACHE_INFO.put("mail", "mail_cache");
    }

    // RedisConfig already sets String serializers for keys and hash keys on this template
    @Autowired
    private RedisTemplate<String, Object> redisTemplate;

    // queue_topic1: schema, table and row data
    @RabbitListener(queues = QUEUE_TOPIC1)
    public void receiveMsg1(String info) {
        log.info("-------------- MQ message received ----------------");
        Database database = JsonUtil.json2object(info, Database.class);
        log.info("schema: {}", database.getDatabaseName());
        log.info("table: {}", database.getTableName());
        LinkedList<Column> rowDate = database.getRowDate();
        if (rowDate != null) {
            rowDate.forEach(row -> log.info(row.toString()));
        }
    }

    // queue_topic2: schema and table name only; clears the related cache
    @RabbitListener(queues = QUEUE_TOPIC2)
    public void receiveMsg2(String info) {
        log.info("-------------- MQ message received, clearing cache ----------------");
        Database database = JsonUtil.json2object(info, Database.class);
        // when a monitored table is modified, delete the cache mapped to it
        String cacheName = CACHE_INFO.get(database.getTableName());
        if (cacheName != null) {
            redisTemplate.delete(cacheName);
            log.info("-------------- Redis cache [{}] cleared ----------------", cacheName);
        }
    }
}
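The listener maps one table to one cache name. If a table backs several caches, a map from table name to a list of cache names works the same way. The sketch below uses an in-memory map in place of Redis; `CacheRegistry`, its methods and the cache name `user_page_cache` in the test are hypothetical. With Spring Data Redis, the list could be passed to the `delete(Collection)` overload of `RedisTemplate` in one call.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical registry: one table can invalidate several caches
final class CacheRegistry {
    private final Map<String, List<String>> cachesByTable = new HashMap<>();

    // declare that cacheName is built from data in table
    void register(String table, String cacheName) {
        cachesByTable.computeIfAbsent(table, t -> new ArrayList<>()).add(cacheName);
    }

    // cache names to delete when table changes (empty if the table is not tracked)
    List<String> cachesFor(String table) {
        return cachesByTable.getOrDefault(table, Collections.emptyList());
    }

    // in-memory stand-in for deleting the keys from Redis; returns how many existed
    int invalidate(String table, Map<String, ?> store) {
        int removed = 0;
        for (String cacheName : cachesFor(table)) {
            if (store.remove(cacheName) != null) {
                removed++;
            }
        }
        return removed;
    }
}
```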

ApplicationContentUtils class

package com.tc.utils;

import org.springframework.beans.BeansException;
import org.springframework.context.ApplicationContext;
import org.springframework.context.ApplicationContextAware;
import org.springframework.stereotype.Component;

/**
 * Gives static access to the application context (bean factory) created by Spring Boot.
 */
@Component
public class ApplicationContentUtils implements ApplicationContextAware {

    // the context, kept once Spring hands it over
    // (@Autowired does not work on static fields, so it is set in setApplicationContext)
    private static ApplicationContext applicationContext;

    // Spring passes the created context to this class
    @Override
    public void setApplicationContext(ApplicationContext context) throws BeansException {
        ApplicationContentUtils.applicationContext = context;
    }

    // look up a bean by name in the context
    public static Object getBean(String name) {
        return applicationContext.getBean(name);
    }
}

RedisConfig class

package com.tc.config.redis;

import com.fasterxml.jackson.annotation.JsonAutoDetect;
import com.fasterxml.jackson.annotation.PropertyAccessor;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.springframework.cache.CacheManager;
import org.springframework.cache.annotation.CachingConfigurerSupport;
import org.springframework.cache.annotation.EnableCaching;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.cache.RedisCacheConfiguration;
import org.springframework.data.redis.cache.RedisCacheManager;
import org.springframework.data.redis.cache.RedisCacheWriter;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.core.*;
import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;

import java.time.Duration;

/**
 * Configures RedisTemplate serialization
 */
@Configuration
@EnableCaching
public class RedisConfig extends CachingConfigurerSupport {

    /**
     * Use Redis as the default cache for Spring's caching annotations
     */
    @Bean
    public CacheManager cacheManager(RedisConnectionFactory redisConnectionFactory) {
        // cache entries expire after 10 hours
        RedisCacheConfiguration redisCacheConfiguration = RedisCacheConfiguration
                .defaultCacheConfig().entryTtl(Duration.ofHours(10));
        return RedisCacheManager.builder(RedisCacheWriter.nonLockingRedisCacheWriter(redisConnectionFactory))
                .cacheDefaults(redisCacheConfiguration).build();
    }

    /**
     * RedisTemplate configuration
     */
    @Bean
    public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory factory) {
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        // connection factory
        template.setConnectionFactory(factory);
        // serialize values with Jackson2JsonRedisSerializer (the default is JDK serialization)
        Jackson2JsonRedisSerializer<Object> jacksonSeial = new Jackson2JsonRedisSerializer<>(Object.class);
        ObjectMapper om = new ObjectMapper();
        // detect fields and getters/setters of any visibility (ANY includes private and public)
        om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);
        // store type information for non-final types so values deserialize back to their class;
        // final types such as String or Integer are written as plain JSON values
        // (deprecated since Jackson 2.10 in favour of activateDefaultTyping)
        om.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL);
        jacksonSeial.setObjectMapper(om);
        // values as JSON
        template.setValueSerializer(jacksonSeial);
        // keys as plain strings
        template.setKeySerializer(new StringRedisSerializer());
        // hash keys as strings, hash values as JSON
        template.setHashKeySerializer(new StringRedisSerializer());
        template.setHashValueSerializer(jacksonSeial);
        template.afterPropertiesSet();
        return template;
    }

    /**
     * Operations on hashes
     */
    @Bean
    public HashOperations<String, String, Object> hashOperations(RedisTemplate<String, Object> redisTemplate) {
        return redisTemplate.opsForHash();
    }

    /**
     * Operations on string values
     */
    @Bean
    public ValueOperations<String, Object> valueOperations(RedisTemplate<String, Object> redisTemplate) {
        return redisTemplate.opsForValue();
    }

    /**
     * Operations on lists
     */
    @Bean
    public ListOperations<String, Object> listOperations(RedisTemplate<String, Object> redisTemplate) {
        return redisTemplate.opsForList();
    }

    /**
     * Operations on unordered sets
     */
    @Bean
    public SetOperations<String, Object> setOperations(RedisTemplate<String, Object> redisTemplate) {
        return redisTemplate.opsForSet();
    }

    /**
     * Operations on sorted sets
     */
    @Bean
    public ZSetOperations<String, Object> zSetOperations(RedisTemplate<String, Object> redisTemplate) {
        return redisTemplate.opsForZSet();
    }
}

5. Java: custom Redis cache annotation

Custom annotation RedisCache

package com.tc.annotations;

import java.lang.annotation.*;

/**
 * Custom cache annotation
 * @author ltc
 */
// target: methods
@Target(ElementType.METHOD)
// retained at runtime so the aspect can read it through reflection
@Retention(RetentionPolicy.RUNTIME)
// @Inherited only affects annotations on classes, so it has no effect on this method annotation
@Inherited
// include the annotation in generated Javadoc
@Documented
public @interface RedisCache {

    /**
     * Cache group name (the Redis hash that holds the cached results)
     */
    String cacheName();

    /**
     * Cache key prefix
     */
    String key() default "";

    /**
     * Description of the cache
     */
    String msg();

    /**
     * Expiry time in hours; defaults to 1 hour
     */
    int expireTime() default 1;
}
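Because the annotation has RUNTIME retention, its attributes can be read reflectively, which is what the aspect does through `method.getAnnotation(RedisCache.class)`. The self-contained sketch below declares a look-alike annotation (`DemoCache`, hypothetical) and a hypothetical `UserService` so the lookup and the default values can be seen without Spring.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;

// Hypothetical look-alike of RedisCache, declared here only so the example compiles alone
@Target(ElementType.METHOD)
@Retention(RetentionPolicy.RUNTIME)
@interface DemoCache {
    String cacheName();
    String key() default "";
    String msg();
    int expireTime() default 1;
}

// Hypothetical service whose method is annotated the way the article intends
class UserService {
    @DemoCache(cacheName = "com.tc.mapper.UserMapper", key = "userList", msg = "all users")
    public String listUsers() {
        return "users";
    }
}

final class AnnotationReader {
    // reads the annotation from a public no-arg method; null if the method or annotation is missing
    static DemoCache read(Class<?> type, String methodName) {
        try {
            Method method = type.getMethod(methodName);
            return method.getAnnotation(DemoCache.class);
        } catch (NoSuchMethodException e) {
            return null;
        }
    }
}
```

The `cacheName` is what ties a method to the consumer: it has to equal the value registered in `CACHE_INFO` for the table the method reads from, otherwise a table change never clears that method's cache.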

Using the custom cache annotation

Implemented with AOP: CacheAspect

package com.tc.config.aop;

import com.tc.annotations.RedisCache;
import com.tc.utils.Md5Utils;
import lombok.extern.slf4j.Slf4j;
import org.aspectj.lang.ProceedingJoinPoint;
import org.aspectj.lang.annotation.Around;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Pointcut;
import org.aspectj.lang.reflect.MethodSignature;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.stereotype.Component;

import java.lang.reflect.Method;
import java.util.Arrays;
import java.util.concurrent.TimeUnit;

/**
 * Cache aspect.
 * Caches the results of methods annotated with @RedisCache
 * in the Redis hash named cacheName; the cache key can be customised.
 * @author 78289
 */
@Aspect
@Component
@Slf4j
public class CacheAspect {

    @Autowired
    private RedisTemplate<String, Object> redisTemplate;

    /**
     * Pointcut: every method annotated with @RedisCache
     */
    @Pointcut("@annotation(com.tc.annotations.RedisCache)")
    public void pointCut() {
    }

    /**
     * Around advice. ProceedingJoinPoint gives access to everything needed:
     * the proxy, the target object, the method signature, the arguments, and so on.
     */
    @Around("pointCut()")
    public Object around(ProceedingJoinPoint joinPoint) throws Throwable {
        log.info("--------------- entering custom Redis cache ---------------");
        // method signature, used to reach the annotated method
        MethodSignature signature = (MethodSignature) joinPoint.getSignature();
        Method method = signature.getMethod();
        // method name and arguments
        String methodName = method.getName();
        String params = Arrays.toString(joinPoint.getArgs());
        log.info("methodName:{},params:{}", methodName, params);
        // read cacheName, key, msg and expireTime from the annotation
        RedisCache annotation = method.getAnnotation(RedisCache.class);
        String cacheName = annotation.cacheName();
        String key = annotation.key() + "-" + methodName + "-" + params;
        int expireTime = annotation.expireTime();
        log.info("annotation:\tcacheName : {} , key : {} , msg : {} , expireTime : {}",
                cacheName, key, annotation.msg(), expireTime);
        // hash custom key + method name + arguments with MD5 to get a fixed-length field name
        String newKey = Md5Utils.getKeyByMD5(key);
        // The cache is a Redis hash: cacheName -> (newKey -> result).
        // A single get (instead of hasKey followed by get) avoids returning null
        // when the consumer deletes the hash between the two calls.
        Object cached = redisTemplate.opsForHash().get(cacheName, newKey);
        if (cached != null) {
            log.info("--------------- cache hit ---------------");
            return cached;
        }
        log.info("--------------- cache miss ---------------");
        // run the annotated method only on a miss; its exceptions propagate to the caller
        Object result = joinPoint.proceed();
        if (result != null) {
            redisTemplate.opsForHash().put(cacheName, newKey, result);
            // the expiry applies to the whole hash
            redisTemplate.expire(cacheName, expireTime, TimeUnit.HOURS);
        }
        return result;
    }
}
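Stripped of Spring and Redis, the aspect is the cache-aside pattern over a two-level map: the outer key is `cacheName` (one Redis hash), the inner key is built from the annotation key, the method name and the arguments. The sketch below assumes in-memory maps in place of the Redis hash, ignores expiry, and leaves the field unhashed; `HashCacheAside` is a hypothetical name.

```java
import java.util.Arrays;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Supplier;

// Hypothetical in-memory model of the aspect's cache-aside logic
final class HashCacheAside {
    // cacheName -> (field -> value), like one Redis hash per cacheName
    private final Map<String, Map<String, Object>> hashes = new ConcurrentHashMap<>();

    // same field layout as the aspect before MD5: key + "-" + methodName + "-" + args
    static String field(String key, String methodName, Object[] args) {
        return key + "-" + methodName + "-" + Arrays.toString(args);
    }

    // return the cached value, or run the loader, store a non-null result and return it
    Object get(String cacheName, String field, Supplier<Object> loader) {
        Map<String, Object> hash = hashes.computeIfAbsent(cacheName, n -> new ConcurrentHashMap<>());
        Object cached = hash.get(field);
        if (cached != null) {
            return cached;
        }
        Object value = loader.get();
        if (value != null) {
            hash.put(field, value);
        }
        return value;
    }

    // what the MQ consumer does: drop the whole hash for a cacheName
    void evict(String cacheName) {
        hashes.remove(cacheName);
    }
}
```

Evicting the whole hash is coarse (every cached call of every method sharing the `cacheName` is dropped), but it needs no knowledge of which arguments a changed row affects.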

MD5 utility class

package com.tc.utils;

import com.tc.common.DevUser;
import org.apache.shiro.crypto.hash.Md5Hash;
import org.springframework.util.DigestUtils;

import java.nio.charset.StandardCharsets;

public class Md5Utils {

    /*
     * Hash the user's account with Shiro's Md5Hash (the user's code as salt, 1024 iterations),
     * store the hex result as the user's new password and return its middle 16 characters.
     */
    public static String getMd5(DevUser user) {
        Md5Hash md5Hash = new Md5Hash(user.getAccount(), user.getCode(), 1024);
        user.setNewPassword(md5Hash.toHex());
        String temp = user.getNewPassword();
        return temp.substring(8, 24);
    }

    /*
     * Shorten a Redis key by hashing it with MD5 (32 lowercase hex characters).
     */
    public static String getKeyByMD5(String key) {
        return DigestUtils.md5DigestAsHex(key.getBytes(StandardCharsets.UTF_8));
    }
}
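`getKeyByMD5` relies on Spring's `DigestUtils`. Where Spring is not on the classpath, the same lowercase hex digest can be produced with the JDK's `MessageDigest`; the sketch below (the class name `KeyHasher` is hypothetical) encodes the key as UTF-8 explicitly instead of using the platform default charset.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical JDK-only equivalent of Md5Utils.getKeyByMD5
final class KeyHasher {
    // lowercase hex MD5 of the UTF-8 bytes of key (always 32 characters)
    static String md5Hex(String key) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5").digest(key.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            // every Java platform is required to provide MD5, so this should not happen
            throw new IllegalStateException(e);
        }
    }
}
```

Hashing keeps hash field names short and uniform however long the argument list is, at the cost of fields that can no longer be read back to the original key when inspecting Redis.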

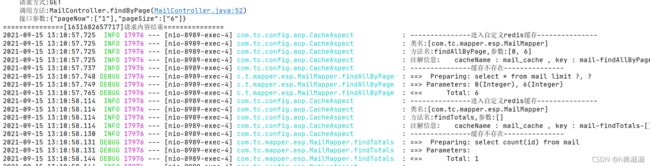

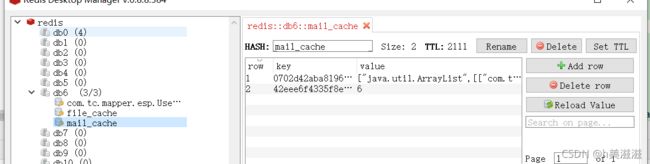

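Using the annotation

When a method annotated with @RedisCache is called, its return value is stored in the Redis cache.

When one of the monitored tables is modified, the consumer receives a message from MQ and uses it to decide which cache to clear.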

Gitee repository: https://gitee.com/meiziziq/canal

Reference: https://blog.csdn.net/liuerchong/article/details/117654489

Reference: https://blog.csdn.net/qq_35387940/article/details/100514134