Detailed k8s Deployment Notes: Project Delivery (Part 2)

文章目录

-

- 一、 前言

- 二、 部署zookeeper集群

- 三、 安装jenkins

- 四、 配置jenkins

- 五、 安装maven

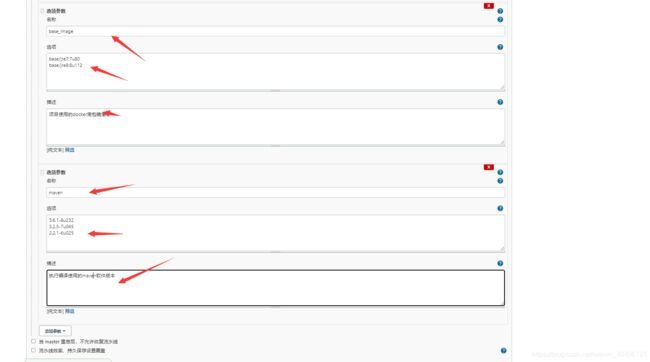

- 六、 配置dubbo微服务的底包镜像

- 七、 jenkins构建和交付server(provide)服务

- 八、交付Monitor到K8S(zk监控者)

- 九、交付web(consumer)到K8S

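I. Preface

II. Deploying the ZooKeeper cluster

--------------------------------------192.168.248.11, 12, 21 (install the JDK and ZooKeeper on all three)-------------------------------

Note: ZooKeeper, like Jenkins, runs on the JVM, so a JDK must be installed first.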

- 下载

jdk8:https://www.oracle.com/java/technologies/javase/javase-jdk8-downloads.html

1.1 创建文件夹:/opt/src

1.2 将jdk-8u291-linux-x64.tar.gz上传到/opt/src

1.3 创建文件夹:mkdir /usr/java

1.4jdk解压到/usr/java:tar -xvf jdk-8u291-linux-x64.tar.gz -C /usr/java

1.5 设置软连接:ln -s /usr/java/jdk1.8.0_291/ /usr/java/jdk - 设置环境变量:

vi /etc/profile,在最后加上这三行

export JAVA_HOME=/usr/java/jdk

export PATH=$JAVA_HOME/bin:$JAVA_HOME/bin:$PATH

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

- 重新读取配置文件:

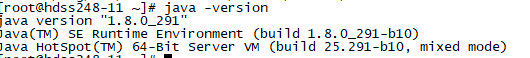

source /etc/profile - 查看jdk配置:

java -version

- Download ZooKeeper; version 3.4.14 is used here: https://archive.apache.org/dist/zookeeper/zookeeper-3.4.14/

5.1 Upload zookeeper-3.4.14.tar.gz to /opt/src

5.2 Extract ZooKeeper into /opt: tar -xvf zookeeper-3.4.14.tar.gz -C /opt

5.3 Create a symlink: ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper

5.4 Create the data and log directories: mkdir -pv /data/zookeeper/data /data/zookeeper/logs

- Configuration file: vi /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

server.1=zk1.zhanzhk.com:2888:3888

server.2=zk2.zhanzhk.com:2888:3888

server.3=zk3.zhanzhk.com:2888:3888
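The three server.N lines follow one pattern, so they can be generated rather than typed. The following is a minimal sketch, assuming the hosts are named zk1..zkN under a single domain as in this setup; the function name zk_server_lines is hypothetical and not part of ZooKeeper.

```shell
#!/bin/sh
# Print the server.N lines of zoo.cfg for a ZooKeeper ensemble.
# Assumption: members are named zk1..zkN under one domain.
zk_server_lines() {
    domain="$1"   # e.g. zhanzhk.com
    count="$2"    # number of ensemble members
    i=1
    while [ "$i" -le "$count" ]; do
        # 2888: port followers use to connect to the leader
        # 3888: port used for leader election
        echo "server.${i}=zk${i}.${domain}:2888:3888"
        i=$((i + 1))
    done
}

zk_server_lines zhanzhk.com 3
```

The number after "server." is the id that each host later writes into its own myid file, so the two must agree.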

--------------------------------------back on 192.168.248.11 for DNS resolution-------------------------------

vi /var/named/zhanzhk.com.zone

$TTL 600;10minutes

$ORIGIN zhanzhk.com.

@ IN SOA dns.zhanzhk.com. dnsadmin.zhanzhk.com. (

2021050702; serial

10800;refresh(3 hours)

900; retry(15 minutes)

604800; expire(1 week)

86400;minimum(1 day)

)

NS dns.zhanzhk.com.

$TTL 60;1minute

dns A 192.168.248.11

harbor A 192.168.248.200

k8s-yaml A 192.168.248.200

traefik A 192.168.248.10

dashboard A 192.168.248.10

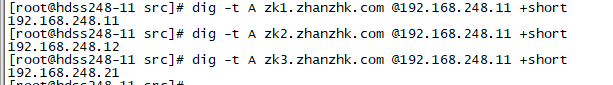

zk1 A 192.168.248.11

zk2 A 192.168.248.12

zk3 A 192.168.248.21

- Restart named and verify the record: systemctl restart named, then dig -t A zk1.zhanzhk.com @192.168.248.11 +short

--------------------------------------192.168.248.11, 12, 21: form the cluster-------------------------------

- Form the cluster: vi /data/zookeeper/data/myid, writing a different id on each host

192.168.248.11:

1

192.168.248.12:

2

192.168.248.21:

3

-

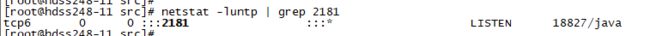

查看端口:

netstat -luntp | grep 2181,一般zookeeper默认监听2181端口

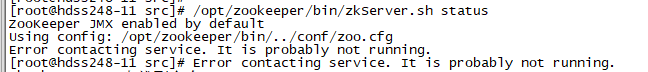

注:启动失败排查

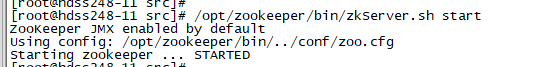

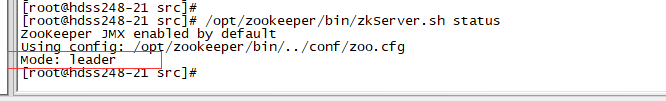

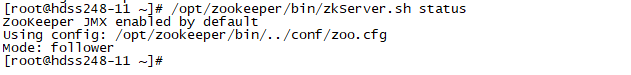

11.1 查看启动状态:/opt/zookeeper/bin/zkServer.sh status,可以看到启动失败

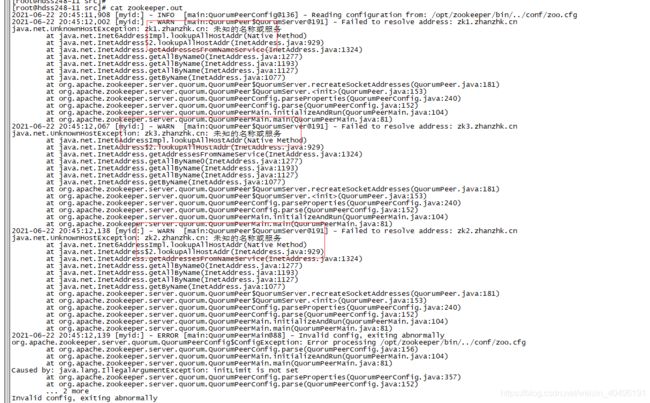

11.1 查看日志:cat zookeeper.out,域名写错,因为是com结尾。修改重启

-

查看启动状态:

/opt/zookeeper/bin/zkServer.sh status,可以看到192.168.248.21是主,其他两台是从。

-

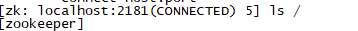

登录zookeeper:

/opt/zookeeper/bin/zkCli.sh -server localhost:2181

三、 安装jenkins

在192.168.248.200上操作

- 拉取镜像:

docker pull jenkins/jenkins:2.282 - 打标签:

docker tag 22b8b9a84dbe harbor.zhanzhk.com/myresposity/jenkins:v2.282 - 提交到我们的镜像仓库:

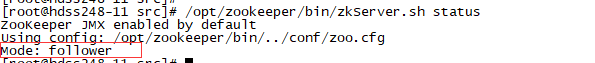

docker push harbor.zhanzhk.com/myresposity/jenkins:v2.282 - 首先创建密钥(邮箱用自己的,后面gitee需要):

ssh-keygen -t rsa -b 2048 -C "[email protected]" -N "" -f /root/.ssh/id_rsa

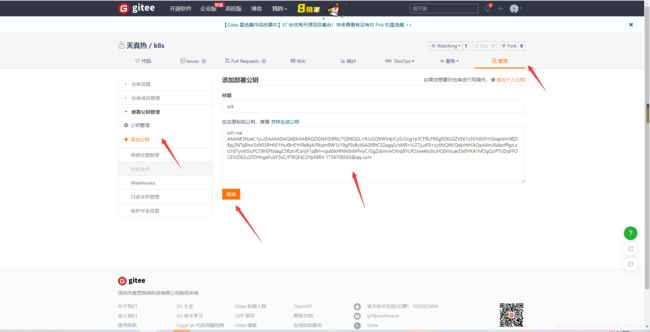

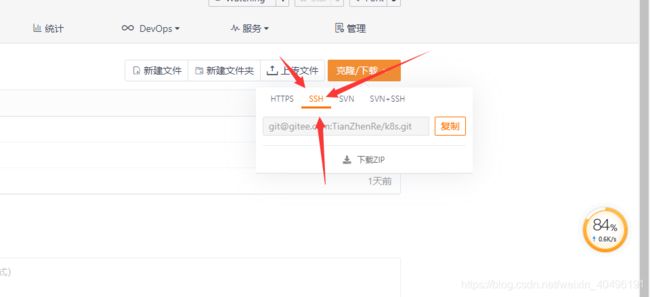

- 查看公钥,并且将公钥配置到gitee仓库:

cat /root/.ssh/id_rsa.pub

注:Dubbo服务中消费者是git ssh拉取代码,git克隆拉代码,两种方式:(1、httrp协议 2、ssh),如果走ssh必须生成密钥对,把私钥封装在docker镜像里面,把公钥放到git仓库,这样docker就能从git拉取到镜像。

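- Create the directory: mkdir -p /data/dockerfile/jenkins/, then cd /data/dockerfile/jenkins/

- Create the Dockerfile: vi Dockerfile

FROM harbor.zhanzhk.com/myresposity/jenkins:v2.282

# User that runs Jenkins

USER root

# Set the time zone to UTC+8

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' >/etc/timezone

# Add the private key; the Dubbo services' code is pulled over SSH

ADD id_rsa /root/.ssh/id_rsa

# Add the host's Docker config so the registry login credentials are available in the container

ADD config.json /root/.docker/config.json

# Install the Docker client inside the Jenkins container: Jenkins runs docker build, while the Docker engine it talks to is the host's

ADD get-docker.sh /get-docker.sh

# Skip the yes/no host-key prompt of ssh, then run the Docker install script. (ssh asks to confirm the fingerprint whenever it connects to a new host; gitee is on the public network and the IP can differ on each pull, so answering the prompt every time is not an option.)

RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\

/get-docker.sh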

- Copy the files the Dockerfile needs into this directory

8.1 cp /root/.ssh/id_rsa .

8.2 cp /root/.docker/config.json .

8.3 curl -fsSL get.docker.com -o get-docker.sh

8.4 Make it executable: chmod +x get-docker.sh

- Build the image: docker build . -t harbor.zhanzhk.com/infra/jenkins:v2.282 (this is slow, be patient)

Note: if the build succeeds, skip the following. If it fails, leave out the get-docker.sh step:

10.1 Delete get-docker.sh

10.2 Edit the Dockerfile and remove the get-docker.sh parts

10.3 Build the image again; it now succeeds. With this approach the container gets its Docker client from host paths mounted at start-up, as explained later.

- Push the image: docker push harbor.zhanzhk.com/infra/jenkins:v2.282

- Check that the image can reach gitee: docker run --rm harbor.zhanzhk.com/infra/jenkins:v2.282 ssh -i /root/.ssh/id_rsa -T [email protected]

12.1 Error 1: WARNING: IPv4 forwarding is disabled. Networking will not work. Fix:

-----1) On the host run: echo "net.ipv4.ip_forward=1" >>/usr/lib/sysctl.d/00-system.conf

-----2) Restart the network and docker services: systemctl restart network, then systemctl restart docker

12.2 Error 2: Permanently added 'gitee.com,212.64.62.183' (ECDSA) to the list of known hosts. Permission denied (publickey). The public key has not been added to the git repository; see step 5

--------------------------------------切回192.168.248.21-------------------------------

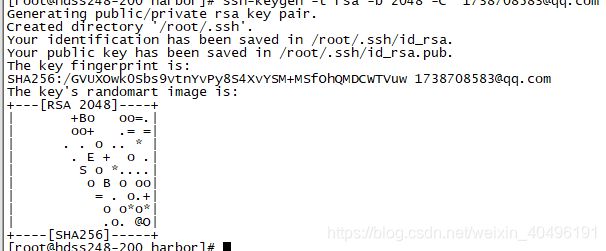

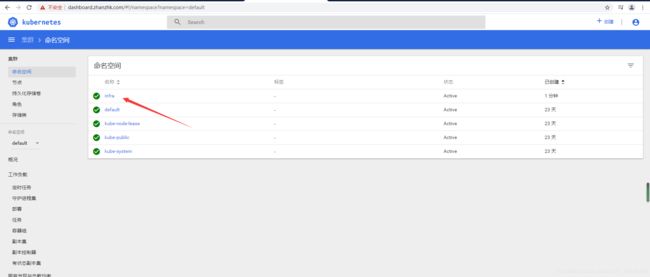

- 创建jenkins的单独名称空间:

kubectl create ns infra

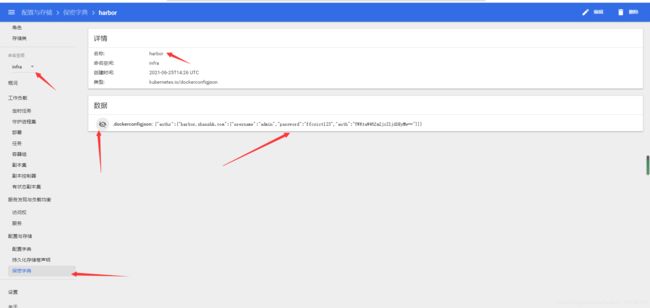

- 在

infra命名空间创建一个secret资源,类型是docker-registry。名字叫harbor,后面是参数:kubectl create secret docker-registry harbor --docker-server=harbor.zhanzhk.com --docker-username=admin --docker-password=ffcsict123 -n infra

注:jenkins镜像交付infra命名空间,infra命名空间需要从harbor私有仓库拉取镜像,仅仅dockerlogin是不行的。需要在infra命名空间创建一个secret资源,把harbor.zhanzhk.com用到的管理员账户密码声明出来

- 创建共享存储

让jenkins的pod实现持久化数据的共享存储,即使pod宕机,会在重启一个pod,数据还是使用共享存储,注意:共享存储不支持多pod,多个pod同时插入数据会乱套

NFS共享存储放在 hdss7-200 上,用于存储Jenkins持久化文件。所有Node和hdss7-200都需要安装NFS

15.1 在192.168.248.21、22、200上面安装nfs:yum install -y nfs-utils

--------------------------------------切回192.168.248.200-------------------------------

- 将192.168.248.200服务器作为服务端:

vi /etc/exports,加上/data/nfs-volume 192.168.248.0/24(rw,sync,no_root_squash).(把/data/nfs-volume目录共享给192.168.248.0/24。参数:rw可读可写,no_root_squash非root用户的所有权限压缩成root) - 创建文件夹:

mkdir /data/nfs-volume - 在192.168.248.21、22、200三台启动:

systemctl start nfs—>systemctl enable nfs mkdir /data/k8s-yaml/jenkinscd /data/k8s-yaml/jenkins- 准备资源清单:

vi dp.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

name: jenkins

namespace: infra

labels:

name: jenkins

spec:

replicas: 1

selector:

matchLabels:

name: jenkins

template:

metadata:

labels:

app: jenkins

name: jenkins

spec:

volumes:

- name: data

nfs:

server: hdss248-200

path: /data/nfs-volume/jenkins_home

- name: docker

hostPath:

path: /run/docker.sock

type: ''

containers:

- name: jenkins

image: harbor.zhanzhk.com/infra/jenkins:v2.282

imagePullPolicy: IfNotPresent

ports:

- containerPort: 8080

protocol: TCP

env:

- name: JAVA_OPTS

value: -Xmx512m -Xms512m

volumeMounts:

- name: data

mountPath: /var/jenkins_home

- name: docker

mountPath: /run/docker.sock

imagePullSecrets:

- name: harbor

securityContext:

runAsUser: 0

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

maxSurge: 1

revisionHistoryLimit: 7

progressDeadlineSeconds: 600

kind: Deployment # the two main pod controller types: a DaemonSet runs one pod on every node, a Deployment runs as many replicas as configured

apiVersion: apps/v1

metadata:

name: jenkins

namespace: infra # namespace

labels:

name: jenkins

spec:

replicas: 1 # run a single pod

selector:

matchLabels:

name: jenkins

template: # pod template of the controller

metadata:

labels:

app: jenkins

name: jenkins

spec:

volumes:

- name: data # volume name

nfs: # volume type

server: hdss248-200 # where the NFS server is

path: /data/nfs-volume/jenkins_home # shared path

- name: docker # volume name

hostPath: # mounted from the local node, which may be 248-21 or 248-22 depending on the scheduler

path: /run/docker.sock # sharing this socket lets the Docker client in the container talk to the host's Docker daemon

type: ''

containers:

- name: jenkins # container name

image: harbor.zhanzhk.com/infra/jenkins:v2.282 # image to run

imagePullPolicy: IfNotPresent # image pull policy, one of three: Always (always pull from the private Harbor registry, even if the image exists locally), Never (never pull, only use the local image), IfNotPresent (pull from Harbor only when the image is not present locally)

ports:

- containerPort: 8080 # the container listens on 8080, the Jenkins port

protocol: TCP

env: # environment variables, same as docker -e

- name: JAVA_OPTS

value: -Xmx512m -Xms512m # maximum heap of 512 MB; Jenkins is resource-hungry

volumeMounts: # where the volumes are mounted

- name: data

mountPath: /var/jenkins_home # where the NFS path /data/nfs-volume/jenkins_home is mounted in the container

- name: docker

mountPath: /run/docker.sock # where the host's /run/docker.sock is mounted in the container

imagePullSecrets: # another important Deployment field: the harbor secret created in the infra namespace; required when pulling from the private Harbor registry, otherwise the pull fails

- name: harbor # the secret of type docker-registry named harbor

securityContext: # which account starts the container

runAsUser: 0 # start as root; 0 is root's uid

strategy: # defaults

type: RollingUpdate # upgrade Jenkins with a rolling update

rollingUpdate:

maxUnavailable: 1

maxSurge: 0

revisionHistoryLimit: 7 # keep 7 revisions for rollback

progressDeadlineSeconds: 600 # how long a rollout may run without succeeding before it is marked failed; Jenkins is resource-hungry

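--------------------------------------Attention!-------------------------------

Note: if you built the image the second way in step 10 (without get-docker.sh), the host's Docker paths must be mounted as well, i.e. the volumes named docker1, docker2 and docker3; read the YAML below carefully.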

kind: Deployment

apiVersion: apps/v1

metadata:

name: jenkins

namespace: infra

labels:

name: jenkins

spec:

replicas: 1

selector:

matchLabels:

name: jenkins

template:

metadata:

labels:

app: jenkins

name: jenkins

spec:

volumes:

- name: data

nfs:

server: hdss248-200

path: /data/nfs-volume/jenkins_home

- name: docker

hostPath:

path: /run/docker.sock

type: ''

- name: docker1

hostPath:

path: /var/run/docker.sock

type: ''

- name: docker2

hostPath:

path: /usr/bin/docker

type: ''

- name: docker3

hostPath:

path: /etc/docker

type: ''

containers:

- name: jenkins

image: harbor.zhanzhk.com/infra/jenkins:v2.282

imagePullPolicy: IfNotPresent

ports:

- containerPort: 8080

protocol: TCP

env:

- name: JAVA_OPTS

value: -Xmx512m -Xms512m

volumeMounts:

- name: data

mountPath: /var/jenkins_home

- name: docker

mountPath: /run/docker.sock

- name: docker1

mountPath: /var/run/docker.sock

- name: docker2

mountPath: /usr/bin/docker

- name: docker3

mountPath: /etc/docker

imagePullSecrets:

- name: harbor

securityContext:

runAsUser: 0

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

maxSurge: 1

revisionHistoryLimit: 7

progressDeadlineSeconds: 600

- Prepare the resource manifest: vi svc.yaml

kind: Service

apiVersion: v1

metadata:

name: jenkins

namespace: infra

spec:

ports:

- protocol: TCP

port: 80

targetPort: 8080

selector:

app: jenkins

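Note: port: 80 listens on port 80 of the ClusterIP (the virtual cluster network); in effect, port 8080 inside the container is mapped to port 80 on the cluster network

- Prepare the resource manifest: vi ingress.yaml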

kind: Ingress

apiVersion: extensions/v1beta1

metadata:

name: jenkins

namespace: infra

spec:

rules:

- host: jenkins.zhanzhk.com

http:

paths:

- path: /

backend:

serviceName: jenkins

servicePort: 80

- 创建文件夹:

mkdir -p /data/nfs-volume/jenkins_home

--------------------------------------切回192.168.248.21-------------------------------

- 启动jenkins

kubectl apply -f http://k8s-yaml.zhanzhk.com//jenkins/dp.yaml

kubectl apply -f http://k8s-yaml.zhanzhk.com/jenkins/svc.yaml

kubectl apply -f http://k8s-yaml.zhanzhk.com/jenkins/ingress.yaml

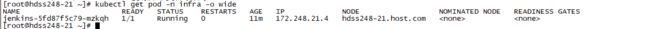

- 查看启动状态:kubectl get pod -n ifra -o wide

--------------------------------------切回192.168.248.200-------------------------------

--------------------------------------切回192.168.248.11-------------------------------

- 解析域名:

vi /var/named/zhanzhk.com.zone

$ORIGIN zhanzhk.com.

@ IN SOA dns.zhanzhk.com. dnsadmin.zhanzhk.com. (

2021050702; serial

10800;refresh(3 hours)

900; retry(15 minutes)

604800; expire(1 week)

86400;minimum(1 day)

)

NS dns.zhanzhk.com.

$TTL 60;1minute

dns A 192.168.248.11

harbor A 192.168.248.200

k8s-yaml A 192.168.248.200

traefik A 192.168.248.10

dashboard A 192.168.248.10

zk1 A 192.168.248.11

zk2 A 192.168.248.12

zk3 A 192.168.248.21

jenkins A 192.168.248.10

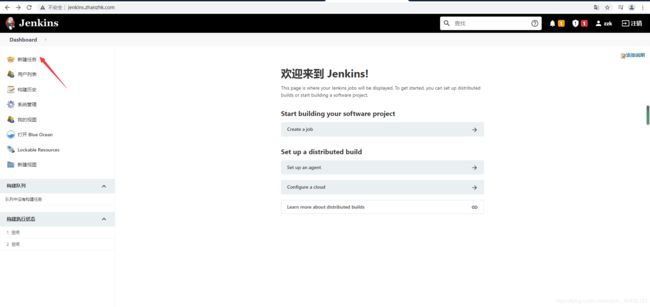

systemctl restart named-->dig -t A jenkins.zhanzhk.com @192.168.248.11 +short 192.168.248.10- 访问配置

jenkins:jenkins.zhanzhk.com

- 校验

jenkins是否成功配置了docker客户端

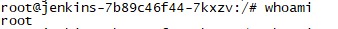

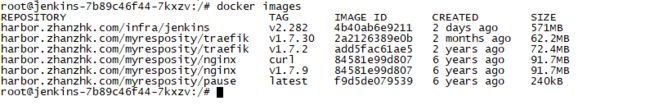

30.1 进入jenkins容器:kubectl get pod -n infra-->kubectl exec -ti jenkins-7b89c46f44-7kxzv -n infra /bin/bash

30.2 查看用户:whoami

30.3 运行的时区是否是东八区:date

30.4 验证docker引擎:docker images,可以看到查到的镜像就是宿主机上面的镜像,所以配置成功

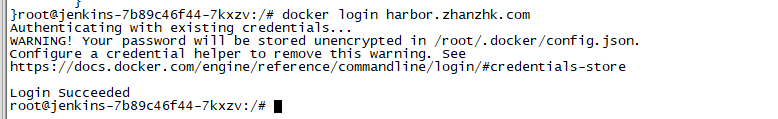

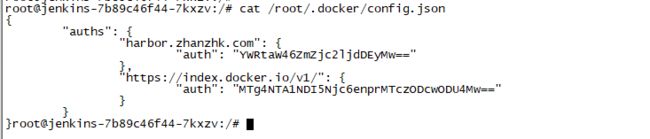

30.5 连接harbor私有仓库:docker login harbor.zhanzhk.com

之所以可以登录,可以查看:cat /root/.docker/config.json

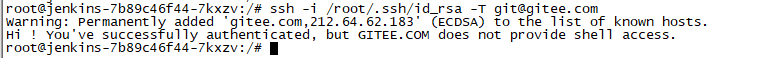

30.6 测试连接git:ssh -i /root/.ssh/id_rsa -T [email protected]

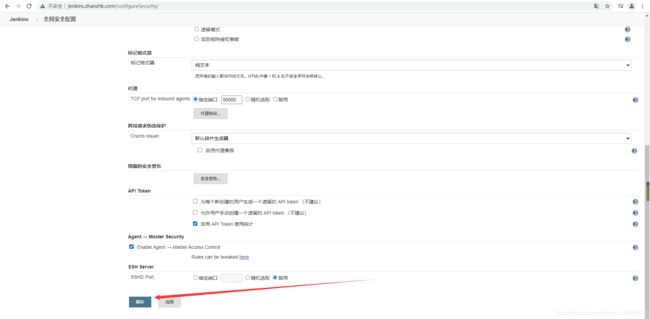

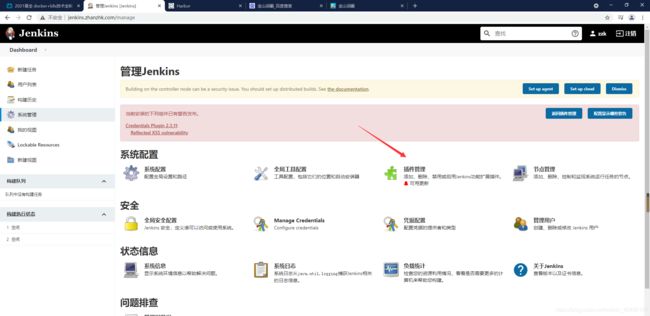

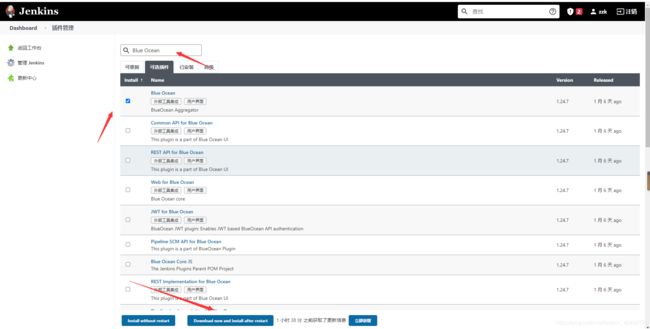

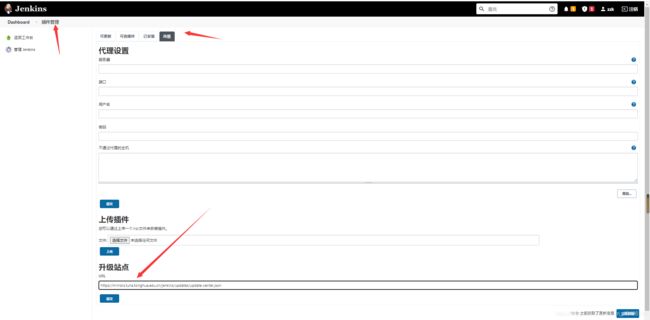

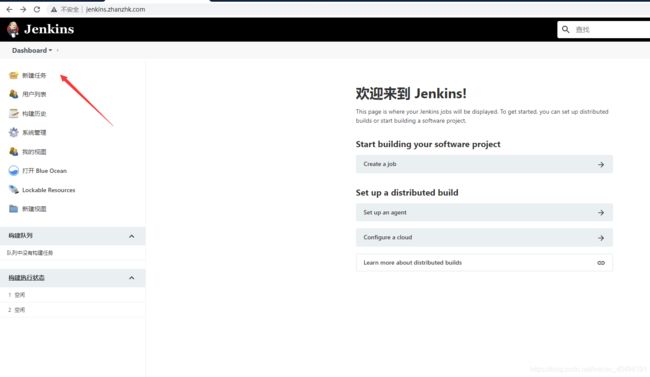

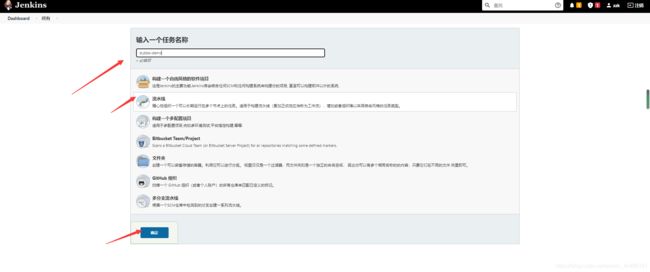

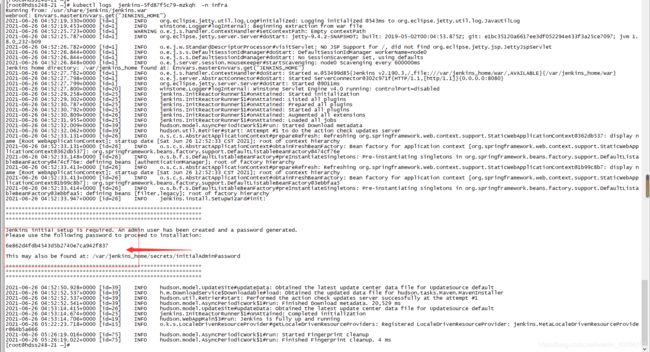

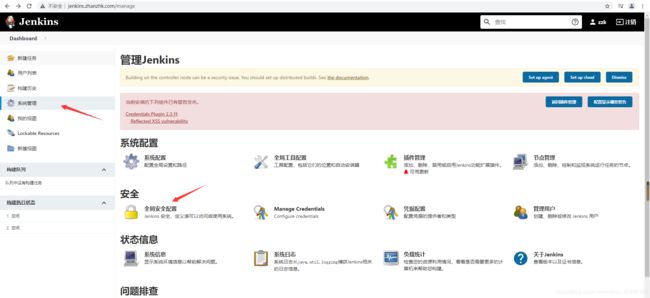

四、 配置jenkins

- 登录

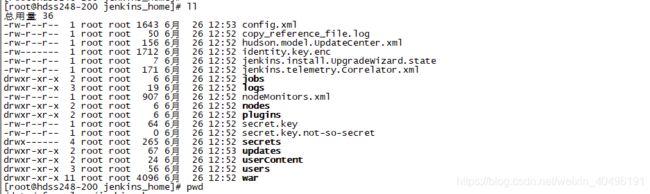

密钥获取有两种方式:

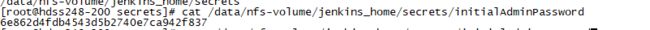

回到192.168.248.200:cat /data/nfs-volume/jenkins_home/secrets/initialAdminPassword

或者查看日志可以获取:kubectl logs jenkins-5fd87f5c79-mzkqh -n infra

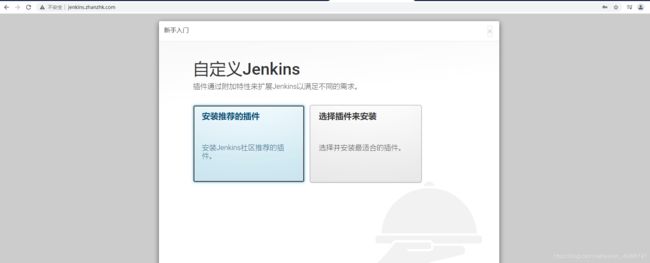

- 输入密钥到下一步

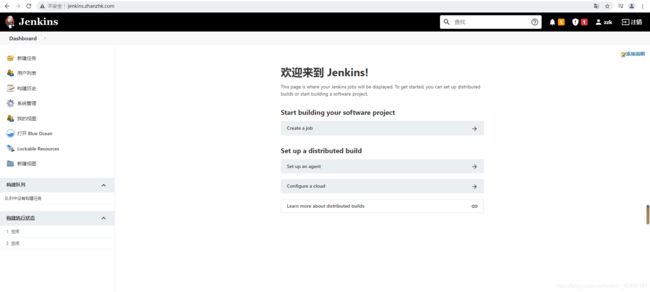

- 安装推荐的插件,一直下一步。账户密码设置为

admin/ffcsict123,设置完,进入首页

注:忘记密码怎么办

1)cd /data/nfs-volume/jenkins_home/users/admin_7164446690811246033

2)cat config.xml |grep password

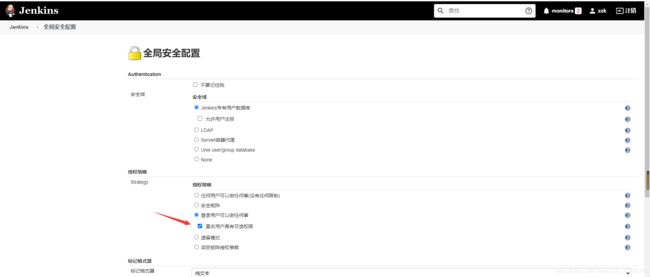

passwordHash>#jbcrypt:$2a$10$cDiZoCKO9VgSd0Lb.dpJNunH4K0k7d5xm9FH/ZUAc4JeAuiyJ7rE63)将2得到的红色部分改为#jbcrypt:$2a$10$MiIVR0rr/UhQBqT.bBq0QehTiQVqgNpUGyWW2nJObaVAM/2xSQdSq,密码即被重置为123456 - 配置安全策略,设置匿名用户具有可读权限

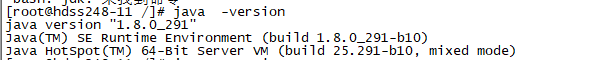

五、 安装maven

在192.168.248.200服务器上操作

- 切换目录:

cd /opt/src路径下 - 下载

maven3.6.1:wget https://archive.apache.org/dist/maven/maven-3/3.6.1/binaries/apache-maven-3.6.1-bin.tar.gz - 去

192.168.248.11上面查看jdk版本来命名maven解压路径:java -version

- 创建文件夹:

mkdir -p /data/nfs-volume/jenkins_home/maven-3.6.1-8u291 - 解压:

tar -xvf apache-maven-3.6.1-bin.tar.gz -C /data/nfs-volume/jenkins_home/maven-3.6.1-8u291 - 进入目录:

cd /data/nfs-volume/jenkins_home/maven-3.6.1-8u291 - 将解压文件挪出来:

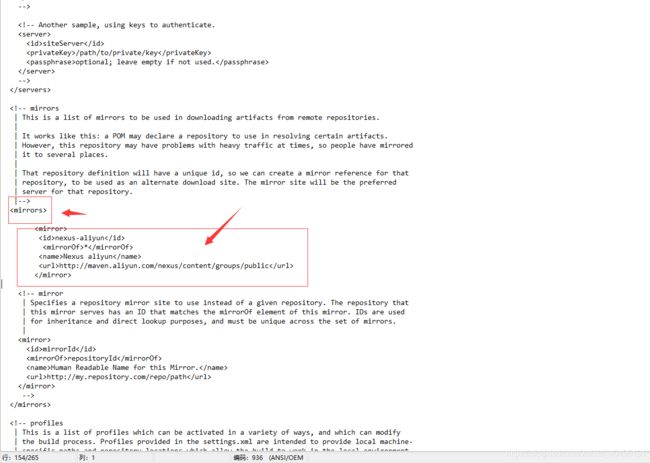

mv apache-maven-3.6.1/ ../—>mv ../apache-maven-3.6.1/* . - 修改下载源:

vi /data/nfs-volume/jenkins_home/maven-3.6.1-8u291/conf/settings.xml

nexus-aliyun

*

Nexus aliyun

http://maven.aliyun.com/nexus/content/groups/public

- 如果需要公司项目较久,需要低版本的jdk支持,如1.7.则可以这么解决:二进制部署的方式安装jdk

这里不做实操,说逻辑

9.1 将二进制包下载到/opt/src

9.2 解压到/data/nfs-volume/jenkins_home

9.3vi /data/nfs-volume/jenkins_home/maven-3.6.1-8u291/bin/mvn

加上一条JAVA_HOME=’/data/nfs-volume/jenkins_home/jdk7‘

注:因为/data/nfs-volume/jenkins_home是挂载到jenkins容器里面,所以改了这里就相当于,jenkins里面的maven使用的是jdk1.7

VI. Building the base image for the Dubbo microservices

On 192.168.248.200

Note: the images built later need a base image that already contains the environment they depend on, such as the JRE, so that it does not have to be installed again for every image.

- Pull the image: docker pull docker.io/stanleyws/jre8:8u112

- Tag it: docker tag fa3a085d6ef1 harbor.zhanzhk.com/myresposity/jre:8u112

- Push it to the registry: docker push harbor.zhanzhk.com/myresposity/jre:8u112

- Create the directory: mkdir /data/dockerfile/jre8, then cd /data/dockerfile/jre8

- Create the Dockerfile: vi Dockerfile

FROM harbor.zhanzhk.com/myresposity/jre:8u112

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' >/etc/timezone

ADD config.yml /opt/prom/config.yml

ADD jmx_javaagent-0.3.1.jar /opt/prom/

ADD entrypoint.sh /entrypoint.sh

WORKDIR /opt/project_dir

RUN ["chmod", "+x", "/entrypoint.sh"]

CMD ["/entrypoint.sh"]

FROM ##继承镜像

RUN ##修改时区 改成东八区

ADD ## 普罗米修斯监控java程序用到的,从config.yml中匹配的规则rules:--- pattern: '.*'

ADD ##通过普罗米修斯通过jmx_javaagent-0.3.1.jar的jar包收集这个程序的的jvm的使用状况,jmx_javaagent-0.3.1.jar只需wget就行

ADD ##docker运行时默认的启动脚本

WORKDIR ##工作目录

RUN ["chmod", "+x", "/entrypoint.sh"] ##赋予权限

CMD ["/entrypoint.sh"]

- 下载

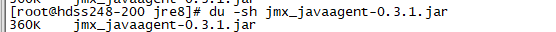

jmx_javaagent-0.3.1.jar:wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

6.1 查看:du -sh jmx_javaagent-0.3.1.jar

- 创建

config文件:vi config.yml

rules:

- pattern: '.*'

- 创建启动脚本:

vi entrypoint.sh

#!/bin/sh

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=${C_OPTS}

JAR_BALL=${JAR_BALL}

exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}

#!/bin/sh

M_OPTS ## Java start-up options. -Duser.timezone sets the JVM time zone. -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar loads the agent downloaded above, which collects JVM metrics. $(hostname -i) is the container IP, i.e. the pod IP, so the pod IP is passed to the agent. ${M_PORT:-"12346"} is the port: if the M_PORT environment variable is not set, the default 12346 is used. /opt/prom/config.yml holds the matching rules.

C_OPTS=${C_OPTS} ## takes C_OPTS from the container's environment; the value is supplied by the cluster's resource manifest

JAR_BALL=${JAR_BALL} ## takes JAR_BALL from the container's environment; the value is supplied by the cluster's resource manifest

exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL} ## exec replaces the shell with the java process
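The ${M_PORT:-"12346"} default is the part of entrypoint.sh that is easiest to misread, so here is a small sketch that isolates it. The function build_m_opts and the sample IP are hypothetical; the IP is passed in as an argument only so the example runs anywhere, whereas the real script uses $(hostname -i).

```shell
#!/bin/sh
# Build the -javaagent option the same way entrypoint.sh does.
# Assumption: the pod IP is given as $1 instead of $(hostname -i).
build_m_opts() {
    pod_ip="$1"
    # ${M_PORT:-12346} expands to $M_PORT when it is set and non-empty,
    # otherwise to the default 12346
    echo "-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=${pod_ip}:${M_PORT:-12346}:/opt/prom/config.yml"
}

unset M_PORT
build_m_opts 172.7.21.5    # no M_PORT: the agent binds to port 12346

M_PORT=9404
build_m_opts 172.7.21.5    # M_PORT set: the agent binds to port 9404
```

In a Deployment, M_PORT would be supplied through the env list in the same way JAR_BALL is.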

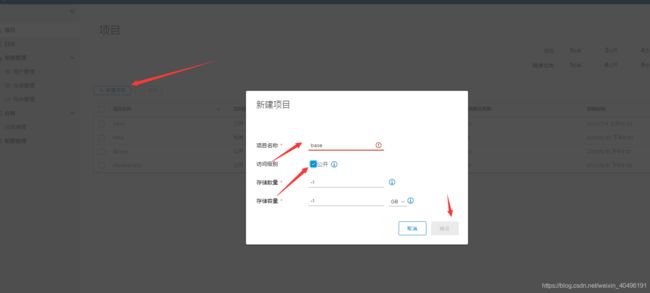

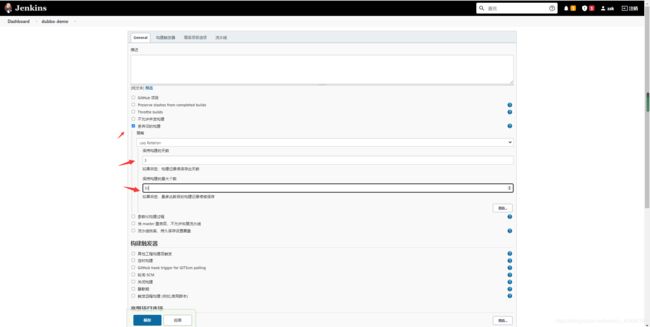

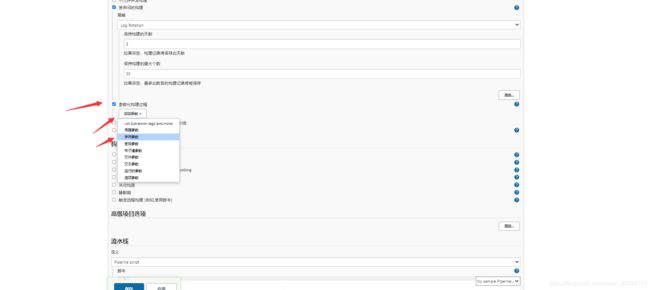

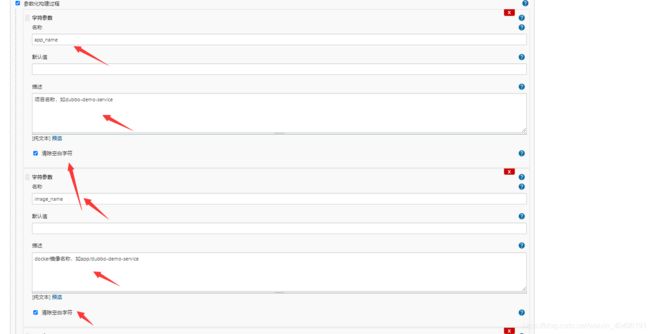

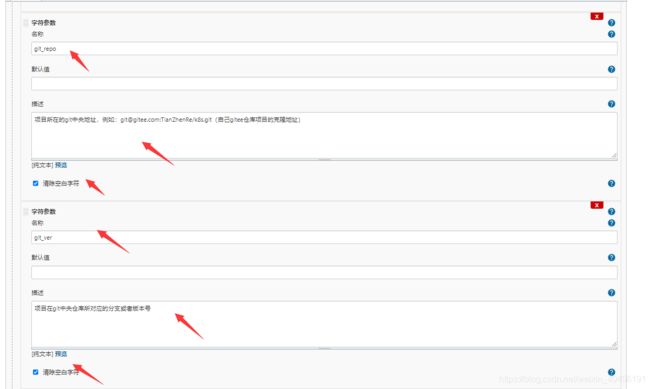

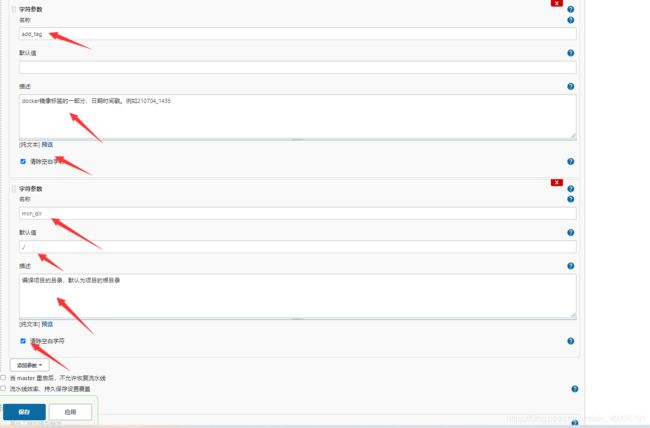

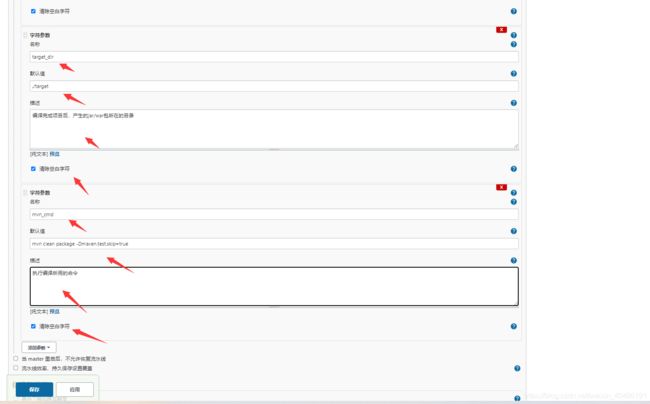

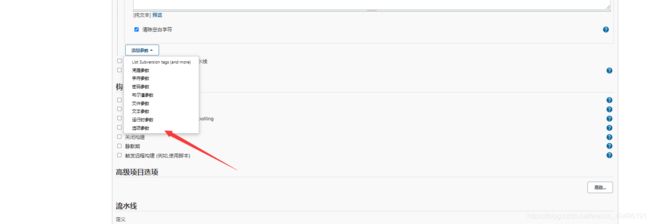

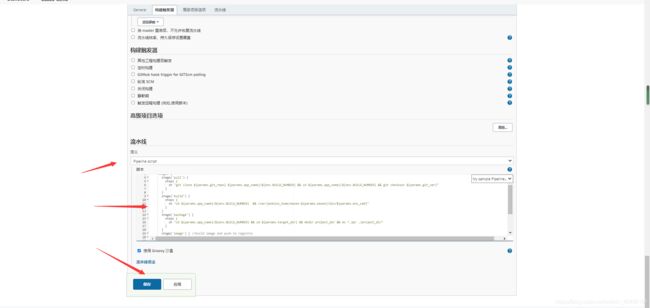

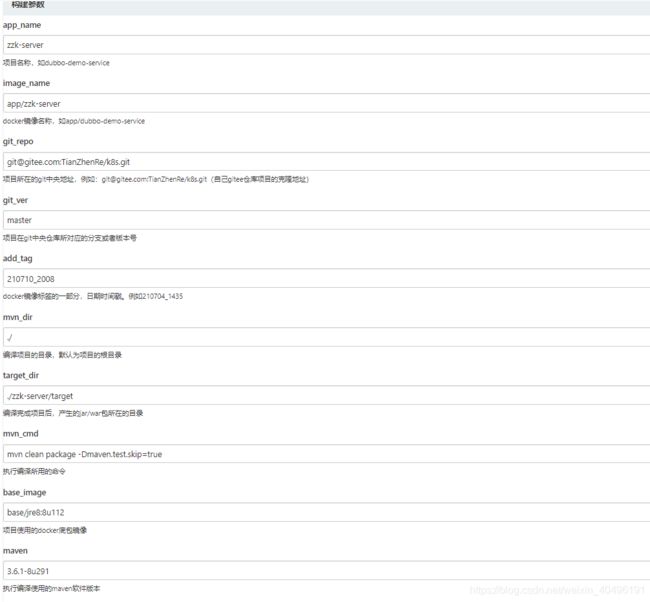

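VII. Building and delivering the server (provider) service with Jenkins

Note: I set up a simple Dubbo project made of zk, zzk-server and zzk-web, which are the registry, the data service and the web front end respectively.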

pipeline {

agent any

stages {

stage('pull') {

steps {

sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"

}

}

stage('build') {

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"

}

}

stage('package') {

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && mv *.jar ./project_dir"

}

}

stage('image') { //build image and push to registry

steps {

writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.zhanzhk.com/${params.base_image}

ADD ${params.target_dir}/project_dir /opt/project_dir"""

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.zhanzhk.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.zhanzhk.com/${params.image_name}:${params.git_ver}_${params.add_tag}"

}

}

}

}

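stages: the steps, i.e. stage('pull'), stage('build'), stage('package') and stage('image')

${env.BUILD_NUMBER}: built-in Jenkins environment variable

${params.git_repo}: code repository

${params.app_name}: project name

${params.git_ver}: branch to build

${params.maven}: the Maven version to use, i.e. the tenth parameter

${params.target_dir}: directory that holds the jar/war produced by the build

${params.base_image}: Docker base image of the project

${params.image_name}: image name

${params.add_tag}: part of the image tag

${params.mvn_cmd}: command used to build

1. stage('pull'): fetch the code

Script: clone the project into the given location, enter the directory and check out the chosen branch.

2. stage('build'): compile the code

Script: enter the directory and run the Maven command given earlier: mvn clean package -Dmaven.test.skip=true

3. stage('package'): package the code

Script: enter the directory that holds the jar/war, create project_dir and move all jar files into it.

4. stage('image'): build the image

First: write a Dockerfile that inherits from our base image and adds the jars (project_dir goes to /opt/project_dir)

Script: enter the directory, build the image and push it to the registry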
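How the image stage names the image is worth spelling out, because the same string has to be typed into the Deployment manifest afterwards. A minimal sketch follows; the function image_ref is hypothetical, and the argument values are the ones that appear in this walkthrough.

```shell
#!/bin/sh
# Compose the image reference that the 'image' stage builds and pushes:
# harbor.zhanzhk.com/<image_name>:<git_ver>_<add_tag>
image_ref() {
    image_name="$1"   # e.g. app/zzk-server
    git_ver="$2"      # branch name, e.g. master
    add_tag="$3"      # extra tag part, e.g. a date_time stamp
    echo "harbor.zhanzhk.com/${image_name}:${git_ver}_${add_tag}"
}

image_ref app/zzk-server master 210710_2008
```

With these arguments the result is the image value used in the zzk-server dp.yaml.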

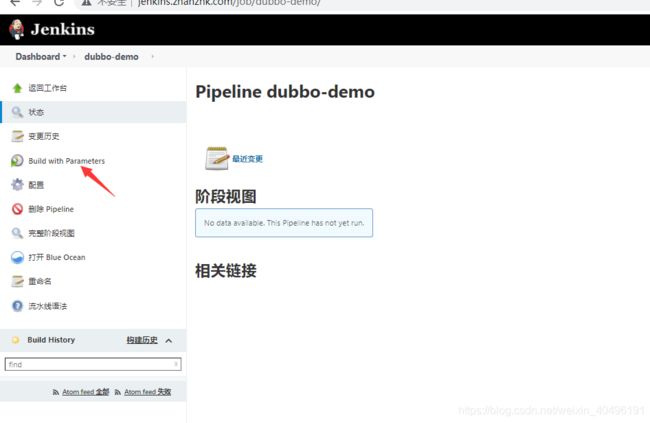

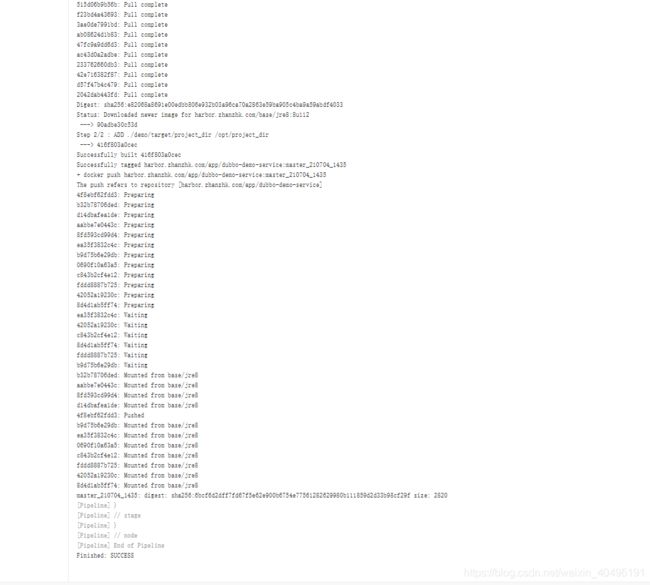

Click build to build the image

Note: one big pitfall: the git address must be the SSH address, otherwise the build fails with

fatal: could not read Username for 'https://gitee.com': No such device or a.....

7. When the build finishes, check the image in the Harbor registry

----------------------on 192.168.248.200-------------------------------

8. cd /data/k8s-yaml/, then mkdir zzk-server, then cd zzk-server

9. vi dp.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

name: zzk-server

namespace: app

labels:

name: zzk-server

spec:

replicas: 1

selector:

matchLabels:

name: zzk-server

template:

metadata:

labels:

app: zzk-server

name: zzk-server

spec:

containers:

- name: zzk-server

image: harbor.zhanzhk.com/app/zzk-server:master_210710_2008

command: [ "/bin/bash", "-c", "--" ]

args: [ "while true; do sleep 30; done;" ]

ports:

- containerPort: 20880

protocol: TCP

env:

- name: JAR_BALL

value: zzk-server.jar

imagePullPolicy: IfNotPresent

imagePullSecrets:

- name: harbor

restartPolicy: Always

terminationGracePeriodSeconds: 30

securityContext:

runAsUser: 0

schedulerName: default-scheduler

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

maxSurge: 1

revisionHistoryLimit: 7

progressDeadlineSeconds: 600

kind: Deployment

apiVersion: apps/v1

metadata:

name: zzk-server

namespace: app ## namespace

labels:

name: zzk-server

spec:

replicas: 1

selector:

matchLabels:

name: zzk-server

template:

metadata:

labels: ## labels

app: zzk-server

name: zzk-server

spec:

containers:

- name: zzk-server

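image: harbor.zhanzhk.com/app/zzk-server:master_210710_2008 ## image to run

command: [ "/bin/bash", "-c", "--" ] ## debugging override: together with args it keeps the container alive without running /entrypoint.sh; remove both lines for a normal start

args: [ "while true; do sleep 30; done;" ]

ports: ## expose the RPC port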

- containerPort: 20880

protocol: TCP

env:

- name: JAR_BALL

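value: zzk-server.jar ## jar file passed to the container when the Dubbo service starts

imagePullPolicy: IfNotPresent ## pull from the registry only if the image is not present locally

imagePullSecrets: ## required; refers to the secret in the app namespace, without it the private image under app/ cannot be pulled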

- name: harbor

restartPolicy: Always

terminationGracePeriodSeconds: 30

securityContext:

runAsUser: 0

schedulerName: default-scheduler

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

maxSurge: 1

revisionHistoryLimit: 7

progressDeadlineSeconds: 600

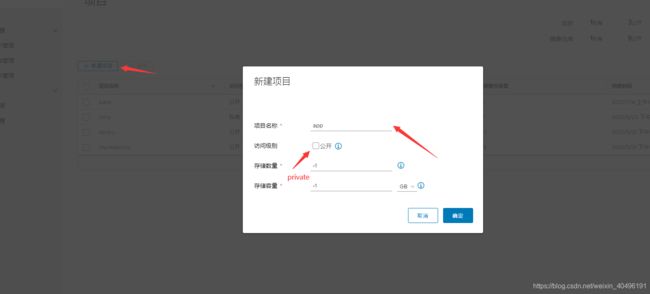

----------------------在192.168.248.21服务器操作-------------------------------

- 创建名称空间:

kubectl create ns app - 拉取

harbor.zhanzhk.com/app/私有仓库镜像需要create secret:kubectl create secret docker-registry harbor --docker-server=harbor.zhanzhk.com --docker-username=admin --docker-password=ffcsict123 -n app

----------------------在192.168.248.11服务器操作-------------------------------

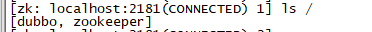

- 在交付到k8s之前查看zookeeper:

/opt/zookeeper/bin/zkServer.sh status

注:没启动的需要启动:/opt/zookeeper/bin/zkServer.sh start - 登录zookeeper:

/opt/zookeeper/bin/zkCli.sh -server localhost:2181

13.1 查看根路径:ls /

----------------------在192.168.248.21服务器操作------------------------------- - 运行zzk-server服务:

kubectl apply -f http://k8s-yaml.zhanzhk.com/zzk-server/dp.yaml

注1:如果启动出错,记得要kubectl describe或kubectl logs -f排查错误,我就是项目pom依赖没指定启动文件,导致报错no main manifest attribute

注2:附上我本地项目的yaml配置,主要是注册中心这块

server:

port: 8080

spring:

application:

name: zzk-web

dubbo:

application:

name: zzk-web

owner: zhanzhk

logger: slf4j

registry:

address: zookeeper://zk1.zhanzhk.com:2181/,zookeeper://zk2.zhanzhk.com:2181/,zookeeper://zk3.zhanzhk.com:2181/

timeout: 40000

file: ${user.home}/.dubbo/zzk-web.cache

annotation:

package: zzk

consumer:

timeout: 60000

retries: 3

----------------------在192.168.248.11服务器操作-------------------------------

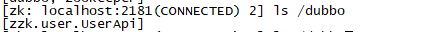

- 再次查看zookeeper状态:

ls /

- 查看:

ls /dubbo

- 回顾流水线,一共就干了四件事

1. stage('pull'):拉取代码

脚本:克隆项目到指定的位置。并且进入目录,check out到某个对应的分支。

2. stage('build'):编译代码

脚本:进入目录,执行mavne脚本,即前面的:mvn clean package -Dmaven.test.skip=true

3. stage('package'):打包代码

脚本:进入到jar/war包所在的目录,创建project_dir目录并且将所有的jar包都挪进去。

4. stage('image') :打包镜像

首先:写一个Dockerfile,继承自定义的底层镜像,将jar包移过去(project_dir移到/opt/project_dir下)

脚本:进入目录,打包镜像,推送仓库

- 补充(可忽略)

在pull代码前,可以用python写一个脚本,验证库里是否有底层基本镜像。

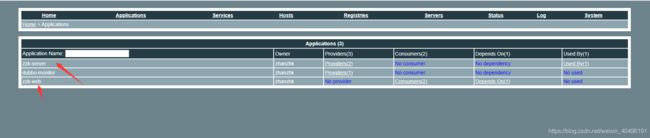

八、交付Monitor到K8S(zk监控者)

在192.168.248.200操作

注:Monitor主要就是监控zk注册的情况。 目前认知度两个:dubbo-admin 、 dubbo-Monitor,本次主要使用 dubbo-Monitor

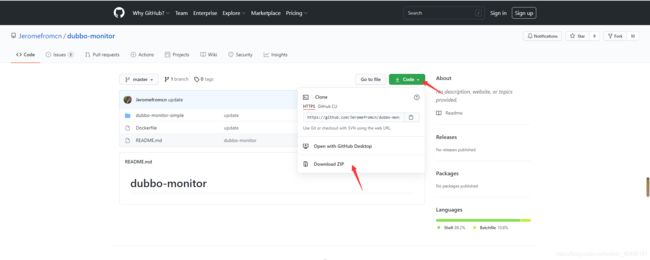

- 下载地址:https://github.com/Jeromefromcn/dubbo-monitor

cd /opt/src/—>rz上传下载的包- 安装解压命令:

yum install unzip -y - 解压:

unzip dubbo-monitor-master.zip - 改名字:

mv dubbo-monitor-master dubbo-monitor - 将包拷贝到

/data/dockerfile/底下:

6.1cd /opt/src/

6.2cp -a dubbo-monitor /data/dockerfile/ - 进入目录:

cd /data/dockerfile//dubbo-monitor/dubbo-monitor-simple/conf - 编辑:

vi dubbo_origin.properties

dubbo.container=log4j,spring,registry,jetty

dubbo.application.name=dubbo-monitor

dubbo.application.owner=zhanzhk

dubbo.registry.address=zookeeper://zk1.zhanzhk.com:2181?backup=zk2.zhanzhk.com:2181,zk3.zhanzhk.com:2181

dubbo.protocol.port=20880

dubbo.jetty.port=8080

dubbo.jetty.directory=/dubbo-monitor-simple/monitor

dubbo.charts.directory=/dubbo-monitor-simple/charts

dubbo.statistics.directory=/dubbo-monitor-simple/statistics

dubbo.log4j.file=logs/dubbo-monitor-simple.log

dubbo.log4j.level=WARN

dubbo.container=log4j,spring,registry,jetty

dubbo.application.name=dubbo-monitor ## application name, any value

dubbo.application.owner=zhanzhk ## any value

dubbo.registry.address= ## registry address

dubbo.protocol.port=20880 ## the RPC port is 20880 throughout

dubbo.jetty.port=8080 ## HTTP port

dubbo.jetty.directory=/dubbo-monitor-simple/monitor ## a fixed directory

dubbo.charts.directory=/dubbo-monitor-simple/charts ## a fixed directory

dubbo.statistics.directory=/dubbo-monitor-simple/statistics ## a fixed directory

dubbo.log4j.file=logs/dubbo-monitor-simple.log

dubbo.log4j.level=WARN
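The registry address above uses the "primary plus backup list" form, which is easy to get wrong by hand. The sketch below assembles it from a list of host:port pairs; the function dubbo_registry_address is hypothetical and only does string formatting.

```shell
#!/bin/sh
# Build a Dubbo registry address in the "primary?backup=..." form.
# Arguments: one or more host:port pairs; the first one is the primary.
dubbo_registry_address() {
    primary="$1"
    shift
    backups=""
    for hp in "$@"; do
        # join the remaining hosts with commas
        backups="${backups:+${backups},}${hp}"
    done
    if [ -n "$backups" ]; then
        echo "zookeeper://${primary}?backup=${backups}"
    else
        echo "zookeeper://${primary}"
    fi
}

dubbo_registry_address zk1.zhanzhk.com:2181 zk2.zhanzhk.com:2181 zk3.zhanzhk.com:2181
```

Called with the three ZooKeeper hosts of this setup, it prints the value used for dubbo.registry.address.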

-

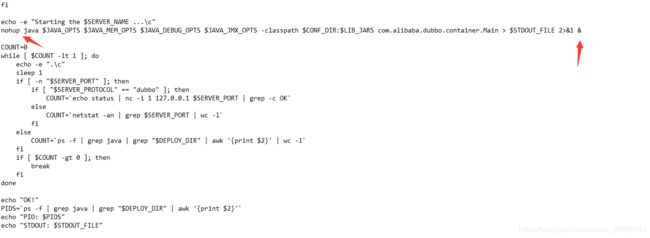

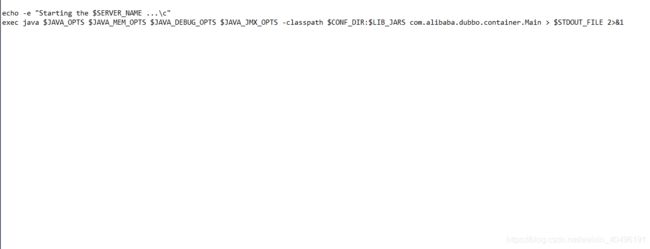

修改start.sh:

cd /data/dockerfile/dubbo-monitor/dubbo-monitor-simple/bin-->vi start.sh

10.1 修改jvm参数,按比例缩小

10.2 由于nohup java不能保证DOCKER一直保持RUNING的生命周期,所以默认提供的Dockerfile无法启动,需要修改启动参数,并且让 java 进程在前台运行,删除java之后的所有行。

注:也可以执行命令实现:sed -r -i -e '/^nohup/{p;:a;N;$!ba;d}' /data/dockerfile/dubbo-monitor/dubbo-monitor-simple/bin/start.sh && sed -r -i -e "s%^nohup(.*)%exec\1%" /data/dockerfile/dubbo-monitor/dubbo-monitor-simple/bin/start.sh -
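To see what the two sed commands do before running them on the real script, they can be tried on a throwaway file. This is a sketch under two assumptions: GNU sed is available (as on CentOS), and the sample file content is made up to mimic the shape of start.sh, not copied from it.

```shell
#!/bin/sh
# Apply the two sed edits to a temporary sample file and print the result.
demo_start_sh() {
    f="$(mktemp)"
    cat > "$f" <<'EOF'
#!/bin/bash
JAVA_OPTS="-Xmx128m"
nohup java $JAVA_OPTS -classpath conf:lib/* com.alibaba.dubbo.container.Main > stdout.log 2>&1 &
echo "waiting for start"
echo OK
EOF
    # 1) print the nohup line, then collect and delete everything after it
    sed -r -i -e '/^nohup/{p;:a;N;$!ba;d}' "$f"
    # 2) replace the leading "nohup" with "exec"
    sed -r -i -e "s%^nohup(.*)%exec\1%" "$f"
    cat "$f"
    rm -f "$f"
}

demo_start_sh
```

The result keeps the lines up to the java command and turns nohup into exec. A trailing & on that line, if present, is not touched by these commands and still has to be removed by hand for java to stay in the foreground.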

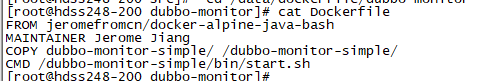

查看dockerfile文件:

cd /data/dockerfile/dubbo-monitor-->cat Dockerfile

-

直接build镜像:

docker build . -t harbor.zhanzhk.com/infra/dubbo-monitor:latest -

推送到仓库:

docker push harbor.zhanzhk.com/infra/dubbo-monitor:latest -

创建资源清单文件夹:

mkdir /data/k8s-yaml/dubbo-monitor-->cd /data/k8s-yaml/dubbo-monitor -

创建资源清单:

vi dp.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

name: dubbo-monitor

namespace: infra

labels:

name: dubbo-monitor

spec:

replicas: 1

selector:

matchLabels:

name: dubbo-monitor

template:

metadata:

labels:

app: dubbo-monitor

name: dubbo-monitor

spec:

containers:

- name: dubbo-monitor

image: harbor.zhanzhk.com/infra/dubbo-monitor:latest

ports:

- containerPort: 8080

protocol: TCP

- containerPort: 20880

protocol: TCP

imagePullPolicy: IfNotPresent

imagePullSecrets:

- name: harbor

restartPolicy: Always

terminationGracePeriodSeconds: 30

securityContext:

runAsUser: 0

schedulerName: default-scheduler

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

maxSurge: 1

revisionHistoryLimit: 7

progressDeadlineSeconds: 600

image: harbor.zhanzhk.com/infra/dubbo-monitor:latest

ports: ## two ports are exposed

- containerPort: 8080 ## HTTP

protocol: TCP

- containerPort: 20880 ## RPC

protocol: TCP

- Create the resource manifest: vi service.yaml

kind: Service

apiVersion: v1

metadata:

name: dubbo-monitor

namespace: infra

spec:

ports:

- protocol: TCP

port: 8080

targetPort: 8080

selector:

app: dubbo-monitor

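port: 8080 is the port on the Service's ClusterIP. In principle any port works, because this ClusterIP belongs to this Service alone, but the servicePort in the Ingress must match it

targetPort: 8080 is the port inside the container

- Create the resource manifest: vi ingress.yaml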

kind: Ingress

apiVersion: extensions/v1beta1

metadata:

name: dubbo-monitor

namespace: infra

spec:

rules:

- host: dubbo-monitor.zhanzhk.com

http:

paths:

- path: /

backend:

serviceName: dubbo-monitor

servicePort: 8080

------------------------192.168.248.21操作----------------------

- 应用资源配置清单

kubectl apply -f http://k8s-yaml.zhanzhk.com/dubbo-monitor/dp.yaml

kubectl apply -f http://k8s-yaml.zhanzhk.com/dubbo-monitor/service.yaml

kubectl apply -f http://k8s-yaml.zhanzhk.com/dubbo-monitor/ingress.yaml

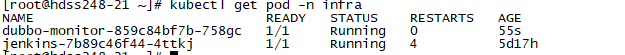

- 查看:

kubectl get pod -n infra

------------------------192.168.248.11操作---------------------- - 解析dns:

vi /var/named/zhanzhk.com.zone

$TTL 600;10minutes

$ORIGIN zhanzhk.com.

@ IN SOA dns.zhanzhk.com. dnsadmin.zhanzhk.com. (

2021050702; serial

10800;refresh(3 hours)

900; retry(15 minutes)

604800; expire(1 week)

86400;minimum(1 day)

)

NS dns.zhanzhk.com.

$TTL 60;1minute

dns A 192.168.248.11

harbor A 192.168.248.200

k8s-yaml A 192.168.248.200

traefik A 192.168.248.10

dashboard A 192.168.248.10

zk1 A 192.168.248.11

zk2 A 192.168.248.12

zk3 A 192.168.248.21

jenkins A 192.168.248.10

dubbo-monitor A 192.168.248.10

- 重启:

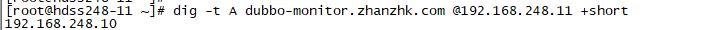

systemctl restart named - 校验:

dig -t A dubbo-monitor.zhanzhk.com @192.168.248.11 +short

- 访问:

dubbo-monitor.zhanzhk.com/

注:解析原理:指到vip,通过7层代理,指给了ingress控制器,ingress找service,service找pod

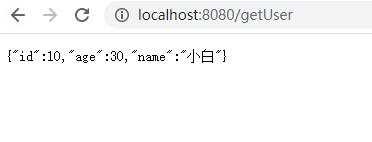

九、交付web(consumer)到K8S

在192.168.248.200服务器操作

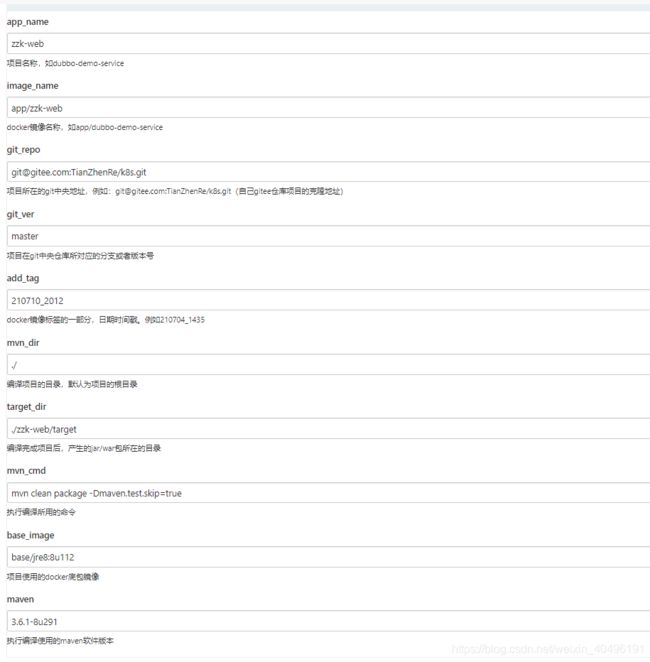

- 我们可以直接利用之前创建的流水线,填充对应参数,继续构建消费者web。

- 点击构建,勾结结束后,我们去harbor仓库查看

- 创建文件夹并进入:

mkdir /data/k8s-yaml/zzk-web-->cd /data/k8s-yaml/zzk-web - 编写资源配置清单:

vi dp.yaml

kind: Deployment

apiVersion: apps/v1

metadata:

name: zzk-web

namespace: app

labels:

name: zzk-web

spec:

replicas: 1

selector:

matchLabels:

name: zzk-web

template:

metadata:

labels:

app: zzk-web

name: zzk-web

spec:

containers:

- name: zzk-web

image: harbor.zhanzhk.com/app/zzk-web:master_210710_2012

ports:

- containerPort: 8080

protocol: TCP

- containerPort: 20880

protocol: TCP

env:

- name: JAR_BALL

value: zzk-web.jar

imagePullPolicy: IfNotPresent

imagePullSecrets:

- name: harbor

restartPolicy: Always

terminationGracePeriodSeconds: 30

securityContext:

runAsUser: 0

schedulerName: default-scheduler

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

maxSurge: 1

revisionHistoryLimit: 7

progressDeadlineSeconds: 600

- Write the resource manifest: vi service.yaml

kind: Service

apiVersion: v1

metadata:

name: zzk-web

namespace: app

spec:

ports:

- protocol: TCP

port: 8080

targetPort: 8080

selector:

app: zzk-web

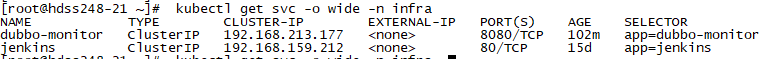

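Note: dubbo-monitor's port 8080 listens on the ClusterIP 192.168.217.177, so it does not matter which port the dubbo-monitor Service uses: every port on 192.168.217.177 is free, because that address belongs to dubbo-monitor alone

kubectl get svc -o wide -n infra

- Write the resource manifest: vi ingress.yaml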

kind: Ingress

apiVersion: extensions/v1beta1

metadata:

name: zzk-web

namespace: app

spec:

rules:

- host: web.zhanzhk.com

http:

paths:

- path: /

backend:

serviceName: zzk-web

servicePort: 8080

---------------------------------------on 192.168.248.21-------------------------------------------------

- Apply the resource manifests:

kubectl apply -f http://k8s-yaml.zhanzhk.com/zzk-web/dp.yaml

kubectl apply -f http://k8s-yaml.zhanzhk.com/zzk-web/service.yaml

kubectl apply -f http://k8s-yaml.zhanzhk.com/zzk-web/ingress.yaml

---------------------------------------on 192.168.248.11-------------------------------------------------

- Add the DNS record: vi /var/named/zhanzhk.com.zone

$TTL 600;10minutes

$ORIGIN zhanzhk.com.

@ IN SOA dns.zhanzhk.com. dnsadmin.zhanzhk.com. (

2021050702; serial

10800;refresh(3 hours)

900; retry(15 minutes)

604800; expire(1 week)

86400;minimum(1 day)

)

NS dns.zhanzhk.com.

$TTL 60;1minute

dns A 192.168.248.11

harbor A 192.168.248.200

k8s-yaml A 192.168.248.200

traefik A 192.168.248.10

dashboard A 192.168.248.10

zk1 A 192.168.248.11

zk2 A 192.168.248.12

zk3 A 192.168.248.21

jenkins A 192.168.248.10

dubbo-monitor A 192.168.248.10

web A 192.168.248.10
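Before restarting named, it can help to confirm that the record just added is really in the zone text. The following sketch reads zone-file text on stdin and prints the address of one short name; the function zone_a_record is hypothetical and only understands the simple "name A address" lines used in this zone.

```shell
#!/bin/sh
# Print the address of the A record for a short name.
# Input: zone-file text on stdin; $1: the name to look up.
zone_a_record() {
    awk -v name="$1" '$1 == name && $2 == "A" { print $3 }'
}

# Sample input: a subset of the records in the zone above
zone_sample='jenkins A 192.168.248.10
dubbo-monitor A 192.168.248.10
zk3 A 192.168.248.21'

printf '%s\n' "$zone_sample" | zone_a_record zk3
```

Against the real file the call would be zone_a_record web < /var/named/zhanzhk.com.zone; after the restart, dig -t A web.zhanzhk.com @192.168.248.11 +short checks the live answer in the same way as for the earlier records.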