Setting up an etcd cluster with Docker

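I recently needed etcd, so I decided to set one up with Docker and wrote down what I learned along the way. I'm recording it here in the hope that it prompts better ideas from others.

The configuration and code used in this post are at https://gitee.com/bbjg001/darcy_common/tree/master/docker_compose_etcd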

Setting up a single node

docker run -d --name etcdx \
  -p 2379:2379 \
  -p 2380:2380 \
  -e ALLOW_NONE_AUTHENTICATION=yes \
  -e ETCD_ADVERTISE_CLIENT_URLS=http://etcdx:2379 \
  bitnami/etcd:3.5.0

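That brings up a single-node etcd. The environment variable ALLOW_NONE_AUTHENTICATION=yes allows access without a password.

To require a password instead, set the environment variable ETCD_ROOT_PASSWORD=xxxxxx; if neither variable is set, the container will not start.

Test it by operating etcd with etcdctl: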

docker exec -it etcdx bash

etcdctl put name zhangsan

etcdctl get name
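The same put can also be issued over HTTP, because etcd v3 serves a JSON gateway on the client port in which keys and values are base64-encoded. The following is a minimal sketch under assumptions: the node is reachable at http://localhost:2379 (the port published by `-p 2379:2379`), and the helper names `putBody` and `put` are illustrative, not part of the original setup.

```go
package main

import (
	"bytes"
	"encoding/base64"
	"encoding/json"
	"fmt"
	"net/http"
)

// putBody builds the JSON payload for etcd's /v3/kv/put gateway endpoint.
// The gateway expects both key and value as base64 strings.
func putBody(key, value string) ([]byte, error) {
	return json.Marshal(map[string]string{
		"key":   base64.StdEncoding.EncodeToString([]byte(key)),
		"value": base64.StdEncoding.EncodeToString([]byte(value)),
	})
}

// put sends the payload to the given etcd client URL (hypothetical helper).
func put(baseURL, key, value string) error {
	body, err := putBody(key, value)
	if err != nil {
		return err
	}
	resp, err := http.Post(baseURL+"/v3/kv/put", "application/json", bytes.NewReader(body))
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return fmt.Errorf("put failed: %s", resp.Status)
	}
	return nil
}

func main() {
	// Assumed address: the host port published by the docker run command.
	if err := put("http://localhost:2379", "name", "zhangsan"); err != nil {
		fmt.Println("put error:", err)
	}
}
```

This only works as written while authentication is disabled (ALLOW_NONE_AUTHENTICATION=yes); with a root password the gateway would require a token first.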

Setting up an etcd cluster with docker-compose

The docker-compose configuration file is as follows

# docker-compose.cluster.yml
version: "3.0"

networks:
  etcd-net:                  # network
    name: etcd-net
    driver: bridge           # bridge mode
    ipam:
      driver: default
      config:
        - subnet: 192.168.23.0/24
          gateway: 192.168.23.1

volumes:
  etcd1_data:                # name of the data volume kept on the host
    driver: local
  etcd2_data:
    driver: local
  etcd3_data:
    driver: local

# For other etcd settings, see: https://doczhcn.gitbook.io/etcd/index/index-1/configuration
services:
  etcd1:
    image: bitnami/etcd:3.5.0          # image
    container_name: etcd1              # container name, --name
    restart: always                    # always restart
    networks:
      - etcd-net                       # network to join, --network
    ports:                             # port mappings, -p
      - "20079:2379"
      - "20080:2380"
    environment:                       # environment variables, --env
      - ALLOW_NONE_AUTHENTICATION=yes  # allow access without a password
      - ETCD_NAME=etcd1                # name of this etcd member
      - ETCD_INITIAL_ADVERTISE_PEER_URLS=http://etcd1:2380  # peer URLs of this member, advertised to the rest of the cluster
      - ETCD_LISTEN_PEER_URLS=http://0.0.0.0:2380           # URLs to listen on for peer traffic
      - ETCD_LISTEN_CLIENT_URLS=http://0.0.0.0:2379         # URLs to listen on for client traffic
      - ETCD_ADVERTISE_CLIENT_URLS=http://etcd1:2379        # client URLs of this member, advertised to the rest of the cluster
      - ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster             # token identifying the cluster during bootstrap
      - ETCD_INITIAL_CLUSTER=etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380  # initial cluster membership used for bootstrapping
      - ETCD_INITIAL_CLUSTER_STATE=new                      # initial cluster state
    volumes:
      - etcd1_data:/bitnami/etcd       # mounted data volume

  etcd2:
    image: bitnami/etcd:3.5.0
    container_name: etcd2
    restart: always
    networks:
      - etcd-net
    ports:
      - "20179:2379"
      - "20180:2380"
    environment:
      - ALLOW_NONE_AUTHENTICATION=yes
      - ETCD_NAME=etcd2
      - ETCD_INITIAL_ADVERTISE_PEER_URLS=http://etcd2:2380
      - ETCD_LISTEN_PEER_URLS=http://0.0.0.0:2380
      - ETCD_LISTEN_CLIENT_URLS=http://0.0.0.0:2379
      - ETCD_ADVERTISE_CLIENT_URLS=http://etcd2:2379
      - ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
      - ETCD_INITIAL_CLUSTER=etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380
      - ETCD_INITIAL_CLUSTER_STATE=new
    volumes:
      - etcd2_data:/bitnami/etcd

  etcd3:
    image: bitnami/etcd:3.5.0
    container_name: etcd3
    restart: always
    networks:
      - etcd-net
    ports:
      - "20279:2379"
      - "20280:2380"
    environment:
      - ALLOW_NONE_AUTHENTICATION=yes
      - ETCD_NAME=etcd3
      - ETCD_INITIAL_ADVERTISE_PEER_URLS=http://etcd3:2380
      - ETCD_LISTEN_PEER_URLS=http://0.0.0.0:2380
      - ETCD_LISTEN_CLIENT_URLS=http://0.0.0.0:2379
      - ETCD_ADVERTISE_CLIENT_URLS=http://etcd3:2379
      - ETCD_INITIAL_CLUSTER_TOKEN=etcd-cluster
      - ETCD_INITIAL_CLUSTER=etcd1=http://etcd1:2380,etcd2=http://etcd2:2380,etcd3=http://etcd3:2380
      - ETCD_INITIAL_CLUSTER_STATE=new
    volumes:
      - etcd3_data:/bitnami/etcd

  docker-etcdkeeper:
    hostname: etcdkeeper
    container_name: etcdkeeper
    image: evildecay/etcdkeeper
    ports:
      - "28080:8080"
    networks:
      - etcd-net
    depends_on:
      - etcd1
      - etcd2
      - etcd3
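The ETCD_INITIAL_CLUSTER value must be identical on every member and list each member as name=peerURL, which is easy to get wrong when editing three services by hand. As a small sketch, the string can be generated instead; `initialCluster` is a hypothetical helper and assumes, as in the compose file above, that each member's hostname equals its ETCD_NAME.

```go
package main

import (
	"fmt"
	"strings"
)

// initialCluster joins member names into the comma-separated
// name=peerURL list expected by ETCD_INITIAL_CLUSTER.
// Assumption: hostname == member name, plain http peers.
func initialCluster(names []string, peerPort int) string {
	parts := make([]string, 0, len(names))
	for _, n := range names {
		parts = append(parts, fmt.Sprintf("%s=http://%s:%d", n, n, peerPort))
	}
	return strings.Join(parts, ",")
}

func main() {
	fmt.Println(initialCluster([]string{"etcd1", "etcd2", "etcd3"}, 2380))
}
```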

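Start it

docker-compose -f docker-compose.cluster.yml up
docker-compose -f docker-compose.cluster.yml up -d # -d runs in the background instead of printing logs in the foreground

# Note: if you change the docker-compose file and want to start again, clean up the current cluster first
docker-compose -f docker-compose.cluster.yml down # roughly equivalent to docker rm
docker-compose -f docker-compose.cluster.yml down -v # also removes the volumes

Test it

Check the etcd status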

etcdctl --endpoints="192.168.9.109:20079,192.168.9.109:20179,192.168.9.109:20279" --write-out=table endpoint health

# --write-out controls the output format; options include table, json, and others
etcdctl --endpoints="192.168.9.109:20079,192.168.9.109:20179,192.168.9.109:20279" --write-out=table endpoint status

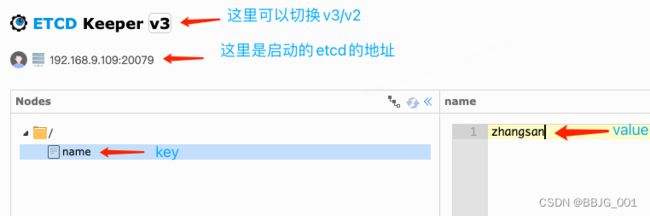
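A similar health check can be scripted without etcdctl, since each member also answers on a /health path of its client port. The sketch below assumes the host IP and published ports used above, and assumes the response carries the flag as a JSON string (as in `{"health":"true"}`); `parseHealth` is an illustrative helper name.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"time"
)

// parseHealth decodes the body returned by etcd's /health endpoint.
// The flag is read as a JSON string ("true"/"false"), not a boolean.
func parseHealth(body []byte) (bool, error) {
	var h struct {
		Health string `json:"health"`
	}
	if err := json.Unmarshal(body, &h); err != nil {
		return false, err
	}
	return h.Health == "true", nil
}

func main() {
	// Host ports published by the compose file; the IP is the example host.
	endpoints := []string{
		"http://192.168.9.109:20079",
		"http://192.168.9.109:20179",
		"http://192.168.9.109:20279",
	}
	client := &http.Client{Timeout: 2 * time.Second}
	for _, ep := range endpoints {
		resp, err := client.Get(ep + "/health")
		if err != nil {
			fmt.Printf("%s: unreachable (%v)\n", ep, err)
			continue
		}
		body, err := io.ReadAll(resp.Body)
		resp.Body.Close()
		if err != nil {
			fmt.Printf("%s: read error (%v)\n", ep, err)
			continue
		}
		ok, err := parseHealth(body)
		fmt.Printf("%s: healthy=%v err=%v\n", ep, ok, err)
	}
}
```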

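This cluster also starts an etcdkeeper along the way. etcdkeeper is a lightweight web client for etcd that supports etcd 2.x and etcd 3.x.

In the configuration file above, the etcdkeeper service is mapped to port 28080 on the host. Open etcdkeeper in a browser at http://192.168.9.109:28080/etcdkeeper/, where 192.168.9.109 is the host's IP.

An etcd cluster with monitoring

This uses the Prometheus stack, which is fairly standard in the industry; for basic usage, have a quick look at "Prometheus container status monitoring". The rough data flow is data source (metrics endpoint) -> Prometheus -> Grafana. In this scenario the data source is etcd, which exposes a metrics endpoint (http://192.168.9.109:20079/metrics).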
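To see what Prometheus will actually scrape, the metrics endpoint can be read directly: it returns plain text with one sample per line. The sketch below pulls a single unlabeled sample out of that text. `metricValue` is an illustrative helper, and `etcd_server_has_leader` is used as the example metric; the URL is the one given above.

```go
package main

import (
	"bufio"
	"fmt"
	"io"
	"net/http"
	"strconv"
	"strings"
	"time"
)

// metricValue scans Prometheus text-format output for an unlabeled
// sample called name and returns its value. Comment lines (# HELP,
// # TYPE) and labeled samples such as name{...} are skipped.
func metricValue(text, name string) (float64, bool) {
	sc := bufio.NewScanner(strings.NewReader(text))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		if line == "" || strings.HasPrefix(line, "#") {
			continue
		}
		fields := strings.Fields(line)
		if len(fields) >= 2 && fields[0] == name {
			v, err := strconv.ParseFloat(fields[1], 64)
			if err != nil {
				return 0, false
			}
			return v, true
		}
	}
	return 0, false
}

func main() {
	client := &http.Client{Timeout: 2 * time.Second}
	resp, err := client.Get("http://192.168.9.109:20079/metrics")
	if err != nil {
		fmt.Println("fetch error:", err)
		return
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Println("read error:", err)
		return
	}
	v, ok := metricValue(string(body), "etcd_server_has_leader")
	fmt.Printf("etcd_server_has_leader=%v found=%v\n", v, ok)
}
```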

The etcd cluster keeps the same configuration as in the previous section; the following services are added to start Prometheus and Grafana

  prometheus:
    image: prom/prometheus
    container_name: prometheus
    hostname: prometheus
    restart: always
    volumes:
      - ./prometheus.yml:/etc/prometheus/prometheus.yml
    ports:
      - "29090:9090"
    networks:
      - etcd-net

  grafana:
    image: grafana/grafana
    container_name: grafana
    hostname: grafana
    restart: always
    ports:
      - "23000:3000"
    networks:
      - etcd-net

The Prometheus configuration file must list the data sources to scrape; here those are the etcd endpoints

# prometheus.yml
scrape_configs:
  - job_name: 'etcd'
    static_configs:
      - targets: ['192.168.9.109:20079', '192.168.9.109:20179', '192.168.9.109:20279']
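Each entry in targets has to be a plain host:port address, and a stray character inside the quotes (a trailing comma, for instance) is easy to miss by eye. A small sketch of a sanity check that could be run over the list before starting Prometheus; `validateTarget` is a hypothetical helper, not something Prometheus provides.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// validateTarget checks that a scrape target looks like host:port
// with a non-empty host and a numeric port in the range 1-65535.
func validateTarget(t string) error {
	host, port, err := net.SplitHostPort(t)
	if err != nil {
		return fmt.Errorf("target %q: %v", t, err)
	}
	if host == "" {
		return fmt.Errorf("target %q: empty host", t)
	}
	n, err := strconv.Atoi(port)
	if err != nil || n < 1 || n > 65535 {
		return fmt.Errorf("target %q: invalid port %q", t, port)
	}
	return nil
}

func main() {
	targets := []string{
		"192.168.9.109:20079",
		"192.168.9.109:20179,", // trailing comma: rejected
	}
	for _, t := range targets {
		if err := validateTarget(t); err != nil {
			fmt.Println("bad:", err)
		} else {
			fmt.Println("ok: ", t)
		}
	}
}
```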

Start it the same way as before

# Clean up the previous cluster first
# docker-compose -f docker-compose.cluster.yml down
# docker-compose -f docker-compose.cluster.yml down -v
docker-compose -f docker-compose.monitor.yml up

Then open Grafana (http://192.168.9.109:23000) to configure it

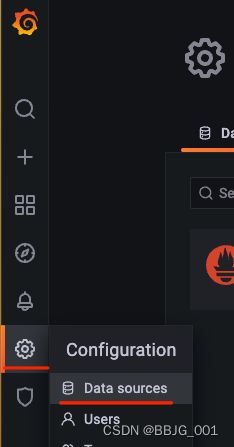

添加datasource

add data source后选择Prometheus

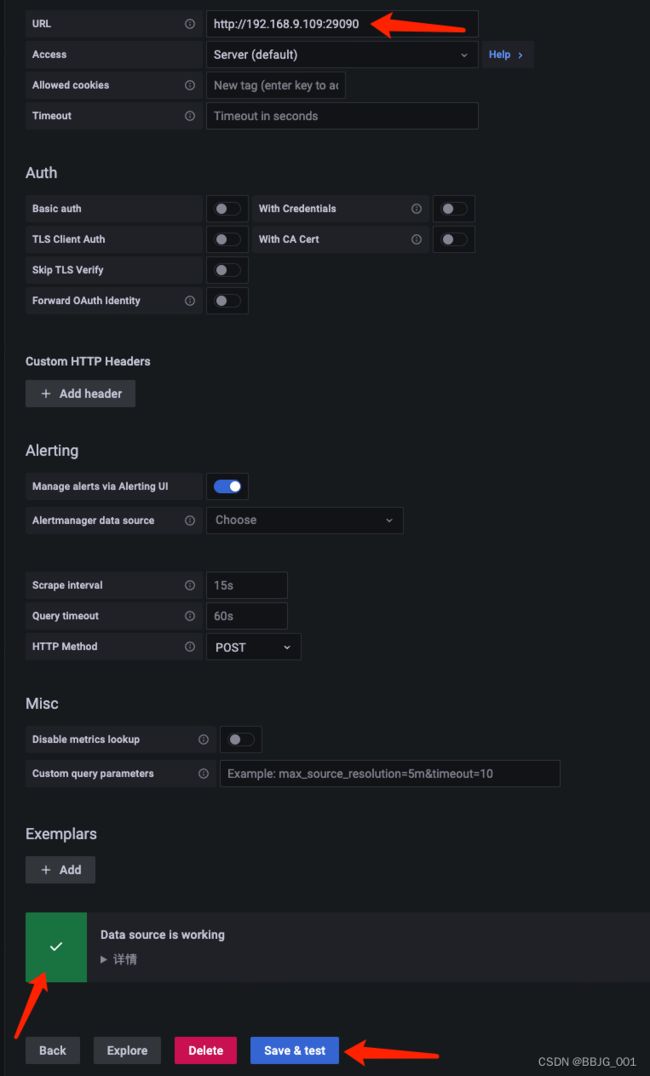

只填写启动的Prometheus的地址就可以,然后保存,看到这个datasource是working的状态

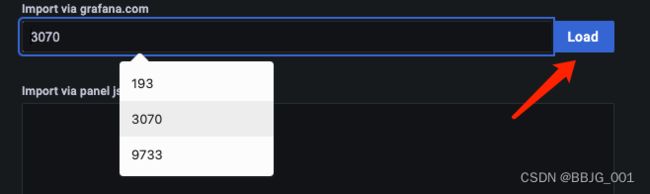
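If the data source works but dashboards stay empty, it can help to ask Prometheus directly whether the etcd targets are being scraped. The sketch below queries the Prometheus HTTP API for the `up` series of the etcd job and reports each instance. It assumes Prometheus is reachable on the host port 29090 mapped above; `upByInstance` is an illustrative helper name.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/url"
	"time"
)

// queryResponse mirrors the parts of the /api/v1/query reply used here.
type queryResponse struct {
	Status string `json:"status"`
	Data   struct {
		Result []struct {
			Metric map[string]string `json:"metric"`
			Value  [2]interface{}    `json:"value"` // [timestamp, "value"]
		} `json:"result"`
	} `json:"data"`
}

// upByInstance maps each instance label to whether its up sample is "1".
func upByInstance(body []byte) (map[string]bool, error) {
	var r queryResponse
	if err := json.Unmarshal(body, &r); err != nil {
		return nil, err
	}
	if r.Status != "success" {
		return nil, fmt.Errorf("query status: %q", r.Status)
	}
	out := make(map[string]bool)
	for _, s := range r.Data.Result {
		v, _ := s.Value[1].(string)
		out[s.Metric["instance"]] = v == "1"
	}
	return out, nil
}

func main() {
	q := url.Values{"query": {`up{job="etcd"}`}}
	client := &http.Client{Timeout: 2 * time.Second}
	// Assumed address: host IP from the text, port 29090 from the compose file.
	resp, err := client.Get("http://192.168.9.109:29090/api/v1/query?" + q.Encode())
	if err != nil {
		fmt.Println("query error:", err)
		return
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Println("read error:", err)
		return
	}
	fmt.Println(upByInstance(body))
}
```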

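Add a dashboard. Here we simply import an existing one

Dashboard IDs such as 3070 or 9733 can be used

For the data source, choose the Prometheus data source configured just now

To make the curves move, I wrote a small script that sends requests to etcd (this part is not important)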

package main

// A single client is shared by multiple goroutines

import (
	"context"
	"flag"
	"fmt"
	"log"
	"runtime"
	"strconv"
	"strings"
	"sync"
	"time"

	"go.etcd.io/etcd/clientv3"
)

const (
	timeGapMs = 0 // pause between requests, in milliseconds
)

var (
	concurrency         int    = 2
	mode                string = "write" // parsed but not used below: even goroutines write, odd ones read
	timeDurationSeconds int64  = 60
	endSecond           int64  = 0
)

func initParams() {
	flag.IntVar(&concurrency, "c", concurrency, "number of concurrent goroutines")
	flag.Int64Var(&timeDurationSeconds, "t", timeDurationSeconds, "run duration in seconds")
	flag.StringVar(&mode, "m", mode, "stress mode, write/rdonly/rw")
	// Parse the command-line arguments
	flag.Parse()
	// log.Println("Args: ", flag.Args())
}

// GoID extracts the current goroutine's id from its stack trace header.
func GoID() int {
	var buf [64]byte
	n := runtime.Stack(buf[:], false)
	idField := strings.Fields(strings.TrimPrefix(string(buf[:n]), "goroutine "))[0]
	id, err := strconv.Atoi(idField)
	if err != nil {
		panic(fmt.Sprintf("cannot get goroutine id: %v", err))
	}
	return id
}

// writeGoroutines puts time-derived keys until endSecond, then reports its request count on ch.
func writeGoroutines(cli *clientv3.Client, ch chan int64, wg *sync.WaitGroup) {
	defer wg.Done()
	log.Printf("write, goid: %d", GoID())
	num := int64(0)
	for {
		timeUnixNano := time.Now().UnixNano()
		key := strconv.FormatInt(timeUnixNano/1000%1000000, 10)
		value := strconv.FormatInt(timeUnixNano, 10)
		// fmt.Printf("Put, %s:%s\n", key, value)
		if _, err := cli.Put(context.Background(), key, value); err != nil {
			log.Fatal(err)
		}
		num++
		if time.Now().Unix() > endSecond {
			break
		}
		time.Sleep(time.Millisecond * timeGapMs)
	}
	ch <- num
	// done <- true
}

// readGoroutines gets time-derived keys until endSecond, then reports its request count on ch.
func readGoroutines(cli *clientv3.Client, ch chan int64, wg *sync.WaitGroup) {
	defer wg.Done()
	log.Printf("read, goid: %d", GoID())
	num := int64(0)
	for {
		timeUnixNano := time.Now().UnixNano()
		key := strconv.FormatInt(timeUnixNano/1000%1000000, 10)
		resp, err := cli.Get(context.Background(), key)
		if err != nil {
			log.Fatal(err)
		}
		if len(resp.Kvs) == 0 {
			// fmt.Printf("Get, key=%s not exist\n", key)
		} else {
			// for _, ev := range resp.Kvs {
			// 	log.Printf("Get, %s:%s\n", string(ev.Key), string(ev.Value))
			// }
		}
		num++
		if time.Now().Unix() > endSecond {
			break
		}
		time.Sleep(time.Millisecond * timeGapMs)
	}
	ch <- num
	// done <- true
}

func init() {
	initParams()
	log.SetFlags(log.Lshortfile)
}

func main() {
	// Create the etcd client
	cli, err := clientv3.New(clientv3.Config{
		Endpoints: []string{"10.23.171.86:20079", "10.23.171.86:20179", "10.23.171.86:20279"}, // etcd server addresses
		// Endpoints: []string{"192.168.9.103:2379"}, // etcd server address
		DialTimeout: 5 * time.Second,
	})
	if err != nil {
		log.Fatal(err)
	}
	defer cli.Close()

	// doneCh := make(chan bool, concurrency)
	var wg sync.WaitGroup
	writeCh := make(chan int64, concurrency)
	readCh := make(chan int64, concurrency)
	endSecond = time.Now().Unix() + timeDurationSeconds
	for i := 0; i < concurrency; i++ {
		wg.Add(1)
		if i%2 == 0 {
			// Start a writer goroutine
			go writeGoroutines(cli, writeCh, &wg)
		} else {
			// Start a reader goroutine
			go readGoroutines(cli, readCh, &wg)
		}
	}

	// Wait for all goroutines to finish
	// <-doneCh
	wg.Wait()

	num_w := int64(0)
	num_r := int64(0)
	close(writeCh)
	for wi := range writeCh {
		// fmt.Println(wi)
		num_w += wi
	}
	close(readCh)
	for ri := range readCh {
		// fmt.Println("read", ri)
		num_r += ri
	}
	fmt.Printf("write total: %d, time: %d, qps: %d\n", num_w, timeDurationSeconds, num_w/timeDurationSeconds)
	fmt.Printf("read total: %d, time: %d, qps: %d\n", num_r, timeDurationSeconds, num_r/timeDurationSeconds)
}

go mod init ectdopt

# Apply the following two replace directives to avoid dependency errors

go mod edit -replace github.com/coreos/bbolt=go.etcd.io/[email protected]

go mod edit -replace google.golang.org/grpc=google.golang.org/[email protected]

go mod tidy

go run request_etcd.go

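Monitoring result

A further note

- When etcd is exposed over https, Prometheus needs TLS certificates to scrape its metrics

References

For other etcd environment settings, see: https://doczhcn.gitbook.io/etcd/index/index-1/configuration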

https://sakishum.com/2021/11/18/docker-compose%E9%83%A8%E7%BD%B2ETCD/

http://www.mydlq.club/article/117/

https://kenkao.blog.csdn.net/article/details/125083564