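Predicting Boston House Prices with Linear Regression & Why the Loss Becomes NaN & Using Scatter Plots to Find How Features Relate to the Label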

Boston house prices CSV file

Link: https://pan.baidu.com/s/1uz6oKs7IeEzHdJkfrpiayg?pwd=vufb (extraction code: vufb)

Code

%matplotlib inline
import random
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import torch

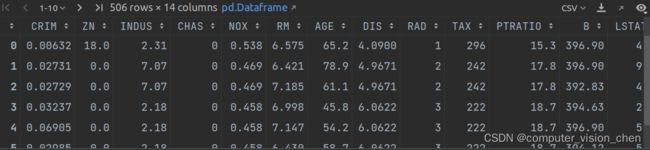

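Load the dataset from the CSV

# Load the data; the first row is not useful, so skip it
boston = pd.read_csv('../data/boston_house_prices.csv', skiprows=[0])
# 14 columns in total: the first 13 are features, the last one is the price
boston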

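Use the last column as labels and all preceding columns as features

# pop() removes the last column (MEDV) from boston and returns it as labels;
# boston is left with the first 13 columns, which become the features
labels = boston.pop('MEDV')
features = boston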

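Draw a scatter plot of each feature against the house price. If the points fall roughly along a line, the feature is correlated with the label to some degree. The features that turn out to be related to labels are kept as the final features.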

# Scatter plot of each feature against the house price
data_xTitle = ['CRIM','ZN','INDUS','CHAS','NOX','RM','AGE','DIS','RAD','TAX','PTRATIO','B', 'LSTAT']
# 5 rows x 3 columns = 15 subplots (only 13 of them are used)
fig, a = plt.subplots(5, 3)
m = 0
for i in range(0, 5):
    if i == 4:
        # The last row holds only the 13th feature
        a[i][0].scatter(features[data_xTitle[m]], labels, s=30, edgecolor='white')
        a[i][0].set_title(data_xTitle[m])
    else:
        for j in range(0, 3):
            a[i][j].scatter(features[data_xTitle[m]], labels, s=30, edgecolor='white')
            a[i][j].set_title(data_xTitle[m])
            m = m + 1
plt.show()

# The scatter plots suggest that CRIM, RM and LSTAT are roughly linear in y, so these three are selected as the features.
features = features[['LSTAT','CRIM','RM']]
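Picking features by eye can be cross-checked with a number. The sketch below ranks columns by their Pearson correlation with the label, which only measures linear association and so matches the "is it roughly a line" question asked of the scatter plots. The helper name `rank_by_abs_correlation` and the synthetic columns (`strong`, `weak`, `noise`) are made up for illustration; this is not part of the original notebook and it does not use the Boston CSV.

```python
import numpy as np
import pandas as pd


def rank_by_abs_correlation(features: pd.DataFrame, labels: pd.Series) -> pd.Series:
    """Pearson correlation of every column with the label, largest |r| first."""
    corr = features.corrwith(labels)
    order = corr.abs().sort_values(ascending=False).index
    return corr.reindex(order)


# Synthetic stand-in data (an assumption for the demo, NOT the Boston dataset)
rng = np.random.default_rng(0)
n = 500
demo = pd.DataFrame({
    "strong": rng.normal(size=n),
    "weak": rng.normal(size=n),
    "noise": rng.normal(size=n),
})
target = 3.0 * demo["strong"] + 0.5 * demo["weak"] + pd.Series(rng.normal(size=n))

ranking = rank_by_abs_correlation(demo, target)
print(ranking)
```

On the real data, the same call would presumably be `rank_by_abs_correlation(features, labels)`, made before `features` is narrowed down to three columns.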

Convert the data to tensors

features = torch.tensor(np.array(features)).to(torch.float32)
labels = torch.tensor(np.array(labels)).to(torch.float32)
features.shape,labels.shape

(torch.Size([506, 3]), torch.Size([506]))

Define the linear regression model, the loss function, and the optimizer

# Linear regression model
def linreg(X, w, b):
    return torch.matmul(X, w) + b

# Loss function: squared error, halved
def squared_loss(y_hat, y):
    return (y_hat - y.reshape(y_hat.shape)) ** 2 / 2

# Optimizer
def sgd(params, lr, batch_size):
    '''Minibatch stochastic gradient descent'''
    with torch.no_grad():
        for param in params:
            param -= lr * param.grad / batch_size
            param.grad.zero_()
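Written out as equations, the three functions above amount to the following. The symbols $\eta$ (for `lr`), $\mathcal{B}$ (the current minibatch) and $|\mathcal{B}|$ (for `batch_size`) are notation introduced here for illustration; they are not names from the code.

```latex
\begin{aligned}
\hat{y} &= Xw + b \\
\ell_i(w, b) &= \tfrac{1}{2}\left(\hat{y}_i - y_i\right)^2 \\
w &\leftarrow w - \frac{\eta}{|\mathcal{B}|} \sum_{i \in \mathcal{B}} \nabla_w \, \ell_i(w, b) \\
b &\leftarrow b - \frac{\eta}{|\mathcal{B}|} \sum_{i \in \mathcal{B}} \nabla_b \, \ell_i(w, b)
\end{aligned}
```

The sum corresponds to calling `l.sum().backward()` before `sgd`, and the division by $|\mathcal{B}|$ happens inside `sgd`. Because `sgd` always divides by the nominal `batch_size`, a final batch that is shorter than `batch_size` takes a slightly smaller step than a true average would give.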

The data_iter function, which yields the data batch by batch

def data_iter(batch_size, features, labels):
    num_examples = len(features)
    indices = list(range(num_examples))
    # The samples are read in random order, not in any particular sequence
    random.shuffle(indices)
    for i in range(0, num_examples, batch_size):
        batch_indices = torch.tensor(indices[i: min(i + batch_size, num_examples)])
        yield features[batch_indices], labels[batch_indices]
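To see what the generator actually yields, here is a small usage sketch on toy tensors. `data_iter` is repeated so the snippet runs on its own; `toy_features`, `toy_labels` and the batch size of 4 are made-up values, not the Boston data.

```python
import random

import torch


def data_iter(batch_size, features, labels):
    """Yield shuffled (features, labels) minibatches; the last one may be shorter."""
    num_examples = len(features)
    indices = list(range(num_examples))
    random.shuffle(indices)
    for i in range(0, num_examples, batch_size):
        batch_indices = torch.tensor(indices[i: min(i + batch_size, num_examples)])
        yield features[batch_indices], labels[batch_indices]


# Toy data (assumption for the demo): 10 samples with 2 features each
toy_features = torch.arange(20, dtype=torch.float32).reshape(10, 2)
toy_labels = torch.arange(10, dtype=torch.float32)

batch_shapes = []
seen_labels = []
for X, y in data_iter(4, toy_features, toy_labels):
    batch_shapes.append((tuple(X.shape), tuple(y.shape)))
    seen_labels.extend(y.tolist())

print(batch_shapes)  # two full batches of 4 and one leftover batch of 2
```

Every sample shows up exactly once per pass, and when the sample count is not a multiple of the batch size, the last batch is simply shorter.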

Set the parameters

w = torch.normal(0, 0.01, size=(features.shape[1],1), requires_grad=True)
b = torch.zeros(1, requires_grad=True)
lr = 0.03
# lr = 0.0001
num_epochs = 100
net = linreg
loss = squared_loss
batch_size = 10

The shapes of w and b are:

torch.Size([3, 1])

torch.Size([1])

Start training

for epoch in range(num_epochs):
    for X, y in data_iter(batch_size, features, labels):
        # Loss on the minibatch X, y
        l = loss(net(X, w, b), y)
        # l has shape (batch_size, 1) rather than being a scalar, so its elements
        # are summed and the gradients with respect to [w, b] are computed from that sum
        l.sum().backward()
        # Update the parameters using their gradients
        sgd([w, b], lr, batch_size)
    with torch.no_grad():
        train_l = loss(net(features, w, b), labels)
        print(f'epoch {epoch + 1}, loss {float(train_l.mean()):f}')
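If training diverges, the per-example losses stop being finite numbers. A small check like the one below can detect that, so the loop can stop early instead of running through every remaining epoch. This is only a sketch: `loss_is_finite` is a hypothetical helper name, and the three tensors are made-up stand-ins for `train_l`.

```python
import torch


def loss_is_finite(loss_values: torch.Tensor) -> bool:
    """True only if no element of the loss tensor is NaN or +/-inf."""
    return bool(torch.isfinite(loss_values).all())


# Made-up stand-ins for train_l (assumptions for the demo)
healthy = torch.tensor([141.5, 115.4])
with_nan = torch.tensor([1.0, float("nan")])
overflowed = torch.tensor([float("inf")])

print(loss_is_finite(healthy), loss_is_finite(with_nan), loss_is_finite(overflowed))
```

Inside the epoch loop, this could be used right after `train_l` is computed, for example `if not loss_is_finite(train_l): break`.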

With the learning rate set to 0.03, the loss becomes NaN right away

epoch 1, loss nan
epoch 2, loss nan
epoch 3, loss nan
(epochs 4 through 99 print the same: loss nan)
epoch 100, loss nan

With the learning rate set to 0.0001, the loss behaves normally and the model starts to converge

epoch 1, loss 141.555878

epoch 2, loss 115.449852

epoch 3, loss 101.026237

epoch 4, loss 90.287994

epoch 5, loss 81.646828

epoch 6, loss 74.384491

epoch 7, loss 68.148872

epoch 8, loss 62.699074

epoch 9, loss 57.872326

epoch 10, loss 53.601421

epoch 11, loss 49.778000

epoch 12, loss 46.333401

epoch 13, loss 43.253365

epoch 14, loss 40.471313

epoch 15, loss 37.963455

epoch 16, loss 35.711601

epoch 17, loss 33.679176

epoch 18, loss 31.841145

epoch 19, loss 30.203505

epoch 20, loss 28.699686

epoch 21, loss 27.352037

epoch 22, loss 26.142868

epoch 23, loss 25.045834

epoch 24, loss 24.059885

epoch 25, loss 23.171280

epoch 26, loss 22.369287

epoch 27, loss 21.646309

epoch 28, loss 20.998608

epoch 29, loss 20.407761

epoch 30, loss 19.874365

epoch 31, loss 19.396839

epoch 32, loss 18.967056

epoch 33, loss 18.576946

epoch 34, loss 18.234808

epoch 35, loss 17.904724

epoch 36, loss 17.623093

epoch 37, loss 17.360590

epoch 38, loss 17.126835

epoch 39, loss 16.916040

epoch 40, loss 16.727121

epoch 41, loss 16.555841

epoch 42, loss 16.401901

epoch 43, loss 16.264545

epoch 44, loss 16.145824

epoch 45, loss 16.026453

epoch 46, loss 15.927325

epoch 47, loss 15.830773

epoch 48, loss 15.748351

epoch 49, loss 15.672281

epoch 50, loss 15.606522

epoch 51, loss 15.546185

epoch 52, loss 15.490641

epoch 53, loss 15.458157

epoch 54, loss 15.395338

epoch 55, loss 15.359412

epoch 56, loss 15.331330

epoch 57, loss 15.284848

epoch 58, loss 15.264071

epoch 59, loss 15.238921

epoch 60, loss 15.206428

epoch 61, loss 15.184341

epoch 62, loss 15.190187

epoch 63, loss 15.144171

epoch 64, loss 15.127305

epoch 65, loss 15.115336

epoch 66, loss 15.111353

epoch 67, loss 15.098548

epoch 68, loss 15.077714

epoch 69, loss 15.075640

epoch 70, loss 15.072990

epoch 71, loss 15.051690

epoch 72, loss 15.046121

epoch 73, loss 15.038815

epoch 74, loss 15.038069

epoch 75, loss 15.027984

epoch 76, loss 15.028069

epoch 77, loss 15.030132

epoch 78, loss 15.015227

epoch 79, loss 15.014658

epoch 80, loss 15.010786

epoch 81, loss 15.005883

epoch 82, loss 15.007875

epoch 83, loss 15.003115

epoch 84, loss 15.015619

epoch 85, loss 14.996306

epoch 86, loss 15.008889

epoch 87, loss 14.993307

epoch 88, loss 14.997282

epoch 89, loss 14.990996

epoch 90, loss 14.991257

epoch 91, loss 14.997286

epoch 92, loss 14.989521

epoch 93, loss 14.987417

epoch 94, loss 14.989147

epoch 95, loss 14.989621

epoch 96, loss 14.984948

epoch 97, loss 14.984961

epoch 98, loss 14.984855

epoch 99, loss 14.983346

epoch 100, loss 14.999675
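The run above fixes the problem by shrinking the learning rate. A different approach, not used in the original notebook, is to standardize each feature column to zero mean and unit variance, which usually lets a larger learning rate work. The sketch below illustrates this on synthetic data whose column scales are invented for the demo; it also swaps minibatch SGD for plain full-batch gradient descent so the result is deterministic. `train_full_batch` and all the data here are assumptions, not the author's code or the Boston CSV.

```python
import math

import torch


def train_full_batch(X, y, lr, num_epochs=100):
    """Full-batch gradient descent on the mean of 0.5 * squared error; returns the final loss."""
    w = torch.zeros((X.shape[1], 1), requires_grad=True)
    b = torch.zeros(1, requires_grad=True)
    target = y.reshape(-1, 1)
    for _ in range(num_epochs):
        loss = ((X @ w + b - target) ** 2 / 2).mean()
        loss.backward()
        with torch.no_grad():
            for param in (w, b):
                param -= lr * param.grad
                param.grad.zero_()
    with torch.no_grad():
        return float(((X @ w + b - target) ** 2 / 2).mean())


torch.manual_seed(0)
# Synthetic features with very different scales and offsets (made-up numbers)
X_raw = torch.randn(200, 3) * torch.tensor([7.0, 8.0, 0.7]) + torch.tensor([12.0, 3.0, 6.0])
y = X_raw @ torch.tensor([-0.5, -0.1, 5.0]) + 2.0 + 0.1 * torch.randn(200)

# Standardize every column: subtract its mean, divide by its standard deviation
X_std = (X_raw - X_raw.mean(dim=0)) / X_raw.std(dim=0)

loss_raw = train_full_batch(X_raw, y, lr=0.03)  # same learning rate, unscaled inputs
loss_std = train_full_batch(X_std, y, lr=0.03)  # same learning rate, standardized inputs
print(f'raw features: {loss_raw}, standardized features: {loss_std}')
```

In this synthetic setup the unscaled run ends with a non-finite loss while the standardized run ends with a small finite one. Whether the same holds for the Boston features at lr = 0.03 would need to be checked by running it.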

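Addendum

Why does a learning rate of 0.03 make the loss NaN?

For this loss function the step is too large: each update jumps right past the minimum instead of approaching it, so the parameters grow in magnitude from one step to the next until the numbers overflow and the loss is reported as NaN. The features are used unscaled here, which makes the loss surface steep in some directions and lowers the largest learning rate that still converges. With a smaller learning rate, the loss drops as the epochs go by and the model converges.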
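The overshooting can be reproduced on a one-dimensional toy problem. For the loss 0.5 * a * w**2 the gradient is a * w, so one gradient step multiplies w by (1 - lr * a); the iterates shrink only when 0 < lr < 2 / a. The sketch below uses a made-up curvature `a = 200.0` (an assumption chosen for illustration, not a value measured on the Boston data) with the two learning rates from this post.

```python
def run_gradient_descent(curvature, lr, steps=100, w0=1.0):
    """Minimize 0.5 * curvature * w**2 with a fixed step size; return |w| after every step."""
    w = w0
    history = [abs(w)]
    for _ in range(steps):
        grad = curvature * w  # derivative of 0.5 * curvature * w**2
        w = w - lr * grad     # equivalent to w * (1 - lr * curvature)
        history.append(abs(w))
    return history


curvature = 200.0  # hypothetical steepness of the loss
diverging = run_gradient_descent(curvature, lr=0.03)     # each step multiplies w by -5
converging = run_gradient_descent(curvature, lr=0.0001)  # each step multiplies w by 0.98

print(f'lr=0.03   -> |w| after 100 steps: {diverging[-1]:.3e}')
print(f'lr=0.0001 -> |w| after 100 steps: {converging[-1]:.3e}')
```

This toy runs in Python's 64-bit floats, so it only shows the explosive growth. With lr = 0.03 the magnitude ends up far beyond the largest float32 value (about 3.4e38), so in a float32 tensor computation the same growth would turn into inf, and operations such as inf - inf then produce NaN.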