Expanding a Ceph cluster with a new node: OSD, RGW, MON, and MGR

Environment

| Host | IP | Role |
|---|---|---|
| node1 | 10.0.40.133 | master node and Ceph node |
| node2 | 10.0.40.134 | Ceph node |
| node3 | 10.0.40.135 | Ceph node |
| node4 | 10.0.40.136 | new node |

I. Initialization

1. Add hostname-to-IP mappings (run this on node1, node2, node3, and node4)

vim /etc/hosts

10.0.40.133 node1

10.0.40.134 node2

10.0.40.135 node3

10.0.40.136 node4
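
Editing /etc/hosts by hand on four machines is easy to get wrong, so here is a minimal sketch of doing it idempotently. The function names add_host_entry and add_cluster_hosts are made up for this example; the IPs come from the environment table above.

```shell
# Append "ip name" to a hosts file only if the hostname is not already mapped.
# Arguments: hosts file path, IP address, hostname.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Look for the hostname as a whole field on a non-comment line.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Add all four cluster nodes; falls back to /etc/hosts when no file is given.
add_cluster_hosts() {
    local file="${1:-/etc/hosts}"
    add_host_entry "$file" 10.0.40.133 node1
    add_host_entry "$file" 10.0.40.134 node2
    add_host_entry "$file" 10.0.40.135 node3
    add_host_entry "$file" 10.0.40.136 node4
}
```

Running add_cluster_hosts as root on each node adds only the missing entries, so it is safe to run more than once.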

2. Initialize the new node

(1) Disable the firewall:

systemctl stop firewalld

systemctl disable firewalld

(2) Disable SELinux:

sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0
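
To check that the config edit changed only the SELINUX= line, something like the sketch below can help. It assumes GNU sed (as shipped on CentOS), and the two function names are hypothetical helpers, not part of any SELinux tooling.

```shell
# Set SELINUX=disabled in an SELinux config file, touching only the SELINUX= line.
# SELINUXTYPE= and comment lines are left alone.
set_selinux_disabled() {
    local file="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Print the mode currently configured in the file (enforcing, permissive, or disabled).
selinux_configured_mode() {
    local file="${1:-/etc/selinux/config}"
    sed -n 's/^SELINUX=//p' "$file"
}
```

After set_selinux_disabled, selinux_configured_mode should print disabled. The file setting only takes effect after a reboot, which is why setenforce 0 is still needed for the running system.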

(3) Disable NetworkManager

systemctl disable NetworkManager && systemctl stop NetworkManager

(4) Set the hostname:

hostnamectl set-hostname node4

(5) Sync the clock over the network and set the time zone

echo '*/2 * * * * /usr/sbin/ntpdate cn.pool.ntp.org >/dev/null 2>&1' >> /var/spool/cron/root

cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
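
Appending with echo adds a duplicate cron line every time the step is repeated. A small sketch that avoids this is below; add_cron_line and NTP_CRON are names invented for this example.

```shell
# Append a line to a crontab file unless an identical line is already present.
add_cron_line() {
    local file="$1" line="$2"
    grep -Fqx -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# The time sync job from this step, with its output discarded inside the cron job itself.
NTP_CRON='*/2 * * * * /usr/sbin/ntpdate cn.pool.ntp.org >/dev/null 2>&1'
```

Usage on the new node would be: add_cron_line /var/spool/cron/root "$NTP_CRON"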

(6) Raise the file descriptor limit

ulimit -n 65535

cat >> /etc/security/limits.conf << EOF

* soft nofile 65535

* hard nofile 65535

EOF

sysctl -p
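
To confirm what actually ended up in limits.conf, a sketch like the following can be used. configured_nofile is a hypothetical helper; it only reads the file and prints the value from the last matching line.

```shell
# Print the nofile value configured for a given domain and limit type.
# Arguments: limits file, domain (e.g. '*'), type (soft or hard).
configured_nofile() {
    local file="$1" domain="$2" type="$3"
    awk -v d="$domain" -v t="$type" \
        '$1 == d && $2 == t && $3 == "nofile" { v = $4 } END { if (v != "") print v }' \
        "$file"
}
```

For example, configured_nofile /etc/security/limits.conf '*' soft should print 65535 after this step. The values in limits.conf apply to new login sessions, so ulimit -n in a fresh SSH session is the final check.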

(7) On node1, set up passwordless SSH login to node4

ssh-copy-id root@node4

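II. Expanding the Ceph cluster

Pay attention to which node each step runs on; it is stated at the start of each step title.

1. Add the node

On the master node: install Ceph on the new node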

[root@node1 ~]# cd /etc/ceph

[root@node1 ceph]# ceph-deploy install --no-adjust-repos node4

2. On the master node: add the RGW settings for the new node to the Ceph configuration file

[root@node1 ceph]# vim ceph.conf

[client.rgw.node4]

rgw_frontends = "civetweb port=8899 num_threads=5000"
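
If you would rather not edit ceph.conf in vim, the section can also be appended from a script. This is a minimal sketch; add_rgw_section is a made-up helper, and the frontend line is copied from the step above.

```shell
# Append an RGW client section for a node to a ceph.conf file,
# unless a section with that name is already there.
# Arguments: config file path, node name, listen port.
add_rgw_section() {
    local conf="$1" node="$2" port="$3"
    if grep -Fqx "[client.rgw.${node}]" "$conf" 2>/dev/null; then
        return 0
    fi
    cat >> "$conf" <<EOF

[client.rgw.${node}]
rgw_frontends = "civetweb port=${port} num_threads=5000"
EOF
}
```

On node1 this would be called as: add_rgw_section /etc/ceph/ceph.conf node4 8899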

3. On the master node: push the configuration file to all nodes

[root@node1 ceph]# ceph-deploy --overwrite-conf admin node1 node2 node3 node4

4. On the new node: write a partition table to each disk, then run ceph-volume lvm zap "disk path" to wipe the disk so it can be used as a data volume

[root@node4 ~]# parted /dev/sdb mklabel gpt -s

[root@node4 ~]# ceph-volume lvm zap /dev/sdb

--> Zapping: /dev/sdb

--> --destroy was not specified, but zapping a whole device will remove the partition table

Running command: dd if=/dev/zero of=/dev/sdb bs=1M count=10

--> Zapping successful for:

[root@node4 ~]# parted /dev/sdc mklabel gpt -s

[root@node4 ~]# ceph-volume lvm zap /dev/sdc

--> Zapping: /dev/sdc

--> --destroy was not specified, but zapping a whole device will remove the partition table

Running command: dd if=/dev/zero of=/dev/sdc bs=1M count=10

stderr: 10+0 records in

10+0 records out

10485760 bytes (10 MB) copied, 0.00643545 s, 1.6 GB/s

--> Zapping successful for:

[root@node4 ~]#
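
With more than a couple of disks, the two commands per disk are worth wrapping in a loop. The sketch below is one way to do that; run and prepare_disks are hypothetical helpers, and DRY_RUN defaults to 1 so nothing is wiped until you explicitly ask for it.

```shell
# Run a command, or only print it when DRY_RUN=1 (the default, for safety).
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Write a GPT label to each device given as an argument, then zap it.
# Stops at the first command that fails.
prepare_disks() {
    local dev
    for dev in "$@"; do
        run parted -s "$dev" mklabel gpt || return 1
        run ceph-volume lvm zap "$dev" || return 1
    done
}
```

prepare_disks /dev/sdb /dev/sdc prints the four commands. Once the device list looks right, DRY_RUN=0 prepare_disks /dev/sdb /dev/sdc executes them on node4. Zapping destroys the data on the disk, so double-check the device names first.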

6. On the master node: create the OSDs

[root@node1 ceph]# cd /etc/ceph

[root@node1 ceph]# # Usage: ceph-deploy osd create --data {device} {ceph-node}

[root@node1 ceph]# ceph-deploy osd create --data /dev/sdb node4

[root@node1 ceph]# ceph-deploy osd create --data /dev/sdc node4
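
The same command repeats once per disk, so a tiny generator can print the list for review before anything runs. osd_create_cmds is a name invented for this sketch; it only prints text and never calls ceph-deploy itself.

```shell
# Print one "ceph-deploy osd create" command per device for the given node.
# Arguments: node name, then one or more device paths.
osd_create_cmds() {
    local node="$1" dev
    shift
    for dev in "$@"; do
        printf 'ceph-deploy osd create --data %s %s\n' "$dev" "$node"
    done
}
```

osd_create_cmds node4 /dev/sdb /dev/sdc prints the two commands from this step. If they look right, run them from /etc/ceph on node1, for example by piping the output to sh -e.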

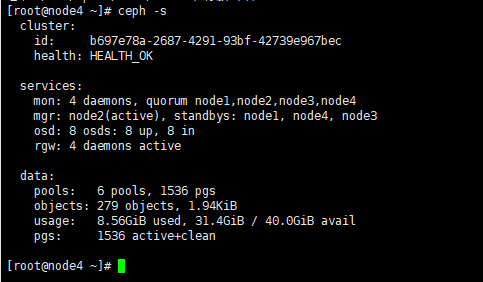

7. On the master node: check the OSD status

ceph osd stat
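
For a scripted check, the summary line can be parsed. The sketch below assumes the output contains fragments like "N osds", "N up", and "N in"; the exact wording varies between Ceph releases, so treat the patterns as an assumption and adjust them to what your version prints. osd_counts_ok is a hypothetical helper.

```shell
# Read `ceph osd stat` text on stdin.
# Succeeds only if the total, up, and in counts are all present and equal.
osd_counts_ok() {
    local text total up in_count
    text=$(cat)
    total=$(printf '%s\n' "$text" | grep -oE '[0-9]+ osds' | head -n 1 | cut -d' ' -f1)
    up=$(printf '%s\n' "$text" | grep -oE '[0-9]+ up' | head -n 1 | cut -d' ' -f1)
    in_count=$(printf '%s\n' "$text" | grep -oE '[0-9]+ in' | head -n 1 | cut -d' ' -f1)
    [ -n "$total" ] && [ "$total" = "$up" ] && [ "$total" = "$in_count" ]
}
```

Usage: ceph osd stat | osd_counts_ok && echo "all OSDs are up and in"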

8. On the master node: install the RGW packages on the new node

[root@node1 ceph]# ceph-deploy install --no-adjust-repos --rgw node4

9. On the master node: push the configuration file to all nodes

[root@node1 ceph]# ceph-deploy --overwrite-conf admin node1 node2 node3 node4

10. On the master node: create the gateway on the new node

[root@node1 ceph]# ceph-deploy rgw create node4

12. On the new node: check that RGW started successfully

[root@node4 ~]# systemctl status ceph-radosgw@rgw.node4.service

● ceph-radosgw@rgw.node4.service - Ceph rados gateway

Loaded: loaded (/usr/lib/systemd/system/ceph-radosgw@.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2021-07-21 17:13:09 CST; 59s ago

Main PID: 5641 (radosgw)

CGroup: /system.slice/system-ceph\x2dradosgw.slice/ceph-radosgw@rgw.node4.service

└─5641 /usr/bin/radosgw -f --cluster ceph --name client.rgw.node4 --setuser ceph --setgroup cep

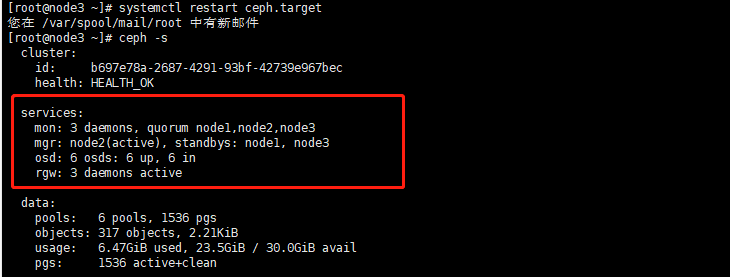

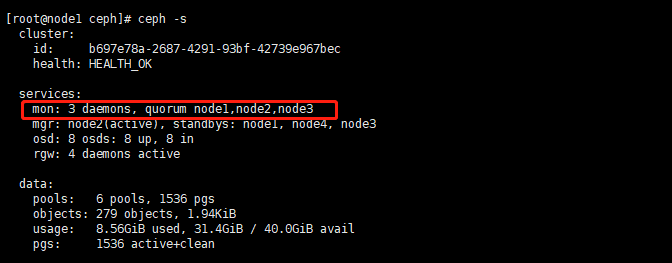

13. Add a MON, which maintains the Ceph cluster state

Edit the configuration

[root@node1 ceph]# vim /etc/ceph/ceph.conf

Change these two settings:

mon_initial_members = node1, node2, node3, node4

mon_host = 10.0.40.133,10.0.40.134,10.0.40.135,10.0.40.136
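
The two settings can also be updated from a script instead of vim. This is a sketch under two assumptions: GNU sed, and keys written with underscores exactly as shown above (ceph.conf also accepts keys with spaces, which this would not match). set_conf_value is a made-up helper name.

```shell
# Replace the value of an existing "key = value" line in a ceph.conf-style file.
# Returns non-zero if the key is not present, so a typo does not pass silently.
set_conf_value() {
    local conf="$1" key="$2" value="$3"
    grep -Eq "^${key}[[:space:]]*=" "$conf" || return 1
    sed -i "s|^${key}[[:space:]]*=.*|${key} = ${value}|" "$conf"
}
```

Usage on node1:

set_conf_value /etc/ceph/ceph.conf mon_initial_members "node1, node2, node3, node4"

set_conf_value /etc/ceph/ceph.conf mon_host "10.0.40.133,10.0.40.134,10.0.40.135,10.0.40.136"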

Sync the configuration and the keys used by the cluster

ceph-deploy --overwrite-conf admin node1 node2 node3 node4

Add the MON

ceph-deploy mon add node4

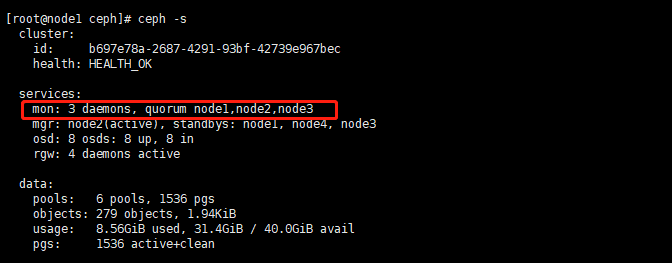

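Fix: the keyring on node4 turned out to differ from /var/lib/ceph/mon/ceph-node1/keyring on node1, so copy the keyring over from node1 and restart the MON on node4.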

[root@node1 ~]# scp /var/lib/ceph/mon/ceph-node1/keyring node4:/var/lib/ceph/mon/ceph-node4/keyring

[root@node4 ~]# systemctl restart ceph-mon@node4
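
To confirm that the keyrings really differ before overwriting anything, a byte-for-byte comparison is enough. keyrings_match is a hypothetical helper around cmp.

```shell
# Succeed if two keyring files are byte-for-byte identical.
# Arguments: two file paths; "-" means standard input.
keyrings_match() {
    cmp -s "$1" "$2"
}
```

From node1, the remote file can be compared without copying it first:

ssh node4 cat /var/lib/ceph/mon/ceph-node4/keyring | keyrings_match - /var/lib/ceph/mon/ceph-node1/keyring && echo same || echo different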

14. Add an MGR, which is used to manage the cluster

ceph-deploy mgr create node4