Binary Deployment of the K8S Cluster Architecture: The Foundation for Learning K8S Clusters

Binary Deployment of the K8S Cluster Architecture

- I. Deploy etcd

- II. Deploy the Docker engine

- III. Configure the flannel network

- IV. Deploy the master components

- V. Deploy the node components

I. Deploy etcd

On the master

[root@192 etcd-cert]# cd /usr/local/bin/

[root@192 bin]# rz -E    # upload cfssl, cfssl-certinfo and cfssljson

[root@192 bin]# chmod +x *

[root@192 bin]# ls

cfssl cfssl-certinfo cfssljson

// Generate the etcd certificates

[root@192 ~]# mkdir /opt/k8s

[root@192 ~]# cd /opt/k8s/

[root@192 k8s]# rz -E    # upload etcd-cert.sh and etcd.sh (these need to be edited for your environment)

[root@192 k8s]# chmod +x etcd*

[root@192 k8s]# mkdir etcd-cert

[root@192 k8s]# mv etcd-cert.sh etcd-cert

[root@192 k8s]# cd etcd-cert/

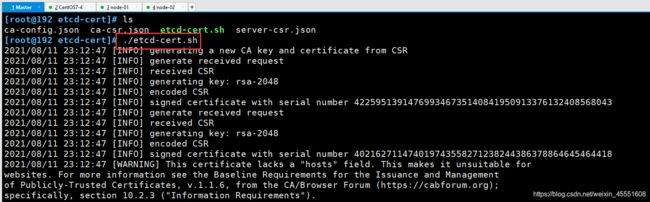

[root@192 etcd-cert]# ./etcd-cert.sh

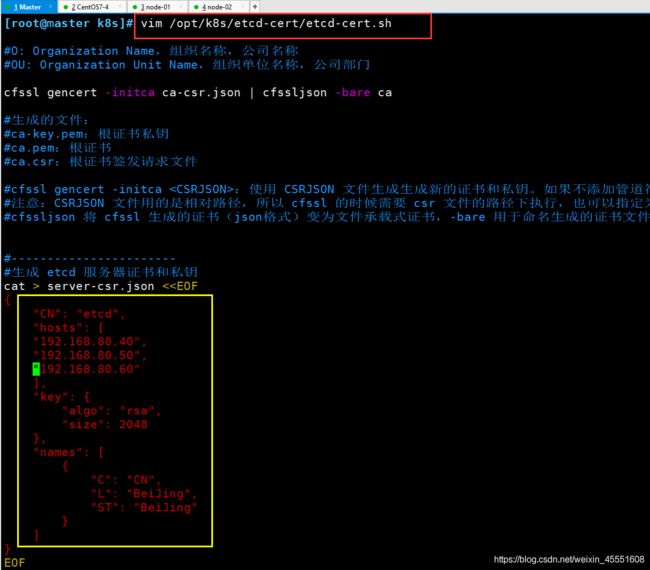

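① Generate the etcd certificates. Edit etcd-cert.sh first so that it lists your cluster's IP addresses, then run it to generate the certificates.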
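The contents of etcd-cert.sh are not shown here. Assuming it follows the usual cfssl workflow, the part to edit is the hosts list of the server CSR: because the same server.pem is copied to all three members, it must list every member IP, or TLS verification will fail when clients connect by IP. A minimal sketch of such a CSR generator (make_server_csr is a hypothetical helper; the CN and names values are placeholders):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a cfssl server CSR whose "hosts" (SAN) list
# contains every etcd member IP passed as an argument.
make_server_csr() {
  local hosts="" ip
  for ip in "$@"; do
    # Append "ip" to the comma-separated list of JSON strings
    hosts="${hosts:+${hosts},}\"${ip}\""
  done
  cat <<EOF
{
  "CN": "etcd",
  "hosts": [${hosts}],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [{ "C": "CN", "L": "Beijing", "ST": "Beijing" }]
}
EOF
}
```

Assuming ca.pem, ca-key.pem and a ca-config.json with a signing profile named www already exist (the profile name is an assumption), the server certificate could then be produced with make_server_csr 192.168.80.40 192.168.80.50 192.168.80.60 > server-csr.json followed by cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server, which writes server.pem and server-key.pem.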

// etcd binary package

[root@192 k8s]# cd /opt/k8s/

[root@192 k8s]# rz -E    # upload etcd-v3.3.10-linux-amd64.tar.gz

rz waiting to receive.

[root@192 k8s]# ls

etcd-cert etcd.sh etcd-v3.3.10-linux-amd64.tar.gz

[root@192 k8s]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

// Directories for config files, binaries and certificates

[root@192 k8s]# mkdir /opt/etcd/{cfg,bin,ssl} -p

[root@192 k8s]# mv etcd-v3.3.10-linux-amd64/etcd etcd-v3.3.10-linux-amd64/etcdctl /opt/etcd/bin/

// Copy the certificates

[root@192 k8s]# cp etcd-cert/*.pem /opt/etcd/ssl/

// The next command blocks while it waits for the other members to join

[root@192 k8s]# ./etcd.sh etcd01 192.168.80.40 etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380

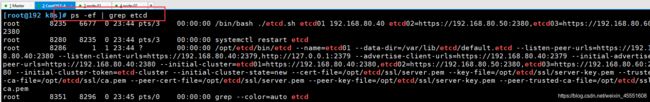

[root@192 k8s]# ps -ef | grep etcd

root 8235 6677 0 23:44 pts/3 00:00:00 /bin/bash ./etcd.sh etcd01 192.168.80.40 etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380

root 8280 8235 0 23:44 pts/3 00:00:00 systemctl restart etcd

root 8286 1 1 23:44 ? 00:00:00 /opt/etcd/bin/etcd --name=etcd01 --data-dir=/var/lib/etcd/default.etcd --listen-peer-urls=https://192.168.80.40:2380 --listen-client-urls=https://192.168.80.40:2379,http://127.0.0.1:2379 --advertise-client-urls=https://192.168.80.40:2379 --initial-advertise-peer-urls=https://192.168.80.40:2380 --initial-cluster=etcd01=https://192.168.80.40:2380,etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380 --initial-cluster-token=etcd-cluster --initial-cluster-state=new --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --trusted-ca-file=/opt/etcd/ssl/ca.pem --peer-cert-file=/opt/etcd/ssl/server.pem --peer-key-file=/opt/etcd/ssl/server-key.pem --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem

root 8351 8296 0 23:45 pts/0 00:00:00 grep --color=auto etcd

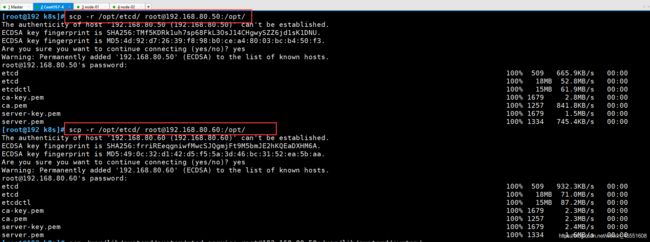
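Judging from the process arguments above, etcd.sh takes the member name, the local IP and the other members, writes them to /opt/etcd/cfg/etcd and creates the etcd systemd unit, which appears to append http://127.0.0.1:2379 to the client URLs. A sketch of the config-rendering part only, assuming the file layout shown for node-01 below (render_etcd_cfg is a hypothetical name, not a function in etcd.sh):

```shell
#!/usr/bin/env bash
# Hypothetical re-creation of the config file etcd.sh appears to write.
# Args: member name, this host's IP, comma-separated list of the other members.
render_etcd_cfg() {
  local name=$1 ip=$2 others=$3
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${name}=https://${ip}:2380,${others}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

For example, render_etcd_cfg etcd01 192.168.80.40 etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380 produces the same initial-cluster value that appears in the ps output.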

// Copy the etcd directory (binaries, config and certificates) to the other nodes

scp -r /opt/etcd/ root@192.168.80.50:/opt/

scp -r /opt/etcd/ root@192.168.80.60:/opt/

// Copy the systemd unit file to the other nodes

scp /usr/lib/systemd/system/etcd.service root@192.168.80.50:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/etcd.service root@192.168.80.60:/usr/lib/systemd/system/

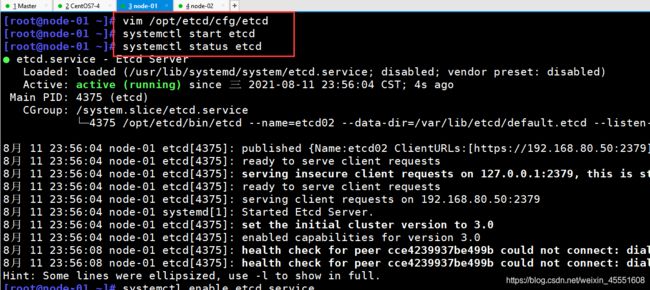

⑤ On node-01

[root@node-01 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd02" ## change to etcd02

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.80.50:2380" # change to 192.168.80.50

ETCD_LISTEN_CLIENT_URLS="https://192.168.80.50:2379" # change to 192.168.80.50

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.80.50:2380" # change to 192.168.80.50

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.80.50:2379" # change to 192.168.80.50

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.80.40:2380,etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
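Instead of editing each field in vim, the copied file can be retargeted with GNU sed. A sketch, assuming the file has exactly the layout above (retarget_etcd_cfg is a hypothetical helper): it renames the member and rewrites the https://IP: part of every URL except ETCD_INITIAL_CLUSTER, which must stay identical on all members.

```shell
#!/usr/bin/env bash
# Hypothetical helper (requires GNU sed for -i without a suffix).
# Args: config file, new member name, new member IP.
retarget_etcd_cfg() {
  local file=$1 name=$2 ip=$3
  sed -i \
    -e "s/^ETCD_NAME=.*/ETCD_NAME=\"${name}\"/" \
    -e '/^ETCD_INITIAL_CLUSTER=/!s#https://[0-9.]*:#https://'"${ip}"':#g' \
    "$file"
}
```

On node-01 this would be retarget_etcd_cfg /opt/etcd/cfg/etcd etcd02 192.168.80.50, and on node-02 retarget_etcd_cfg /opt/etcd/cfg/etcd etcd03 192.168.80.60.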

// Start etcd

[root@node-01 ~]# systemctl start etcd

[root@node-01 ~]# systemctl status etcd

[root@node-01 ~]# systemctl enable etcd.service

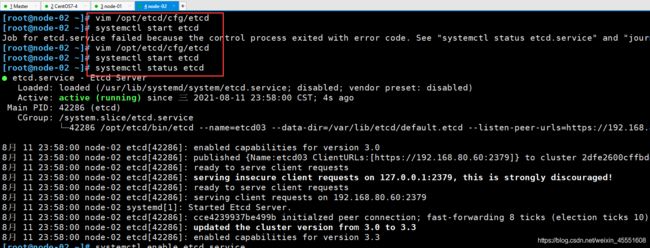

⑥ On node-02

[root@node-02 ~]# vim /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd03" ## change to etcd03

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.80.60:2380" # change to 192.168.80.60

ETCD_LISTEN_CLIENT_URLS="https://192.168.80.60:2379" # change to 192.168.80.60

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.80.60:2380" # change to 192.168.80.60

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.80.60:2379" # change to 192.168.80.60

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.80.40:2380,etcd02=https://192.168.80.50:2380,etcd03=https://192.168.80.60:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

// Start etcd

[root@node-02 ~]# systemctl start etcd

[root@node-02 ~]# systemctl status etcd

[root@node-02 ~]# systemctl enable etcd.service

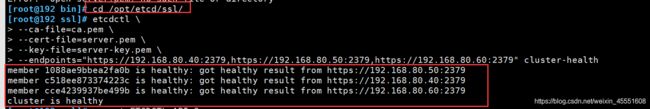

⑦ Check the cluster health (on the master)

[root@192 bin]# ln -s /opt/etcd/bin/etcdctl /usr/local/bin/

[root@192 bin]# cd /opt/etcd/ssl/

[root@192 ssl]# etcdctl \
  --ca-file=ca.pem \
  --cert-file=server.pem \
  --key-file=server-key.pem \
  --endpoints="https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379" cluster-health

member 1088ae9bbea2fa0b is healthy: got healthy result from https://192.168.80.50:2379

member c518ee873374223c is healthy: got healthy result from https://192.168.80.40:2379

member cce4239937be499b is healthy: got healthy result from https://192.168.80.60:2379

cluster is healthy

---------------------------------------------------------------------------------------

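--ca-file: CA certificate used to verify the certificates of HTTPS-enabled servers

--cert-file: SSL certificate file that identifies this HTTPS client

--key-file: SSL key file that identifies this HTTPS client

--endpoints: comma-separated list of the cluster members' addresses

cluster-health: check the health of the etcd cluster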

---------------------------------------------------------------------------------------
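Since every v2 etcdctl call in this guide repeats the same TLS flags and endpoint list, a shell function can carry them. A convenience sketch; etcd_v2, ETCD_SSL_DIR and ETCD_ENDPOINTS are names introduced here, not part of etcd:

```shell
#!/usr/bin/env bash
# Hypothetical defaults; override them before calling etcd_v2.
ETCD_SSL_DIR=${ETCD_SSL_DIR:-/opt/etcd/ssl}
ETCD_ENDPOINTS=${ETCD_ENDPOINTS:-https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379}

# Run etcdctl against the v2 API with the cluster's TLS material,
# passing any remaining arguments (the subcommand) through unchanged.
etcd_v2() {
  ETCDCTL_API=2 etcdctl \
    --ca-file="${ETCD_SSL_DIR}/ca.pem" \
    --cert-file="${ETCD_SSL_DIR}/server.pem" \
    --key-file="${ETCD_SSL_DIR}/server-key.pem" \
    --endpoints="${ETCD_ENDPOINTS}" \
    "$@"
}
```

With this sourced, the health check above becomes etcd_v2 cluster-health, and it works from any directory because the certificate paths are absolute.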

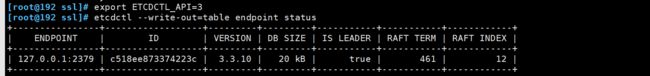

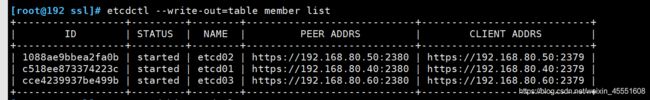

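⑧ Switch to the etcd v3 API to view the endpoint status and member list

[root@192 ssl]# export ETCDCTL_API=3 # v2 and v3 commands differ slightly and the v2 and v3 data stores are not compatible; the default here is v2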

[root@192 ssl]# etcdctl --write-out=table endpoint status

+----------------+------------------+---------+---------+-----------+-----------+------------+
| ENDPOINT       | ID               | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |
+----------------+------------------+---------+---------+-----------+-----------+------------+
| 127.0.0.1:2379 | c518ee873374223c | 3.3.10  | 20 kB   | true      | 461       | 12         |
+----------------+------------------+---------+---------+-----------+-----------+------------+

[root@192 ssl]# etcdctl --write-out=table member list

+------------------+---------+--------+----------------------------+----------------------------+
| ID               | STATUS  | NAME   | PEER ADDRS                 | CLIENT ADDRS               |
+------------------+---------+--------+----------------------------+----------------------------+
| 1088ae9bbea2fa0b | started | etcd02 | https://192.168.80.50:2380 | https://192.168.80.50:2379 |
| c518ee873374223c | started | etcd01 | https://192.168.80.40:2380 | https://192.168.80.40:2379 |
| cce4239937be499b | started | etcd03 | https://192.168.80.60:2380 | https://192.168.80.60:2379 |
+------------------+---------+--------+----------------------------+----------------------------+

[root@192 ssl]# export ETCDCTL_API=2 # switch back to the v2 API
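The status table above lists only 127.0.0.1:2379 because no --endpoints was given, so etcdctl used its default local endpoint (reachable over plain HTTP through the http://127.0.0.1:2379 listener). To see every member, including which one is the leader, the v3 API needs the TLS flags under their v3 names --cacert, --cert and --key. A sketch; etcd_v3 and the two variables are names introduced here:

```shell
#!/usr/bin/env bash
# Hypothetical defaults; override them before calling etcd_v3.
ETCD_SSL_DIR=${ETCD_SSL_DIR:-/opt/etcd/ssl}
ETCD_ENDPOINTS=${ETCD_ENDPOINTS:-https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379}

# Run etcdctl against the v3 API for this one call only;
# note that the v3 TLS flag names differ from the v2 ones.
etcd_v3() {
  ETCDCTL_API=3 etcdctl \
    --cacert="${ETCD_SSL_DIR}/ca.pem" \
    --cert="${ETCD_SSL_DIR}/server.pem" \
    --key="${ETCD_SSL_DIR}/server-key.pem" \
    --endpoints="${ETCD_ENDPOINTS}" \
    "$@"
}
```

etcd_v3 --write-out=table endpoint status would then print one row per member. Setting ETCDCTL_API per call instead of exporting it also removes the need to switch back to v2 afterwards.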

II. Deploy the Docker engine

Install Docker on every node

[root@node-01 ~]# cd /etc/yum.repos.d/

[root@node-01 yum.repos.d]# mv repo.bak/* ./

[root@node-01 yum.repos.d]# ls

CentOS-Base.repo CentOS-Debuginfo.repo CentOS-Media.repo CentOS-Vault.repo repo.bak

CentOS-CR.repo CentOS-fasttrack.repo CentOS-Sources.repo local.repo

[root@node-01 yum.repos.d]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@node-01 yum.repos.d]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@node-01 yum.repos.d]# yum install -y docker-ce

[root@node-01 yum.repos.d]# systemctl start docker.service

[root@node-01 yum.repos.d]# systemctl status docker.service

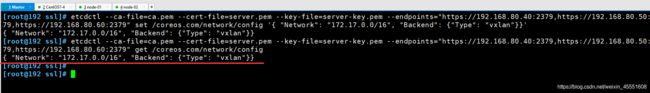

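III. Configure the flannel network

On the master

① Write the flannel network configuration (the address range that node subnets are allocated from) into etcd for flannel to use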

cd /opt/etcd/ssl/

/opt/etcd/bin/etcdctl \
  --ca-file=ca.pem \
  --cert-file=server.pem \
  --key-file=server-key.pem \
  --endpoints="https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379" \
  set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

// View the value that was written

etcdctl \
  --ca-file=ca.pem \
  --cert-file=server.pem \
  --key-file=server-key.pem \
  --endpoints="https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379" \
  get /coreos.com/network/config

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}
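Network is the address pool for the whole cluster; flannel leases each node its own subnet out of it, a /24 by default for a pool this large, which matches the 172.17.86.1/24 that node-01 receives further down. A hedged sketch of that arithmetic, plus a helper that states SubnetLen explicitly (SubnetLen is a real flannel config key; flannel_config and subnet_count are hypothetical helpers):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a flannel network config with an explicit SubnetLen.
# Args: pool CIDR, per-node subnet length (default 24), backend type (default vxlan).
flannel_config() {
  local network=$1 subnet_len=${2:-24} backend=${3:-vxlan}
  printf '{ "Network": "%s", "SubnetLen": %d, "Backend": {"Type": "%s"}}\n' \
    "$network" "$subnet_len" "$backend"
}

# Number of per-node subnets that fit in the pool: 2^(subnet_len - prefix_len).
subnet_count() {
  local prefix_len=$1 subnet_len=$2
  echo $(( 1 << (subnet_len - prefix_len) ))
}
```

subnet_count 16 24 prints 256, so a 172.17.0.0/16 pool has room for 256 nodes with a /24 each; the output of flannel_config 172.17.0.0/16 could be used in place of the literal JSON in the set command above.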

On the nodes

① Copy flannel.sh and flannel-v0.10.0-linux-amd64.tar.gz to every node (flannel only needs to be deployed on the nodes)

// On every node, extract the archive

[root@node-01 yum.repos.d]# cd /opt/

[root@node-01 opt]# rz -E

[root@node-01 opt]# ls

containerd etcd flannel.sh flannel-v0.10.0-linux-amd64.tar.gz rh

[root@node-01 opt]# vim flannel.sh

[root@node-01 opt]# tar zxvf flannel-v0.10.0-linux-amd64.tar.gz

// Kubernetes working directories

[root@node-01 opt]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@node-01 opt]# mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

[root@node-01 opt]# chmod +x *.sh

// Start the flannel network

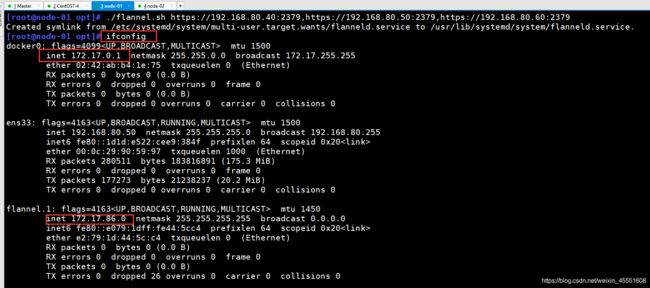

[root@node-02 opt]# ./flannel.sh https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.
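flannel.sh itself is not shown. Scripts of this kind typically write an options file that points flanneld at the etcd endpoints and at the etcd certificates copied to /opt/etcd/ssl earlier. The DOCKER_NETWORK_OPTIONS line in /run/flannel/subnet.env (shown further down) suggests that the unit also runs mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env after flanneld starts. A sketch of the options line, assuming the FLANNEL_OPTIONS variable name and the certificate file names (the --etcd-* flags are real flanneld flags; render_flanneld_opts is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: render a flanneld options line.
# Args: comma-separated etcd endpoints, directory with the etcd certificates.
render_flanneld_opts() {
  local endpoints=$1 ssl_dir=${2:-/opt/etcd/ssl}
  printf 'FLANNEL_OPTIONS="--etcd-endpoints=%s --etcd-cafile=%s/ca.pem --etcd-certfile=%s/server.pem --etcd-keyfile=%s/server-key.pem"\n' \
    "$endpoints" "$ssl_dir" "$ssl_dir" "$ssl_dir"
}
```

The rendered line would go into an environment file read by flanneld.service (its path, for example under /opt/kubernetes/cfg/, is an assumption), with $FLANNEL_OPTIONS expanded on the flanneld command line.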

[root@node-01 opt]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ab:b4:1e:75 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.80.50 netmask 255.255.255.0 broadcast 192.168.80.255

inet6 fe80::1d1d:e522:cee9:384f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:90:59:97 txqueuelen 1000 (Ethernet)

RX packets 280511 bytes 183816891 (175.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 177273 bytes 21238237 (20.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.86.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::e079:1dff:fe44:5cc4 prefixlen 64 scopeid 0x20<link>

ether e2:79:1d:44:5c:c4 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 26 overruns 0 carrier 0 collisions 0

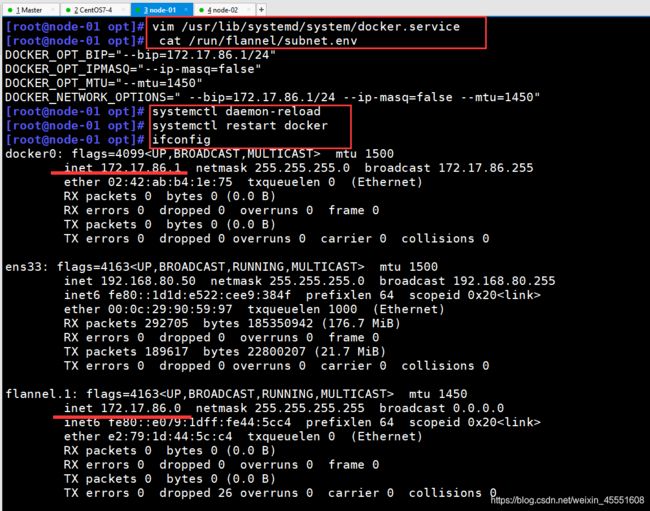

② Configure Docker to use the flannel network

[root@node-01 opt]# vim /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=docker.socket containerd.service

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

# Added: load the variables that flannel writes

EnvironmentFile=/run/flannel/subnet.env

# Changed: $DOCKER_NETWORK_OPTIONS added to the dockerd command line

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

----------------------------------------------------------------------------------------------------

[root@node-01 opt]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.86.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.86.1/24 --ip-masq=false --mtu=1450"

// Note: --bip sets the subnet Docker uses when it starts (docker0 takes the given address)

----------------------------------------------------------------------------------------------------
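Edits to /usr/lib/systemd/system/docker.service can be overwritten when the docker-ce package is upgraded. An alternative is a systemd drop-in under /etc/systemd/system/docker.service.d/, where an empty ExecStart= line clears the packaged command before the new one is set. A sketch; write_docker_flannel_dropin and the flannel.conf file name are hypothetical, and the dockerd arguments are copied from the unit above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a docker.service drop-in that loads flannel's
# subnet.env and passes $DOCKER_NETWORK_OPTIONS to dockerd.
# Arg: drop-in directory (defaults to the standard systemd location).
write_docker_flannel_dropin() {
  local dir=${1:-/etc/systemd/system/docker.service.d}
  mkdir -p "$dir"
  # Quoted 'EOF' keeps $DOCKER_NETWORK_OPTIONS literal for systemd to expand
  cat > "${dir}/flannel.conf" <<'EOF'
[Service]
EnvironmentFile=/run/flannel/subnet.env
# An empty ExecStart= resets the ExecStart inherited from the packaged unit
ExecStart=
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock
EOF
}
```

Running write_docker_flannel_dropin, then systemctl daemon-reload and systemctl restart docker, should have the same effect as editing the packaged unit.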

// Restart Docker, then check docker0 and flannel.1 with ifconfig

[root@node-01 opt]# systemctl daemon-reload

[root@node-01 opt]# systemctl restart docker

[root@node-01 opt]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.86.1 netmask 255.255.255.0 broadcast 172.17.86.255

ether 02:42:ab:b4:1e:75 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.80.50 netmask 255.255.255.0 broadcast 192.168.80.255

inet6 fe80::1d1d:e522:cee9:384f prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:90:59:97 txqueuelen 1000 (Ethernet)

RX packets 292705 bytes 185350942 (176.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 189617 bytes 22800207 (21.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.86.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::e079:1dff:fe44:5cc4 prefixlen 64 scopeid 0x20<link>

ether e2:79:1d:44:5c:c4 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 26 overruns 0 carrier 0 collisions 0

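③ Test connectivity: from a container on node-01, ping an address in the other node's docker0 subnet to confirm that flannel routes traffic between the nodes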

[root@node-01 opt]# docker run -it centos:7 /bin/bash

[root@6971477e60ef /]# yum install net-tools -y

[root@6971477e60ef /]# ping 172.17.38.2
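To know which addresses to ping, the leases flannel has stored can be listed from /opt/etcd/ssl with etcdctl --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem --endpoints="https://192.168.80.40:2379" ls /coreos.com/network/subnets; each key ends in a name such as 172.17.86.0-24. The hypothetical helper below turns those keys into each node's subnet and the .1 address its docker0 takes, assuming /24 leases; containers on that node would typically receive addresses from .2 upward.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read flannel lease keys on stdin, e.g.
#   /coreos.com/network/subnets/172.17.86.0-24
# and print "CIDR GATEWAY" for each (gateway = .1, assuming /24 leases).
lease_keys_to_subnets() {
  local key name net len
  while IFS= read -r key; do
    [ -n "$key" ] || continue
    name=${key##*/}   # 172.17.86.0-24
    net=${name%-*}    # 172.17.86.0
    len=${name##*-}   # 24
    printf '%s/%s %s.1\n' "$net" "$len" "${net%.*}"
  done
}
```

Piping the etcdctl ls output into lease_keys_to_subnets prints one subnet and docker0 address per node.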

IV. Deploy the master components

① Upload master.zip and extract it

[root@master opt]# rz -E

[root@master opt]# unzip master.zip

Archive: master.zip

inflating: apiserver.sh

inflating: controller-manager.sh

inflating: scheduler.sh

[root@master opt]# mkdir /opt/kubernetes/{cfg,bin,ssl} -p

[root@master opt]# mv *.sh /opt/k8s/

[root@master opt]# cd /opt/k8s/

[root@master k8s]# mkdir k8s-cert

[root@master k8s]# cd k8s-cert/

[root@master k8s-cert]# rz -E    # upload k8s-cert.sh

[root@master k8s-cert]# ls

k8s-cert.sh

[root@master k8s-cert]# vim k8s-cert.sh    # edit the IP addresses in the script

[root@master k8s-cert]# chmod +x k8s-cert.sh

[root@master k8s-cert]# ./k8s-cert.sh

[root@master k8s-cert]# ls *pem

admin-key.pem admin.pem apiserver-key.pem apiserver.pem ca-key.pem ca.pem kube-proxy-key.pem kube-proxy.pem

[root@master k8s-cert]# cp ca*pem apiserver*pem /opt/kubernetes/ssl/

[root@master k8s-cert]# cd ..

③ Extract the Kubernetes server package

[root@master k8s]# rz -E    # upload kubernetes-server-linux-amd64.tar.gz

[root@master k8s]# tar zxvf kubernetes-server-linux-amd64.tar.gz

[root@master k8s]# cd kubernetes/server/bin/

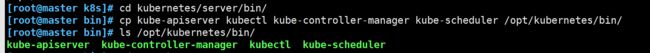

④ Copy the key binaries

[root@master bin]# cp kube-apiserver kubectl kube-controller-manager kube-scheduler /opt/kubernetes/bin/

[root@master bin]# cd /opt/k8s/

⑤ Create the bootstrap token file. A random token can be generated with: head -c 16 /dev/urandom | od -An -t x | tr -d ' '

[root@master k8s]# vim /opt/kubernetes/cfg/token.csv

0fb61c46f8991b718eb38d27b605b008,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
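Each line of token.csv has the form token,user,uid,"group". A sketch that generates a fresh token with the same head/od/tr pipeline and prints a line in that format (make_token_line is a hypothetical helper). Whatever token ends up in this file must also be the one passed to kubectl config set-credentials when bootstrap.kubeconfig is created later.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a kube-apiserver token-auth line for kubelet bootstrap.
make_token_line() {
  local token
  # 16 random bytes rendered as hex words, spaces removed -> 32 hex characters
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token"
}
```

For example: make_token_line > /opt/kubernetes/cfg/token.csv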

⑥ With the binaries, token and certificates in place, start the apiserver

[root@master k8s]# chmod +x apiserver.sh

[root@master k8s]# ./apiserver.sh 192.168.80.40 https://192.168.80.40:2379,https://192.168.80.50:2379,https://192.168.80.60:2379

// Check that the process started successfully

[root@master k8s]# ps aux | grep kube

[root@master k8s]# cat /opt/kubernetes/cfg/kube-apiserver

[root@master k8s]# netstat -ntap | grep 6443

tcp 0 0 192.168.80.40:6443 0.0.0.0:* LISTEN 11576/kube-apiserve

tcp 0 0 192.168.80.40:6443 192.168.80.40:37070 ESTABLISHED 11576/kube-apiserve

tcp 0 0 192.168.80.40:37070 192.168.80.40:6443 ESTABLISHED 11576/kube-apiserve

[root@master k8s]# netstat -ntap | grep 8080

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 11576/kube-apiserve

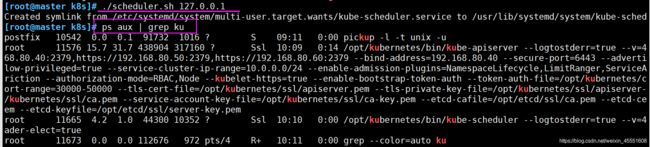

⑧ Start the scheduler

[root@master k8s]# chmod +x scheduler.sh

[root@master k8s]# ./scheduler.sh 127.0.0.1

[root@master k8s]# ps aux | grep ku

⑨ Start the controller-manager

[root@master k8s]# chmod +x controller-manager.sh

[root@master k8s]# ./controller-manager.sh 127.0.0.1

[root@master k8s]# /opt/kubernetes/bin/kubectl get cs

NAME                 STATUS    MESSAGE             ERROR
etcd-1               Healthy   {"health":"true"}
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-2               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
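When these steps are scripted, the kubectl get cs table can be turned into an exit status. A sketch (all_components_healthy is a hypothetical helper) that reads the table on stdin and fails if any row's STATUS column is not Healthy, or if there are no rows at all:

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 only if every component row reports Healthy.
# Skips the header line and blank lines; column 2 is STATUS.
all_components_healthy() {
  awk 'NR > 1 && NF > 0 { rows++; if ($2 != "Healthy") bad = 1 }
       END { exit (bad || rows == 0) }'
}
```

Usage: /opt/kubernetes/bin/kubectl get cs | all_components_healthy && echo "control plane OK"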

V. Deploy the node components

① On the master

// Copy kubelet and kube-proxy to the nodes

[root@master k8s]# cd /opt/k8s/kubernetes/server/bin

[root@master bin]# scp kubelet kube-proxy root@192.168.80.50:/opt/kubernetes/bin/

[root@master bin]# scp kubelet kube-proxy root@192.168.80.60:/opt/kubernetes/bin/

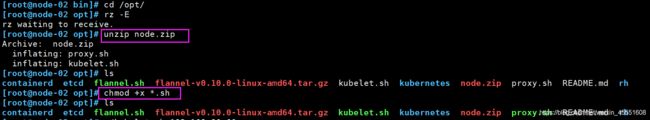

② On node01 and node02 (upload node.zip to /opt and extract it there)

[root@node-01 bin]# cd /opt/

[root@node-01 opt]# rz -E    # upload node.zip

[root@node-01 opt]# unzip node.zip    # extracts kubelet.sh and proxy.sh

Archive: node.zip

inflating: proxy.sh

inflating: kubelet.sh

[root@node-01 opt]# chmod +x *.sh

③ On the master

// Upload kubeconfig.sh

[root@master bin]# cd /opt/k8s/

[root@master k8s]# mkdir kubeconfig

[root@master k8s]# cd kubeconfig/

[root@master kubeconfig]# pwd

/opt/k8s/kubeconfig

[root@master kubeconfig]# rz -E

[root@master kubeconfig]# chmod +x kubeconfig.sh

[root@master kubeconfig]# cat /opt/kubernetes/cfg/token.csv

0fb61c46f8991b718eb38d27b605b008,kubelet-bootstrap,10001,"system:kubelet-bootstrap"


⑤ Set the client authentication parameters (the --token value must match the token in token.csv):

kubectl config set-credentials kubelet-bootstrap \
  --token=0fb61c46f8991b718eb38d27b605b008 \
  --kubeconfig=bootstrap.kubeconfig

⑥ Add the Kubernetes binaries to PATH (this line can also be added to /etc/profile)

[root@master kubeconfig]# export PATH=$PATH:/opt/kubernetes/bin/

[root@master kubeconfig]# kubectl get cs

NAME                 STATUS    MESSAGE             ERROR
etcd-2               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
scheduler            Healthy   ok
etcd-0               Healthy   {"health":"true"}
controller-manager   Healthy   ok

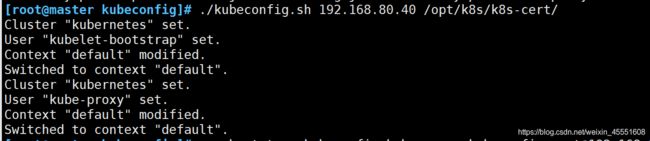

[root@master kubeconfig]# ./kubeconfig.sh 192.168.80.40 /opt/k8s/k8s-cert/

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" modified.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" modified.

Switched to context "default".

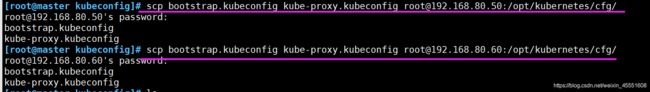
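The output above shows four kubectl config steps for each file: set the cluster, set the user, modify the context, switch to it. A sketch of that sequence for bootstrap.kubeconfig, using real kubectl config subcommands; the function name, its arguments and the use of port 6443 (taken from the netstat output above) are assumptions about what kubeconfig.sh does:

```shell
#!/usr/bin/env bash
# Hypothetical re-creation of the bootstrap.kubeconfig half of kubeconfig.sh.
# Args: apiserver IP, directory holding ca.pem, bootstrap token.
make_bootstrap_kubeconfig() {
  local apiserver=$1 cert_dir=$2 token=$3
  local out=bootstrap.kubeconfig
  # Cluster entry: apiserver URL plus the CA that signed its certificate
  kubectl config set-cluster kubernetes \
    --certificate-authority="${cert_dir%/}/ca.pem" \
    --embed-certs=true \
    --server="https://${apiserver}:6443" \
    --kubeconfig="$out"
  # User entry: authenticate with the token from token.csv
  kubectl config set-credentials kubelet-bootstrap \
    --token="$token" \
    --kubeconfig="$out"
  # Context tying the cluster and the user together
  kubectl config set-context default \
    --cluster=kubernetes \
    --user=kubelet-bootstrap \
    --kubeconfig="$out"
  kubectl config use-context default --kubeconfig="$out"
}
```

For example: make_bootstrap_kubeconfig 192.168.80.40 /opt/k8s/k8s-cert/ 0fb61c46f8991b718eb38d27b605b008. The kube-proxy.kubeconfig half would presumably pass --client-certificate and --client-key (kube-proxy.pem and kube-proxy-key.pem) to set-credentials instead of a token.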

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.80.50:/opt/kubernetes/cfg/

[root@master kubeconfig]# scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.80.60:/opt/kubernetes/cfg/

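⑨ Bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role, which lets it connect to the apiserver and request certificate signing (critical step)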

[root@master kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

// View the role

[root@master kubeconfig]# kubectl get clusterroles | grep system:node-bootstrapper

system:node-bootstrapper 66m

// View the cluster role bindings

[root@master kubeconfig]# kubectl get clusterrolebinding

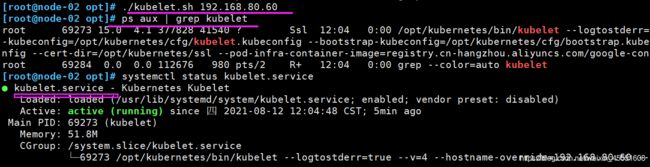

⑩ On node01 and node02

// Start the kubelet service, passing this node's own IP (192.168.80.60 on node02)

[root@node-01 opt]# ./kubelet.sh 192.168.80.50

// Check that kubelet is running

[root@node-01 opt]# ps aux | grep kubelet

root 30655 4.0 4.1 377828 41160 ? Ssl 12:04 0:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=192.168.80.50 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --config=/opt/kubernetes/cfg/kubelet.config --cert-dir=/opt/kubernetes/ssl --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

root 30672 0.0 0.0 112676 972 pts/2 R+ 12:04 0:00 grep --color=auto kubelet

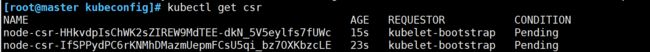

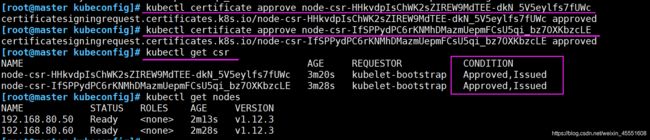

[root@master kubeconfig]# kubectl get csr

NAME                                                   AGE   REQUESTOR           CONDITION
node-csr-HHkvdpIsChWK2sZIREW9MdTEE-dkN_5V5eylfs7fUWc   15s   kubelet-bootstrap   Pending
node-csr-IfSPPydPC6rKNMhDMazmUepmFCsU5qi_bz7OXKbzcLE   23s   kubelet-bootstrap   Pending

Pending means the node is waiting for the cluster to issue its certificate.

[root@master kubeconfig]# kubectl certificate approve node-csr-HHkvdpIsChWK2sZIREW9MdTEE-dkN_5V5eylfs7fUWc

certificatesigningrequest.certificates.k8s.io/node-csr-HHkvdpIsChWK2sZIREW9MdTEE-dkN_5V5eylfs7fUWc approved

[root@master kubeconfig]# kubectl certificate approve node-csr-IfSPPydPC6rKNMhDMazmUepmFCsU5qi_bz7OXKbzcLE

certificatesigningrequest.certificates.k8s.io/node-csr-IfSPPydPC6rKNMhDMazmUepmFCsU5qi_bz7OXKbzcLE approved

[root@master kubeconfig]# kubectl get csr

NAME                                                   AGE     REQUESTOR           CONDITION
node-csr-HHkvdpIsChWK2sZIREW9MdTEE-dkN_5V5eylfs7fUWc   3m20s   kubelet-bootstrap   Approved,Issued
node-csr-IfSPPydPC6rKNMhDMazmUepmFCsU5qi_bz7OXKbzcLE   3m28s   kubelet-bootstrap   Approved,Issued
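With more nodes, approving requests one name at a time gets tedious. kubectl certificate approve accepts several names at once, so the pending ones can be picked out of the kubectl get csr table. A sketch (pending_csr_names is a hypothetical helper); outside a lab, check the REQUESTOR column before approving anything:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of requests whose last column (CONDITION) is Pending.
pending_csr_names() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Usage: kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve (xargs -r is a GNU option that skips the call when nothing is pending).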

[root@master kubeconfig]# kubectl get nodes

NAME            STATUS   ROLES    AGE     VERSION
192.168.80.50   Ready    <none>   2m13s   v1.12.3
192.168.80.60   Ready    <none>   2m28s   v1.12.3

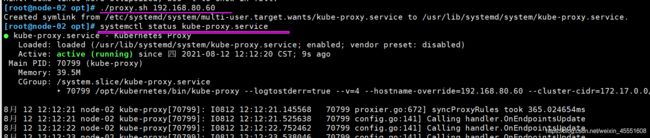

⑬ On node01 and node02, start the proxy service

[root@node-01 opt]# ./proxy.sh 192.168.80.50

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

[root@node-01 opt]# systemctl status kube-proxy.service

● kube-proxy.service - Kubernetes Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since Thu 2021-08-12 12:10:50 CST; 9s ago

Main PID: 31826 (kube-proxy)

Memory: 37.4M

CGroup: /system.slice/kube-proxy.service