Python open-source project VQFR: Face Restoration in practice (deblurring, scratch repair, and black-and-white colorization)

For installing Python, Anaconda, and related tools, see:

Python open-source project CodeFormer: Face Restoration in practice (deblurring, scratch repair, and black-and-white colorization)

https://blog.csdn.net/beijinghorn/article/details/134334021

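VQFR is another work from Tencent ARC Lab. It stays fairly close to the ideas of VQGAN (Taming Transformers, from the CompVis group in Germany). It is somewhat slow, but the results are acceptable. On Chinese faces, however, the results are only average. The code is fairly compact. The author ported it to C++/C# with Python2Sharp, and the port's speed and quality are acceptable.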

6 VQFR (ECCV 2022 Oral)

https://github.com/TencentARC/VQFR

https://github.com/TencentARC/VQFR/releases (model downloads)


Colab Demo for VQFR

https://colab.research.google.com/drive/1Nd_PUrHaYmeEAOF5f_Zi0VuOxlJ62gLr?usp=sharing

Online demo: Replicate.ai (may require signing in; returns the whole image)

https://replicate.com/tencentarc/vqfr

6.1 Updates

2022.10.16 Cleaned up the research code and released VQFR-v2. This version emphasizes the restoration quality of the texture branch and lets the user control the balance between quality and fidelity.

Added support for enhancing non-face regions (background) with Real-ESRGAN.

Created the Colab demo of VQFR.

Released the training/inference code and the pretrained models used in the paper.

This paper aims at investigating the potential and limitation of Vector-Quantized (VQ) dictionary for blind face restoration.

We propose a new framework, VQFR, which incorporates a Vector-Quantized dictionary and a Parallel Decoder. Compared with previous methods, VQFR produces more realistic facial details while keeping comparable fidelity.
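A vector-quantized dictionary relies on one core operation: a nearest-neighbour lookup. Each encoder feature vector is replaced by the closest entry of a learned codebook. Below is a minimal pure-Python sketch of that lookup. It assumes squared Euclidean distance, as in VQ-VAE/VQGAN, and uses toy codebook and feature values. It is not VQFR's implementation, which operates on PyTorch tensors and adds the parallel decoder.

```python
# Minimal sketch of a vector-quantized (VQ) dictionary lookup.
# Assumption: nearest neighbour under squared Euclidean distance (VQ-VAE/VQGAN style);
# toy values only, not VQFR's actual code.
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(features: List[Vector], codebook: List[Vector]) -> Tuple[List[int], List[Vector]]:
    """Replace each feature vector with its nearest codebook entry.

    Returns the chosen code indices and the quantized vectors.
    """
    indices: List[int] = []
    quantized: List[Vector] = []
    for f in features:
        # argmin over the codebook: index of the closest code vector
        best = min(range(len(codebook)), key=lambda k: squared_distance(f, codebook[k]))
        indices.append(best)
        quantized.append(codebook[best])
    return indices, quantized


if __name__ == "__main__":
    codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
    features = [[0.1, 0.2], [0.9, 0.8], [0.2, 0.9]]
    idx, q = quantize(features, codebook)
    print(idx, q)
```

Restoration then works with these discrete codes instead of raw features. That is why a codebook pretrained on high-quality faces can supply realistic detail.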

VQFR: Blind Face Restoration with Vector-Quantized Dictionary and Parallel Decoder

[Paper] [Project Page] [Video] [Bilibili] [Poster] [Slides]

Yuchao Gu, Xintao Wang, Liangbin Xie, Chao Dong, Gen Li, Ying Shan, Ming-Ming Cheng

Nankai University; Tencent ARC Lab; Tencent Online Video; Shanghai AI Laboratory; Shenzhen Institutes of Advanced Technology, Chinese Academy of Sciences

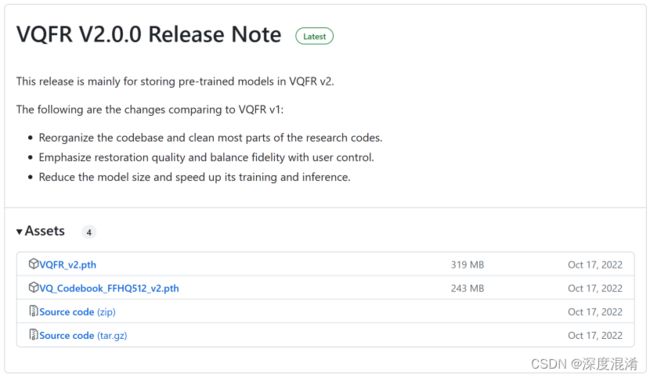

Release

Download the models from the Releases page: https://github.com/TencentARC/VQFR/releases

6.2 Dependencies and Installation

6.2.1 Dependencies

Python >= 3.7 (Recommend to use Anaconda or Miniconda)

PyTorch >= 1.7

Optional: NVIDIA GPU + CUDA

Optional: Linux
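Below is a small sketch that checks the minimum versions listed above (Python >= 3.7, PyTorch >= 1.7). PyTorch is probed only if it is installed, and the CUDA line is informational because GPU support is optional. The version parser is a simplified helper written for this example and is not part of VQFR.

```python
# Sketch: check the documented minimum versions (Python >= 3.7, PyTorch >= 1.7).
# parse_version is a simplified, hypothetical helper; it is not part of VQFR.
import importlib
import re
import sys
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a version string such as '1.13.1+cu117' into (1, 13, 1).

    Only the leading digits of each dot-separated part are kept; parsing stops
    at the first part that does not start with a digit.
    """
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_environment() -> None:
    """Print whether the documented minimum versions are met."""
    py_ok = sys.version_info >= (3, 7)
    print(f"Python {sys.version.split()[0]}: {'OK' if py_ok else 'need >= 3.7'}")
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        print("PyTorch: not installed (need >= 1.7)")
        return
    torch_ok = parse_version(torch.__version__) >= (1, 7)
    print(f"PyTorch {torch.__version__}: {'OK' if torch_ok else 'need >= 1.7'}")
    # GPU + CUDA is listed as optional, so this line is informational only
    print(f"CUDA available: {torch.cuda.is_available()}")


if __name__ == "__main__":
    check_environment()
```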

6.2.2 Installation

6.2.2.1 Clone repo

git clone https://github.com/TencentARC/VQFR.git

cd VQFR

6.2.2.2 Install dependent packages

# Build VQFR with extension

pip install -r requirements.txt

VQFR_EXT=True python setup.py develop

# The following packages are required to run demo.py

# Install basicsr - https://github.com/xinntao/BasicSR

pip install basicsr

# Install facexlib - https://github.com/xinntao/facexlib

# We use the face detection and face restoration helpers from the facexlib package

pip install facexlib

# If you want to enhance the background (non-face) regions with Real-ESRGAN,

# you also need to install the realesrgan package

pip install realesrgan
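After installation, you can quickly confirm that everything is importable. The sketch below uses `importlib.util.find_spec`, which looks up a module without importing it. It assumes that the import names match the pip names (`basicsr`, `facexlib`, `realesrgan`). It also assumes that `python setup.py develop` installs a package importable as `vqfr`, which the `vqfr/train.py` path suggests but this post does not state.

```python
# Sketch: confirm the packages installed above can be found by Python.
# Assumption: import names equal the pip names, and the repo itself installs as "vqfr".
import importlib.util
from typing import Dict, Iterable

REQUIRED = ("vqfr", "basicsr", "facexlib")
OPTIONAL = ("realesrgan",)  # only needed to enhance background (non-face) regions


def find_packages(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for group, names in (("required", REQUIRED), ("optional", OPTIONAL)):
        for name, found in find_packages(names).items():
            print(f"[{group}] {name}: {'found' if found else 'MISSING'}")
```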

6.3 Quick Inference

Download the pretrained VQFR v1/v2 models from Google Drive, or the v2 files from the GitHub release:

https://drive.google.com/drive/folders/1lczKYEbARwe27FJlKoFdng7UnffGDjO2?usp=sharing

https://github.com/TencentARC/VQFR/releases/download/v2.0.0/VQFR_v2.pth

https://github.com/TencentARC/VQFR/releases/download/v2.0.0/VQ_Codebook_FFHQ512_v2.pth
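Below is a minimal download helper for the two v2 release files listed above, using only the standard library. It assumes the checkpoints belong in `experiments/pretrained_models/`, the folder this post uses for the codebook and other weights. Check `demo.py` for the exact paths it loads. The `__main__` block only prints the target paths, so that running it does not start a large download.

```python
# Sketch: fetch the v2 checkpoints listed above with the standard library.
# Assumption: demo.py looks in experiments/pretrained_models/; verify against demo.py.
import os
import urllib.parse
import urllib.request

URLS = [
    "https://github.com/TencentARC/VQFR/releases/download/v2.0.0/VQFR_v2.pth",
    "https://github.com/TencentARC/VQFR/releases/download/v2.0.0/VQ_Codebook_FFHQ512_v2.pth",
]
TARGET_DIR = os.path.join("experiments", "pretrained_models")


def target_path(url: str, folder: str = TARGET_DIR) -> str:
    """Local path for a URL: folder plus the last component of the URL path."""
    name = os.path.basename(urllib.parse.urlparse(url).path)
    return os.path.join(folder, name)


def download(url: str, folder: str = TARGET_DIR) -> str:
    """Download url into folder unless the file already exists; return the path."""
    path = target_path(url, folder)
    if not os.path.exists(path):
        os.makedirs(folder, exist_ok=True)
        urllib.request.urlretrieve(url, path)
    return path


if __name__ == "__main__":
    for u in URLS:
        print(target_path(u))  # call download(u) to actually fetch the file
```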

Inference

# for real-world image

python demo.py -i inputs/whole_imgs -o results -v 2.0 -s 2 -f 0.1

# for cropped face

python demo.py -i inputs/cropped_faces/ -o results -v 2.0 -s 1 -f 0.1 --aligned
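If you want to run the two commands above from Python, for example in a batch script, you can build the argument list and pass it to `subprocess`. The sketch below uses only the flags that appear in those commands. `build_demo_cmd` and `run_demo` are hypothetical helpers, and the range check on the fidelity ratio follows the documented range [0, 1].

```python
# Sketch: drive demo.py from Python. Only flags from the commands above are used;
# build_demo_cmd and run_demo are hypothetical helpers, not part of VQFR.
import subprocess
import sys
from typing import List


def build_demo_cmd(input_path: str, output_dir: str = "results", version: str = "2.0",
                   upscale: int = 2, fidelity: float = 0.1, aligned: bool = False) -> List[str]:
    """Assemble the demo.py argument list."""
    if not 0.0 <= fidelity <= 1.0:
        raise ValueError("fidelity ratio must lie in [0, 1]")
    cmd = [sys.executable, "demo.py", "-i", input_path, "-o", output_dir,
           "-v", version, "-s", str(upscale), "-f", str(fidelity)]
    if aligned:
        cmd.append("--aligned")  # inputs are already cropped and aligned faces
    return cmd


def run_demo(cmd: List[str]) -> None:
    """Run the command from the VQFR repo root; raises if demo.py fails."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    print(" ".join(build_demo_cmd("inputs/whole_imgs")[1:]))
    print(" ".join(build_demo_cmd("inputs/cropped_faces/", upscale=1, aligned=True)[1:]))
```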

Usage: python demo.py -i inputs/whole_imgs -o results -v 2.0 -s 2 -f 0.1 [options]...

-h show this help

-i input Input image or folder. Default: inputs/whole_imgs

-o output Output folder. Default: results

-v version VQFR model version. Options: 1.0 | 2.0. Default: 1.0

-f fidelity_ratio Fidelity ratio (VQFRv2 only), in the range [0, 1]: 0 gives the best quality and 1 the best fidelity. Default: 0

-s upscale The final upsampling scale of the image. Default: 2

--bg_upsampler Background upsampler. Default: realesrgan

--bg_tile Tile size for the background upsampler; 0 means no tiling during testing. Default: 400

--suffix Suffix of the restored faces

--only_center_face Only restore the center face

--aligned Inputs are aligned faces

--ext Image extension. Options: auto | jpg | png; auto uses the same extension as the input. Default: auto
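The background tile size option (bg_tile, default 400) controls how the background upsampler splits a large image. Instead of processing the whole image at once, it works on tile-by-tile patches, which lowers peak GPU memory at some cost in speed. The sketch below only computes the tile grid to show what the number means. It is a generic illustration: Real-ESRGAN's own tiler also pads each tile (its `tile_pad` parameter), which is omitted here.

```python
# Illustration of a tile size: split a width x height image into tile x tile boxes.
# Generic sketch; Real-ESRGAN's tiler additionally pads tiles, which is omitted here.
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive


def tile_boxes(width: int, height: int, tile: int) -> List[Box]:
    """Return the patches processed one at a time; tile <= 0 means no tiling."""
    if tile <= 0:
        return [(0, 0, width, height)]  # whole image in one pass
    boxes = []
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            # edge tiles are clipped to the image border
            boxes.append((left, top, min(left + tile, width), min(top + tile, height)))
    return boxes


if __name__ == "__main__":
    for box in tile_boxes(1000, 600, 400):
        print(box)
```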

6.4 Training

We provide the training code for VQFR (as used in our paper).

Dataset preparation: FFHQ

https://github.com/NVlabs/ffhq-dataset

Download the LPIPS weights from Google Drive into experiments/pretrained_models/

https://drive.google.com/drive/folders/1weXfn5mdIwp2dEfDbNNUkauQgo8fx-2D?usp=sharing

Codebook Training

Pre-train the VQ codebook on the FFHQ dataset.

python -m torch.distributed.launch --nproc_per_node=8 --master_port=2022 vqfr/train.py -opt options/train/VQGAN/train_vqgan_v1_B16_800K.yml --launcher pytorch

Or download our pretrained VQ codebook from Google Drive and put it in the experiments/pretrained_models folder.

https://drive.google.com/drive/folders/1lczKYEbARwe27FJlKoFdng7UnffGDjO2?usp=sharing

Restoration Training

Modify the configuration file options/train/VQFR/train_vqfr_v1_B16_200K.yml accordingly.

Training

python -m torch.distributed.launch --nproc_per_node=8 --master_port=2022 vqfr/train.py -opt options/train/VQFR/train_vqfr_v1_B16_200K.yml --launcher pytorch
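The two training stages run in order: first the codebook, then restoration. Both use the same launcher, and only the config file changes. The sketch below rebuilds those command lines from the config path, GPU count, and port, so they can be printed or adapted in a script. `train_cmd` is a hypothetical helper, and the defaults mirror the commands above. The sketch does not address whether the YAML settings need adjusting when you use fewer GPUs.

```python
# Sketch: rebuild the distributed training commands above for a given config.
# train_cmd is a hypothetical helper; defaults mirror the README commands.
import shlex
import sys
from typing import List


def train_cmd(config: str, gpus: int = 8, port: int = 2022) -> List[str]:
    """Argument list for one training stage, run from the VQFR repo root."""
    return [sys.executable, "-m", "torch.distributed.launch",
            f"--nproc_per_node={gpus}", f"--master_port={port}",
            "vqfr/train.py", "-opt", config, "--launcher", "pytorch"]


if __name__ == "__main__":
    stages = ("options/train/VQGAN/train_vqgan_v1_B16_800K.yml",   # 1) codebook
              "options/train/VQFR/train_vqfr_v1_B16_200K.yml")    # 2) restoration
    for cfg in stages:
        print(shlex.join(train_cmd(cfg)))
```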

6.5 Evaluation

We evaluate VQFR on one synthetic dataset, CelebA-Test, and three real-world datasets: LFW-Test, CelebChild, and Webphoto-Test. To reproduce our evaluation results, perform the following steps:

1. Download the testing datasets (or the VQFR results) from the following links:

| Name | Dataset | Short description | Download | VQFR results |
|---|---|---|---|---|
| Testing Datasets | CelebA-Test (LQ/HQ) | 3000 (LQ, HQ) synthetic images for testing | Google Drive | Google Drive |
| | LFW-Test (LQ) | 1711 real-world images for testing | | |
| | CelebChild (LQ) | 180 real-world images for testing | | |
| | Webphoto-Test (LQ) | 469 real-world images for testing | | |
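Before running the metrics, it is worth checking that each download is complete. The sketch below counts image files per folder and compares them with the counts in the table. It assumes each set is a flat folder of `.png`/`.jpg` files. The folder mapping passed to `check_datasets` is up to you, and any paths you use are placeholders. For CelebA-Test, check the LQ and HQ folders separately.

```python
# Sketch: compare downloaded test sets with the image counts in the table above.
# Assumption: each set is a flat folder of .png/.jpg files; folder paths are placeholders.
import os
from typing import Dict, Iterable

EXPECTED = {"CelebA-Test": 3000, "LFW-Test": 1711, "CelebChild": 180, "Webphoto-Test": 469}
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def count_image_names(names: Iterable[str]) -> int:
    """Count file names whose extension looks like an image (case-insensitive)."""
    return sum(1 for name in names if os.path.splitext(name)[1].lower() in IMAGE_EXTS)


def count_images(folder: str) -> int:
    """Count image files directly inside folder (subfolders are not searched)."""
    files = (n for n in os.listdir(folder) if os.path.isfile(os.path.join(folder, n)))
    return count_image_names(files)


def check_datasets(roots: Dict[str, str]) -> Dict[str, bool]:
    """Map each dataset name (a key of EXPECTED) to whether its folder has the expected count."""
    return {name: count_images(path) == EXPECTED[name] for name, path in roots.items()}
```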

2. Install the related packages and download the pretrained models for each metric:

# LPIPS

pip install lpips

# Deg.

cd metric_paper/

git clone https://github.com/ronghuaiyang/arcface-pytorch.git

mv arcface-pytorch/ arcface/

rm arcface/config/__init__.py arcface/models/__init__.py

# put pretrained models of different metrics to "experiments/pretrained_models/metric_weights/"

| Metric | Pretrained weights | Download |
|---|---|---|
| FID | inception_FFHQ_512.pth | Google Drive |
| Deg. | resnet18_110.pth | |
| LMD | alignment_WFLW_4HG.pth | |
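Deg. and LMD both measure how far the restored face is from the ground truth. The sketch below shows how such distances are typically computed, under two assumptions. First, that Deg. is the angle in degrees between ArcFace identity embeddings (the script name `calculate_cos_dist.py` points to a cosine-based measure). Second, that LMD is the mean Euclidean distance between corresponding facial landmarks. The embeddings and landmarks would come from the `resnet18_110.pth` and `alignment_WFLW_4HG.pth` models, which are not loaded here. This is not the code in `metric_paper/`.

```python
# Sketch of identity-angle (Deg.) and landmark-distance (LMD) style metrics.
# Assumption: Deg. = angle between identity embeddings, LMD = mean landmark L2 distance;
# not the metric_paper/ scripts, and no models are loaded here.
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def identity_degree(emb_restored: Sequence[float], emb_gt: Sequence[float]) -> float:
    """Angle in degrees between two identity embeddings (0 means same direction)."""
    dot = sum(a * b for a, b in zip(emb_restored, emb_gt))
    norm_r = math.sqrt(sum(a * a for a in emb_restored))
    norm_g = math.sqrt(sum(b * b for b in emb_gt))
    cos_sim = dot / (norm_r * norm_g)
    cos_sim = max(-1.0, min(1.0, cos_sim))  # guard acos against rounding error
    return math.degrees(math.acos(cos_sim))


def landmark_distance(restored: Sequence[Point], gt: Sequence[Point]) -> float:
    """Mean Euclidean distance between corresponding landmarks."""
    if len(restored) != len(gt) or not gt:
        raise ValueError("landmark lists must be non-empty and equally long")
    total = sum(math.hypot(r[0] - g[0], r[1] - g[1]) for r, g in zip(restored, gt))
    return total / len(gt)
```

Lower is better for both metrics: 0 degrees means identical identity direction, and 0 LMD means the landmarks coincide.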

Generate restoration results:

Set dataset_lq/dataset_gt in test_vqfr_v1.yml to the root folders of the testing dataset.

Then run the following command:

python vqfr/test.py -opt options/test/VQFR/test_vqfr_v1.yml

Run evaluation:

# LPIPS|PSNR/SSIM|LMD|Deg.

python metric_paper/[calculate_lpips.py|calculate_psnr_ssim.py|calculate_landmark_distance.py|calculate_cos_dist.py] \
  -restored_folder folder_to_results -gt_folder folder_to_gt

# FID|NIQE

python metric_paper/[calculate_fid_folder.py|calculate_niqe.py] -restored_folder folder_to_results
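PSNR, one of the full-reference metrics above, follows the standard formula PSNR = 10 · log10(MAX² / MSE), where MSE is the mean squared pixel error against the ground truth. Below is a pure-Python sketch on flat pixel lists with MAX = 255. It illustrates the formula only. `calculate_psnr_ssim.py` may additionally crop borders, convert to the Y channel, or average over images, so its numbers can differ.

```python
# Sketch of PSNR on flat pixel lists: 10 * log10(MAX^2 / MSE).
# Illustration only; the repo's script may crop borders or use the Y channel.
import math
from typing import Sequence


def psnr(restored: Sequence[float], gt: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(restored) != len(gt) or not gt:
        raise ValueError("inputs must be non-empty and equally long")
    mse = sum((r - g) ** 2 for r, g in zip(restored, gt)) / len(gt)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    print(round(psnr([10, 20, 30, 40], [12, 20, 29, 40]), 2))
```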

6.6 License

VQFR is released under the Apache License, Version 2.0.

6.7 Acknowledgement

Thanks to the following open-source projects:

Taming-transformers

https://github.com/CompVis/taming-transformers

GFPGAN

https://github.com/TencentARC/GFPGAN

DistSup

https://github.com/distsup/DistSup

6.8 Citation

@inproceedings{gu2022vqfr,
  title={VQFR: Blind Face Restoration with Vector-Quantized Dictionary and Parallel Decoder},
  author={Gu, Yuchao and Wang, Xintao and Xie, Liangbin and Dong, Chao and Li, Gen and Shan, Ying and Cheng, Ming-Ming},
  year={2022},
  booktitle={ECCV}
}

6.9 Contact

If you have any questions, please email [email protected].