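K8S Cluster Installation

1. k8s Quick Start

1) Introduction

Kubernetes, abbreviated k8s, is an open-source system for automating the deployment, scaling, and management of containerized applications.

Official site (Chinese): https://kubernetes.io/zh/

Chinese community: https://www.kubernetes.org.cn/

Official documentation: https://kubernetes.io/zh/docs/home/

Community documentation: http://docs.kubernetes.org.cn/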

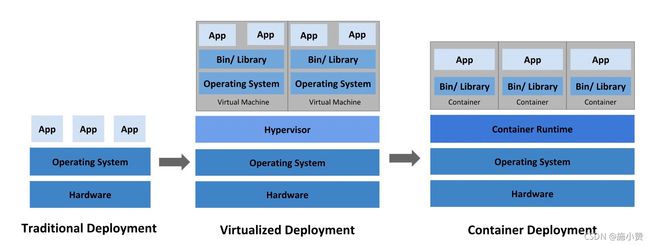

- Evolution of deployment approaches

https://kubernetes.io/zh/docs/concepts/overview/what-is-kubernetes/

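2) Architecture

1. Overall master/worker layout

2. Master node architecture

- kube-apiserver

1. Exposes the k8s API and is the only entry point for resource operations from outside.

2. Provides authentication, authorization, access control, and API registration and discovery.

- etcd

1. etcd is a consistent, highly available key-value store that can serve as the backing database for all Kubernetes cluster data.

2. The etcd database of a Kubernetes cluster usually needs a backup plan.

- kube-scheduler

1. A master-node component that watches for newly created Pods with no assigned node and selects a node for each of them to run on.

2. All k8s cluster operations must be scheduled through the master node.

- kube-controller-manager

1. The component that runs the controllers on the master node.

2. These controllers include: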

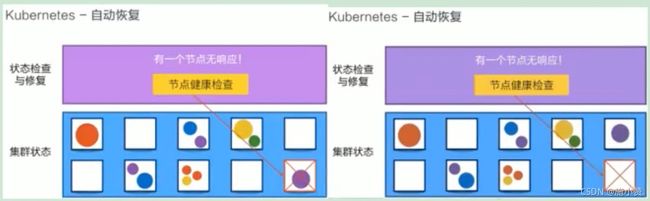

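Node Controller: responsible for noticing and responding when nodes go down.

Replication Controller: responsible for maintaining the correct number of Pods for every replication controller object in the system.

Endpoints Controller: populates Endpoints objects (that is, joins Services and Pods).

Service Account & Token Controllers: create default accounts and API access tokens for new namespaces.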

- cloud-controller-manager

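3. Node architecture

- kubelet

1. An agent that runs on every node in the cluster. It makes sure that containers are running in a Pod.

2. Maintains the container lifecycle and also manages volumes (CSI) and networking (CNI).

- kube-proxy

1. Provides in-cluster service discovery and load balancing for Services.

- Container Runtime

1. The container runtime is the software responsible for running containers.

2. Kubernetes supports several container runtimes: Docker, containerd, cri-o, rktlet, and any implementation of the Kubernetes CRI (Container Runtime Interface).

- fluentd

1. A daemon that helps provide cluster-level logging.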

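3) Concepts

- Container: a container, for example one started by Docker

- Pod:

k8s uses a Pod to group a set of containers

All containers in a Pod share the same network

A Pod is the smallest deployable unit in k8s

- Volume

Declares a file directory that the containers in a Pod can access

Can be mounted at a specified path in one or more containers of a Pod

Supports multiple backend storage abstractions (local storage, distributed storage, cloud storage, ...)

- Controllers: higher-level objects that deploy and manage Pods

ReplicaSet: ensures the expected number of Pod replicas

Deployment: deploys stateless applications

StatefulSet: deploys stateful applications

DaemonSet: ensures every Node runs a copy of a given Pod

Job: one-off task

CronJob: scheduled task

- Deployment:

Defines the replica count, version, and so on for a set of Pods

A controller maintains the Pod count (automatically recovering failed Pods)

A controller rolls out versions according to a specified strategy (rolling upgrade, rollback, and so on)

- Service

Defines the access policy for a set of Pods

Load-balances across Pods and provides a stable access address for one or more Pods

- Label: a label, used to query and filter resource objects

- Namespace: a namespace, for logical isolation

A logical isolation mechanism inside a cluster (authorization, resources)

Every namespaced resource belongs to exactly one namespace (a few kinds, such as Nodes, are cluster-scoped)

Within one namespace, resource names of the same kind must be unique

Resource names may repeat across different namespaces

API:

We operate the whole cluster through the Kubernetes API

kubectl, a UI, or curl ultimately sends an HTTP request carrying JSON or YAML to the API Server, which then controls the k8s cluster. Every resource object in k8s can be defined or described in a YAML or JSON file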
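As a hedged illustration of describing resources in YAML, the sketch below writes a Deployment and a Service manifest to a scratch file. The names used here (`demo-nginx`, the `nginx:1.17` image, the file name, and the `DEMO_DIR` variable) are assumptions chosen for illustration and do not come from these notes.

```shell
# Hypothetical example: write a Deployment + Service manifest to a scratch directory.
# DEMO_DIR, demo-nginx and nginx:1.17 are illustrative names, not part of the notes.
DEMO_DIR="${DEMO_DIR:-$(mktemp -d)}"

cat > "$DEMO_DIR/demo-nginx.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-nginx
  namespace: default
  labels:
    app: demo-nginx
spec:
  replicas: 2                 # desired number of Pod replicas
  selector:
    matchLabels:
      app: demo-nginx         # the Deployment manages Pods carrying this label
  template:
    metadata:
      labels:
        app: demo-nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.17
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: demo-nginx
  namespace: default
spec:
  selector:
    app: demo-nginx           # route traffic to Pods with this label
  ports:
  - port: 80
    targetPort: 80
EOF

echo "manifest written to $DEMO_DIR/demo-nginx.yaml"
```

On a running cluster the file could then be submitted with `kubectl apply -f "$DEMO_DIR/demo-nginx.yaml"`, which sends the same definitions to the API Server.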

4) Quick tour

1. Install minikube

https://github.com/kubernetes/minikube/releases

2. k8s cluster installation

Prepare three virtual machines

| Host | IP for shell connections | IP for k8s cluster internal communication |
| --- | --- | --- |
| k8s-node1 | 192.168.54.161 | 172.16.74.143 |
| k8s-node2 | 192.168.54.162 | 172.16.74.142 |
| k8s-node3 | 192.168.54.163 | 172.16.74.141 |

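Prerequisite: prepare the network environment

① Two network adapters: one for external network access and one for traffic between the virtual machines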

[root@k8s-node1 ~]# ip route show

default via 192.168.54.2 dev ens33 proto static metric 100

default via 172.16.74.1 dev ens37 proto static metric 101

172.16.74.0/24 dev ens37 proto kernel scope link src 172.16.74.143 metric 101

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

172.244.0.0/24 dev cni0 proto kernel scope link src 172.244.0.1

172.244.1.0/24 via 172.244.1.0 dev flannel.1 onlink

172.244.2.0/24 via 172.244.2.0 dev flannel.1 onlink

192.168.54.0/24 dev ens33 proto kernel scope link src 192.168.54.161 metric 100

192.168.122.0/24 dev virbr0 proto kernel scope link src 192.168.122.1

1. Disable the firewall

[root@k8s-node1 ~]# systemctl stop firewalld

[root@k8s-node1 ~]# systemctl disable firewalld

2. Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

3. Disable swap

swapoff -a # temporary

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent

Verify with free -g; swap must be 0.

Before:

[root@k8s-node1 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Mon Jul 6 14:29:36 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=86fdaab6-5347-4856-9a59-66bdad7be4be /boot xfs defaults 0 0

/dev/mapper/centos-swap swap swap defaults 0 0

After (the swap entry is commented out):

[root@k8s-node1 ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Mon Jul 6 14:29:36 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=86fdaab6-5347-4856-9a59-66bdad7be4be /boot xfs defaults 0 0

#/dev/mapper/centos-swap swap swap defaults 0 0
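To see what the `sed` expression from the swap step does without touching `/etc/fstab`, here is a minimal sketch that runs it against a throwaway file. The two-line sample file is made up for illustration, and `-i` is dropped so the result goes to standard output.

```shell
# Demonstrate the swap-commenting sed expression on a throwaway copy of fstab.
# The sample content is illustrative; nothing under /etc is touched.
sample_fstab="$(mktemp)"
cat > "$sample_fstab" <<'EOF'
/dev/mapper/centos-root / xfs defaults 0 0
/dev/mapper/centos-swap swap swap defaults 0 0
EOF

# Same expression as in the notes, without -i:
# every line containing "swap" is replaced by "#" followed by the whole match (&).
commented="$(sed -r 's/.*swap.*/#&/' "$sample_fstab")"
printf '%s\n' "$commented"
rm -f "$sample_fstab"
```

Because the pattern matches any line containing "swap", running the command a second time prepends another `#` to the line that is already commented out. That is harmless, since the line stays a comment.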

The hostname must not be localhost

[root@k8s-node1 ~]# hostname

k8s-node1

4. Add hostname-to-IP mappings

vim /etc/hosts

172.16.74.143 k8s-node1

172.16.74.142 k8s-node2

172.16.74.141 k8s-node3

Pass bridged IPv4 traffic to the iptables chains:
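The `cat > /etc/sysctl.d/k8s.conf` command in this step is cut off in these notes, so the file content is not known. As a hedged sketch, the two bridge-netfilter keys below are the settings commonly used for this purpose; they are an assumption, not the author's exact file. `write_k8s_sysctl` is a hypothetical helper that takes the target directory as an argument so it can be tried outside `/etc`.

```shell
# Sketch only: the here-document body is missing from the notes, so these two
# keys are the commonly used bridge-netfilter settings, given as an assumption.
# write_k8s_sysctl is a hypothetical helper; pass /etc/sysctl.d on a real node.
write_k8s_sysctl() {
  target_dir="$1"
  cat > "$target_dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}
```

On a node this would be called as `write_k8s_sysctl /etc/sysctl.d` and followed by `sysctl --system` to load the settings.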

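cat > /etc/sysctl.d/k8s.conf<

5. Install docker, kubeadm, kubelet, and kubectl on all nodes

For this Kubernetes version the default container runtime (CRI) is Docker, so install Docker first

1) Install Docker; see the Docker notes

https://note.youdao.com/s/LxErQCL8

2) Add the Aliyun yum repository

# The Kubernetes package sources are hosted abroad and are slow to reach, so switch to a mirror in China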
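The repository definition written by the `cat > /etc/yum.repos.d/kubernetes.repo` command is also cut off in these notes. The sketch below shows what such a file commonly looks like for the Aliyun mirror on CentOS 7. The `baseurl` and the disabled GPG checks are assumptions, and `write_k8s_repo` is a hypothetical helper that writes into a directory passed as an argument.

```shell
# Sketch only: the repo file body is missing from the notes. The baseurl below is
# the commonly used Aliyun mirror path for EL7 and is an assumption; verify it
# before use. write_k8s_repo is a hypothetical helper.
write_k8s_repo() {
  target_dir="$1"
  cat > "$target_dir/kubernetes.repo" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
repo_gpgcheck=0
EOF
}
```

On a node this would be called as `write_k8s_repo /etc/yum.repos.d`. Disabling GPG checks keeps the sketch short; enabling them with the mirror's keys is the safer choice.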

cat > /etc/yum.repos.d/kubernetes.repo<

# Install kubeadm, kubelet, and kubectl

yum list | grep kube # check that the yum repository offers kube-related packages

yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3

systemctl enable kubelet

systemctl start kubelet

Run the commands above on all three machines.
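After installing, it can help to confirm that all three tools report the pinned version on each machine. The helper below is a hypothetical sketch. It assumes the output formats printed by the 1.17-era tools (`v1.17.3`, `Kubernetes v1.17.3`, and `Client Version: v1.17.3`), and the `--short` flag of `kubectl version` has been removed in much newer releases.

```shell
# Hypothetical helper: confirm that kubeadm, kubelet and kubectl all report
# the expected version. Output formats are assumed from the 1.17-era tools.
check_kube_versions() {
  want="$1"   # for example v1.17.3
  kubeadm version -o short | grep -qxF "$want" \
    || { echo "kubeadm is not $want" >&2; return 1; }
  kubelet --version | grep -qxF "Kubernetes $want" \
    || { echo "kubelet is not $want" >&2; return 1; }
  kubectl version --client --short | grep -qxF "Client Version: $want" \
    || { echo "kubectl is not $want" >&2; return 1; }
}
```

A call such as `check_kube_versions v1.17.3` returns a non-zero status and names the first tool that does not match.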

6. Deploy the k8s master

Use master_images.sh to download the images

1) Initialize the master node

./master_images.sh # run on the master node
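The content of `master_images.sh` is not shown in these notes. A hypothetical sketch of such a pre-pull script is given below. It assumes the same Aliyun registry that is passed to `kubeadm init`, and an image list matching what kubeadm v1.17.3 is expected to need; `kubeadm config images list --kubernetes-version v1.17.3` prints the authoritative list.

```shell
# Hypothetical sketch of an image pre-pull script; the real master_images.sh
# is not shown in the notes. Image tags are assumed for kubeadm v1.17.3.
REGISTRY="registry.cn-hangzhou.aliyuncs.com/google_containers"
IMAGES="kube-apiserver:v1.17.3
kube-controller-manager:v1.17.3
kube-scheduler:v1.17.3
kube-proxy:v1.17.3
pause:3.1
etcd:3.4.3-0
coredns:1.6.5"

# Pull every control-plane image from the mirror; stop at the first failure.
pull_master_images() {
  for image in $IMAGES; do
    docker pull "$REGISTRY/$image" || return 1
  done
}
```

Calling `pull_master_images` on the master before `kubeadm init` means the images are already local when the control plane starts.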

kubeadm init \

--apiserver-advertise-address=172.16.74.143 \

--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \

--kubernetes-version=v1.17.3 \

--service-cidr=172.96.0.0/16 \

--pod-network-cidr=172.244.0.0/16

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config
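The `kubeadm init` flags used above can also be kept in a configuration file, which is easier to review and version. The sketch below writes an equivalent file using the `kubeadm.k8s.io/v1beta2` API, which is assumed to be the config version that matches kubeadm 1.17. `write_kubeadm_config` and the output path are hypothetical names.

```shell
# Hypothetical helper: write a kubeadm config file equivalent to the flags
# used in the notes. The v1beta2 API version is assumed to match kubeadm 1.17.
write_kubeadm_config() {
  cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.74.143
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.17.3
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  serviceSubnet: 172.96.0.0/16
  podSubnet: 172.244.0.0/16
EOF
}
```

After `write_kubeadm_config kubeadm-config.yaml`, the cluster could be initialized with `kubeadm init --config kubeadm-config.yaml` instead of the individual flags.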

Install and prepare the pod network

kubectl apply -f kube-flannel.yml

# To remove it: kubectl delete -f kube-flannel.yml

[root@k8s-node1 k8s]# kubectl get pods

No resources found in default namespace.

[root@k8s-node1 k8s]# kubectl get ns

NAME STATUS AGE

default Active 10m

kube-node-lease Active 10m

kube-public Active 10m

kube-system Active 10m

[root@k8s-node1 k8s]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7f9c544f75-k7vbw 1/1 Running 0 11m

kube-system coredns-7f9c544f75-zrqx8 1/1 Running 0 11m

kube-system etcd-k8s-node1 1/1 Running 0 11m

kube-system kube-apiserver-k8s-node1 1/1 Running 0 11m

kube-system kube-controller-manager-k8s-node1 1/1 Running 0 11m

kube-system kube-flannel-ds-amd64-t56wf 1/1 Running 0 2m29s

kube-system kube-proxy-khj4b 1/1 Running 0 11m

kube-system kube-scheduler-k8s-node1 1/1 Running 0 11m

If kube-flannel-ds-amd64 shows ImagePullBackOff, see the fix at the end of this document.

[root@k8s-node1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready master 13m v1.17.3

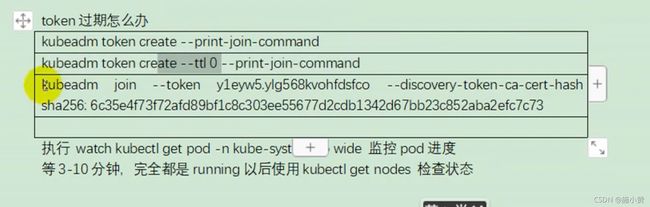

# Run on k8s-node2 and k8s-node3 to join the master

kubeadm join 172.16.74.133:6443 --token 3di9a8.oybdvm3vssjsanu5 \

--discovery-token-ca-cert-hash sha256:0d799243bbf7eccadd09925115af1fc5f710656dc979938bd08ebe3694894b36
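The token in a join command is printed by `kubeadm init` and expires after 24 hours by default. If it has expired or the output was lost, a fresh join command can be generated on the master. The wrapper name below is hypothetical; the `kubeadm` subcommand it calls is the standard one.

```shell
# Run on the master: create a new bootstrap token and print a ready-to-paste
# join command. print_join_command is a hypothetical wrapper name.
print_join_command() {
  kubeadm token create --print-join-command
}
```

The printed line is then run as root on each worker node in place of the original join command.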

[root@k8s-node1 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-node1 Ready master 15m v1.17.3

k8s-node2 NotReady <none> 34s v1.17.3

k8s-node3 NotReady <none> 29s v1.17.3
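Freshly joined nodes stay NotReady until their network pods are running. As a small sketch, the hypothetical helper below filters the `kubectl get nodes` output down to the nodes whose STATUS column is not exactly `Ready`, which is handy in a polling loop.

```shell
# Hypothetical helper: print the names of nodes whose STATUS is not "Ready".
# Relies on the column layout of `kubectl get nodes` (NAME first, STATUS second).
list_not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1 }'
}
```

An empty result means every listed node reports Ready. A cordoned node, whose status reads `Ready,SchedulingDisabled`, is also printed by this filter.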

Monitor pod progress

watch kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-7f9c544f75-k7vbw 1/1 Running 0 17m 172.244.0.3 k8s-node1

coredns-7f9c544f75-zrqx8 1/1 Running 0 17m 172.244.0.2 k8s-node1

etcd-k8s-node1 1/1 Running 0 17m 172.16.74.133 k8s-node1

kube-apiserver-k8s-node1 1/1 Running 0 17m 172.16.74.133 k8s-node1

kube-controller-manager-k8s-node1 1/1 Running 0 17m 172.16.74.133 k8s-node1

kube-flannel-ds-amd64-csb8l 1/1 Running 0 2m53s 172.16.74.132 k8s-node3

kube-flannel-ds-amd64-pzmn4 1/1 Running 0 2m58s 172.16.74.134 k8s-node2

kube-flannel-ds-amd64-t56wf 1/1 Running 0 8m5s 172.16.74.133 k8s-node1

kube-proxy-cm79j 1/1 Running 0 2m58s 172.16.74.134 k8s-node2

kube-proxy-fdsmh 1/1 Running 0 2m53s 172.16.74.132 k8s-node3

kube-proxy-khj4b 1/1 Running 0 17m 172.16.74.133 k8s-node1

kube-scheduler-k8s-node1 1/1 Running 0 17m 172.16.74.133 k8s-node1

172.16.74.143 is the master VM's IP on the internal network

[root@k8s-node1 ~]# ip route show

default via 192.168.54.2 dev ens37 proto static metric 101

172.16.74.0/24 dev ens33 proto kernel scope link src 172.16.74.143 metric 100

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.54.0/24 dev ens37 proto kernel scope link src 192.168.54.160 metric 101

192.168.122.0/24 dev virbr0 proto kernel scope link src 192.168.122.1

Fixing the kube-flannel-ds-amd64 ImagePullBackOff problem

[root@k8s-mater k8s]# kubectl describe pod kube-flannel-ds-amd64-fbzlm

Error from server (NotFound): pods "kube-flannel-ds-amd64-fbzlm" not found

[root@k8s-mater k8s]# kubectl describe pod kube-flannel-ds-amd64-fbzlm -n kube-system

Name: kube-flannel-ds-amd64-fbzlm

Namespace: kube-system

Priority: 0

Node: k8s-mater/10.206.0.14

Start Time: Wed, 21 Jul 2021 16:00:36 +0800

Labels: app=flannel

controller-revision-hash=67f65bfbc7

pod-template-generation=1

tier=node

Annotations:

Status: Pending

IP: 10.206.0.14

IPs:

IP: 10.206.0.14

Controlled By: DaemonSet/kube-flannel-ds-amd64

Init Containers:

install-cni:

Container ID:

Image: quay.io/coreos/flannel:v0.11.0-amd64

Image ID:

Port:

Host Port:

Command:

cp

Args:

-f

/etc/kube-flannel/cni-conf.json

/etc/cni/net.d/10-flannel.conflist

State: Waiting

Reason: ImagePullBackOff

Ready: False

Restart Count: 0

Environment:

Mounts:

/etc/cni/net.d from cni (rw)

/etc/kube-flannel/ from flannel-cfg (rw)

/var/run/secrets/kubernetes.io/serviceaccount from flannel-token-psllq (ro)

Containers:

kube-flannel:

Container ID:

Image: quay.io/coreos/flannel:v0.11.0-amd64

Image ID:

Port:

Host Port:

Command:

/opt/bin/flanneld

Args:

--ip-masq

--kube-subnet-mgr

State: Waiting

Reason: PodInitializing

Ready: False

Restart Count: 0

Limits:

cpu: 100m

memory: 50Mi

Requests:

cpu: 100m

memory: 50Mi

Environment:

POD_NAME: kube-flannel-ds-amd64-fbzlm (v1:metadata.name)

POD_NAMESPACE: kube-system (v1:metadata.namespace)

Mounts:

/etc/kube-flannel/ from flannel-cfg (rw)

/run/flannel from run (rw)

/var/run/secrets/kubernetes.io/serviceaccount from flannel-token-psllq (ro)

Conditions:

Type Status

Initialized False

Ready False

ContainersReady False

PodScheduled True

Volumes:

run:

Type: HostPath (bare host directory volume)

Path: /run/flannel

HostPathType:

cni:

Type: HostPath (bare host directory volume)

Path: /etc/cni/net.d

HostPathType:

flannel-cfg:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: kube-flannel-cfg

Optional: false

flannel-token-psllq:

Type: Secret (a volume populated by a Secret)

SecretName: flannel-token-psllq

Optional: false

QoS Class: Burstable

Node-Selectors:

Tolerations: :NoSchedule

node.kubernetes.io/disk-pressure:NoSchedule

node.kubernetes.io/memory-pressure:NoSchedule

node.kubernetes.io/network-unavailable:NoSchedule

node.kubernetes.io/not-ready:NoExecute

node.kubernetes.io/pid-pressure:NoSchedule

node.kubernetes.io/unreachable:NoExecute

node.kubernetes.io/unschedulable:NoSchedule

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 15m default-scheduler Successfully assigned kube-system/kube-flannel-ds-amd64-fbzlm to k8s-mater

Warning Failed 15m kubelet, k8s-mater Failed to pull image "quay.io/coreos/flannel:v0.11.0-amd64": rpc error: code = Unknown desc = Error response from daemon: Get https://quay.io/v2/: read tcp 10.206.0.14:33152->34.197.63.98:443: read: connection reset by peer

Warning Failed 15m kubelet, k8s-mater Failed to pull image "quay.io/coreos/flannel:v0.11.0-amd64": rpc error: code = Unknown desc = Error response from daemon: Get https://quay.io/v2/: read tcp 10.206.0.14:33180->34.197.63.98:443: read: connection reset by peer

Warning Failed 14m kubelet, k8s-mater Failed to pull image "quay.io/coreos/flannel:v0.11.0-amd64": rpc error: code = Unknown desc = Error response from daemon: Get https://quay.io/v2/: read tcp 10.206.0.14:53142->50.16.140.223:443: read: connection reset by peer

Warning Failed 13m (x4 over 15m) kubelet, k8s-mater Error: ErrImagePull

Normal Pulling 13m (x4 over 15m) kubelet, k8s-mater Pulling image "quay.io/coreos/flannel:v0.11.0-amd64"

Warning Failed 13m kubelet, k8s-mater Failed to pull image "quay.io/coreos/flannel:v0.11.0-amd64": rpc error: code = Unknown desc = Error response from daemon: Get https://quay.io/v2/: read tcp 10.206.0.14:41284->44.193.101.5:443: read: connection reset by peer

Normal BackOff 5m26s (x41 over 15m) kubelet, k8s-mater Back-off pulling image "quay.io/coreos/flannel:v0.11.0-amd64"

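Warning Failed 16s (x64 over 15m) kubelet, k8s-mater Error: ImagePullBackOff

The events show Failed to pull image "quay.io/coreos/flannel:v0.11.0-amd64": the image cannot be pulled because of network problems, so handle it manually. Do the following on all three nodes.

# Manually pull the flannel Docker image

docker pull easzlab/flannel:v0.11.0-amd64

# Retag the image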

docker tag easzlab/flannel:v0.11.0-amd64 quay.io/coreos/flannel:v0.11.0-amd64
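The two commands above can be wrapped so that they are easy to repeat on every node. The helper names below are hypothetical. The second function, which assumes the `app=flannel` label shown in the `kubectl describe` output, deletes the stuck flannel Pods so that the DaemonSet recreates them right away instead of waiting for the pull back-off to elapse.

```shell
# Image name expected by the flannel DaemonSet and the mirror used in the notes.
FLANNEL_IMAGE="quay.io/coreos/flannel:v0.11.0-amd64"
FLANNEL_MIRROR="easzlab/flannel:v0.11.0-amd64"

# Run on every node: pull from the mirror, then retag to the expected name.
preload_flannel_image() {
  docker pull "$FLANNEL_MIRROR" &&
    docker tag "$FLANNEL_MIRROR" "$FLANNEL_IMAGE"
}

# Run once on the master: delete the flannel Pods so the DaemonSet recreates
# them and picks up the locally available image.
restart_flannel_pods() {
  kubectl delete pod -n kube-system -l app=flannel
}
```

A typical sequence would be `preload_flannel_image` on all three nodes, then `restart_flannel_pods` on the master, then `kubectl get pods -n kube-system` to confirm the flannel Pods reach Running.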