Python open-source project GPEN: face restoration in practice (deblurring, scratch repair, and black-and-white colorization)

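Whether the photos belong to you, your family, friends, or clients, some of them will inevitably be black-and-white, damaged, or blurry, and people want them restored. If you are a programmer, or related to one, it naturally falls to you to meet that need. Beyond that, what you learn in this article might even earn you a little extra money.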

A step-by-step guide to installing and configuring Python and Anaconda on Windows and running code: https://blog.csdn.net/beijinghorn/article/details/134347642

8 GPEN

8.1 Paper

GAN Prior Embedded Network for Blind Face Restoration in the Wild

Paper: https://arxiv.org/abs/2105.06070

Supplementary: https://www4.comp.polyu.edu.hk/~cslzhang/paper/GPEN-cvpr21-supp.pdf

Demo: https://vision.aliyun.com/experience/detail?spm=a211p3.14020179.J_7524944390.17.66cd4850wVDkUQ&tagName=facebody&children=EnhanceFace

ModelScope: https://www.modelscope.cn/models/damo/cv_gpen_image-portrait-enhancement/summary

Authors:

Tao Yang, Peiran Ren, Xuansong Xie, https://cg.cs.tsinghua.edu.cn/people/~tyang

Lei Zhang https://www4.comp.polyu.edu.hk/~cslzhang

DAMO Academy, Alibaba Group, Hangzhou, China

Department of Computing, The Hong Kong Polytechnic University, Hong Kong, China

8.2 Features

8.2.1 Face Restoration (old photos)

8.2.2 Selfie Restoration (texture reconstruction)

8.2.3 Face Colorization (black-and-white photos)

8.2.4 Face Inpainting (scratch repair)

8.2.5 Conditional Image Synthesis (Seg2Face)

8.3 News

(2023-02-15) GPEN-BFR-1024 and GPEN-BFR-2048 are now publicly available. Please download them via ModelScope (cv_gpen_image-portrait-enhancement-hires).

(2023-02-15) We provide online demos via ModelScope (cv_gpen_image-portrait-enhancement and cv_gpen_image-portrait-enhancement-hires).

(2022-05-16) Added an x1 SR model. Added --tile_size to avoid out-of-memory (OOM) errors.

(2022-03-15) Added an x4 SR model; select it with --sr_scale.

(2022-03-09) Added GPEN-BFR-2048 for selfies. I had to take it down due to commercial issues. Sorry about that.

(2021-12-29) Added online demos on Hugging Face Spaces. Many thanks to CJWBW and AK391.

(2021-12-16) Released a simplified version of the GPEN training code. It differs from the implementation in our paper but can achieve comparable performance. We strongly recommend changing the degradation model.

(2021-12-09) Added face parsing to better paste restored faces back into the original image.

(2021-12-09) GPEN can now run on CPU: simply omit --use_cuda.

(2021-12-01) GPEN can now run on Windows without compiling CUDA code. Thanks to Animadversio. Alternatively, you can try GPEN-Windows. Many thanks to Cioscos.

(2021-10-22) GPEN can now work with super-resolution (SR) methods. An SR model trained by myself is provided; replace it with your own model if necessary.

(2021-10-11) The Colab demo for GPEN is now available.
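The --tile_size option from the 2022-05-16 entry exists because processing a large image in one pass can exhaust GPU memory. The general idea of tiled inference can be sketched as follows: split the image into tiles of at most tile_size pixels per side, optionally with a small overlap, then crop each tile, restore it on its own, and write it back into the output canvas (blending or trimming the overlapping margins). This is a generic illustration, not GPEN's own implementation; the helper tile_boxes and its overlap parameter are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), end-exclusive


def tile_boxes(width: int, height: int, tile_size: int, overlap: int = 0) -> List[Box]:
    """Return boxes that together cover a width x height image.

    Hypothetical helper: every box is at most tile_size pixels per side,
    and neighbouring boxes share `overlap` pixels so seams can be blended.
    """
    if tile_size <= overlap:
        raise ValueError("tile_size must be larger than overlap")
    step = tile_size - overlap
    boxes: List[Box] = []
    # The loop bounds guarantee the last tile in each row/column reaches the edge.
    for y0 in range(0, max(height - overlap, 1), step):
        for x0 in range(0, max(width - overlap, 1), step):
            x1 = min(x0 + tile_size, width)
            y1 = min(y0 + tile_size, height)
            boxes.append((x0, y0, x1, y1))
    return boxes


if __name__ == "__main__":
    # Example: tiles for a 1920x1080 frame, 512-pixel tiles, 32-pixel overlap.
    for box in tile_boxes(1920, 1080, 512, overlap=32):
        print(box)
```

Smaller tiles lower peak memory at the cost of more forward passes; the overlap gives the stitching step some context at tile borders.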

8.4 Download models from ModelScope

Model pages:

https://www.modelscope.cn/models/damo/cv_gpen_image-portrait-enhancement/summary

https://www.modelscope.cn/models/damo/cv_gpen_image-portrait-enhancement-hires/summary

Install modelscope:

```shell
pip install "modelscope[cv]" -f https://modelscope.oss-cn-beijing.aliyuncs.com/releases/repo.html
```

Run the following code:

```python
import cv2
from modelscope.outputs import OutputKeys
from modelscope.pipelines import pipeline
from modelscope.utils.constant import Tasks

# Build the portrait-enhancement pipeline with the high-resolution GPEN model.
portrait_enhancement = pipeline(Tasks.image_portrait_enhancement,
                                model='damo/cv_gpen_image-portrait-enhancement-hires')

# Run it on a sample image (a URL here; a local image path also works).
result = portrait_enhancement('https://modelscope.oss-cn-beijing.aliyuncs.com/test/images/marilyn_monroe_4.jpg')

# The enhanced image is returned under OutputKeys.OUTPUT_IMG.
cv2.imwrite('result.png', result[OutputKeys.OUTPUT_IMG])
```

This automatically downloads the GPEN models, which are stored locally under ~/.cache/modelscope/hub/damo. Note that pytorch_model.pt and pytorch_model-2048.pt are the 1024 and 2048 versions, respectively.
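To check which of the two high-resolution weight files ModelScope has already downloaded, a short script can search the cache directory mentioned above. This is a sketch under assumptions: it assumes the hires model sits in a folder named cv_gpen_image-portrait-enhancement-hires under ~/.cache/modelscope/hub/damo (the exact layout can differ between ModelScope versions, so it searches recursively), and the names WEIGHT_FILES and find_gpen_weights are hypothetical.

```python
from pathlib import Path
from typing import Dict

# File names from this section: pytorch_model.pt is the 1024 version and
# pytorch_model-2048.pt the 2048 version of the hires model.
WEIGHT_FILES = {"pytorch_model.pt": 1024, "pytorch_model-2048.pt": 2048}


def find_gpen_weights(model_dir: Path) -> Dict[int, Path]:
    """Return {resolution: path} for the GPEN weight files found under model_dir."""
    found: Dict[int, Path] = {}
    if not model_dir.is_dir():
        return found
    for path in model_dir.rglob("*.pt"):
        resolution = WEIGHT_FILES.get(path.name)
        if resolution is not None and resolution not in found:
            found[resolution] = path
    return found


if __name__ == "__main__":
    # Assumed cache location of the hires model; adjust if your layout differs.
    model_dir = (Path.home() / ".cache" / "modelscope" / "hub" / "damo"
                 / "cv_gpen_image-portrait-enhancement-hires")
    weights = find_gpen_weights(model_dir)
    for resolution in (1024, 2048):
        print(resolution, weights.get(resolution, "not downloaded yet"))
```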

8.5 Dependencies and usage

python: 3.7.4

pytorch: 1.7.0

cuda: 10.2.89

driver: 460.73.01

gcc: 7.5.0
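The versions above are the environment listed in the GPEN README. To see how a local setup compares, the sketch below reports the installed Python and PyTorch versions next to the listed ones and prints the CUDA version PyTorch was built with; it does not check the driver or GCC, and it treats a local build suffix such as +cu102 as matching. Differences are only reported, not treated as errors, because the README does not say these are hard requirements. TESTED_VERSIONS, installed_version and compare_versions are hypothetical names introduced for illustration; the code avoids importlib.metadata so it also runs on Python 3.7.

```python
import importlib
import platform
from typing import Dict, Optional

# Versions listed in this section.
TESTED_VERSIONS = {"python": "3.7.4", "torch": "1.7.0"}


def installed_version(module_name: str) -> Optional[str]:
    """Return the version of Python or of an importable module, or None if missing."""
    if module_name == "python":
        return platform.python_version()
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


def compare_versions(installed: Dict[str, Optional[str]], tested: Dict[str, str]) -> Dict[str, str]:
    """Describe how each installed version relates to the listed one."""
    report: Dict[str, str] = {}
    for name, want in tested.items():
        have = installed.get(name)
        if have is None:
            report[name] = f"missing (tested with {want})"
        elif str(have).split("+")[0] == want:  # ignore local tags like +cu102
            report[name] = f"{have} (matches)"
        else:
            report[name] = f"{have} (tested with {want})"
    return report


if __name__ == "__main__":
    local = {name: installed_version(name) for name in TESTED_VERSIONS}
    for name, line in compare_versions(local, TESTED_VERSIONS).items():
        print(f"{name}: {line}")
    try:
        import torch  # only present if PyTorch is installed
        print("CUDA available:", torch.cuda.is_available(), "| built with CUDA:", torch.version.cuda)
    except ImportError:
        pass
```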

8.5.1 Clone this repository:

```shell
git clone https://github.com/yangxy/GPEN.git
cd GPEN
```

8.5.2 Download the RetinaFace model and our pre-trained models (not our best models, due to commercial issues) and put them into weights/.

RetinaFace-R50 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/RetinaFace-R50.pth

ParseNet-latest https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/ParseNet-latest.pth

model_ir_se50 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/model_ir_se50.pth

GPEN-BFR-512 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/GPEN-BFR-512.pth

GPEN-BFR-512-D https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/GPEN-BFR-512-D.pth

GPEN-BFR-256 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/GPEN-BFR-256.pth

GPEN-BFR-256-D https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/GPEN-BFR-256-D.pth

GPEN-Colorization-1024 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/GPEN-Colorization-1024.pth

GPEN-Inpainting-1024 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/GPEN-Inpainting-1024.pth

GPEN-Seg2face-512 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/GPEN-Seg2face-512.pth

realesrnet_x1 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/realesrnet_x1.pth

realesrnet_x2 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/realesrnet_x2.pth

realesrnet_x4 https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/realesrnet_x4.pth
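All of the weight files above share one URL prefix, so they can be fetched into weights/ with a short script. This is a sketch using only the Python standard library; it assumes the links are still reachable and that the script runs from the repository root. The names BASE_URL, MODEL_NAMES, model_url and download_weights are hypothetical, and the default MODEL_NAMES selection is only an assumed set for the first FaceEnhancement command (which uses --use_sr --sr_scale 4); add any other model name from the list as needed.

```python
import urllib.request
from pathlib import Path
from typing import Iterable, List

# Common prefix of every download link listed above.
BASE_URL = "https://public-vigen-video.oss-cn-shanghai.aliyuncs.com/robin/models/"

# Assumed selection for the FaceEnhancement demo; edit as needed.
MODEL_NAMES = ["RetinaFace-R50", "ParseNet-latest", "GPEN-BFR-512", "realesrnet_x4"]


def model_url(name: str) -> str:
    """Build the download URL for a model name such as 'GPEN-BFR-512'."""
    return f"{BASE_URL}{name}.pth"


def download_weights(names: Iterable[str], dest: Path, dry_run: bool = False) -> List[Path]:
    """Download each <name>.pth into dest, skipping files that already exist.

    Returns the paths that were (or, with dry_run=True, would be) downloaded.
    """
    dest.mkdir(parents=True, exist_ok=True)
    fetched: List[Path] = []
    for name in names:
        target = dest / f"{name}.pth"
        if target.exists():
            continue  # already downloaded
        if not dry_run:
            partial = target.with_name(target.name + ".part")
            print(f"downloading {model_url(name)}")
            urllib.request.urlretrieve(model_url(name), str(partial))
            partial.replace(target)  # rename only after a complete download
        fetched.append(target)
    return fetched


if __name__ == "__main__":
    download_weights(MODEL_NAMES, Path("weights"))
```

Writing to a .part file first means an interrupted download is not mistaken for a finished one on the next run.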

8.5.3 Restore face images:

```shell
python demo.py --task FaceEnhancement --model GPEN-BFR-512 --in_size 512 --channel_multiplier 2 --narrow 1 --use_sr --sr_scale 4 --use_cuda --save_face --indir examples/imgs --outdir examples/outs-bfr
```

Colorize faces:

```shell
python demo.py --task FaceColorization --model GPEN-Colorization-1024 --in_size 1024 --use_cuda --indir examples/grays --outdir examples/outs-colorization
```

Complete (inpaint) faces:

```shell
python demo.py --task FaceInpainting --model GPEN-Inpainting-1024 --in_size 1024 --use_cuda --indir examples/ffhq-10 --outdir examples/outs-inpainting
```

Synthesize faces from segmentation maps:

```shell
python demo.py --task Segmentation2Face --model GPEN-Seg2face-512 --in_size 512 --use_cuda --indir examples/segs --outdir examples/outs-seg2face
```
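The four demo commands differ only in the task, model, input size, and folders. A small Python wrapper that rebuilds them and runs demo.py through subprocess is sketched below. It uses only the flags shown in those commands and, following the 2021-12-09 news entry, drops --use_cuda to run on CPU. DEMO_TASKS and build_demo_command are hypothetical helpers, and the script assumes it is run from the GPEN repository root with the weights already in weights/.

```python
import subprocess
import sys
from typing import Dict, List

# Task-specific arguments copied from the demo commands.
DEMO_TASKS: Dict[str, List[str]] = {
    "FaceEnhancement": ["--model", "GPEN-BFR-512", "--in_size", "512",
                        "--channel_multiplier", "2", "--narrow", "1",
                        "--use_sr", "--sr_scale", "4", "--save_face"],
    "FaceColorization": ["--model", "GPEN-Colorization-1024", "--in_size", "1024"],
    "FaceInpainting": ["--model", "GPEN-Inpainting-1024", "--in_size", "1024"],
    "Segmentation2Face": ["--model", "GPEN-Seg2face-512", "--in_size", "512"],
}


def build_demo_command(task: str, indir: str, outdir: str, use_cuda: bool = True) -> List[str]:
    """Return the argv list for one demo.py run."""
    if task not in DEMO_TASKS:
        raise ValueError(f"unknown task: {task}")
    cmd = [sys.executable, "demo.py", "--task", task, *DEMO_TASKS[task]]
    if use_cuda:
        cmd.append("--use_cuda")  # leave this flag out to run on CPU
    cmd += ["--indir", indir, "--outdir", outdir]
    return cmd


if __name__ == "__main__":
    # Colorize the bundled grayscale examples on CPU.
    command = build_demo_command("FaceColorization", "examples/grays",
                                 "examples/outs-colorization", use_cuda=False)
    subprocess.run(command, check=True)
```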

Train GPEN for BFR (blind face restoration) with 4 GPUs, where --path points to your folder of cropped and aligned high-quality faces (e.g., FFHQ):

```shell
CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=4321 train_simple.py --size 1024 --channel_multiplier 2 --narrow 1 --ckpt weights --sample results --batch 2 --path your_path_of_cropped_aligned_hq_faces
```

When testing your own model, set --key g_ema.
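When the dataset path or the number of GPUs changes, generating the launch command can be less error-prone than editing it by hand. The sketch below rebuilds the 4-GPU training command for any GPU count, setting CUDA_VISIBLE_DEVICES and --nproc_per_node together. build_train_command and the example dataset folder are hypothetical; the sketch keeps torch.distributed.launch as in the original command, although newer PyTorch releases deprecate it in favor of torchrun.

```python
import os
import subprocess
import sys
from typing import Dict, List, Tuple


def build_train_command(num_gpus: int, data_path: str, size: int = 1024,
                        batch: int = 2, master_port: int = 4321) -> Tuple[Dict[str, str], List[str]]:
    """Return (environment, argv) for a distributed train_simple.py run."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    env = dict(os.environ)
    # Expose GPUs 0..num_gpus-1, one training process per GPU.
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in range(num_gpus))
    argv = [
        sys.executable, "-m", "torch.distributed.launch",
        f"--nproc_per_node={num_gpus}", f"--master_port={master_port}",
        "train_simple.py", "--size", str(size), "--channel_multiplier", "2",
        "--narrow", "1", "--ckpt", "weights", "--sample", "results",
        "--batch", str(batch), "--path", data_path,
    ]
    return env, argv


if __name__ == "__main__":
    # Hypothetical folder of cropped and aligned high-quality faces.
    env, argv = build_train_command(4, "datasets/ffhq_aligned")
    subprocess.run(argv, env=env, check=True)
```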

Please check out run.sh for more details.

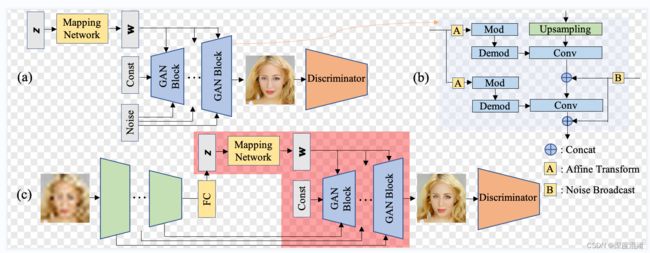

8.6 Main idea

8.7 Citation

If our work is useful for your research, please consider citing:

```bibtex
@inproceedings{Yang2021GPEN,
  title={GAN Prior Embedded Network for Blind Face Restoration in the Wild},
  author={Tao Yang and Peiran Ren and Xuansong Xie and Lei Zhang},
  booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2021}
}
```

8.8 License

© Alibaba, 2021. For academic and non-commercial use only.

8.9 Acknowledgments

We borrow some code from Pytorch_Retinaface, stylegan2-pytorch, Real-ESRGAN, and GFPGAN.

8.10 Contact

If you have any questions or suggestions about this paper, feel free to reach me by email.