【Web Scraping】Learning Pyppeteer

Just as handy as Selenium.

Basics

Python3网络爬虫开发实战 (Python 3 Web Crawler Development in Practice), 2nd edition, Section 7.4

GitHub

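```python
# demo1
import asyncio

from pyppeteer import launch
from pyquery import PyQuery as pq


async def main():
    browser = await launch(headless=False)  # launch a browser (headless by default; headless=False shows the window)
    await asyncio.sleep(5)  # short pause; asyncio.sleep must be awaited to have any effect
    page = await browser.newPage()  # open a new tab
    await page.goto('http://spa2.scrape.center')  # visit the URL
    await page.waitForSelector('.item .name')  # wait until the nodes are loaded, otherwise keep waiting until the timeout
    resource = await page.content()  # get the page source
    doc = pq(resource)  # parse the HTML
    name = [item.text() for item in doc('.item .name').items()]
    print(name)
    await browser.close()


asyncio.get_event_loop().run_until_complete(main())
```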

Output

['霸王别姬 - Farewell My Concubine', '这个杀手不太冷 - Léon', '肖申克的救赎 - The Shawshank Redemption', '泰坦尼克号 - Titanic', '罗马假日 - Roman Holiday', '唐伯虎点秋香 - Flirting Scholar', '乱世佳人 - Gone with the Wind', '喜剧之王 - The King of Comedy', '楚门的世界 - The Truman Show', '狮子王 - The Lion King']
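The parsing step in demo1 only needs the rendered HTML. As a rough offline sketch, the same `.item .name` extraction can be done with the standard library's `html.parser` instead of pyquery (a swapped-in technique, not what demo1 uses). The `SAMPLE` markup is a hypothetical, simplified stand-in for the real page, and `ItemNameParser` / `extract_names` are illustrative names that support only this one descendant selector.

```python
from html.parser import HTMLParser

# void elements never get an end tag, so they must not change the nesting depth
VOID_TAGS = {'area', 'base', 'br', 'col', 'embed', 'hr', 'img', 'input',
             'link', 'meta', 'source', 'track', 'wbr'}


class ItemNameParser(HTMLParser):
    """Collect the text of class="name" elements nested inside a class="item" element.

    A rough stand-in for the CSS selector '.item .name'; it assumes every
    non-void tag is properly closed.
    """

    def __init__(self):
        super().__init__()
        self.depth = 0           # current nesting depth
        self.item_depths = []    # depths of the .item ancestors that are still open
        self.name_depth = None   # depth of the .name element being read, if any
        self.buffer = []
        self.names = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        self.depth += 1
        classes = (dict(attrs).get('class') or '').split()
        # check .name before registering this element as an .item,
        # so only strict descendants of an .item match
        if 'name' in classes and self.item_depths and self.name_depth is None:
            self.name_depth = self.depth
            self.buffer = []
        if 'item' in classes:
            self.item_depths.append(self.depth)

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if self.name_depth == self.depth:
            # collapse runs of whitespace in the collected text
            self.names.append(' '.join(''.join(self.buffer).split()))
            self.name_depth = None
        if self.item_depths and self.item_depths[-1] == self.depth:
            self.item_depths.pop()
        self.depth -= 1

    def handle_data(self, data):
        if self.name_depth is not None:
            self.buffer.append(data)


def extract_names(html):
    parser = ItemNameParser()
    parser.feed(html)
    parser.close()
    return parser.names


# hypothetical, simplified markup; the real page is rendered by JavaScript
SAMPLE = '''
<div class="item">
  <a class="name" href="/detail/1"><h2>霸王别姬 - Farewell My Concubine</h2></a>
  <img src="cover.jpg">
</div>
<div class="item">
  <a class="name" href="/detail/2"><h2>这个杀手不太冷 - Léon</h2></a>
</div>
<p class="name">not inside an item</p>
'''

if __name__ == '__main__':
    print(extract_names(SAMPLE))
```

For real pages, pyquery (as in demo1) or another CSS-selector library is the practical choice; the sketch only makes visible what the selector-based extraction does.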

Anti-detection

The page does not render correctly by default, so set the window size explicitly.

```python
# demo6
import asyncio

from pyppeteer import launch

width, height = 1366, 768


async def main():
    # headed mode, disable the infobar, set the window size
    browser = await launch(headless=False, args=['--disable-infobars', f'--window-size={width},{height}'])
    page = await browser.newPage()
    await page.setViewport({'width': width, 'height': height})
    # anti-detection: make navigator.webdriver return undefined before any page script runs
    await page.evaluateOnNewDocument('Object.defineProperty(navigator, "webdriver", {get: () => undefined})')
    await page.goto('https://antispider1.scrape.center/')
    await asyncio.sleep(10)
    await browser.close()


asyncio.get_event_loop().run_until_complete(main())
```

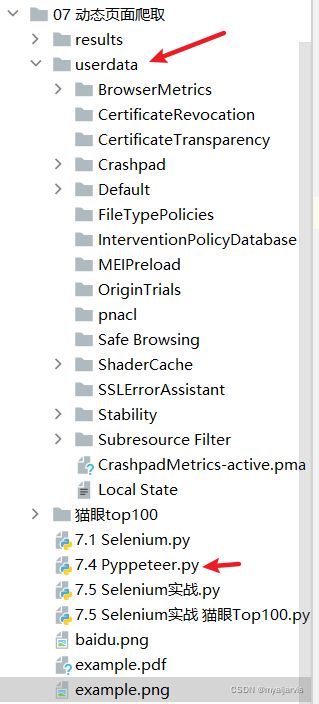

Data persistence

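Taobao requires the anti-detection setting.

You can log in by scanning the QR code. Because the browser profile, including the login cookies, is stored in the `userDataDir` directory, you do not need to log in again the second time you run the program.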

```python
# demo8
import asyncio

from pyppeteer import launch

width, height = 1366, 768


async def main():
    # userDataDir keeps the browser profile (cookies, local storage, etc.) in ./userdata between runs
    browser = await launch(headless=False, userDataDir='./userdata',
                           args=['--disable-infobars', f'--window-size={width},{height}'])
    page = await browser.newPage()
    await page.setViewport({'width': width, 'height': height})
    # anti-detection
    await page.evaluateOnNewDocument('Object.defineProperty(navigator, "webdriver", {get: () => undefined})')
    await page.goto('https://www.taobao.com')
    await asyncio.sleep(10)
    await browser.close()


asyncio.get_event_loop().run_until_complete(main())
```

Incognito mode

```python
# demo9
import asyncio

from pyppeteer import launch

width, height = 1200, 768


async def main():
    browser = await launch(headless=False,
                           args=['--disable-infobars', f'--window-size={width},{height}'])
    context = await browser.createIncognitoBrowserContext()  # returns a BrowserContext object
    page = await context.newPage()
    await page.setViewport({'width': width, 'height': height})
    await page.goto('https://www.baidu.com')
    await asyncio.sleep(10)
    await browser.close()


asyncio.get_event_loop().run_until_complete(main())
```

Movie website

GitHub

Python3网络爬虫开发实战 (Python 3 Web Crawler Development in Practice), 2nd edition, Section 7.6

```python
import asyncio
import logging

logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s - %(levelname)s: %(message)s')

INDEX_URL = 'https://spa2.scrape.center/page/{page}'
TIMEOUT = 10  # timeout in seconds
TOTAL_PAGE = 2  # number of list pages
WINDOW_WIDTH, WINDOW_HEIGHT = 1366, 768  # window size
HEADLESS = False  # whether to run in headless mode

from pyppeteer import launch
from pyppeteer.errors import TimeoutError  # the timeout error raised by waitForSelector

browser, tab = None, None  # browser and tab (page)


async def init():
    global browser, tab
    browser = await launch(headless=HEADLESS,
                           args=['--disable-infobars', f'--window-size={WINDOW_WIDTH},{WINDOW_HEIGHT}'])
    tab = await browser.newPage()
    await tab.setViewport({'width': WINDOW_WIDTH, 'height': WINDOW_HEIGHT})


# generic scraping method
async def scrape_page(url, selector):
    logging.info('scraping %s', url)
    try:
        await tab.goto(url)  # navigate
        # wait until the node matching selector appears, otherwise keep waiting until the timeout
        await tab.waitForSelector(selector, options={
            'timeout': TIMEOUT * 1000
        })
    except TimeoutError:  # timed out
        logging.error('error occurred while scraping %s', url, exc_info=True)


# scrape a list page
async def scrape_index(page):
    url = INDEX_URL.format(page=page)
    # the browser navigates to the list page and waits until the '.item .name' node appears
    await scrape_page(url, '.item .name')


# scrape a detail page
async def scrape_detail(url):
    # the browser navigates to the detail page and waits until the 'h2' node appears
    await scrape_page(url, 'h2')


# parse a list page
async def parse_index():
    # querySelectorAllEval(selector, js_function) runs the JS function on all matching nodes
    # map calls the callback on every array element and returns an array of the results
    # '.item .name' is an <a> link, so this returns the array of detail-page URLs
    return await tab.querySelectorAllEval('.item .name', 'nodes => nodes.map(node => node.href)')


# parse a detail page
async def parse_detail():
    url = tab.url
    name = await tab.querySelectorEval('h2', 'node => node.innerText')
    categories = await tab.querySelectorAllEval('.categories button span', 'nodes => nodes.map(node => node.innerText)')
    cover = await tab.querySelectorEval('.cover', 'node => node.src')
    score = await tab.querySelectorEval('.score', 'node => node.innerText')
    drama = await tab.querySelectorEval('.drama p', 'node => node.innerText')
    return {
        'url': url,
        'name': name,
        'categories': categories,
        'cover': cover,
        'score': score,
        'drama': drama
    }


from os import makedirs
from os.path import exists
import json

RESULTS_DIR = 'results_pyppeteer'  # folder for the results
exists(RESULTS_DIR) or makedirs(RESULTS_DIR)  # create it if it does not exist


async def save_data(data):
    name = data.get('name')
    data_path = f'{RESULTS_DIR}/{name}.json'
    with open(data_path, 'w', encoding='utf-8') as f:  # the with block closes the file
        json.dump(data, f, ensure_ascii=False, indent=2)


async def main():
    await init()
    try:
        for page in range(1, TOTAL_PAGE + 1):
            await scrape_index(page)
            detail_urls = await parse_index()
            # logging.info('details urls %s', detail_urls)
            for detail_url in detail_urls:
                logging.info('get detail url %s', detail_url)
                await scrape_detail(detail_url)
                detail_data = await parse_detail()
                logging.info('detail data %s', detail_data)
                await save_data(detail_data)
    finally:
        await browser.close()


if __name__ == '__main__':
    asyncio.get_event_loop().run_until_complete(main())
```
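`scrape_page` relies on `waitForSelector`: keep checking until the node exists, and give up with a timeout error after `TIMEOUT` seconds. The idea can be sketched in plain asyncio as a polling loop with a deadline. `wait_for_condition` is a hypothetical helper for illustration only; it is not part of pyppeteer and not how pyppeteer implements waiting internally.

```python
import asyncio


async def wait_for_condition(predicate, timeout=10.0, interval=0.1):
    """Poll predicate() until it returns a truthy value or timeout seconds pass.

    Hypothetical helper illustrating the wait-until-present-or-timeout idea.
    Raises asyncio.TimeoutError when the deadline is reached.
    """
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout
    while True:
        result = predicate()
        if result:
            return result
        if loop.time() >= deadline:
            raise asyncio.TimeoutError(f'condition not met within {timeout} s')
        await asyncio.sleep(interval)  # yield to the event loop between checks


async def demo():
    calls = {'n': 0}

    def node_appeared():
        # pretend the node shows up on the third check
        calls['n'] += 1
        return calls['n'] >= 3

    found = await wait_for_condition(node_appeared, timeout=1, interval=0.01)
    try:
        await wait_for_condition(lambda: False, timeout=0.05, interval=0.01)
        timed_out = False
    except asyncio.TimeoutError:
        timed_out = True
    return found, calls['n'], timed_out


if __name__ == '__main__':
    print(asyncio.run(demo()))
```

The practical takeaway for the crawler: the `except` clause has to name the exception type the waiting call really raises, which for pyppeteer is `pyppeteer.errors.TimeoutError`.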

Maoyan Top 100

This site detects webdriver, so the anti-detection script is required.

```python
import asyncio
import logging

logging.basicConfig(level=logging.INFO,
                    format='%(asctime)s - %(levelname)s: %(message)s')

INDEX_URL = 'https://maoyan.com/board/4?offset={offset}'
TIMEOUT = 10  # timeout in seconds
TOTAL_PAGE = 2  # number of list pages (10 movies per page)
WINDOW_WIDTH, WINDOW_HEIGHT = 1366, 768  # window size
HEADLESS = False  # whether to run in headless mode

from pyppeteer import launch
from pyppeteer.errors import TimeoutError  # the timeout error raised by waitForSelector

browser, tab = None, None  # browser and tab (page)


async def init():
    global browser, tab
    browser = await launch(headless=HEADLESS,
                           args=['--disable-infobars', f'--window-size={WINDOW_WIDTH},{WINDOW_HEIGHT}'])
    tab = await browser.newPage()
    # anti-detection: hide navigator.webdriver before any page script runs
    await tab.evaluateOnNewDocument('Object.defineProperty(navigator, "webdriver", {get: () => undefined})')
    await tab.setViewport({'width': WINDOW_WIDTH, 'height': WINDOW_HEIGHT})


# generic scraping method
async def scrape_page(url, selector):
    logging.info('scraping %s', url)
    try:
        await tab.goto(url)  # navigate
        # forced wait: crawling too fast easily gets you blocked
        await asyncio.sleep(3)
        await tab.waitForSelector(selector, options={
            'timeout': TIMEOUT * 1000
        })
    except TimeoutError:  # timed out
        logging.error('error occurred while scraping %s', url, exc_info=True)


# scrape a list page
async def scrape_index(offset):
    url = INDEX_URL.format(offset=offset)
    await scrape_page(url, '.board-wrapper dd')  # the browser navigates to the list page


# scrape a detail page
async def scrape_detail(url):
    await scrape_page(url, '.name')  # the browser navigates to the detail page


# parse a list page
async def parse_index():
    # run the JS function on all nodes matching the selector and return the result as a list;
    # map calls the callback on every array element and returns an array of the results
    return await tab.querySelectorAllEval('.name a', 'nodes => nodes.map(node => node.href)')


# parse a detail page
async def parse_detail():
    url = tab.url
    name = await tab.querySelectorEval('h1', 'node => node.innerText')
    categories = await tab.querySelectorAllEval('.ellipsis:first-child a', 'nodes => nodes.map(node => node.innerText)')
    cover = await tab.querySelectorEval('.avatar', 'node => node.src')
    # the rating is the width of the star bar; parseFloat('96%') => 96, and '100%' also works
    score = await tab.querySelectorEval('.star-on', 'node => parseFloat(node.style.width)')
    score = float(score) / 10  # 96 => 9.6
    drama = await tab.querySelectorEval('.dra', 'node => node.innerText')
    return {
        'url': url,
        'name': name,
        'categories': categories,
        'cover': cover,
        'score': score,
        'drama': drama
    }


from os import makedirs
from os.path import exists
import json

RESULTS_DIR = 'top100_pyppeteer'  # folder for the results
exists(RESULTS_DIR) or makedirs(RESULTS_DIR)  # create it if it does not exist


async def save_data(data):
    name = data.get('name')
    data_path = f'{RESULTS_DIR}/{name}.json'
    with open(data_path, 'w', encoding='utf-8') as f:  # the with block closes the file
        json.dump(data, f, ensure_ascii=False, indent=2)


async def main():
    await init()
    try:
        # offsets 0, 10, ...: exactly TOTAL_PAGE pages, since range() excludes its stop value
        for offset in range(0, TOTAL_PAGE * 10, 10):
            await scrape_index(offset)
            detail_urls = await parse_index()
            # logging.info('details urls %s', detail_urls)
            for detail_url in detail_urls:
                logging.info('get detail url %s', detail_url)
                await scrape_detail(detail_url)
                detail_data = await parse_detail()
                logging.info('detail data %s', detail_data)
                await save_data(detail_data)
    finally:
        await browser.close()


if __name__ == '__main__':
    asyncio.get_event_loop().run_until_complete(main())
```
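The Maoyan list is paginated by an `offset` query parameter in steps of 10, and the rating is stored as the CSS width of the `.star-on` element (for example `'96%'`, meaning 9.6). The two small pure-Python helpers below make both conversions explicit and easy to test. `page_offsets` and `parse_score` are hypothetical names, and the page size of 10 is taken from the crawler's own `range` step.

```python
def page_offsets(total_page, page_size=10):
    """Offsets for the first total_page list pages: 0, 10, 20, ...

    range() excludes its stop value, so stopping at total_page * page_size
    yields exactly total_page offsets.
    """
    return list(range(0, total_page * page_size, page_size))


def parse_score(width):
    """Convert a star-bar width such as '96%' into a 10-point score (9.6)."""
    return float(width.strip().rstrip('%')) / 10


INDEX_URL = 'https://maoyan.com/board/4?offset={offset}'

if __name__ == '__main__':
    for offset in page_offsets(2):
        print(INDEX_URL.format(offset=offset))
    print(parse_score('96%'))
```

Taking only the first two characters of the width string would turn `'100%'` into `'10'`, which is why `parse_score` strips the `%` sign instead.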

```text
[I:pyppeteer.launcher] Browser listening on: ws://127.0.0.1:8489/devtools/browser/29d438d3-560d-4c51-9408-b0376f5bb747
2021-12-26 17:46:59,348 - INFO: scraping https://maoyan.com/board/4?offset=0
2021-12-26 17:47:09,384 - INFO: get detail url https://www.maoyan.com/films/1200486
2021-12-26 17:47:09,384 - INFO: scraping https://www.maoyan.com/films/1200486
2021-12-26 17:47:15,507 - INFO: detail data {'url': 'https://www.maoyan.com/films/1200486', 'name': '我不是药神', 'categories': [' 剧情 ', '喜剧 '], 'cover': 'https://p0.meituan.net/movie/414176cfa3fea8bed9b579e9f42766b9686649.jpg@464w_644h_1e_1c', 'score': 9.6, 'drama': '印度神油店老板程勇日子过得窝囊,店里没生意,老父病危,手术费筹不齐。前妻跟有钱人怀上了孩子,还要把他儿子的抚养权给拿走。一日,店里来了一个白血病患者,求他从印度带回一批仿制的特效药,好让买不起天价正版药的患者保住一线生机。百般不愿却走投无路的程勇,意外因此一夕翻身,平价特效药救人无数,让他被病患封为“药神”,但随着利益而来的,是一场让他的生活以及贫穷病患性命都陷入危机的多方拉锯战 。'}
...
```
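The log shows that the category texts come back with stray spaces (`' 剧情 '`, `'喜剧 '`), and `save_data` uses the movie name directly as the file name. A minimal post-processing sketch, assuming you want trimmed categories and a file name that is also valid on Windows: `clean_detail`, `safe_filename` and this variant of `save_data` (which takes the output directory as a parameter) are hypothetical helpers, and the replaced characters are the ones Windows forbids in file names.

```python
import json
import os
import re
import tempfile

# characters that are not allowed in Windows file names, plus control characters
_INVALID_CHARS = re.compile(r'[<>:"/\\|?*\x00-\x1f]')


def clean_detail(data):
    """Return a copy of a detail record with whitespace trimmed from each category."""
    cleaned = dict(data)
    cleaned['categories'] = [c.strip() for c in data.get('categories', [])]
    return cleaned


def safe_filename(name, replacement='_'):
    """Replace characters that cannot appear in a file name, then trim spaces and dots."""
    return _INVALID_CHARS.sub(replacement, name).strip().strip('.') or 'untitled'


def save_data(data, results_dir):
    """Write one record as pretty-printed UTF-8 JSON and return the file path."""
    os.makedirs(results_dir, exist_ok=True)
    path = os.path.join(results_dir, safe_filename(data['name']) + '.json')
    with open(path, 'w', encoding='utf-8') as f:
        json.dump(data, f, ensure_ascii=False, indent=2)
    return path


if __name__ == '__main__':
    record = {'name': '我不是药神', 'categories': [' 剧情 ', '喜剧 '], 'score': 9.6}
    with tempfile.TemporaryDirectory() as tmp:
        path = save_data(clean_detail(record), tmp)
        with open(path, encoding='utf-8') as f:
            print(json.load(f))
```

`clean_detail` returns a copy rather than modifying the record in place, so the raw scraped data stays available for logging.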