centos7.9 kubeadm 安装kubernetes集群

第一步:初始化系统

1,三台centos7.9虚拟机,要求内存为2G,CPU为2U。要能访问公网哦!

192.168.206.133 master

192.168.206.134 k8s-node1

192.168.206.135 k8s-node2

三台都要配置hosts:

echo "192.168.206.133 master" >>/etc/hosts

echo "192.168.206.134 k8s-node1" >>/etc/hosts

echo "192.168.206.135 k8s-node2" >>/etc/hosts三台分别修改主机名:

hostname master

hostname k8s-node1

hostname k8s-node2

echo master > /etc/hostname

echo k8s-node1 > /etc/hostname

echo k8s-node2 > /etc/hostname2,配置阿里的yum源,升级系统:

cd /etc/yum.repos.d

wget http://mirrors.aliyun.com/repo/Centos-7.repo

cat < /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum clean all

yum repolist

#yum -y update

#reboot

#安装containerd

# 1.安装源和依赖软件包(与docker没区别)

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

yum makecache fast

# 2.安装containerd

yum install containerd -y

containerd --version # 查看containerd版本

# 3.启动containerd

systemctl start containerd && systemctl enable containerd && systemctl status containerd 3,配置基础环境:

systemctl stop firewalld && systemctl disable firewalld

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

setenforce 0

swapoff -a

yes | cp /etc/fstab /etc/fstab_bak

cat /etc/fstab_bak |grep -v swap > /etc/fstab

cat <第二步:三台都要安装docker

yum -y install docker

#然后修改daemon.json,添加:

vim /etc/docker/daemon.json

cat << EOF > /etc/docker/daemon.json

{

"registry-mirrors": ["https://wyrsf017.mirror.aliyuncs.com"]

}

EOF

systemctl start docker

systemctl enable docker

docker version第三步:master节点安装k8s 基础组件,133上

安装kubelet,kubeadm,kubectl

yum -y install kubelet-1.19.0 kubeadm-1.19.0 kubectl-1.19.0 --disableexcludes=kubernetes

systemctl start kubelet

systemctl enable kubelet第四步:初始化master节点,133上执行:

1,生成init-config.yaml文件并修改

kubeadm config print init-defaults > init.default.yaml

cp -rp init.default.yaml init-config.yaml

#然后修改init-config.yaml,如下:

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.206.133 #master节点IP

bindPort: 6443

nodeRegistration:

criSocket: /var/run/dockershim.sock

name: master #默认为master,不是请修改

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/master

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

type: CoreDNS

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers #改成国内的地址,否则下载失败

kind: ClusterConfiguration

kubernetesVersion: v1.19.0 #版本号要和安装的一致

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12 #默认的可以不改

podSubnet: 10.244.0.0/16 #加上这句后面要用

scheduler: {}

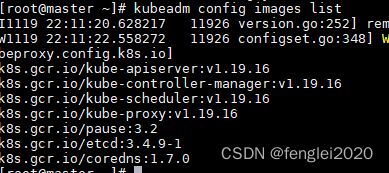

2,下载必须的镜像:

kubeadm config images list

#得到以下结果,这些docker镜像失必须要下载的,版本号一定要对得上。

k8s.gcr.io/kube-apiserver:v1.19.16

k8s.gcr.io/kube-controller-manager:v1.19.16

k8s.gcr.io/kube-scheduler:v1.19.16

k8s.gcr.io/kube-proxy:v1.19.16

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.9-1

k8s.gcr.io/coredns:1.7.0

3,使用docker pull 下载上面需要的镜像并tag为符合上述名称的镜像:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.19.16

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.19.16

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.19.16

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.19.16

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.9-1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.7.0

docker images

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.19.16 registry.k8s.io/kube-apiserver:v1.19.16

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.19.16 registry.k8s.io/kube-controller-manager:v1.19.16

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.19.16 registry.k8s.io/kube-scheduler:v1.19.16

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.19.16 registry.k8s.io/kube-proxy:v1.19.16

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 registry.k8s.io/pause:3.2

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.9-1 registry.k8s.io/etcd:3.4.9-1

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.7.0 registry.k8s.io/coredns/coredns:v1.7.0

4,使用kubeadm安装k8s集群

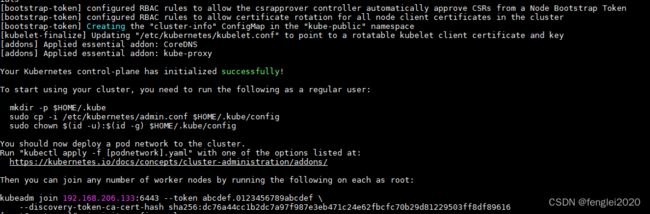

kubeadm init --config init-config.yaml当看到以下片段,表示安装成功

一定要记住最后这句,后面将新节点加入集群要用:

kubeadm join 192.168.206.133:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:dc76a44cc1b2dc7a97f987e3eb471c24e62fbcfc70b29d81229503ff8df89616

5,master节点安装好后,根据提示,还有后续操作:

rm -rf /root/.kube/

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

export KUBECONFIG=/etc/kubernetes/admin.conf

source /etc/profile6,之后就可以使用kubectl工具对kubernetes集群进行访问了,比如:

kubeadm config images list到此,kubernetes集群的master节点就可以正常工作了,但是这个集群内还是没有可用的worker node,并缺乏容器网络 的配置。

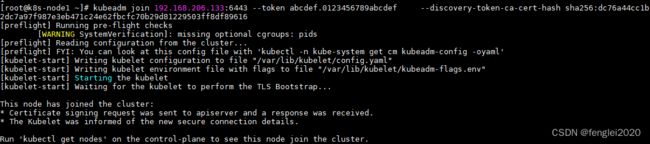

第五步:将新节点134加入集群

1,安装必要组件

yum -y remove kubelet kubelet kubectl

yum -y install kubelet-1.19.0 kubeadm-1.19.0 --disableexcludes=kubernetes

systemctl daemon-reload

systemctl start kubelet

systemctl enable kubelet

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables2,使用kubeadm join 将134加入集群,这句命令在上面出现过,直接复制即可使用:

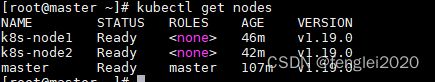

kubeadm join 192.168.206.133:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:dc76a44cc1b2dc7a97f987e3eb471c24e62fbcfc70b29d81229503ff8df896163,加入完成后,在master 133 上即可查看集群节点:

kubectl get nodes同样,将135也加入集群后,可以看到:

4, 可以看到,kubernetes提示各节点状态均为NotReady状态,这是因为还没有安装CNI的原因。安装CNI的方法有很多,这里使用calico.yaml,kubernetes 1.19一定要使用calico v3.20

在浏览器中下载该文件:https://docs.projectcalico.org/v3.20/manifests/calico.yaml

然后修改几个地方:

1)

修改定义pod网络CALICO_IPV4POOL_CIDR的值

要和kubeadm init pod-network-cidr的值一致

# 修改定义pod网络CALICO_IPV4POOL_CIDR的值和kubeadm init pod-network-cidr的值一致

## 取消注释

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

2)calico配置自动检测此主机的 IPv4 地址的方法

搜索env:在CLUSTER_TYPE下配置,新添加:

# 自动检测此主机的 IPv4 地址的方法

- name: IP_AUTODETECTION_METHOD

value: "interface=ens32" # ens32为本地网卡名字

改好的直接下载:Calico v3.20 calico.yaml 文件

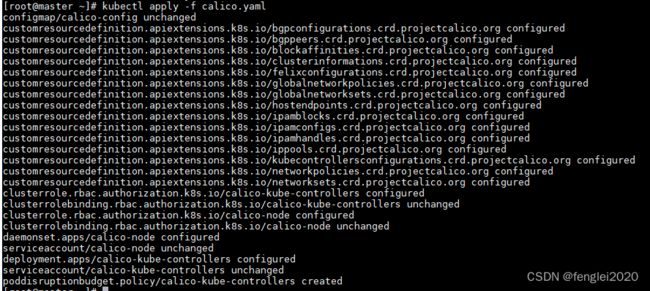

第六步:将上面修改好的 calico.yaml copy到master 133 上,然后安装:

kubectl apply -f calico.yaml此时在查看节点信息:

第七步:验证kubernetes集群是否正常工作:

kubectl get pods --all-namespaces自此,通过kubeadm安装kubernetes集群就成功了,如果集群安装失败,可以使用:kubeadm reset 命令将主机回复原状,重新运行kubeadm init安装。

第八步:安装部署dashboard

Dashboard 是基于网页的 Kubernetes 用户界面。您可以使用 Dashboard 将容器应用部署到 Kubernetes 集群中,也可以对容器应用排错,还能管理集群本身及其附属资源。您可以使用 Dashboard 获取运行在集群中的应用的概览信息,也可以创建或者修改 Kubernetes 资源(如 Deployment,Job,DaemonSet 等等)。例如,您可以对 Deployment 实现弹性伸缩、发起滚动升级、重启 Pod 或者使用向导创建新的应用。

1,下载dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

sed -i 's#kubernetesui#registry.cn-hangzhou.aliyuncs.com\/google_containers#g' recommended.yaml# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

nodePort: 30001

type: NodePort

selector:

k8s-app: kubernetes-dashboard

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-csrf

namespace: kubernetes-dashboard

type: Opaque

data:

csrf: ""

---

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-key-holder

namespace: kubernetes-dashboard

type: Opaque

---

kind: ConfigMap

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-settings

namespace: kubernetes-dashboard

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

rules:

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster", "dashboard-metrics-scraper"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]

verbs: ["get"]

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

rules:

# Allow Metrics Scraper to get metrics from the Metrics server

- apiGroups: ["metrics.k8s.io"]

resources: ["pods", "nodes"]

verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubernetes-dashboard

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kubernetes-dashboard

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

image: kubernetesui/dashboard:v2.0.0-beta8

imagePullPolicy: Always

ports:

- containerPort: 8443

protocol: TCP

args:

- --auto-generate-certificates

- --namespace=kubernetes-dashboard

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port: 8443

initialDelaySeconds: 30

timeoutSeconds: 30

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-metrics-scraper

name: dashboard-metrics-scraper

namespace: kubernetes-dashboard

spec:

ports:

- port: 8000

targetPort: 8000

selector:

k8s-app: kubernetes-metrics-scraper

---

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-metrics-scraper

name: kubernetes-metrics-scraper

namespace: kubernetes-dashboard

spec:

replicas: 1

revisionHistoryLimit: 10

selector:

matchLabels:

k8s-app: kubernetes-metrics-scraper

template:

metadata:

labels:

k8s-app: kubernetes-metrics-scraper

spec:

containers:

- name: kubernetes-metrics-scraper

image: kubernetesui/metrics-scraper:v1.0.0

ports:

- containerPort: 8000

protocol: TCP

livenessProbe:

httpGet:

scheme: HTTP

path: /

port: 8000

initialDelaySeconds: 30

timeoutSeconds: 30

serviceAccountName: kubernetes-dashboard

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

---

# ------------------- dashboard-admin ------------------- #

apiVersion: v1

kind: ServiceAccount

metadata:

name: dashboard-admin

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: dashboard-admin

subjects:

- kind: ServiceAccount

name: dashboard-admin

namespace: kubernetes-dashboard

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin2,部署kubernetes-dashboard

kubectl apply -f recommended.yaml

kubectl get all -n kubernetes-dashboard -o wide

kubectl get -n kubernetes-dashboard svc

kubectl describe secrets -n kubernetes-dashboard dashboard-admin这个tocken待会要用。

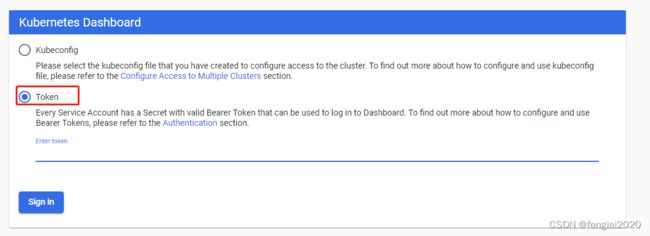

3,登录页面:

https://192.168.206.133:30001/#/login

将上面那段复制粘贴到这里,然后登录即可。