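Deploying a Kubernetes Cluster with kubeadm

Kubernetes Cluster Setup

Kubernetes is a container cluster management system open-sourced by Google. It provides containerized applications with capabilities including resource scheduling, deployment and operation, and service discovery.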

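1. k8s cluster planning

A k8s cluster can be planned in two ways: as a single-master cluster or as a multi-master cluster.

1.1 Single-master cluster

This cluster has only one master node. If that master has any problem, every node it manages is affected, which is an obvious single point of failure.

1.2 Multi-master cluster

This cluster contains several master nodes, and the masters manage the nodes through a load balancer.

A multi-master cluster is also called a highly available (HA) master cluster: even if one master fails, the other nodes are not affected.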

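2. Hardware requirements for the cluster

Minimum:

A master node needs at least 2 cores and 4 GB of memory.

A node needs at least 4 cores and 16 GB of memory.

Recommended:

A master node should have at least 4 cores and 16 GB of memory.

Nodes should be sized according to the number of containers they need to run.

Because the nodes do the actual work, they generally need more resources than the masters.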

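3. Three ways to deploy k8s

Common ways to deploy Kubernetes in production:

3.1 kubeadm

kubeadm is a k8s deployment tool. Its kubeadm init and kubeadm join commands let you bring up a Kubernetes cluster quickly.

kubeadm lowers the barrier to entry, but it hides many details, which can make problems hard to troubleshoot. If you want more control, deploy the cluster from the binary packages instead: manual deployment is more work, but you learn how the components work along the way, which also helps with later maintenance.

3.2 Binary packages

Kubernetes consists of a set of executables. You can download prebuilt binary packages from the Kubernetes project page on GitHub, or download the source code, compile it, and install the result.

With this method you download the release binaries from GitHub and deploy each component by hand to form the Kubernetes cluster.

3.3 kubespray

kubespray started as a Kubernetes incubator project (it is now maintained under kubernetes-sigs). Its goal is a production-ready Kubernetes deployment, and it is built on Ansible playbooks that define the system and cluster deployment tasks.

This article uses kubeadm to build the cluster.

Kubernetes needs a container runtime on every node, which the kubelet drives through the Container Runtime Interface (CRI). The runtimes supported at the time of writing include Docker, containerd, CRI-O, and frakti. This article uses Docker as the container runtime.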

4. Environment preparation

Server hardware: 2 CPU cores, 2 GB memory, 60 GB disk.

Operating system: CentOS Linux release 7.9.2009 (Core)

Docker version: 20.10.21, build baeda1f

k8s version: 1.21.0

Server plan (this lab uses virtual machines):

| ip | hostname |
|---|---|
| 192.168.164.200 | master |
| 192.168.164.201 | slave1 |
| 192.168.164.202 | slave2 |
| 192.168.164.203 | master2 |

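5. Building the k8s cluster

5.1 System initialization (all nodes)

5.1.1 Disable the firewall

# Step 1
# Stop it for the current boot
systemctl stop firewalld
# Keep it from starting at boot
systemctl disable firewalld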

5.1.2 Disable SELinux

# Step 2
# Switch to permissive mode until the next reboot
setenforce 0
# Disable permanently (takes effect after a reboot)
sed -i '/SELINUX/s/enforcing/disabled/' /etc/selinux/config

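5.1.3 Disable swap

# Step 3
# Turn swap off for the current boot
swapoff -a
# Turn it off permanently by commenting out the swap entries in /etc/fstab
sed -ri 's/.*swap.*/#&/' /etc/fstab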
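The sed command above prefixes every line that mentions swap with #, so running it a second time adds a second #. The sketch below is one possible variant that skips lines that are already commented. The function name disable_swap_entries and the sample fstab entries are assumptions made for illustration, and the demo works on a scratch file rather than on /etc/fstab.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are skipped, so re-running is harmless.
disable_swap_entries() {
  local fstab="$1"
  sed -ri '/^[^#].*[[:space:]]swap[[:space:]]/s/^/#/' "$fstab"
}

# Demonstration on a scratch file (hypothetical sample entries).
demo_fstab="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"

disable_swap_entries "$demo_fstab"
disable_swap_entries "$demo_fstab"   # second run changes nothing
cat "$demo_fstab"
```

Once the result looks right on a copy, the same function could be pointed at /etc/fstab.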

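5.1.4 Set the hostname

Use hostnamectl set-hostname <hostname> to set the hostname. Run exactly one of the following commands on each of the four hosts, matching the server plan:

# Step 4
# On 192.168.164.200
hostnamectl set-hostname master
# On 192.168.164.201
hostnamectl set-hostname slave1
# On 192.168.164.202
hostnamectl set-hostname slave2
# On 192.168.164.203
hostnamectl set-hostname master2
# Show the current hostname
hostname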

5.1.5 Add hosts entries

Add hosts entries on every node, one line per node in the form node IP address + node name.

# Step 5
cat >> /etc/hosts << EOF
192.168.164.200 master
192.168.164.200 cluster-endpoint
192.168.164.201 slave1
192.168.164.202 slave2
192.168.164.203 master2
EOF
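Because cat >> appends unconditionally, running step 5 twice leaves duplicate lines in /etc/hosts. As a rough sketch, the helper below appends an entry only when the name is not mapped yet. The name add_host_entry is hypothetical, and the demo writes to a temporary file instead of /etc/hosts.

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts-style file only if NAME is not mapped yet.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demonstration: running the whole set twice still yields five lines.
hosts_demo="$(mktemp)"
for run in 1 2; do
  add_host_entry "$hosts_demo" 192.168.164.200 master
  add_host_entry "$hosts_demo" 192.168.164.200 cluster-endpoint
  add_host_entry "$hosts_demo" 192.168.164.201 slave1
  add_host_entry "$hosts_demo" 192.168.164.202 slave2
  add_host_entry "$hosts_demo" 192.168.164.203 master2
done
cat "$hosts_demo"
```

The match is on the whole name, so an existing master2 entry does not block adding master, and vice versa.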

5.1.6 Pass bridged IPv4 traffic to the iptables chains

# Step 6
# Write the settings
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
# Apply them
sysctl --system
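One detail worth knowing here: the net.bridge.* keys exist only while the br_netfilter kernel module is loaded (modprobe br_netfilter), so sysctl --system can report them as unknown on a fresh machine. The following sketch only checks that a sysctl file contains both keys set to 1. The function name verify_k8s_sysctl is an assumption for illustration, and the demo checks a temporary file with the same content as step 6.

```shell
#!/usr/bin/env bash
# Return 0 only if both bridge keys are set to 1 in the given sysctl file.
verify_k8s_sysctl() {
  local conf="$1" key
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    grep -qE "^${key}[[:space:]]*=[[:space:]]*1[[:space:]]*\$" "$conf" || {
      echo "missing or disabled: ${key}" >&2
      return 1
    }
  done
}

# Demonstration on a scratch file with the content written in step 6.
sysctl_demo="$(mktemp)"
cat > "$sysctl_demo" << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
verify_k8s_sysctl "$sysctl_demo" && echo "bridge sysctl settings look complete"
```

On a real node the argument would be /etc/sysctl.d/k8s.conf.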

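5.1.7 Time synchronization

Keep the clock on every node (virtual machine) in sync with the local machine's time.

# Step 7
yum install ntpdate -y
ntpdate time.windows.com

Note: whenever a virtual machine has been shut down or suspended, synchronize the time again before you continue working.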

5.2 Installing Docker (all nodes)

5.2.1 Remove old versions

# Step 8
yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

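5.2.2 Install the required packages

# Step 9
# yum-utils provides yum-config-manager, so install it before adding the repository
yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2

5.2.3 Configure the package repository

# Step 10
# The default repository is hosted abroad; use the Aliyun mirror instead
yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo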

5.2.4 Refresh the yum package index

# Step 11
# Refresh the yum package index
yum makecache fast

5.2.5 Install Docker Engine

# Step 12
# List the available versions
yum list docker-ce --showduplicates | sort -r
# Install a specific version; the general form is:
#   yum install docker-ce-<VERSION_STRING> docker-ce-cli-<VERSION_STRING> containerd.io
yum install docker-ce-20.10.21 docker-ce-cli-20.10.21 containerd.io
# Or install the latest version instead
# yum install docker-ce docker-ce-cli containerd.io

5.2.6 Start Docker

# Step 13
systemctl enable docker && systemctl start docker

5.2.7 Configure a Docker registry mirror

# Step 14
# Create /etc/docker/daemon.json with the following content
vim /etc/docker/daemon.json
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
# Restart Docker to apply the change
systemctl restart docker
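Editing the file in vim is fine for one machine, but the same file is needed on all four nodes. A non-interactive alternative could look like the sketch below. The function name write_daemon_json is hypothetical, and the demo writes to a temporary path rather than /etc/docker/daemon.json.

```shell
#!/usr/bin/env bash
# Write a Docker daemon.json with one registry mirror and the systemd cgroup driver.
write_daemon_json() {
  local path="$1" mirror="$2"
  cat > "$path" << EOF
{
  "registry-mirrors": ["${mirror}"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Demonstration on a scratch path, using the mirror address from step 14.
daemon_demo="$(mktemp)"
write_daemon_json "$daemon_demo" "https://b9pmyelo.mirror.aliyuncs.com"
cat "$daemon_demo"
```

On a node, the first argument would be /etc/docker/daemon.json, followed by systemctl restart docker as in step 14.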

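5.2.8 Check that the mirror is in effect

# Step 15
# The output should list the mirror under "Registry Mirrors" and show "Cgroup Driver: systemd"
docker info

5.2.9 Verify the Docker version

# Step 16
docker -v

5.2.10 Other Docker commands

# Stop Docker
systemctl stop docker
# Show Docker status
systemctl status docker

5.2.11 Commands to uninstall Docker

yum remove docker-ce-20.10.21 docker-ce-cli-20.10.21 containerd.io
rm -rf /var/lib/docker
rm -rf /var/lib/containerd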

5.3 Add the Aliyun yum repository (all nodes)

# Step 17
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[Kubernetes]
name=kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

5.4 Install kubeadm, kubelet, and kubectl (all nodes)

# Step 18
yum install -y kubelet-1.21.0 kubeadm-1.21.0 kubectl-1.21.0 --disableexcludes=kubernetes

5.5 Start the kubelet service (all nodes)

# Step 19
# Until kubeadm init or kubeadm join supplies a configuration, the kubelet keeps restarting; that is expected
systemctl enable kubelet && systemctl start kubelet

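6. Deploying the k8s master

6.1 kubeadm initialization (master node)

With version 1.21.0, initialization fails because the Aliyun repository has no coredns/coredns image; that is, the image registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0 does not exist.

# Step 20
# Run on the master node
# Do this before initializing; otherwise kubeadm init fails because the image cannot be found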

[root@master ~]# docker pull coredns/coredns:1.8.0

1.8.0: Pulling from coredns/coredns

c6568d217a00: Pull complete

5984b6d55edf: Pull complete

Digest: sha256:cc8fb77bc2a0541949d1d9320a641b82fd392b0d3d8145469ca4709ae769980e

Status: Downloaded newer image for coredns/coredns:1.8.0

docker.io/coredns/coredns:1.8.0

[root@master ~]# docker tag coredns/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

[root@master ~]# docker rmi coredns/coredns:1.8.0

Untagged: coredns/coredns:1.8.0

Untagged: coredns/coredns@sha256:cc8fb77bc2a0541949d1d9320a641b82fd392b0d3d8145469ca4709ae769980e

# Step 21
# Run on the master node
# List the downloaded images

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB
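The same pull, tag, and remove sequence is needed again later on master2, and the only subtle part is the tag: Docker Hub publishes 1.8.0 while kubeadm looks for v1.8.0 under the custom repository. As an illustration, the sketch below just prints the three commands for a given repository and version. The function name coredns_retag_cmds is an assumption and nothing is executed against Docker.

```shell
#!/usr/bin/env bash
# Print the docker commands that fetch CoreDNS from Docker Hub and retag it
# under the name kubeadm looks for in a custom image repository.
coredns_retag_cmds() {
  local repo="$1" version="$2"   # version without the leading "v"
  local src="coredns/coredns:${version}"
  local dst="${repo}/coredns/coredns:v${version}"
  echo "docker pull ${src}"
  echo "docker tag ${src} ${dst}"
  echo "docker rmi ${src}"
}

coredns_retag_cmds registry.aliyuncs.com/google_containers 1.8.0
```

Piping the output to sh on a node would run the commands; reviewing them first is the point of printing.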

Run one of the following commands on the master node, replacing the master IP and the Kubernetes version with the values for your own host.

# Step 22 (option A)
# Run on the master node
# Single master
kubeadm init \
    --apiserver-advertise-address=192.168.164.200 \
    --image-repository registry.aliyuncs.com/google_containers \
    --kubernetes-version v1.21.0 \
    --service-cidr=10.96.0.0/12 \
    --pod-network-cidr=10.244.0.0/16

# Step 22 (option B)
# Run on the master node
# Multiple masters
# This is the command used in this article
kubeadm init \
    --apiserver-advertise-address=192.168.164.200 \
    --image-repository registry.aliyuncs.com/google_containers \
    --control-plane-endpoint=cluster-endpoint \
    --kubernetes-version v1.21.0 \
    --service-cidr=10.96.0.0/12 \
    --pod-network-cidr=10.244.0.0/16

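- apiserver-advertise-address: the interface (address) the master uses to communicate with the other nodes in the cluster. If the master has several interfaces, set it explicitly; if it is not set, kubeadm picks the interface that has the default gateway. The IP here is the master node's IP, so remember to change it.

- image-repository: where the images are pulled from (available since version 1.13). The default is k8s.gcr.io; here it is set to a mirror in China, registry.aliyuncs.com/google_containers.

- control-plane-endpoint: cluster-endpoint is a custom DNS name mapped to the master IP, here through the hosts entry 192.168.164.200 cluster-endpoint. This lets you pass --control-plane-endpoint=cluster-endpoint to kubeadm init and the same DNS name to kubeadm join. Later you can repoint cluster-endpoint at the address of the load balancer in a high-availability setup.

- kubernetes-version: the Kubernetes version. The default, stable-1, makes kubeadm download the latest version number from https://dl.k8s.io/release/stable-1.txt. Pinning a fixed version (v1.21.0) skips that network request; the value matches the packages installed above.

- service-cidr: the virtual network inside the cluster used for Service addresses, which are the unified entry points for reaching Pods.

- pod-network-cidr: the range of the Pod network. Kubernetes supports several network add-ons, and each has its own requirements for --pod-network-cidr; the value here must match the one in the CNI manifest deployed below.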
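The same settings can also be kept in a kubeadm configuration file instead of on the command line. The sketch below renders what such a file might look like for the multi-master command in this article. It assumes the kubeadm.k8s.io/v1beta2 API that kubeadm v1.21 reads, the function name render_kubeadm_config is hypothetical, and the file is written to a temporary path purely for demonstration.

```shell
#!/usr/bin/env bash
# Render a kubeadm configuration that mirrors the flags described above.
# Flag -> field mapping (assumed v1beta2 schema):
#   --apiserver-advertise-address -> localAPIEndpoint.advertiseAddress
#   --control-plane-endpoint      -> controlPlaneEndpoint
#   --image-repository            -> imageRepository
#   --kubernetes-version          -> kubernetesVersion
#   --service-cidr                -> networking.serviceSubnet
#   --pod-network-cidr            -> networking.podSubnet
render_kubeadm_config() {
  cat << 'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.164.200
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.0
controlPlaneEndpoint: cluster-endpoint:6443
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
EOF
}

config_demo="$(mktemp)"
render_kubeadm_config > "$config_demo"
cat "$config_demo"
```

A file like this would be passed as kubeadm init --config <file> in place of the individual flags; the article itself uses the flag form.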

# Step 22 (output of the command used in this article)
# Run on the master node

[root@master ~]# kubeadm init \

> --apiserver-advertise-address=192.168.164.200 \

> --image-repository registry.aliyuncs.com/google_containers \

> --control-plane-endpoint=cluster-endpoint \

> --kubernetes-version v1.21.0 \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.21.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [cluster-endpoint kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 192.168.164.200]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [192.168.164.200 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [192.168.164.200 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 64.506595 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: cv03wr.wgt8oa06phggjpz9

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join cluster-endpoint:6443 --token cv03wr.wgt8oa06phggjpz9 \

--discovery-token-ca-cert-hash sha256:a6b2581dd3fb3755eda086df861553cbce2b3daf1add59bacba140cfa9f9d3a4 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join cluster-endpoint:6443 --token cv03wr.wgt8oa06phggjpz9 \

--discovery-token-ca-cert-hash sha256:a6b2581dd3fb3755eda086df861553cbce2b3daf1add59bacba140cfa9f9d3a4

In the output, the line Your Kubernetes control-plane has initialized successfully! shows that the k8s control plane on the master node has been set up.

The output also gives us three commands:

1. Enable the kubectl tool (run on the master node).

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

2. Join a worker node to the cluster (run on each worker node).

kubeadm join cluster-endpoint:6443 --token cv03wr.wgt8oa06phggjpz9 \
    --discovery-token-ca-cert-hash sha256:a6b2581dd3fb3755eda086df861553cbce2b3daf1add59bacba140cfa9f9d3a4

3. Join an additional master to the cluster (run on the new master node, not on a worker).

kubeadm join cluster-endpoint:6443 --token cv03wr.wgt8oa06phggjpz9 \
    --discovery-token-ca-cert-hash sha256:a6b2581dd3fb3755eda086df861553cbce2b3daf1add59bacba140cfa9f9d3a4 \
    --control-plane

The default token is valid for 24 hours and can no longer be used once it expires. A new token is also needed if the cluster is initialized again with kubeadm. In either case, create a new token; the following command (run on the master) generates one and prints the complete join command:

kubeadm token create --print-join-command
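When join commands are copied between terminals, a truncated or mistyped token is a common cause of a failed join. Bootstrap tokens have a fixed shape: six characters, a dot, then sixteen characters, all lowercase letters or digits. The sketch below checks only that shape, not whether the token is still valid on the cluster; the function name is_valid_bootstrap_token is an assumption for illustration.

```shell
#!/usr/bin/env bash
# Succeed only if the argument looks like a bootstrap token:
# 6 characters, a dot, 16 characters, all from [a-z0-9].
is_valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -qE '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

token="cv03wr.wgt8oa06phggjpz9"   # token printed in the kubeadm init output above
if is_valid_bootstrap_token "$token"; then
  echo "token format ok"
else
  echo "token format invalid" >&2
fi
```

To see whether a well-formed token has expired, kubeadm token list on the master shows the remaining lifetime of each token.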

6.2 Enable the kubectl tool (master node)

# Step 23
# Run on the master node

[root@master ~]# mkdir -p $HOME/.kube

[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

List the ConfigMaps:

# Step 24
# Run on the master node

[root@master ~]# kubectl get -n kube-system configmap

NAME DATA AGE

coredns 1 12m

extension-apiserver-authentication 6 12m

kube-proxy 2 12m

kube-root-ca.crt 1 12m

kubeadm-config 2 12m

kubelet-config-1.21 1 12m

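The list includes a ConfigMap object named kubeadm-config, which kubeadm created during initialization.

Check which images have been downloaded on each machine:

# Step 25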

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

[root@slave1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@slave2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

[root@master2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

At this point slave1, slave2, and master2 have not downloaded any images.

List the nodes in the cluster:

# Step 26
# Run on the master node

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 12m v1.21.0

6.3 Join the slave nodes to the cluster (slave nodes)

# Step 27
# Run on slave1

[root@slave1 ~]# kubeadm join cluster-endpoint:6443 --token cv03wr.wgt8oa06phggjpz9 \

> --discovery-token-ca-cert-hash sha256:a6b2581dd3fb3755eda086df861553cbce2b3daf1add59bacba140cfa9f9d3a4

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# Step 28
# Run on slave2

[root@slave2 ~]# kubeadm join cluster-endpoint:6443 --token cv03wr.wgt8oa06phggjpz9 \

> --discovery-token-ca-cert-hash sha256:a6b2581dd3fb3755eda086df861553cbce2b3daf1add59bacba140cfa9f9d3a4

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

List the nodes in the cluster:

# Step 29
# Run on the master node

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 25m v1.21.0

slave1 NotReady <none> 16m v1.21.0

slave2 NotReady <none> 16m v1.21.0

Check which images have been downloaded on each machine:

# Step 30

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

[root@slave1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

[root@slave2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

[root@master2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

6.4 Join master2 to the cluster (master2 node)

# Step 31
# Run on master2
# Pull the images

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.21.0

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.21.0

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.21.0

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.21.0

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/pause:3.4.1

[root@master2 ~]# docker pull registry.aliyuncs.com/google_containers/etcd:3.4.13-0

# For k8s 1.21.0 the Aliyun mirror has no registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0 image, so pull it from another source and retag it

[root@master2 ~]# docker pull coredns/coredns:1.8.0

[root@master2 ~]# docker tag coredns/coredns:1.8.0 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

[root@master2 ~]# docker rmi coredns/coredns:1.8.0

List the images:

# Step 32
# Run on master2

[root@master2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

Copy the certificates:

# Step 33
# Run on master2
# Create the target directory
[root@master2 ~]# mkdir -p /etc/kubernetes/pki/etcd

# Step 34
# Run on the master node
# Copy the certificates from master to master2

[root@master ~]# scp -rp /etc/kubernetes/pki/ca.* master2:/etc/kubernetes/pki

[root@master ~]# scp -rp /etc/kubernetes/pki/sa.* master2:/etc/kubernetes/pki

[root@master ~]# scp -rp /etc/kubernetes/pki/front-proxy-ca.* master2:/etc/kubernetes/pki

[root@master ~]# scp -rp /etc/kubernetes/pki/etcd/ca.* master2:/etc/kubernetes/pki/etcd

[root@master ~]# scp -rp /etc/kubernetes/admin.conf master2:/etc/kubernetes
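The wildcards above expand to the CA, service-account, front-proxy CA, and etcd CA key pairs, plus admin.conf. As a rough sketch, the helper below spells out that file list and prints one scp command per file, which can make it easier to see what will be copied before anything is transferred. The function name print_cert_copy_cmds is hypothetical and the list assumes the default file names that kubeadm generates under /etc/kubernetes.

```shell
#!/usr/bin/env bash
# Print one scp command per shared file that a joining control-plane node needs.
print_cert_copy_cmds() {
  local target="$1" f
  for f in pki/ca.crt pki/ca.key pki/sa.key pki/sa.pub \
           pki/front-proxy-ca.crt pki/front-proxy-ca.key \
           pki/etcd/ca.crt pki/etcd/ca.key admin.conf; do
    echo "scp -p /etc/kubernetes/${f} ${target}:/etc/kubernetes/${f}"
  done
}

print_cert_copy_cmds master2
```

kubeadm can also distribute these files itself: the init log above mentions --upload-certs, which is used together with --certificate-key on kubeadm join. This article copies the files by hand instead.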

Join the cluster:

# Step 35
# Run on master2

[root@master2 ~]# kubeadm join cluster-endpoint:6443 --token cv03wr.wgt8oa06phggjpz9 \

> --discovery-token-ca-cert-hash sha256:a6b2581dd3fb3755eda086df861553cbce2b3daf1add59bacba140cfa9f9d3a4 \

> --control-plane

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[preflight] Running pre-flight checks before initializing the new control plane instance

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master2] and IPs [192.168.164.203 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master2] and IPs [192.168.164.203 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [cluster-endpoint kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master2] and IPs [10.96.0.1 192.168.164.203]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Valid certificates and keys now exist in "/etc/kubernetes/pki"

[certs] Using the existing "sa" key

[kubeconfig] Generating kubeconfig files

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[check-etcd] Checking that the etcd cluster is healthy

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

[etcd] Announced new etcd member joining to the existing etcd cluster

[etcd] Creating static Pod manifest for "etcd"

[etcd] Waiting for the new etcd member to join the cluster. This can take up to 40s

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[mark-control-plane] Marking the node master2 as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master2 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

# Step 36
# Run on master2

[root@master2 ~]# mkdir -p $HOME/.kube

[root@master2 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master2 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

List the nodes:

# Step 37
# Run on the master node

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 44m v1.21.0

master2 NotReady control-plane,master 92s v1.21.0

slave1 NotReady <none> 35m v1.21.0

slave2 NotReady <none> 35m v1.21.0

# Step 38
# Run on master2

[root@master2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 45m v1.21.0

master2 NotReady control-plane,master 2m18s v1.21.0

slave1 NotReady <none> 36m v1.21.0

slave2 NotReady <none> 35m v1.21.0

Check the downloaded images on each machine:

# Step 39

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

[root@slave1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

[root@slave2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

[root@master2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

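Note: the network add-on has not been deployed yet, so none of the nodes is ready and every node shows the status NotReady. The next section installs the network add-on.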
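To track progress while the network add-on comes up, it can help to reduce the kubectl get nodes table to a single number. The sketch below counts rows whose STATUS column is anything other than Ready; note that it therefore also counts values such as Ready,SchedulingDisabled. The function name count_not_ready is an assumption, and the demo feeds it the node table shown in step 37.

```shell
#!/usr/bin/env bash
# Count nodes whose STATUS column (second field) is anything other than "Ready".
# Reads the output of "kubectl get nodes" on standard input.
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Demonstration with the node table from step 37.
count_not_ready << 'EOF'
NAME STATUS ROLES AGE VERSION
master NotReady control-plane,master 44m v1.21.0
master2 NotReady control-plane,master 92s v1.21.0
slave1 NotReady <none> 35m v1.21.0
slave2 NotReady <none> 35m v1.21.0
EOF
```

On the master, kubectl get nodes | count_not_ready gives the live value; it should reach 0 once the network add-on is running on every node.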

7. Installing the network add-on

7.1 Deploy the Calico container network (master node)

# Step 40
# Run on the master node
# Download the manifest
curl https://docs.projectcalico.org/archive/v3.20/manifests/calico-etcd.yaml -o calico.yaml

Edit calico.yaml:

# Step 41
# Run on the master node
# First change
# Set the Pod network (CALICO_IPV4POOL_CIDR) to the same value that was passed to kubeadm init as --pod-network-cidr

# The default IPv4 pool to create on startup if none exists. Pod IPs will be

# chosen from this range. Changing this value after installation will have

# no effect. This should fall within `--cluster-cidr`.

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"

# Step 42
# Run on the master node
# Second change
# Add IP_AUTODETECTION_METHOD with the value interface=ens33, where ens33 is the name of your network interface

# Cluster type to identify the deployment type

- name: CLUSTER_TYPE

value: "k8s,bgp"

- name: IP_AUTODETECTION_METHOD

value: "interface=ens33"

# Step 43
# Run on the master node
# Third change
# Change apiVersion: policy/v1beta1 to apiVersion: policy/v1

# This manifest creates a Pod Disruption Budget for Controller to allow K8s Cluster Autoscaler to evict

apiVersion: policy/v1

kind: PodDisruptionBudget

metadata:

name: calico-kube-controllers

namespace: kube-system

labels:

k8s-app: calico-kube-controllers

spec:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: calico-kube-controllers

# Step 44
# Run on the master node
# Fourth change
# Run the following script to substitute the etcd settings in calico.yaml
#!/bin/bash
# The IP is the master's IP

ETCD_ENDPOINTS="https://192.168.164.200:2379"

sed -i "s#.*etcd_endpoints:.*# etcd_endpoints: \"${ETCD_ENDPOINTS}\"#g" calico.yaml

sed -i "s#__ETCD_ENDPOINTS__#${ETCD_ENDPOINTS}#g" calico.yaml

ETCD_CA=`cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n'`

ETCD_CERT=`cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n'`

ETCD_KEY=`cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n'`

sed -i "s#.*etcd-ca:.*# etcd-ca: ${ETCD_CA}#g" calico.yaml

sed -i "s#.*etcd-cert:.*# etcd-cert: ${ETCD_CERT}#g" calico.yaml

sed -i "s#.*etcd-key:.*# etcd-key: ${ETCD_KEY}#g" calico.yaml

sed -i 's#.*etcd_ca:.*# etcd_ca: "/calico-secrets/etcd-ca"#g' calico.yaml

sed -i 's#.*etcd_cert:.*# etcd_cert: "/calico-secrets/etcd-cert"#g' calico.yaml

sed -i 's#.*etcd_key:.*# etcd_key: "/calico-secrets/etcd-key"#g' calico.yaml

sed -i "s#__ETCD_CA_CERT_FILE__#/etc/kubernetes/pki/etcd/ca.crt#g" calico.yaml

sed -i "s#__ETCD_CERT_FILE__#/etc/kubernetes/pki/etcd/server.crt#g" calico.yaml

sed -i "s#__ETCD_KEY_FILE__#/etc/kubernetes/pki/etcd/server.key#g" calico.yaml

sed -i "s#__KUBECONFIG_FILEPATH__#/etc/cni/net.d/calico-kubeconfig#g" calico.yaml

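calico.yaml references four images. Because of network conditions they download slowly, and running kubectl apply -f calico.yaml right away can affect the result of the deployment, so it helps to pull the images in advance.

# Step 45
# Run on every node
# Pull the images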

docker pull docker.io/calico/pod2daemon-flexvol:v3.20.6

docker pull docker.io/calico/node:v3.20.6

docker pull docker.io/calico/kube-controllers:v3.20.6

docker pull docker.io/calico/cni:v3.20.6

This article prepares the images ahead of time and then exports and imports them, which makes them easy to reuse later.

# Step 46
# Export the images to tar archives
# Format: docker save -o <archive.tar> <image>
docker save -o pod2daemon-flexvol_v3.20.6.tar docker.io/calico/pod2daemon-flexvol:v3.20.6
docker save -o node_v3.20.6.tar docker.io/calico/node:v3.20.6
docker save -o kube-controllers_v3.20.6.tar docker.io/calico/kube-controllers:v3.20.6
docker save -o cni_v3.20.6.tar docker.io/calico/cni:v3.20.6

# Run on the master node
# With the archives copied to ./images on the master, transfer them to the other machines
# (the ~/images directory must already exist on each target)
scp ./images/* root@master2:~/images
scp ./images/* root@slave1:~/images
scp ./images/* root@slave2:~/images

# Import the archives on each node
# Format for a single archive: docker load -i <archive.tar>
docker load < cni_v3.20.6.tar
docker load < kube-controllers_v3.20.6.tar
docker load < node_v3.20.6.tar
docker load < pod2daemon-flexvol_v3.20.6.tar
# Import every archive in the current directory
ls -1 *.tar | xargs --no-run-if-empty -L 1 docker load -i
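The archive names used here follow a simple pattern: the last path component of the image, with the colon before the tag replaced by an underscore. A small helper can derive the name so the four save commands do not have to be typed by hand. This is only a sketch: image_tar_name is a hypothetical name, and the loop prints the commands rather than calling Docker.

```shell
#!/usr/bin/env bash
# Derive the archive name used in this article from an image reference:
# docker.io/calico/node:v3.20.6 -> node_v3.20.6.tar
image_tar_name() {
  local ref="$1"
  local base="${ref##*/}"            # drop registry and namespace: node:v3.20.6
  echo "${base%%:*}_${base##*:}.tar" # name, underscore, tag
}

# Print the save command for each Calico image listed in step 45.
for image in \
  docker.io/calico/pod2daemon-flexvol:v3.20.6 \
  docker.io/calico/node:v3.20.6 \
  docker.io/calico/kube-controllers:v3.20.6 \
  docker.io/calico/cni:v3.20.6; do
  echo "docker save -o $(image_tar_name "$image") $image"
done
```

The helper assumes every reference carries an explicit tag, as all four images in this article do.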

Check the images on each machine:

# Step 47

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

calico/node v3.20.6 daeec7e26e1f 9 months ago 156MB

calico/pod2daemon-flexvol v3.20.6 39b166f3f936 9 months ago 18.6MB

calico/cni v3.20.6 13b6f63a50d6 9 months ago 138MB

calico/kube-controllers v3.20.6 4dc6e7685020 9 months ago 60.2MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

[root@slave1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

calico/node v3.20.6 daeec7e26e1f 9 months ago 156MB

calico/pod2daemon-flexvol v3.20.6 39b166f3f936 9 months ago 18.6MB

calico/cni v3.20.6 13b6f63a50d6 9 months ago 138MB

calico/kube-controllers v3.20.6 4dc6e7685020 9 months ago 60.2MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

[root@slave2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

calico/node v3.20.6 daeec7e26e1f 9 months ago 156MB

calico/pod2daemon-flexvol v3.20.6 39b166f3f936 9 months ago 18.6MB

calico/cni v3.20.6 13b6f63a50d6 9 months ago 138MB

calico/kube-controllers v3.20.6 4dc6e7685020 9 months ago 60.2MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

[root@master2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

calico/node v3.20.6 daeec7e26e1f 9 months ago 156MB

calico/pod2daemon-flexvol v3.20.6 39b166f3f936 9 months ago 18.6MB

calico/cni v3.20.6 13b6f63a50d6 9 months ago 138MB

calico/kube-controllers v3.20.6 4dc6e7685020 9 months ago 60.2MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

Install Calico:

# Step 48

# Run on the master node

[root@master ~]# kubectl apply -f calico.yaml

secret/calico-etcd-secrets created

configmap/calico-config created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

poddisruptionbudget.policy/calico-kube-controllers created

Check the nodes:

# Step 49

# Run on the master node

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 80m v1.21.0

master2 Ready control-plane,master 37m v1.21.0

slave1 Ready <none> 71m v1.21.0

slave2 Ready <none> 71m v1.21.0

# Step 50

# Run on the master2 node

[root@master2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 80m v1.21.0

master2 Ready control-plane,master 37m v1.21.0

slave1 Ready <none> 71m v1.21.0

slave2 Ready <none> 71m v1.21.0

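Check the pods:

# Step 51

# Run on the master node

# It takes a while after the apply before every pod is Running

# Check the status: READY 1/1 means the pod's container is up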

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-b86879b9b-55lhv 1/1 Running 0 73s

kube-system calico-node-44r4h 1/1 Running 0 73s

kube-system calico-node-fpfmb 1/1 Running 0 73s

kube-system calico-node-rddbt 1/1 Running 0 73s

kube-system calico-node-x7848 1/1 Running 0 73s

kube-system coredns-545d6fc579-5vhfj 1/1 Running 0 4h8m

kube-system coredns-545d6fc579-tnbxr 1/1 Running 0 4h8m

kube-system etcd-master 1/1 Running 0 4h8m

kube-system etcd-master2 1/1 Running 0 28m

kube-system kube-apiserver-master 1/1 Running 0 4h8m

kube-system kube-apiserver-master2 1/1 Running 0 28m

kube-system kube-controller-manager-master 1/1 Running 1 4h8m

kube-system kube-controller-manager-master2 1/1 Running 0 28m

kube-system kube-proxy-5sqzf 1/1 Running 0 4h5m

kube-system kube-proxy-kg2kw 1/1 Running 0 28m

kube-system kube-proxy-pvzgq 1/1 Running 0 4h8m

kube-system kube-proxy-r64nw 1/1 Running 0 4h5m

kube-system kube-scheduler-master 1/1 Running 1 4h8m

kube-system kube-scheduler-master2 1/1 Running 0 28m

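# Step 52

# Run on the master2 node

# It takes a while after the apply before every pod is Running

# Check the status: READY 1/1 means the pod's container is up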

[root@master2 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-b86879b9b-55lhv 1/1 Running 0 73s

kube-system calico-node-44r4h 1/1 Running 0 73s

kube-system calico-node-fpfmb 1/1 Running 0 73s

kube-system calico-node-rddbt 1/1 Running 0 73s

kube-system calico-node-x7848 1/1 Running 0 73s

kube-system coredns-545d6fc579-5vhfj 1/1 Running 0 4h8m

kube-system coredns-545d6fc579-tnbxr 1/1 Running 0 4h8m

kube-system etcd-master 1/1 Running 0 4h8m

kube-system etcd-master2 1/1 Running 0 28m

kube-system kube-apiserver-master 1/1 Running 0 4h8m

kube-system kube-apiserver-master2 1/1 Running 0 28m

kube-system kube-controller-manager-master 1/1 Running 1 4h8m

kube-system kube-controller-manager-master2 1/1 Running 0 28m

kube-system kube-proxy-5sqzf 1/1 Running 0 4h5m

kube-system kube-proxy-kg2kw 1/1 Running 0 28m

kube-system kube-proxy-pvzgq 1/1 Running 0 4h8m

kube-system kube-proxy-r64nw 1/1 Running 0 4h5m

kube-system kube-scheduler-master 1/1 Running 1 4h8m

kube-system kube-scheduler-master2 1/1 Running 0 28m

If a pod shows READY 0/1, use the following command to find the cause:

kubectl describe pods -n kube-system pod-name
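To find which pods need a describe, the pod listing can be filtered for rows whose READY column is not N/N. This is a minimal sketch; the function name list_unready_pods is hypothetical, and it assumes the column layout of kubectl get pods --all-namespaces shown above (NAMESPACE, NAME, READY, STATUS, ...).

```shell
#!/bin/sh
# Read `kubectl get pods --all-namespaces` output on stdin and print
# "namespace/name status" for every pod whose READY column is not N/N.
list_unready_pods() {
    awk 'NR > 1 {
        split($3, ready, "/")
        if (ready[1] != ready[2]) print $1 "/" $2, $4
    }'
}

# Usage on a master node:
#   kubectl get pods --all-namespaces | list_unready_pods
```

Each printed pod can then be passed to kubectl describe pods -n with its namespace and name.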

The following problem occurred during the installation described here:

CoreDNS stays in ContainerCreating:

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

......

coredns-545d6fc579-6mnn4 0/1 ContainerCreating 0 97m

coredns-545d6fc579-tdnbf 0/1 ContainerCreating 0 97m

......

Fix:

Remove all Calico installation data from the master and slave nodes.

On the slave nodes, delete the Calico configuration with the following commands and restart the kubelet service.

rm -rf /etc/cni/net.d/*

rm -rf /var/lib/cni/calico

systemctl restart kubelet

7.2 Deploying the flannel container network (master node)

Check the cluster state:

# Step 53

# Run on the master node

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane,master 13m v1.21.0

master2 NotReady control-plane,master 17s v1.21.0

slave1 NotReady <none> 2m27s v1.21.0

slave2 NotReady <none> 2m24s v1.21.0

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-545d6fc579-lhm8r 0/1 Pending 0 13m

kube-system coredns-545d6fc579-zx75n 0/1 Pending 0 13m

kube-system etcd-master 1/1 Running 0 13m

kube-system etcd-master2 1/1 Running 0 32s

kube-system kube-apiserver-master 1/1 Running 0 13m

kube-system kube-apiserver-master2 1/1 Running 0 33s

kube-system kube-controller-manager-master 1/1 Running 0 13m

kube-system kube-controller-manager-master2 1/1 Running 0 33s

kube-system kube-proxy-2c2t9 1/1 Running 0 34s

kube-system kube-proxy-bcxzm 1/1 Running 0 2m41s

kube-system kube-proxy-n79tj 1/1 Running 0 13m

kube-system kube-proxy-wht8z 1/1 Running 0 2m44s

kube-system kube-scheduler-master 1/1 Running 0 13m

kube-system kube-scheduler-master2 1/1 Running 0 34s

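# Step 54

# Run on the master node

# Download the flannel manifest

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# If that URL is unreachable, use the raw file from the official flannel GitHub repository
# (a github.com/.../tree/... URL returns an HTML page, not the manifest)

wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml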

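# Step 55

# Run on the master node

# Edit kube-flannel.yml: "Network" must match the pod network CIDR used when
# the master was initialized (for example via kubeadm init --pod-network-cidr)

  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }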
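Before applying the manifest, the "Network" value can be compared with the pod subnet the cluster was initialized with. This is a minimal sketch; the function name flannel_network is hypothetical, and the second usage line assumes the kubeadm-config ConfigMap that kubeadm normally creates in kube-system.

```shell
#!/bin/sh
# Print the "Network" value from a kube-flannel.yml passed on stdin.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Usage on the master node:
#   flannel_network < kube-flannel.yml
#   kubectl -n kube-system get cm kubeadm-config -o yaml | grep podSubnet
```

If the two values differ, edit kube-flannel.yml rather than the cluster configuration.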

# Step 56

# Run on the master node

[root@master ~]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

Check the downloaded images; each node now has two additional flannel images:

# Step 57

[root@master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

rancher/mirrored-flannelcni-flannel v0.20.1 d66192101c64 6 months ago 59.4MB

rancher/mirrored-flannelcni-flannel-cni-plugin v1.1.0 fcecffc7ad4a 11 months ago 8.09MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

[root@slave1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

rancher/mirrored-flannelcni-flannel v0.20.1 d66192101c64 6 months ago 59.4MB

rancher/mirrored-flannelcni-flannel-cni-plugin v1.1.0 fcecffc7ad4a 11 months ago 8.09MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

[root@slave2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

rancher/mirrored-flannelcni-flannel v0.20.1 d66192101c64 6 months ago 59.4MB

rancher/mirrored-flannelcni-flannel-cni-plugin v1.1.0 fcecffc7ad4a 11 months ago 8.09MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

[root@master2 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

rancher/mirrored-flannelcni-flannel v0.20.1 d66192101c64 6 months ago 59.4MB

rancher/mirrored-flannelcni-flannel-cni-plugin v1.1.0 fcecffc7ad4a 11 months ago 8.09MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.21.0 4d217480042e 2 years ago 126MB

registry.aliyuncs.com/google_containers/kube-proxy v1.21.0 38ddd85fe90e 2 years ago 122MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.21.0 62ad3129eca8 2 years ago 50.6MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.21.0 09708983cc37 2 years ago 120MB

registry.aliyuncs.com/google_containers/pause 3.4.1 0f8457a4c2ec 2 years ago 683kB

registry.aliyuncs.com/google_containers/coredns/coredns v1.8.0 296a6d5035e2 2 years ago 42.5MB

registry.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 2 years ago 253MB

Check the nodes:

# Step 58

# Run on the master node

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 24m v1.21.0

master2 Ready control-plane,master 10m v1.21.0

slave1 Ready <none> 13m v1.21.0

slave2 Ready <none> 13m v1.21.0

# Step 59

# Run on the master2 node

[root@master2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 24m v1.21.0

master2 Ready control-plane,master 11m v1.21.0

slave1 Ready <none> 13m v1.21.0

slave2 Ready <none> 13m v1.21.0

Check the pods:

# Step 60

# Run on the master node

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-4cs89 1/1 Running 0 9m51s

kube-flannel kube-flannel-ds-4ndpr 1/1 Running 0 9m51s

kube-flannel kube-flannel-ds-64n7z 1/1 Running 0 9m51s

kube-flannel kube-flannel-ds-b7vb9 1/1 Running 0 9m51s

kube-system coredns-545d6fc579-lhm8r 1/1 Running 0 25m

kube-system coredns-545d6fc579-zx75n 1/1 Running 0 25m

kube-system etcd-master 1/1 Running 0 25m

kube-system etcd-master2 1/1 Running 0 12m

kube-system kube-apiserver-master 1/1 Running 0 25m

kube-system kube-apiserver-master2 1/1 Running 0 12m

kube-system kube-controller-manager-master 1/1 Running 0 25m

kube-system kube-controller-manager-master2 1/1 Running 0 12m

kube-system kube-proxy-2c2t9 1/1 Running 0 12m

kube-system kube-proxy-bcxzm 1/1 Running 0 14m

kube-system kube-proxy-n79tj 1/1 Running 0 25m

kube-system kube-proxy-wht8z 1/1 Running 0 14m

kube-system kube-scheduler-master 1/1 Running 0 25m

kube-system kube-scheduler-master2 1/1 Running 0 12m

# Step 61

# Run on the master2 node

[root@master2 ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-4cs89 1/1 Running 0 10m

kube-flannel kube-flannel-ds-4ndpr 1/1 Running 0 10m

kube-flannel kube-flannel-ds-64n7z 1/1 Running 0 10m

kube-flannel kube-flannel-ds-b7vb9 1/1 Running 0 10m

kube-system coredns-545d6fc579-lhm8r 1/1 Running 0 25m

kube-system coredns-545d6fc579-zx75n 1/1 Running 0 25m

kube-system etcd-master 1/1 Running 0 25m

kube-system etcd-master2 1/1 Running 0 12m

kube-system kube-apiserver-master 1/1 Running 0 25m

kube-system kube-apiserver-master2 1/1 Running 0 12m

kube-system kube-controller-manager-master 1/1 Running 0 25m

kube-system kube-controller-manager-master2 1/1 Running 0 12m

kube-system kube-proxy-2c2t9 1/1 Running 0 12m

kube-system kube-proxy-bcxzm 1/1 Running 0 14m

kube-system kube-proxy-n79tj 1/1 Running 0 25m

kube-system kube-proxy-wht8z 1/1 Running 0 14m

kube-system kube-scheduler-master 1/1 Running 0 25m

kube-system kube-scheduler-master2 1/1 Running 0 12m

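At this point the Kubernetes cluster has been set up with kubeadm. If the installation fails, run kubeadm reset to restore the host to its original state, then run kubeadm init again to reinstall.

Kubernetes cluster installation directory: /etc/kubernetes/

Configuration directory for the cluster components: /etc/kubernetes/manifests/

Note: from here on, all YAML files are applied on the master node only.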

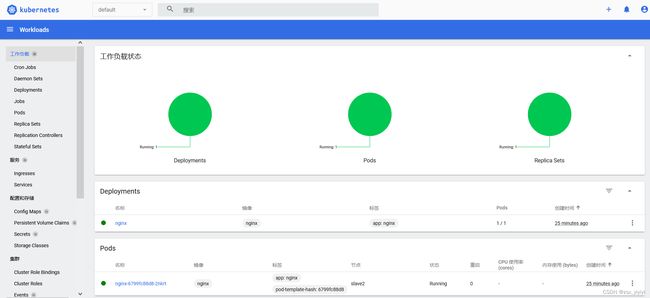

8. Cluster verification

Create a pod in the Kubernetes cluster and verify that it runs correctly.

# Step 62

# Run on the master node

# Install nginx

# Create an nginx deployment

[root@master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

# Step 63

# Run on the master node

# Expose the deployment outside the cluster through a NodePort service

[root@master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

# Step 64

# Run on the master node

[root@master ~]# kubectl get pods,svc

NAME READY STATUS RESTARTS AGE

pod/nginx-6799fc88d8-2nkrt 1/1 Running 0 74s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 37m

service/nginx NodePort 10.101.235.244 <none> 80:31104/TCP 24s

# Step 65

# Run on the master node

# Send a curl request to the NodePort on every node

[root@master ~]# curl http://192.168.164.200:31104/

[root@master ~]# curl http://192.168.164.201:31104/

[root@master ~]# curl http://192.168.164.202:31104/

[root@master ~]# curl http://192.168.164.203:31104/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>
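The four curl requests above can be wrapped in a loop that prints only the HTTP status for each node. This is a minimal sketch; the function name check_nodeport is hypothetical, and the IPs and port in the usage line are the ones used in this article.

```shell
#!/bin/sh
# Request the NodePort on every node and print "ip:port -> HTTP status".
check_nodeport() {
    port=$1
    shift
    for ip in "$@"; do
        code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "http://$ip:$port/")
        echo "$ip:$port -> $code"
    done
}

# Usage with the node IPs and NodePort from this article:
#   check_nodeport 31104 192.168.164.200 192.168.164.201 192.168.164.202 192.168.164.203
```

A status of 200 on every node indicates that kube-proxy forwards the NodePort on all of them, not only on the node running the pod.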

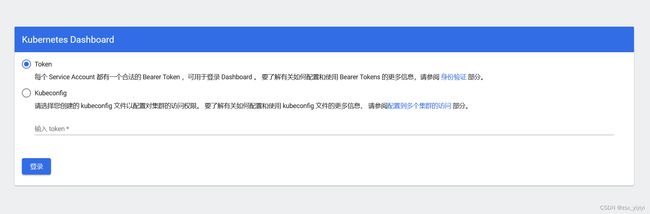

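9. Deploying the Dashboard

Dashboard is the official web UI for basic management of K8s resources.

For the compatibility between k8s and Dashboard versions, see: https://github.com/kubernetes/dashboard/releases

# Step 66

# Run on the master node

# Download the YAML manifest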

[root@master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

By default the Dashboard is reachable only from inside the cluster. Change its Service to type NodePort to expose it externally:

# Step 67

# Run on the master node

# Edit recommended.yaml and add "type: NodePort" to the kubernetes-dashboard Service

[root@master ~]# vim recommended.yaml

---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort
---

Apply it:

# Step 68

# Run on the master node

[root@master ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Check the installation:

# Step 69

# Run on the master node

[root@master ~]# kubectl get pods,svc -n kubernetes-dashboard -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/dashboard-metrics-scraper-c45b7869d-sdbbf 1/1 Running 0 3m5s 10.244.2.3 slave2 <none> <none>

pod/kubernetes-dashboard-576cb95f94-wdtt2 1/1 Running 0 3m5s 10.244.1.2 slave1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/dashboard-metrics-scraper ClusterIP 10.105.20.44 <none> 8000/TCP 3m5s k8s-app=dashboard-metrics-scraper

service/kubernetes-dashboard NodePort 10.107.222.58 <none> 443:31107/TCP 3m5s k8s-app=kubernetes-dashboard

Create a service account and bind it to the built-in cluster-admin cluster role:

# Step 70

# Run on the master node

# Create the service account

[root@master ~]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

# Grant it the cluster-admin role

[root@master ~]# kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-admin created

# Get the service account token

[root@master ~]# kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Name: dashboard-admin-token-9f7cw

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: dashboard-admin

kubernetes.io/service-account.uid: a2c90dac-8b3f-4fdc-a6e0-825ffed44c0b

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1066 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6ImtQd2tRUGtpYWtUUjJDSmhqRzRJQVVLNjEyUHdpUm50Znp6RUNfd3JGUTAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tOWY3Y3ciLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYTJjOTBkYWMtOGIzZi00ZmRjLWE2ZTAtODI1ZmZlZDQ0YzBiIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.qLThwD2yrlXr68ypz6hgx8BYDjFxZuJXRs8bRSpy5rQ82mMn64U8lss2QY6LtH-VGSbg0hL8RRWVoRdBechPSIBz7aEoKyW-qol_yYCzTkSh7h0BSJUhJ3_oBpUED0t9iWf7RZ1aWeROPAP4-3y5n4TmSTJB-AeZilhVcHfgJgkVS-yP5V0vMUGje__b-qLuqmznebdfSZudO03ZYUButiJSfK782feekRNmBsr-UlpMgbnDNWybCFxuRpSMC8ieXAm8IGjzA1DKrtggUHVop4T44imp350teU6866rTDDVUqm40QcNZX7Sg4tBSTGi1B5GuAsrkuptzwn3H39mphw
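When only the token string is needed for the login page, it can be printed on its own instead of being copied out of the describe output. This is a minimal sketch under one assumption: the cluster is older than Kubernetes 1.24 (as the 1.21.0 cluster here is), where a token Secret is created automatically for each service account. The function name get_sa_token is hypothetical.

```shell
#!/bin/sh
# Print the decoded token of a service account's auto-generated Secret.
# Assumes Kubernetes < 1.24, where .secrets[0] exists on the service account.
get_sa_token() {
    ns=$1
    sa=$2
    secret=$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}')
    kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}

# Usage on the master node:
#   get_sa_token kube-system dashboard-admin
```

The token grants cluster-admin rights, so it should be treated like a password and kept out of shared logs.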

Access URL: https://192.168.164.201:31107/

# Step 71

# Run on the master node

# Remove the Dashboard

# List the Dashboard pods

kubectl get pods --all-namespaces | grep "dashboard"

# Delete the deployments (this also removes their pods)

kubectl delete deployment kubernetes-dashboard --namespace=kubernetes-dashboard

kubectl delete deployment dashboard-metrics-scraper --namespace=kubernetes-dashboard

# List the services

kubectl get service -A

# Delete the services

kubectl delete service kubernetes-dashboard --namespace=kubernetes-dashboard

kubectl delete service dashboard-metrics-scraper --namespace=kubernetes-dashboard

# Delete the service account and secrets

kubectl delete sa kubernetes-dashboard --namespace=kubernetes-dashboard

kubectl delete secret kubernetes-dashboard-certs --namespace=kubernetes-dashboard

kubectl delete secret kubernetes-dashboard-key-holder --namespace=kubernetes-dashboard

10. Uninstalling the k8s environment

# Reset the node first, while kubeadm is still installed

sudo kubeadm reset -f

sudo yum -y remove kubelet kubeadm kubectl

sudo rm -rvf $HOME/.kube

sudo rm -rvf /etc/kubernetes/

sudo rm -rvf /etc/systemd/system/kubelet.service.d

sudo rm -rvf /etc/systemd/system/kubelet.service

sudo rm -rvf /usr/bin/kube*

sudo rm -rvf /etc/cni

sudo rm -rvf /opt/cni

sudo rm -rvf /var/lib/etcd

sudo rm -rvf /var/etcd

11. Troubleshooting installation errors

11.1 Error 1

The calico-node pods report the following errors:

- Liveness probe failed: calico/node is not ready: bird/confd is not live: exit status 1

- Felix is not live: Get "http://localhost:9099/liveness": dial tcp [::1]:9099: connect: connection refused

- Readiness probe failed: calico/node is not ready: BIRD is not ready: Failed to stat() nodename file: stat /var/lib/calico/nodename: no such file or directory

calico-kube-controllers reports the following errors:

- Readiness probe errored: rpc error: code = Unknown desc = container not running

- Readiness probe failed: Failed to read status file /status/status.json: unexpected end of JSON input

Fix: run the following script, which rewrites the etcd settings in calico.yaml:

#!/bin/bash

# Use the IP of the master node

ETCD_ENDPOINTS="https://192.168.164.200:2379"

sed -i "s#.*etcd_endpoints:.*# etcd_endpoints: \"${ETCD_ENDPOINTS}\"#g" calico.yaml

sed -i "s#__ETCD_ENDPOINTS__#${ETCD_ENDPOINTS}#g" calico.yaml

ETCD_CA=`cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n'`

ETCD_CERT=`cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n'`

ETCD_KEY=`cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n'`

sed -i "s#.*etcd-ca:.*# etcd-ca: ${ETCD_CA}#g" calico.yaml

sed -i "s#.*etcd-cert:.*# etcd-cert: ${ETCD_CERT}#g" calico.yaml

sed -i "s#.*etcd-key:.*# etcd-key: ${ETCD_KEY}#g" calico.yaml

sed -i 's#.*etcd_ca:.*# etcd_ca: "/calico-secrets/etcd-ca"#g' calico.yaml

sed -i 's#.*etcd_cert:.*# etcd_cert: "/calico-secrets/etcd-cert"#g' calico.yaml

sed -i 's#.*etcd_key:.*# etcd_key: "/calico-secrets/etcd-key"#g' calico.yaml

sed -i "s#__ETCD_CA_CERT_FILE__#/etc/kubernetes/pki/etcd/ca.crt#g" calico.yaml

sed -i "s#__ETCD_CERT_FILE__#/etc/kubernetes/pki/etcd/server.crt#g" calico.yaml

sed -i "s#__ETCD_KEY_FILE__#/etc/kubernetes/pki/etcd/server.key#g" calico.yaml

sed -i "s#__KUBECONFIG_FILEPATH__#/etc/cni/net.d/calico-kubeconfig#g" calico.yaml

11.2 Error 2

coredns reports the following error:

Warning FailedCreatePodSandBox 14s (x4 over 17s) kubelet, k8s-work2 (combined from similar

events): Failed to create pod sandbox: rpc error: code = Unknown desc = failed to set up

sandbox container "266213ee3ba95ea42c067702990b81f6b5ee1857c6bdee6d247464dfb0a85dc7"

network for pod "coredns-6d56c8448f-c6x7h": networkPlugin cni failed to set up pod

"coredns-6d56c8448f-c6x7h_kube-system" network: could not initialize etcdv3 client: open

/etc/kubernetes/pki/etcd/server.crt: no such file or directory

Fix:

# Set up SSH key trust, then copy the etcd TLS certificates from the master node to the worker nodes

ssh-keygen -t rsa

ssh-copy-id root@slave1

ssh-copy-id root@slave2

scp -r /etc/kubernetes/pki/etcd root@slave1:/etc/kubernetes/pki/etcd

scp -r /etc/kubernetes/pki/etcd root@slave2:/etc/kubernetes/pki/etcd

11.3 Error 3

calico-kube-controllers reports the following error:

Failed to start error=failed to build Calico client: could not initialize etcdv3 client:

open /calico-secrets/etcd-cert: permission denied

Fix: in calico.yaml, change defaultMode: 0400 to defaultMode: 0040:

volumes:
  # Mount in the etcd TLS secrets with mode 400.
  # See https://kubernetes.io/docs/concepts/configuration/secret/
  - name: etcd-certs
    secret:
      secretName: calico-etcd-secrets
      # defaultMode: 0400
      defaultMode: 0040

If any of these fixes does not take effect, restart docker and kubelet.

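12. Deploying an Nginx load balancer for high availability

Nginx is a mainstream web server and reverse proxy; here its layer-4 (stream) proxying is used to load-balance the apiservers.

12.1 Checking the existing cluster

# Step 72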

[root@master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane,master 6d v1.21.0

master2 Ready control-plane,master 6d v1.21.0

slave1 Ready <none> 6d v1.21.0

slave2 Ready <none> 6d v1.21.0

# 第73步

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-4cs89 1/1 Running 1 6d

kube-flannel kube-flannel-ds-4ndpr 1/1 Running 2 6d

kube-flannel kube-flannel-ds-64n7z 1/1 Running 1 6d

kube-flannel kube-flannel-ds-b7vb9 1/1 Running 2 6d

kube-system coredns-545d6fc579-5nqkk 1/1 Running 0 13m

kube-system coredns-545d6fc579-pmzv2 1/1 Running 0 14m

kube-system etcd-master 1/1 Running 1 6d

kube-system etcd-master2 1/1 Running 1 6d

kube-system kube-apiserver-master 1/1 Running 1 6d

kube-system kube-apiserver-master2 1/1 Running 1 6d

kube-system kube-controller-manager-master 1/1 Running 2 6d

kube-system kube-controller-manager-master2 1/1 Running 1 6d

kube-system kube-proxy-2c2t9 1/1 Running 1 6d

kube-system kube-proxy-bcxzm 1/1 Running 2 6d

kube-system kube-proxy-n79tj 1/1 Running 1 6d

kube-system kube-proxy-wht8z 1/1 Running 2 6d

kube-system kube-scheduler-master 1/1 Running 2 6d

kube-system kube-scheduler-master2 1/1 Running 1 6d

The /etc/hosts entries for the nodes:

# Step 74

[root@master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.164.200 master

192.168.164.200 cluster-endpoint

192.168.164.201 slave1

192.168.164.202 slave2

192.168.164.203 master2

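12.2 Installing nginx on the master node

nginx is installed here as a docker container.

The following steps only need to be performed on the Nginx node. This article installs Nginx on the master node; in a real environment nginx would usually not run on the same node as k8s.

# Step 75

# Pull the image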

[root@master ~]# docker pull nginx:1.17.2

# Step 76

# Create the configuration file

[root@master ~]# mkdir -p /data/nginx && cd /data/nginx

[root@master nginx]# vim nginx-lb.conf

user nginx;
worker_processes 2; # adjust to the number of CPU cores
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 8192;
}

stream {
    upstream apiserver {
        server 192.168.164.200:6443 weight=5 max_fails=3 fail_timeout=30s; # master apiserver IP and port
        server 192.168.164.203:6443 weight=5 max_fails=3 fail_timeout=30s; # master2 apiserver IP and port
    }

    server {
        listen 8443; # listening port
        proxy_pass apiserver;
    }
}

# Step 77

# Start the container

[root@master ~]# docker run -d --restart=unless-stopped -p 8443:8443 -v /data/nginx/nginx-lb.conf:/etc/nginx/nginx.conf --name nginx-lb --hostname nginx-lb nginx:1.17.2

973d4442ff36a8de08c11b6bf9670536eabccf13b99c1d4e54b2e1c14b2cbc94

# Step 78

# Check that the container is running

[root@master ~]# docker ps | grep nginx-lb

973d4442ff36 nginx:1.17.2 "nginx -g 'daemon of…" 39 seconds ago Up 38 seconds 80/tcp, 0.0.0.0:8443->8443/tcp, :::8443->8443/tcp nginx-lb

12.3 Testing

# Step 79

[root@master ~]# curl -k https://192.168.164.200:8443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}

# 第80步

[root@slave1 ~]# curl -k https://192.168.164.200:8443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}

# 第81步

[root@slave2 ~]# curl -k https://192.168.164.200:8443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}

# 第82步

[root@master2 ~]# curl -k https://192.168.164.200:8443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}

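12.4 Another way to configure high availability

When installing with kubeadm, two parameters can be specified at initialization:

# Port the apiserver binds to on each master, default 6443

--apiserver-bind-port port

# A stable IP address or DNS name (optionally with a port) for the control plane, i.e. a highly available VIP or domain name

--control-plane-endpoint ip[:port]

# For example, with the Nginx load balancer from 12.2 listening on 192.168.164.200:8443

--apiserver-bind-port 6443 # port each apiserver listens on (the upstream port in nginx-lb.conf)

--control-plane-endpoint 192.168.164.200:8443 # IP and port of the host running Nginx

The drawback of this approach is that once the single nginx service becomes unavailable, the whole k8s cluster becomes unavailable.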

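13. Deploying an Nginx + Keepalived highly available load balancer

- Nginx is a mainstream web server and reverse proxy; here its layer-4 (stream) proxying is used to load-balance the apiservers.

- Keepalived is a mainstream high-availability tool that implements active/standby failover by binding a VIP. It decides whether to fail over (move the VIP) based on the state of Nginx: for example, when the primary Nginx node goes down, the VIP is automatically bound on the backup Nginx node, so the VIP stays available and Nginx is highly available.

- Public clouds generally do not support keepalived; there you can use the provider's load balancer product to balance directly across the kube-apiservers on the masters.

The following steps are performed on both master nodes.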

13.1 Installing the packages (master/master2)

# Step 83

yum install epel-release -y

yum install nginx keepalived -y

13.2 Nginx configuration file (identical on master and master2; the two masters act as primary and backup)

# Step 84

cat > /etc/nginx/nginx.conf << "EOF"
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log;
pid /run/nginx.pid;

include /usr/share/nginx/modules/*.conf;

events {
    worker_connections 1024;
}

# Layer-4 load balancing across the apiservers of the two masters
stream {
    log_format main '$remote_addr $upstream_addr - [$time_local] $status $upstream_bytes_sent';
    access_log /var/log/nginx/k8s-access.log main;

    upstream k8s-apiserver {
        server 192.168.164.200:6443; # master APISERVER IP:PORT
        server 192.168.164.203:6443; # master2 APISERVER IP:PORT
    }

    server {
        listen 16443; # nginx shares the host with the apiserver, so this port must not be 6443 or it will conflict
        proxy_pass k8s-apiserver;
    }
}

http {
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;

    sendfile on;
    tcp_nopush on;
    tcp_nodelay on;
    keepalive_timeout 65;
    types_hash_max_size 2048;

    include /etc/nginx/mime.types;
    default_type application/octet-stream;

    server {
        listen 80 default_server;
        server_name _;

        location / {
        }
    }
}
EOF

13.3 keepalived configuration file (shown for master; on master2, the backup, set state BACKUP and priority 90)

# Step 85

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

notification_email {

[email protected]

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id NGINX_MASTER

}

vrrp_script check_nginx {
    script "/etc/keepalived/check_nginx.sh"
}

vrrp_instance VI_1 {
    state MASTER
    interface ens33 # change to the actual network interface name
    virtual_router_id 51 # VRRP router ID, unique per VRRP instance
    priority 100 # priority; set 90 on the backup server
    advert_int 1 # VRRP advertisement (heartbeat) interval, default 1 second
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IP
    virtual_ipaddress {
        192.168.164.205/24
    }
    track_script {
        check_nginx
    }
}

EOF

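- vrrp_script: the script that checks whether nginx is working (failover is decided from the nginx state)

- virtual_ipaddress: the virtual IP (VIP)

Prepare the Nginx health-check script referenced in the configuration above:

# Step 86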

cat > /etc/keepalived/check_nginx.sh << "EOF"

#!/bin/bash

count=$(ss -antp |grep 16443 |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

exit 1

else

exit 0

fi

EOF

# Step 87

chmod +x /etc/keepalived/check_nginx.sh

Note: keepalived decides whether to fail over from the script's exit status (0 means healthy, non-zero means unhealthy).
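The check above matches any socket line that contains the string 16443, including established connections. A stricter variant can look only at listening sockets and at the local port column. This is a minimal sketch, not the script used in this article; the function name listening_on_port is hypothetical, and it assumes the usual ss -ltn column layout in which the fourth column is the local address and port.

```shell
#!/bin/sh
# Succeeds if `ss -ltn` output on stdin shows a listener on the given port.
listening_on_port() {
    awk -v port="$1" '
        NR > 1 && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}

# Usage inside a check script (16443 is the nginx stream port used above):
#   ss -ltn | listening_on_port 16443 || exit 1
```

Anchoring the match on ":port" at the end of the column keeps a listener on, say, 6443 from being mistaken for 16443 or the reverse.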

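13.4 Adding the stream module to Nginx (performed on master2)

13.4.1 Checking the modules of the installed Nginx

If nginx was already built with --with-stream, the remaining steps in 13.4 can be skipped.

# Step 88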

[root@k8s-master2 nginx-1.20.1]# nginx -V

nginx version: nginx/1.20.1

built by gcc 4.8.5 20150623 (Red Hat 4.8.5-44) (GCC)

configure arguments: --prefix=/usr/share/nginx --sbin-path=/usr/sbin/nginx --modules-path=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf --with-stream

# --with-stream in the configure arguments means the stream module is already built in
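The same check can be scripted so that the rebuild is skipped when the module is already present. This is a minimal sketch; the function name has_stream_module is hypothetical. It also accepts --with-stream=dynamic, on the assumption that a dynamically built stream module is acceptable, and it deliberately does not match related flags such as --with-stream_ssl_module.

```shell
#!/bin/sh
# Succeeds if `nginx -V` output on stdin lists --with-stream or --with-stream=dynamic.
has_stream_module() {
    tr ' ' '\n' | grep -q -x -e '--with-stream' -e '--with-stream=dynamic'
}

# Usage (nginx -V writes to stderr, hence the redirect):
#   nginx -V 2>&1 | has_stream_module && echo "stream module present"
```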

13.4.2 Downloading the same nginx version

Download index: http://nginx.org/download/

The version used here: http://nginx.org/download/nginx-1.20.1.tar.gz

13.4.3 Backing up the original Nginx files

# Step 89

mv /usr/sbin/nginx /usr/sbin/nginx.bak

cp -r /etc/nginx{,.bak}

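13.4.4 Recompiling Nginx

# Reuse the configure arguments found in 13.4.1 and add the new module: --with-stream

# Check which modules are available, for example the limit module and the stream module

# A "--without-http_limit_conn_module  disable ..." entry means the module is built by default and does not need to be added

./configure --help | grep limit

# A "--with-stream  enable ..." entry means the module is not built by default and must be added explicitly

./configure --help | grep stream

Prepare the build environment:

# Step 90

yum -y install libxml2 libxml2-devel libxslt-devel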

yum -y install gd-devel

yum -y install perl-devel perl-ExtUtils-Embed

yum -y install GeoIP GeoIP-devel GeoIP-data

yum -y install pcre-devel

yum -y install openssl openssl-devel

yum -y install gcc make

Compile:

# Step 91

tar -xf nginx-1.20.1.tar.gz

cd nginx-1.20.1/

./configure --prefix=/usr/share/nginx --sbin-path=/usr/sbin/nginx --modules-path=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf --with-stream

make

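Note: after make finishes, do not run make install, so that the currently installed nginx is not disturbed. The build produces an nginx binary in the objs directory; verify it first:

# Step 92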

[root@k8s-master2 nginx-1.20.1]# ./objs/nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

13.4.5 Replace the nginx binary on master1/master2

# Step 93

cp ./objs/nginx /usr/sbin/

scp objs/nginx [email protected]:/usr/sbin/

13.4.6 Edit the nginx service file (master and master2)

# Step 94

vim /usr/lib/systemd/system/nginx.service

[Unit]

Description=The nginx HTTP and reverse proxy server

After=network.target remote-fs.target nss-lookup.target

[Service]

Type=forking

PIDFile=/run/nginx.pid

ExecStartPre=/usr/bin/rm -rf /run/nginx.pid

ExecStartPre=/usr/sbin/nginx -t

ExecStart=/usr/sbin/nginx

ExecStop=/usr/sbin/nginx -s stop

ExecReload=/usr/sbin/nginx -s reload

PrivateTmp=true

[Install]

WantedBy=multi-user.target

13.5 Start the services and enable them at boot (master1/master2)

# Step 95

systemctl daemon-reload

systemctl start nginx keepalived

systemctl enable nginx keepalived

systemctl status nginx keepalived

13.6 Check keepalived status

# Step 96

[root@master ~]# ip addr | grep inet

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

inet 192.168.164.200/24 brd 192.168.164.255 scope global noprefixroute ens33

# the next line shows the VIP bound on this node

inet 192.168.164.205/24 scope global secondary ens33

inet6 2409:8903:f02:458e:ddd0:c1de:2cb0:3640/64 scope global noprefixroute dynamic

inet6 fe80::9bc0:3f5:d3cd:a77b/64 scope link noprefixroute

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

inet6 fe80::42:5bff:fe2b:4fe6/64 scope link

inet6 fe80::98a8:21ff:fe84:fcae/64 scope link

[root@master2 nginx-1.20.1]# ip addr | grep inet

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

inet 192.168.164.203/24 brd 192.168.164.255 scope global noprefixroute ens33

inet6 fe80::fcc5:d0ea:9971:9b17/64 scope link tentative noprefixroute dadfailed

inet6 fe80::9bc0:3f5:d3cd:a77b/64 scope link tentative noprefixroute dadfailed

inet6 fe80::bcf0:21da:7eb0:a297/64 scope link tentative noprefixroute dadfailed

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

inet 10.244.3.1/24 brd 10.244.3.255 scope global cni0

The virtual IP 192.168.164.205 is bound to the ens33 interface on master, which shows that keepalived is working correctly.
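Spotting the VIP by eye works for a one-off check; for repeated checks the same test could be scripted. The sketch below reads `ip addr`-style text from standard input and reports whether a given address is bound. The function name holds_vip is an assumption for this example.

```shell
# Succeed if the `ip addr` text on stdin has an "inet <vip>/<prefix>" entry.
holds_vip() {
  vip="$1"
  awk -v want="$vip" '
    $1 == "inet" {
      split($2, parts, "/")   # "192.168.164.205/24" -> address, prefix length
      if (parts[1] == want) found = 1
    }
    END { exit(found ? 0 : 1) }
  '
}

# Sample input taken from the output on master shown above.
sample='inet 127.0.0.1/8 scope host lo
inet 192.168.164.200/24 brd 192.168.164.255 scope global noprefixroute ens33
inet 192.168.164.205/24 scope global secondary ens33'

if printf '%s\n' "$sample" | holds_vip 192.168.164.205; then
  echo "VIP is bound on this node"
else
  echo "VIP is not bound on this node"
fi
```

On a real node the input would be piped in directly, for example `ip addr | holds_vip 192.168.164.205`.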

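13.7 Nginx + keepalived high-availability test

Stop Nginx on the primary node and check whether the VIP moves to the backup node. On the Nginx primary, stop Nginx (for example with pkill nginx, or with systemctl stop nginx as in the transcript below); on the Nginx backup, run ip addr to confirm that the VIP has been bound.

# Step 97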

[root@master ~]# systemctl stop nginx

[root@master ~]# ip addr | grep inet

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

inet 192.168.164.200/24 brd 192.168.164.255 scope global noprefixroute ens33

inet6 2409:8903:f02:458e:ddd0:c1de:2cb0:3640/64 scope global noprefixroute dynamic

inet6 fe80::9bc0:3f5:d3cd:a77b/64 scope link noprefixroute

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

inet6 fe80::42:5bff:fe2b:4fe6/64 scope link

inet6 fe80::98a8:21ff:fe84:fcae/64 scope link

[root@master2 nginx-1.20.1]# ip addr | grep inet

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

inet 192.168.164.203/24 brd 192.168.164.255 scope global noprefixroute ens33

# the next line shows the VIP now bound on the backup node

inet 192.168.164.205/24 scope global secondary ens33

inet6 fe80::fcc5:d0ea:9971:9b17/64 scope link tentative noprefixroute dadfailed

inet6 fe80::9bc0:3f5:d3cd:a77b/64 scope link tentative noprefixroute dadfailed

inet6 fe80::bcf0:21da:7eb0:a297/64 scope link tentative noprefixroute dadfailed

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

inet 10.244.3.1/24 brd 10.244.3.255 scope global cni0

13.8 Test access through the load balancer

From any node in the K8s cluster, use curl to query the K8s version through the VIP:

# Step 98

[root@master ~]# curl -k https://192.168.164.205:16443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}

[root@slave1 ~]# curl -k https://192.168.164.205:16443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}

[root@slave2 ~]# curl -k https://192.168.164.205:16443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}

[root@master2 ~]# curl -k https://192.168.164.205:16443/version

{

"major": "1",

"minor": "21",

"gitVersion": "v1.21.0",

"gitCommit": "cb303e613a121a29364f75cc67d3d580833a7479",

"gitTreeState": "clean",

"buildDate": "2021-04-08T16:25:06Z",

"goVersion": "go1.16.1",

"compiler": "gc",

"platform": "linux/amd64"

}
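If this check is repeated often, the version string can be pulled out of the JSON instead of reading the whole response. This is a minimal sketch using sed on the response shape shown above; the function name git_version is hypothetical, and a JSON-aware tool would be more robust where one is available.

```shell
# Print the value of "gitVersion" from a /version response on stdin.
git_version() {
  sed -n 's/.*"gitVersion"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Sample input: an abbreviated copy of the response shown above.
response='{
  "major": "1",
  "minor": "21",
  "gitVersion": "v1.21.0",
  "platform": "linux/amd64"
}'

printf '%s\n' "$response" | git_version
```

Against the live endpoint, the input would come from something like `curl -sk https://192.168.164.205:16443/version | git_version`.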

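The K8s version information is returned correctly, which shows that the load balancer is set up properly. The request flows as curl -> vip(nginx) -> apiserver; the Nginx log also shows the apiserver IP each request was forwarded to:

# Step 99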

[root@master ~]# tailf /var/log/nginx/k8s-access.log

192.168.164.200 192.168.164.200:6443 - [15/May/2023:21:38:00 +0800] 200 425

192.168.164.201 192.168.164.200:6443 - [15/May/2023:21:38:17 +0800] 200 425

192.168.164.202 192.168.164.200:6443 - [15/May/2023:21:38:20 +0800] 200 425

192.168.164.203 192.168.164.203:6443 - [15/May/2023:21:38:22 +0800] 200 425

# Log on the backup node after failover

[root@master2 ~]# tailf /var/log/nginx/k8s-access.log

192.168.164.201 192.168.164.203:6443 - [15/May/2023:21:42:23 +0800] 200 425

192.168.164.201 192.168.164.203:6443 - [15/May/2023:21:42:33 +0800] 200 425

192.168.164.202 192.168.164.200:6443 - [15/May/2023:21:43:38 +0800] 200 425

192.168.164.203 192.168.164.203:6443 - [15/May/2023:21:43:40 +0800] 200 425
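To see how requests are spread across the apiservers, the log lines can be tallied per upstream. The sketch below assumes, as the sample lines above suggest, that the second whitespace-separated field is the upstream address; the function name count_by_upstream is hypothetical.

```shell
# Count requests per upstream (second field) in k8s-access.log-style lines.
count_by_upstream() {
  awk '{ hits[$2]++ } END { for (u in hits) print hits[u], u }' | sort -k2
}

# Sample input: the four log lines recorded on master above.
sample='192.168.164.200 192.168.164.200:6443 - [15/May/2023:21:38:00 +0800] 200 425
192.168.164.201 192.168.164.200:6443 - [15/May/2023:21:38:17 +0800] 200 425
192.168.164.202 192.168.164.200:6443 - [15/May/2023:21:38:20 +0800] 200 425
192.168.164.203 192.168.164.203:6443 - [15/May/2023:21:38:22 +0800] 200 425'

printf '%s\n' "$sample" | count_by_upstream
```

On a real node the function would read the log file directly, for example `count_by_upstream < /var/log/nginx/k8s-access.log`.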

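13.9 Another way to configure high availability

# For a kubeadm-based installation

# Specify the following at initialization time

# apiserver port, default 6443

--apiserver-bind-port port

# A stable IP address or DNS name for the control plane, i.e. a highly available VIP or domain name

--control-plane-endpoint ip

# For example

--apiserver-bind-port 16443 # 16443 is the port used for access

--control-plane-endpoint 192.168.164.205 # 192.168.164.205 is the virtual IP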
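Put together, the two flags would appear on a single kubeadm init command line. The sketch below only prints the command rather than running it, so it is safe to try anywhere. The function name print_init_cmd is hypothetical, and any other kubeadm init flags used earlier in this guide would still need to be added.

```shell
# Print (do not run) a kubeadm init command for the given VIP and port.
print_init_cmd() {
  vip="$1"
  port="$2"
  printf 'kubeadm init --apiserver-bind-port %s --control-plane-endpoint %s\n' \
    "$port" "$vip"
}

print_init_cmd 192.168.164.205 16443
```

Printing first and reviewing the result is a cautious habit here, since kubeadm init changes the host as soon as it runs.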

13.10 Cluster status

# Step 100

[root@master ~]# kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-4cs89 1/1 Running 1 6d1h

kube-flannel kube-flannel-ds-4ndpr 1/1 Running 2 6d1h

kube-flannel kube-flannel-ds-64n7z 1/1 Running 1 6d1h

kube-flannel kube-flannel-ds-b7vb9 1/1 Running 2 6d1h

kube-system coredns-545d6fc579-5nqkk 1/1 Running 0 78m

kube-system coredns-545d6fc579-pmzv2 1/1 Running 0 79m

kube-system etcd-master 1/1 Running 1 6d2h

kube-system etcd-master2 1/1 Running 1 6d1h

kube-system kube-apiserver-master 1/1 Running 1 6d2h

kube-system kube-apiserver-master2 1/1 Running 1 6d1h

kube-system kube-controller-manager-master 1/1 Running 2 6d2h

kube-system kube-controller-manager-master2 1/1 Running 1 6d1h

kube-system kube-proxy-2c2t9 1/1 Running 1 6d1h

kube-system kube-proxy-bcxzm 1/1 Running 2 6d1h