Collecting nginx logs into ClickHouse

- Pipeline

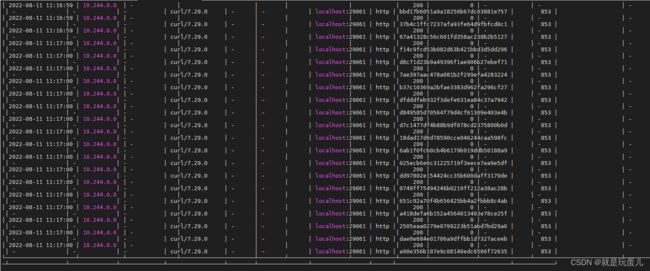

nginx logs (JSON) -> filebeat -> kafka -> clickhouse -> grafana

- Convert nginx logs to JSON

```nginx
# http {} context. escape=json (nginx 1.11.8+) escapes quotes and control characters
# in variable values the JSON way, so a User-Agent containing " does not break the line.
log_format json escape=json
    '{"access_time": "$time_iso8601", "remote_addr": "$remote_addr", '
    '"x_forward_for": "$http_x_forwarded_for", "method": "$request_method", '
    '"request_url_path": "$uri", "request_url": "$request_uri", '
    '"status": $status, "request_time": $request_time, "request_length": "$request_length", '
    '"upstream_host": "$upstream_http_host", "upstream_response_length": "$upstream_response_length", '
    '"upstream_response_time": "$upstream_response_time", "upstream_status": "$upstream_status", '
    '"http_referer": "$http_referer", "remote_user": "$remote_user", '
    '"http_user_agent": "$http_user_agent", "appkey": "$arg_appKey", '
    '"upstream_addr": "$upstream_addr", "http_host": "$http_host", "pro": "$scheme", '
    '"request_id": "$request_id", "bytes_sent": $bytes_sent}';

access_log /var/log/nginx/access.log json;
```
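You can check that nginx writes one valid JSON object per line, for example after `nginx -t && nginx -s reload`, by parsing each line before Filebeat ever sees it. The Python below is a minimal sketch. It assumes the log path configured above, and its function names are hypothetical. It also shows why `escape=json` matters. Under nginx's default escaping, a `"` inside a value such as the User-Agent is written as `\x22`. That is not a valid JSON escape, so the line fails to parse.

```python
import json
import sys

# Keys that the log_format above writes without quotes, so they must decode as JSON numbers.
NUMERIC_FIELDS = ("status", "request_time", "bytes_sent")


def parse_access_line(line: str) -> dict:
    """Parse one access-log line; raise ValueError if it is not a usable JSON record."""
    record = json.loads(line)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(record, dict):
        raise ValueError("log line is not a JSON object")
    for field in NUMERIC_FIELDS:
        value = record.get(field)
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError(f"field {field!r} is missing or not numeric")
    return record


def main(path: str = "/var/log/nginx/access.log") -> int:
    """Report every bad line in the log file; return 1 if any were found, else 0."""
    bad = 0
    with open(path, encoding="utf-8", errors="replace") as f:
        for lineno, line in enumerate(f, 1):
            if not line.strip():
                continue
            try:
                parse_access_line(line)
            except ValueError as exc:
                bad += 1
                print(f"line {lineno}: {exc}", file=sys.stderr)
    return 1 if bad else 0


# Only runs when a log path is passed on the command line.
if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

Run it with `python3 check_access_log.py /var/log/nginx/access.log`; the script name is only illustrative. It prints the number of each bad line and exits with status 1 if it found any.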

- Install filebeat with Docker

```shell
docker run --restart=always --name filebeat --user=root -d \
  -v /var/log/nginx/:/var/log/nginx/ \
  -v /root/docker/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml \
  elastic/filebeat:7.5.1
```

```yaml
# cat /root/docker/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/*.log
  json:
    keys_under_root: true
  # multiline:
  #   pattern: '^\['
  #   negate: true
  #   match: after
  #   max_lines: 500
  #   timeout: 1s
  # fields:
  #   logtopic: log-collector

output.kafka:
  enabled: true
  hosts: ['192.168.10.100:9092']
  # topic: '%{[fields.logtopic]}'
  topic: 'log-collector'
  partition.round_robin:
    reachable_only: false
  required_acks: 1
  compression: gzip
```
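`json.keys_under_root: true` is what makes `status`, `method` and the other nginx fields appear as top-level keys of each Kafka message. Without it, Filebeat nests the decoded object under a `json` key. The Python below is a rough model of the two event shapes, not Filebeat code, and the function is hypothetical. It deliberately simplifies the event. Real events also carry metadata such as `agent`, `ecs`, `host`, `input` and `log`, and only `@timestamp` is modelled here.

```python
import json


def build_event(raw_line: str, keys_under_root: bool, timestamp: str) -> dict:
    """Roughly model the event Filebeat publishes for one JSON log line.

    Simplified on purpose: the only Filebeat field modelled is @timestamp.
    """
    decoded = json.loads(raw_line)
    event = {"@timestamp": timestamp}
    if keys_under_root:
        # Decoded keys go to the top level; with json.overwrite_keys left at its
        # default (false), fields Filebeat already set win on a name clash.
        for key, value in decoded.items():
            event.setdefault(key, value)
    else:
        # Default behaviour: the decoded object is nested under a "json" key.
        event["json"] = decoded
    return event


line = '{"status": 200, "method": "GET"}'
print(json.dumps(build_event(line, True, "2020-06-01T04:00:00.000Z")))
# {"@timestamp": "2020-06-01T04:00:00.000Z", "status": 200, "method": "GET"}
print(json.dumps(build_event(line, False, "2020-06-01T04:00:00.000Z")))
# {"@timestamp": "2020-06-01T04:00:00.000Z", "json": {"status": 200, "method": "GET"}}
```

Only the flat shape lines up with a ClickHouse table whose column names are the nginx keys.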

- Install Kafka with Docker

```shell
docker pull wurstmeister/zookeeper
docker run -d --restart=always --log-driver json-file --log-opt max-size=100m --log-opt max-file=2 --name zookeeper -p 2181:2181 -v /etc/localtime:/etc/localtime wurstmeister/zookeeper

docker pull wurstmeister/kafka
docker run -d --restart=always --log-driver json-file --log-opt max-size=100m --log-opt max-file=2 \
  --name kafka -p 9092:9092 \
  -e KAFKA_BROKER_ID=0 \
  -e KAFKA_ZOOKEEPER_CONNECT=192.168.10.100:2181/kafka \
  -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.10.100:9092 \
  -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
  -v /etc/localtime:/etc/localtime wurstmeister/kafka
```

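```shell
# Enter the container
docker exec -it kafka bash

# Producer (the directory name depends on the Scala/Kafka versions bundled in the image)
cd /opt/kafka_2.12-2.5.0/bin/
./kafka-console-producer.sh --broker-list localhost:9092 --topic test

# Consumer (run in a second shell inside the container)
./kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --from-beginning
```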
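Once Filebeat is running, you can point the same console consumer at the real `log-collector` topic. Piping its output into a small checker shows whether every message carries the keys the ClickHouse tables expect. The script below is a minimal sketch. The script name `check_messages.py` and the `EXPECTED` set are assumptions: the set is copied from the nginx `log_format` keys and is not part of the original setup.

```python
import json
import sys

# Keys written by the nginx log_format above; keep this set in sync with your own config.
EXPECTED = {
    "access_time", "remote_addr", "x_forward_for", "method", "request_url_path",
    "request_url", "status", "request_time", "request_length", "upstream_host",
    "upstream_response_length", "upstream_response_time", "upstream_status",
    "http_referer", "remote_user", "http_user_agent", "appkey", "upstream_addr",
    "http_host", "pro", "request_id", "bytes_sent",
}


def missing_keys(message: str) -> set:
    """Return the expected keys that are absent from one Kafka message."""
    record = json.loads(message)
    if not isinstance(record, dict):
        raise ValueError("message is not a JSON object")
    return EXPECTED.difference(record)


def check_stream(stream) -> int:
    """Check one message per line; return the number of problem messages."""
    problems = 0
    for n, line in enumerate(stream, 1):
        line = line.strip()
        if not line:
            continue
        try:
            missing = missing_keys(line)
        except ValueError as exc:
            print(f"message {n}: not a JSON object ({exc})")
            problems += 1
            continue
        if missing:
            print(f"message {n}: missing {sorted(missing)}")
            problems += 1
    return problems


# Reads stdin only when called as `python3 check_messages.py -`.
if __name__ == "__main__" and sys.argv[1:] == ["-"]:
    sys.exit(1 if check_stream(sys.stdin) else 0)
```

The wurstmeister image may not ship Python, so run the pipe from the host: `docker exec kafka /opt/kafka_2.12-2.5.0/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic log-collector --from-beginning --max-messages 100 | python3 check_messages.py -`.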

- Install ClickHouse

Official install guide: https://clickhouse.com/docs/zh/getting-started/install

```shell
sudo yum install -y yum-utils
sudo yum-config-manager --add-repo https://packages.clickhouse.com/rpm/clickhouse.repo
sudo yum install -y clickhouse-server clickhouse-client
sudo /etc/init.d/clickhouse-server start
```
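Once the service is started, ClickHouse's HTTP interface (default port 8123) answers `GET /ping` with `Ok.`, which makes a convenient health check before creating any tables. The Python below is a minimal standard-library sketch. It assumes the server runs locally on the default port, and the function name is hypothetical.

```python
import urllib.request


def clickhouse_ping(host: str = "127.0.0.1", port: int = 8123, timeout: float = 3.0) -> bool:
    """Return True if the ClickHouse HTTP interface answers GET /ping with "Ok."."""
    url = f"http://{host}:{port}/ping"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200 and resp.read().strip() == b"Ok."
    except OSError:
        # Connection refused, timeout or HTTP error (urllib.error.URLError is an OSError).
        return False


print("ClickHouse is up" if clickhouse_ping() else "ClickHouse is not answering on :8123")
```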

4.1 Create the Kafka engine table:

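```shell
# Open clickhouse-client. stream_like_engine_allow_direct_select allows SELECT directly
# from the Kafka table for debugging; each message can be read only once, so rows read
# this way do not reach the materialized view.
clickhouse-client --stream_like_engine_allow_direct_select 1 -udefault
```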

```sql
-- Create the database
CREATE DATABASE test1;
USE test1;
```

```sql
-- Kafka engine table: reads the log-collector topic. Column names must match the JSON keys
-- written by nginx (method, request_url_path, request_url) and the storage table below.
SET date_time_input_format = 'best_effort';

CREATE TABLE nginx_log (
    access_time DateTime, remote_addr String, x_forward_for String, http_x_forwarded_for String,
    method String, request_url_path String, request_url String, status UInt64, request_time Float32,
    upstream_host String, upstream_response_length String, upstream_response_time String,
    upstream_status String, http_referer String, remote_user String, http_user_agent String,
    appkey String, upstream_addr String, http_host String, pro String, request_id String,
    bytes_sent UInt64
) ENGINE = Kafka SETTINGS
    kafka_broker_list = '192.168.10.100:9092',
    kafka_topic_list = 'log-collector',
    kafka_group_name = 'sre-clickhouse',
    kafka_format = 'JSONEachRow',
    kafka_row_delimiter = '\n',
    kafka_num_consumers = 1,
    date_time_input_format = 'best_effort',
    -- Filebeat adds its own keys (@timestamp, agent, host, ...); ignore them
    input_format_skip_unknown_fields = 1;
```
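For each Kafka message, the Kafka table parses one JSON object and maps its keys onto columns by name. Unknown keys, such as Filebeat's `@timestamp` or `agent`, are dropped when `input_format_skip_unknown_fields` is enabled. Columns with no matching key get their type's default (`''`, `0`, or the epoch for `DateTime`). `best_effort` lets a value like `2020-06-01T12:00:00+08:00` fill a `DateTime` column. This matching is also why a column named, say, `request_method` never finds the `method` key and stays empty. The Python below is a rough model of that mapping for a few columns, not ClickHouse's parser. The column subset and the conversion to UTC are assumptions.

```python
import json
from datetime import datetime, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)  # default value of a ClickHouse DateTime

# A subset of the Kafka table's columns: name -> (converter, default when the key is absent).
COLUMNS = {
    "access_time": (lambda v: datetime.fromisoformat(v).astimezone(timezone.utc), EPOCH),
    "remote_addr": (str, ""),
    "method": (str, ""),
    "request_url": (str, ""),
    "status": (int, 0),
    "request_time": (float, 0.0),
}


def to_row(message: str, skip_unknown: bool = True) -> dict:
    """Map one JSON message onto COLUMNS by key name, roughly like JSONEachRow."""
    record = json.loads(message)
    unknown = [key for key in record if key not in COLUMNS]
    if unknown and not skip_unknown:
        # Analogue of leaving input_format_skip_unknown_fields at 0.
        raise ValueError(f"unknown fields: {unknown}")
    # Known keys are converted; columns without a matching key get their default.
    return {name: convert(record[name]) if name in record else default
            for name, (convert, default) in COLUMNS.items()}


msg = json.dumps({"@timestamp": "2020-06-01T04:00:00.123Z", "access_time": "2020-06-01T12:00:00+08:00",
                  "method": "GET", "request_url": "/index.html", "status": 200})
print(to_row(msg))
```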

```sql
-- Persistent storage table (MergeTree, sorted by access_time)
CREATE TABLE nginx_logstroe (
    access_time DateTime, remote_addr String, x_forward_for String, http_x_forwarded_for String,
    method String, request_url_path String, request_url String, status UInt64, request_time Float32,
    upstream_host String, upstream_response_length String, upstream_response_time String,
    upstream_status String, http_referer String, remote_user String, http_user_agent String,
    appkey String, upstream_addr String, http_host String, pro String, request_id String,
    bytes_sent UInt64
) ENGINE = MergeTree() ORDER BY access_time;
```
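The Kafka table and `nginx_logstroe` must agree on column names for the `SELECT *` in the next step to carry every field across. One way to keep the two definitions from drifting apart is to generate both statements from a single column list. The Python helper below is hypothetical and not part of the original setup. It only prints the two `CREATE TABLE` statements, which can then be pasted into `clickhouse-client`.

```python
# Hypothetical helper: one column list used for both tables so their names cannot drift apart.
NGINX_COLUMNS = [
    ("access_time", "DateTime"), ("remote_addr", "String"), ("x_forward_for", "String"),
    ("http_x_forwarded_for", "String"), ("method", "String"), ("request_url_path", "String"),
    ("request_url", "String"), ("status", "UInt64"), ("request_time", "Float32"),
    ("upstream_host", "String"), ("upstream_response_length", "String"),
    ("upstream_response_time", "String"), ("upstream_status", "String"),
    ("http_referer", "String"), ("remote_user", "String"), ("http_user_agent", "String"),
    ("appkey", "String"), ("upstream_addr", "String"), ("http_host", "String"),
    ("pro", "String"), ("request_id", "String"), ("bytes_sent", "UInt64"),
]


def column_sql(columns) -> str:
    """Render "name Type, name Type, ..." for a CREATE TABLE column list."""
    return ", ".join(f"{name} {ctype}" for name, ctype in columns)


def kafka_table_ddl(table: str, columns, broker: str, topic: str, group: str) -> str:
    return (
        f"CREATE TABLE {table} ({column_sql(columns)}) ENGINE = Kafka SETTINGS "
        f"kafka_broker_list = '{broker}', kafka_topic_list = '{topic}', "
        f"kafka_group_name = '{group}', kafka_format = 'JSONEachRow', "
        "kafka_num_consumers = 1, date_time_input_format = 'best_effort', "
        "input_format_skip_unknown_fields = 1;"
    )


def mergetree_table_ddl(table: str, columns, order_by: str) -> str:
    return f"CREATE TABLE {table} ({column_sql(columns)}) ENGINE = MergeTree() ORDER BY {order_by};"


print(kafka_table_ddl("nginx_log", NGINX_COLUMNS, "192.168.10.100:9092", "log-collector", "sre-clickhouse"))
print(mergetree_table_ddl("nginx_logstroe", NGINX_COLUMNS, "access_time"))
```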

```sql
-- Materialized view: moves every row read by nginx_log into nginx_logstroe
CREATE MATERIALIZED VIEW user_behavior_consumer TO nginx_logstroe AS
SELECT * FROM nginx_log SETTINGS date_time_input_format = 'best_effort';
```
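A materialized view with `TO` works like an insert trigger on the Kafka table. Every block of messages the engine reads passes through the view's `SELECT` and is appended to `nginx_logstroe`, while `nginx_log` itself stores nothing. The toy Python model below shows that flow and the name-based column matching: a target column that the `SELECT` does not supply ends up with its default value. The class and function names are hypothetical, and the model ignores batching, offsets and type conversion.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


class StorageTable:
    """Stands in for nginx_logstroe: keeps only its own columns."""

    def __init__(self, defaults: Dict[str, object]):
        self.defaults = defaults  # column name -> default value
        self.rows: List[Row] = []

    def insert(self, block: List[Row]) -> None:
        for row in block:
            # Columns are matched by name; a column the SELECT does not return gets its default.
            self.rows.append({col: row.get(col, dflt) for col, dflt in self.defaults.items()})


class StreamTable:
    """Stands in for the Kafka engine table: stores nothing, only pushes blocks to attached views."""

    def __init__(self):
        self.views: List[Callable[[List[Row]], None]] = []

    def push(self, block: List[Row]) -> None:
        for view in self.views:
            view(block)


def attach_view(source: StreamTable, target: StorageTable,
                select: Callable[[Row], Row] = dict) -> None:
    """Model CREATE MATERIALIZED VIEW ... TO target AS SELECT ... FROM source."""
    source.views.append(lambda block: target.insert([select(row) for row in block]))


kafka = StreamTable()
store = StorageTable({"method": "", "status": 0})
attach_view(kafka, store)  # select=dict plays the role of SELECT *
kafka.push([{"method": "GET", "status": 200}, {"status": 404}])
print(store.rows)  # [{'method': 'GET', 'status': 200}, {'method': '', 'status': 404}]
```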