Flink 1.13.5 custom connector: ClickHouse

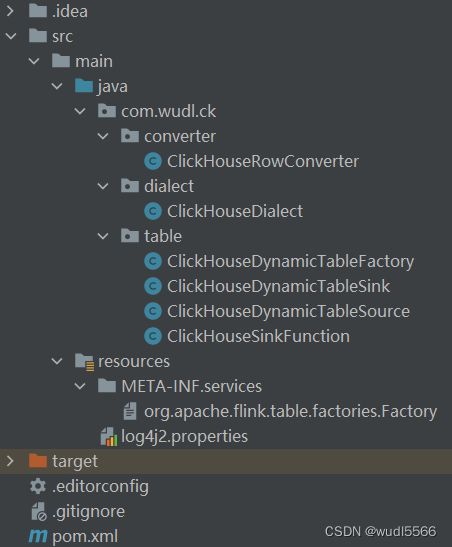

1. Project structure

2. pom file

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing,

software distributed under the License is distributed on an

"AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

KIND, either express or implied. See the License for the

specific language governing permissions and limitations

under the License.

-->

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com-wudl-ck</groupId>

<artifactId>flink-connector-clickhouse</artifactId>

<version>13.5</version>

<packaging>jar</packaging>

<name>Flink Connector ClickHouse</name>

<properties>

<java.version>1.8</java.version>

<flink.version>1.13.5</flink.version>

<log4j.version>2.12.1</log4j.version>

<scala.binary.version>2.12</scala.binary.version>

<clickhouse-jdbc.version>0.3.2</clickhouse-jdbc.version>

<maven.compiler.source>${java.version}</maven.compiler.source>

<maven.compiler.target>${java.version}</maven.compiler.target>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

</properties>

<repositories>

<repository>

<id>apache.snapshots</id>

<name>Apache Development Snapshot Repository</name>

<url>https://repository.apache.org/content/repositories/snapshots/</url>

<releases>

<enabled>false</enabled>

</releases>

<snapshots>

<enabled>true</enabled>

</snapshots>

</repository>

</repositories>

<dependencies>

<!-- Apache Flink dependencies -->

<!-- These dependencies are provided, because they should not be packaged into the JAR file. -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-jdbc_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>ru.yandex.clickhouse</groupId>

<artifactId>clickhouse-jdbc</artifactId>

<version>${clickhouse-jdbc.version}</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

<version>${log4j.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>${log4j.version}</version>

<scope>runtime</scope>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>${log4j.version}</version>

<scope>runtime</scope>

</dependency>

</dependencies>

<build>

<plugins>

<!-- Java Compiler -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.1</version>

<configuration>

<source>${java.version}</source>

<target>${java.version}</target>

</configuration>

</plugin>

<!-- We use the maven-shade plugin to create a fat jar that contains all necessary dependencies. -->

<!-- Change the value of <mainClass>...</mainClass> if your program entry point changes. -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.1.1</version>

<executions>

<!-- Run shade goal on package phase -->

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<artifactSet>

<excludes>

<exclude>org.apache.flink:force-shading</exclude>

<exclude>com.google.code.findbugs:jsr305</exclude>

<exclude>org.slf4j:*</exclude>

<exclude>org.apache.logging.log4j:*</exclude>

</excludes>

</artifactSet>

<filters>

<filter>

<!-- Do not copy the signatures in the META-INF folder.

Otherwise, this might cause SecurityExceptions when using the JAR. -->

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

<pluginManagement>

<plugins>

<!-- This improves the out-of-the-box experience in Eclipse by resolving some warnings. -->

<plugin>

<groupId>org.eclipse.m2e</groupId>

<artifactId>lifecycle-mapping</artifactId>

<version>1.0.0</version>

<configuration>

<lifecycleMappingMetadata>

<pluginExecutions>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<versionRange>[3.1.1,)</versionRange>

<goals>

<goal>shade</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore/>

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<versionRange>[3.1,)</versionRange>

<goals>

<goal>testCompile</goal>

<goal>compile</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore/>

</action>

</pluginExecution>

</pluginExecutions>

</lifecycleMappingMetadata>

</configuration>

</plugin>

</plugins>

</pluginManagement>

</build>

</project>

3. ClickHouseRowConverter.java file

package com.wudl.ck.converter;

import org.apache.flink.connector.jdbc.internal.converter.JdbcRowConverter;

import org.apache.flink.connector.jdbc.statement.FieldNamedPreparedStatement;

import org.apache.flink.table.data.*;

import org.apache.flink.table.types.logical.DecimalType;

import org.apache.flink.table.types.logical.LogicalType;

import org.apache.flink.table.types.logical.RowType;

import org.apache.flink.table.types.logical.TimestampType;

import org.apache.flink.util.Preconditions;

import ru.yandex.clickhouse.ClickHousePreparedStatement;

import java.io.Serializable;

import java.math.BigDecimal;

import java.math.BigInteger;

import java.sql.*;

import java.time.LocalDate;

import java.time.LocalTime;

public class ClickHouseRowConverter implements JdbcRowConverter {

private static final long serialVersionUID = 1L;

private RowType rowType;

private LogicalType[] fieldTypes;

private DeserializationConverter[] toInternalConverters;

private SerializationConverter[] toClickHouseConverters;

private JDBCSerializationConverter[] toExternalConverters;

public ClickHouseRowConverter(RowType rowType) {

this.rowType = (RowType) Preconditions.checkNotNull(rowType);

this.fieldTypes = (LogicalType[]) rowType.getFields().stream().map(RowType.RowField::getType).toArray(x -> new LogicalType[x]);

this.toInternalConverters = new DeserializationConverter[rowType.getFieldCount()];

this.toClickHouseConverters = new SerializationConverter[rowType.getFieldCount()];

this.toExternalConverters = new JDBCSerializationConverter[rowType.getFieldCount()];

for (int i = 0; i < rowType.getFieldCount(); i++) {

this.toInternalConverters[i] = createToFlinkConverter(rowType.getTypeAt(i));

this.toClickHouseConverters[i] = createToClickHouseConverter(this.fieldTypes[i]);

this.toExternalConverters[i] = createToExternalConverter(this.fieldTypes[i]);

}

}

public ClickHousePreparedStatement toClickHouse(RowData rowData, ClickHousePreparedStatement statement) throws SQLException {

//getArity Returns the number of fields in this row.

for(int idx = 0; idx < rowData.getArity(); idx++) {

//isNullAt Returns true if the field is null at the given position

if (rowData.isNullAt(idx)) {

statement.setObject(idx + 1, null);

} else {

// use the per-field functional converter to write the RowData value into the ClickHouse statement

this.toClickHouseConverters[idx].serialize(rowData, idx, statement);

}

}

return statement;

}

/**

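* toInternal and toExternal implement the JdbcRowConverter interface from the JDBC connector; toInternal is used by the query (scan) path.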

* @return

* @throws SQLException

*/

@Override

public RowData toInternal(ResultSet resultSet) throws SQLException {

GenericRowData genericRowData = new GenericRowData(this.rowType.getFieldCount());

for(int idx = 0; idx < genericRowData.getArity(); idx++) {

Object field = resultSet.getObject(idx + 1);

genericRowData.setField(idx, this.toInternalConverters[idx].deserialize(field));

}

return (RowData) genericRowData;

}

@Override

public FieldNamedPreparedStatement toExternal(RowData rowData, FieldNamedPreparedStatement statement) throws SQLException {

//getArity Returns the number of fields in this row.

for(int idx = 0; idx < rowData.getArity(); idx++) {

//isNullAt Returns true if the field is null at the given position

if (rowData.isNullAt(idx)) {

statement.setObject(idx + 1, null);

} else {

// use the per-field functional converter to write the RowData value into the JDBC statement

this.toExternalConverters[idx].serialize(rowData, idx, statement);

}

}

return statement;

}

protected SerializationConverter createToClickHouseConverter(LogicalType type) {

int timestampPrecision;

int decimalPrecision;

int decimalScale;

switch(type.getTypeRoot()) {

case BOOLEAN:

return (val, index, statement) -> statement.setBoolean(index + 1, val.getBoolean(index));

case TINYINT:

return (val, index, statement) -> statement.setByte(index + 1, val.getByte(index));

case SMALLINT:

return (val, index, statement) -> statement.setShort(index + 1, val.getShort(index));

case INTERVAL_YEAR_MONTH:

case INTEGER:

return (val, index, statement) -> statement.setInt(index + 1, val.getInt(index));

case INTERVAL_DAY_TIME:

case BIGINT:

return (val, index, statement) -> statement.setLong(index + 1, val.getLong(index));

case FLOAT:

return (val, index, statement) -> statement.setFloat(index + 1, val.getFloat(index));

case DOUBLE:

return (val, index, statement) -> statement.setDouble(index + 1, val.getDouble(index));

case CHAR:

case VARCHAR:

return (val, index, statement) -> statement.setString(index + 1, val.getString(index).toString());

case VARBINARY:

return (val, index, statement) -> statement.setBytes(index + 1, val.getBinary(index));

case DATE:

return (val, index, statement) -> statement.setDate(index + 1, Date.valueOf(LocalDate.ofEpochDay(val.getInt(index))));

case TIME_WITHOUT_TIME_ZONE:

return (val, index, statement) -> statement.setTime(index + 1, Time.valueOf(LocalTime.ofNanoOfDay(val.getInt(index) * 1000000L)));

case TIMESTAMP_WITH_TIME_ZONE:

case TIMESTAMP_WITHOUT_TIME_ZONE:

timestampPrecision = ((TimestampType)type).getPrecision();

return (val, index, statement) -> statement.setTimestamp(index + 1, val.getTimestamp(index, timestampPrecision).toTimestamp());

case DECIMAL:

decimalPrecision = ((DecimalType)type).getPrecision();

decimalScale = ((DecimalType)type).getScale();

return (val, index, statement) -> statement.setBigDecimal(index + 1, val.getDecimal(index, decimalPrecision, decimalScale).toBigDecimal());

}

throw new UnsupportedOperationException("Unsupported type:" + type);

}

protected JDBCSerializationConverter createToExternalConverter(LogicalType type) {

int timestampPrecision;

int decimalPrecision;

int decimalScale;

switch(type.getTypeRoot()) {

case BOOLEAN:

return (val, index, statement) -> statement.setBoolean(index + 1, val.getBoolean(index));

case TINYINT:

return (val, index, statement) -> statement.setByte(index + 1, val.getByte(index));

case SMALLINT:

return (val, index, statement) -> statement.setShort(index + 1, val.getShort(index));

case INTERVAL_YEAR_MONTH:

case INTEGER:

return (val, index, statement) -> statement.setInt(index + 1, val.getInt(index));

case INTERVAL_DAY_TIME:

case BIGINT:

return (val, index, statement) -> statement.setLong(index + 1, val.getLong(index));

case FLOAT:

return (val, index, statement) -> statement.setFloat(index + 1, val.getFloat(index));

case DOUBLE:

return (val, index, statement) -> statement.setDouble(index + 1, val.getDouble(index));

case CHAR:

case VARCHAR:

return (val, index, statement) -> statement.setString(index + 1, val.getString(index).toString());

case VARBINARY:

return (val, index, statement) -> statement.setBytes(index + 1, val.getBinary(index));

case DATE:

return (val, index, statement) -> statement.setDate(index + 1, Date.valueOf(LocalDate.ofEpochDay(val.getInt(index))));

case TIME_WITHOUT_TIME_ZONE:

return (val, index, statement) -> statement.setTime(index + 1, Time.valueOf(LocalTime.ofNanoOfDay(val.getInt(index) * 1000000L)));

case TIMESTAMP_WITH_TIME_ZONE:

case TIMESTAMP_WITHOUT_TIME_ZONE:

timestampPrecision = ((TimestampType)type).getPrecision();

return (val, index, statement) -> statement.setTimestamp(index + 1, val.getTimestamp(index, timestampPrecision).toTimestamp());

case DECIMAL:

decimalPrecision = ((DecimalType)type).getPrecision();

decimalScale = ((DecimalType)type).getScale();

return (val, index, statement) -> statement.setBigDecimal(index + 1, val.getDecimal(index, decimalPrecision, decimalScale).toBigDecimal());

}

throw new UnsupportedOperationException("Unsupported type:" + type);

}

protected DeserializationConverter createToFlinkConverter(LogicalType type) {

int precision;

int scale;

switch (type.getTypeRoot()) {

case NULL:

return val -> null;

case BOOLEAN:

case FLOAT:

case DOUBLE:

case INTERVAL_YEAR_MONTH:

case INTERVAL_DAY_TIME:

return val -> val;

case TINYINT:

return val -> Byte.valueOf(((Integer)val).byteValue());

case SMALLINT:

return val -> (val instanceof Integer) ? Short.valueOf(((Integer)val).shortValue()):val;

case INTEGER:

return val -> val;

case BIGINT:

return val -> (val instanceof BigInteger) ? ((BigInteger) val).longValue() :val;

case DECIMAL:

precision = ((DecimalType)type).getPrecision();

scale = ((DecimalType)type).getScale();

return val -> (val instanceof BigInteger) ? DecimalData.fromBigDecimal(new BigDecimal((BigInteger)val, 0), precision, scale) : DecimalData.fromBigDecimal((BigDecimal)val, precision, scale);

case DATE:

return val -> Integer.valueOf((int)((Date)val).toLocalDate().toEpochDay());

case TIME_WITHOUT_TIME_ZONE:

return val -> Integer.valueOf((int)(((Time)val).toLocalTime().toNanoOfDay() / 1000000L));

case TIMESTAMP_WITH_TIME_ZONE:

case TIMESTAMP_WITHOUT_TIME_ZONE:

return val -> TimestampData.fromTimestamp((Timestamp) val);

case CHAR:

case VARCHAR:

return val -> StringData.fromString(String.valueOf(val));

case BINARY:

case VARBINARY:

return val -> (byte[])val;

}

throw new UnsupportedOperationException("Unsupported type:" + type);

}

@FunctionalInterface

static interface SerializationConverter extends Serializable{

void serialize(RowData param1RowData, int param1Int, PreparedStatement param1PreparedStatement) throws SQLException;

}

@FunctionalInterface

static interface JDBCSerializationConverter extends Serializable{

void serialize(RowData param1RowData, int param1Int, FieldNamedPreparedStatement param1PreparedStatement) throws SQLException;

}

@FunctionalInterface

static interface DeserializationConverter extends Serializable {

Object deserialize(Object param1Object) throws SQLException;

}

}
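Flink's internal RowData stores DATE as an int counting days since 1970-01-01, and it stores TIME as an int counting milliseconds since midnight. That is why the converter above calls LocalDate.ofEpochDay and LocalTime.ofNanoOfDay(... * 1000000L). The stand-alone sketch below uses only the JDK and has no Flink dependency. Its class name, InternalTimeConversions, is hypothetical. It reuses the converter's expressions so you can check that they round-trip:

```java
import java.sql.Date;
import java.sql.Time;
import java.time.LocalDate;
import java.time.LocalTime;

public class InternalTimeConversions {

    // DATE -> Flink internal int: days since 1970-01-01 (same expression as createToFlinkConverter)
    static int dateToInternal(Date date) {
        return (int) date.toLocalDate().toEpochDay();
    }

    // Flink internal int -> DATE (same expression as the DATE case in createToClickHouseConverter)
    static Date dateToExternal(int epochDay) {
        return Date.valueOf(LocalDate.ofEpochDay(epochDay));
    }

    // TIME -> Flink internal int: milliseconds since midnight
    static int timeToInternal(Time time) {
        return (int) (time.toLocalTime().toNanoOfDay() / 1_000_000L);
    }

    // Flink internal int -> TIME; multiplying by a long literal avoids int overflow
    static Time timeToExternal(int millisOfDay) {
        return Time.valueOf(LocalTime.ofNanoOfDay(millisOfDay * 1_000_000L));
    }

    public static void main(String[] args) {
        Date date = Date.valueOf("2022-01-15");
        int days = dateToInternal(date);
        System.out.println("DATE " + date + " <-> " + days + " <-> " + dateToExternal(days));

        Time time = Time.valueOf("12:34:56");
        int millis = timeToInternal(time);
        System.out.println("TIME " + time + " <-> " + millis + " <-> " + timeToExternal(millis));
    }
}
```

TIME keeps only millisecond precision in this representation. Any sub-millisecond part of a value is dropped by the division in timeToInternal.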

4. ClickHouseDialect.java class

package com.wudl.ck.dialect;

import org.apache.flink.connector.jdbc.dialect.JdbcDialect;

import org.apache.flink.connector.jdbc.internal.converter.JdbcRowConverter;

import org.apache.flink.table.types.logical.RowType;

import com.wudl.ck.converter.ClickHouseRowConverter;

import java.util.Optional;

public class ClickHouseDialect implements JdbcDialect {

private static final long serialVersionUID = 1L;

@Override

public String dialectName() {

return "ClickHouse";

}

@Override

public boolean canHandle(String url) {

return url.startsWith("jdbc:clickhouse:");

}

@Override

public JdbcRowConverter getRowConverter(RowType rowType) {

return new ClickHouseRowConverter(rowType);

}

@Override

public String getLimitClause(long limit) {

return "LIMIT " + limit;

}

@Override

public Optional<String> defaultDriverName() {

return Optional.of("ru.yandex.clickhouse.ClickHouseDriver");

}

@Override

public String quoteIdentifier(String identifier) {

return "`" + identifier + "`";

}

@Override

public Optional<String> getUpsertStatement(String tableName, String[] fieldNames, String[] uniqueKeyFields) {

return Optional.empty();

}

}

5. ClickHouseDynamicTableFactory.java (factory class)

package com.wudl.ck.table;

import org.apache.flink.api.common.serialization.SerializationSchema;

import org.apache.flink.configuration.ConfigOption;

import org.apache.flink.configuration.ConfigOptions;

import org.apache.flink.configuration.ReadableConfig;

//import org.apache.flink.connector.jdbc.internal.options.JdbcConnectorOptions;

import org.apache.flink.connector.jdbc.internal.options.JdbcOptions;

import org.apache.flink.table.api.TableSchema;

import org.apache.flink.table.connector.format.EncodingFormat;

import org.apache.flink.table.connector.sink.DynamicTableSink;

import org.apache.flink.table.connector.source.DynamicTableSource;

import org.apache.flink.table.data.RowData;

import org.apache.flink.table.factories.DynamicTableSinkFactory;

import org.apache.flink.table.factories.DynamicTableSourceFactory;

import org.apache.flink.table.factories.FactoryUtil;

import org.apache.flink.table.factories.SerializationFormatFactory;

import org.apache.flink.table.types.DataType;

import org.apache.flink.table.utils.TableSchemaUtils;

import com.wudl.ck.dialect.ClickHouseDialect;

import java.util.HashSet;

import java.util.Set;

public class ClickHouseDynamicTableFactory implements DynamicTableSourceFactory, DynamicTableSinkFactory {

public static final String IDENTIFIER = "clickhouse";

private static final String DRIVER_NAME = "ru.yandex.clickhouse.ClickHouseDriver";

public static final ConfigOption<String> URL = ConfigOptions

.key("url")

.stringType()

.noDefaultValue()

.withDescription("the jdbc database url.");

public static final ConfigOption<String> TABLE_NAME = ConfigOptions

.key("table-name")

.stringType()

.noDefaultValue()

.withDescription("the jdbc table name.");

public static final ConfigOption<String> USERNAME = ConfigOptions

.key("username")

.stringType()

.noDefaultValue()

.withDescription("the jdbc user name.");

public static final ConfigOption<String> PASSWORD = ConfigOptions

.key("password")

.stringType()

.noDefaultValue()

.withDescription("the jdbc password.");

public static final ConfigOption<String> FORMAT = ConfigOptions

.key("format")

.stringType()

.noDefaultValue()

.withDescription("the format.");

@Override

public String factoryIdentifier() {

return IDENTIFIER;

}

@Override

public Set<ConfigOption<?>> requiredOptions() {

Set<ConfigOption<?>> requiredOptions = new HashSet<>();

requiredOptions.add(URL);

requiredOptions.add(TABLE_NAME);

requiredOptions.add(USERNAME);

requiredOptions.add(PASSWORD);

requiredOptions.add(FORMAT);

return requiredOptions;

}

@Override

public Set<ConfigOption<?>> optionalOptions() {

return new HashSet<>();

}

@Override

public DynamicTableSource createDynamicTableSource(Context context) {

// either implement your custom validation logic here ...

final FactoryUtil.TableFactoryHelper helper = FactoryUtil.createTableFactoryHelper(this, context);

final ReadableConfig config = helper.getOptions();

// validate all options

helper.validate();

// JdbcConnectorOptions jdbcOptions = getJdbcOptions(config);

JdbcOptions jdbcOptions = getJdbcOptions(config);

// get table schema

TableSchema physicalSchema = TableSchemaUtils.getPhysicalSchema(context.getCatalogTable().getSchema());

// table source

return new ClickHouseDynamicTableSource(jdbcOptions, physicalSchema);

}

@Override

public DynamicTableSink createDynamicTableSink(Context context) {

// either implement your custom validation logic here ...

final FactoryUtil.TableFactoryHelper helper = FactoryUtil.createTableFactoryHelper(this, context);

// discover a suitable encoding format (the 'format' option, e.g. json)

final EncodingFormat<SerializationSchema<RowData>> encodingFormat = helper.discoverEncodingFormat(

SerializationFormatFactory.class,

FactoryUtil.FORMAT);

final ReadableConfig config = helper.getOptions();

// validate all options

helper.validate();

// get the validated options

JdbcOptions jdbcOptions = getJdbcOptions(config);

// derive the produced data type (excluding computed columns) from the catalog table

final DataType dataType = context.getCatalogTable().getSchema().toPhysicalRowDataType();

// table sink

return new ClickHouseDynamicTableSink(jdbcOptions, encodingFormat, dataType);

}

private JdbcOptions getJdbcOptions(ReadableConfig readableConfig) {

final String url = readableConfig.get(URL);

final JdbcOptions.Builder builder = JdbcOptions.builder()

.setDriverName(DRIVER_NAME)

.setDBUrl(url)

.setTableName(readableConfig.get(TABLE_NAME))

.setDialect(new ClickHouseDialect());

readableConfig.getOptional(USERNAME).ifPresent(builder::setUsername);

readableConfig.getOptional(PASSWORD).ifPresent(builder::setPassword);

return builder.build();

}

}
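The factory declares five required keys and no optional ones, and helper.validate() enforces them against the WITH clause. The sketch below is a simplified model of that check. It is hypothetical (OptionCheckSketch) and is not Flink's FactoryUtil code. The real validate() also deals with format-specific keys, which this sketch ignores. Only key presence is modeled here, which is why the test DDL's 'password' = '' is accepted:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class OptionCheckSketch {

    // Keys returned by requiredOptions() in ClickHouseDynamicTableFactory; optionalOptions() is empty.
    static final List<String> REQUIRED = Arrays.asList("url", "table-name", "username", "password", "format");

    // Required keys absent from the WITH clause; an empty string still counts as present.
    static List<String> missing(Map<String, String> with) {
        List<String> result = new ArrayList<>();
        for (String key : REQUIRED) {
            if (!with.containsKey(key)) {
                result.add(key);
            }
        }
        return result;
    }

    // Keys the factory does not declare; 'connector' is consumed by the framework itself.
    static List<String> unsupported(Map<String, String> with) {
        List<String> result = new ArrayList<>();
        for (String key : with.keySet()) {
            if (!key.equals("connector") && !REQUIRED.contains(key)) {
                result.add(key);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> with = new LinkedHashMap<>();
        with.put("connector", "clickhouse");
        with.put("url", "jdbc:clickhouse://192.168.1.161:8123/wudldb");
        with.put("username", "default");
        with.put("password", "");
        with.put("table-name", "clickhosuetable");
        System.out.println("missing: " + missing(with));         // 'format' was left out on purpose
        System.out.println("unsupported: " + unsupported(with));
    }
}
```

Because 'format' is required even for the source, a ClickHouse table used only for reading still needs 'format' = 'json' in its DDL.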

5. ClickHouseDynamicTableSink.java (dynamic table sink)

package com.wudl.ck.table;

import org.apache.flink.api.common.serialization.SerializationSchema;

//import org.apache.flink.connector.jdbc.internal.options.JdbcConnectorOptions;

import org.apache.flink.connector.jdbc.internal.options.JdbcOptions;

import org.apache.flink.table.connector.ChangelogMode;

import org.apache.flink.table.connector.format.EncodingFormat;

import org.apache.flink.table.connector.sink.DynamicTableSink;

import org.apache.flink.table.connector.sink.SinkFunctionProvider;

import org.apache.flink.table.data.RowData;

import org.apache.flink.table.types.DataType;

public class ClickHouseDynamicTableSink implements DynamicTableSink {

// private final JdbcConnectorOptions jdbcOptions;

private final JdbcOptions jdbcOptions;

private final EncodingFormat<SerializationSchema<RowData>> encodingFormat;

private final DataType dataType;

public ClickHouseDynamicTableSink(JdbcOptions jdbcOptions, EncodingFormat<SerializationSchema<RowData>> encodingFormat, DataType dataType) {

this.jdbcOptions = jdbcOptions;

this.encodingFormat = encodingFormat;

this.dataType = dataType;

}

@Override

public ChangelogMode getChangelogMode(ChangelogMode requestedMode) {

return requestedMode;

}

@Override

public SinkRuntimeProvider getSinkRuntimeProvider(Context context) {

SerializationSchema<RowData> serializationSchema = encodingFormat.createRuntimeEncoder(context, dataType);

ClickHouseSinkFunction clickHouseSinkFunction = new ClickHouseSinkFunction(jdbcOptions, serializationSchema);

return SinkFunctionProvider.of(clickHouseSinkFunction);

}

@Override

public DynamicTableSink copy() {

return new ClickHouseDynamicTableSink(jdbcOptions, encodingFormat, dataType);

}

@Override

public String asSummaryString() {

return "ClickHouse Table Sink";

}

}

6. ClickHouseDynamicTableSource.java (dynamic table source)

package com.wudl.ck.table;

import org.apache.flink.connector.jdbc.dialect.JdbcDialect;

//import org.apache.flink.connector.jdbc.internal.options.JdbcConnectorOptions;

import org.apache.flink.connector.jdbc.internal.options.JdbcOptions;

import org.apache.flink.connector.jdbc.table.JdbcRowDataInputFormat;

import org.apache.flink.table.api.TableSchema;

import org.apache.flink.table.connector.ChangelogMode;

import org.apache.flink.table.connector.source.DynamicTableSource;

import org.apache.flink.table.connector.source.InputFormatProvider;

import org.apache.flink.table.connector.source.ScanTableSource;

import org.apache.flink.table.types.logical.RowType;

public class ClickHouseDynamicTableSource implements ScanTableSource {

// private final JdbcConnectorOptions options;

private final JdbcOptions options;

private final TableSchema tableSchema;

public ClickHouseDynamicTableSource(JdbcOptions options, TableSchema tableSchema) {

this.options = options;

this.tableSchema = tableSchema;

}

@Override

public ChangelogMode getChangelogMode() {

return ChangelogMode.insertOnly();

}

@Override

@SuppressWarnings("unchecked")

public ScanRuntimeProvider getScanRuntimeProvider(ScanContext runtimeProviderContext) {

final JdbcDialect dialect = options.getDialect();

String query = dialect.getSelectFromStatement(

options.getTableName(), tableSchema.getFieldNames(), new String[0]);

final RowType rowType = (RowType) tableSchema.toRowDataType().getLogicalType();

final JdbcRowDataInputFormat.Builder builder = JdbcRowDataInputFormat.builder()

.setDrivername(options.getDriverName())

.setDBUrl(options.getDbURL())

.setUsername(options.getUsername().orElse(null))

.setPassword(options.getPassword().orElse(null))

.setQuery(query)

.setRowConverter(dialect.getRowConverter(rowType))

.setRowDataTypeInfo(runtimeProviderContext.createTypeInformation(tableSchema.toRowDataType()));

return InputFormatProvider.of(builder.build());

}

@Override

public DynamicTableSource copy() {

return new ClickHouseDynamicTableSource(options, tableSchema);

}

@Override

public String asSummaryString() {

return "ClickHouse Table Source";

}

}
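ClickHouseDynamicTableSource builds its scan query with dialect.getSelectFromStatement(...) and an empty condition array, so the query text depends on ClickHouseDialect.quoteIdentifier. As far as we can tell, the default JdbcDialect implementation in Flink 1.13 quotes every column and the table name and joins the columns with ", ". The hypothetical SelectQuerySketch below uses only the JDK and re-creates that behavior under this assumption:

```java
import java.util.Arrays;
import java.util.stream.Collectors;

public class SelectQuerySketch {

    // Same quoting rule as ClickHouseDialect.quoteIdentifier: wrap the name in backticks.
    static String quoteIdentifier(String identifier) {
        return "`" + identifier + "`";
    }

    // Assumed shape of "SELECT <fields> FROM <table>" with no WHERE clause,
    // i.e. the case where the source passes new String[0] as the condition fields.
    static String selectFrom(String tableName, String[] fields) {
        String columns = Arrays.stream(fields)
                .map(SelectQuerySketch::quoteIdentifier)
                .collect(Collectors.joining(", "));
        return "SELECT " + columns + " FROM " + quoteIdentifier(tableName);
    }

    public static void main(String[] args) {
        // Columns and table name of wutable_ck from the test section
        String[] fields = {"id", "name", "age", "birthday"};
        System.out.println(selectFrom("clickhosuetable", fields));
    }
}
```

The whole table-name value is quoted as a single identifier, so a value such as wudldb.t would become `wudldb.t` rather than a database-qualified name. Presumably this is why the test puts the database in the URL instead.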

7. ClickHouseSinkFunction.java (sink function)

package com.wudl.ck.table;

import org.apache.flink.api.common.serialization.SerializationSchema;

import org.apache.flink.configuration.Configuration;

//import org.apache.flink.connector.jdbc.internal.options.JdbcConnectorOptions;

import org.apache.flink.connector.jdbc.internal.options.JdbcOptions;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import org.apache.flink.table.data.RowData;

import ru.yandex.clickhouse.BalancedClickhouseDataSource;

import ru.yandex.clickhouse.ClickHouseConnection;

import ru.yandex.clickhouse.ClickHouseStatement;

import ru.yandex.clickhouse.domain.ClickHouseFormat;

import ru.yandex.clickhouse.settings.ClickHouseProperties;

import ru.yandex.clickhouse.settings.ClickHouseQueryParam;

import java.io.ByteArrayInputStream;

import java.sql.SQLException;

public class ClickHouseSinkFunction extends RichSinkFunction<RowData> {

private static final long serialVersionUID = 1L;

private static final String MAX_PARALLEL_REPLICAS_VALUE = "2";

private final JdbcOptions jdbcOptions;

private final SerializationSchema<RowData> serializationSchema;

private ClickHouseConnection conn;

private ClickHouseStatement stmt;

public ClickHouseSinkFunction(JdbcOptions jdbcOptions, SerializationSchema<RowData> serializationSchema) {

this.jdbcOptions = jdbcOptions;

this.serializationSchema = serializationSchema;

}

@Override

public void open(Configuration parameters) throws Exception {

ClickHouseProperties properties = new ClickHouseProperties();

properties.setUser(jdbcOptions.getUsername().orElse(null));

properties.setPassword(jdbcOptions.getPassword().orElse(null));

// fail fast: a connection error now fails the task instead of being printed and causing an NPE in invoke()

BalancedClickhouseDataSource dataSource = new BalancedClickhouseDataSource(jdbcOptions.getDbURL(), properties);

conn = dataSource.getConnection();

// create the statement once and reuse it for every record, so close() releases the only statement opened

stmt = conn.createStatement();

}

@Override

public void invoke(RowData value, Context context) throws Exception {

// serialize the row with the table's 'format' (json): one JSON object per row

byte[] serialize = serializationSchema.serialize(value);

stmt.write().table(jdbcOptions.getTableName()).data(new ByteArrayInputStream(serialize), ClickHouseFormat.JSONEachRow)

.addDbParam(ClickHouseQueryParam.MAX_PARALLEL_REPLICAS, MAX_PARALLEL_REPLICAS_VALUE).send();

}

@Override

public void close() throws Exception {

if (stmt != null) {

stmt.close();

}

if (conn != null) {

conn.close();

}

}

}
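invoke() above sends one INSERT per row, and ClickHouse generally favors fewer, larger inserts. JSONEachRow accepts newline-separated JSON objects, so one possible refinement is to buffer serialized rows and send them together. The JsonEachRowBuffer class below is hypothetical and uses only the JDK. Using it in the sink function is an assumption on our part. When add(...) returns true, the drain() payload would go through the same stmt.write().table(...).data(new ByteArrayInputStream(payload), ClickHouseFormat.JSONEachRow).send() call, and close() would flush any leftover rows:

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

/** Hypothetical buffer that joins serialized JSON rows into one JSONEachRow payload. */
public class JsonEachRowBuffer {

    private final int maxRows;
    private final ByteArrayOutputStream out = new ByteArrayOutputStream();
    private int rows;

    public JsonEachRowBuffer(int maxRows) {
        if (maxRows <= 0) {
            throw new IllegalArgumentException("maxRows must be positive");
        }
        this.maxRows = maxRows;
    }

    /** Appends one serialized row plus a newline; returns true once the buffer should be flushed. */
    public boolean add(byte[] jsonRow) {
        out.write(jsonRow, 0, jsonRow.length);
        out.write('\n');
        rows++;
        return rows >= maxRows;
    }

    public boolean isEmpty() {
        return rows == 0;
    }

    /** Returns the buffered payload and resets the buffer for the next batch. */
    public byte[] drain() {
        byte[] payload = out.toByteArray();
        out.reset();
        rows = 0;
        return payload;
    }

    public static void main(String[] args) {
        JsonEachRowBuffer buf = new JsonEachRowBuffer(2);
        buf.add("{\"id\":1}".getBytes(StandardCharsets.UTF_8));
        boolean full = buf.add("{\"id\":2}".getBytes(StandardCharsets.UTF_8));
        System.out.println("flush now: " + full);
        System.out.print(new String(buf.drain(), StandardCharsets.UTF_8));
    }
}
```

Buffered rows that have not been sent are lost if the job fails. A production version would also need to flush on checkpoints (for example by implementing Flink's CheckpointedFunction), which this sketch does not cover.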

8. Configuration (service registration)

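Under resources, create the directory META-INF/services. In it, create a file named org.apache.flink.table.factories.Factory whose content is the fully qualified name of the dynamic table factory: com.wudl.ck.table.ClickHouseDynamicTableFactory.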
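Flink discovers table factories through Java's ServiceLoader, which reads every META-INF/services/org.apache.flink.table.factories.Factory file on the classpath. It then picks the factory whose factoryIdentifier() equals the 'connector' value in the WITH clause, which is "clickhouse" here. The sketch below models only that matching step. The nested Factory interface and discover method are hypothetical stand-ins, not Flink's FactoryUtil:

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class FactoryLookupSketch {

    // Hypothetical stand-in for org.apache.flink.table.factories.Factory
    public interface Factory {
        String factoryIdentifier();
    }

    // Pick the single loaded factory whose identifier matches the 'connector' option.
    public static Factory discover(List<Factory> loaded, String connector) {
        List<Factory> matches = loaded.stream()
                .filter(f -> f.factoryIdentifier().equals(connector))
                .collect(Collectors.toList());
        if (matches.isEmpty()) {
            throw new IllegalStateException("No factory found for identifier '" + connector + "'");
        }
        if (matches.size() > 1) {
            throw new IllegalStateException("Multiple factories found for identifier '" + connector + "'");
        }
        return matches.get(0);
    }

    public static void main(String[] args) {
        Factory jdbc = () -> "jdbc";
        Factory clickhouse = () -> "clickhouse";
        List<Factory> loaded = Arrays.asList(jdbc, clickhouse);
        System.out.println(discover(loaded, "clickhouse").factoryIdentifier());
    }
}
```

If the services file is missing from the connector jar, the "no factory found" branch is the situation you end up in. That is the first thing to check when 'connector' = 'clickhouse' is rejected.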

9. Testing

Sync data from ClickHouse to MySQL

Flink SQL> CREATE TABLE source_mysql4 (

> id BIGINT PRIMARY KEY NOT ENFORCED,

> name STRING,

> age BIGINT,

> birthday TIMESTAMP(3),

> ts TIMESTAMP(3)

> ) WITH (

> 'connector' = 'jdbc',

> 'url' = 'jdbc:mysql://192.168.1.162:3306/wudldb?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=UTF-8',

> 'table-name' = 'Flink_cdc_2022',

> 'username' = 'root',

> 'password' = '123456'

> );

[INFO] Execute statement succeed.

Flink SQL> drop table source_mysql4;

[INFO] Execute statement succeed.

Flink SQL> CREATE TABLE source_mysql4 (

> id BIGINT PRIMARY KEY NOT ENFORCED,

> name STRING,

> age BIGINT,

> birthday TIMESTAMP,

> ts TIMESTAMP

> ) WITH (

> 'connector' = 'jdbc',

> 'url' = 'jdbc:mysql://192.168.1.162:3306/wudldb?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=UTF-8',

> 'table-name' = 'Flink_cdc_2022',

> 'username' = 'root',

> 'password' = '123456'

> );

[INFO] Execute statement succeed.

Flink SQL> select * from source_mysql4;

[INFO] Result retrieval cancelled.

Flink SQL> insert into source_mysql4 select id,name,age,birthday,LOCALTIMESTAMP ts from wutable_ck;

[INFO] Submitting SQL update statement to the cluster...

[INFO] SQL update statement has been successfully submitted to the cluster:

Job ID: 4d9cc4cf21d528779fa9c97c5cd933dc

Type conversion

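Use CAST(column AS type) to convert types. Below, the MySQL table declares age as INT while the ClickHouse table declares it as BIGINT, so the insert casts it.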

Flink SQL> CREATE TABLE if not exists wutable_ck (

> id BIGINT,

> name STRING,

> age BIGINT,

> birthday TIMESTAMP

> ) WITH (

> 'connector' = 'clickhouse',

> 'url' = 'jdbc:clickhouse://192.168.1.161:8123/wudldb',

> 'username' = 'default',

> 'password' = '',

> 'table-name' = 'clickhosuetable',

> 'format' = 'json'

> );

>

[INFO] Execute statement succeed.

Flink SQL> CREATE TABLE source_mysql4 (

> id BIGINT PRIMARY KEY NOT ENFORCED,

> name STRING,

> age int,

> birthday TIMESTAMP,

> ts TIMESTAMP

> ) WITH (

> 'connector' = 'jdbc',

> 'url' = 'jdbc:mysql://192.168.1.162:3306/wudldb?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=UTF-8',

> 'table-name' = 'Flink_cdc_2022',

> 'username' = 'root',

> 'password' = '123456'

> );

[INFO] Execute statement succeed.

Flink SQL> select * from source_mysql4;

[INFO] Result retrieval cancelled.

Flink SQL> select id ,name , CAST(age AS BIGINT) age, birthday from source_mysql4;

[INFO] Result retrieval cancelled.

Flink SQL> insert into wutable_ck select id ,name , CAST(age AS BIGINT) age, birthday from source_mysql4;

[INFO] Submitting SQL update statement to the cluster...

[INFO] SQL update statement has been successfully submitted to the cluster:

Job ID: a29a4655930b10c0464e906f21bcef97